Abbreviations: L = left hemisphere; R = right hemisphere.

Speech production is an effortless task for most people, but one that is orchestrated by the coordinated activity of a tremendous number of neurons across a large expanse of cortical and subcortical brain regions. Early evidence for the localization of functions related to speech motor output to the left inferior frontal cortex was famously provided by the French neurosurgeon Paul Broca (1861, 1865), and for many years associations between impaired behaviors and postmortem localization of damaged tissue offered our only window into the brain’s speech systems. Starting in the 1930s, Wilder Penfield and his colleagues began to perfect and systematize electrical stimulation mapping in patients undergoing neurosurgery, providing a much finer picture of the localization of the upper motor neurons that drive the articulators and better defining the areas of the cerebral cortex that are important for speaking (Penfield and Roberts, 1959). The advent and availability of non-invasive brain imaging techniques, however, provided a critical turning point for research into the neural bases of speech, enabling the testing of hypotheses driven by conceptual and theoretical models in healthy and disordered populations.

Neuroimaging methods, which include positron emission tomography (PET), functional magnetic resonance imaging (fMRI), and electro- and magneto-encephalography (EEG/MEG), have provided substantial insight into the organization of neural systems important for speech and language processes (see reviews by Turkeltaub, 2002; Indefrey and Levelt, 2004; Démonet et al., 2005; Price, 2012). Research on speech production (distinct from language) focuses primarily on the motor control processes that enable rapid sequencing, selection, and articulation of phonemes, syllables, and words, as well as how the brain integrates incoming sensory information to guide motor output. We consider this domain to intersect the theoretical constructs of articulatory and auditory phonetics, encompassing how the brain enacts sound patterns and perceives and utilizes self-produced speech signals. Speech is also inherently coupled with phonology (the organization of speech sounds in the language), especially for planning multiple syllables and words. While this chapter touches on these aspects of language, the “extra-linguistic” aspects of speech production are its primary focus.

In this chapter we first present a brief overview of the mental processes proposed to underlie the brain’s control of speech production, followed by a review of the neural systems that are consistently engaged during speech, with focus on results from fMRI. Finally, we review the DIVA (Directions Into Velocities of Articulators) model (Guenther, 1995, 2016; Guenther et al., 1998, 2006; Tourville and Guenther, 2011) and its extension as GODIVA (Gradient Order DIVA; Bohland et al., 2010), which provide frameworks not only for understanding and integrating the results of imaging studies but also for formulating new hypotheses to test in follow-up experiments (Golfinopoulos et al., 2010).

Influential conceptual models have delineated a series of stages involved in the process of speaking, from conceptualization to articulation (e.g., Garrett, 1975; Dell and O’Seaghdha, 1992; Levelt, 1999; Levelt et al., 1999). Such models propose a role for abstract phonological representations of the utterance to be produced, as well as somewhat lower-level auditory or motor phonetic and articulatory representations. These components are theoretically invoked after the specification of the phonological word form (i.e., a more abstract representation of the speech plan). The neural correlates of these different types of information may be, to a certain degree, anatomically separable, with distinct pools of neurons involved in representing speech in different reference frames or at different levels of abstraction. Additional groups of neurons and synaptic pathways may then serve to coordinate and transform between these different representations.

Different speech tasks naturally highlight different theoretical processes, and experiments investigating speech motor control vary accordingly in at least four key ways. First, in some studies, subjects are asked to produce speech overtly, involving articulation and the production of an audible speech signal, while in others speech is covert, involving only internal rehearsal without movement of the articulators; in each case, it is assumed that phonetic representations of speech are engaged during task performance. Covert speaking protocols have often been used to avoid contamination of images with movement-related artefacts, but comparisons of overt and covert speech have revealed a number of differences in activation patterns in regions associated with motor control and auditory perception (Riecker, Ackermann, Wildgruber, Dogil, et al., 2000; Palmer et al., 2001; Shuster and Lemieux, 2005), including reduced activity in the motor cortex, auditory cortex, and superior cerebellar cortex for covert speech. Second, some studies use real words – entries in the speaker’s mental lexicon¹ – while others use nonwords, typically comprising phonotactically legal phoneme sequences in the speaker’s language (i.e., forming “pseudowords”). The latter are often used to control for effects related to lexical retrieval and/or semantic processes; such studies therefore may provide a purer probe for speech motor control processes. However, since pseudowords lack the long-term memory representations available for words, their production may also tax short-term phonological working memory more heavily than word production (Gathercole, 1995) and may thus increase task difficulty. These changes may result not only in a higher rate of speech errors during task performance but also in domain-general increases in cortical activity (e.g., Fedorenko et al., 2013).
Third, speech in the scanner may be guided by online reading processes, cued by orthographic display, repeated after auditory presentation of the target sounds, or triggered by other external cues such as pictures. Each of these cues leads to expected responses in different brain areas and pathways (such as those involved in reading or speech perception), and requires careful consideration of control conditions. Finally, the length of the speech stimulus can range from a single vowel or syllable to full sentences, with the latter engaging areas of the brain responsible for syntax and other higher-level language processes.

In most cases, the speech materials in speech production studies are parameterized according to phonological and/or phonetic variables of interest. For example, comparing the neural correlates associated with the production of sound sequences contrasted by their complexity (in terms of phonemic content or articulatory requirements) highlights the brain regions important for representing and executing complex speech patterns. Modern techniques such as repetition suppression fMRI (Grill-Spector et al., 2006) and multi-voxel pattern analysis (Norman et al., 2006) have recently been used to help determine the nature of speech-related neural representations in a given brain region, helping to tease apart domain-general activations from speech-specific neural codes.
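To make the logic of multi-voxel pattern analysis more concrete, the following minimal Python sketch (not taken from any of the studies cited here) implements one simple variant: a correlation-based nearest-centroid classifier evaluated with leave-one-out cross-validation. The voxel patterns are synthetic, and all names (`correlation_classify`, `leave_one_out_accuracy`, the syllable labels) are hypothetical. Real analyses typically classify trial- or run-wise response estimates, cross-validate across scanner runs, and assess significance against a permutation-based null distribution.

```python
import numpy as np


def correlation_classify(train_patterns, train_labels, test_pattern):
    """Return the label whose mean training pattern correlates best with test_pattern."""
    best_label, best_r = None, -np.inf
    for label in sorted(set(train_labels)):
        rows = [i for i, lab in enumerate(train_labels) if lab == label]
        centroid = train_patterns[rows].mean(axis=0)   # mean voxel pattern for this class
        r = np.corrcoef(centroid, test_pattern)[0, 1]  # Pearson r computed across voxels
        if r > best_r:
            best_label, best_r = label, r
    return best_label


def leave_one_out_accuracy(patterns, labels):
    """Hold out each trial in turn, classify it from the remaining trials, return fraction correct."""
    n_trials = len(labels)
    n_correct = 0
    for held_out in range(n_trials):
        keep = [i for i in range(n_trials) if i != held_out]
        predicted = correlation_classify(
            patterns[keep], [labels[i] for i in keep], patterns[held_out]
        )
        n_correct += int(predicted == labels[held_out])
    return n_correct / n_trials


# Synthetic demo (hypothetical data): two syllables, each evoking a fixed
# voxel pattern in a region of interest, plus trial-by-trial noise.
rng = np.random.default_rng(0)
n_voxels, trials_per_syllable = 50, 10
prototypes = {"ba": rng.normal(size=n_voxels), "ku": rng.normal(size=n_voxels)}
labels = [syl for syl in prototypes for _ in range(trials_per_syllable)]
patterns = np.array([prototypes[syl] + 0.5 * rng.normal(size=n_voxels) for syl in labels])

accuracy = leave_one_out_accuracy(patterns, labels)
print(f"leave-one-out accuracy: {accuracy:.2f} (chance = 0.50)")
```

Accuracy reliably above chance would indicate that a region’s activity patterns carry information distinguishing the two syllables even if its mean activation level did not differ between them; this is the property that allows pattern analysis to separate speech-specific codes from undifferentiated, domain-general activation.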

An important additional focus involves the integration of sensory and motor processes, which is fundamental to acquiring and maintaining speech and to identifying and correcting errors online. The coupling of these neural components is described in more detail later in this chapter (see “Neurocomputational modeling of speech production”). Functional imaging studies have attempted to identify components underlying sensorimotor integration in speech by comparing activity during speech listening with activity during speech production or rehearsal (Buchsbaum et al., 2001; Hickok et al., 2003, 2009) and through the online use of altered auditory or somatosensory feedback (e.g., Hashimoto and Sakai, 2003; Tremblay et al., 2003; Heinks-Maldonado et al., 2006; Tourville et al., 2008; Golfinopoulos et al., 2011). Together, neuroimaging results have provided a detailed, though still incomplete, view of the neural bases of speech production.

Here we review current understanding of the neural bases of speech production in neurologically healthy speakers. Speaking engages a distributed set of brain regions across the cerebral cortex and subcortical nuclei in order to generate the signals transmitted along the cranial nerves that activate the muscles involved in articulation. In this section we first discuss key subcortical structures, followed by cortical regions that are activated during speech production tasks.

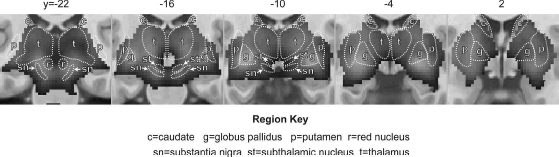

Subcortical brain regions reliably activated during speech production are illustrated in Figure 6.1, which shows regions of the basal ganglia and thalamus,² and Figure 6.2, which shows the cerebellum. Blood oxygenation level dependent (BOLD) fMRI responses in the brain regions shown in the dark gray continuum are significantly higher during speech production (reading aloud from an orthographic cue) than during a silent control task (viewing letter strings or non-letter characters approximately matched to the text viewed in the speech task). Statistical maps were derived from a pooled analysis (Costafreda, 2009) of eight speech production studies that together include data from 92 unique neurologically healthy speakers reading aloud one- or two-syllable words or nonwords. Not pictured are the cranial nerve nuclei that contain the motoneurons that activate the vocal tract musculature.

Neural signals are transmitted to the articulators along the cranial nerves, primarily involving 6 of the 12 cranial nerve bundles. These fibers originate in the cranial nerve nuclei within the brainstem. A primary anatomical subdivision can be made among the control of vocal fold vibration, respiration, and articulation. The intrinsic laryngeal muscles are important during vocal fold opening, closure, and lengthening, and are innervated by motoneurons originating in the nucleus ambiguus, which form the external branch of the superior laryngeal nerve and the recurrent laryngeal nerve. The extrinsic laryngeal muscles are primarily engaged in modulations of pitch and receive projections from motoneurons located near the caudal hypoglossal nucleus (Jürgens, 2002; Simonyan and Horwitz, 2011). Motoneuron signals project to the laryngeal muscles via the Xth and XIth cranial nerves (Zemlin, 1998). Supralaryngeal articulatory information is carried via nerves originating in the trigeminal nucleus (forming the Vth nerve) and the facial motor nucleus (forming the VIIth nerve) in the pons, and from the hypoglossal nucleus in the medulla (forming the XIIth cranial nerve). These motor nuclei receive projections mainly from primary motor cortex, which provides the “instructions” for moving the articulators via a set of coordinated muscle activations. The VIIIth cranial nerve, which transmits auditory (as well as vestibular) information from the inner ear to the cochlear nucleus in the brainstem, is also relevant for speech production, forming the first neural component in the auditory feedback pathway involved in hearing one’s own speech.

Brain imaging studies are limited by the available spatial resolution and rarely report activation of the small cranial nerve nuclei; furthermore, many studies do not even include these nuclei (or other motor nuclei located in the spinal cord important for respiratory control) within the imaging field of view. Activation of the pons (where many of the cranial nerve nuclei reside) is a relatively common finding in studies involving overt articulation (Paus et al., 1996; Riecker, Ackermann, Wildgruber, Dogil, et al., 2000; Bohland and Guenther, 2006; Christoffels et al., 2007), but these results typically lack the spatial resolution to resolve individual motor nuclei.

The basal ganglia are a group of subcortical nuclei that heavily interconnect with the frontal cortex via a series of largely parallel cortico-basal ganglia-thalamo-cortical loops (Alexander et al., 1986; Alexander and Crutcher, 1990; Middleton and Strick, 2000). The striatum, comprising the caudate nucleus and putamen, receives input projections from a wide range of cortical areas. Prefrontal areas project mostly to the caudate, and sensorimotor areas to the putamen. A “motor loop” involving sensorimotor cortex and the SMA, passing largely through the putamen and ventrolateral thalamus (Alexander et al., 1986), is especially engaged in speech motor output processes. fMRI studies demonstrate that components of this loop are active even for production of individual monosyllables (Ghosh et al., 2008).

Figure 6.1 demonstrates that speech is accompanied by bilateral activity throughout much of the basal ganglia and thalamus, with a peak response in the globus pallidus, an output nucleus of the basal ganglia. Caudate activity is predominantly localized to the anterior, or head, region. Significant activation is shown throughout the thalamus bilaterally, with the strongest response in the ventrolateral nucleus (image at y = −16). An additional peak appears in the substantia nigra (y = −22), which forms an important part of the cortico-basal ganglia-thalamo-cortical loop, providing dopaminergic input to the striatum and GABAergic input to the thalamus.

The architecture of basal ganglia circuits is suitable for selecting one output from a set of competing alternatives (Mink and Thach, 1993; Mink, 1996; Kropotov and Etlinger, 1999), a property that is formalized in several computational models of basal ganglia function (e.g., Redgrave et al., 1999; Brown et al., 2004; Gurney et al., 2004). Due to the overall degree of convergence and fan-out of thalamo-cortical projections, basal ganglia circuits involved in speech are not likely to be involved in selecting the precise muscle patterns that drive articulation. Instead, as discussed later, the motor loop, which includes the SMA, is likely involved in the selection and initiation of speech motor programs represented elsewhere in the neocortex. A higher-level loop, traversing through the caudate nucleus and interconnecting with more anterior cortical areas, may also be involved in selection processes during speech planning and phonological encoding (as implemented in the GODIVA model described later in this chapter; Bohland et al., 2010).
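The selection property can be illustrated with a deliberately simplified Python sketch. It is not an implementation of Redgrave et al. (1999), Brown et al. (2004), or Gurney et al. (2004); the function `gate_channels` and its parameters (`tonic`, `surround`, `threshold`) are hypothetical. Each channel stands for one candidate motor program. The output nucleus tonically inhibits every channel; a channel’s own input reduces its inhibition (a focused, on-center effect), while the summed input of its competitors increases it (a diffuse, off-surround effect). A program is released only when its net inhibition falls below threshold.

```python
import numpy as np


def gate_channels(saliences, tonic=0.5, surround=0.5, threshold=0.0):
    """Toy selection by disinhibition (hypothetical parameters, not a published model).

    saliences: input strength for each competing channel (e.g., candidate motor programs).
    Returns the indices of released channels and the net inhibition on every channel.
    """
    s = np.asarray(saliences, dtype=float)
    competitors = s.sum() - s                        # summed input to all *other* channels
    inhibition = tonic - s + surround * competitors  # own input lowers, competitors raise inhibition
    released = np.flatnonzero(inhibition < threshold).tolist()
    return released, inhibition


print(gate_channels([0.9, 0.3, 0.2])[0])  # one clearly dominant candidate -> [0]
print(gate_channels([0.9, 0.9, 0.2])[0])  # two equally strong candidates -> []
print(gate_channels([0.3, 0.1, 0.0])[0])  # no candidate strong enough -> []
```

With one dominant candidate only that channel is released; with two equally strong candidates neither is, and with weak input nothing is. The sketch thus gates the initiation of one program among competitors without specifying anything about that program’s muscle-level content, in keeping with the division of labor described above.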

While the precise functional contributions of the basal ganglia and thalamus in speech motor control are still debated, it is clear that damage and/or electrical stimulation to these regions is associated with speech disturbances. For example, Pickett et al. (1998) reported the case study of a woman with bilateral damage to the putamen and head of the caudate nucleus whose speech was marked by a general articulatory sequencing deficit, with a particular inability to rapidly switch from one articulatory target to the next. Electrical stimulation studies (for a review, see Johnson and Ojemann, 2000) have suggested involvement of the left ventrolateral thalamus (part of the motor loop described earlier) in the motor control of speech, including respiration. Schaltenbrand (1975) reported that stimulation of the anterior nuclei of the thalamus in some subjects gave rise to compulsory speech that they could not inhibit. Stimulation of the dominant head of the caudate has also been shown to evoke word production (Van Buren, 1963), and Crosson (1992) notes similarities between the results of stimulation in the caudate and the anterior thalamic nuclei, which are both components of a higher-level cortico-basal ganglia-thalamo-cortical loop with the prefrontal cortex. This suggests that these areas may serve similar functions, and that basal ganglia loops may be critical for the maintenance and release of a speech/language plan.

Functional imaging of the basal ganglia during speech has not always provided consistent results, but a few notable observations have been made. For example, striatal activity appears to decrease with increased speaking rate for both covert (Wildgruber et al., 2001) and overt speech (Riecker et al., 2006), in contrast to the approximately linear positive relationship between speech rate and BOLD response in cortical speech areas. Bohland and Guenther (2006) found an increased response in the putamen bilaterally when subjects produced three-syllable sequences overtly (compared to preparation alone). This increase coincided with additional motor cortical activation and likely reflects engagement of the motor loop described earlier. Furthermore, when stimuli were made more phonologically complex, activation increased in the anterior thalamus and caudate nucleus, as well as portions of prefrontal cortex. Similarly, Sörös et al. (2006) showed that caudate activity was increased for polysyllabic vs. monosyllabic utterances. The increased engagement of this higher-level loop is likely due to the increased sequencing/planning load for these utterances. Basal ganglia circuits also appear to be critical for speech motor learning, as they are for non-speech motor skill learning (Doyon et al., 2009). For example, individuals with Parkinson’s disease exhibit deficits in learning novel speech utterances (Schulz et al., 2000; Whitfield and Goberman, 2017). Furthermore, in healthy adults learning to produce syllables containing phonotactically illegal consonant clusters (e.g., /ɡvazf/), activity in the left globus pallidus was greater for novel syllables than for syllables that had been previously practiced (Segawa et al., 2015).

While thalamic activation is often reported in neuroimaging studies of speech, localization of activity to specific nuclei within the thalamus is typically imprecise because of their small size and close proximity. The spatial resolution of functional neuroimaging data is often insufficient to distinguish activity from neighboring nuclei. Additionally, the original data are usually spatially smoothed, further limiting their effective resolution. Thus, the specific roles of individual nuclei within the larger speech production system remain poorly understood. Thalamic activations (primarily in the anterior and ventrolateral subregions) in neuroimaging studies have been associated with a number of processes relevant for speech, including overt articulation (e.g., Riecker et al., 2005; Bohland and Guenther, 2006; Ghosh et al., 2008), volitional control of breathing (e.g., Ramsay et al., 1993; Murphy et al., 1997), swallowing (e.g., Malandraki et al., 2009; Toogood et al., 2017), and verbal working memory (e.g., Koelsch et al., 2009; Moore et al., 2013). While the thalamus is often viewed simply as a relay station for information transmitted to or from the cerebral cortex, analysis of the circuitry involved in nonhuman primates (Barbas et al., 2013) suggests this perspective is overly simplistic. Further work is needed to better delineate the contributions of the ventral lateral and ventral anterior portions of the thalamus to speech production.

Cerebellar circuits have long been recognized to be important for motor learning and fine motor control, including control of articulation (Holmes, 1917). Cerebellar lesions can cause ataxic dysarthria, a disorder characterized by inaccurate articulation, prosodic excess, and phonatory-prosodic insufficiency (Darley et al., 1975). Damage to the cerebellum additionally results in increased durations of sentences, words, syllables, and phonemes (Ackermann and Hertrich, 1994; Kent et al., 1997). The classical view of the cerebellum’s involvement in the control of speech is in regulating fine temporal organization of the motor commands necessary to produce smooth, coordinated productions over words and sentences (Ackermann, 2008), particularly during rapid speech. In addition to a general role in fine motor control, the cerebellum is implicated in the control of motor sequences (Inhoff et al., 1989), possibly in the translation of a programmed sequence into a fluent motor action (Braitenberg et al., 1997). Furthermore, it is considered to be an important locus of timing (Inhoff et al., 1989; Keele and Ivry, 1990; Ackermann and Hertrich, 1994; Ivry, 1997) and sensory prediction (Blakemore et al., 1998; Wolpert et al., 1998; Knolle et al., 2012). Like the basal ganglia, the cerebellum is heavily interconnected with the cerebral cortex via the thalamus, with particularly strong projections to the motor and premotor cortices.

Neuroimaging studies have helped provide a more refined picture of cerebellar processing in speech, with different loci of cerebellar activation associated with distinct functional roles. The most commonly activated portion of the cerebellum during speech lies in the superior cerebellar cortex. This site may actually contain two or more distinct subregions, including an anterior vermal region in cerebellar lobules IV and V and a more lateral and posterior region spanning lobule VI and Crus I of lobule VII (Figure 6.2; Riecker, Ackermann, Wildgruber, Meyer, et al., 2000; Wildgruber et al., 2001; Riecker et al., 2002; Ghosh et al., 2008). Lesion studies implicate both the anterior vermal region (Urban et al., 2003) and the more lateral paravermal region (Ackermann et al., 1992) in ataxic dysarthria. The superior cerebellum appears to be particularly important for the rapid temporal organization of speech, showing a step-wise increase in response for syllable rates between 2.5 and 4 Hz (Riecker et al., 2005). These areas are also more active during overt compared to covert production and for complex syllables (i.e., those containing consonant clusters) compared to simple syllables (e.g., Bohland and Guenther, 2006).

The more lateral portions of the superior cerebellar cortex are likely involved in higher-order processes as compared to the phonetic-articulatory functions of the more medial regions (e.g., Leiner et al., 1993). This hypothesis is consistent with work by Durisko and Fiez (2010) suggesting that the superior medial cerebellum plays a role in general speech motor processing, with significantly greater activation during overt vs. covert speech, whereas no significant difference in activation was observed in the superior lateral cerebellum. Furthermore, the superior lateral region, as well as an inferior region (lobule VIIB/VIII, discussed further below), was more engaged by a verbal working memory task than by the speaking tasks, suggesting a role for these regions in higher-level representations of the speech plan (Durisko and Fiez, 2010). The involvement of lateral regions of the cerebellar cortex in speech coding was also found in an experiment using fMRI repetition suppression³ that showed adaptation to repeated phoneme-level units in the left superior lateral cerebellum, and to repeated supra-syllabic sequences in the right lateral cerebellum (Peeva et al., 2010).

Abundant evidence has shown that the cerebellum is engaged not just in motor learning and online motor control, but also in cognitive functions including working memory and language (Schmahmann and Pandya, 1997; Desmond and Fiez, 1998). Such a role is also supported by clinical cases in which cerebellar lesions give rise to deficits in speech planning and short-term verbal rehearsal (Silveri et al., 1998; Leggio et al., 2000; Chiricozzi et al., 2008). Ackermann and colleagues have suggested that the cerebellum serves two key roles in both overt and covert speech production: (a) temporal organization of the sound structure of speech sequences, and (b) generation of a pre-articulatory phonetic plan for inner speech (Ackermann, 2008). As noted throughout this chapter, each of these processes also likely engages a number of additional brain structures. Considerable evidence, however, points to the cerebellum being involved in pre-articulatory sequencing of speech, with the more lateral aspects engaged even during covert rehearsal (Riecker, Ackermann, Wildgruber, Dogil, et al., 2000; Callan et al., 2006).

A separate activation locus in the inferior cerebellar cortex (in or near lobule VIII, particularly in the right hemisphere) has been noted in some studies of speech and is evident in the pooled analysis results shown in Figure 6.2. Bohland and Guenther (2006) reported increased activity in this region for speech sequences composed of three distinct syllables, which subjects had to encode in short-term memory prior to production, compared to sequences composed of the same syllable repeated three times. In contrast with the superior cerebellar cortex, the inferior region did not show increased activity for more complex syllables (e.g., “stra” vs. “ta”) compared to simple syllables, suggesting that it is involved in speech sequencing at the supra-syllabic level without regard for the complexity of the individual syllable “chunks.” Further support for this view comes from the working memory studies of Desmond and colleagues. Desmond et al. (1997) reported both a superior portion (corresponding to lobule VI/Crus I) and an inferior portion (right-lateralized lobule VIIB) of the cerebellum that showed load-dependent activations in a verbal working memory task, but only the superior portions showed the load-dependent effects in a motoric rehearsal task (finger tapping) that lacked working memory storage requirements. Chen and Desmond (2005) extended these results to suggest that lobule VI/Crus I works in concert with frontal regions for mental rehearsal, while lobule VIIB works in concert with the parietal lobe (BA40) as a phonological memory store.

Increased activation of the right inferior posterior cerebellum (lobule VIII) was also noted in an experiment in which auditory feedback (presented over headphones compatible with magnetic resonance imaging) was unexpectedly perturbed during speech (Tourville et al., 2008). A similar bilateral effect in lobule VIII was also observed during somatosensory perturbations using a pneumatic bite block (Golfinopoulos et al., 2011). While further study is required, these results suggest a role for lobule VIII in the monitoring and/or adjustment of articulator movements when sensory feedback is inconsistent with the speaker’s expectations. However, it is not just the inferior cerebellum that is sensitive to sensory feedback manipulations; using a multi-voxel pattern analysis approach, Zheng et al. (2013) demonstrated that the bilateral superior cerebellum responds consistently across different types of distorted auditory feedback during overt articulation.

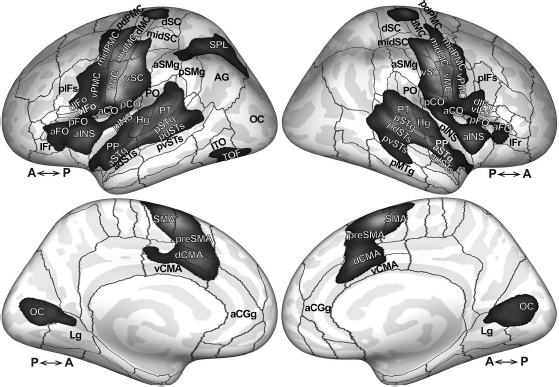

The bulk of the neuroimaging literature on speech and language has focused on processing in the cerebral cortex. The cortical regions reliably active during our own speech production studies are shown in Figure 6.3. The statistical maps were generated from the pooled individual speech-baseline contrast volumes described earlier. For the cerebral cortex, the t-test for significant differences was performed vertex by vertex after mapping individual contrast data to a common cortical surface representation using FreeSurfer (Dale et al., 1999; Fischl et al., 1999).
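The vertex-wise test amounts to a one-sample t statistic computed independently at every surface vertex, t = mean / (s / √n), over the n subjects’ contrast values, where s is the sample standard deviation. The minimal Python sketch below assumes the contrast data have already been resampled to a common surface (the step performed with FreeSurfer) and stored as a subjects × vertices array; the function name is hypothetical, and the sketch omits the correction for multiple comparisons that any whole-brain statistical map requires.

```python
import numpy as np


def vertexwise_one_sample_t(contrasts):
    """One-sample t statistic at each vertex (hypothetical helper).

    contrasts: array of shape (n_subjects, n_vertices); each row is one subject's
    speech-minus-baseline contrast already mapped to a common cortical surface.
    """
    x = np.asarray(contrasts, dtype=float)
    n_subjects = x.shape[0]
    mean = x.mean(axis=0)                               # group mean at each vertex
    sem = x.std(axis=0, ddof=1) / np.sqrt(n_subjects)   # standard error of the mean at each vertex
    return mean / sem                                   # t with n_subjects - 1 degrees of freedom


# Toy example: 3 subjects x 2 vertices.
t = vertexwise_one_sample_t([[1.0, 2.0], [3.0, 2.5], [2.0, 1.5]])
print(t)  # approximately [3.46, 6.93]
```

Both vertices in the toy example have the same group mean, but the second has less between-subject variability and therefore a larger t value; reliability across speakers, not just response magnitude, determines which vertices survive thresholding in a pooled map.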

Speech production recruits a large portion of the cortical surface, including lateral primary motor cortex (vMC, midMC, aCO in Figure 6.3; see the Figure 6.3 caption and List of Abbreviations for definitions of abbreviations), premotor cortex (midPMC, vPMC), somatosensory cortex (vSC, pCO), auditory cortex (Hg, PT, aSTg, pSTg, pdSTs), medial prefrontal cortex (SMA, preSMA, dCMA, vCMA), and inferior frontal gyrus (dIFo, vIFo, aFO, pFO). This set of regions, referred to hereafter as the cortical “speech network,” is consistently activated during speech production, even for simple, monosyllabic speech tasks (e.g., Sörös et al., 2006; Ghosh et al., 2008). The speech network is largely bilateral. In the detailed description that follows, however, we describe evidence of lateralized contributions from some regions. Bilateral activity in the middle cingulate gyrus (dCMA, vCMA) and the insula (aINS, pINS) is also apparent in Figure 6.3. While clear in this pooled analysis, significant speech-related activity in the cingulate and insula is noted less reliably in individual studies than activity in the core regions listed earlier. After describing the most consistently activated cortical areas, we discuss these and other regions that are less consistently active during speech and some of the factors that might contribute to this variability.

Additional prominent activations in Figure 6.3 appear in the bilateral medial occipital cortex (OC) extending into lingual gyrus (Lg), and the left superior parietal lobule (SPL). These activations likely reflect visual processes associated with reading stimuli and not speech production, and will not be addressed here.

The strongest speech-related responses in Figure 6.3 (brightest values in the overlaid statistical map) are found along the central sulcus, the prominent anatomical landmark that divides the primary motor (BA 4) and primary somatosensory (BA 3, 1, and 2) cortices. This cluster of activity extends outward from the sulcus to include much of the lateral portion of the precentral and postcentral gyri, together referred to as Rolandic cortex, from deep within the opercular cortex dorso-medially to the omega-shaped bend in the central sulcus that marks the hand sensorimotor representation. Primary motor cortex lies anterior to the sulcus, sharing the precentral gyrus with premotor cortex (BA 6). Primary somatosensory cortex extends from the fundus of the central sulcus posteriorly along the postcentral gyrus. The representations of the speech articulators lie mostly in the ventral portion of the primary motor and somatosensory cortices, ranging approximately from the Sylvian fissure to the midpoint of the lateral surface of the cerebral cortex along the central sulcus.

Abbreviations: aCGg, anterior cingulate gyrus; aCO, anterior central operculum; adSTs, anterior dorsal superior temporal sulcus; aFO, anterior frontal operculum; Ag, angular gyrus; aINS, anterior insula; aSMg, anterior supramarginal gyrus; aSTg, anterior superior temporal gyrus; dCMA, dorsal cingulate motor area; dIFo, dorsal inferior frontal gyrus, pars opercularis; dMC, dorsal motor cortex; dSC, dorsal somatosensory cortex; Hg, Heschl’s gyrus; Lg, lingual gyrus; midMC, middle motor cortex; midPMC, middle premotor cortex; midSC, middle somatosensory cortex; OC, occipital cortex; pCO, posterior central operculum; pdPMC, posterior dorsal premotor cortex; pdSTs, posterior dorsal superior temporal sulcus; pIFs, posterior inferior frontal sulcus; pINS, posterior insula; pMTg, posterior middle temporal gyrus; pFO, posterior frontal operculum; PO, parietal operculum; PP, planum polare; preSMA, pre-supplementary motor area; pSMg, posterior supramarginal gyrus; pSTg, posterior superior temporal gyrus; PT, planum temporale; pvSTs, posterior ventral superior temporal sulcus; SMA, supplementary motor area; SPL, superior parietal lobule; vCMA, ventral cingulate motor area; vIFo, ventral inferior frontal gyrus, pars opercularis; vIFt, ventral inferior frontal gyrus, pars triangularis; vMC, ventral motor cortex; vPMC, ventral premotor cortex; vSC, ventral somatosensory cortex.

Functional imaging studies involving overt articulation yield extremely reliable activation of the Rolandic cortex bilaterally. In our own fMRI studies, the ventral precentral gyrus is typically the most strongly activated region for any overt speaking task compared to a passive baseline task, a finding consistent with a meta-analysis of PET and fMRI studies involving overt single-word reading, which found the greatest activation likelihood across studies along the precentral gyrus bilaterally (Turkeltaub, 2002). As in many areas involved in articulation, activity in the bilateral precentral gyri is strongly modulated by articulatory complexity (e.g., the addition of consonant clusters requiring rapid articulatory movements; Basilakos et al., 2017). These areas are also engaged, to a lesser extent, in covert speech or in tasks that involve preparation or covert rehearsal of speech items (e.g., Wildgruber et al., 1996; Riecker, Ackermann, Wildgruber, Meyer, et al., 2000; Bohland and Guenther, 2006).

The degree to which speech-related activation of the motor cortex is lateralized appears to be task-dependent. Wildgruber et al. (1996) identified strong left hemisphere lateralization in the precentral gyrus during covert speech. Riecker et al. (2000) confirmed this lateralization for covert speech, but found bilateral activation, with only a moderate leftward bias, for overt speech, and a right hemisphere bias for singing. Bohland and Guenther (2006) noted strong left lateralization of motor cortical activity during “no go” trials, in which subjects prepared to speak a three-syllable pseudoword sequence but did not actually produce it; on “go” trials, however, this lateralization was not statistically significant. This study also noted that the effect of increased complexity of a syllable sequence was significantly stronger in the left-hemisphere ventral motor cortex than in the right. These results support the hypothesis that preparing speech (i.e., activating a phonetic/articulatory plan) involves motor cortical cells primarily in the left hemisphere, whereas overt articulation engages motor cortex bilaterally (Bohland et al., 2010).
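Comparisons like these are often summarized with a lateralization index, LI = (L − R)/(L + R), where L and R are activation measures (for example, suprathreshold voxel counts) in homologous left and right regions. The sketch below is a minimal illustration under that assumption; the function name and the voxel counts are hypothetical and are not data from the studies cited. LI runs from −1 (entirely right-lateralized) to +1 (entirely left-lateralized).

```python
def lateralization_index(left: float, right: float) -> float:
    """Return LI = (L - R) / (L + R) for nonnegative activation measures.

    +1 means all activity is in the left hemisphere, -1 means all activity is
    in the right hemisphere, and 0 means a perfectly bilateral response.
    """
    if left < 0 or right < 0:
        raise ValueError("activation measures must be nonnegative")
    total = left + right
    if total == 0:
        raise ValueError("no activation in either hemisphere")
    return (left - right) / total


# Hypothetical suprathreshold voxel counts (illustrative only, not study data).
conditions = {
    "covert speech": (120, 30),
    "overt speech": (150, 130),
}
for name, (n_left, n_right) in conditions.items():
    print(f"{name}: LI = {lateralization_index(n_left, n_right):+.2f}")
```

With these invented counts the loop prints LI = +0.60 for the covert condition and +0.07 for the overt condition, mirroring the qualitative pattern of strong leftward bias for covert speech and near-bilateral activation for overt speech.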

Early electrical stimulation studies (Penfield and Rasmussen, 1950) suggested a motor homunculus represented along the Rolandic cortex and provided insight into the locations of speech-relevant representations. Functional imaging provides further evidence of these cortical maps. Figure 6.4 illustrates the results of recent meta-analyses of non-speech PET and fMRI tasks involving individual articulators (see also Takai et al., 2010; Guenther, 2016). Each panel shows peak (left-hemisphere) activation locations for individual articulators; the bottom right panel shows peak locations determined from two meta-analyses of speech production (Turkeltaub, 2002; Brown et al., 2005). The articulatory meta-analyses indicate a rough somatotopic organization consisting of (from dorsal to ventral) respiratory, laryngeal, lip, jaw, and tongue representations. Notably, however, representations for all of the articulators are clustered together in the ventral Rolandic cortex and overlap substantially; this overlap of articulatory representations in sensorimotor cortex likely contributes to the high degree of inter-articulator coordination present in fluent speech.

The supplementary motor area (SMA) is a portion of the premotor cortex located on the medial cortical surface anterior to the precentral sulcus (see Figure 6.3). It contains at least two subregions that can be distinguished on the basis of cytoarchitecture, connectivity, and function: The preSMA, which lies rostral to the vertical line passing through the anterior commissure, and the SMA proper (or simply SMA) located caudal to this line (Picard and Strick, 1996). Primate neurophysiological studies have suggested that the preSMA and SMA are differentially involved in the sequencing and initiation of movements, with preSMA acting at a more abstract level than the more motoric SMA (Matsuzaka et al., 1992; Tanji and Shima, 1994; Shima et al., 1996; Shima and Tanji, 1998a; Tanji, 2001). These areas also have distinct patterns of connectivity with cortical and subcortical areas in monkeys (Jürgens, 1984; Luppino et al., 1993), a finding supported in humans using diffusion tensor imaging (Johansen-Berg et al., 2004; Lehéricy et al., 2004). Specifically, the preSMA is heavily connected with the prefrontal cortex and the caudate nucleus, whereas the SMA is more heavily connected with the motor cortex and the putamen, again suggesting a functional breakdown with preSMA involved in higher-level motor planning and SMA with motor execution.

Microstimulation of the SMA in humans (Penfield and Welch, 1951; Fried et al., 1991) often yields involuntary vocalization, repetitions of words or syllables, speech arrest, slowing of speech, or hesitancy, indicating its contribution to speech output. Speech-related symptoms in patients with SMA lesions have been described in the literature (e.g., Jonas, 1981, 1987; Ziegler et al., 1997; Pai, 1999). Such lesions often result in a transient period of total mutism, after which patients may suffer from reduced propositional (self-initiated) speech while non-propositional (automatic) speech, such as counting or repeating words, remains nearly intact. Other problems include involuntary vocalizations, repetitions, paraphasias, echolalia, lack of prosodic variation, stuttering-like behavior, and variable speech rate, with only rare occurrences of distorted articulations. These outcomes suggest a role for the SMA in sequencing, initiating, suppressing, and/or timing speech output, but likely not in providing detailed motor commands to the articulators. Based largely on the lesion literature, Jonas (1987) and Ziegler et al. (1997) arrived at similar conclusions regarding the role of the SMA in speech production, suggesting that it aids in sequencing and initiating speech sounds, but likely not in determining their phonemic content.

Recent neuroimaging studies have begun to reveal the distinct contributions made by the SMA and preSMA to speech production. For instance, Bohland and Guenther (2006) noted that activity in the preSMA increased for sequences composed of more phonologically complex syllables, whereas activity in the SMA showed no such effect for syllable complexity, but rather its response was preferentially increased when the sequence was overtly articulated. In a study of word production, Alario et al. (2006) provided further evidence for a preferential involvement of the SMA in motor output, and suggested a further functional subdivision within the preSMA. Specifically, these authors proposed that the anterior preSMA is more involved with lexical selection and the posterior portion with sequence encoding and execution. Tremblay and Gracco (2006) likewise observed SMA involvement across motor output tasks, but found that the preSMA response increased in a word generation task (involving lexical selection) as compared to a word reading task. Further evidence for a domain-general role of the left preSMA in motor response selection during word, sentence, and oral motor gesture production has been found using transcranial magnetic stimulation (Tremblay and Gracco, 2009) and fMRI (Tremblay and Small, 2011).

Collectively, these studies suggest that the SMA is more involved in the initiation and execution of speech output, whereas the preSMA contributes to higher-level processes including response selection and possibly the sequencing of syllables and phonemes. It has been proposed that the SMA serves as a “starting mechanism” for speech (Botez and Barbeau, 1971), but it remains unclear to what extent it is engaged throughout the duration of an utterance. Using an event-related design that segregated preparation and production phases of a paced syllable repetition task, Brendel et al. (2010) found that the medial wall areas (likely including both SMA and preSMA) became engaged when a preparatory cue was provided, and that the overall BOLD response was skewed toward the initial phase of extended syllable repetition periods; however, activity was not limited to the initial period but extended throughout the production period as well, suggesting some ongoing involvement throughout motor output. In a study probing the premotor speech areas using effective connectivity (which estimates the strength of influence between brain areas during a task), Hartwigsen et al. (2013) found that the optimal network arrangement (of 63 possible networks) placed the preSMA as the source of driving input (i.e., the starting mechanism) within the premotor network for pseudoword repetition. Bilateral preSMA was also more activated and provided significantly stronger facilitatory influence on dorsal premotor cortex during pseudoword repetition compared to word repetition, presumably due to an increased demand for sequencing speech sounds in unfamiliar pseudowords.

As more data accumulate, the overall view of the contribution of the medial premotor areas in speech motor control is becoming clearer, and is now being addressed in computational models. For example, the GODIVA model (Bohland et al., 2010) proposes that the preSMA codes for abstract (possibly syllable and/or word-level) sequences, while the SMA (in conjunction with the motor loop through the basal ganglia) is involved in initiation and timing of speech motor commands. This model is addressed in more detail in a later section (“The GODIVA model of speech sound sequencing”).

Because speech production generates an acoustic signal that impinges on the speaker’s own auditory system, speaking obligatorily activates the auditory cortex. As we describe in this section, this input is not simply an artifact of the speaking process; instead, it plays a critical role in speech motor control. The primary auditory cortex (BA 41), located along Heschl’s gyrus on the supratemporal plane within the Sylvian fissure, receives (via the medial geniculate body of the thalamus) the bulk of auditory afferents, and projects to surrounding higher-order auditory cortical areas (BA 42, 22) of the superior temporal gyrus, including the planum temporale and planum polare within the supratemporal plane, and laterally to the superior temporal sulcus. These auditory areas are engaged during both speech perception and speech production. It has been demonstrated with fMRI that covert speech, which does not generate an auditory signal, also activates auditory cortex (e.g., Hickok et al., 2000; Okada et al., 2003; Okada and Hickok, 2006). These results are consistent with an earlier MEG study demonstrating modulation of auditory cortical activation during covert as well as overt speech (Numminen and Curio, 1999). Notably, silent articulation (which differs from covert speech in that it includes overt tongue movements) produces significantly greater auditory cortical activity bilaterally than purely imagined speech, despite no difference in auditory input (Okada et al., 2017).

Auditory and other sensory inputs provide important information to assist in the control problems involved in speech production. Specifically, hearing one’s own speech allows the speaker to monitor productions and to make online error corrections as needed during vocalization. As described further in this chapter, modifications to auditory feedback during speech lead to increased activity in auditory cortical areas (e.g., Hashimoto and Sakai, 2003; Tourville et al., 2008; Niziolek and Guenther, 2013), and this has been hypothesized to correspond to an auditory error map that becomes active when a speaker’s auditory feedback does not match his/her expectations (Guenther et al., 2006). The feedback-based control of speech is further described from a computational perspective in a later section (“The DIVA model of speech motor control”).
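The auditory error map can be pictured as a comparison between what the speaker hears and what the speaker expects to hear. The sketch below borrows the idea, from published DIVA descriptions, that auditory targets are acceptable ranges rather than single points, so that any feedback within the range yields no error. The class and function names, the formant dimensions, and the target ranges are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class TargetRange:
    """Hypothetical auditory target: an acceptable range on one dimension (Hz)."""
    low: float
    high: float


def auditory_error(feedback_hz: float, target: TargetRange) -> float:
    """Signed error between heard feedback and a target range.

    Zero anywhere inside the range; outside it, the distance to the nearest
    edge (positive if feedback is too high, negative if too low).
    """
    if feedback_hz > target.high:
        return feedback_hz - target.high
    if feedback_hz < target.low:
        return feedback_hz - target.low
    return 0.0


def error_map(feedback: dict, targets: dict) -> dict:
    """Apply auditory_error to each dimension (e.g., F1, F2) of the target."""
    return {dim: auditory_error(feedback[dim], targets[dim]) for dim in targets}


# Illustrative (invented) target ranges for one vowel.
vowel_target = {"F1": TargetRange(550.0, 650.0), "F2": TargetRange(1700.0, 1900.0)}

normal = {"F1": 600.0, "F2": 1800.0}
shifted = {"F1": 750.0, "F2": 1800.0}  # F1 feedback shifted up by 150 Hz
print(error_map(normal, vowel_target))   # {'F1': 0.0, 'F2': 0.0}
print(error_map(shifted, vowel_target))  # {'F1': 100.0, 'F2': 0.0}
```

Under unaltered feedback the map stays silent; when F1 is shifted upward, only the F1 entry becomes nonzero, which is the kind of mismatch signal hypothesized to drive increased auditory cortical activity under altered feedback.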

A related phenomenon, referred to as “speech-induced suppression,” is observed in studies using MEG or EEG. In this effect, activation in the auditory cortex (measured by the amplitude of a stereotyped response approximately 100 ms after sound onset) is reduced for self-produced speech relative to hearing the speech of others or a recorded version of one’s own voice when not speaking (Numminen et al., 1999; Curio et al., 2000; Houde et al., 2002). The magnitude of speech-induced suppression may be related to the degree of match between auditory expectations and incoming sensory feedback. This idea is supported by studies that alter feedback (by adding noise or shifting the frequency spectrum) and report a reduced degree of suppression when feedback does not match the speaker’s expectation (Houde et al., 2002; Heinks-Maldonado et al., 2006), and by a study demonstrating that less prototypical productions of a vowel are associated with reduced speech-induced suppression compared to prototypical productions (Niziolek et al., 2013). PET and fMRI studies have also demonstrated reduced responses in superior temporal areas to self-initiated speech relative to hearing another voice, consistent with an active role for auditory cortex in speech motor control (Hirano et al., 1996, 1997; Takaso et al., 2010; Zheng et al., 2010; Christoffels et al., 2011). Using multi-voxel pattern analysis, Markiewicz and Bohland (2016) found a region in the posterior superior temporal sulcus bilaterally, in an area consistent with the “phonological processing network” proposed by Hickok and Poeppel (2007), whose response patterns were predictive of the vowel that a subject heard and repeated aloud. It is possible that this region encodes speech sounds at an abstract, phonological level, but this study could not specifically rule out a more continuous, auditory phonetic representation. For further discussion of the phonetics–phonology interface, see Chapter 13 of this volume.
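Multi-voxel pattern analysis asks whether the spatial pattern of responses across voxels carries information about a stimulus, typically by training a classifier on some trials and testing it on held-out trials. The sketch below illustrates only that general logic, using a nearest-centroid classifier with leave-one-out cross-validation on synthetic data. The classifier choice, the simulated "voxels," and every parameter are assumptions for illustration; this is not the analysis pipeline used by Markiewicz and Bohland (2016).

```python
import math
import random


def simulate_patterns(vowels=("i", "a", "u"), trials_per_vowel=10, n_voxels=20,
                      signal=1.0, noise=1.0, seed=0):
    """Synthetic voxel patterns: a fixed mean pattern per vowel plus trial noise."""
    rng = random.Random(seed)
    means = {v: [rng.gauss(0.0, signal) for _ in range(n_voxels)] for v in vowels}
    patterns, labels = [], []
    for v in vowels:
        for _ in range(trials_per_vowel):
            patterns.append([m + rng.gauss(0.0, noise) for m in means[v]])
            labels.append(v)
    return patterns, labels


def centroid(patterns):
    """Voxel-wise mean of a list of equal-length patterns."""
    n = len(patterns)
    return [sum(values) / n for values in zip(*patterns)]


def leave_one_out_accuracy(patterns, labels):
    """Nearest-centroid decoding accuracy with leave-one-out cross-validation."""
    correct = 0
    for i, (held_out, true_label) in enumerate(zip(patterns, labels)):
        # Train: class centroids from every trial except the held-out one.
        training = {}
        for j, (pattern, label) in enumerate(zip(patterns, labels)):
            if j != i:
                training.setdefault(label, []).append(pattern)
        centroids = {label: centroid(ps) for label, ps in training.items()}
        # Test: assign the held-out trial to the closest class centroid.
        predicted = min(centroids, key=lambda lab: math.dist(held_out, centroids[lab]))
        correct += int(predicted == true_label)
    return correct / len(patterns)


print(leave_one_out_accuracy(*simulate_patterns(signal=1.0)))
print(leave_one_out_accuracy(*simulate_patterns(signal=0.0)))
```

With three vowels, chance accuracy is about one third. Accuracy reliably above chance on held-out trials is the kind of evidence taken to show that a region's response pattern is "predictive" of the vowel; setting `signal=0.0` removes all vowel information, and accuracy should fall back toward chance.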

An area deep within the posterior extent of the Sylvian fissure at the junction of the parietal and temporal lobes (Area Spt) has been suggested to serve a sensorimotor integration role in speech production (Buchsbaum et al., 2001; Hickok et al., 2003, 2009). This area, which straddles the boundary between the planum temporale and parietal operculum (PT and PO in Figure 6.3, respectively), is activated both during passive speech perception and during speech production (both overt and covert), suggesting its involvement in both motor and sensory processes. While not exclusive to speech acts, Area Spt appears to be preferentially activated for vocal tract movements as compared to manual movements (Pa and Hickok, 2008). A recent state feedback control model of speech production proposes that this region implements a coordinate transform that allows mappings between articulatory and auditory phonetic representations of speech syllables (Hickok et al., 2011; Hickok, 2012). Such a role is largely consistent with proposals within the DIVA model (described in more detail in “The DIVA model of speech motor control”), although details differ in terms of the proposed mechanisms and localization.

The posterior portion of the inferior frontal gyrus (IFG) is classically subdivided into two regions: The pars opercularis (IFo; BA 44) and pars triangularis (IFt; BA 45). Left hemisphere IFo and IFt are often collectively referred to as “Broca’s Area,” based on the landmark study by Paul Broca that identified these areas as crucial for language production (Broca, 1861; see also Dronkers et al., 2007, for a more recent analysis of Broca’s patients’ lesions). Penfield and Roberts (1959) demonstrated that electrical stimulation of the IFG could give rise to speech arrest, offering direct evidence for its role in speech motor output. Later stimulation studies indicated the involvement of left inferior frontal areas in speech comprehension (Schäffler et al., 1993) in addition to speech production. The left IFG has also been implicated in reading (e.g., Pugh et al., 2001), grammatical aspects of language comprehension (e.g., Heim et al., 2003; Sahin et al., 2006), and a variety of tasks outside of the domain of speech and language production (see, for example, Fadiga et al., 2009).

Although likely also participating in higher-level aspects of language, the posterior portion of left IFG and the adjoining ventral premotor cortex (vPMC) are commonly activated in imaging studies of speech tasks as simple as monosyllabic pseudoword production (Guenther et al., 2006; Ghosh et al., 2008). The DIVA model (described in further detail in the next section) suggests that the left IFG and adjoining vPMC contain speech sound map cells that represent learned syllables and phonemes without regard for semantic content and that, when activated, generate the precise motor commands for the corresponding speech sounds. This view is consistent with the observation that activity in the left lateral premotor cortex decreases with learning of syllables containing phonotactically illegal consonant clusters, suggesting that practice with new sound sequences leads to the development of new, more efficient motor programs in this area (Segawa et al., 2015). This proposed role for left IFo/vPMC is similar, but not identical, to the view put forth by Indefrey and Levelt (2004), who suggested that this region serves as an interface between phonological and phonetic encoding, with the left posterior IFG responsible for syllabification (forming syllables from strings of planned phonological segments). This phonological role of the left IFG extends to both the production and perception domains; for example, based on a large review of the PET and fMRI literature, Price (2012) suggested that both speech and non-speech sounds activate left IFG in tasks where they need to be segmented and held in working memory. Papoutsi et al. (2009) conducted an experiment using high- and low-frequency pseudoword production, aiming to clarify whether the left IFG contributes to phonological or to phonetic/articulatory processes in speech production. Their results revealed a differential response profile within the left IFG, suggesting that the posterior portion of BA 44 is functionally segregated along a dorsal–ventral gradient, with the dorsal portion involved in phonological encoding (consistent with Indefrey and Levelt, 2004) and the ventral portion in phonetic encoding (consistent with Hickok and Poeppel, 2004; Guenther et al., 2006). Further evidence that this region interfaces phonological representations with motoric representations comes from Hillis et al. (2004), who demonstrated that damage to the left (but not right) hemisphere posterior IFG is associated with apraxia of speech, a speech motor disorder that is often characterized as a failure or inefficiency in translating from well-formed phonological representations of words or syllables into previously learned motor information (McNeil et al., 2009).

While most evidence points to left-hemisphere dominance for IFo and IFt in speech and language function, a role for the right hemisphere homologues in speech motor control has also recently emerged. As previously noted, unexpected perturbations of either auditory (Toyomura et al., 2007; Tourville et al., 2008) or somatosensory (Golfinopoulos et al., 2011) feedback during speech (compared to unperturbed feedback) give rise to differential sensory cortical activations, and they also elicit prominent activation of right hemisphere ventral premotor cortex and posterior IFG. This activity is hypothesized to correspond to the compensatory motor actions invoked by subjects under such conditions, reflecting a right-lateralized feedback control system (Tourville and Guenther, 2011), in contrast to a left-lateralized feedforward control system.

Thus far we have concentrated on cortical areas that are reliably active during speech tasks. Some regions of the cerebral cortex, however, appear to play a role in speech production but are not always significantly active compared to a baseline task in speech neuroimaging studies. These include the inferior frontal sulcus, inferior posterior parietal cortex, cingulate cortex, and the insular cortex. A number of factors may contribute to this, including variability in image acquisition, data analysis, statistical power, and precise speech and baseline tasks used. We next address several such areas.

The inferior frontal sulcus (IFS) separates the inferior frontal and middle frontal gyri in the lateral prefrontal cortex. In addition to a role in semantic processing (e.g., Crosson et al., 2001, 2003), the IFS and surrounding areas have been implicated in a large number of studies of language and working memory (Fiez et al., 1996; D’Esposito et al., 1998; Gabrieli et al., 1998; Kerns et al., 2004) and in serial order processing (Petrides, 1991; Averbeck et al., 2002, 2003). As previously noted, the dorsal IFG, which abuts the IFS,4 appears to be more engaged by phonological than by motor processes; the IFS likewise appears to be sensitive to phonological parameters and specifically related to phonological working memory.

Bohland and Guenther (2006) showed that strongly left-lateralized activity within the IFS and surrounding areas was modulated by the phonological composition of multisyllabic pseudoword sequences. In this task, subjects were required to encode three-syllable sequences in memory prior to the arrival of a GO signal. IFS activity showed an interaction between the complexity of the syllables within the sequence (e.g., /stra/ vs. /ta/) and the complexity of the sequence (e.g., /ta ki ru/ vs. /ta ta ta/), which is consistent with this area serving as a phonological buffer (as hypothesized in the GODIVA model, described in more detail in the next section; Bohland et al., 2010). This area was previously shown to encode phonetic categories during speech perception (Myers et al., 2009), and recent work has shown that response patterns in the left IFS are predictive of individual phonemes (vowels) that a subject heard and had to produce later in a delayed auditory repetition task (Markiewicz and Bohland, 2016). These results suggest that subjects activate prefrontal working memory representations as a consequence of speech perception processes (for maintenance or subvocal rehearsal of the incoming sounds), and that these representations may take an abstracted, phoneme-like form in the left IFS.
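A phonological buffer holds a whole planned sequence at once, yet speech must come out one item at a time. Competitive queuing is a standard mechanism for turning such a parallel plan into serial output, and it is the kind of mechanism GODIVA builds on; the code below is only a generic sketch of the idea, not the model's equations. The function names, the primacy gradient, and the activation values are hypothetical.

```python
def primacy_gradient(items, start=1.0, step=0.2):
    """Hypothetical buffer state: earlier items receive higher activation."""
    return [(item, start - step * position) for position, item in enumerate(items)]


def competitive_queuing(plan):
    """Serial readout of a parallel plan held as (item, activation) pairs.

    On each cycle the most active item wins, is sent for production, and is
    then removed (suppressed) so the next most active item can win.
    Repeated items, such as /ta ta ta/, are kept as separate entries.
    """
    pending = list(plan)
    produced = []
    while pending:
        winner = max(range(len(pending)), key=lambda k: pending[k][1])
        item, _activation = pending.pop(winner)
        produced.append(item)
    return produced


print(competitive_queuing(primacy_gradient(["ta", "ki", "ru"])))  # ['ta', 'ki', 'ru']
print(competitive_queuing([("ru", 0.2), ("ta", 0.9), ("ki", 0.5)]))  # ['ta', 'ki', 'ru']
```

Output order depends only on the relative activations in the buffer, not on the order in which items were stored, so the entire sequence can be loaded before the GO signal and then read out through repeated select-and-suppress cycles.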

The inferior posterior parietal cortex consists of the angular gyrus (BA 39) and supramarginal gyrus (BA 40). Although these areas were not found to be reliably active across word production studies in the meta-analysis of Indefrey and Levelt (2004),5 they have been implicated in a number of speech studies. Speech production tasks with a working memory component can also activate the inferior parietal lobe and/or intraparietal sulcus (e.g., Bohland and Guenther, 2006). This observation fits in well with the view of inferior parietal cortex playing a role in verbal working memory (e.g., Paulesu et al., 1993; Jonides et al., 1998; Becker et al., 1999) and may also be related to the observation that damage to left supramarginal gyrus causes impairment on phonetic discrimination tasks that involve holding one speech sound in working memory while comparing it to a second incoming sound (Caplan et al., 1995). The left inferior parietal lobe has also been shown to be activated in syllable order judgments (compared to syllable identification), further suggesting its importance in encoding verbal working memory (Moser et al., 2009). Damage to the left supramarginal gyrus and/or temporal-parietal junction gives rise to difficulties in repetition tasks (Fridriksson et al., 2010), potentially compromising part of a “dorsal pathway” for acoustic-to-articulatory translation as suggested by Hickok and Poeppel (2007).

The anterior supramarginal gyrus has also been suggested to be involved in the somatosensory feedback-based control of speech (Guenther et al., 2006). This hypothesis was corroborated by results of an experiment showing increased activation in inferior parietal areas during speech when the jaw was unexpectedly perturbed compared to non-perturbed speech (Golfinopoulos et al., 2011). This topic will be discussed further in the next section (see “The DIVA model of speech motor control”).

Although not always identified in speech neuroimaging studies, activation of the middle cingulate gyrus is clearly illustrated in Figure 6.3 (dCMA, extending into vCMA). This activity peaks in the ventral bank of the cingulate sulcus, likely part of the cingulate motor area (cf. Paus et al., 1993). The cingulate motor area has been associated with providing the motivation or will to vocalize (e.g., Jürgens and von Cramon, 1982; Paus, 2001) and with processing reward-based motor selection (Shima and Tanji, 1998b). Bilateral damage to cingulate cortex can severely impact voluntary (but not innate) vocalizations in monkeys (Jürgens, 2009). In humans, bilateral damage to cingulate cortex can result in transcortical motor aphasia, marked by a dramatically reduced motivation to speak (including reduced ability to move or speak; Németh et al., 1988), but such damage does not impair grammar or articulation of any speech that is produced (Rubens, 1975; Jürgens and von Cramon, 1982).

Buried deep within the Sylvian fissure, the insula is a continuous sheet of cortex that borders the frontal, parietal, and temporal lobes. Because of its close proximity to several areas known to be involved in speech and language processes, it has long been considered a candidate for language function (Wernicke, 1874; Freud, 1891; Dejerine, 1914; see Ardila, 1999, for a review). In the anterior superior direction, the insula borders speech motor areas in the frontal opercular region of the IFG (BA 44) and the inferior-most portion of the precentral gyrus (BA 4, 6), which contains the motor representation of the speech articulators. In the posterior superior direction, the insula borders the somatosensory representations of the speech articulators in the postcentral gyrus (BA 1, 2, 3), as well as the parietal operculum (BA 40). Inferiorly, the insula borders the auditory cortical areas of the superior temporal plane (BA 41, 42, 22). Figure 6.3 shows bilateral insula activity that extends to each of these speech-related areas. Activity is stronger and more widespread in the anterior portion (aINS) than the posterior portion (pINS), particularly in the left hemisphere.

Unlike the neocortical areas described thus far, the insular cortex is a phylogenetically older form of cortex known as “mesocortex” (along with the cingulate gyrus) and is considered a paralimbic structure. In addition to speech, a wide range of functions including memory, drive, affect, gustation, and olfaction have been attributed to the insula in various studies (Türe et al., 1999). Consistent with the placement of the insula in the paralimbic system, Benson (1979) and Ardila (1999) have argued that the insula is likely involved in motivational and affective aspects of speech and language. Damage to the insula has been shown previously to lead to deficits in speech initiation (Shuren, 1993) and motivation to speak (Habib et al., 1995).

As noted in the review by Price (2012), the opinion of the research community on the role of the insular cortex in speech has evolved several times. This area has received significant recent attention in both the neuroimaging and neurology literatures (Ardila, 1999; Türe et al., 1999; Ackermann and Riecker, 2004), largely following the lesion mapping study of Dronkers (1996). This study found that the precentral gyrus of the left insula was the only common site of overlap in a group of patients diagnosed with apraxia of speech, but was preserved in a second group with aphasia but not apraxia of speech. This region, therefore, was argued to be critical for motor programming, or translating a planned speech sound into articulatory action.6 A number of neuroimaging studies have found activation of the insula in overt speaking tasks (Wise et al., 1999; Riecker, Ackermann, Wildgruber, Dogil et al., 2000; Sakurai et al., 2001; Bohland and Guenther, 2006), even for single syllables (Sörös et al., 2006; Ghosh et al., 2008). The relatively consistent finding of greater activation of the anterior insula (either bilateral or left-lateralized, which varies across studies) for overt vs. covert speech suggests its role may be in assisting articulatory control rather than in pre-articulatory planning processes (Ackermann and Riecker, 2004). There is evidence against a role for anterior insula in the detailed planning of articulator movements; for example, Bohland and Guenther (2006) noted that activity at the level of the precentral gyrus of the anterior insula was not modulated by phonological/phonetic complexity. A separate focus of activity at the junction of the anterior insula and adjoining frontal operculum, however, was strongly modulated by complexity, which is largely consistent with other reports (Riecker et al., 2008; Shuster, 2009). These results suggest that anterior insula may enable or modulate speech motor programs, rather than store them.

Although many speech neuroimaging studies have reported insula activation, others have not, at least for some speaking conditions (e.g., Lotze et al., 2000; Riecker, Ackermann, Wildgruber, Meyer et al., 2000; Sörös et al., 2006). Furthermore, other lesion-based studies have not replicated the results of Dronkers (Hillis et al., 2004). Sörös et al. (2006) have suggested that insula activation may depend on whether the speaking condition involves repeated production of the same sounds or production of different syllables/words on each trial. Relatedly, insula recruitment may hinge on the automaticity of the speaking task, with very simple or overlearned speech tasks not necessitating its involvement, whereas more complex sequential production tasks may recruit insular cortex during or prior to articulation (Ackermann and Riecker, 2004). This role is largely consistent with a recent study using voxel-based lesion symptom mapping in a group of 33 patients with left-hemisphere strokes that related lesion site to several measures of performance on speech production tasks (Baldo et al., 2011). This study found that the superior portion of the precentral gyrus of the insula was critical for complex articulations (e.g., consonant clusters, multisyllabic words) but not for simple syllable production. The importance of the insula for speech production, however, remains contentious, with a recent individual subject analysis demonstrating weak responses of the anterior insula to overt speech (in comparison, for example, to the posterior IFG), and enhanced responses to non-speech oral movements (Fedorenko et al., 2015). Additional efforts are necessary to carefully delineate and differentially test between the various proposals in the literature about the role of the insula in speech. Such efforts may also benefit from computational/simulation studies, which can help to formalize theoretical roles and generate specific hypotheses that can be tested using neuroimaging.
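Voxel-based lesion symptom mapping, the method used by Baldo et al. (2011), relates lesion location to behavior voxel by voxel. In its simplest form, patients are split at each voxel into those with and without damage there, and the two groups' scores on a task are compared. The sketch below assumes binary lesion masks, a Welch t-test, and an invented three-voxel "brain" with six patients; the study's actual statistics and thresholds are not reproduced, and real analyses must also correct for the many voxel-wise comparisons (often by permutation testing), which is omitted here.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic for two independent samples (unequal variances)."""
    se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    if se == 0:
        raise ValueError("both groups have zero variance")
    return (mean(a) - mean(b)) / se


def vlsm_t_map(lesion_masks, scores, min_group=2):
    """One t value per voxel comparing spared vs. lesioned patients' scores.

    lesion_masks[p][v] is True if patient p has damage at voxel v. Positive t
    means patients with damage at that voxel scored lower. Voxels where either
    group has fewer than `min_group` patients are left untested (None).
    """
    if min_group < 2:
        raise ValueError("each group needs at least two patients for a variance")
    t_map = []
    for v in range(len(lesion_masks[0])):
        lesioned = [s for mask, s in zip(lesion_masks, scores) if mask[v]]
        spared = [s for mask, s in zip(lesion_masks, scores) if not mask[v]]
        if len(lesioned) < min_group or len(spared) < min_group:
            t_map.append(None)
        else:
            t_map.append(welch_t(spared, lesioned))
    return t_map


# Invented toy data: 6 patients, 3 voxels, one articulation score per patient.
masks = [
    [True, True, True],
    [True, False, False],
    [True, True, False],
    [False, False, False],
    [False, True, False],
    [False, False, False],
]
scores = [40, 45, 42, 90, 85, 88]
print(vlsm_t_map(masks, scores))
```

In this toy example, voxel 0 (damaged only in the three low scorers) receives a large positive t, voxel 1 (damaged in a mix of patients) a small one, and voxel 2 (damaged in a single patient) is not tested.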

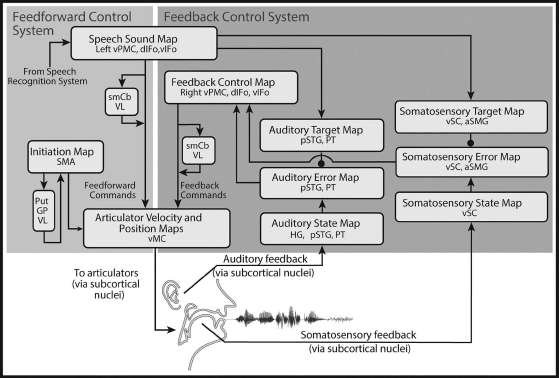

The expansive library of results from neuroimaging studies provides important insight into the roles of many brain areas in speech motor control. In isolation, however, these results do not provide an integrated, mechanistic view of how the neural circuits engaged by speech tasks interact to produce fluent speech. To this end, computational models that both (a) suggest the neural computations performed within specific modules and across pathways that link modules, and (b) propose specific neural correlates for these computational elements, help to bridge this critical gap. These models further suggest specific hypotheses that may be tested in imaging and behavioral experiments, leading to a continuous cycle of model refinement that provides a unifying account of a substantial fraction of the relevant literature. Next we briefly describe the most influential and thoroughly tested neurocomputational account of speech motor control, the DIVA model, which has been developed, tested, and refined by Guenther and colleagues over the last two decades. We also provide an introduction to an extension of the DIVA model, termed “GODIVA,” which begins to account for higher-level phonological processes involved in speech planning and their interface with speech motor control (see also Hickok, 2012; Roelofs, 2014).

The Directions Into Velocities of the Articulators (DIVA) model (recent descriptions in Guenther et al., 2006; Golfinopoulos et al., 2010; Tourville and Guenther, 2011; Guenther, 2016) provides a computational account of the neural processes underlying speech production. The model, schematized in Figure 6.5, takes the form of an adaptive neural network whose components correspond to regions of the cerebral cortex and associated subcortical structures (some of which are not shown in the figure for clarity). Each box in Figure 6.5 corresponds to a set of neurons (or map) in the model, and arrows correspond to synaptic projections that transform one type of neural representation into another. The model is implemented in computer simulations that control an articulatory synthesizer (Maeda, 1990) in order to produce an acoustic signal. The articulator movements and acoustic signal produced by the model have been shown to account for a wide range of data concerning speech development and production (see Guenther et al., 1998, 2006 for reviews). The model’s neurons (each meant to roughly correspond to a small population of neurons in the brain) are localized in a stereotactic coordinate frame to allow for direct comparisons between model simulations and functional neuroimaging experiments, and a number of model predictions about neural activity have been tested with fMRI (e.g., Ghosh et al., 2008; Tourville et al., 2008; Peeva et al., 2010; Golfinopoulos et al., 2011) and intracortical microelectrode recordings (Guenther et al., 2009). Although the model is computationally defined, the discussion here will focus on the hypothesized functions of the modeled brain regions without consideration of associated equations (see Guenther et al., 2006; Golfinopoulos et al., 2010 for mathematical details).

Abbreviations: GP, globus pallidus; Put, putamen; smCB, superior medial cerebellum; VL, ventrolateral thalamic nucleus. See Figure 6.3 caption for the definition of additional abbreviations.

According to the DIVA model, production of speech starts with activation of neurons in a speech sound map in left ventral premotor cortex and an initiation map in SMA. How these neurons themselves may be activated by a representation of a multisyllabic speech plan is described further in “The GODIVA model of speech sound sequencing.” The speech sound map includes a representation of each speech sound (phoneme or syllable) that the model has learned to produce, with the most commonly represented sound unit being the syllable (cf. the “mental syllabary” of Levelt and Wheeldon, 1994). Activation of speech sound map neurons leads to motor commands that arrive in motor cortex via two control subsystems: A feedforward control subsystem (left shaded box in Figure 6.5) and a feedback control subsystem (right shaded box). The feedback control subsystem can be further broken into two components: An auditory feedback control subsystem and a somatosensory feedback control subsystem.

The feedforward control system has two main components: One involving the ventral premotor and motor cortices along with a cerebellar loop, and one involving the SMA and associated basal ganglia motor loop. Roughly speaking, these two components can be thought of as a motor program circuit that generates the detailed muscle activations needed to produce phonemes, syllables, and words, and an initiation circuit that determines which motor programs to activate and when to activate them.

The first component of the feedforward control system involves projections from the left ventral premotor cortex to primary motor cortex, both directly and via a side loop involving the cerebellum7 (feedforward commands in Figure 6.5). These projections encode stored motor commands (or motor programs) for producing the sounds of the speech sound map; these commands take the form of time-varying trajectories of the speech articulators, similar to the concept of a gestural score (see Browman and Goldstein, 1990). The primary motor and premotor cortices are well known to be strongly interconnected (e.g., Passingham, 1993; Krakauer and Ghez, 1999). Furthermore, the cerebellum is known to receive input via the pontine nuclei from premotor cortical areas, as well as from higher-order auditory and somatosensory areas that can provide state information important for choosing motor commands (e.g., Schmahmann and Pandya, 1997), and it projects heavily to the primary motor cortex (e.g., Middleton and Strick, 1997). As previously noted (see “Neural systems involved in speech production”), damage to the superior paravermal region of the cerebellar cortex results in ataxic dysarthria, a motor speech disorder characterized by slurred, poorly coordinated speech (Ackermann et al., 1992), supporting the view that this region is involved in fine-tuning the feedforward speech motor commands, perhaps by incorporating information about the current sensorimotor state.

The second component of the feedforward system is responsible for the release of speech motor commands to the articulators. According to the model, the release of a motor program starts with activation of a corresponding cell in an initiation map that lies in the SMA. It is proposed that the timing of initiation cell activation, and therefore of motor command release, is governed by contextual inputs from the basal ganglia via the thalamus (see also “The GODIVA model of speech sound sequencing” for further details). The basal ganglia are well suited for this role given their afferents from most cortical regions of the brain, including motor, prefrontal, associative, and limbic cortices. These nuclei are also strongly and reciprocally connected with the SMA (Romanelli et al., 2005).

The auditory feedback control subsystem involves axonal projections from speech sound map cells in the left ventral premotor cortex to the higher-order auditory cortical areas; these projections embody the auditory target region for the speech sound currently being produced. That is, they represent the auditory feedback that should arise when the speaker hears himself/herself producing the sound, including a learned measure of acceptable variability. This target is compared to incoming auditory information from the auditory periphery, and if the current auditory feedback is outside the target region, neurons in the model’s auditory error map, which resides in the posterior superior temporal gyrus and planum temporale, become active. Auditory error cells project to a feedback control map in right ventral premotor cortex, which is responsible for transforming auditory errors into corrective movements of the speech articulators (feedback commands in Figure 6.5). Numerous studies suggest that sensory error representations in cerebellum influence corrective movements (e.g., Diedrichsen et al., 2005; Penhune and Doyon, 2005; Grafton et al., 2008), and the model accordingly posits that the cerebellum contributes to the feedback motor command.

The projections from the DIVA model’s speech sound map to the auditory cortical areas inhibit auditory error map cells. If the incoming auditory signal is within the target region, this inhibition cancels the excitatory effects of the incoming auditory signal. If the incoming auditory signal is outside the target region, the inhibitory target region will not completely cancel the excitatory input from the auditory periphery, resulting in activation of auditory error cells. As discussed earlier, evidence of inhibition in auditory cortical areas in the superior temporal gyrus during one’s own speech comes from several different sources, including recorded neural responses during open brain surgery (Creutzfeldt et al., 1989a, 1989b), MEG measurements (Numminen and Curio, 1999; Numminen et al., 1999; Houde et al., 2002), and PET measurements (Wise et al., 1999).
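The cancellation scheme just described can be illustrated with a minimal sketch. It is not DIVA's published formulation (see Guenther et al., 2006, for the model equations); it assumes, for illustration only, that the auditory target at a single moment is a set of independent [lo, hi] ranges over formant-like dimensions, and that the error is zero inside a range and equals the signed distance to the nearest edge outside it. The function name `auditory_error` and the formant values are hypothetical.

```python
from typing import List, Sequence, Tuple


def auditory_error(feedback: Sequence[float],
                   target: Sequence[Tuple[float, float]]) -> List[float]:
    """Hypothetical error map: zero inside the target region, signed distance outside.

    Inside [lo, hi] the target's inhibition fully cancels the excitatory input;
    outside it, the uncancelled remainder is the distance to the nearest edge.
    Positive values mean the feedback is too low, negative values too high.
    """
    error: List[float] = []
    for value, (lo, hi) in zip(feedback, target):
        if value < lo:
            error.append(lo - value)
        elif value > hi:
            error.append(hi - value)
        else:
            error.append(0.0)
    return error


# Illustrative target region for one time frame: (F1, F2) ranges in Hz (made-up values).
target_region = [(650.0, 750.0), (1100.0, 1300.0)]
print(auditory_error([700.0, 1250.0], target_region))  # [0.0, 0.0]
print(auditory_error([800.0, 1000.0], target_region))  # [-50.0, 100.0]
```

Because the model's targets are time-varying, a fuller sketch would apply the same comparison frame by frame against a sequence of ranges.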

The DIVA model also posits a somatosensory feedback control subsystem operating alongside the auditory feedback subsystem. The model’s somatosensory state map corresponds to the representation of tactile and proprioceptive information from the speech articulators in primary and higher-order somatosensory cortical areas in the postcentral gyrus and supramarginal gyrus of the inferior parietal lobe. The somatosensory target map represents the expected somatosensory consequences of producing the current sound; this target is encoded by projections from left ventral premotor cortex to the supramarginal gyrus. The model’s somatosensory error map is hypothesized to reside primarily in the anterior supramarginal gyrus, a region that has been implicated in phonological processing for both speech perception and speech production (Geschwind, 1965; Damasio and Damasio, 1980). According to the model, cells in this map become active during speech if the speaker’s tactile and proprioceptive feedback from the vocal tract deviates from the somatosensory target region for the sound being produced. The output of the somatosensory error map then propagates to motor cortex via the feedback control map in right ventral premotor cortex; synaptic projections in this pathway encode the transformation from somatosensory errors into motor commands that correct those errors.

The DIVA model also provides a computational account of the acquisition of speech motor skills. Learning in the model occurs via adjustments to the synaptic projections between cortical maps (arrows in Figure 6.5). The first learning stage involves babbling movements of the speech articulators, which generate a combination of articulatory, auditory, and somatosensory information that is used to tune the feedback control system, specifically the synaptic projections that transform auditory and somatosensory error signals into corrective motor commands. The learning in this stage is not associated with specific speech sounds; instead, these learned sensory-motor transformations are used in the production of all speech sounds learned in the next stage.
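The babbling stage can be pictured as learning how small motor changes move the sensory signal, so that a sensory error can later be converted into a corrective movement, in the spirit of the "directions into velocities" idea in the model's name. The sketch below rests on simplifying assumptions that are ours, not the model's: a hypothetical two-parameter articulator space, a linear "vocal tract" `PLANT` mapping it to two auditory features, noise-free babbling, and a least-squares estimate of that mapping whose inverse turns an auditory error into a motor correction. All names are hypothetical; DIVA's actual learned transformations are described in Guenther et al. (2006).

```python
import random
from typing import List

Matrix = List[List[float]]
Vector = List[float]

# Hypothetical linear "vocal tract": 2 motor parameters -> 2 auditory features.
PLANT: Matrix = [[2.0, 0.5],
                 [0.3, 1.5]]


def apply(m: Matrix, v: Vector) -> Vector:
    return [m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def inverse(m: Matrix) -> Matrix:
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    if abs(det) < 1e-12:
        raise ValueError("matrix is singular")
    return [[m[1][1] / det, -m[0][1] / det],
            [-m[1][0] / det, m[0][0] / det]]


def babble(n_moves: int = 50, seed: int = 0) -> Matrix:
    """Estimate the motor-to-auditory map from random movements (least squares)."""
    rng = random.Random(seed)
    s_am = [[0.0, 0.0], [0.0, 0.0]]  # running sum of d_aud * d_motor^T
    s_mm = [[0.0, 0.0], [0.0, 0.0]]  # running sum of d_motor * d_motor^T
    for _ in range(n_moves):
        d_motor = [rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]
        d_aud = apply(PLANT, d_motor)  # sensory consequence of the babbled movement
        for i in range(2):
            for j in range(2):
                s_am[i][j] += d_aud[i] * d_motor[j]
                s_mm[i][j] += d_motor[i] * d_motor[j]
    return matmul(s_am, inverse(s_mm))


def corrective_command(aud_error: Vector, estimate: Matrix) -> Vector:
    """Turn an auditory error (target minus feedback) into a motor correction."""
    return apply(inverse(estimate), aud_error)


estimate = babble()
motor = [0.0, 0.0]
target = [1.0, -0.5]
error = [t - a for t, a in zip(target, apply(PLANT, motor))]
motor = [m + d for m, d in zip(motor, corrective_command(error, estimate))]
print(apply(PLANT, motor))  # approximately [1.0, -0.5]
```

As in the text, nothing learned here is specific to a particular sound: the same estimated mapping corrects errors for any target.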

The second learning stage is an imitation stage in which the model is presented with auditory sound samples of phonemes, syllables, or words to learn, much like an infant is exposed to the sounds and words of his/her native language. These sounds take the form of time-varying acoustic signals. Based on these samples, the model learns an auditory target for each sound, encoded in synaptic projections from left ventral premotor cortex to the auditory cortical areas in the posterior superior temporal gyrus (auditory target map in Figure 6.5). The targets consist of time-varying regions (or ranges) that encode the allowable variability of the acoustic signal throughout the syllable. The use of target regions rather than point targets is an important aspect of the DIVA model that provides a unified explanation for a wide range of speech production phenomena, including motor equivalence, contextual variability, anticipatory coarticulation, carryover coarticulation, and speaking rate effects (Guenther, 1995; Guenther et al., 1998). After the auditory target for a new sound is learned, the model attempts to produce the sound. Initially, the model does not possess an accurate set of feedforward commands for producing the sound, so sensory errors occur. The feedback control system translates these errors into corrective motor commands, and on each production attempt the commands generated by the feedback control system are incorporated into the feedforward command for the next attempt by adjusting the synaptic projections from the left ventral premotor cortex to the primary motor cortex (feedforward commands in Figure 6.5). This iterative learning process, which is hypothesized to involve the cerebellum heavily, makes the feedforward command progressively more accurate. After a few production attempts, the feedforward command by itself is sufficient to produce the sound under normal circumstances; that is, it is accurate enough that it generates very few auditory errors during production of the sound and thus does not invoke the auditory feedback control subsystem. As the model repeatedly produces a speech sound, it learns a somatosensory target region for the sound, analogous to the auditory target region mentioned earlier. This target represents the expected tactile and proprioceptive sensations associated with the sound and is used in the somatosensory feedback control subsystem to detect somatosensory errors.
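The feedforward learning loop described in this paragraph can be sketched in a few lines. The assumptions are ours, for illustration: a single articulator with a known linear gain, a target expressed as one value per time step, sensory feedback that arrives too late to affect the current attempt, and a feedback controller that corrects only a fraction `fb_gain` of each error. After every attempt, the feedback commands are added, time step by time step, to the feedforward command used on the next attempt. The function names and parameter values are hypothetical, not taken from the model.

```python
from typing import List, Tuple


def produce(ff: List[float], target: List[float],
            plant_gain: float, fb_gain: float) -> Tuple[List[float], float]:
    """One production attempt driven by the feedforward command alone.

    Returns the feedback commands generated from the sensed errors
    and the peak absolute error over the attempt.
    """
    fb_cmds: List[float] = []
    peak = 0.0
    for u_ff, y_target in zip(ff, target):
        y = plant_gain * u_ff                      # sensory outcome of this time step
        err = y_target - y
        fb_cmds.append(fb_gain * err / plant_gain)  # partial corrective command
        peak = max(peak, abs(err))
    return fb_cmds, peak


def learn_feedforward(target: List[float], attempts: int = 6,
                      plant_gain: float = 2.0,
                      fb_gain: float = 0.5) -> Tuple[List[float], List[float]]:
    ff = [0.0] * len(target)  # no accurate motor program yet
    history: List[float] = []
    for _ in range(attempts):
        fb_cmds, peak = produce(ff, target, plant_gain, fb_gain)
        history.append(peak)
        # Fold this attempt's feedback commands into the next feedforward command.
        ff = [u + c for u, c in zip(ff, fb_cmds)]
    return ff, history


ff, history = learn_feedforward([0.0, 0.4, 1.0, 0.6, 0.2])
print(history)  # [1.0, 0.5, 0.25, 0.125, 0.0625, 0.03125]
```

With these settings the error halves on each attempt, mirroring the text's point that after a few attempts the feedforward command alone produces the sound with little error.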

As described earlier, cells in the speech sound map are activated both when perceiving a sound and when producing the same sound. This is consistent with studies that demonstrate recruitment of IFG and vPMC in speech perception (e.g., Wilson et al., 2004; D’Ausilio et al., 2009). In the model, these neurons are necessary for the acquisition of new speech sounds by infants. Specifically, DIVA postulates that a population of speech sound map cells is activated when an infant hears a new sound, which enables the learning of an auditory target for that sound. Later, to produce that sound, the infant activates the same speech sound map cells to drive motor output. Individual neurons with such properties (i.e., that are active during both the performance and the comprehension of motor acts) have been found in premotor area F5 in monkeys (thought to be homologous with BA 44 in humans) and are commonly labeled “mirror neurons” (di Pellegrino et al., 1992; Gallese et al., 1996; Rizzolatti et al., 1996). See Rizzolatti and Arbib (1998) and Binkofski and Buccino (2004) for discussion of possible roles for mirror neurons in language beyond that proposed here.

The DIVA model accounts for many aspects of the production of individual speech motor programs, with the most typical unit of motor programming being the syllable. Additional brain areas, particularly in the left prefrontal cortex, are involved in conversational speech and in producing multisyllabic words or phrases. A large body of evidence, particularly from naturally occurring “slips of the tongue” (e.g., MacKay, 1970; Fromkin, 1971), indicates that speech involves substantial advance planning of the sound sequences to be produced. The GODIVA model (Bohland et al., 2010) addresses how the brain may represent planned speech sequences, and how phonological representations of these sequences may interface with phonetic representations and the speech motor control circuits modeled by DIVA.

GODIVA can be thought of, in some ways, as a neurobiologically grounded implementation of slots-and-fillers-style psycholinguistic models (e.g., Shattuck-Hufnagel, 1979; Dell, 1986; Levelt et al., 1999). In these types of models, a phonological frame describes an arrangement of abstract, categorical “slots” that is represented independently of the segments that fill those slots. Thus, the word “cat,” for example, could be jointly encoded by an abstract CVC (consonant-vowel-consonant) syllable frame and the string of phonemes /k/, /æ/, and /t/. During production, the slots in the phonological frame are “filled” by appropriate segments chosen from a buffer (i.e., phonological working memory). The segregation of frame and content is motivated in large part by naturally occurring speech errors, which are known to strongly preserve serial positions within syllables or words (e.g., the onset consonant from one forthcoming syllable may be substituted for the onset consonant from another, but very rarely for a vowel or coda consonant).
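The separation of frame and content can be illustrated with a small data-structure sketch. This is not GODIVA's implementation (Bohland et al., 2010, describe the model's neural representation); it simply assumes that each buffered segment carries a slot label ("onset", "nucleus", or "coda") and that a syllable frame is an ordered list of such labels. Filling picks, for each slot, the first buffered segment of the matching type, so the order of the buffer does not matter and a misselected onset can only land in an onset slot. The class `Segment`, the function `fill_frame`, and the slip scenario are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Segment:
    phoneme: str
    slot: str  # assumed slot labels: "onset", "nucleus", or "coda"


# Abstract CVC frame: categorical slots, independent of the segments that fill them.
CVC_FRAME = ["onset", "nucleus", "coda"]


def fill_frame(frame: List[str],
               buffer: List[Segment]) -> Tuple[List[str], List[Segment]]:
    """Fill each slot with the first buffered segment of the matching type.

    Returns the produced phonemes and the segments left in the buffer.
    """
    remaining = list(buffer)
    produced: List[str] = []
    for slot in frame:
        for i, seg in enumerate(remaining):
            if seg.slot == slot:
                produced.append(remaining.pop(i).phoneme)
                break
        else:
            raise ValueError(f"no buffered segment fits the {slot} slot")
    return produced, remaining


cat = [Segment("k", "onset"), Segment("æ", "nucleus"), Segment("t", "coda")]
print(fill_frame(CVC_FRAME, cat)[0])                       # ['k', 'æ', 't']
print(fill_frame(CVC_FRAME, [cat[2], cat[1], cat[0]])[0])  # ['k', 'æ', 't']

# A hypothetical slip: the onset of an upcoming word ("dog") is selected early.
# Because slots are typed, /d/ can displace /k/ but never the vowel or the coda.
slip_buffer = [Segment("d", "onset")] + cat
print(fill_frame(CVC_FRAME, slip_buffer)[0])               # ['d', 'æ', 't']
```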