Before we build a model, we need to first explore the input data to understand what is available for user-item recommendations. In this section, we will prepare, process, and explore the data, which includes users, items (games), and interactions (hours of gameplay), using the following steps:

- First, let's load some R packages for preparing and processing our input data:

library(keras)

library(tidyverse)

library(knitr)

- Next, let's load the data into R:

steamdata <- read_csv("data/steam-200k.csv", col_names=FALSE)

- Let's inspect the input data using glimpse():

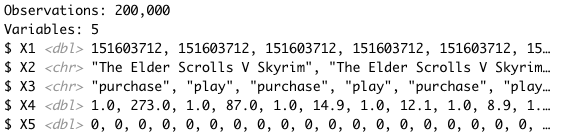

glimpse(steamdata)

This results in the following output:

- Let's manually add column labels to organize this data:

colnames(steamdata) <- c("user", "item", "interaction", "value", "blank")

- Let's remove any blank columns or extraneous whitespace characters:

steamdata <- steamdata %>%

filter(interaction == "play") %>%

select(-blank) %>%

select(-interaction) %>%

mutate(item = str_replace_all(item,'[ [:blank:][:space:] ]',""))

- Now, we need to create sequential user and item IDs so we can later specify an appropriate size for our lookup matrix via the following code:

users <- steamdata %>% select(user) %>% distinct() %>% rowid_to_column()

steamdata <- steamdata %>% inner_join(users) %>% rename(userid=rowid)

items <- steamdata %>% select(item) %>% distinct() %>% rowid_to_column()

steamdata <- steamdata %>% inner_join(items) %>% rename(itemid=rowid)

- Let's rename the item and value fields to clarify we are exploring user-item interaction data and implicitly defining user ratings based on the value field, which represents the total number of hours played for a particular game:

steamdata <- steamdata %>% rename(title=item, rating=value)

- This dataset contains user, item, and interaction data. Let's use the following code to identify the number of users and items available for analysis:

n_users <- steamdata %>% select(userid) %>% distinct() %>% nrow()

n_items <- steamdata %>% select(itemid) %>% distinct() %>% nrow()

We identified there are 11,350 users (players) and 3,598 items (games) to explore for analysis and recommendations. Since we don't have explicit item ratings (yes/no, 1-5, for example), we will generate item (game) recommendations based on implicit feedback (hours of gameplay) for illustration purposes. Alternatively, we could seek to acquire additional user-item data (such as contextual, temporal, or content), but we have enough baseline item-interaction data to build our preliminary CF-based recommender system with neural network embeddings.

- Before proceeding, we need to normalize our rating (user-item interaction) data, which can be implemented using standard techniques such as min-max normalization:

# normalize data with min-max function

minmax <- function(x) {

return ((x - min(x)) / (max(x) - min(x)))

}

# add scaled rating value

steamdata <- steamdata %>% mutate(rating_scaled = minmax(rating))

- Next, we will split the data into training and test data:

# split into training and test

index <- sample(1:nrow(steamdata), 0.8* nrow(steamdata))

train <- steamdata[index,]

test <- steamdata[-index,]

- Now we will create matrices of users, items, and ratings for the training and test data:

# create matrices of user, items, and ratings for training and test

x_train <- train %>% select(c(userid, itemid)) %>% as.matrix()

y_train <- train %>% select(rating_scaled) %>% as.matrix()

x_test <- test %>% select(c(userid, itemid)) %>% as.matrix()

y_test <- test %>% select(rating_scaled) %>% as.matrix()

Prior to building our neural network models, we will first conduct Exploratory data analysis (EDA) to better understand the scope, type, and characteristics of the underlying data.