Between stimulus and response, there is a space. In that space lies our power to choose our response, and in our response lies growth and freedom.—Viktor Frankl

Thinking is something we all do naturally, but not necessarily well. The process happens so quickly, naturally, and so far beneath our ordinary, day-to-day awareness that it seldom presents itself for scrutiny.

The human brain—the most extraordinary tool in the universe, the only one we know of that has the ability to rewire itself according to how we use it—is left largely to its own devices. ‘Thinking about thinking’ is something we are generally quite happy to hand over to other people to contemplate: the philosophers, neuroscientists, and psychotics.

Thinking ‘just is’, and for the most part we are quite content to leave it that way. Astonishingly, given the number of bad decisions our species has chalked up, education has proved of little help. We have been taught what to think, but not how to think.

Our species’ ability to error-correct has not improved significantly as a result. On the other hand, thinking skillfully—that is, with system and purpose, not to be confused with formal logic—allows us to consider information we might not even have noticed before; extract order and meaning out of apparent chaos; generate theories and hypotheses; and create solutions we can then test.

The complex chronic conditions currently overwhelming the health services are deeply in need of a new reasoning approach. They are baffling, we agree. But they appear baffling not because of their intrinsic nature, but because of the way we have been looking at them.

The old folk story, attributed to Mullah Nasruddin, about five blind men, each trying to describe an elephant by feeling just one part of its body, is an apt analogy. The elephant is, variously, like a snake, a tree, a huge palm leaf, according to whichever part each man feels. Any attempt to synthesize a whole from the parts is doomed to failure, no matter how eloquently each is able to sense and describe the part he is touching.

Most of us find the story amusing because it is so patently irrational. And yet research often follows the same thinking process—that, somehow, if the object is divided into sufficiently small parts, understanding of the ‘whole’ will follow.

Of course, this approach has served us well in certain areas in the past. We now know a lot about how a germ can cause a particular infection, and which gene can cause a specific deformity. Our knowledge about how people become sick is vast. But that same single-minded commitment to the twin beliefs of reductionism and causality tells us virtually nothing about why some people never get sick, and why others get well.

This occurs largely because of the way in which we have chosen to think about a particular problem. Another Nasruddin story perfectly illustrates this approach.

A man happened upon his friend one night on his hands and knees beneath the only streetlight in the immediate area.

‘What are you doing?’ he asked his friend.

The man glanced up then back down at the earth he was sifting between his fingers. ‘I lost my keys,’ he said. ‘I’ve been searching for more than an hour and I still haven’t found them.’

His friend was anxious to help. ‘Where did you lose them?’ he asked, getting down on his knees beside the seeker.

The man pointed somewhere deep in the shadows. ‘Over there,’ he replied.

Perplexed, his friend asked, ‘Then why are you looking over here?’

The man looked at him, then spoke slowly as if to an idiot. ‘There’s more light over here, of course,’ he said.

The instant we decide to confine our attention to a specific area of inquiry, a particular set of events—the text of a story—we exclude information that may, in fact, explain the context in which that function or process occurs. To paraphrase Sir William Osler, we look at the disease the patient has, without looking at the patient who has the disease. We are baffled at the condition we encounter because we are trying to apply a familiar and limited map to unfamiliar territory.

On being comfortable with not-knowing

Of course, scientists are not alone in thinking this way. Ambiguity is, for most of us, an uncomfortable experience. The compulsion to avoid the discomfort of ‘not-knowing’ is so strong that often we will accept, without reflection, an explanation—either our own or one provided for us by some authority—that resolves our internal dissonance. This is where a thinking strategy known as ‘cognitive bias’ comes in. People (and health professionals are not immune) are inclined to adopt one or more ways of resolving cognitive dissonance, each involving deletion, distortion, or generalization of data…at the cost of accuracy and precision.

And yet the ability to tolerate ambiguity, at least for a time, is an essential step towards widening our perspective. Just for the moment, we need to suspend our beliefs and biases, knowing that only this will permit new information to flow in. In order to break out of this particular box, we need to understand how easily our beliefs and perceptions are influenced by an ongoing interaction between the way in which our minds naturally work and the effect that unchallenged linguistic distortions (using language mindlessly) can have on our beliefs.

Consider the phrase ‘medically unexplained’, one of the descriptors used almost synonymously with ‘functional’, ‘somatoform’, ‘psychosomatic’, or ‘psychogenic’ disorders. On the face of it, these words, like the ubiquitous ‘idiopathic’, simply mean that our current medical knowledge is not able to account for the condition under consideration.

They do not mean—as is so often assumed by default—that the condition is imaginary or intended to deceive or manipulate. Although most practitioners will deny drawing these conclusions, it happens more frequently than we would like—especially when the health professional is under pressure.

Here are three examples of phrases we have encountered in physicians’ letters of referral for patients suffering from what is usually called ‘myofascial’ or ‘fibromyalgic’ pain syndromes:

‘Physical basis for the pain has been conclusively ruled out by all tests…A psychiatric assessment has diagnosed somatoform pain disorder.’

‘I suspect the patient has a hidden agenda, since I have determined that there is nothing physically wrong with him.’

‘The patient’s over-reaction during examination leads me to believe his problem is either hypochondriasis or malingering.’

Our argument here is not with whether the patient was or was not ‘making it up’, but with the cognitive mechanism employed by the attending physician in reaching his conclusion. The reasoning is as follows: All real pain has a physical cause. Therefore, if no physical cause is found, the pain cannot be real.

This reasoning process, known as syllogistic, is perfectly logical, but only within its own limited framework. If the propositions are correct, then the conclusion is correct. If the propositions are questionable, as in the example above, we, and the patient, are in trouble. The flaws in the reasoning lie in two assumptions: that only pain with a physical cause is ‘real’, and that there is no physical cause simply because one has not been identified.
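One way to lay this out, purely as an illustrative sketch, is in predicate form. The predicate names Real, Phys, and Found are our own labels for ‘the pain is real’, ‘the pain has a physical cause’, and ‘a physical cause has been found’; they are assumptions introduced for the illustration. The second premise is never stated in the referral letters, but the conclusion cannot be reached without it.

```latex
\begin{align*}
&\text{P1 (stated):} && \forall x\,\bigl(\mathrm{Real}(x) \rightarrow \mathrm{Phys}(x)\bigr)\\
&\text{P2 (unstated):} && \forall x\,\bigl(\neg\mathrm{Found}(x) \rightarrow \neg\mathrm{Phys}(x)\bigr)\\
&\text{From P1, by contraposition:} && \forall x\,\bigl(\neg\mathrm{Phys}(x) \rightarrow \neg\mathrm{Real}(x)\bigr)\\
&\text{Conclusion (chaining P2 with the line above):} && \forall x\,\bigl(\neg\mathrm{Found}(x) \rightarrow \neg\mathrm{Real}(x)\bigr)
\end{align*}
```

Each step follows from the one before, so the argument is valid. Whether it is sound depends entirely on P1 and P2, and both are open to question.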

The speed with which many people draw conclusions and make decisions tends to trick them into believing they are ‘right’. If questioned, they insist (and feel) that they have selected this response from a range of possibilities, all duly subjected to full assessment.

A number of researchers, including Benjamin Libet [126] and Daniel Wegner [127], have demonstrated that a series of complicated mental and physical processes takes place some considerable time before you ‘choose’ to act in a particular way. Libet and Wegner found there was a gap of somewhere between a third and a half of a second before conscious awareness crept in. A later study, published in the journal Nature Neuroscience, suggested the outcome of a decision could be encoded in the brain activity of the prefrontal and parietal cortex by as much as 10 seconds before it entered conscious awareness [128].

Conscious thought, therefore, follows a psychophysiological decision to act, rather than the other way around.

This ‘illusion’ is so compelling that people tend to defend vigorously their intention to act, even where intentionality can be disproved.

One thing we can be sure of: the mind does not rigorously question the sense or logic of each chunk of information it receives.

The sheer amount of data available to our senses would be overwhelming if we had to weigh up each incoming bit. The brain copes by streamlining. Information is chunked and processed according to general patterns; it responds to situations that resemble previous situations it has encountered, calling on the same feelings and responses as before. Top-down processing collides with bottom-up processing.

Inevitably, as it acquires more ‘similar’ experiences, these patterns coalesce into biases, mindsets, and, if the owner of the brain fails to remain alert, stereotypes.

A number of other factors can dissuade us from freeing up our thinking and reasoning processes. Four of these are as follows:

- Fear. The fear of making the ‘wrong’ decision is a major concern within the health professions, and not only because of the risks to the patient’s health and wellbeing. Litigation is increasing, forcing practitioners into an ever more defensive position. This results in an overdependence on referrals to specialists, multiple tests and opinions, invasive scans and surgery, and over-medication.

- Pressure. As stress mounts, the tendency to revert to familiar coping patterns also increases. Complex and manifold problems encountered in unfavorable circumstances are likely to trigger limbic arousal. Constraints of time increase pressure. And so does a sense that the practitioner does not have the resources to help the person seeking his knowledge and expertise.

- Peer acceptance. Doing something differently from your peers or superiors is a risky business. Or at least it feels risky. If you depart from the ‘norm’, you will tend to be concerned that someone will challenge you and, by doing so, ‘prove’ that your approach is inferior or wrong. Despite many organizations’ claims that they encourage ‘proactivity’ and ‘individuality’, disturbing the status quo is often penalized, implicitly, if not directly.

- External demands. An essentially market-driven model of health delivery, such as exists in the United States, is rapidly being imposed in the United Kingdom and elsewhere in the world. This depends on external measures of ‘effectiveness’: audits, targets, and guidelines, established, at least partly, on the basis of cost-effectiveness. Meeting targets, then, can easily become a simpler option than meeting patient needs.

Any of these constraints can trigger cognitive bias in the unwary health professional. And cognitive bias comes in many elusive forms. Some of these include:

Anchoring bias. Locking on to salient features in a patient’s initial presentation too early in the diagnostic process and failing to adjust in light of later information.

Availability bias. Judging things as being more likely if they readily come to mind; for example, a recent experience with a disease may increase the likelihood of it being diagnosed.

Confirmation bias. Looking for evidence to support a diagnosis rather than looking for evidence that might rebut it.

Diagnosis momentum. Allowing a diagnosis label that has been attached to a patient, even if only as a possibility, to gather steam so that other possibilities are wrongly excluded.

Overconfidence bias. Believing we know more than we do, and acting on incomplete information, intuitions, and hunches.

Premature closure. Accepting a diagnosis before it has been fully verified.

Search-satisficing bias. Calling off a search once something is found [129].

Beyond bias

There are many highly effective means of developing rational thinking processes, but we believe the three tools we outline below are among the most easily understood and applied. The underlying intention of these is to make distinctions between fact and belief, to reduce errors that occur when we confuse one with the other, and to begin to explore some of the mechanisms that set up and maintain the patient’s problem. One side-effect of exploring these thinking tools is becoming increasingly mindful of the space, however small, between stimulus and response. As therapist and Holocaust survivor Viktor Frankl observed, in that space lies growth and freedom.

Thinking Tool 1: The Fact-Evaluation Spectrum

Irrefutable ‘facts’—especially if you’re a quantum physicist or a politician—are pretty hard to come by. Much of what most people regard as ‘true’ or ‘objective’ depends on evaluating the evidence on which they choose to place their attention.

Psychologists, such as Edmund Bolles, now recognize that ‘paying attention’ requires us actively to choose information from a confused buzz of sensations and details to which we then ascribe ‘meaning’ [130]. This is what we call ‘evaluation’.

But, the process of evaluation includes two other mental actions. In creating ‘meaning’ out of whatever you place your attention on, you also exclude certain information from what is available, and you supplement what is in front of you with what has gone before.

Far from functioning as objective beings, systematically uncovering absolute truth, even the most scientific humans are architects and builders constructing meaning according to blueprints, some of which they inherit with their jobs, most of which exist and function well below their conscious awareness.

The labels that are then selected to apply to that which has been attended to are quick to develop a life of their own. As we have discussed above, the word becomes the ‘thing’, and it is easy to forget that someone (yourself, or a ‘significant other’) created that part of your cognitive map by a process of evaluation. It is now ‘true’.

The Fact-Evaluation Spectrum is a tool designed to help you develop the ability to distinguish between verifiable data (fact) and belief, speculation, judgment, opinion, evaluation, etc. In doing this, we reduce our tendency towards premature cognitive closure (making up our minds before all the evidence has been acquired) and towards acting ‘as if’ the individual in front of us is physically, psychologically, socially, and spiritually identical to everyone else who matches some or all of the criteria of a particular class or ‘condition’.

We do not pretend to know what an incontrovertible fact is (we’re not priests or politicians); the guidelines in this section are intended more to caution against mindlessly applying beliefs, judgments, inferences, opinions, etc, as ‘true’ simply because we believe, or have been told, that they are true.

Belief is a major trap in clear thinking. It is important to understand that a belief differs from a fact, however dearly held. The distinction needs to be made. People often argue as to whether a belief is ‘true’ or ‘false’, whereas it might be more productive simply to decide whether it is ‘useful’ or not. (Inevitably, the issue of religious belief is raised here. Our response is simply this: if your religious beliefs support the health and wellbeing of yourself and others, they are ‘useful’. If not, they are not.)

Many of the descriptions we use in day-to-day practice may help us communicate to fellow professionals with some degree of consensuality; however, we need also to be cautious as to how they can (often unconsciously) inform and influence our beliefs and responses.

‘Functional’, the description of a very large class of complex chronic conditions without specific cause, is such a word. And the word itself often stands in the way of understanding and assisting the patient to restructure his experience.

Definitions vary from source to source, but only slightly. Ideally, a functional disorder is one in which no evidence of organic damage or disturbance may be found, but which nevertheless affects the patient’s physical and emotional wellbeing. However, increasingly, the word is taken to suggest the presence of ‘psychiatric’ elements (see the Oxford Concise Medical Dictionary, as an example). Sadly, the belief (that if the cause of a condition cannot be established it can’t be ‘real’) permeates everyday medical care. To the patient, there is a strong element of blaming and shaming, which, given our earlier investigation of the impact of stress on health, is hardly likely to help.

In a bid to minimize this kind of toxic labeling, our first ‘thinking tool’, therefore, is based on learning to recognize and distinguish between facts and evaluations (inferences, assumptions, judgments, and opinions). Simply put, ‘fact’, as we use the word from now on, refers to sensory-based information: that is, whatever you (or the patient) can see, hear, touch, taste, and smell. As far as possible, these data should be free of value-judgment or interpretation. They should not be evaluated. A group of independent bystanders would, in the main, agree with your observations.

Examples of facts:

The man is sitting, slumped in his chair, head down, looking at the floor.

The woman sits with her arms wrapped around her body.

The child doesn’t make eye contact when he says he didn’t take the cookie.

Most observers would agree with these descriptions.

Examples of beliefs:

The man is depressed (whereas he might simply be absorbed by his problem or pain).

The woman is insecure or defensive (whereas she might be cold, or sensitive about her ‘muffin top’).

The child is lying (whereas he might be afraid he will be wrongly blamed yet again).

These are all evaluations we may believe to be true, but have no way of verifying without further information.

Especially where patients’ subjective (internal) experience is concerned, we seek to elicit sensory-specific, factual information. By this, we mean how the patient experiences his condition (what he sees, hears, feels, smells, and tastes), rather than the inferences he makes from that experience.

For example:

Patient: ‘I feel very depressed. It’s just awful. I feel really terrible.’ (All the information here is inferential. The patient responds ‘as if’ this is the ‘true’ nature of his experience.)

Practitioner: ‘How do you know? What specifically happens that lets you know this?’

Patient: ‘I wake up every morning with a gray cloud over my head and it’s just like…Oh, you’re never going to get any better, and it’s like a lead weight on my chest…’

This kind of information is separated out from inferences, judgments, and beliefs. It describes the process of his experience using sensory-based information.

Patient: ‘…I wake up every morning with a gray cloud’ (visual) ‘over my head and it’s just like…Oh, you’re never going to get any better’ (auditory), ‘and it’s like a lead weight on my chest’ (kinesthetic)…

As we report at various points in this book, sensory-based, factual information gives us something specific to work with. We can begin to change the experience by changing how the patient structures the experience, rather than being caught up in the ‘contents’ or story of the experience itself.

Thinking Tool 2: The Structural Differential

In the years following World War I, Alfred Korzybski, a Polish nobleman and polymath, was puzzled as to how a species, already so advanced, could have plunged so deeply into chaos. With remarkable prescience, he formulated his ideas of the relationship of language with neurological function into a field he called General Semantics. (General Semantics should not be confused with semantics. The latter is concerned with the ‘meaning’ of words. Korzybski intended General Semantics to focus primarily on the process of ‘abstraction’ and its effect on thinking.)

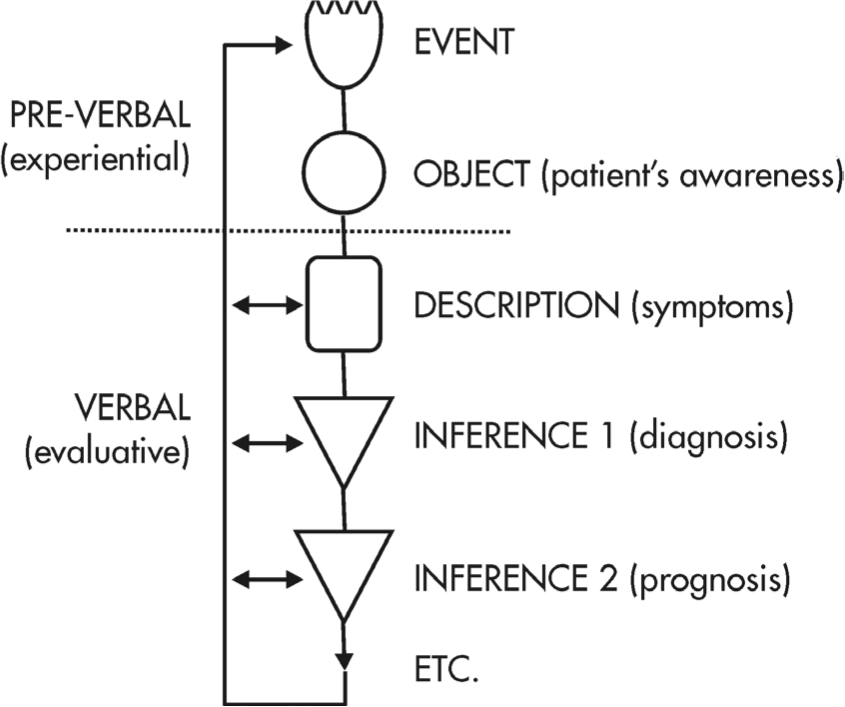

Language, he said, emerged as a ‘higher-order’ abstraction from certain neurological ‘events’. Put another way, ‘something’ happens in the subject’s neurology, a perturbation or Event, which exists formlessly and silently. This is also known as ‘process’.

The subject then becomes aware of some ‘difference’ occurring. Korzybski called this the Object, since it had characteristics (sub-modalities), but, like the Event, no words have yet been attached to it. Both Event and Object are on a ‘silent’ or pre-linguistic level.

The subject’s Description follows his experience. For the first time, words are involved and, of necessity, the words can only partially represent the experience itself. Then, based on the subject’s description, comes Inference 1—an evaluation about what this ‘means’. Following that is an inference about the first inference—Inference 2, followed by an inference about that inference, Inference 3, and so on, potentially to infinity (or, as Korzybski put it, ‘etc’).

The language used to describe and infer from the silent level of subjective experience may be regarded as ‘story’ or ‘content’. Since each level can feed back to the level(s) above it, Inferences can affect the original Event, regulating it either upwards or downwards, changing the subject’s experience of the Object. Thus ‘story’ is required to establish and maintain ongoing subjective experience, making it better or worse. Or, to put it another way, without story, structure cannot be easily maintained.

Korzybski represented this with a three-dimensional model of what he called the Structural Differential. Figure 7.1 is a two-dimensional representation of the process as applied to the medical or psychiatric investigation.

Figure 7.1 The Structural Differential as applied to the medical investigation. Note that everything that is verbalized is evaluative, not ‘factual’. (Adapted with permission of the Institute of General Semantics.)

In practice, we can use the Structural Differential to map a patient’s experience in the following way:

Some disturbance occurs within the patient’s neurology that affects complex homeostasis, and compromises allostasis (for the sake of illustration, let’s say he suffers confusion and anxiety when he tries to understand written information). We may never know everything about what causes the disturbance or how it occurs (Event level). Broadly speaking, the Event level is the territory of neuroscience, and its companion fields of psychopharmacology and psychoneuroimmunology (PNI).

The patient becomes aware of a ‘problem’ (Object level), and then goes on to try to describe it (Descriptive level). Based on the description, the ‘expert’ creates the first Inference (Inference 1), also known as ‘diagnosis’; the patient has ‘dyslexia’.

Further Inferences follow, both from the patient and from those around him (Inference 2, Inference 3, etc). These might include: ‘He has a learning disability’; ‘I am stupid because I have a disability’; ‘His feelings about having a disability are making him anxious’; ‘He needs treatment for his anxiety about his feelings about having a disability’… etc. Diagnoses of ‘co-morbidity’ (two or more co-existing medical conditions or disease processes that are additional to an initial diagnosis) often add to the problems at this point, all related to inferential thinking.

Any, or all, of these Inferences can loop back and intensify the experience of ‘confusion’ that first alerted the patient to an Event. Thus, he may become more anxious, more ‘disabled’, and even more confused.

Differences in treatments and schools of psychology develop when various theorists observe and develop models applying to different levels of Inference. One might try to curb the anxiety with medication, another might try to alter the subject’s ‘negative self-talk’, yet another might try to retrain the way he moves his eyes when he reads.

From this, it can easily be seen that all diagnoses are Inferences based on abstracted information, and all Inferences (including prognoses and treatment modalities) depend on the quality of the Inferences that precede them. Therefore, the diagnosis (Inference) is not the disease (Event), but only the currently accepted label for a collection of descriptions (symptoms). And these (the Inferences) will change. Indeed, they are changing all the time.

The fact that medical scientists and many physicians accept so readily the ‘evidence’ presented to them (sometimes by spurious organizations with clearly evident self-interests) has much to do with human beings’ hard-wired tendency to seek patterns out of the chaotic data that make up ‘reality’. This drive often leads people to detect patterns where ‘noise’ might predominate. Think for a moment of the face of Jesus manifesting on a badly toasted tortilla, or rising damp on a basement wall. Once ‘detected’, a pattern is difficult to ignore, even though it might exist entirely in the eyes of the beholder.

In this way, it can be claimed that medical science creates and cures diseases by the inferences it makes and the patterns the ruling authorities decide are ‘true’.

Let’s look at some examples, starting with the diagnosis of diabetes:

The World Health Organization’s diagnostic criteria for diabetes were defined in the late 1970s, then revised four more times until 1989.

Then in 2011 WHO issued further guidelines for diagnosis using new units entirely and recommended an HbA1c level of 6.5% (48 mmol/mol) as the cut-off for diagnosis of diabetes. The HbA1c measurement, while having some clear advantages over fasting glucose, can itself be influenced by a variety of common co-morbidities such as anemia, liver or kidney impairment, and the genetic defects known as hemoglobinopathies.

However, even allowing for artefacts in the measuring process, this new classification may still be inadequate.

The British Medical Journal reported that diagnoses could be erroneous in up to 15% of cases and suggested additional classifications of diabetes. Classifications of diabetes should now include Genetic Diabetes (such as MODY or Maturity Onset Diabetes of the Young), Secondary Diabetes, Non-Diabetic Hyperglycemia, and even Unclassified Diabetes (insufficient information to fit one of the other categories). This is in addition to the traditional Type I and II diagnoses [131].

Meanwhile, in practice, this diagnostic dithering over labeling has had adverse consequences for our patients. For example, over the years, many patients had been told they had normal blood glucose when they were in fact diabetic, whilst many patients with MODY had been erroneously treated with insulin.

Since the first edition of this book, and possibly to avoid further diagnostic errors, medical authorities in the West have introduced a new condition called ‘pre-diabetes’. This is a gray area between normal blood sugar and diabetic levels; in other words, some, but not all, of the diagnostic criteria for diabetes have been identified [132].

But, is it a disease? Decide for yourself.

Some other examples:

The diagnostic descriptions of ‘acute coronary syndrome’, ‘social phobia’, and ‘restless legs’ did not exist as separate entities with those labels until relatively recently…with the very active participation of the pharmaceutical companies, all happy to provide medication for new ‘conditions’.

A transient ischemic attack (TIA) is diagnosed if symptoms last less than 24 hours. If the symptoms last 24 hours and one minute it becomes a ‘stroke’. The disease process is the same.

Many diseases are notoriously difficult for clinicians to assign diagnostic labels to—and yet this labeling process remains a cornerstone of current orthodox medical practice. As suggested above, when prime cause cannot be established, the most proximal cause is often selected, or a new condition may be created. But the further away from the Event and Object levels that the Inferences move, the greater the potential for confusion.

Also, confusing levels of abstraction with each other (for example, treating an inference as an explanation, or even a cause) can lead to the pathologizing of body-mind events that may fall within the range of ‘normal’ experience and can encourage excessive medical and surgical intervention, while still leaving both patient and practitioner at a loss as to how to relieve the suffering. (We note here that many of the ‘symptoms’ experienced by a patient diagnosed with transient ischemic attack are identical to those experienced by people in deep meditation or undergoing ‘religious experiences’. In one culture this is a highly desirable occurrence, in another a suitable cause for alarm.)

Reference to the Structural Differential allows the practitioner to identify Inferences at various levels of abstraction (both his own and those of the patient), and to plan his response accordingly. It is an especially valuable tool to eliminate confusion caused by interpreting an Inference about the problem as a cause.

Thinking Tool 3: Function Analysis

In the corporate world, function analysis is designed to measure the cost and benefit of introducing a new product or system against the cost and benefit of keeping the old. Whether or not we are consciously aware of it, we apply function analysis to almost every new decision we make, from buying a new sound system to which medication might be best for a particular patient.

Problems arise when function analysis has been conducted ‘intuitively’ or derives from criteria we have inherited and accepted, without examination, from others.

In the Medical NLP model of whole-person healthcare, we aim to recognize and respond to the psychosocial components of complex chronic conditions and to accord them the same significance as biomechanical signs and symptoms. We want to understand context (the landscape in which the problem occurs) as much as text (the details of the problem itself). We seek answers to questions such as: ‘What is the value, need, or purpose of the old condition/behavior to the patient?’; ‘How can those values, needs or purposes be met by another behavior or response that is more useful or appropriate?’; ‘What are the costs of making a change balanced against the costs of staying the way he is—and, is he prepared to meet those costs?’

And, by ‘cost’, of course, we mean the price paid or value expressed in emotional, social, spiritual, and behavioral terms. Is it worth it to the patient to make the change? Function analysis helps us move to a higher order of investigation—looking for patterns that may explain the condition’s resistance to treatment.

Supposing we are considering the possibility that a patient feels deficient in social support and connectedness. In applying function analysis to the presenting condition, we will be considering if and how the condition is related to that specific emotional shortfall.

For example:

Case history: A single, middle-aged woman developed multiple chemical sensitivities after her neighbor sprayed his garden (and, inadvertently, hers) with an illegal toxic herbicide. She confronted him and reported that he was rude and unrepentant. The allergic response developed soon after the confrontation and spread rapidly to include a wide range of allergens, including house paint, cigarette smoke, perfume and aftershave, and exhaust fumes. (Note: This is ‘text’.)

In conversation, it emerged that the woman felt lonely and uncared for. She spoke several times about her father, whom she considered cold and uncaring; she became agitated and apparently angry when talking about him. (We noticed that her breathing became raspy and constricted and her eyes watered whenever the subject came up, typical of an allergic response.) The only time he showed any consideration for her was when she was confined to her bed with an ‘attack’, even though she suspected he thought she was malingering. (This is ‘context’. It also reflects the process of abstraction and evaluation taking place inside her model of the world.)

She also reported being immensely angry at her neighbor, and had abandoned conventional treatment, explaining that both her neighbor and doctors in general were ‘paternalistic’ and uninterested in the welfare of others…‘just like my father’.

At that point, the practitioner’s focus shifted to: (1) the possible purpose or intention of her symptoms, (2) whether the needs those symptoms served were being met, and (3) whether those needs could be met in ways that would make ‘being sick’ no longer necessary.

From the information elicited, the practitioner constructed several hypotheses for later testing. These included the possibility that she needed: to expand her social connectedness (in this case, by reactivating supportive friendships she had allowed to lapse); to resolve or come to terms with her anger towards her father; to explore whether her neighbor’s indifferent attitude was functioning as a subliminal reminder of her father’s emotional unavailability; and to be ill in order to get the love and attention she sought, etc.

(Interestingly, when we sent her out, after ensuring there was no risk of anaphylactic shock, into a place where people were smoking, with the instruction to ‘make the symptom appear’, nothing happened. That was the first step in her beginning to understand that she was not completely at the mercy of her condition.)

Our intention was not to impose these theories on the patient, but to seek an ‘entry point’ in the behavioral loop, set up initially as a protective mechanism, with the intention of helping her to change. At best, we make cautious suggestions. More often, though, the patient notices patterns and connections himself. This is therapeutic gold. But, as with all other cases, change would not have been possible for this patient unless her unmet needs were addressed and the entire ‘system’ in which she functioned was effectively balanced. Please note that at no time did we suggest (or even consider) that her responses were ‘not real’; they were. Nor was there any attempt to ‘explore’ her relationship with her father, or reactivate past pain and disappointment.

The purpose of function analysis, then, is to acquire ‘higher-order’ information (about how something happens). With this and the other principles and tools already discussed, the Engagement phase of the consultation can begin.

EXERCISES

1. We recommend that you practice the three thinking tools so they can operate in the background of your experience. They are not intended to dominate your attention. As you become more familiar with the processes, you will find it progressively easier to apply them naturally and conversationally.

The Fact-Evaluation Spectrum

Establish whether statements (including your own) are factual or evaluative. Make a mental note whenever a fact checks out as well grounded and sensory-specific. Likewise, notice how easily evaluation creeps in. Test for these by asking questions such as, ‘How do you/I know?’, ‘What is the (sensory) evidence?’

The Structural Differential

Practice locating statements along the Structural Differential. How much, if any, of the patient’s description is in fact evaluative? Are the Inferences you detect first-level evaluations, or are they evaluations of the evaluation(s) before it?

Function Analysis

As you listen to the patient, keep in mind the higher-order questions, such as, ‘What purpose could this symptom/behavior serve?’, ‘How does it work?’, etc.

2. Review one or more decisions that you made in the past and now regret. As you do so, notice what diverted your attention from the ‘space’ to which Viktor Frankl refers. Write down a few thoughts about how things could have worked out differently and better had you become aware of the space in time to avoid a knee-jerk response. (This is not an invitation to beat yourself up over what you ‘should have’ or ‘would have’ done. We are simply suggesting you become aware that the space existed, and that it exists at almost any point where you choose to pause and just notice ‘what is’ rather than ‘what should be’.)

Notes

126. Libet B (1992) The neural time factor in perception, volition, and free will. Revue de Métaphysique et de Morale 97: 255–72.

127. Wegner DM (2002) The Illusion of Conscious Will. Cambridge, MA: Bradford Books.

128. Soon CS, Brass M, Heinze H-J, Haynes J-D (2008) Unconscious determinants of free decisions in the human brain. Nature Neuroscience 11: 543–545.

129. Croskerry P (2003) The importance of cognitive errors in diagnosis and strategies to minimize them. Academic Medicine 78: 775–780.

130. Bolles EB (1991) A Second Way of Knowing. New York: Prentice Hall Press.

131. Changing classification of diabetes (2011) BMJ 342: d3319.

132. Dorland’s Medical Dictionary 32E.