2

Music

The Machine That Plays by Itself

Roughly forty-three thousand years ago, a young cave bear died in the rolling hills on the northwest border of modern-day Slovenia. A thousand miles away, and a thousand years later, a mammoth died in the forests above the river Blau, near the southern edge of modern-day Germany. Within a few years of the mammoth’s demise, a griffon vulture also perished in the same vicinity. Five thousand years after that, a swan and another mammoth died nearby.

We know almost nothing about how these different animals met their deaths. They may have been hunted by Neanderthals or modern humans; they may have died of natural causes; they may have been killed by other predators. As with almost every creature from the Paleolithic era, the stories behind their lives (and deaths) are a mystery to us, lost to the unreconstructible past. But these different creatures—dispersed across both time and space—did share one remarkable posthumous fate. After their flesh had been consumed by carnivores or bacteria, a bone from each of their skeletons was meticulously crafted by human hands into a flute.

A bone flute from around 33,000 BCE

Bone flutes are among the oldest known artifacts of human technological ingenuity. The Slovenian and German flutes date back to the very origins of art; the caves where some of them were found also featured drawings of animals and human forms on their walls, suggesting the tantalizing possibility that our ancestors gathered in the firelit caverns to watch images flicker on the stone walls, accompanied by music. But musical technology is likely far older than the Paleolithic. The Slovenian and German flutes survived because they were made of bone, but many indigenous tribes in modern times construct flutes or drums out of reeds and animal skin, materials unlikely to survive tens of thousands of years. Many archeologists believe that our ancestors have been building drums for at least a hundred thousand years, making musical technology almost as old as technology designed for hunting or temperature regulation.

This chronology is one of the great puzzles of early human history. It seems to be jumping more than a few levels in the hierarchy of needs to go directly from spearheads and clothing to the invention of wind instruments. Aeons before early humans started to imagine writing or agriculture, they were crafting tools for making music. This seems particularly puzzling because music is the most abstract of the arts. Paintings represent the inhabitants of the world that our eyes naturally perceive: animals, plants, landscapes, other people. Architecture gives us shelter. Stories follow the arc of events that make up a human life. But music has no obvious referent beyond a vague association with the chirps and trills of birdsong. No one likes a hit record because it sounds like the natural world. We like music because it sounds like music—because it sounds different from the unstructured noise of the natural world. And what sounds like music is much closer to the abstracted symmetries of math than any experience a hunter-gatherer would have had a hundred thousand years ago.

A brief lesson in the physics of sound should help underscore the strangeness of the archeological record here. Some of the bone flutes recovered from Paleolithic cave sites are intact enough that they can be played, and in many cases, researchers have found that the finger holes carved into the bones are spaced in such a way that they can produce musical intervals that we now call perfect fourths and fifths. (In the terms of Western music, these would be F and G in the key of C.) Fourths and fifths not only make up the harmonic backbone of almost every popular song in the modern canon but are also among the most ubiquitous intervals in the world’s many musical systems. Though some ancient tonal systems, like Balinese gamelan music, evolved without fourths and fifths, only the octave is more common. Musicologists now understand the physics behind these intervals, why they seem to trigger such an interesting response in the human ear. An octave—two notes exactly twelve half steps apart from each other on a piano keyboard—exhibits a precise 2:1 ratio in the wave forms it produces. If you play a high C on a guitar, the string will vibrate exactly two times for every single vibration the low C string generates. That synchronization—which also occurs with the harmonics or overtones that give an instrument its particular timbre—creates a vivid impression of consonance in the ear, the sound of those two wave forms snapping into alignment every other cycle of the higher note. The perfect fourth and fifth have comparably simple ratios: a fourth is 4:3, while a fifth is 3:2. If you play a C and a G note together, the higher G string will vibrate three times for every two vibrations of the C. By contrast, a C and F# played together create the most dissonant interval in the Western scale—the notorious tritone, once called the “devil’s interval”—with a ratio of 45:32.
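To make the arithmetic concrete, here is a minimal Python sketch, assuming the idealized ratios quoted above (just intonation) rather than the equal temperament of a modern piano, which only approximates them. For each interval it reports how many cycles each note completes before the two wave forms start a cycle together again; the simpler the ratio, the sooner they realign. The function names and the 261.63 Hz figure for middle C are illustrative choices, not taken from the text.

```python
from fractions import Fraction

# Idealized just-intonation ratios, as quoted in the text. This is a
# simplifying assumption: a modern piano is tuned in equal temperament,
# which only approximates these ratios.
INTERVALS = {
    "octave": Fraction(2, 1),
    "perfect fifth": Fraction(3, 2),
    "perfect fourth": Fraction(4, 3),
    "tritone": Fraction(45, 32),
}


def realignment(ratio: Fraction) -> tuple[int, int]:
    """Return (lower_cycles, upper_cycles): how many full cycles each note
    completes before both wave forms start a new cycle together again."""
    # Fraction keeps p/q in lowest terms, so the upper note completes p
    # cycles in exactly the time the lower note completes q cycles.
    return ratio.denominator, ratio.numerator


def upper_frequency(base_hz: float, ratio: Fraction) -> float:
    """Frequency of the upper note of an interval built on base_hz."""
    return base_hz * ratio.numerator / ratio.denominator


if __name__ == "__main__":
    middle_c = 261.63  # approximate frequency of middle C, in hertz
    for name, ratio in INTERVALS.items():
        low, high = realignment(ratio)
        ratio_text = f"{ratio.numerator}:{ratio.denominator}"
        print(
            f"{name:<15} {ratio_text:>6}  upper note ~{upper_frequency(middle_c, ratio):.1f} Hz; "
            f"realigns every {low} lower / {high} upper cycles"
        )
```

Under these assumptions the octave realigns after a single cycle of the lower note and the fifth after two, while the tritone needs 32 cycles of the lower note (45 of the upper) before its wave forms coincide again, which gives a rough intuition for why it sounds so much rougher.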

The existence of these ratios has been known since the days of ancient Greece; the tuning system that features them is often called Pythagorean tuning, after the Greek mathematician who, legend has it, first identified them. (Today, the average seventh grader knows Pythagoras for his triangles, but his ratios are the cornerstone of every pop song on Spotify.) The study of musical ratios marked one of the very first moments in the history of knowledge where mathematical descriptions productively explained natural phenomena. In fact, the success of these mathematical explanations of music triggered a two-thousand-year pursuit of similar cosmological ratios in the movements of the sun and planets in the sky—the famous “music of the spheres” that would inspire Kepler and so many others.

Wave forms, integer ratios, overtones—none of these concepts were available to our ancestors in the Upper Paleolithic. And yet, for some bizarre reason, they went to great lengths to build tools that could conjure these mathematical patterns out of the simple act of exhaling. Put yourself in that Slovenian cave forty thousand years ago: you’ve mastered fire, built simple tools for hunting, learned how to craft garments from animal skins to keep yourself warm in the winter. An entire universe of further innovation lies in front of you. What would you choose to invent next? It seems preposterous that you would turn to crafting a tool that created vibrations in air molecules that synchronized at a perfect 3:2 ratio when played together. Yet that is exactly what our ancestors did.

—

Why were early humans so intent on producing acoustic waves that traveled in such clean integer ratios? The fact that they made musical instruments without understanding the fundamentals of acoustic theory is not so puzzling, of course. There were obviously many cases where evolution had steered them toward objectives whose underlying nature was not at all understood; the Paleolithic hunter-gatherers sought out—and built tools to acquire—the tastes of sugars and fats without knowing anything about the molecular chemistry of carbohydrates and lipids. But sugars and fats are essential components of our diets; we die if we don’t eat enough of them. Listening to a 4:3 ratio doesn’t bestow the same obvious benefits. Our hominid relatives had survived for millions of years without hearing them at all. “Without music, life would be a mistake,” Nietzsche famously proclaimed. But there would still be life. Without sex, or water, or proteins, the human race would cease to exist. Without perfect fourths, we’d be robbed of the bass hook from “Under Pressure”—and just about every piece of music written in the past few centuries—but we’d survive as a species.

I suspect music first emerged not with a need but with a difference: an unusually resonant sound happened to emerge out of the structure of some hollow object—a reed or a bone—creating a tone just different enough from the ordinary cacophony of the world that the ear took note. The sound wasn’t meaningful yet, or laden with the kind of emotional overtones that humans now associate with music. It was just new. And like the unusual shade of Tyrian purple, because the sound was new, it was interesting, worth repeating, worth tinkering with. As these early instruments began to be capable of triggering octaves when played as an ensemble, it may be that our distant ancestors found the sound particularly evocative because male and female voices are, on average, roughly an octave apart, and so the strange consonance of the proto-flute seemed to echo the sound of a conversation between a man and a woman. Perhaps chanting in harmony predated the first instruments, and so when the random variation of evolution happened to concoct a bone or a stalk that by sheer coincidence generated an octave, and we in turn stumbled across that bone or stalk, we found the echoes so alluring that we set about engineering the effect ourselves.

Because music has such a long history in human society, some scientists believe that an appetite for song is part of the genetic heritage of Homo sapiens, that our brains evolved an interest in musical sounds the way they evolved color perception or the ability to recognize faces. The question of whether music is a cultural invention or an evolutionary adaptation has been a contentious one in the last decade or so, a debate initially triggered by Steven Pinker’s best-selling manifesto of evolutionary psychology, How the Mind Works. Pinker is famous for seeing the mind as a kind of toolbox with a set of specific attributes shaped by the evolutionary pressures of our ancestral environments. But music he considers to be a cultural hack, designed to trigger circuits in the brain that evolved for more pressing tasks. In one of the book’s most controversial passages, he compared music to strawberry cheesecake:

We enjoy strawberry cheesecake, but not because we evolved a taste for it. We evolved circuits that gave us trickles of enjoyment from the sweet taste of ripe fruit, the creamy mouth feel of fats and oils from nuts and meat, and the coolness of fresh water. Cheesecake packs a sensual wallop unlike anything in the natural world because it is a brew of megadoses of agreeable stimuli which we concocted for the express purpose of pressing our pleasure buttons . . . music is auditory cheesecake, an exquisite confection crafted to tickle the sensitive spots of at least six of our mental faculties.

Something about that cheesecake metaphor did not sit well with other scientists, and in the years that followed, many argued that the taste for music must have had some direct adaptive value, given the prominence of musical instruments in the early archeological record—and the ubiquity of music across all human societies. Some believe that musical chanting may have predated language itself, that words and sentences evolved out of the prelinguistic communication of harmony and rhythm. The researchers who discovered one set of bone flutes in Germany argue that music may have played a key role in cementing social bonds among early humans: “The presence of music in the lives of early Upper Paleolithic peoples did not directly produce a more effective subsistence economy and greater reproductive fitness,” they write. “Viewed, however, in a broader behavioral context, early Upper Palaeolithic music could have contributed to the maintenance of larger social networks, and thereby perhaps have helped facilitate the demographic and territorial expansion of modern humans relative to culturally more conservative and demographically more isolated Neanderthal populations.” Others take the sexual conquests of modern musicians as a sign that musical talent may be a trait encouraged by sexual selection: having a gift for song didn’t make you more likely to survive the challenges of life in the Upper Paleolithic, but it did make you more likely to reproduce your genes.

One premise unites both sides in this debate: that music “presses our pleasure buttons,” as Pinker describes it. Yet there is something too simple in describing our appetite for music in this way. Sugar and opiates, to give just two examples, press pleasure buttons in the brain in a relatively straightforward fashion. Given a taste of one, we instinctively return for more of the same, like those legendary lab rats endlessly pressing the lever for more stimulants. And we put our ingenuity to work concocting ever-more-efficient delivery mechanisms for these forms of pleasure: we refine opium into heroin; we start selling soda in Big Gulp containers. But music—like the patterns and colors unleashed by the fashion revolution—appears to resonate with our pleasure centers at more of an oblique angle. The pleasure in hearing those captivating sounds doesn’t just establish a demand for more of the same. Instead, music seems to send us out on a quest for new experiences: more of the same, but different.

Wherever you fall on the evolutionary question, music confronts us with one undeniable paradox: this most abstract and ethereal of entertainments—conjured up out of invisible symmetries of air molecules vibrating—has a longer history of technological innovation than any other form of art. Ever since the tones generated by that first bone flute resonated in our ears, we’ve been chasing new sounds, new timbres, new harmonies. And that pursuit led to countless technological breakthroughs that shaped modern life in entirely nonmusical ways.

The pursuit of novelty recurs again and again in the history of play. One way to imagine it is that evolution has given us two kinds of pleasure buttons. The first is an all-hands-on-deck kind of button: we need food; we need warmth; we need offspring. If we don’t have those things, we will die, or our genes will not be passed on to the next generation. So there are “pleasure buttons” associated with satisfying those needs: the pleasures of sex, or eating proteins and carbohydrates. But there are other, less urgent pleasures, like the sound of air being blown through a vulture bone. We don’t need to hear that sound in any existential sense, but nonetheless something about it captures our attention, prods us to seek the experience out in future environments. But at the same time, something about it compels us to vary the experience. The pure pleasure buttons in the brain, like the endorphin system, don’t compel you to seek out anything other than increasing amounts of endorphins. In fact, the pleasure associated with them is so powerful that most people who get addicted to artificial versions, like heroin or OxyContin, lose interest in other experiences altogether. The pull of opiates is centripetal; most heroin victims die alone for a reason. But music, like other similar forms of play, is a push: it propels you to seek out new twists.

That exploratory, expansive drive is what separates delight from demand: when we are in play mode, we are open to new surprises, while our base appetites focus the mind on the urgent needs of staying alive. Understanding that distinction is critical to understanding why play—despite its seemingly frivolous veneer—has led to so many important discoveries and innovations. The question of why the Homo sapiens brain possesses this strange hankering for play and surprise is a fascinating one, and I will return to it in the final pages of this book. But for now, we need to establish just how far those playful explorations took us.

The bone flutes must have sounded enchanting to the early humans of the Upper Paleolithic. But they were just the beginning.

—

The Banu Musa—those brilliant toy designers from the Islamic golden age—earned a permanent place in the pantheon of engineering and robotics with their Book of Ingenious Devices. And yet the brothers omitted from that collection what may have been their most ingenious device of all, a machine that would introduce one of the most important concepts of the digital age more than a thousand years before the first computers were built. Evidence of their design lies in a separate treatise, transcribed by a scholar in the twelfth century and discovered a hundred years ago in a library at Three Moons College in Syria. Like most of the Banu Musa’s work, the document is an intensely technical how-to guide for building a machine with hundreds of distinct components. But the title has a captivating clarity to it: “The Instrument Which Plays by Itself.”

“We wish to explain,” the brothers announce in the opening lines, “how an instrument is made which plays by itself continuously in whatever melody we wish, sometimes in a slow rhythm and sometimes in a quick rhythm, and also that we may change from melody to melody when we so desire.” The instrument in question was a hydraulic, or water-powered, organ, similar in design to organs built by the Greeks and Romans centuries before. Yet the Banu Musa device had one crucial feature that no instrument designer had ever implemented. The notes played by the organ were not triggered by human fingers on a keyboard. Instead, they were triggered by what came to be known as a pinned cylinder—a barrel with small “teeth,” as the brothers called them, irregularly distributed across its surface. As the barrel rotated, those teeth activated a series of levers that opened and closed the pipes of the organ. Different patterns of “teeth” produced different melodies as the air was allowed to flow through the pipes in unique sequences. The brothers explained how a melody could be encoded onto these cylinders by capturing the notes played by a live musician on a rotating drum covered by black wax, strongly reminiscent of the phonographic technology that wouldn’t be invented for another thousand years. (The Banu Musa system recorded the specific notes played, not sound waves, however.) The marks etched in the wax could then be translated into the teeth of the cylinder. Anticipating the slang that eventually developed for the record industry, the Banu Musa described this process as “cutting” a melody. In a fitting echo of the musical innovations that preceded them, the Banu Musa even included a description of how their instrument could be embedded inside an automaton, creating the illusion that the robot musician was playing the encoded melody on a flute.

Reconstruction of the Banu Musa’s self-playing music automaton
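The separation the brothers describe, one fixed mechanism driven by interchangeable cylinders, can be sketched as a toy model in Python. This is an illustrative abstraction under loose assumptions, not a reconstruction of the Banu Musa’s design: the barrel is treated as a ring of discrete positions, each tooth is a hypothetical (step, pipe) pair, and `cut_barrel` and `play` are names invented here. Changing the tempo changes only a playback parameter; changing the melody means cutting a new barrel, while the machine itself stays the same.

```python
from dataclasses import dataclass
from typing import Iterator

# A toy model, NOT a reconstruction of the Banu Musa's mechanism: the
# barrel's circumference is divided into `steps` discrete positions, and a
# tooth is a hypothetical (step, pipe) pair. When a tooth passes the levers,
# it opens the valve for that pipe.


@dataclass(frozen=True)
class Barrel:
    steps: int
    teeth: frozenset  # frozenset of (step, pipe) pairs


def cut_barrel(marks: list[tuple[int, str]], steps: int) -> Barrel:
    """'Cut' a melody: turn (step, pipe) marks, like those a performer left
    in the rotating wax drum, into teeth on a barrel."""
    for step, _pipe in marks:
        if not 0 <= step < steps:
            raise ValueError(f"step {step} does not fit on a {steps}-step barrel")
    return Barrel(steps, frozenset(marks))


def play(barrel: Barrel, rotations: int = 1,
         seconds_per_step: float = 0.5) -> Iterator[tuple[float, list[str]]]:
    """Yield (time in seconds, pipes opened) as the barrel turns.
    seconds_per_step stands in for how fast the barrel is driven, so the
    same barrel can play 'in a slow rhythm' or 'in a quick rhythm'."""
    for n in range(rotations * barrel.steps):
        step = n % barrel.steps  # the barrel loops after each full rotation
        pipes = sorted(pipe for s, pipe in barrel.teeth if s == step)
        if pipes:
            yield n * seconds_per_step, pipes


if __name__ == "__main__":
    # Same "machine", two different programs: swap barrels to change tunes.
    arpeggio = cut_barrel([(0, "C"), (1, "E"), (2, "G"), (3, "C"), (3, "G")], steps=4)
    drone = cut_barrel([(0, "C"), (2, "C")], steps=4)
    for barrel in (arpeggio, drone):
        print(list(play(barrel, seconds_per_step=0.25)))
```

The design choice worth noticing is that `play` never changes between tunes: everything that distinguishes one melody from another lives in the data on the barrel, which is the sense in which the instrument was programmable rather than merely automated.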

The result was not just an instrument that played itself, as marvelous as that must have been. The Banu Musa were masters of automation, to be sure, but humans had been tinkering with the idea of making machines move in lifelike ways since the days of Plato. Animated peacocks, water clocks, robotic dancers—all these contraptions were engineering marvels, but they also shared a fundamental limitation. They were locked in a finite routine of movements. But the instrument that played itself was not restrained in the same way. You could dream up new melodies for it, instruct it to produce new patterns of sound. The water clocks the Banu Musa built were automated. But their “instrument” was endowed with a higher-level property. It was programmable.

Conceptually, this was a massive leap forward: machines designed specifically to be open-ended in their functionality, machines controlled by code and not just mechanics. A direct line of logic connects the “Instrument Which Plays by Itself” to the Turing machines that have so transformed life in the modern age. You can think of the instrument as the moment where the Manichean divide between hardware and software first opened up. An invention that itself makes invention easier, faster, more receptive to trial and error. A virtual machine.

Yet it must have been hard to see (or hear) its significance at the time. To the untutored spectator, it might have paled beside the animated peacocks. So the organ sometimes played one sequence of notes, and sometimes played other tunes—how big a deal could that be? The textual evidence suggests that the Banu Musa were onto the significance of the advance; they devoted many pages in their assembly manual to discussing the technique for “cutting” new cylinders and for modifying the playback of existing melodies. But they couldn’t have grasped where that step change would eventually lead.

Something about the concept of programmable music continued to attract the attention of engineers and instrument designers during the centuries that followed. Second only to timepieces, music boxes were some of the most advanced works of mechanical engineering in the sixteenth and seventeenth centuries; almost without exception, these devices employed the pinned cylinder devised by the Banu Musa to program the melody and chords. Like the automata that succeeded them, these contraptions were meant to delight and amuse the elite, not compete with serious musical performances. They were playthings, novelties—but, through that play, a larger and more revolutionary idea was slowly taking shape.

—

The next milestone in the evolution of that idea would have been visible to any curious-minded Parisian in the late 1730s. Wandering into the reception room of the Hôtel de Longueville in Paris, on the site now occupied by part of the Tuileries, one would have seen, amid the ornate furnishings, a life-sized shepherd crouching on a pedestal, playing a flute. While the shepherd was in fact a machine, it played the flute the way any human would: by blowing air through the mouthpiece in varying rhythms, while covering and uncovering air holes on the body of the flute itself. Hidden inside the pedestal, air pumps and crankshafts controlled the pressure of the air released through the shepherd’s mouth, along with the movement of the automaton’s fingers. A pinned cylinder controlled the volume and sequence of the notes played; a rotating collection of cylinders allowed the shepherd to play twelve distinct songs.

The flute player was the creation of Jacques de Vaucanson, the French automaton designer now most famous for his “digesting duck.” (The duck would appear at the Hôtel de Longueville on a pedestal next to the flute player the following year.) Vaucanson was the first of the automaton designers to focus on creating truly lifelike behavior in his machines, with movements that were predicated on careful anatomical study. After a decade of desultory work and travel displaying a handful of early designs, Vaucanson had attracted the patronage of a Parisian gentleman named Jean Marguin. With sufficient economic backing for the first time in his life, Vaucanson set out to design a machine that lived up to his ambitions: technology so advanced it would simulate the breath of life itself. Without realizing it, he was retracing the steps that the Paleolithic toolmakers had followed forty thousand years before. He took the most advanced engineering knowledge the world had ever seen, and used it to create 4:3 ratios in sound waves by blowing air through the holes carved in a hollow tube.

The bio-mimicry of the flute player’s finger work and breathing would have impressed the Banu Musa, but the fundamental principles behind Vaucanson’s design were essentially the same as the “Instrument Which Plays by Itself.” The “programming” that controlled the machine’s behavior came from the patterns of teeth on a rotating cylinder, and the power of that programmability was harnessed to play music. We should not overlook the strange nature of the technological history here: for eight hundred years, humans had possessed the protean resource of programmability, and over that time they had used that resource exclusively to generate pleasing patterns of sound waves in the air. (And, in the case of the flute player, to accompany those sound waves with physical movements that mimicked the behavior of human musicians.) Think of all the ways that the world has been transformed by software, by machines whose behavior can be sculpted and reimagined by new instruction sets. For almost a thousand years, we had that meta-tool in our collective toolbox, and we did nothing with it other than play music.

Jacques de Vaucanson

Vaucanson’s flute player, however, would lead us out of that functional cul-de-sac. Designing the programmable cylinders that brought the musical shepherd to life suggested another application to Vaucanson, one that had far more commercial promise than showcasing androids in hotel lobbies. If you could use pinned cylinders to trigger complex patterns of sound waves, Vaucanson thought, why couldn’t you use the same system to trigger complex patterns of color? If you could build a machine with enough mechanical dexterity to play a flute, why couldn’t you build a similar machine to weave a pattern out of silk?

By 1741, Vaucanson’s scandalous triumph with the digesting duck had made him a minor celebrity in France, acclaimed for his showmanship as much as for his engineering; he parlayed that success into a royal appointment advising Louis XV on plans to revitalize the French weaving industry, which was widely considered to have lagged behind its more technically ambitious competitors on the other side of the Channel. He toured the preindustrial looms of the country and began making plans for a machine that would do to fabric what the Banu Musa had done to melody. Instead of opening airholes in an organ or moving an android’s fingers, Vaucanson’s loom would control an array of hooks and needles that switched between different warp threads according to instructions encoded in a pinned cylinder. The same machine could be taught to weave a vast set of potential patterns out of silk. For the first time, programming would escape the boundaries of song.

That, at least, was the theory. In practice, the machine that Vaucanson ultimately built was hamstrung by the difficulty of translating the patterns onto the pinned cylinders, which were expensive to “cut.” The patterns themselves had to adhere to repetitive designs, since the pattern looped with each rotation of the cylinder. Though several working prototypes were built, the machine never found a home in the French textile industry. But one of those prototypes survived long enough to find its way into the collection of the Conservatoire des Arts et Métiers, an institute formed in the early days of the French Revolution, more than a decade after Vaucanson’s death. In 1803, an ambitious inventor from Lyon named Joseph-Marie Jacquard made a pilgrimage to the conservatoire to inspect Vaucanson’s automated loom. Recognizing both the genius and the limitations of the pinned cylinder, Jacquard hit upon the idea of using a sequence of cards punched with holes to program the loom. In Jacquard’s design, small rods, each attached to an individual thread, pressed against the punch cards; if they encountered the card’s surface, the thread remained stationary. But if the rod pushed through a hole in the card, the corresponding warp thread would be woven into the fabric. It was, in its way, a kind of binary system, the holes in the cards reflecting on-off states for each of the threads. The cards were far easier to manufacture than the metal cylinders, and they could be arranged to create an infinite number of patterns. The automated nature of Jacquard’s loom also made it more than twenty times faster than traditional drawlooms. “Using the Jacquard loom,” James Essinger writes, “it was possible for a skilled weaver to produce two feet of stunningly beautiful decorated silk fabric every day compared with the one inch of fabric per day that was the best that could be managed with the drawloom.”

Joseph-Marie Jacquard displaying his loom
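The on-off logic of Jacquard’s cards can be made concrete with a small Python sketch. It is a toy model under stated assumptions, not Jacquard’s actual mechanism: each card is written as a string in which “O” marks a hole and “.” marks solid card, each position stands for one warp thread, and the function names are invented for illustration. Each card yields one row of the woven design, and because the cards form a chain rather than a fixed cylinder, the design can run for as many rows as there are cards instead of repeating with every rotation.

```python
# A toy model of card-controlled weaving, NOT Jacquard's exact mechanism.
# Assumptions: each card is one string, one character per warp thread;
# 'O' is a hole (the rod passes through and that warp thread is lifted for
# this pass of the weft) and '.' is solid card (the rod is blocked and the
# thread stays down).


def read_card(card: str) -> list[bool]:
    """Convert one punched card into on/off states, one per warp thread."""
    unexpected = set(card) - {"O", "."}
    if unexpected:
        raise ValueError(f"unexpected symbols {sorted(unexpected)} in card {card!r}")
    return [symbol == "O" for symbol in card]


def weave(cards: list[str]) -> list[str]:
    """Weave one row of cloth per card in the chain: '#' where a lifted
    warp thread shows on the face of the fabric, ' ' where the weft covers it."""
    if not cards:
        return []
    width = len(cards[0])
    rows = []
    for card in cards:
        if len(card) != width:
            raise ValueError("every card must address the same number of warp threads")
        rows.append("".join("#" if lifted else " " for lifted in read_card(card)))
    return rows


if __name__ == "__main__":
    # A chain of cards can be as long as the design requires; unlike a
    # pinned cylinder, it does not have to repeat after one rotation.
    diamond = ["..O..", ".O.O.", "O...O", ".O.O.", "..O.."]
    print("\n".join(weave(diamond)))
```

For scale, Essinger’s figures work out to 24 inches of fabric a day against a single inch, consistent with the “more than twenty times faster” comparison.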

The Jacquard loom, patented in 1804, stands today as one of the most significant innovations in the history of textile production. But its most important legacy lies in the world of computation. In 1839, Charles Babbage wrote a letter to an astronomer friend in Paris, inquiring about a portrait he had just encountered in London, a portrait that when viewed from across the room seemed to have been rendered in oil paints, but on closer inspection turned out to be woven entirely out of silk. The subject of the portrait was Joseph-Marie Jacquard himself. In his letter Babbage explained his interest in the legendary textile inventor:

You are aware that the system of cards which Jacard [sic] invented are the means by which we can communicate to a very ordinary loom orders to weave any pattern that may be desired. Availing myself of the same beautiful invention I have by similar means communicated to my Calculating Engine orders to calculate any formula however complicated. But I have also advanced one stage further and without making all the cards, I have communicated through the same means orders to follow certain laws in the use of those cards and thus the Calculating Engine can solve any equations, eliminate between any number of variables and perform the highest operations of analysis.

Babbage borrowed a tool designed to weave colorful patterns of fabric, which was itself borrowed from a tool for generating patterns of musical notes, and put it to work doing a new kind of labor: mechanical calculation. When his collaborator Ada Lovelace famously observed that Babbage’s Analytical Engine could be used not just for math but potentially for “composing elaborate . . . pieces of music,” she was, knowingly or not, bringing Babbage’s machine back to its roots, back to the “Instrument Which Plays by Itself” and Vaucanson’s flute player. Always one to celebrate his influences, Babbage managed to acquire one of the silk portraits of Jacquard and displayed it prominently in his Marylebone home alongside Merlin’s dancer and his Difference Engine.

The thread that connects Jacquard to Babbage to the digital pioneers of the 1940s is well-worn, for good reasons. Most histories of computation include a nod to Jacquard’s punch cards, even though technically speaking he wasn’t programming a computational device. But punch cards did persist as the dominant input and data storage device for digital information well into the second half of the twentieth century. (I can remember using punch cards as a grade-schooler in the 1970s.) Fittingly, punch cards were replaced as input devices by keyboards, and as storage devices by magnetic tape: both technologies, as we will see, that were originally designed to play or record music.

But there is another reason why tech historians are so quick to celebrate Jacquard’s role in jump-starting the age of computation. The handoff from loom to Analytical Engine follows the wider pattern of economic paradigm shifts: the industry that launched the industrial revolution—textiles—provides the seeds for the digital revolution two centuries later. There’s a kind of macroeconomic poetry to telling the story this way: one dominant mode of production laying the groundwork for one of its successors. But when you step back and look at the history from a wider angle—from the Banu Musa through the music-box curios to Vaucanson and his flute—you can’t help noticing how long the idea of a programmable machine was kept in circulation by the propulsive force of delight, and not industrial ambition: first the patterns of sounds, produced by instruments that play themselves, then the patterns of color on a cloth. The entrepreneurs and industrialists may have turned the idea of programmability into big business, but it was the artists and the illusionists who brought the idea into the world in the first place.

—

In October of 1608, Ferdinando I, the Grand Duke of Tuscany and head of the Medici dynasty, hosted a monthlong pageant in Florence to celebrate the marriage of his son Cosimo to an Austrian archduchess. Given the vast wealth of the Medici clan, and the years of geopolitical negotiations that had preceded the engagement, the wedding was destined to be an extravagant affair: closer, in modern terms, to a city hosting the Olympics than a celebrity wedding. Jousting tournaments, equestrian ballets, even a mock naval battle on the Arno were all staged in the days leading up to the ceremony. The official wedding banquet was crowned with a theatrical production of a play (written by Michelangelo’s grandnephew, Michelangelo Buonarroti the Younger) interspersed with six musical numbers, known as intermedi, all based on new scores written for the wedding festivities by a team of court composers. The sixth and final intermedio featured an elaborate stage set in which the gods descended from the heavens to extend their welcome and congratulations to the newlyweds. A sketch of the stage design by young Michelangelo shows roughly a hundred musicians, singers, and dancers onstage—some of them apparently hovering above the audience in simulated clouds.

The tradition of lavish intermedi—which may have hit its climax with Cosimo’s wedding banquet—is of particular interest to music historians, because it served as a kind of transitional form that would, over the course of the 1600s, solidify into the set of dramatic and orchestral conventions that we now call opera. But for our purposes, consider that extravagant performance purely in terms of the technology on display. Think first of the tools available to artists or scientists in the early years of the seventeenth century: the printing press was only a hundred and fifty years old; telescopes were just being deployed for the first time; microscopes were still fifty years away. Beyond the printing press, a writer’s tools would have been indistinguishable from the quills and ink that the Greeks and Romans used more than two thousand years before. Painters had access to new oil paints that had been developed in the preceding century; some used the older technology of the camera obscura to create the distinctive realism of Renaissance painting. Needless to say, the technologies of photography and cinema were unimaginable to the creative class that the Medicis supported.

By contrast, the composers of the wedding banquet intermedi had at their disposal a rich and diverse array of musical technologies with which to entertain the Medicis and their guests. A roster of instruments that played during the final intermedio—an ensemble that bears a clear resemblance to a modern orchestra—gives a vivid picture of music’s technological dominance in the early 1600s. To create their sonic extravaganza, the court composers drew upon multiple variations of plucked stringed instruments, including lutes, chitarroni, and citterns—instruments that date back at least to ancient Egypt. They employed a now largely extinct wind instrument called the cornett that was carved out of wood with a mouthpiece made of ivory, along with keyboard-based pipe organs that enabled long, sustained notes, an instrument that dates back to Roman times. Percussive sounds were generated by bronze cymbals and triangles. (Interestingly, the ensemble seems to have lacked any form of traditional drum.) The intermedio also featured metal trombones with a distinctive sliding tube to change pitch—a relatively new addition to the composer’s palette, having been invented only in the fifteenth century. The most advanced instruments in the ensemble would have been the bowed string instruments: violins, violas, bass violins—technology so advanced that by most accounts it peaked less than a century after the Medici wedding with the production of the legendary Stradivarius violins still played by world-class soloists. And, of course, the ensemble included flutes, the descendants of those mammoth and vulture bone flutes from the Upper Paleolithic.

As diverse as the Florentine proto-orchestra might seem, several key instruments commonly used during that period were not featured in the performance: trumpets, harps, and, most important, the new keyboard-based clavichord and harpsichord. (The pianoforte, now called simply the piano, wouldn’t be invented for another century or so.) Viewed purely in terms of the technological innovation at their disposal, the poets and painters of the early seventeenth century were living in the Stone Age compared to the high-tech bounty available to the composers. That extensive inventory of sonic tools illustrates how, from the very beginning, our appetite for music has been satisfied by engineering and mechanical craft as much as by artistic inspiration. The innovations that music inspired turned out to unlock other doors in the adjacent possible, in fields seemingly unrelated to music, the way the “Instrument Which Plays by Itself” carved out a pathway that led to textile design and computer software. Seeking out new sounds led us to create new tools—which invariably suggested new uses for those tools.

Legendary violin maker Stradivari’s workshop

Consider one of the most essential and commonly used inventions of the computer age: the QWERTY keyboard. Many of us today spend a significant portion of our waking hours pressing keys with our fingertips to generate a sequence of symbols on a screen or page: typing up numbers in a spreadsheet, writing e-mails, or tapping out texts on virtual keyboards displayed on smartphone screens. Anyone who works at a computer all day likely spends far more time interacting with keyboards than with more celebrated modern inventions like automobiles. Once the province of the great American novelists, clerks, and the typing pool, the keyboard has become so ubiquitous that we rarely celebrate it as an invention at all, preferring instead to pay tribute to the technically more advanced machines those keyboards are now connected to. But the idea of using the pads of our fingers to generate language—with individual keys mapped onto letters in the alphabet—was a tremendous breakthrough; without it, the digital revolution would have been at the very least significantly delayed. Technically speaking, keyboards were not as essential to advanced computation as the idea of programmability that Babbage inherited, indirectly, from the Banu Musa. But on a practical level, the existence of text keyboards allowed computers to achieve astonishingly complex feats. Other input mechanisms, like punch cards, were simply too slow. The chips might have followed the upward arc of Moore’s law for a spell, but eventually the hardware would have hit a ceiling dictated by the software: without keyboards, writing code even for, say, a 1970s mainframe would have been tedious beyond belief. Voice entry might have one day offered an equivalently powerful medium for writing software, but it is unlikely that we would have been able to compose software smart enough to recognize spoken language without the speed and precision that keyboards supplied. You don’t need keyboards to invent a computer, but it’s awfully hard to get a computer to do anything interesting without also inventing a typing mechanism to go along with it.

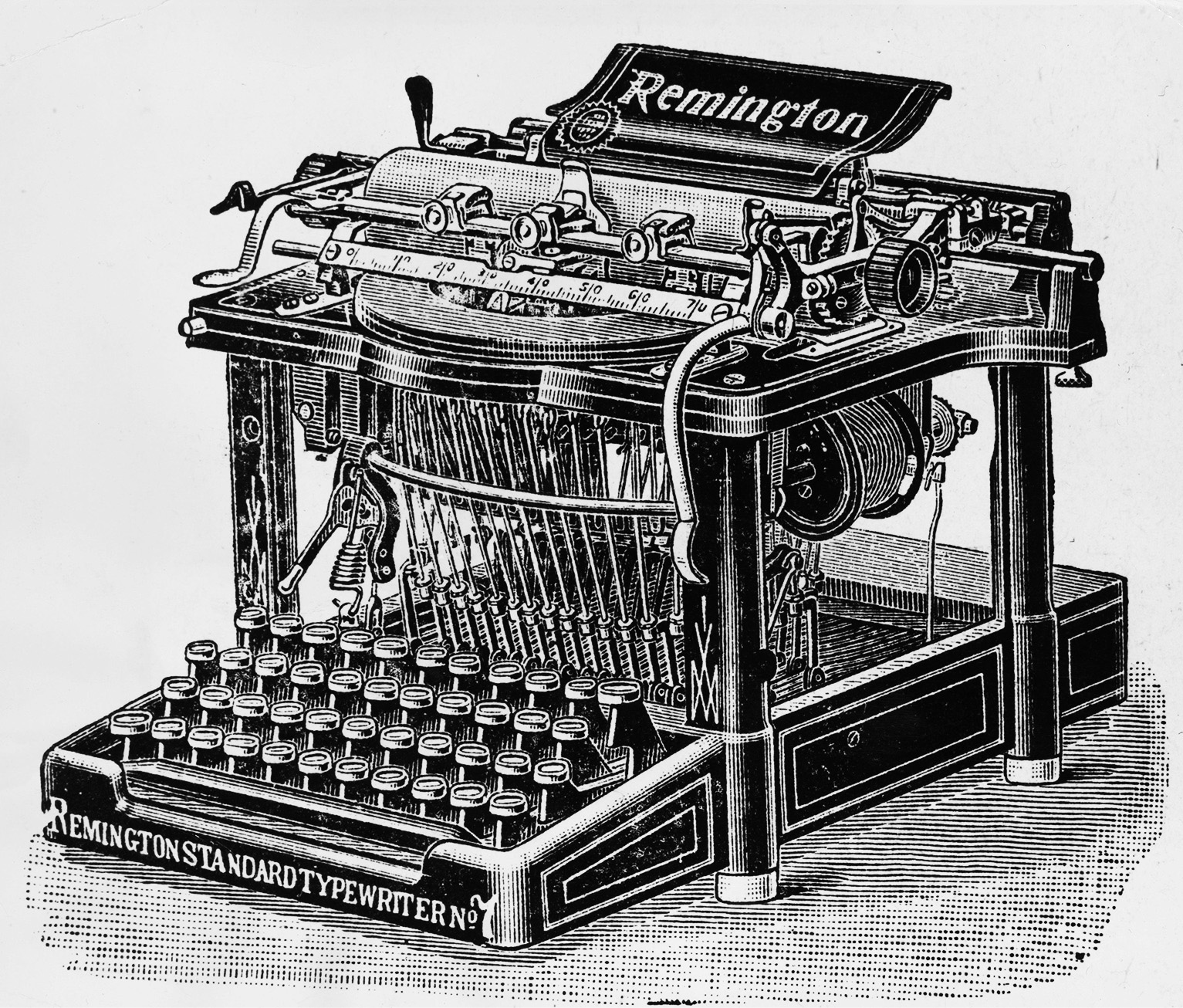

And yet, despite its obvious utility, even in the pre-digital era, the concept of an alphanumeric keyboard seems to have been strangely difficult for people to imagine. Inventors didn’t begin tinkering with “writing machines” until the early 1800s, and the first commercially successful typewriter—the Remington No. 1—didn’t go on the market until 1874. Like the bicycle, which wasn’t invented until the middle of the 1800s, the typewriter is one of those technologies that seems to have emerged much later than it should have. Gutenberg had demonstrated the utility and commercial value of mechanized typesetting in the 1400s; Renaissance- and Enlightenment-era clockmakers—and automaton designers—had the engineering skills to design machines far more complex than the original Remington. “From a mechanical point of view,” historian Michael Adler writes, “there is no reason why a writing machine could not have been built successfully in the fourteenth century, or even earlier.” And yet no one bothered to do it.

What finally provoked inventors to start tinkering with typewriter keyboard designs? The answer is visible in the word itself: the “keys” we press when we type descend, etymologically, from the musical meaning of the word. While no one thought to build keyboards to trigger language until the nineteenth century, people had been constructing keyboards to make music since the pipe organs of Roman times. While Gutenberg was perfecting his movable type system, dozens of instrument designers across Europe were experimenting with different models of keyboard-based string instruments, devices that eventually solidified into those staples of Baroque music, the clavichord and harpsichord. In the late 1680s, the great-grandson of Cosimo II de’ Medici—whose 1608 wedding had showcased such a wide range of musical devices—recruited an instrument maker named Bartolomeo Cristofori to manage his extensive collection of instruments. Cristofori went on to invent the pianoforte under the patronage of the Medici clan, a keyboard instrument that for the first time allowed notes to be played at different volume levels. (Pianoforte means “soft-loud” in Italian.) By the 1800s, the piano had become one of the most widely played instruments in the world.

Keyboard-based instruments were so appealing to musicians for the same reason that alphanumeric keyboards appeal to us today: they enable us to use all ten of our fingers independently. And as the piano became increasingly prominent in the 1700s, inventors began to think about how the keyboard system might be used not just to play music but also to capture the notes played in some kind of permanent medium. In 1745, a German named Johann Friedrich Unger proposed a device that would draw musical notes on a rolling sheet of paper triggered by a live performance on a piano-style keyboard. Each note would be represented by a straight line, its length determined by the length of time the corresponding key was depressed. (Unger’s proposed score looked remarkably like the MIDI “piano roll” layout used by digital music software today.) While the whole contraption depended on the paper roll unfurling at a constant speed, Unger was strangely silent about how his device would achieve that consistency, which may be one reason he appears to have never actually built the thing. Other inventors followed in his wake, including one Miles Berry, who patented a device in 1836 that punched holes through carbon paper with a stylus, a technique that would later become essential to player pianos.

But the flurry of interest in using keyboards to write musical notes suggested another application, with an even larger potential market: using keyboards to record letters, words, and whole sentences. Inspired by the way pianists played chords by depressing multiple keys simultaneously, a French librarian named Benoit Gonod invented in 1827 a shorthand typewriter using only twenty keys that printed a stream of dots that could be translated back into alphanumeric code. (Gonod’s chordal system is still used by stenographers today.) The details are murky, but at some point in the 1830s or 1840s, an Italian named Bianchi appears to have presented to the Paris academy a “writing harpsichord” that used a piano keyboard and a cylindrical platen wrapped with paper to print actual letterforms directly on the page. The first functioning machine that a modern observer would identify as a typewriter was patented in 1855 by an Italian named Giuseppe Ravizza who had been obsessed with the idea of writing machines for thirty years. He used the same metaphor that Bianchi had used before him, calling his creation the cembalo scrivano, the “writing harpsichord.”

Early Remington typewriter

Other typewriter inventors during this period used piano-style keyboards—with interspersed white and black keys—including a “printing machine” invented by a New Yorker named Samuel Francis in 1857. But by the time the Remington No. 1 hit the market in the 1870s, the musical roots of the typewriter had been washed away, lingering only in the word keyboard itself. The typewriter keyboard was poised to reinvent the way humans communicate. But the idea for this now indispensable tool began in song.

—

Why did music play such an important role in our technological history? One likely reason is that music naturally lends itself toward the creation of codes, more than any human activity apart from language and mathematics. Cuneiform tablets dating back to 2000 BC have been found inscribed with a simple form of musical notation, featuring notes arranged according to what we now call the diatonic scale. Once again, music appears to leap ahead of where it should logically be on the hierarchy of needs. In 2000 BC, most human settlements around the world hadn’t invented a notation system for language yet. And yet somehow the ancient Sumerians were already composing scores.

Rhythm, too, can function as a kind of informational code, as Samuel Morse discovered in the invention of the telegraph. The very first long-distance wireless networks were the “talking drums” of West Africa, percussive instruments that were tuned to mimic the pitch contours of African languages. Complex messages warning of impending invasions, or sharing news and gossip about deaths or marriage ceremonies, could be conveyed at close to the speed of sound across dozens of miles, through relays of drummers situated in each village. Instruments designed originally to set the cadence for dance and other musical rituals turned out to be surprisingly useful for encoding information as well. The origins of the talking drum technology are lost to history; there is no Samuel Morse to celebrate, some ingenious inventor of the original code. But presumably the idea followed roughly the same sequence that brought other civilizations from bone flutes to music boxes: the patterns of synchronized sound were initially triggered for the strange intoxication they induced in their listeners. But, over time, West African inventors, like Vaucanson so many centuries later, began to notice that those patterns could convey something more than mere rhythm or melody. Like the patterns of wave forms that we translate into spoken language, the patterns of tones generated by the drums could carry another layer of meaning. For the first time, symbols were aloft in the air, traveling far beyond the range of the human voice.

Today, of course, our lives are surrounded by encoded information and entertainment. We watch movies and share family photographs and play games that have been translated and compressed into binary code, captured in some kind of storage medium, distributed through optical disks or, increasingly, through the Internet—and then, at the very end of the chain, the code is translated back into sounds and images and words that we can understand, by machines designed to translate these hidden codes for us, turning them into meaningful information. This vast cycle of encoding and decoding is now as ubiquitous as electricity in our lives, and yet, like electricity, the cycle was for all practical purposes nonexistent just a hundred and fifty years ago.

Not surprisingly, one of the very first technologies that introduced the coding/decoding cycle to everyday life took the form of a musical instrument, one with a direct lineage to Vaucanson and the Banu Musa: the player piano. Though its prehistory dates back to the House of Wisdom, a self-playing piano became a central focus for instrument designers in the second half of the nineteenth century; dozens of inventors from the United States and Europe contributed partial solutions to the problem of designing a machine that could mimic the feel of a human pianist. The new opportunities for expression that Cristofori’s pianoforte had introduced posed a critical challenge for automating that expression; it wasn’t enough to record the correct sequence of notes—the player piano also had to capture the loudness of each individual note, what digital music software now calls “velocity.” The first player piano to reach genuine commercial success was the pianola, designed by a Detroit inventor named Edwin Scott Votey in 1895. Powered pneumatically by the suction created by a human pressing foot pedals (which in turn determined the tempo of the performance), the pianola encoded its songs onto perforated rolls of paper—a scrolling version of Jacquard’s punch cards. While dozens of other player pianos swarmed the market in the early 1900s (including one called the Tonkunst—German for “musical art”—from which the phrase honky-tonk may derive), the pianola was such a success that it achieved Kleenex and Band-Aid levels of brand awareness. During the heyday of the player piano—which lasted until around 1930—many consumers simply called them pianolas regardless of the device’s actual brand name.

Newspaper advertisement for the Pianola, 1920s

With the commercial success of the pianola came an innovative new business model: selling new songs that had been encoded in the piano roll format. Today we take this exchange for granted: you buy a piece of hardware and then spend the next few years purchasing software to run on it, code that endows the machine with new functionality. But in 1900, the whole concept of paying for new programming was an entirely novel idea. Hundreds of thousands of songs—from classic compositions to honky-tonk—were recorded in piano-roll format in the first decades of the twentieth century. And while the player piano would be largely killed off by radio and phonographs by the 1930s, the commercial template it established—paying for code—would eventually give rise to some of the most profitable companies in the history of capitalism.

There is something puzzling about the fact that the player piano achieved such dramatic success during this period. Radio, of course, didn’t become a mainstream technology until the 1920s, so the fact that the pianola found a foothold in American homes and public venues before then shouldn’t surprise us. But the phonograph was invented by Edison in 1877, almost twenty years before Votey’s pianola. A phonograph was far less cumbersome as a device—and less expensive—and it would go on to replace the player piano as a “programmable” machine by the 1930s. So why did it take so long? As is so often the case in the history of innovation, the explanation for this strange sequence revolves around the networked nature of technological progress. Inventions are almost never solitary, isolated creatures; they depend on other inventions that complete them, or endow them with new applications that their original inventors never considered. The phonograph on its own was a true breakthrough, capturing the actual analog wave forms of music and human speech for the first time. But it required another, parallel technology to reach mainstream success. A pianola was at its core an actual piano, with hammers hitting actual strings; it generated sound loud enough to fill a clamorous parlor or saloon. But the phonograph was amplified only by the passage of the sound waves through a flaring horn. You had to lean in to hear it, and in a room with any sort of ambient noise, the sound was effectively inaudible. The phonograph was far more versatile than the pianola; you could hear singers, brass bands, orchestras, or poetry. It just wasn’t loud enough. It needed amplification, which arrived via two related inventions: metered electric currents and vacuum tubes. The second you could plug in the phonograph, the pianola’s days were numbered.

—

The distinguished crowd gathered on June 19, 1926, at the Théâtre des Champs-Élysées, the grand art deco concert hall in the eighth arrondissement, would have known they weren’t in for just another night at the opera the second they caught sight of the instruments assembled on the stage. Alongside a handful of percussive instruments that wouldn’t have been out of place at the Medici wedding—xylophones, glockenspiels, bass drums—the ensemble also included a pianola, several traditional pianos, a siren, hammers, saws, a collection of electric bells, and two oversized airplane propellers.

The musicians playing these bizarre instruments were there to perform a piece called Ballet Mécanique, written by the twenty-five-year-old American composer George Antheil. Like many Jazz Age artists and intellectuals, Antheil had left the banal Americana of his upbringing and set sail for the bright lights and bonhomie of the Parisian avant-garde at the age of twenty-one. By the time of the Théâtre des Champs-Élysées premiere, he’d made a home for himself among the cognoscenti: renting a room above Shakespeare and Company from Sylvia Beach; dreaming up collaborations with James Joyce and Ezra Pound; feuding with Igor Stravinsky. Drawing on the Futurists’ obsession with the aesthetics of industrial machinery, Antheil built a reputation as a daring composer eager to widen the definition of what constituted a musical instrument in the first place. (Years later, Antheil, in an act of self-branding that would have impressed Prince, titled his autobiography The Bad Boy of Music, although some of his musical innovations mimicked earlier experiments by Stravinsky and Darius Milhaud.) Ballet Mécanique had originally been conceived as a score for a short experimental film of the same title, but the two works soon took on separate lives—in part because Antheil’s score turned out to be almost twice as long as the film.

As eclectic as the “orchestra” might have seemed on the stage of the Théâtre des Champs-Élysées, it represented a far more streamlined and conventional ensemble than Antheil had envisioned. The centerpiece of his original score was a suite of sixteen pianolas playing four distinct parts, many of them atonal. The “mechanical ballet” that Antheil initially planned to stage had automated machines instead of dancers—the pulsing rhythm and noise of the industrial age transformed into a kind of music, not through the dexterity of the virtuoso player, but through the punched notes of the piano roll. And yet, ultimately, the pianola technology undermined Antheil’s project, in large part because he was asking the technology to do something it was almost never asked to do in ordinary use. A piano roll could effectively mimic the tempo and articulation of an accomplished pianist, coordinating the activity of all eighty-eight notes on the keyboard. But there was no easy way to synchronize the activity of multiple player pianos. Even if you triggered each of them at the exact same time, minuscule differences between the tempos of each machine would quickly cause them to fall out of sync. Today, modern concerts effortlessly sync dozens of different instruments thanks to the digital code of MIDI, but in the midtwenties this was a problem that no one had even conceptualized yet. Instruments were locked to the same tempo because they were being played by human beings who could follow the gestures of the conductor or hear the beat being generated by the rhythm section. But a machine needed mechanical cues to stay in sync with other machines. Antheil had attempted to jerry-rig a system of pneumatic tubes and electric cabling to coordinate the pianolas, but none of his efforts worked in the end. Eventually, he gave up and rewrote the score for a single pianola, accompanied by traditional pianists.
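The problem Antheil ran into is ordinary clock drift, and a back-of-the-envelope model shows how quickly it bites. The sketch below is a hypothetical illustration, not a reconstruction of Antheil’s apparatus: it assumes each pianola runs at a constant speed that is off from a reference by a small fraction, that nothing links the machines together, and that the 0.5 percent error and 120-beats-per-minute tempo are invented example values. The function names are likewise made up for the illustration.

```python
"""Toy model of tempo drift between free-running player pianos.

Hypothetical assumptions: each machine runs at a constant speed that differs
from a reference by a small fractional tempo_error, and there is no shared
clock or cue linking the machines.
"""


def offset_seconds(elapsed_s: float, tempo_error: float) -> float:
    """Seconds of music by which one machine has drifted from the reference.

    A machine running (1 + tempo_error) times as fast covers
    elapsed_s * (1 + tempo_error) seconds of music in elapsed_s seconds of
    real time, so it ends up elapsed_s * tempo_error seconds ahead
    (or behind, if tempo_error is negative).
    """
    return elapsed_s * tempo_error


def offset_beats(elapsed_s: float, tempo_error: float, bpm: float) -> float:
    """The same drift expressed in beats at the given tempo."""
    return offset_seconds(elapsed_s, tempo_error) * bpm / 60.0


def ensemble_spread_beats(tempo_errors: list[float], elapsed_s: float, bpm: float) -> float:
    """Gap in beats between the fastest and the slowest machine."""
    offsets = [offset_beats(elapsed_s, e, bpm) for e in tempo_errors]
    return max(offsets) - min(offsets)


if __name__ == "__main__":
    # Hypothetical: one machine 0.5% fast, one minute in, at 120 bpm.
    print(round(offset_beats(60, 0.005, 120), 3))
    # Sixteen hypothetical machines with errors spread evenly over +/-0.375%.
    errors = [(i - 7.5) * 0.0005 for i in range(16)]
    print(round(ensemble_spread_beats(errors, 60, 120), 3))
```

With those assumed numbers, a single machine running half a percent fast is more than half a beat out of step after one minute (the script prints 0.6), and the fastest and slowest of the sixteen hypothetical machines are nearly a full beat apart (0.9). Because the gap grows linearly with time, starting the machines together cannot cure it; some shared signal has to keep pulling them back into step, which is the job that MIDI timing messages do today.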

Even without the sixteen player pianos, the 1926 premiere managed to provoke a small riot in the theater. With an all-star lineup of Parisian intellectuals in attendance—including Joyce, Pound, T. S. Eliot, and Man Ray—the crowd began to hurl invectives at the stage as the performance rumbled on; when the airplane propellers lurched into action, concertgoers opened up umbrellas to pantomime being blown away by the airflow. A friend of Antheil’s later wrote, “The Ballet began to seem like some monstrous abstract beast, battling with the nerves of the audience, and I began to wonder which one would win out.” At one point, Pound apparently stood up and shouted to the catcallers: “Vous êtes tous des imbéciles!” (“You are all imbeciles!”). Aaron Copland later suggested that the Ballet Mécanique riots were even more tumultuous than the famous outburst that greeted the premiere of Stravinsky’s Sacre du Printemps. (In Copland’s words, Antheil had “outsacked the Sacre.”) Antheil, of course, saw the whole affair as a great triumph: “From this moment on,” he wrote in his autobiography, “I knew that, for a time at least, I would be the new darling of Paris.”

The Ballet Mécanique performance has a kind of quaint nostalgia to it now: those were the good old days, when experimental music performances in concert halls used to provoke mayhem in the aisles. But I think that night in 1926 embodies a set of deeper truths about the way music has long been intertwined with technological innovation. Something about the nature of music encourages subcultures to seek out new kinds of sound, often by designing new instruments, like the synchronized player pianos of Antheil’s original score. Eventually, those new sounds—as harsh or unintelligible as they might be to the first generation of listeners—grow more familiar; mainstream composers and performers integrate them into more traditional songs and arrangements, the way electronica and dubstep have become cornerstones of much of today’s Top 40. And that exploratory urge for new sounds ends up creating new technological possibilities that have applications outside the realm of music. Antheil failed to devise a scheme to synchronize his sixteen player pianos. But the attempt to solve that problem—in the name of art and sonic experimentation—would lead, almost two decades later, to a breakthrough military technology, one that would eventually become a crucial component of civilian wireless communications. As it happens, to make that leap, Antheil needed the most unlikely partner imaginable: Hedy Lamarr, at the time one of the most glamorous movie stars in the world.

Hedy Lamarr

Ignored for many decades, the story of Lamarr and Antheil’s strange-bedfellows collaboration has in recent years become part of tech-history lore. Lamarr had begun her career as an actress in Weimar Germany, and married an arms dealer who ultimately turned out to have ties to the Nazis. She fled Europe in 1937 and found her way to MGM studios, where she quickly became one of the great seductresses of American cinema. Offscreen, though, Lamarr was an amateur inventor who was constantly tinkering with ideas that might help the Allied cause. She largely avoided the Hollywood party circuit, preferring to stay at home in a living room populated by engineering textbooks and drawing boards pinned with schematics. After a German U-boat sank the refugee ship City of Benares in September of 1940—killing seventy children—Lamarr began sketching out a plan for a remote-controlled torpedo that could take out a submarine. She was well aware, from her days on the periphery of the Austrian munitions business, of the difficulty with wireless control mechanisms. Using radio frequencies, it was easy enough to send a signal that could alter the direction of a torpedo in the water; the problem was that those signals were easily detected and jammed by the enemy. But it occurred to Lamarr that a system could route around that interference by constantly changing frequencies according to some preestablished pattern. She called this technique “frequency hopping.”

Fortuitously, Lamarr happened to meet Antheil just as this idea was taking shape in her mind. Since his riotous days performing Ballet Mécanique, Antheil had followed a Fitzgeraldian path from Paris to Hollywood, where he developed a successful, though less scandalous, career composing scores for a series of forgettable films. (Amazingly, he also wrote a relationship advice column for Esquire.) After dining together several times in the fall of 1940, Lamarr and Antheil began working on the frequency-hopping project as a team. It may well have been the most bizarre partnership in the history of invention: the movie star and the experimental composer/advice columnist, working late into the night in the Hollywood Hills, brainstorming cutting-edge ideas for naval communications protocols.

Lamarr’s original frequency-hopping idea, as brilliant as it was, presented one critical challenge: the receiving mechanism had to shift frequencies in concert with the sending device. It was, in a sense, a synchronization problem. This is where Antheil’s experience with Ballet Mécanique supplied the missing element that completed Lamarr’s invention. Sitting in Lamarr’s drawing room night after night, wrestling with the problem of coordinating a shifting set of frequencies in perfect tempo, he found himself thinking back to those sixteen pianolas and his dream of programming them all in perfect time using piano rolls. He proposed a control system whereby the instructions for frequencies were encoded in two perforated ribbons. Where the holes in the piano roll signaled a musical note, the holes in the ribbons signaled a frequency change. To an enemy scanning the spectrum for signals to jam, the pattern would be impossible to detect. But as long as the ribbons were activated at the same time, to the receiver on the torpedo the random bursts of noise jumping across the spectrum would sound like information. As a nod to the musical roots of the idea, Antheil proposed that the system use eighty-eight distinct frequencies, the exact number of keys on a piano.
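The logic of the scheme can be sketched in a few lines of Python. This is a toy model under stated assumptions, not the mechanism described in the 1942 patent: the perforated ribbon is stood in for by a list of channel numbers 0 to 87 generated from a shared seed, transmitter and receiver advance one ribbon position per symbol, and the jammer parks on a single fixed channel. All names here are hypothetical.

```python
"""Toy model of synchronized frequency hopping (illustrative only).

Assumptions, not taken from the 1942 patent: the "ribbon" is a list of
channel numbers generated from a shared seed, transmitter and receiver
advance one ribbon position per symbol, and a jammer sits on one channel.
"""
import random

NUM_CHANNELS = 88  # one channel per piano key, as described in the text

Signal = list[tuple[int, str]]  # (channel, symbol) pairs as sent over the air


def make_ribbon(seed: int, length: int) -> list[int]:
    """Stand-in for a perforated ribbon: a reproducible hop sequence."""
    rng = random.Random(seed)
    return [rng.randrange(NUM_CHANNELS) for _ in range(length)]


def transmit(message: str, ribbon: list[int]) -> Signal:
    """Send each symbol on the channel the ribbon dictates at that step."""
    return list(zip(ribbon, message))


def jam(signal: Signal, jammed_channel: int) -> Signal:
    """A fixed-channel jammer garbles only the symbols sent on its channel."""
    return [(ch, "?" if ch == jammed_channel else sym) for ch, sym in signal]


def receive(signal: Signal, ribbon: list[int]) -> str:
    """Listen on the ribbon's channel at each step; a mismatch hears nothing."""
    heard = []
    for (ch, sym), listening_on in zip(signal, ribbon):
        heard.append(sym if ch == listening_on else "_")
    return "".join(heard)


if __name__ == "__main__":
    msg = "TURN LEFT"
    ribbon = make_ribbon(seed=1942, length=len(msg))
    on_air = jam(transmit(msg, ribbon), jammed_channel=40)
    print(receive(on_air, ribbon))                    # receiver in sync
    print(receive(on_air, make_ribbon(7, len(msg))))  # receiver out of sync
```

In this model a receiver holding the same ribbon recovers every symbol except the rare ones that land on the jammed channel (marked `?`), while a receiver with a different ribbon misses almost every symbol (marked `_`). The exact output depends on the seeds, so it is not shown here; what matters is that the shared pattern, rather than any single frequency, is what carries the message.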

While the navy largely ignored Lamarr and Antheil’s proposal, the two inventors did receive a patent for it in 1942, and it was considered valuable enough to be locked away as classified information. (Antheil later wondered if the musical analogy hurt their cause with the hardheaded military analysts: “‘My god,’ I can see them saying, ‘we shall put a player piano in a torpedo,’” he wrote.) But the idea of frequency hopping was too good to disappear forever in the vaults of classified patents. An updated version of Antheil and Lamarr’s invention was implemented on navy ships during the Cuban missile crisis. Today, frequency hopping has evolved into the spread-spectrum technology used by numerous essential wireless systems, including cell-phone networks, Bluetooth, and Wi-Fi.

—

George Antheil correctly foresaw the extraordinary explosion of new sounds that the age of electricity promised, though he may have been a bit shortsighted about the data storage technology those sounds would employ. “We shall see orchestral machines with a thousand new sounds, with thousands of new euphonies, as opposed to the present day’s simple sounds of strings, brass, and woodwinds,” he wrote in his 1924 “Mechanical-Musical Manifesto.” “It is only a short step until all [musical performance] can be perforated onto a roll of paper.” Reasonable people can disagree about whether strings or brass or woodwinds are “simpler” than the new sounds unleashed by electronic music, but it is an empirical fact that “orchestral machines” in the form of synthesizers, samplers, and digital music software have introduced thousands of new sounds to the modern listener. And these novel sonic textures are not just being savored by the educated ear of the avant-garde aficionado; they are ubiquitous on Spotify and SiriusXM. No doubt there would have been even more outrage if that 1926 audience had been exposed to Kraftwerk or Aphex Twin. But today those machine-made—and often noise-saturated—sounds have found their way into mainstream taste.

Like almost every innovator in the history of instrument design, the pioneers of electronic music charted a path that would eventually be followed by other, less adventurous settlers. Today we take photographs and shoot films with digital cameras; we write novels on digital computers; we design buildings using CAD software. But the first electronic and digital tools for creativity were almost exclusively musical in nature. Some of those tools—and the people behind them—became recognizable names, like the Moog synthesizer designed by the brilliant engineer Robert Moog. (Always willing to experiment with new sounds, the Beatles were among the first musicians to integrate the Moog into their recordings—you can hear it playing the organlike parts on “Here Comes the Sun.”) But as with any paradigm shift worth the title, brilliant mistakes or generative dead ends propelled the change almost as forcefully as the success stories.

One of those dead ends resides in the collection of London’s Science Museum. With coils of cables and film ribbons streaming out of an open frame the size of a chest of drawers, it brings to mind an office photocopier that’s been dissected on an autopsy table. But this strange device is, in fact, a musical instrument, one of the very first functioning electronic instruments ever built. It is called the Oramics Machine, named after its creator, Daphne Oram.

Oram belonged to a generation of women who played crucial roles in designing and programming some of the first machines of the digital age. Oram herself was a sound engineer at the BBC, and a composer of experimental music. (Taking up where Antheil left off, she tinkered with compositions using multiple tape recorders and sine wave oscillators.) One of her first jobs at the BBC happened to coincide with the waning days of the Blitz; during live radio broadcasts of classical performances, Oram would play a prerecorded version of the same piece in step with the live orchestra. If a German bomb strike interrupted the performance, she would deftly switch over to the recorded version. (Given the poor audio quality of AM radio and 1940s-era speakers, the listening audience at home rarely noticed the handoff.) By the 1950s, she had built up enough political capital inside the BBC to successfully argue for the creation of the BBC Radiophonic Workshop, an influential sound-effects lab that endured for forty years.

The idea for the Oramics Machine had its origin during her technical training course in 1944. For the first time, Oram encountered a cathode-ray oscilloscope, a device that converts incoming sound waves into a scrolling, jittery line on a screen, not unlike a modern EKG. Oram recalled the experience decades later:

I was allowed to sing into it and there I saw my own voice as patterns on the screen, graphs, and I asked the instructors why we couldn’t do it the other way around and draw the graphs and get the sound out of it. I was eighteen I think and they thought this was a pretty stupid, silly teenage girl asking silly questions. But I was quite determined from that time on that I would investigate that, but I had no oscilloscope.

Oram’s question may have struck her instructors as silly, but something about the idea of drawing sound stuck in the back of her mind. When the world of electronics matured in the 1950s, with widespread adoption of transistors, photocells, and magnetic tape, Oram became convinced that the time was right to turn her teenage hunch into a reality. She first went to the BBC, arguing that the corporation should fund the development of a machine that could, as she later put it, “[explore] this vast new continent of music.” The top brass at the BBC found the request baffling. “I went to see the Head of Research and said I’ve got an idea of writing graphic music could I have some equipment please,” Oram recalled. “He pulled himself up to his full height and said ‘Miss Oram, we employ a hundred musicians to make all the songs we want, thank you.’ . . . That was the official attitude: they had the BBC Symphony Orchestra, and it was there to make all the music they wanted, and nothing else was of any interest.”

Rebuffed by her longtime employer, Oram struck out on her own in 1962 and began working on her Oramics device independently, supported by a series of grants and a rotating cast of engineers. Reminiscent of the perforated ribbons that Antheil and Lamarr had devised, the device used strips of 35mm film to control the sound. The “composer” would sketch a wavering line that designated changes in timbre, amplitude, or pitch. Photocells scanned the ribbons and passed the information on to the sound generators. The final iteration of the machine included ten separate film strips that could be simultaneously processed. The device had four “voices” that could play distinct parts in perfect tempo. Notes were programmed with a grid of rectangles that took its cues from the piano-roll format. The other elements—volume, say, or reverb—were controlled by free-form shapes sketched onto the film. The Banu Musa and Vaucanson had struggled with the difficulty of “cutting” a pinned cylinder to program a musical machine. Daphne Oram thought it should be as simple as drawing a line.

In a 1966 letter, she described her progress:

We are delighted to tell you that we have succeeded in proving that graphic information can be converted into sound. We can draw any wave form pattern and scan this electronically to produce sound. By varying the shape of the scanned pattern the timbre is varied accordingly . . . We believe that no similar piece of equipment exists anywhere else in the world.

Daphne Oram drawing timbres on the Oramics machine

She was correct about that belief. Not only was the Oramics Machine the first of its kind, it was, in a way, the last of its kind. Oram had been right to argue that a “vast new continent of music” was about to surface. By the middle of the 1970s, adventurous musicians were recording entire albums of electronic tones—but the synthesizers they used relied on much more traditional input mechanisms: tones shaped by a dashboard of knobs and dials, and notes played on that most ancient of conventions, the keyboard. By the time electronic music became mainstream thanks to postpunk bands like Devo and New Order, controlling a synthesizer by painting lines on a ribbon of film would have seemed as pointless as controlling it with punch cards.

But we shouldn’t be distracted by the spectacle of those film strips. Yes, that particular part of the solution turned out to be a dead end. Computer interfaces, not undulating lines painted onto film strips, turned out to be much more efficient at the task of telling the synthesizers what notes to play. But look at any modern piece of music recording software—Pro Tools, Logic, even GarageBand; the default view of all of these now-indispensable tools is a stack of undulating lines, scrolling across a screen in time with the song. Some of those lines control pitch; some control volume and reverb. There were hundreds of provocative electronic instruments under development during the sixties, many of which produced more direct descendants than Oram’s machine. But none of those devices has the striking family resemblance that modern software tools share with the Oramics Machine. The physical medium Oram chose was obsolete almost as soon as it was invented. But the interface she devised belonged to the future.

—

The technological sequence that musical instruments followed—from mechanics to electronics to software, from the physical notches in the piano roll to the invisible zeros and ones of code—also shaped the evolution of recorded music: the windup gramophone gave way to the tube amps of stereo gear, which in turn gave way to the digital bits of audio CDs. The migration to digital music is recent enough that most of us grasp its wider repercussions: think of the still-simmering debates over music in the post-Napster era, the challenges it has posed to our intellectual property laws and the economics of all creative industries. But, as always, what began with an attempt to create and share new kinds of sounds ended up triggering other revolutions in other domains. The first true peer-to-peer networks for sharing information were designed specifically for the swapping of musical files. It is still too early to tell, but if peer-to-peer platforms like Bitcoin eventually become an important part of the global financial infrastructure, as many people believe they will, this innovation may turn out to be as influential as those piano keyboards and pinned cylinders. It is entirely possible that the most significant advance in the history of money since the invention of a government-backed currency will end up having its roots in teenagers sharing Metallica songs.

Whenever waves of new information technology have crested, music has been there to greet them. Music was among the first activities to be encoded, the first to be automated, the first to be programmed, the first to be digitized as a commercial product, the first to be distributed via peer-to-peer networks. There is something undeniably pleasing about that litany, something hopeful. Think about the history behind the most influential device of the modern age: a digital computer sharing information across wireless networks. What were the enabling technologies that made it possible to invent such a device in the first place? The standard story is that computers—and the Internet—descend from military technology, since many early computers were designed specifically to crack wartime codes or calculate rocket trajectories. But inventing a computer also required other building blocks: music boxes, automated flautists, harpsichord keyboards, player pianos. Too often we hear the old bromide that innovation invariably follows the lead of the warriors. But it turns out the minstrels and the maestros led us to their fair share of breakthroughs as well, particularly with technology that involves some kind of code. Yes, the Department of Defense helped build the Internet. But the pinned cylinders of the music boxes gave us software. When it comes to generating new tools for sharing and processing information, the instruments of destruction have nothing on the instruments of song.