5

Games

The Landlord’s Game

In the second half of the thirteenth century, a Dominican friar from the Lombard region of modern-day Italy began delivering a series of sermons that meditated on the proper roles of different social groups: royalty and merchants, clergy and farmers. Over the years, his name has been warped into an almost comical number of variations, including Cesolis, Cessole, Cesulis, Cezoli, de Cezolis, de Cossoles. But historians now generally refer to him as Jacobus de Cessolis. We know next to nothing about Cessolis, only that his sermons were well received by his monastic brothers, and the friar was encouraged to translate them to the page.

This was still more than a century and a half before Gutenberg, so the friar’s words were inscribed by hand, and not mass-printed when they first began to circulate. Yet Cessolis’s text would end up playing a crucial role in the early history of the printing press. Something about his message resonated with the popular mood, and in the decades that followed its first appearance, hand-copied versions of Cessolis’s original manuscript swept across Europe. “The original was not just copied,” the historian H. L. Williams notes. “It was translated, abbreviated, modified, turned into verse, and otherwise edited by substitutions and emendations that made the material relevant to other regions and cultures . . . Some versions grew to more than four times the length of Cessolis’ original.” By the time Gutenberg introduced his movable-type technology, Cessolis’s manuscript was, by some accounts, surpassed only by the Bible in its popularity. More than fifteen separate editions, in almost as many languages, were published in the first decades after Gutenberg originally printed his Bible. It even caught the eye of the entrepreneurial British printer William Caxton, who translated and published it out of a printing shop in Bruges. It was the second book ever printed in the English language.

What was the title of this manifesto? Cessolis gave it two. The first was more formal: The Book of the Manners of Men and the Offices of the Nobility. But the second had an unusual twist. He called it The Game of Chess.

—

The Game of Chess is one of those books that would be impossible to place on a modern bookstore shelf. It defies sensible genre categorization. It was, in part, a straightforward guide to the rules of chess, with sections devoted to each of the pieces and the rules that govern their behavior. But out of the game’s elemental rules, Cessolis constructed an elaborate allegorical message: the king’s behavior on the board reflected the proper behavior of real-world kings; the knight’s reflected the proper behavior of real-world knights. (To extend his societal analysis, Cessolis made each pawn represent a specific group of commoners: farmers, blacksmiths, money-changers, and so on.) It was a baffling hybrid: a profound sociological treatise bound together with a game guide. It was as though Studs Terkel had bundled his 1970s classic Working with a cheat sheet for Ms. Pac-Man. The combination makes for some entertaining leaps, where Cessolis jumps from the minutiae of chess strategy to broad generalizations about medieval lifestyles without the slightest pause:

Both knights have three possible openings. The white knight may open toward the right onto the black square in front of the farmer. This is reasonable, because the farmer works the fields, tills and cultivates them. The knight and his horse receive food and nourishment from the farmer and protect him in return. The second opening is to the black square in front of the clothier. This move is also reasonable since the knight ought to protect the man who makes his clothes. The third opening is toward the left onto the black square in front of the king, but only if the merchant is not there. This is reasonable since the knight is supposed to guard and protect the king as he would himself.

Some of the friar’s lessons were sensible enough: “The king has dominion, leadership, and rank over everyone, and for that reason should not travel much outside his kingdom.” Others seem less advisable to a modern reader, because the rules of society and the rules of chess have changed: “If women want to remain chaste and pure they should not sit by the door to the gardens, they should avoid venturing into the streets, and they should not forget all their ladylike manners.” Avoiding “venturing into the streets” might have been a good strategy for the queen in medieval games of chess, but that is only because that piece wouldn’t take on its extensive powers until the end of the fifteenth century; in Cessolis’s time, the queen was severely limited in its mobility on the board.

But as amusing as it is to chuckle over the eccentricities of The Game of Chess, it is more instructive to contemplate the book’s cultural significance. Perhaps unwittingly, the friar was dismantling a vision of social organization that had been dominant for at least a thousand years: the body politic, the image of society as a single organism, directed—inevitably—by the metaphoric “head of state.” Instead, Cessolis proposed a different model: independent groups, governed by contractual and civic obligations, but far more autonomous than the “body politic” metaphor had implied. This amounted to a profound shift in social consciousness. For more than a thousand years, social order had been imagined in physiological terms. “If the head of a body decided that the body should walk,” the historian Jenny Adams writes, “the feet would have to follow.” But the chessboard suggested another way of connecting social pieces: a society regulated by laws and contracts and conventions. “The chess allegory imagines its subjects to possess independent bodies in the form of pieces bound to the state by rules rather than biology,” Adams explains. “If the chess king advances, the pawns are not beholden to do the same.”

The social transformation mapped out on Cessolis’s chessboard would eventually ripple across the map of Europe itself, with the rise of the Renaissance guild system and a wave of legal codes that endowed merchants and artisans with newfound freedoms. Social positions were no longer defined as God-given inevitabilities, but rather emerged out of legal and ethical conventions. Authority no longer trickled down from above, but instead was established through a web of consensual relationships, in many cases defined by contracts. This was not yet a radical secularization of society; kings and bishops still had important roles. But it was a crucial early step that led toward the truly secular state that would arise five hundred years later: a society governed ultimately by laws and not monarchs.

A miniature of Otto IV of Brandenburg playing chess with a lady, circa 14th century

How big a role did The Game of Chess have in triggering this shift? It is difficult to say for certain. It may well be that Cessolis was simply popularizing a conceptual shift that was already under way, and thus the book’s success lay in the way it explained a historic change that must have been bewildering for the citizens trying to make sense of it. Or perhaps the book’s popularity helped hasten the transformation itself, as the chess allegory encouraged readers to seek out the kind of contractual autonomy the book celebrated. Either way, it is clear that the game itself provided a new way of thinking about society, a new framework that could be used to understand some of the most important issues in civic life. This turns out to be one of the key ways in which the seemingly frivolous world of game play affects the “straight” world of governance, law, and social relations. The experimental tinkering of games—a parallel universe where rules and conventions are constantly being reinvented—creates a new supply of metaphors that can then be mapped onto more serious matters. (Think how reliant everyday speech is on metaphors generated from games: we “raise the stakes”; we “advance the ball”; we worry about “wild cards”; and so on.) Every now and then, one of those metaphors turns out to be uniquely suited to a new situation that requires a new conceptual framework, a new way of imagining. A top-down state could be described as a body or a building—with heads or cornerstones—but a state governed by contractual interdependence needed a different kind of metaphor to make it intelligible. The runaway success of The Game of Chess—first as a sermon, then as a manuscript, and finally as a book—suggests just how valuable that metaphor turned out to be.

We commonly think of chess as the most intellectual of games, but in a way its greatest claim to fame may be its allegorical power. No other game in human history has generated such a diverse array of metaphors. “Kings cajoled and threatened with it; philosophers told stories with it; poets analogized with it; moralists preached with it,” writes chess historian David Shenk. “Its origins are wrapped up in some of the earliest discussions of fate versus free will. It sparked and settled feuds, facilitated and sabotaged romances, and fertilized literature from Dante to Nabokov.” Metaphoric thinking can be mind-opening, the way Cessolis’s allegory helped medieval Europe understand a new civic order. But it can also be restrictive, as the metaphor keeps you from seeing other possibilities that don’t quite fit the mold. Sometimes it can be a bit of both. In the middle of the twentieth century, chess became a kind of shorthand way of thinking about intelligence itself, both in the functioning of the human brain and in the emerging field of computer science that aimed to mimic that intelligence in digital machines.

The very roots of the modern investigation into artificial intelligence are grounded in the game of chess. “Can [a] machine play chess?” Alan Turing famously asked in a groundbreaking 1946 paper. “It could fairly easily be made to play a rather bad game. It would be bad because chess requires intelligence . . . There are indications however that it is possible to make the machine display intelligence at the risk of its making occasional serious mistakes . . . What we want is a machine that can learn from experience . . . [the] possibility of letting the machine alter its own instructions.” Turing’s speculations form a kind of origin point for two parallel paths that would run through the rest of the century: building intelligence into computers by teaching them to play chess, and studying humans playing chess as a way of understanding our own intelligence. Those interpretative paths would lead to some extraordinary breakthroughs: from the early work on cybernetics and game theory from people like Claude Shannon and John von Neumann, to machines like IBM’s Deep Blue that could defeat grandmasters with ease. In cognitive science, the litany of insights that derived from the study of chess could almost fill an entire textbook, insights that have helped us understand the human capacity for problem solving, pattern recognition, visual memory, and the crucial skill that scientists call, somewhat awkwardly, chunking, which involves grouping a collection of ideas or facts into a single “chunk” so that they can be processed and remembered as a unit. (A chess player’s ability to recognize and often name a familiar sequence of moves is a classic example of mental chunking.) Some cognitive scientists compared the importance of chess to their field to that of Drosophila, the fruit fly that played such a central role in early genetics research.

But the prominence of chess in the first fifty years of both cognitive and computer science also produced a distorted vision of intelligence itself. It helped cement the brain-as-computer metaphor: a machine driven by logic and pattern recognition, governed by elemental rules that could be decoded with enough scrutiny. In a way, it was a kind of false tautology: brains used their intelligence to play chess; thus, if computers could be taught to play chess, they would be intelligent. But human intelligence turns out to be a much more complex beast than chess playing suggests. When Deep Blue finally defeated world chess champion Garry Kasparov in 1997, it marked a milestone for computer science, but Deep Blue itself was largely helpless if you wanted to ask it about anything other than chess. It would have captured Cessolis’s king in a matter of minutes, but if you wanted to know something about actual kings, Cessolis would be infinitely more informative. (Noam Chomsky famously declared that Deep Blue’s victory was about as interesting as a bulldozer winning the Olympics for weight lifting.) Today, the study of intelligence has greatly diversified: we know that the skills necessary to play chess are only a small part of what it means to be smart.

—

Cessolis was hardly alone in conceiving the game board as a platform for moral instruction. Up until the end of the nineteenth century, most American board games were explicitly designed to impart ethical or practical lessons to their players. One popular game of the 1840s, the Mansion of Happiness, embedded a stern Puritan worldview in its game play. Many passages from the game’s rule book sound more like a Cotton Mather sermon than an idle amusement:

WHOEVER possesses PIETY, HONESTY, TEMPERANCE, GRATITUDE, PRUDENCE, TRUTH, CHASTITY, SINCERITY . . . is entitled to Advance six numbers toward the Mansion of Happiness. WHOEVER gets into a PASSION must be taken to the water and have a ducking to cool him . . . WHOEVER posses[ses] AUDACITY, CRUELTY, IMMODESTY, or INGRATITUDE, must return to his former situation till his turn comes to spin again, and not even think of HAPPINESS, much less partake of it.

Milton Bradley launched his gaming empire in 1860 with the Checkered Game of Life, the distant ancestor of the modern Game of Life. (Both games use a spinner instead of dice, a randomizing device Bradley introduced to distance his game from the long-standing association between dice and gambling.) As gaming historian Mary Pilon notes, Bradley’s creation was quite a bit darker than its contemporary version: “[The] board had an Intemperance space that led to Poverty, a Government Contract space that led to Wealth, and a Gambling space that led to Ruin. A square labeled Suicide had an image of a man hanging from a tree, and other squares were labeled Perseverance, School, Ambition, Idleness, and Fat Office.”

But the most intriguing—and tragically overlooked—heir to Cessolis was a woman named Lizzie Magie who managed to conceive of the game board as a vehicle not of conventional religious instruction, but rather social and political revolution. Born in Illinois in 1866, Magie had an eclectic and ambitious career even by suffragette standards. She worked at various points as a stenographer, poet, and journalist. She invented a device that made typewriters more efficient, and filed for a patent for it in 1893. She worked part-time as an actress on the stage. For a long time, her greatest claim to fame came through an act of political performance art, placing a mock advertisement in a local paper that put herself on the market as a “young woman American slave”—protesting the oppressive wage gap between men and women, and mocking the mercenary nature of many traditional marriages. Magie was also a devotee of the then-influential economist Henry George, who had argued in his 1879 best-selling book Progress and Poverty for an annual “land-value tax” on all land held as private property—high enough to obviate the need for other taxes on income or production. Many progressive thinkers and activists of the period integrated “Georgist” proposals for single-tax plans into their political platforms and stump speeches. But only Lizzie Magie appears to have decided that radical tax reform might make compelling subject matter for a board game.

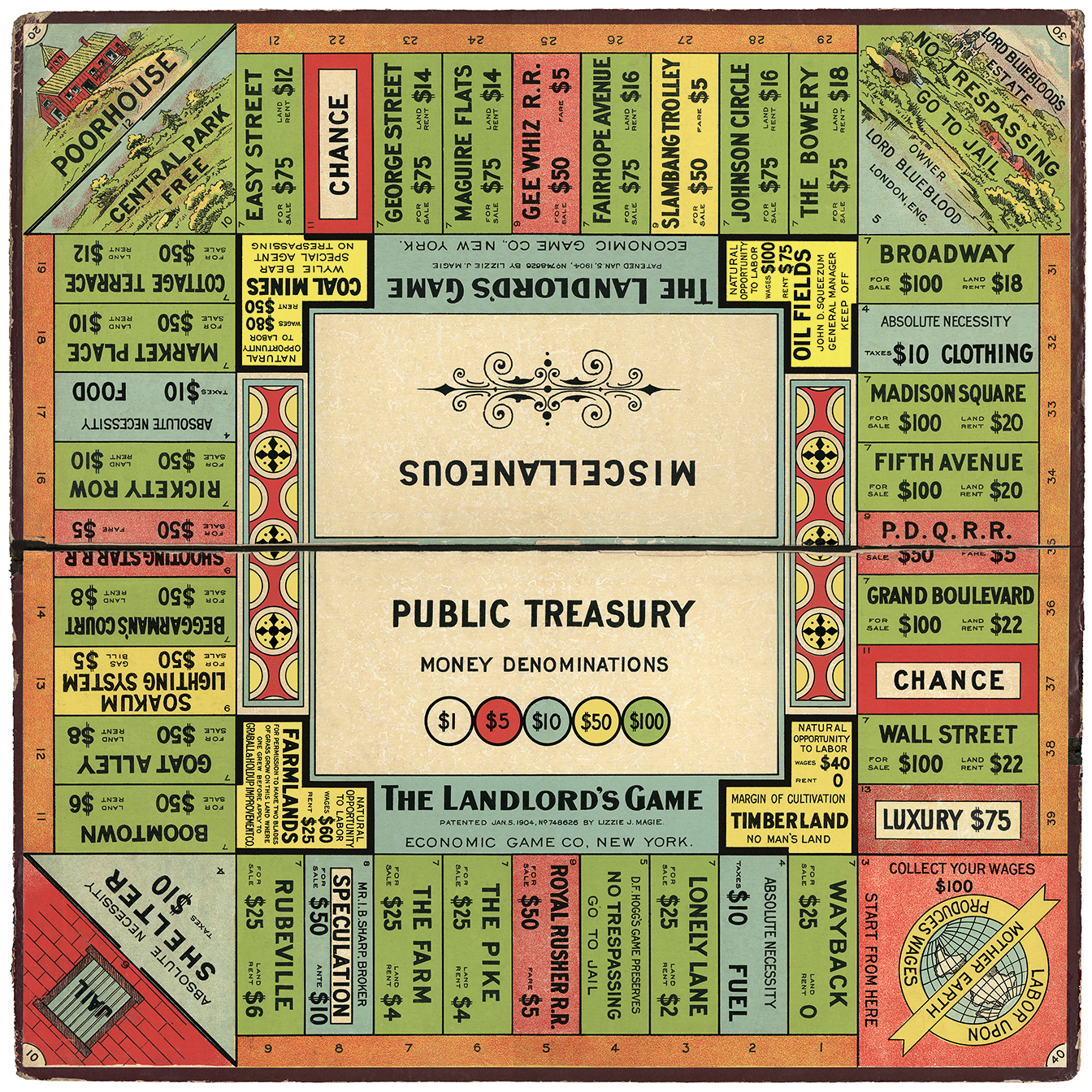

Magie began sketching the outlines of a game she would come to call the Landlord’s Game. In 1904, she published a brief outline of the game in a Georgist journal called Land and Freedom. Her description would be immediately familiar to most grade schoolers in dozens of countries around the world:

Representative money, deeds, mortgages, notes and charters are used in the game; lots are bought and sold; rents are collected; money is borrowed (either from the bank or from individuals), and interest and taxes are paid. The railroad is also represented, and those who make use of it are obliged to pay their fare, unless they are fortunate enough to possess a pass, which, in the game, means throwing a double. There are two franchises: the water and the lighting; and the first player whose throw brings him upon one of these receives a charter giving him the privilege of taxing all others who must use his light and water. There are two tracts of land on the board that are held out of use—are neither for rent nor for sale—and on each of these appear the forbidding sign: “No Trespassing. Go to Jail.”

The playing board for The Landlord’s Game, Monopoly’s predecessor

Magie had created the primordial Monopoly, a pastime that would eventually be packaged into the most lucrative board game of the modern era, though Magie’s role in its invention would be almost entirely written out of the historical record. Ironically, the game that became an emblem of sporty capitalist competition was originally designed as a critique of unfettered market economics. Magie’s version actually had two variations of game play, one in which players competed to capture as much real estate and cash as possible, as in the official Monopoly, and one in which the point of the game was to share the wealth as equitably as possible. (The latter rule set died out over time—perhaps confirming the old cliché that it is simply less fun to be a socialist.) Either way you played it, however, the agenda was the same: teaching children how modern capitalism worked, warts and all. In its way, The Landlord’s Game was every bit as moralizing as the Mansion of Happiness. “The little landlords take a general delight in demanding the payment of their rent,” Magie wrote.

They learn that the quickest way to accumulate wealth and gain power is to get all the land they can in the best localities and hold on to it. There are those who argue that it may be a dangerous thing to teach children how they may thus get the advantage of their fellows, but let me tell you there are no fairer-minded beings in the world than our own little American children. Watch them in their play and see how quick they are, should any one of their number attempt to cheat or take undue advantage of another, to cry, “No fair!” And who has not heard almost every little girl say, “I won’t play if you don’t play fair.” Let the children once see clearly the gross injustice of our present land system and when they grow up, if they are allowed to develop naturally, the evil will soon be remedied.

The Landlord’s Game never became a mass hit, but over the years it developed an underground following. It circulated, samizdat-style, through a number of communities, with individually crafted game boards and rule books dutifully transcribed by hand. Students at Harvard, Columbia, and the Wharton School played the game late into the night; Upton Sinclair was introduced to the game in a Delaware planned community called Arden; a cluster of Quakers in Atlantic City, New Jersey, adopted it as a regular pastime. As it traveled, the rules and terminology evolved. Fixed prices were added to each of the properties. The Wharton players were the first to call it “the monopoly game.” And the Quakers added the street names from Atlantic City that would become iconic, from Baltic to Boardwalk.

It was among that Quaker community in Atlantic City that the game was first introduced to a down-on-his-luck salesman named Charles Darrow, who was visiting friends on a trip from his nearby home in Philadelphia. Darrow would eventually be immortalized as the sole “inventor” of Monopoly, though in actuality he turned out to be one of the great charlatans in gaming history. Without altering the rules in any meaningful way, Darrow redesigned the board with the help of an illustrator named Franklin Alexander, and struck deals to sell it through the Wanamaker’s department store in Philadelphia and through FAO Schwarz. Before long, Darrow had sold the game to Parker Brothers in a deal that would make him a multimillionaire. For decades, the story of Darrow’s rags-to-riches ingenuity was inscribed in the Parker Brothers rule book: “1934, Charles B. Darrow of Germantown, Pennsylvania, presented a game called MONOPOLY to the executives of Parker Brothers. Mr. Darrow, like many other Americans, was unemployed at the time and often played this game to amuse himself and pass the time. It was the game’s exciting promise of fame and fortune that prompted Darrow to initially produce this game on his own.”

Both the game itself—and the story of its origins—had entirely inverted the original progressive agenda of Lizzie Magie’s Landlord’s Game. A lesson in the abuses of capitalist ambition had been transformed into a celebration of the entrepreneurial spirit, its collectively authored rules reimagined as the work of a lone genius.

—

Game mythologies habitually seek out heroic inventors, even when those invention stories are on the very edges of plausibility. Every year, hundreds of thousands of baseball fans descend on the small town of Cooperstown, New York, to visit the Baseball Hall of Fame because, as the legend has it, Abner Doubleday invented the game in a cow pasture there in 1839. Most such origin stories overstate the role of the inventor, reducing a complex lineage down to a single individual genius. But in the case of Doubleday, the story appears to be an almost complete fabrication. An acclaimed Civil War general who had fired the first Union shot of the war at Fort Sumter, Doubleday was pegged as baseball’s founding father in 1907 by a commission headed by former National League president Abraham Mills. The commission had been assembled ostensibly to uncover the origins of the sport, though its unspoken objective was to prove that the roots of the American national pastime were, in fact, American. (Many believed, probably correctly, that the game evolved out of the British sport rounders.) And so the Mills Commission somewhat randomly declared that baseball’s immaculate conception had occurred in Cooperstown in 1839, produced sui generis from the mind of Abner Doubleday. This origin story had all the right elements—military hero invents revered game in a flash of inspiration—except for the troubling fact that Doubleday seems to have had nothing to do with baseball whatsoever. In his voluminous collection of letters, he never once mentioned the game. Even worse, Doubleday was enrolled and living at West Point as a young cadet in 1839, his family having moved from Cooperstown the year before.

Invention stories like that of Abner Doubleday and the cow pasture so often fall apart with games because almost without exception our most cherished games have been the product of collective invention, usually involving collaborations that span national borders. Baseball, for instance, turns out to have a complicated lineage that includes rounders and cricket and an earlier game called stoolball. Citizens of Britain, Ireland, France, and the Netherlands, as well as the United States, played a role in the evolution of the game, although it is almost impossible to map the exact evolutionary tree. The conventional fifty-two-card deck that we now use for poker and solitaire evolved over roughly five hundred years, with important contributions coming from Egypt, France, Germany, and the United States. European football has roots that date back to ancient Greece.

Chess, too, emerged out of multinational networks. Cessolis’s Game of Chess managed to inspire so many different translations in part because the book’s message resonated with broader developments in European society. But it also traveled well because chess itself had traveled well. Though the game had originated around 500 CE, by Cessolis’s time, it was played throughout Europe, the Middle East, and Asia. Today, we take it for granted that blockbuster movies play in theaters all across the globe, but a thousand years ago, before the age of exploration, cross-cultural exchange was severely limited by geographic and linguistic barriers. Chess was one of the first truly global cultural experiences. By medieval times, with players on three continents and in dozens of countries, even Christianity’s geographic footprint looked small beside the long stride of chess.

That global expansion set a template that would be repeated hundreds of times by other games in the ensuing centuries. We may not take our games as seriously as we do our forms of governance or our legal codes or our literary novels, but for some reason games have a wonderful ability to cross borders. Games were cosmopolitan many centuries before the word entered the English vocabulary. And unlike religious or military encroachments, when games cross borders, they almost inevitably tighten the bonds between different nations rather than introducing conflict. European football is played professionally in almost every nation on the face of the earth. The global reach of games is even more pronounced in virtual game play. Consider the epic success of Minecraft, an immense online universe populated by players logging in from around the world. In the case of Minecraft, of course, the world of the game itself—and the rules that govern it—is being created by that multinational community of players, in the form of mods and servers programmed and hosted by Minecraft fans. McLuhan coined the term “global village” as a metaphor for the electronic age, but if you watch a grade-schooler constructing a virtual town in Minecraft with the help of players from around the world, the phrase starts to sound more literal.

The migratory history of chess, like that of most games, did not begin with an immaculate conception in the mind of a single genius game designer. As chess traveled across borders, new players in new cultures experimented with the rules. “Like the Bible and the Internet,” Shenk writes, “[chess was] the result of years of tinkering by a large, decentralized group, a slow achievement of collective intelligence.” The first game that modern eyes would recognize as chess was a Persian game called chatrang, played during the fifth century CE, which had evolved out of an earlier Indian game called chaturanga. The key ingredients were the same: a board divided into sixty-four squares, with two opposing armies of sixteen pieces each. The iconography drew on Indian culture: today’s bishop, for instance, was an elephant on the chatrang board. The rules governing the movement of pieces also differed from today’s game: the elephant could move only two squares diagonally, and the queen—known then as the minister—was as restricted as the king in its movement. The practice of announcing the king’s imminent capture dates from this period: the odd phrase checkmate derives from the Persian words for king and defeat, shah and mat. Alternating black and white squares were introduced sometime around the game’s first appearance in Europe, a few hundred years before Cessolis wrote his sermons. Shortly thereafter, regional versions of the game adopted a rule whereby pawns could be moved two squares in their opening move. In a shift allegedly inspired by the formidable Queen Isabella of Spain, herself an avid chess player, the queen became the most powerful piece on the board by the end of the fifteenth century.

Today, we take it for granted that software projects like Linux might be collectively authored by thousands of people scattered around the world, each contributing ideas to the project without any official affiliation or traditional management structure. This kind of global creation was almost unheard-of a thousand years ago: the limitations of transportation networks made invention and production largely local affairs. Games themselves—not the physical manifestation of the game, but the underlying rules—were among the first key cultural dishes to be cooked up in the global melting pot. (In a way, the closest equivalent to chess’s cosmopolitan evolution is the body of scientific insight that followed a similar geographic path, from the Islamic Renaissance through medieval monasteries to the European Enlightenment, with small but crucial additions and corrections made at each step of the journey.) Once again, a seemingly frivolous custom turns out to be an augur of future developments: if you were an aspiring futurologist in Cessolis’s day and you were looking for clues about the future of invention and commerce—perhaps even a future where virtual encyclopedias would be written and edited by millions of people around the world—a good place to start would be by studying the games people were playing for fun, and the evolution of the rules that governed those games.

Any evolutionary tree contains branches that stop growing, promising new forms that die out. There are cultural extinctions just as there are biological ones. For every chess innovation that ultimately survived to the present day, there are others that perished. As chess found its way into Europe during the Middle Ages, some new players found the game too slow. (The queen’s limited powers made it much more difficult to reach the endgame.) In part to speed things up, a new element was added to the game, one that would be anathema to the modern chess player: dice. “The wearingness which players experienced from the long duration of the game when played right through [is the reason] dice have been brought into chess, so that it can be played more quickly,” a Spanish player wrote in 1283. A roll of the dice determined which pieces could be moved, bringing chess, briefly, from the realm of pure logic and perfect information into the realm of chance.

For whatever reason—perhaps because the introduction of randomness undermined precisely what made chess such a fascinating game—the dice-based version of chess did not survive. Chess didn’t last long as a game of chance. But for all their association with gambling and lesser intellectual pursuits, games of chance would end up transforming society in ways that arguably exceed the impact of chess. And at the center of that revolution was the material design of the dice themselves.

—

In 1526, a young medical student from Padua named Girolamo Cardano found himself losing at cards in a Venetian gambling house. Faced with an alarming financial loss, Cardano determined that the deck of cards had been marked, and the game had been rigged against him from the start. Enraged at this deception, the twenty-five-year-old Cardano slashed his opponent in the face with a dagger, grabbed his lost money, and ran out into the streets of Venice, where he promptly fell into one of the canals. This episode seems to have been representative of Cardano, who managed to live a spectacularly interesting—if somewhat debauched—life. He was apparently “hot tempered, single-minded, and given to women . . . cunning, crafty, sarcastic, diligent, impertinent, sad and treacherous, miserable, hateful, lascivious, obscene, lying, obsequious . . .” (This parade of adjectives, mostly critical, comes from Cardano himself, who wrote them in his own salacious autobiography.) For several years in his youth, his primary source of income came through gambling with cards and dice.

Girolamo Cardano

Cardano was also, as it happens, a gifted mathematician, and at some point in his early sixties he decided to combine his interests in a book called Liber de Ludo Aleae, or The Book of Games of Chance. Like Cessolis’s work before him, Cardano’s book was in part a game guide, a manual of sorts for the aspiring gambler, helping to inaugurate a somewhat less erudite branch of the publishing world that would eventually take the shape of countless how-to books for beating the odds in Las Vegas. But to make his advice actually useful, Cardano had to do something that most of his successors didn’t have to bother with: he had to invent an entirely new field of mathematics.

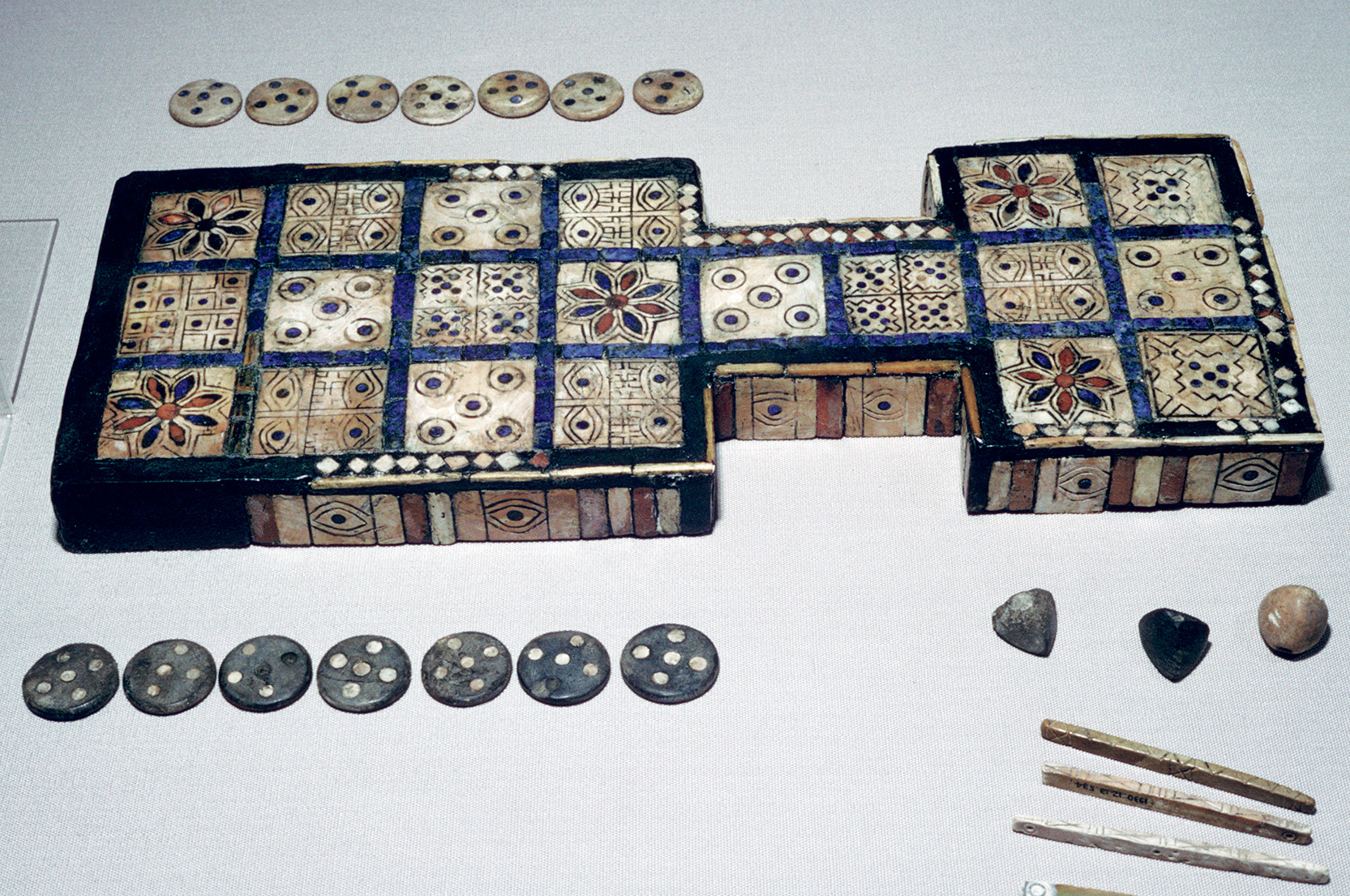

An early version of backgammon discovered in the Royal Tomb of Ur in southern Iraq, dating back to 2600 BCE

Games of chance are among the oldest cultural artifacts known to man. The pharaohs of Egypt played dicelike games with what were called astragali, fashioned out of the anklebones of animals. A game resembling modern backgammon was discovered in the Royal Tomb of Ur, dating back to 2600 BCE. Both the Greeks and Romans played obsessively with astragali. There seems to be a deep-seated human interest in chance and randomness, manifest in these intricate game pieces that have survived millennia, still recognizable to the modern eye. To play a game of chance is, in a sense, to rehearse for the randomness that everyday life presents, particularly in a prescientific world where basic circuits and patterns in nature had not yet been perceived.

But there are patterns, too, in the random outcomes of games of chance. Roll two modern dice and the number on each individual die will be random, but the sum of the two numbers will be more predictable: a seven is slightly more likely than an eight, and far more likely than a twelve. The concept is simple enough that young children can grasp it if it is explained to them: there are more ways of making a seven with two dice, and so the seven has a higher probability of being the result. And yet, despite the simplicity of the concept, it appears not to have occurred to anyone—at least, not long enough to write it down with mathematical precision—until Cardano began writing The Book of Games of Chance. Cardano developed subtle mathematical equations for analyzing dice games: for instance, he discovered the additive formula for calculating the odds that one of two mutually exclusive events will occur in a single roll. (If you want to know how likely it is that you will roll either a three or an even number, you take the 1⁄6 probability of rolling a three and the 1⁄2 probability of rolling an even number, and add them together; simple addition works here because three is odd, so the two outcomes can never occur on the same roll. You will roll a three or an even number two out of three times on average with a six-sided die.) He also demonstrated the multiplicative nature of probability when predicting the results of a sequence of dice rolls: the chance of rolling three sixes in a row is one in 216, or 1⁄6 × 1⁄6 × 1⁄6.
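
To make that arithmetic concrete, here is a minimal Python sketch, assuming two fair, identical six-sided dice (exactly the kind of standardized object this chapter argues made the patterns visible). It enumerates all 36 equally likely ordered outcomes and applies the additive and multiplicative rules described above; the names `FACES`, `p_sum`, and the rest are illustrative and have nothing to do with Cardano's own notation.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

FACES = range(1, 7)  # one fair, standardized six-sided die

# Tally every ordered pair of faces by its sum: 36 equally likely outcomes.
sum_counts = Counter(a + b for a, b in product(FACES, repeat=2))
TOTAL = sum(sum_counts.values())  # 36


def p_sum(target: int) -> Fraction:
    """Exact probability that two fair dice add up to `target`."""
    return Fraction(sum_counts[target], TOTAL)


# Additive rule: "a three" and "an even number" are mutually exclusive on one roll,
# so their probabilities simply add.
p_three_or_even = Fraction(1, 6) + Fraction(3, 6)

# Cross-check by counting the qualifying faces (2, 3, 4, 6) directly.
by_counting = Fraction(sum(1 for f in FACES if f == 3 or f % 2 == 0), len(FACES))

# Multiplicative rule: the rolls are independent, so probabilities multiply.
p_three_sixes = Fraction(1, 6) ** 3

print(p_sum(7), p_sum(8), p_sum(12))  # 1/6 5/36 1/36
print(p_three_or_even, by_counting)   # 2/3 2/3
print(p_three_sixes)                  # 1/216
```

Counting outcomes rather than simulating rolls keeps every result exact: a seven can be made six ways out of 36, an eight five ways, and a twelve only one way, which is the whole of the "more ways of making a seven" intuition.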

Written in 1564, Cardano’s book wasn’t published for another century. By the time his ideas got into wider circulation, an even more important breakthrough had emerged out of a famous correspondence between Blaise Pascal and Pierre de Fermat in 1654. This, too, was prompted by a compulsive gambler, the French aristocrat Antoine Gombaud, who had written Pascal for advice about the most equitable way to divide the stakes of a dice game that had been interrupted before it was finished. Their exchange put probability theory on a solid footing and created the platform for the modern science of statistics. Within a few years, Edmond Halley (of comet legend) was using these new tools to calculate mortality rates for the average Englishman, and the Dutch scientist Christiaan Huygens and his brother Lodewijk had set out to answer “the question . . . to what age a newly conceived child will naturally live.” Lodewijk even went so far as to calculate that his brother, then aged forty, was likely to live for another sixteen years. (He beat the odds and lived a decade beyond that, as it turns out.) It was the first time anyone had begun talking, mathematically at least, about what we now call life expectancy.

Probability theory served as a kind of conceptual fossil fuel for the modern world. It gave rise to the modern insurance industry, which for the first time could calculate with some predictive power the claims it could expect when insuring individuals or industries. Capital markets—for good and for bad—rely extensively on elaborate statistical models that predict future risk. “The pundits and pollsters who today tell us who is likely to win the next election make direct use of mathematical techniques developed by Pascal and Fermat,” the mathematician Keith Devlin writes. “In modern medicine, future-predictive statistical methods are used all the time to compare the benefits of various drugs and treatments with their risks.” The astonishing safety record of modern aviation is in part indebted to the dice games Pascal and Fermat analyzed; today’s aircraft are statistical assemblages, with each part’s failure rate modeled to multiple decimal places.

When we think about the legacy of Cardano and Pascal and Fermat, one question comes immediately to mind: What took us so long? Before Cardano, gamblers apparently had noticed that some results seemed more likely than others, but no one seems to have had the inclination or ability to explain why exactly. This is one of those blind spots that seems baffling to the modern mind. The Greeks developed Euclidean geometry and the Pythagorean theorem; the Romans completed brilliant engineering projects that stand to this day. Many of them played games of chance avidly; many of them had a significant financial stake in figuring out the hidden logic behind those games. Yet none of the ancients were able to make the leap from chance to probability. What held them back?

The answer to this riddle appears to lie with the physical object of the die itself. The astragali favored by the Egyptians and Greeks—along with other mechanisms for generating random results—were not the products of uniform manufacturing techniques. Each individual die would have its own idiosyncrasies. “[Each] had two rounded sides and only four playable surfaces, no two of which were identical,” Devlin writes. “Although the Greeks did seem to believe that certain throws were more likely than others, these beliefs—superstitions—were not based on observation, and some were at variance with the actual likelihoods we would calculate today.” Seeing the patterns behind games of chance required random generators that were predictable in their randomness. The Greeks were playing with dice whose quirks varied from set to set: one might favor the four over the one, while another might be more inclined to land on a two. The unpredictable nature of the physical object made it harder to perceive the underlying patterns of probability.

All that had begun to change by the thirteenth century, when guilds of dice makers began to appear across Europe. Two sets of statutes from a dice-making guild in Toulouse, dating from 1290 and 1298, show how important the manufacture of uniform objects had become to the trade. The very first regulation forbids the production of “loaded, marked, or clipped dice” to prevent their association with swindlers and cheats. But the statutes also place a heavy emphasis on uniform design specifications: all dice had to have the exact same dimensions, with numbers positioned in the same configuration on the six sides of the cube. By the time Cardano picked up the game, dice had become standardized in their design. That regularity may have foiled the swindlers in the short term, but it had a far more profound effect, one that would never have occurred to the dice-making guilds: it made the patterns of the dice games visible, which enabled Cardano, Pascal, and Fermat to begin to think systematically about probability. Ironically, making the object of the die itself more uniform ultimately enabled people like Huygens and Halley to analyze the decidedly nonuniform experience of human mortality using the new tools of probability theory. No longer mere playthings, the dice had become, against all odds, tools for thinking.

—

The logic of games is ethereal. We have no idea how most ancient games were played, either because written rule books did not survive to modern times, or because the rules themselves evolved and then died out before the game’s players adopted the technology of writing. But we know about these games because they were physically embodied in matter: in game pieces, sports equipment, even in rooms or arenas designed to accommodate rules that have long since been lost to history. We can see the rules embedded indirectly in the shapes of these artifacts, like the trace a mollusk leaves behind in a shell. Racquetball-like courts, constructed almost four thousand years ago by the Olmec civilization, which predates the Mayans and Aztecs, have been uncovered in modern-day Mexico. At roughly the same time, on the other side of the world, an Egyptian sculptor captured King Tuthmose III playing a ball game that resembled modern cricket. These artifacts—like the standardized dice of Toulouse—make it clear that games have not just tested our intellectual or athletic gifts: they’ve also tested our skills as toolmakers.

Invariably, the tools end up transforming the toolmakers, just as the regular shapes of those medieval dice helped their human creators think about probability in a new way. Consider one of humanity’s original technologies: the ball. Some Australian Aborigines played with “a stuffed ball made from grass and beeswax, opossum pelt, or, in some cases, the scrotum of a kangaroo,” writes historian John Fox. The Copper Inuits of the Canadian Arctic play a football-like game with a ball made from the hide of a seal. The Olmecs even buried their balls along with other religious talismans, the pre-Columbian equivalent of being buried in your Green Bay Packers jersey. Balls are the jellyfish of gaming evolution—at once ancient and yet still ubiquitous in the modern world. The Olmecs, Aztecs, and Mayans all failed to invent the wheel, but the ball was central to the culture of all three societies.

Ruins of a Mayan ball court, Honduras

During Columbus’s second voyage to the Americas, the explorer and his crew observed the local tribes of Hispaniola (now Haiti and the Dominican Republic) playing a ball game that may have been derived from the Olmec game. The spectacle of a sporting event would have been nothing new to European eyes at the end of the fifteenth century. But there was something captivating and mysterious about this game. The ball seemed to defy physics. Describing the ball’s behavior several decades later, a Dominican friar wrote, “Jumping and bouncing are its qualities, upward and downward, to and fro. It can exhaust the pursuer running after it before he can catch up with it.” Like the balls already omnipresent in Europe, the Hispaniola balls could be thrown with ease and would roll great distances. But these balls had an additional property. They could bounce.

Christopher Columbus observes a ball game in Hispaniola

Columbus and his crew didn’t realize it at the time, but they were the first Europeans to experience the distinctive properties of the organic compound isoprene, the key ingredient of what we now call rubber. The balls had been formed out of naturally occurring latex, a white sticky liquid produced by many species of plants, including one known as Castilla elastica. Around 1500 BC, the Mesoamerican natives hit upon a way to mold and stabilize the liquid into the shape of a sphere, which then possessed a marvelous elasticity that made it ideal for games. (They also used the material for sandals, armor, and raingear.) Rubber ball games became a staple of the Mesoamerican civilization for thousands of years, played by Mayans and Aztecs as well as the indigenous populations of Caribbean islands like Hispaniola. The games were both sporting events and religious rituals, officiated by priests and featuring idols that represented the gods of gaming. Some scholars believe the games were occasionally accompanied by ritual sacrifices as well.

Watching the islanders of Hispaniola play their games, Columbus and his crew allegedly found the elasticity of the balls so mesmerizing that they brought one back to Seville, though evidence of this is somewhat shaky. But a 1528 drawing by Christoph Weiditz marks the earliest verifiable appearance of these rubber balls in a European context. From his first voyage to the New World, Hernán Cortés had brought back two Aztec ballplayers, skilled athletes in a sport known as ullamaliztli, a hybrid word that unites the Aztec terms for ball play and rubber. The sport involved bouncing the balls off the hips and the buttocks, although variants permitted the use of hands and sticks. The athletes performed in the court of Charles V, where Weiditz sketched them at play, their mostly naked bodies protected only by loincloths and leather bands covering their posteriors. The Aztec ballplayers had been essentially kidnapped by Cortés, so we should be wary of making light of their appearance in the Spanish court, but their performance before Charles did mark the beginning of a practice that would become commonplace in the modern age: athletes imported from one part of the world to another thanks to their agility at playing with rubber balls.

The most important legacy of those ullamaliztli players would not belong to the world of sport, however. The real innovation lay in the rubber itself. Europeans first took notice of this puzzling new material thanks to the astonishing kinetics of the Mesoamerican balls. Several decades after the first balls made their way to Europe, the Spanish royal historian Pedro Mártir d’Angleria wrote, “I don’t understand how when the balls hit the ground they are sent into the air with such incredible bounce?” The great seventeenth-century historian Antonio de Herrera y Tordesillas included a long account of the Mesoamerican “gum balls,” which were “made of the Gum of a Tree that grows in hot Countries, which having Holes made in it distills great white Drops, that soon harden and being work’d and moulded together turn as black as pitch.”

Scientists began experimenting in earnest with the material in the eighteenth century, including the British chemist Joseph Priestley, who allegedly noticed that the material was also uniquely suited for erasing (or “rubbing out”) pencil marks, thus coining the name “rubber” itself. (Priestley had nothing to do with condoms, however.) Today, of course, the rubber industry is massive; we walk with shoes made with rubber soles, chew gum made from rubber compounds, drive cars and fly planes supported by rubber tires. The history of rubber’s ascent is marred by exploitation, both of human and natural resources. “The demand for rubber was to see men carve out huge plantations from tropical forests around the world,” John Tully writes in his social history of rubber, The Devil’s Milk. “Roads and railways would be built, along with docks, power stations, and reticulated water supplies. In the process, whole populations would be transplanted to provide the labor power, and the ethnic composition of whole countries would be changed forever, as in Singapore and Malaysia. The demand was to lead to barbarism, as in the Belgian Congo and on the remote banks of the Putumayo River in Peru.” Vast fortunes would be made out of isoprene’s unique chemistry. Some of the most famous names in the history of Big Industry began their careers manufacturing and selling rubber products: Firestone, Pirelli, Michelin. Columbus had returned to Seville disappointed by his failure to bring back gold. He had no idea that he had stumbled across a material that would prove to be just as valuable—and far more versatile—in its eventual applications.

Today, of course, the canonical story of rubber innovation is that of the struggling nineteenth-century entrepreneur Charles Goodyear, who hit upon a technique (called vulcanization) that made rubber durable enough for industrial use, and whose name would later be attached to one of the largest tire companies in the world. But the Mesoamericans had developed a vulcanization method thousands of years before Goodyear began his experiments. The prominence of the Goodyear narrative is partly due to a long-standing bias towards Euro-American characters in the history of innovation, but I suspect it also derives from another, more subtle bias: the assumption that important innovations come out of “serious” research like Goodyear’s, fueled by entrepreneurial energy. But long before Goodyear’s investigation, the Mesoamericans took the opposite path, driven not by industrial ambition but rather by delight and wonder. The rubber balls of the Olmecs make it clear that games do not just help concoct new metaphors or ways of imagining society. They can also drive advances in materials science. Sometimes the world is changed by heroic figures deliberately setting out to reinvent an industry and making a fortune in the process. But sometimes the world is changed just by following a bouncing ball.

—

In the fall of 1961, three MIT grad students were rooming together at what they would later recall as a “barely habitable tenement” on Hingham Street in Cambridge. (As a joke, they dubbed their living quarters the “Hingham Institute.”) They were avid science-fiction readers, budding mathematicians, and steam-train aficionados. They would entertain themselves by watching low-budget Japanese monster movies at seedy Boston theaters. But they spent most of their time thinking about computers and their potential. Today we would call them hackers, though at the time the category didn’t exist.

At that point in history, the sexiest piece of digital technology was a new machine from Digital Equipment Corporation: the PDP-1, considered at the time to be one of the first minicomputers ever released—although, to the modern eye, mini seems almost laughable, since it was the size of an armoire. What made the PDP-1 especially alluring to the fellows of the Hingham Institute was an accessory called the Type 30 Precision CRT: a circular black-and-white display. To the average American in 1961, who would have been just hearing the first hype about the coming revolution of color TV, the Type 30 would have looked like a step backward, but the fellows of the Hingham Institute knew the grainy images of the Type 30 augured a more radical transformation than color. The pixels on the Type 30 might have been woefully monochromatic, but you could control them with software. That made all the difference. For most of the short history of computing, human-machine interactions had traveled through the middleman of paper, through punch cards and printouts. You typed something in and waited for the machine to type something back. But with a CRT beaming electrons at a screen, that interaction became something fundamentally different. It was live.

This was more than three decades before Michael Dell pioneered the just-in-time manufacturing model for digital computers; ordering a PDP-1 was more like commissioning a yacht than it was one-click ordering a new gadget online. And so, when MIT announced that it was going to be installing a PDP-1 and Type 30 in the engineering lab, the fellows of the Hingham Institute had more than a few months to contemplate what they would do with such a powerhouse. As early adopters in an emerging academic discipline, they were cursed with the added burden of having to justify their field while simultaneously making progress in it. And so they began to think about applications that would both advance the art of computer science and dazzle the uninitiated. They came up with three principles:

1. It should demonstrate as many of the computer’s resources as possible, and tax those resources to the limit.

2. Within a consistent framework, it should be interesting, which means every run should be different.

3. It should involve the onlooker in a pleasurable and active way—in short, it should be a game.

Inspired by the lowbrow sci-fi novels of Edward E. Smith that featured a steady stream of galactic chase sequences, the Hingham fellows decided that they would christen the PDP-1 by designing a game of cosmic conflict. They called it Spacewar!

The rules of Spacewar! were elemental: two players, each controlling a spaceship, dart around the screen, firing torpedoes at each other. Even in its infancy, the game bore an unmistakable family resemblance to the 1970s arcade classic Asteroids, which was heavily inspired by Spacewar! The graphics, of course, were pathetic by modern standards: it looked more like a battle between a ghostly pair of semicolons than a scene from Star Wars. But something about the experience of controlling these surrogates on the screen was hypnotic, and word of Spacewar! began to flow out of MIT and across the small but growing subculture of computer scientists. As the digital pioneer Alan Kay put it, “The game of Spacewar! blossoms spontaneously wherever there is a graphics display connected to a computer.”

Just as chess evolved new rules as it migrated across the continents a thousand years before, the world of Spacewar! gathered new features as it traveled from computer lab to computer lab. Gravity was introduced to the game; specially designed control mechanisms (anticipating modern joysticks) were produced; a hyperspace option whereby your craft became momentarily invisible became customary; new graphics routines—many of them focused on explosions—made the game more lifelike. An MIT programmer named Peter Samson wrote a program—memorably dubbed “Expensive Planetarium”—that filled the Spacewar! screen with an accurate representation of the night sky. “Using data from the American Ephemeris and Nautical Almanac,” writes J. Martin Graetz, one of the original Hingham fellows, “Samson encoded the entire night sky (down to just above fifth magnitude) between 22½°N and 22½°S, thus including most of the familiar constellations . . . By firing each display point the appropriate number of times, Samson was able to produce a display that showed the stars at something close to their actual relative brightness.” Integrated merely as a background flourish for Spacewar!, Expensive Planetarium marked one of the first times that a computer graphics program had modeled a real-world environment; when we look at family photos on our computer screens, or follow directions layered over Google Maps satellite images on our phones, we are interacting with descendants of Samson’s flickering planetarium.

It goes without saying that Spacewar! began a lineage that would ultimately evolve into the modern video game industry, which generates more than $100 billion in sales annually. This is impressive enough, but perhaps not so surprising. Video games had to start somewhere, after all. But the legacy of Spacewar! extends far beyond PlayStation and Donkey Kong and SimCity. Just as Expensive Planetarium inaugurated a profound shift in the relationship between on-screen data and the real world, Spacewar! itself planted the seeds for a number of crucial developments in computing. The idea that a software application might be codeveloped by dozens of different programmers at different institutions spread around the world—each contributing new features or bug fixes and optimized graphics routines—was unheard-of when the Hingham Institute first began dreaming of space torpedoes. But that development process would ultimately create essential platforms like the Internet or the Web or Linux. Spacewar! was one of the first programs that successfully engaged the user in a real-time, visual interaction with the computer, with on-screen icons moving in sync with our physical gestures. Everyone who clicks on icons with a mouse or trackpad today is working within a paradigm that Spacewar! first defined more than half a century ago.

Dan Edwards and Peter Samson playing Spacewar! on the PDP-1’s Type 30 display

But perhaps the most fundamental revolution that Spacewar! set in motion was this: the game made it clear that these massive, unwieldy, bureaucratic machines could be hijacked by the pursuit of fun. Before Spacewar!, even the most impassioned computer evangelist saw them as belonging to the world of Serious History: tabulating census returns, calculating rocket trajectories. For all their brilliance, none of the early visionaries of computing—from Turing to von Neumann to Vannevar Bush—imagined that a million-dollar machine might also be useful for blowing up an opponent’s spaceship with imaginary torpedoes for sheer amusement. You might teach a computer to play chess in order to determine how intelligent the machine had become, but programming a computer to play games just for the sake of playing games would have seemed like a colossal waste of resources, like hiring a symphony orchestra to play “Chopsticks.” But the Spacewar! developers saw a different future, one where computers had a more personal touch. Or, put another way, developing Spacewar! helped them see that future more clearly.

In 1972, during a hiatus between publishing issues of The Whole Earth Catalog, Stewart Brand visited the Artificial Intelligence Lab at Stanford to witness “the First Intergalactic Spacewar! Olympics.” He wrote up his experiences for Rolling Stone in an article called “Spacewar: Fanatic Life and Symbolic Death Among the Computer Bums.” As one of the first essays to document the hacker ethos and its connection to the counterculture, it is now considered one of the seminal documents of technology writing. In Spacewar!, Brand saw “a flawless crystal ball of things to come in computer science and computer use.” The bearded computer “bums” tinkering with the rules to their sci-fi fantasy world were not just freaks and geeks; they offered a glimpse of what mainstream society would be doing in two decades. Brand famously began the essay with the proclamation “Ready or not, computers are coming to the people.” In the sheer delight and playfulness of Spacewar!, Brand recognized that these seemingly austere machines would inevitably be domesticated and brought into the sphere of everyday life. Right after Brand’s manifesto was published, another young hippie from the Bay Area started working for the pioneering game company Atari (which had been founded to bring a commercial version of Spacewar! to the market) and then shortly thereafter created a company devoted exclusively to manufacturing personal computers. His name, of course, was Steve Jobs.

In his Rolling Stone piece, Brand alluded to an important distinction between “low-rent” and “high-rent” forms of research. High-rent is official business: fancy R&D labs funded by corporate interests or government grants, reviewed by supervisors, with promising ideas funneled onto production lines. The world of games, however, is low-rent. New ideas bubble up from below, at the margins, after hours; people experiment for the love of it, and they share their experiments because they have none of the usual corporate restrictions that protect intellectual property. By sharing, they allow those new ideas to be improved by others along the chain. Before long, a game of juvenile intergalactic conflict becomes the seedling of a digital revolution, or a game of dice lays the groundwork for probability theory, or a board game maps out a new model of social organization. The ideas evolve in a low-rent world, but they often end up transforming the classier neighborhoods along the way.

—

One day in the late summer of 1961, just as the Hingham Institute was forming across the country in Cambridge, a woman stood at a roulette table in Las Vegas, placing bets in the chaos of the casino floor. At her side, a neatly dressed thirty-year-old man pushed his chips onto the betting area with a strange inconsistency. The man would never bet until a few seconds after the croupier had released the ball; many times he failed to bet at all, waiting patiently tableside until the next spin. But the pile of chips slowly growing in front of him suggested that his eccentric strategy was working. At one point, the woman turned to glance at the stranger beside her. A look of alarm passed across her face as she noticed a wiry appendage protruding from his ear, like the antenna of an “alien insect,” as the man would later describe it. Seconds later, he was gone.

The mysterious stranger at the roulette table was not, contrary to appearances, a criminal or a mafioso; he was not even, technically speaking, cheating at the game—although years later his secret technique would be banned by the casinos. He was, instead, a mathematician from MIT named Edward Thorp, who had come to Vegas not to break the bank but rather to test a brand-new device: the very first wearable computer ever designed. Thorp had an accomplice at the roulette table, standing unobserved at the other end, pretending not to know his partner. He would have been unrecognizable to the average casino patron, but he was in fact one of the most important minds of the postwar era: Claude Shannon, the father of information theory and one of the key participants in the invention of digital computers.

Thorp had begun thinking about beating the odds at roulette as a graduate student in physics at UCLA in 1955. Unlike card games such as blackjack or poker, where strategy could make a profound difference in outcomes, roulette was supposed to be a game of pure chance; the ball was equally likely to end up on any number on the wheel. Roulette rotors with small biases could make certain outcomes slightly more likely than others. (Humphrey Bogart’s casino in Casablanca featured a rigged roulette wheel that favored certain numbers.) But most modern casinos went to great lengths to ensure their roulette wheels generated perfectly random results. Using probability equations based on Cardano’s dice analysis, casinos were then able to establish odds that gave the house a narrow, but predictable, advantage over the players. Thanks to his physics background, Thorp began to think of the roulette wheel’s unbiased perfection as an opportunity for the gambler. With a “mechanically well made and well maintained” roulette wheel, Thorp later recalled, “the orbiting roulette ball suddenly seemed like a planet in its stately, precise and predictable path.” With astral bodies, of course, knowing the initial position, direction, and velocity of the object would enable you to predict its location at a specified later date. Could you do the same thing with a roulette ball?

Thorp spent some time tinkering with a cheap, half-sized roulette wheel he had acquired, analyzing ball movements with a stopwatch accurate to hundredths of a second, even making slow-motion films of the action. But the wheel proved to be too defective to make accurate predictions, and Thorp got sidetracked writing software programs to calculate winning strategies at blackjack. (One gets the sense that Cardano and Thorp would have hit it off nicely.) In 1960, having moved on to MIT, Thorp decided to try publishing his blackjack analysis in the Proceedings of the National Academy of Sciences, and sought out the advice of Shannon, the only mathematician at MIT who was also a member of the academy. Impressed by Thorp’s blackjack system, Shannon inquired whether Thorp was working on anything else “in the gambling area.” Dormant for five years, Thorp’s roulette investigation was suddenly reawakened as the two men began a furious year of activity, seeking a predictable pattern in the apparent randomness of the roulette wheel.

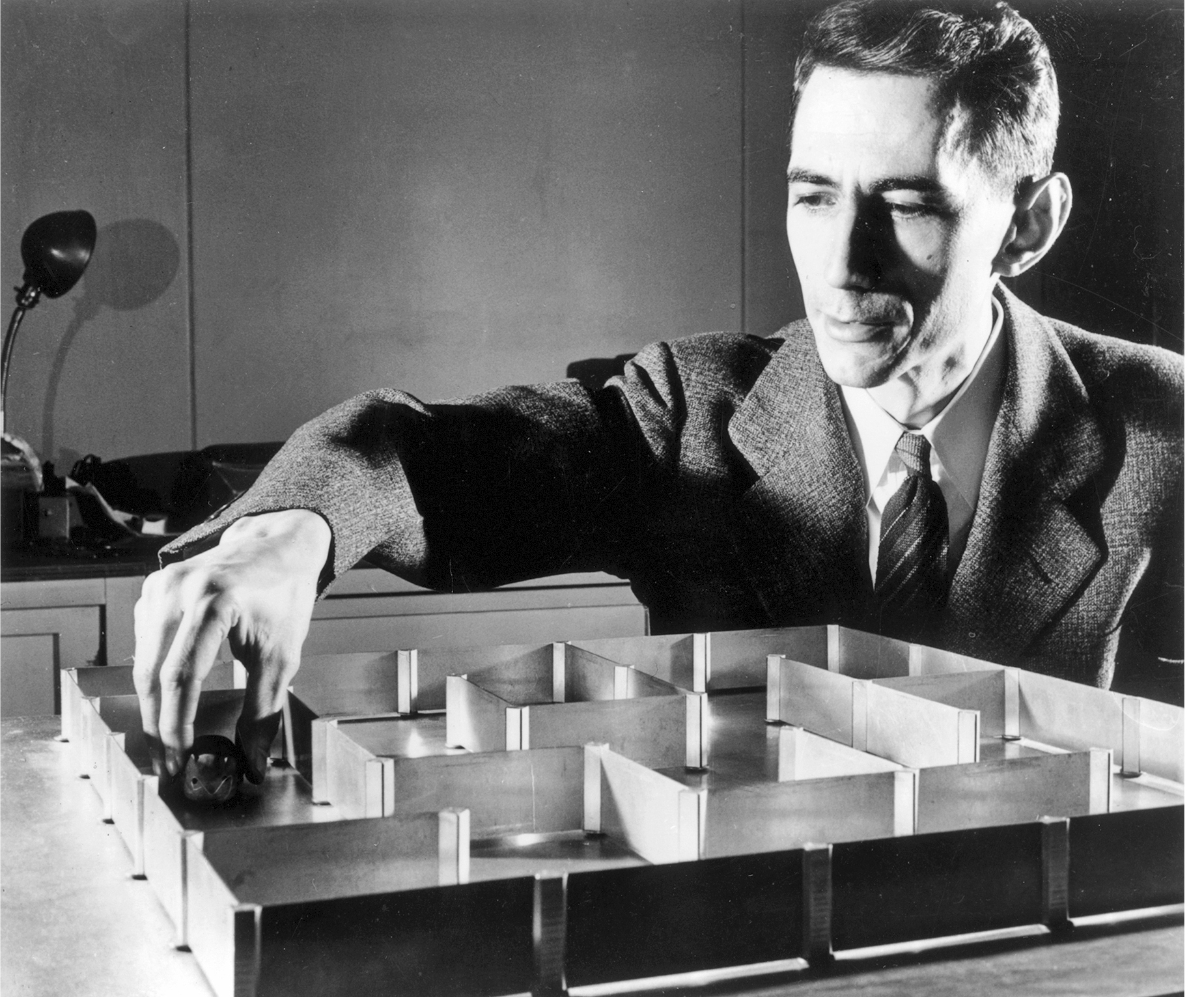

Claude Shannon with an electronic mouse

In his old, rambling wooden house outside of Cambridge, Shannon had created a basement exploratorium that would have astounded Merlin and Babbage. Thorp later described it as a “gadgeteer’s paradise”:

It had perhaps a hundred thousand dollars (about six hundred thousand 1998 dollars) worth of electronic, electrical and mechanical items. There were hundreds of mechanical and electrical categories, such as motors, transistors, switches, pulleys, gears, condensers, transformers, and on and on. As a boy science was my playground and I spent much of my time building and experimenting in electronics, physics and chemistry, and now I had met the ultimate gadgeteer.

Before long, Thorp and Shannon had spent thousands of dollars acquiring a regulation wheel from Reno, along with strobe lights and a specialized clock. They commandeered an old billiards table in Shannon’s house and began analyzing the orbit of the ball on a spinning roulette wheel. Just as Thorp had intuitively surmised five years before, the ultimate destination of the ball could be predicted with some accuracy if you had a way of measuring its initial velocity. The two men decided the best way to assess that velocity would be to measure the length of time it took the ball to complete one rotation around the wheel. A longer rotation time would suggest a slower velocity, while a shorter one suggested that the ball was traveling at a higher speed. That initial velocity assessment gave them an indication of when the ball would fall off the sloped track and onto the rotor, eventually settling into the numbered frets. The higher the velocity, the longer it would take to drop onto the rotor. Getting a read on the initial velocity couldn’t tell you exactly what number the ball would land on, but it did suggest a sector of the wheel where the ball was slightly more likely to come to a stop. Because the house’s advantage in roulette is set at such a narrow margin, even a slight edge in predicting the ball’s outcome could tip the advantage in the player’s favor.
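
The claim that a slight predictive edge can flip the house advantage can be checked with a few lines of arithmetic. The sketch below is only an illustration, not Thorp and Shannon's actual calculation: it assumes the standard 35-to-1 payout on a single-number bet and the standard pocket counts (38 on a double-zero American wheel, 37 on a single-zero European wheel), and the 10 percent boost in the last line is a hypothetical figure chosen purely to show the effect.

```python
from fractions import Fraction

PAYOUT = 35  # assumed standard payout on a straight-up (single-number) bet: 35 to 1


def expected_return(p_win: Fraction) -> Fraction:
    """Expected profit per unit staked on one number with win probability p_win."""
    return p_win * PAYOUT - (1 - p_win)


# Fair wheels: every pocket equally likely.
american = expected_return(Fraction(1, 38))  # pockets 1-36 plus 0 and 00
european = expected_return(Fraction(1, 37))  # pockets 1-36 plus a single 0

# Break-even: p * 35 = 1 - p, so p = 1/36.
break_even = Fraction(1, PAYOUT + 1)

# Hypothetical: a prediction that makes the chosen number 10% likelier than chance
# on a double-zero wheel.
boosted = expected_return(Fraction(1, 38) * Fraction(11, 10))

print(f"American wheel: {float(american):.2%}")  # American wheel: -5.26%
print(f"European wheel: {float(european):.2%}")  # European wheel: -2.70%
print(f"Break-even win probability: {break_even}")  # Break-even win probability: 1/36
print(f"With a 10% predictive boost: {float(boosted):.2%}")  # With a 10% predictive boost: 4.21%
```

On a double-zero wheel, a bettor needs only to raise the chosen number's chance from 1 in 38 to better than 1 in 36, a relative improvement of roughly 5.6 percent, for the expected return to turn positive.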

The problem was you couldn’t exactly stroll onto the casino floor at the Palms with a strobe light and stopwatches. To use Shannon and Thorp’s technique, you needed some way to measure the velocity of the ball in real time without anyone else at the roulette table noticing. And you needed to calculate how tiny differences in velocity would translate into the ball’s ultimate resting place. You needed some kind of sensor to track the ball’s physical movements, and you needed a computer to do the math. The prospect of such a system would have seemed laughable to anyone familiar with the technological state of the art in 1961. The smallest video camera in the world was the size of a suitcase, and most computers were larger than a refrigerator. Carrying that gear, Thorp and Shannon wouldn’t merely have risked getting caught cheating in the Vegas casinos; they would never have made it through the front door.

But Shannon in particular wasn’t just familiar with the technological state of the art. He was one of its masters. His approach to innovation anticipated the rec-room informality that would come to define the Google or Facebook campuses decades later; work and play were inextricably linked. In Thorp’s description:

As we worked and during breaks, Shannon was an endless source of playful ingenuity and entertainment. He taught me to juggle three balls (in the ’70’s he proved “Shannon’s juggling theorem”) and he rode a unicycle on a “tightrope,” which was a steel cable about 40 feet long strung between two tree stumps. He later reached his goal, which was to juggle the balls while riding the unicycle on the tightrope. Gadgets and “toys” were everywhere. He had a mechanical coin tosser which could be set to flip the coin through a set number of revolutions, producing a head or tail according to the setting. As a joke, he built a mechanical finger in the kitchen which was connected to the basement lab. A pull on the cable curled the finger in a summons.

Eventually, between the juggling sessions and the unicycle tightrope rides, Shannon and Thorp designed a computer with twelve transistors that could be concealed in a box the size of a deck of cards. Instead of using cameras to track the ball’s motion, they designed special input mechanisms in their shoes, using their toes to activate microswitches that marked the beginning and end of the ball’s initial rotation around the wheel. Based on that assessment of the ball’s velocity, the microcomputer calculated the most likely landing spot, conveying the information with one of eight musical tones, corresponding to eight sectors of the roulette wheel. The mix of technology Thorp and Shannon assembled to crack the roulette game had not only never been seen before, it had barely been imagined. Even the most visionary computer scientists or science-fiction authors imagined computers as bulky, stationary objects—closer to a piece of furniture than a piece of jewelry. But fifty years later, the configuration of Thorp and Shannon’s roulette-wheel hack would become the most ubiquitous form of computing on the planet: a small digital device in your pocket, attached to headphones, with sensors recording your body’s movements. It might have looked like two men goofing off and trying to beat the house at roulette, but it was also something much more profound. An entire family tree of devices—iPods, Android phones, Apple Watches, Fitbits—descends directly from that roulette hack.

In the end, Shannon and Thorp ventured to Vegas with their wives and gave their system a test run with real money. Shannon usually stood by the croupier and timed the ball with his toes; Thorp placed bets on the other end of the table while the wives stood lookout for any undue attention from the casino personnel. (Everyone but Thorp was nervous about being discovered by the mob element that controlled much of Vegas in those days.) They only bet ten-cent chips, but reliably beat the house. Their novel microcomputer performed brilliantly; ironically, the components of the system that proved to be the most failure-prone were the earphones.

After the Vegas trip, Thorp left MIT and published a few popular books on blackjack technique. Shannon and Thorp revealed the details of their secret invention in 1966, inspiring a few imitators over the years with more pecuniary objectives. Microdevices that monitored blackjack games and roulette wheels were finally banned in the state of Nevada in 1985. But by that time, Thorp had little need for roulette winnings, having found a bigger casino to exploit. Using his mathematical skills and his experience discovering small opportunities in probability, he founded a company in 1974 called Princeton/Newport Partners. It was one of the first of a new genus of finance firms that would eventually become a wellspring of profit and controversy: the hedge fund.

—

In the mid-2000s, the head of research at IBM, Paul Horn, began thinking about the next chapter in IBM’s storied tradition of “Grand Challenges”—high-profile projects that showcase advances in computation, often with a clearly defined milestone of achievement designed to attract the attention of the media. Deep Blue, the computer that ultimately defeated Garry Kasparov at chess, had been a Grand Challenge a decade before, exceeding Alan Turing’s hunch that chess-playing computers could be made to play a tolerable game. Horn was interested in Turing’s more celebrated challenge: the Turing Test, which Turing first formulated in his 1950 essay “Computing Machinery and Intelligence.” In Turing’s words, “A computer would deserve to be called intelligent if it could deceive a human into believing that it was human.”

The deception of the Turing Test had nothing to do with physical appearances; the classic Turing Test scenario involves a human sitting at a keyboard, engaged in a text-based conversation with an unknown entity who may or may not be a machine. Passing for a human required both an extensive knowledge of the world and a natural grasp of the idiosyncrasies of human language. Deep Blue could beat the most talented chess player on the planet, but you couldn’t have a conversation with it about the weather. Horn and his team were looking for a comparable milestone that would spur research into the kind of fluid, language-based intelligence that the Turing Test was designed to measure. One night, Horn and his colleagues were dining out at a steak house near IBM’s headquarters and noticed that all the restaurant patrons had suddenly gathered around the televisions at the bar. The crowd had assembled to watch Ken Jennings continue his legendary winning streak at the game show Jeopardy!, a streak that in the end lasted seventy-four episodes. Seeing that crowd forming planted the seed of an idea in Horn’s mind: Could IBM build a computer smart enough to beat Jennings at Jeopardy!?

The system they eventually built came to be called Watson, named after IBM’s founder Thomas J. Watson. To capture as much information about the world as possible, Watson ingested the entirety of Wikipedia, along with more than a hundred million pages of additional data. There is something lovely about the idea of the world’s most advanced thinking machine learning about the world by browsing a crowdsourced encyclopedia. (Even H. G. Wells’s visionary prediction of a “global brain” didn’t anticipate that twist.) From those data sources, and with software prompting by the IBM researchers, Watson developed a nuanced understanding of linguistic structures that let it parse, and engage in, less rigid conversations with humans. By the end of the process, Watson could understand and answer questions about just about anything in the world, particularly questions whose answers relied on factual statements. And of course, given the Grand Challenge that had inspired its creation, Watson knew how to present those answers in the form of a question.

In February of 2011, Watson competed in a special two-game Jeopardy! competition against Jennings and another Jeopardy! champion named Brad Rutter. Viewers at home were treated to a glimpse inside Watson’s “mind” as it searched its databases for the correct answer to each question, via a small graphic that showed the computer’s top three guesses and overall “confidence level” for each answer. Somewhat disingenuously, the IBM promotional video that aired at the beginning of the episode suggested that Watson was like the two other human players in that it “did not have access to the Internet,” which is a bit like saying you don’t have “access” to the cake you just consumed.

Watson made some elementary mistakes during the match—at one point answering “What is Toronto?” in a category called “U.S. Cities.” But in the end, the computer trounced its human opponents, almost doubling the combined score of Jennings and Rutter. Watson’s performance was particularly impressive given the rhetorical subtlety of Jeopardy!’s questions. In the second match, Watson correctly answered “What is an event horizon?” with 97 percent confidence for a question that read, “Tickets aren’t needed for this ‘event,’ a black hole’s boundary from which matter can’t escape.” To make sense of that question, Watson had to effectively disregard the entire first half of the statement and recognize that the allusion to “tickets” is simply a play on another meaning of “event.” The final question that sealed Watson’s victory was a literary one: “An account of the principalities of Wallachia and Moldavia inspired this author’s most famous novel.” Watson got it right: “Who is Bram Stoker?” Jennings had the answer as well, but recognizing that he had just lost to the machine, he scribbled a postscript beneath his answer: “I for one welcome our new computer overlords.” The computer overlords may have a good chuckle over that one someday.

In terms of sheer computational power, many other supercomputers around the world outperform Watson. But because of Watson’s facility with natural language, and its ability to make subtle inferences out of unstructured data, Watson comes as close to the human experience of intelligence as any machine on the planet. As Jennings later described it in an essay:

The computer’s techniques for unraveling Jeopardy! clues sounded just like mine. That machine zeroes in on key words in a clue, then combs its memory (in Watson’s case, a 15-terabyte data bank of human knowledge) for clusters of associations with those words. It rigorously checks the top hits against all the contextual information it can muster: the category name; the kind of answer being sought; the time, place, and gender hinted at in the clue; and so on. And when it feels “sure” enough, it decides to buzz. This is all an instant, intuitive process for a human Jeopardy! player, but I felt convinced that under the hood my brain was doing more or less the same thing.

IBM, of course, has plans for Watson that extend far beyond Jeopardy!. Already Watson is being employed to recommend cancer treatment plans by analyzing massive repositories of research papers and medical data, and to answer technical support questions about complex software issues. But still, Watson’s roots are worth remembering: arguably the most advanced form of artificial intelligence on the planet received its education by training for a game show.

You might be inclined to dismiss Watson’s game-playing roots as a simple matter of publicity: beating Ken Jennings on television certainly attracted more attention for IBM than, say, Watson attending classes at Oxford would have. But when you look at Watson in the context of the history of computer science, the Jeopardy! element becomes much more than just a public relations stunt. Consider how many watershed moments in the history of computation have involved games: Babbage tinkering with the idea of a chess-playing Analytical Engine; Turing’s computer chess musings; Thorp and Shannon at the roulette table in Vegas; the interface innovations introduced by Spacewar!; Kasparov and Deep Blue. Even Watson’s 97 percent confidence score in the “event horizon” answer relied on the mathematics of probability that Cardano had devised to analyze dice games five hundred years before. Of all the fields of human knowledge, only a handful—primarily mathematics, logic, and electrical engineering—have been more important to the invention of modern computers than game play. Computers have long had a reputation for being sober, mathematical machines—the Vulcans of the technology world—but the history of both music and games, from The Instrument that Plays Itself to Spacewar!, makes it clear that the modern digital computer has a long tradition of play and amusement in its family tree. That history may explain in part why computers have become so ubiquitous in modern life. The abacus and the calculator were good at math too, but they didn’t have the knack for wonder and delight that computers possess.