3

The Stored Program Principle

If the ENIAC, a remarkable machine, were a one-time development for the U.S. Army, it would hardly be remembered. But it is remembered for at least two reasons. First, in addressing the shortcomings of the ENIAC, its designers conceived of the stored program principle, which has been central to the design of all digital computers ever since. This principle, combined with the invention of high-speed memory devices, provided a practical alternative to the ENIAC’s cumbersome programming. By storing a program and data in a common high-speed memory, not only could programs be executed at electronic speeds; the programs could also be operated on as if they were data—the ancestor of today’s high-level languages compiled inside modern computers. A report that John von Neumann wrote in 1945, after he learned of the ENIAC then under construction, proved to be influential and led to several projects in the United States and Europe.1 Some accounts called these computers “von Neumann machines,” a misnomer since his report did not fully credit others who contributed to the concept. The definition of computer thus changed, and to an extent it remains the one used today, with the criterion of programmability now extended to encompass the internal storage of that program in high-speed memory.

From 1945 to the early 1950s, a number of firsts emerged that implemented this stored program concept. An experimental stored program computer was completed in Manchester, United Kingdom, in 1948 and carried out a rudimentary demonstration of the concept. It has been claimed to be the first stored program electronic computer in the world to execute a program. The following year, under the direction of Maurice Wilkes in Cambridge, a computer called the EDSAC (Electronic Delay Storage Automatic Computer) was completed and began operation. Unlike the Manchester “baby,” it was a fully functional and useful stored program computer. Similar American efforts came to fruition shortly after, among them the SEAC, built at the U.S. National Bureau of Standards and operational in 1950. The IBM Corporation also built an electronic computer, the SSEC, which contained a memory device that stored instructions that could be modified during a calculation (hence the name, which stood for “selective sequence electronic calculator”). It was not designed along the lines of the ideas von Neumann had expressed, however. IBM’s Model 701, marketed a few years later, was that company’s first such product.

The second reason for giving the ENIAC such a high place in history is that its creators, J. Presper Eckert and John Mauchly, almost alone in the early years sought to build and sell a more elegant follow-on to the ENIAC for commercial applications. The ENIAC was conceived, built, and used for scientific and military applications. The UNIVAC, the commercial computer, was conceived and marketed as a general-purpose computer suitable for any application that one could program it for. Hence the name: an acronym for “universal automatic computer.”

Eckert and Mauchly’s UNIVAC was well received by its customers. Presper Eckert, the chief engineer, designed it conservatively, for example, by carefully limiting the loads on the vacuum tubes to prolong their life. That made the UNIVAC surprisingly reliable in spite of its use of about 5,000 vacuum tubes. Sales were modest, but the technological breakthrough of a commercial electronic computer was large. Eckert and Mauchly founded a company, another harbinger of the volatile computer industry that followed, but it was unable to survive on its own and was absorbed by Remington Rand in 1950. Although not an immediate commercial success, the UNIVAC made it clear that electronic computers were eventually going to replace the business and scientific machines of an earlier era. That led in part to the decision by the IBM Corporation to enter the field with a large-scale electronic computer of its own, the IBM 701. For its design, the company drew on the advice of von Neumann, whom it hired as a consultant. Von Neumann had proposed using special-purpose vacuum tubes developed at RCA for memory, but they proved difficult to produce in quantity. The 701’s memory units were special-purpose modified cathode ray tubes, which had been invented in England and used on the Manchester computer.

IBM initially called the 701 a “defense calculator,” an acknowledgment that its customers were primarily aerospace and defense companies with access to large amounts of government funds. Government-sponsored military agencies were also among the first customers for the UNIVAC, now being marketed by Remington Rand. The 701 was optimized for scientific, not business, applications, but it was a general-purpose computer. IBM also introduced machines that were more suitable for business applications, although it would take several years before business customers could obtain a computer that had the utility and reliability of the punched card equipment they had been depending on.

By the mid-1950s, other vendors joined IBM and Remington Rand as U.S. and British universities were carrying out advanced research on memory devices, circuits, and, above all, programming. Much of this research was supported by the U.S. Air Force, which contracted with IBM to construct a suite of large vacuum tube computers to be used for air defense. The system, called “SAGE” (Semi-Automatic Ground Environment), was controversial: it was built to compute the paths of possible incoming Soviet bombers, and to calculate a course for U.S. jets to intercept them. But by the time the SAGE system was running, the ballistic missile was well under development, and SAGE could not aid in ballistic missile defense. Nevertheless, the money spent on designing and building multiple copies of computers for SAGE was beneficial to IBM, especially in developing mass memory devices that IBM could use for its commercial computers.2 There was a reason behind the acronym for the system: semiautomatic was deliberately chosen to assure everyone that in this system, a human being was always in the loop when decisions were made concerning nuclear weapons. No computer was going to start World War III by itself, although there were some very close calls involving computers, radars, and other electronic equipment during those years of the Cold War. Some of the false alarms were caused by faulty computer hardware, another by poorly written software, and yet another by a person who inadvertently loaded a test tape that simulated an attack, which personnel interpreted to mean a real attack was underway.3 Once again, the human-machine interface issue appeared.

The Mainframe Era, 1950–1970

The 1950s and 1960s were the decades of the mainframe, so-called because of the large metal frames on which the computer circuits were mounted. The UNIVAC inaugurated the era, but IBM quickly came to dominate the industry in the United States, and it made significant inroads into the European market as well.

In the 1950s, mainframes used thousands of vacuum tubes; by 1960 these were replaced by transistors, which consumed less power and were more compact. The machines were still large, requiring their own air-conditioned rooms and a raised floor, with the connecting cables routed beneath it. Science fiction and popular culture showcased the blinking lights of the control panels, but the banks of spinning reels of magnetic tape that stored the data formerly punched onto cards were the true characteristic of the mainframe. The popular image of these devices was that of “giant brains,” mainly because of the tapes’ uncanny ability to run in one direction, stop, read or write some data, then back up, stop again, and so on, all without human intervention. Cards did not disappear, however: programs were punched onto card decks, along with accompanying data, both of which were transferred to reels of tape. The decks were compiled into batches that were fed into the machine, so that the expensive investment was always kept busy. Assuming a program ran successfully, the results would be printed on large, fan-folded paper, with sprocket holes on both sides. In batch operation, the results of each problem would be printed one after another; a person would take the output and separate it, then place the printed material in a mail slot outside the air-conditioned computer room for those who needed it. If a program did not run successfully, which could stem from any number of reasons, including a mistake in the programming, the printer would spew out error messages, typically in a cryptic form that the programmer had to decode. Occasionally the computer would dump the contents of the computer’s memory onto the printed output, to assist the programmer. Dump was very descriptive of the operation. Neither the person whose problem was being solved nor the programmer (who typically were different people) had access to the computer.

Because these computers were so expensive, a typical user was not allowed to interact directly with them. Only the computer operator had access: he (and this job was typically staffed by men) would mount or demount tapes, feed decks of cards into a reader, pull results off a printer, and otherwise attend to the operation of the equipment. A distaste for batch operations drove enthusiasts in later decades to break out of this restricted access, at first by using shared terminals connected directly to the mainframe, later by developing personal computers that one could use (or abuse) as one saw fit. Needless to say, word processing, spreadsheets, and games were not feasible with such an installation. To be fair, batch operation had many advantages. For many customers, say, an electric power utility that was managing the accounts of its customers, the programming did not change much from month to month. Only the data—in this case, the consumption of power—changed, and they could easily be punched onto cards and fed into the machine on a routine basis.

World War II–era pioneers like John von Neumann foresaw how computers would evolve, but they failed to see that computing would require as much effort in programming—the software—as in constructing its hardware. Every machine requires a set of procedures to get it to do what it was built to do. Only computers elevate those procedures to a status equal to that of the hardware and give them a separate name. Classically defined machines do one thing, and the procedures to do that are directed toward a single purpose: unlock the car door, get in, fasten seat belt, adjust the mirrors, put the key in the ignition, start the engine, disengage the parking brake, engage the transmission, step on the gas. A computer by contrast can do “anything” (including drive a car), and therefore the procedures for its use vary according to the application.

Many of the World War II–era computers were programmable, but they were designed to solve a narrow range of mathematical problems (or, in the case of the Colossus, code breaking). A person skilled in mathematical analysis would lay out the steps needed to solve a problem, translate those steps into a code that was intrinsic to the machine’s design, punch those codes into paper tape or its equivalent, and run the machine. Programming was straightforward, although not as easy as initially envisioned: programmers soon found that their codes contained numerous “bugs,” which had to be rooted out.4

Once general-purpose computers like the UNIVAC (note the name) began operation, the importance of programming became clearer. At this moment, a latent quality of the computer emerged to transform the activity of programming. When von Neumann and the ENIAC team conceived of the stored program principle, they were thinking of the need to access instructions at high speed and to have a simple design of the physical memory apparatus to accommodate a variety of problems. They also noted that the stored program could be modified, in the restricted sense that an arithmetic operation could address a sequence of memory locations, with the address of the memory incremented or modified according to the results of a calculation. Through the early 1950s, that was extended: a special kind of program, later called a compiler, would take as its input instructions written in a form that was familiar to human programmers and easy to grasp, and its output would be another program, this one written in the arcane codes that the hardware was able to decode. That concept is at the root of modern user interfaces, whether they use a mouse to select icons or items on a screen, a touch pad, voice input, or anything else.
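The restricted kind of modification described above, in which a running program changes the address inside one of its own instructions so that it steps through a sequence of memory locations, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the toy machine, its five opcodes, and the names `run` and `PROGRAM` are invented for this example and do not correspond to the instruction set of the ENIAC, the EDSAC, or any other historical computer.

```python
# Hypothetical toy machine: instructions and data live in the same memory list.
# An instruction is a tuple (opcode, a, b); a data cell is a plain integer.

def run(memory):
    """Execute the program stored in `memory` and return the accumulator."""
    acc = 0  # accumulator
    pc = 0   # program counter: index of the next instruction
    while True:
        op, a, b = memory[pc]
        pc += 1
        if op == "ADD":      # add the data cell at address a to the accumulator
            acc += memory[a]
        elif op == "INC":    # rewrite the instruction at address a: bump its address field
            target_op, target_a, target_b = memory[a]
            memory[a] = (target_op, target_a + 1, target_b)
        elif op == "DEC":    # subtract 1 from the data cell at address a
            memory[a] -= 1
        elif op == "JNZ":    # jump to address b if the data cell at address a is not zero
            if memory[a] != 0:
                pc = b
        elif op == "HALT":
            return acc
        else:
            raise ValueError(f"unknown opcode: {op}")

PROGRAM = [
    ("ADD", 6, 0),   # 0: add one data cell; its address is modified as the loop runs
    ("INC", 0, 0),   # 1: make instruction 0 point at the next data cell
    ("DEC", 5, 0),   # 2: count down the loop counter
    ("JNZ", 5, 0),   # 3: repeat from instruction 0 until the counter reaches zero
    ("HALT", 0, 0),  # 4: stop
    3,               # 5: loop counter (number of data cells to add)
    10, 20, 30,      # 6-8: the data
]

memory = list(PROGRAM)  # copy, so the original listing stays unchanged
print(run(memory))      # prints 60, the sum of the three data cells
print(memory[0])        # prints ('ADD', 9, 0): the program has altered itself
```

The point of the sketch is that nothing distinguishes the instruction at address 0 from the numbers at addresses 6 to 8 except how the machine happens to use them, which is what allows one part of a program to treat another part as data.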

The initial concept was to punch common sequences, such as computing the sine of a number, onto a tape or deck of cards, and assemble, or compile, a program by taking these precoded sequences, with custom-written code to knit them to one another. If those sequences were stored on reels of tape, the programmer had only to write out a set of commands that called them up at the appropriate time, and these commands could be written in a simpler code that did not have to keep track of the inner minutiae of the machine. The concept was pioneered by the UNIVAC team, led by Grace Murray Hopper (1906–1992), who had worked with Howard Aiken at Harvard during World War II (see figure 3.1). The more general concept was perhaps first discovered by two researchers, J. H. Laning and N. Zierler, for the Whirlwind, an air force–sponsored computer and the ancestor of the SAGE air defense system, at MIT in the early 1950s. Unlike the UNIVAC compilers, this system worked much as modern compilers do: it took as its input commands that a user entered, and it generated as output fresh and novel machine code that executed those commands and kept track of storage locations, handled repetitive loops, and did other housekeeping chores. Laning and Zierler’s “algebraic system” was the first step toward such a compiler: it took commands a user typed in familiar algebraic form and translated them into machine codes that Whirlwind could execute.
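To make the idea of translating algebraic notation into machine codes concrete, here is a minimal sketch in Python. It is not a reconstruction of Laning and Zierler's system or of Whirlwind's instruction set: the target is an invented stack machine, the opcode names and the functions `compile_expr` and `execute` are assumptions made for this example, and the parsing is delegated to Python's standard `ast` module rather than written by hand.

```python
import ast

# Map Python's syntax-tree operator nodes to opcodes of a hypothetical stack machine.
OPCODES = {ast.Add: "ADD", ast.Sub: "SUB", ast.Mult: "MUL", ast.Div: "DIV"}

def compile_expr(source):
    """Translate an algebraic expression into a list of (opcode, argument) pairs."""
    tree = ast.parse(source, mode="eval")
    code = []

    def emit(node):
        if isinstance(node, ast.BinOp) and type(node.op) in OPCODES:
            emit(node.left)    # code that leaves the left operand on the stack
            emit(node.right)   # code that leaves the right operand on the stack
            code.append((OPCODES[type(node.op)], None))
        elif isinstance(node, ast.Name):
            code.append(("LOAD", node.id))     # fetch a named variable
        elif isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            code.append(("PUSH", node.value))  # push a numeric constant
        else:
            raise ValueError("unsupported syntax in expression")

    emit(tree.body)
    return code

def execute(code, variables):
    """Run the generated code on a simple stack machine and return the result."""
    stack = []
    for op, arg in code:
        if op == "PUSH":
            stack.append(arg)
        elif op == "LOAD":
            stack.append(variables[arg])
        else:
            right = stack.pop()
            left = stack.pop()
            if op == "ADD":
                stack.append(left + right)
            elif op == "SUB":
                stack.append(left - right)
            elif op == "MUL":
                stack.append(left * right)
            elif op == "DIV":
                stack.append(left / right)
    return stack.pop()

program = compile_expr("a * x + b")
print(program)
print(execute(program, {"a": 2, "x": 5, "b": 3}))  # prints 13
```

The person writing `a * x + b` never sees the generated instruction list, which is the property that made such systems attractive: the translation into the machine's own codes, and the bookkeeping of where intermediate values are held, is done by a program rather than by the programmer.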

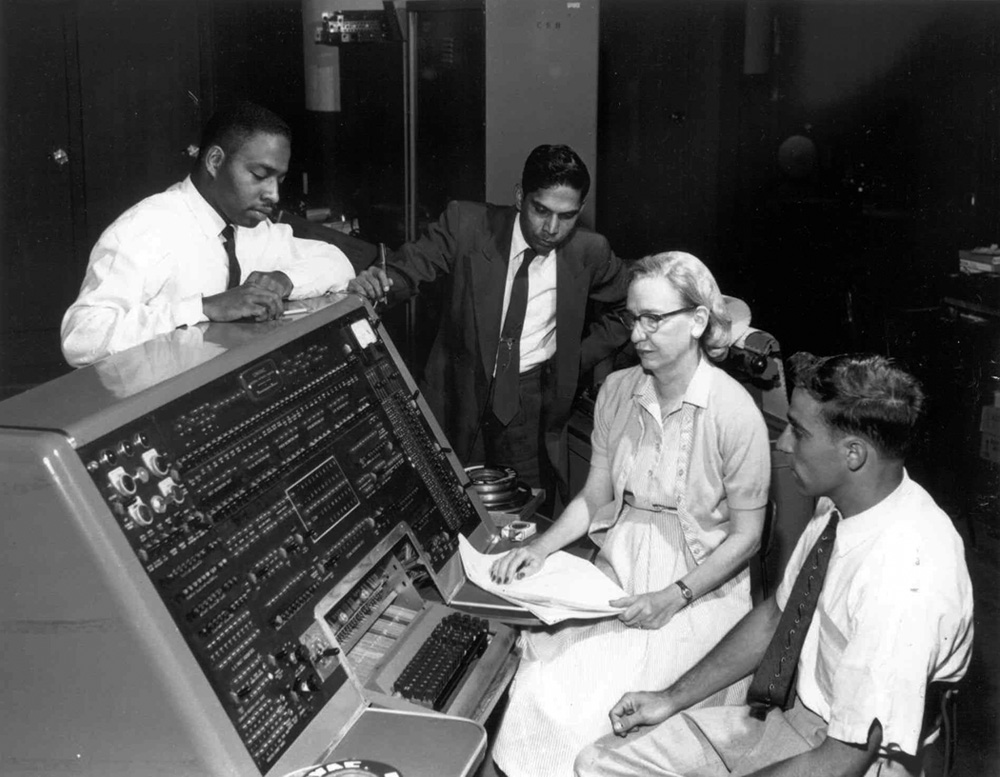

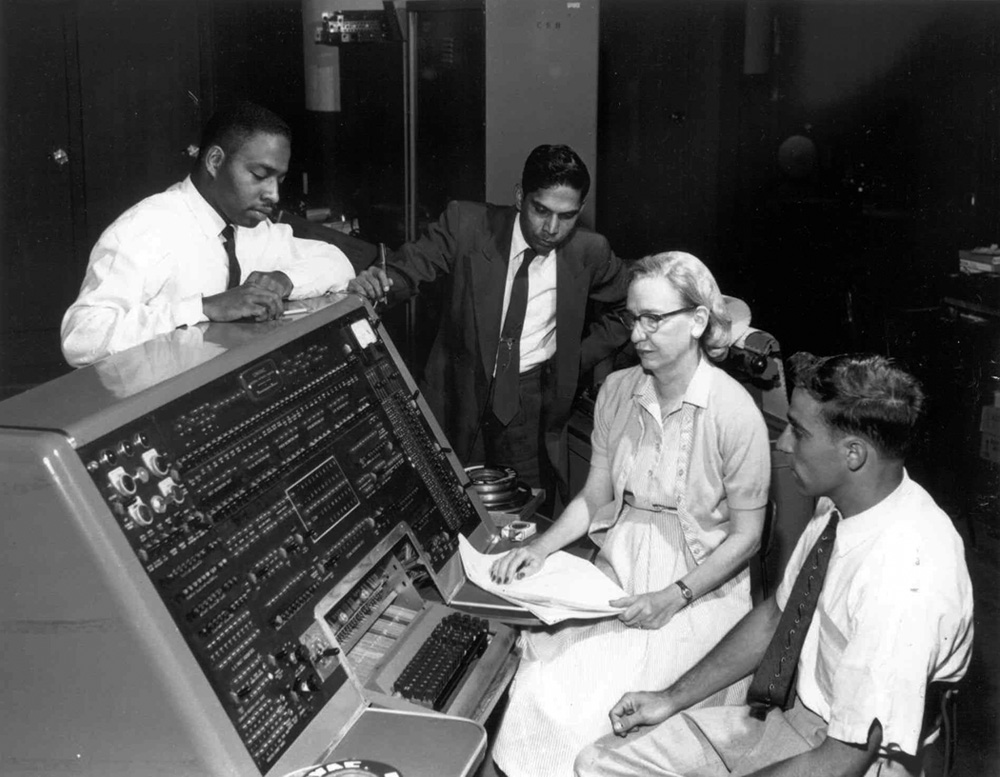

Figure 3.1

Grace Murray Hopper and students at the control panel of a UNIVAC computer in the mid-1950s. Hopper helped design systems that made it easier to program the UNIVAC, and she devoted her long career in computing to making computers more accessible and useful to nonspecialists. (Credit: Grace Murray Hopper/Smithsonian Institution).

The Whirlwind system was not widely adopted, however, as it was perceived to be wasteful of expensive computer resources. Programming computers remained in the hands of a priesthood of specialists who were comfortable with arcane machine codes. That had to change as computers grew more capable, the problems they were asked to solve grew more complex, and researchers developed compilers that were more efficient. The breakthrough came in 1957 with Fortran, a programming language IBM introduced for its Model 704 computer, and a major success. (It was also around this time that these codes came to be called “languages,” because they shared many, though not all, characteristics with spoken languages.) Fortran’s syntax—the choice of symbols and the rules for using them—was close to ordinary algebra, which made it familiar to engineers. And there was less of a penalty in overhead: the Fortran compiler generated machine code that was as efficient and fast as code that human beings wrote. IBM’s dominant market position also played a role in its success. People readily embraced a system that hid the details of a machine’s inner workings, leaving them free to concentrate on solving their own, not the machine’s, problems.

Fortran’s success was matched in the commercial world by COBOL (Common Business Oriented Language). COBOL owed its success to the U.S. Department of Defense, which in May 1959 convened a committee to address the question of developing a common business language; that meeting was followed by a brief and concentrated effort to produce specifications for the language. As soon as those were published, manufacturers set out writing compilers for their respective computers. The next year the U.S. government announced that it would not purchase or lease computer equipment unless it could handle COBOL. As a result, COBOL became one of the first languages to be standardized to a point where the same program could run on different computers, from different vendors, and produce the same results. The first recorded instance of that milestone occurred in December 1960, when the same program (with a few minor changes) ran on a UNIVAC II and an RCA 501.

As with Fortran, the backing of a powerful organization—in this case, the Department of Defense—obviously helped win COBOL’s acceptance. Whether the language itself was well designed and capable is still a matter of debate. It was designed to be readable, with English commands like “greater than” or “equals” replacing the algebraic symbols >, =, and the others. The commands could even be replaced by their equivalent commands in a language other than English. Proponents argued that this design made the program easier to read and understand by managers who used the program but had little to do with writing it. That belief was misplaced: the programs were still hard to follow, especially years later when someone else tried to modify them. And programmers tended to become comfortable with shorter codes if they used them every day. Programming evolved in both directions: for the user, English commands such as “print” or “save” persisted but were eventually replaced by symbolic actions on icons and other graphic symbols. Programmers gravitated toward languages such as C that used cryptic codes but also allowed them to get the computer to perform at a high level of efficiency.

As commercial computers took on work of greater complexity, another type of program emerged that replaced the human operators who managed the flow of work in a punched card installation: the operating system. One of the first was used at the General Motors Research Laboratories around 1956, and IBM soon followed with a system it called JCL (Job Control Language). JCL consisted of cards punched with codes to indicate to the computer that the deck of cards that followed was written in Fortran or another language, or that the deck contained data, or that another user’s job was coming up. Systems software carried its own overhead, and if it was not well designed, it could cripple the computer’s ability to work efficiently. IBM struggled with developing operating systems, especially for its ambitious System/360 computer series, introduced in 1964. IBM’s struggles were succeeded in the 1980s and 1990s by those of Microsoft, which produced windows-based systems software for personal computers. As a general rule, operating systems tend to get more and more complex with each new iteration, and with that come increasing demands on the hardware. From time to time, the old inefficient systems are pushed aside by new systems that start out lean, as with the current Linux operating system, and the lean operating systems developed for smart phones (although these lean systems, like their predecessors, get more bloated with each release) (see figure 3.2).

Figure 3.2

A large IBM System/360 installation. Note the control panel with its numerous lights and buttons (center) and the rows of magnetic tape drives, which the computer used for its bulk memory. Behind the control panel in the center is a punched card reader with a feed of fan-folded paper; to the right is a printer and a video display terminal. (Credit: IBM Corporation)

The Transistor

A major question addressed in this narrative is to what extent the steady advance of solid-state electronics has driven history. Stated in the extreme, as modern technology crams ever more circuits onto slivers of silicon, new devices and applications appear as ripe fruit falling from a tree. Is that too extreme? We saw how vacuum tubes in place of slower mechanical devices were crucial to the invention of the stored program digital computer. The phenomenon was repeated, around 1960, by the introduction of the solid-state transistor as a replacement for tubes.

The vacuum tubes used in the ENIAC offered dramatically higher speeds: the switching was done by electrons, excited by a hot filament, moving at high speeds within a vacuum. Not all computer engineers employed them; Howard Aiken and George Stibitz, for example, were never satisfied with tubes, and they preferred devices that switched mechanically. Like the familiar light bulb from which they were descended, tubes tended to burn out, and the failure of one tube among thousands would typically render the whole machine inoperative until it was replaced (so common was this need for replacement that tubes were mounted in sockets, not hardwired into the machine as were other components). Through the early twentieth century, physicists and electrical engineers sought ways of replacing the tube with a device that could switch or amplify currents in a piece of solid material, with no vacuum or hot filament required. What would eventually be called solid-state physics remained an elusive goal, however, as numerous attempts to replace active elements of a tube with compounds of copper or lead, or the element germanium, foundered.5 It was obvious that a device to replace vacuum tubes would find a ready market. The problem was that the theory of how these devices operated lagged. Until there was a better understanding of the quantum physics behind the phenomenon, making a solid-state replacement for the tube was met with frustration. During World War II, research in radio detection of enemy aircraft (radar) moved into the realm of very high and ultrahigh frequencies (VHF, UHF), where traditional vacuum tubes did not work well. Solid-state devices were invented that could rectify radar frequencies (i.e., pass current in only one direction). These were built in quantity, but they could not amplify or switch currents.

After the war, a concentrated effort at Bell Laboratories led to the invention of the first successful solid-state switching or amplifying device, which Bell Labs christened the “transistor.” It was invented just before Christmas 1947. Bell Labs publicly demonstrated the invention, and the New York Times announced it in a modest note in its “The News of Radio” column on July 1, 1948, in one of the greatest understatements in the history of technology. The public announcement was crucial because Bell Labs was part of the regulated telephone monopoly; in other words, the revolutionary invention was not classified or retained by the U.S. military for weapons use. Instead it was to be offered to commercial manufacturers. The three inventors, William Shockley, Walter Brattain, and John Bardeen, shared the 1956 Nobel Prize in physics for the invention.

It took over a decade before the transistor had a significant effect on computing. Much of computing history has been dominated by the impact of Moore’s law, which describes the steady doubling of the number of transistors on chips of silicon since 1960. However, we must remember that for over a decade prior to 1960, Moore’s law had a slope of zero: the number of transistors on a chip remained at one. Bringing the device to a state where one could place more than one of them on a piece of material, and get them to operate reliably and consistently, took a concentrated effort. The operation of a transistor depends on the quantum states of electrons as they orbit a positively charged nucleus of an atom. By convention, current is said to flow from a positive to a negative direction, although in a conductor such as a copper wire, it is the negatively charged electrons that flow the other way. What Bell Labs scientists discovered was that in certain materials, called semiconductors, current could also flow by moving the absence of an electron in a place where it normally would be: positive charges that the scientists called holes. Postwar research concentrated specifically on two elements that were amenable to such manipulation: germanium and silicon. The 1947 invention used germanium, and it worked by having two contacts spaced very near one another, touching a substrate of material. It worked because its inventors understood the theory of what was happening in the device. Externally it resembled the primitive “cat’s whisker” detector that amateur radio enthusiasts used through the 1950s (a piece of fine wire carefully placed by hand on a lump of galena), hardly a device that could be the foundation of a computer revolution.6

From 1947 to 1960 the foundations of the revolution in solid state were laid. First, the point-contact device of 1947 gave way to devices in which different types of semiconductor material were bonded rigidly to one another. Methods were developed to purify silicon and germanium by melting the material in a controlled fashion and growing a crystal whose properties were known and reproducible. Precise amounts of impurities were deliberately introduced, to give the material the desired property of moving holes (positive charges, or P-type) or electrons (negative charges, or N-type), a process called doping. The industry managed a transition from germanium to silicon, which was harder to work but has several inherent advantages. Methods were developed to lay down the different material on a substrate by photolithography. This last step was crucial: it allowed miniaturizing the circuits much like one could microfilm a book. The sections of the transistor could be insulated from one another by depositing layers of silicon oxide, an excellent insulator.

Manufacturers began selling transistorized computers in the mid-1950s. Two that IBM introduced around 1959 marked the transition to solid state. The Model 1401 was a small computer that easily fit into the existing punched card installations then common among commercial customers. Because it eased the transition to electronic computers for many customers, it became one of IBM’s best-selling products, helping to solidify the company’s dominant position in the industry into the 1980s. The IBM 7090 was a large mainframe that had the same impact among scientific, military, and aerospace customers. It had an interesting history: IBM bid on a U.S. Air Force contract for a computer to manage its early-warning system then being built in the Arctic to defend the United States from a Soviet missile attack (a follow-on to SAGE). IBM had just introduced the Model 709, a large and capable vacuum tube mainframe, but the air force insisted on a transistorized computer. IBM undertook a heroic effort to redesign the Model 709 to make it the transistorized Model 7090, and it won the contract.

The Minicomputer

The IBM 7090 was a transistorized computer like the radio was a “wireless” telegraph or the automobile was a “horseless” carriage: it retained the mental model of existing computers, only with a new and better base technology. What if one started with a clean sheet of paper and designed a machine that took advantage of the transistor for what it was, not for what it was replacing?

In 1957, Ken Olsen, a recent graduate of MIT, and Harlan Anderson, who had been employed at MIT’s Digital Computer Laboratory, did just that.7 Both had been working at the Lincoln Laboratory, a government-sponsored lab outside Boston that was concerned with problems of air defense. While at Lincoln they worked on the TX-0, a small computer that used a new type of transistor manufactured by the Philco Corporation. The TX-0 was built to test the feasibility of that transistor for large-scale computers. It not only demonstrated that; it also showed that a small computer, using high-speed transistors, could outperform a large mainframe in certain applications. Olsen and Anderson did not see the path that computers would ultimately take, and Olsen in particular would be criticized for his supposed lack of foresight. But they opened a huge path to the future, leading from the batch-oriented mainframes of IBM to the world we know today: a world of small, inexpensive devices that could be used interactively.

With $70,000 of venture capital money—another innovation—Olsen and Anderson founded the Digital Equipment Corporation (DEC, pronounced as an acronym), and established a plant at a nineteenth-century woolen mill in suburban Maynard, Massachusetts. Their initial product was a set of logic modules based on high-performance transistors and associated components. Their ultimate goal was to produce a computer, but they avoided that term because it implied going head-to-head with IBM, which most assumed was a foolish action. They chose instead the term programmed data processor. In 1960, DEC announced the PDP-1, which in the words of a DEC historian, “brought computing out of the computer room and into the hands of the user.”8 The company sold the prototype PDP-1 to the Cambridge research firm Bolt Beranek and Newman (BBN), where it caught the eye of one of its executives, psychologist J. C. R. Licklider. We return to Licklider’s role in computing later, but in any event, the sale was fortuitous (see figure 3.3).

Figure 3.3

Engineers at the Digital Equipment Corporation introducing their large computer, the PDP-6, in 1964. Although DEC was known for its small computers, the PDP-6 and its immediate successor, the PDP-10, were large machines that were optimized for time-sharing. The PDP-6 had an influence on the transition in computing from batch to interactive operation, to the development of artificial intelligence, and to the ARPANET. Bill Gates and Paul Allen used a PDP-10 at Harvard University to write some of their first software for personal computers. Source: Digital Equipment Corporation—now Hewlett-Packard. Credit: Copyright© Hewlett-Packard Development Company, L.P. Reproduced with permission.

In April 1965 DEC introduced another breakthrough computer, the PDP-8. Two characteristics immediately set it apart. The first was its selling price: $18,000 for a basic system. The second was its size: small enough to be mounted alongside standard equipment racks in laboratories, with no special power or climate controls needed. The machine exhibited innovations at every level. Its logic circuits took advantage of the latest in transistor technology. The circuits themselves were incorporated into standard modules, which in turn were wired to one another by an automatic machine that employed wire wrap, a technique developed at Bell Labs to execute complex wiring that did not require hand soldering. The modest size and power requirements have already been mentioned. The overall architecture, or functional design, was also innovative. The PDP-8 handled only 12 bits at a time, far fewer than mainframes. That lowered costs and increased speed as long as it was not required to do extensive numerical calculations. This architecture was a major innovation from DEC and signified that the PDP-8 was far more than a vacuum tube computer designed with transistors.
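The trade-off of a 12-bit word can be illustrated with a little arithmetic. A 12-bit word holds 4,096 distinct values, so any sum that exceeds that range has to be carried into a second word and handled in an extra step, which is one reason extensive numerical work was slower on such a machine. The following Python sketch shows the general idea only; the names `add_word` and `add_double` are invented for this example, and it does not reproduce the PDP-8's actual instructions.

```python
WORD_BITS = 12
WORD_MASK = (1 << WORD_BITS) - 1  # 4095, the largest value one 12-bit word can hold

def add_word(a, b, carry_in=0):
    """Add two 12-bit values; return the 12-bit result and the carry out (0 or 1)."""
    total = a + b + carry_in
    return total & WORD_MASK, total >> WORD_BITS

def add_double(a, b):
    """Add two non-negative integers below 2**24 as two 12-bit words each."""
    low, carry = add_word(a & WORD_MASK, b & WORD_MASK)            # low-order words first
    high, carry = add_word(a >> WORD_BITS, b >> WORD_BITS, carry)  # then high-order words plus carry
    return (high << WORD_BITS) | low, carry

print(add_word(4000, 200))    # prints (104, 1): the sum 4200 does not fit in one word
print(add_double(4000, 200))  # prints (4200, 0): two steps recover the full result
```

A machine with a longer word would finish the same addition in one step, at the price of wider and therefore more expensive circuits and memory.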

At the highest level, DEC had initiated a social innovation as significant as the technology. DEC was a small company and did not have the means to develop specific applications for its customers, as IBM’s sales force was famous for doing. Instead it encouraged the customers themselves to develop the specialized systems hardware and software. It shared the details of the PDP-8’s design and operating characteristics and worked with customers to embed the machines into controllers for factory automation, telephone switching, biomedical instrumentation, and other uses: a restatement in practical terms of the general-purpose nature of the computer as Turing had outlined in 1936. The computer came to be called a “mini,” inspired by the Morris Mini automobile, then being sold in the United Kingdom, and the short skirts worn by young women in the late 1960s. The era of personal, interactive computing had not yet arrived, but it was coming.

The Convergence of Computing and Communications

While PDP-8s from Massachusetts were blanketing the country, other developments were occurring that would ultimately have an equal impact, leading to the final convergence of computing with communications. In late November 1962, a chartered train was traveling from the Allegheny Mountains of Virginia to Washington, D.C. The passengers were returning from a conference on “information system sciences,” sponsored by the U.S. Air Force and the MITRE Corporation, a government-sponsored think tank. The conference had been held at the Homestead Resort in the scenic Warm Springs Valley of Virginia. The attendees had little time to enjoy the scenery or take in the healing waters of the springs, however. A month earlier, the United States and Soviet Union had gone to the brink of nuclear war over the Soviet Union’s placement of missiles in Cuba. Poor communications, not only between the two superpowers but also among the White House, the Pentagon, and commanders of ships at sea, were factors in escalating the crisis.

Among the passengers on that train was J. C. R. Licklider, who had just moved from Bolt Beranek and Newman to become the director of the Information Processing Techniques Office (IPTO) of a military agency called the Advanced Research Projects Agency (ARPA). That agency (along with NASA, the National Aeronautics and Space Administration) was founded in 1958, in the wake of the Soviet Union’s orbiting of the Sputnik earth satellites. Licklider now found himself on a long train ride in the company of some of the brightest and most accomplished computer scientists and electrical engineers in the country. The conference in fact had been a disappointment. He had heard a number of papers on ways digital computers could improve military operations, but none of the presenters, in his view, recognized the true potential of computers in military or civilian activities. The train ride gave him a chance to reflect on this potential and to share it with his colleagues.

Licklider had two things that made his presence among that group critical. The first was money: as director of IPTO, he had access to large sums of Defense Department funds and a free rein to spend them on projects as he saw fit. The second was a vision: unlike many of his peers, he saw the electronic digital computer as a revolutionary device not so much because of its mathematical abilities but because it could be used to work in symbiosis (his favorite term) with human beings. Upon arrival in Washington, the passengers dispersed to their respective homes. Two days later, on the Friday after Thanksgiving, Robert Fano, a professor at MIT, initiated talks with his supervisors to start a project based on the discussions he had had on the train. By the following Friday, the outline was in place for Project MAC, a research initiative at MIT to explore “machine-aided cognition” by configuring computers to allow “multiple access,” a dual meaning of the acronym. Licklider, from his position at ARPA, would arrange for the U.S. Office of Naval Research to fund the MIT proposal with an initial contract of around $2.2 million. Such an informal arrangement might have raised eyebrows, but that was how ARPA worked in those days.

Licklider’s initial idea was to start with a large mainframe and share it among many users simultaneously. The concept, known as time-sharing, was in contrast to the sequential access of batch operation. If it was executed properly, users would be unaware that others were also using the machine because the computer’s speeds were far higher than the response of the human brain or the dexterity of human fingers. The closest analogy is a grandmaster chess player playing a dozen simultaneous games with less capable players. Each user would have the illusion (a word used deliberately) that he or she had a powerful computer at his or her personal beck and call.
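
The idea behind time-sharing can be illustrated with a short Python sketch. It is not a reconstruction of any historical system: the user names, the job lengths, and the fixed time slice (the "quantum") are assumptions chosen for illustration, and the round-robin policy shown is only one simple way a shared machine might rotate among its users.

```python
from collections import deque

def round_robin(jobs, quantum):
    """Rotate a single processor among several users.

    jobs: dict mapping a (hypothetical) user name to the units of
          computing time that user's work requires.
    quantum: the largest slice of time any user gets per turn.
    Returns the order of service as a list of (user, units_run).
    """
    queue = deque(jobs.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)
        timeline.append((name, run))
        if remaining > run:
            # Unfinished work goes to the back of the line.
            queue.append((name, remaining - run))
    return timeline

# Three illustrative users sharing one machine.
timeline = round_robin({"alice": 3, "bob": 5, "carol": 2}, quantum=2)
for user, units in timeline:
    print(f"{user} runs for {units} unit(s)")
```

If each slice lasts only a small fraction of a second, every user is served again before noticing the wait, much as each opponent of the chess grandmaster sees a reply arrive before having decided on a next move.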

Licklider and most of his colleagues felt that time-sharing was the only practical way to evolve computers to a point where they could serve as direct aids to human cognition. The only substantive disagreement came from Wes Clark, who had worked with Ken Olsen at Lincoln Laboratories on transistorized computers. Clark felt this approach was wrong: he argued that it would be better to evolve small computers into devices that individuals could use directly. He was rebuffed by electrical and computer engineers but found a more willing audience among medical researchers, who saw the value of a small computer that would not be out of place among the specialized equipment in their laboratories. With support from the National Institutes of Health, he assembled a team that built a computer called the “LINC” (from Lincoln Labs), which he demonstrated in 1962. A few were built, and later they were combined with the Digital Equipment Corporation PDP-8. It was a truly interactive, personal computer for those few who had the privilege of owning one. The prevailing preference at the time, however, was still for time-sharing large machines; personal computing would not arrive for another decade.9 The dramatic drop in the cost of computing that came with advances in solid-state electronics eventually made personal computing practical, an opening exploited by young people like Steve Jobs and Bill Gates, who were not part of the academic research environment.

Clark was correct in recognizing the difficulties of implementing time-sharing. Sharing a single large computer among many users with low-powered terminals presented many challenges. While at BBN, Licklider’s colleagues had configured the newly acquired PDP-1 to serve several users at once. Moving that concept to large computers serving large numbers of users, beginning with an IBM and moving to a General Electric mainframe at MIT, was not easy. Eventually commercial time-sharing systems were marketed, but not for many years and only after the expenditure of a lot of development money. No matter: the forces that Licklider set in motion on that train ride transformed computing.

Time-sharing was the spark that set other efforts in motion to join humans and computers. Again with Licklider’s support, ARPA began funding research to interact with a computer using graphics and to network geographically dispersed mainframes to one another.10 Both were crucial to interactive computing, although it was ARPA’s influence on networking that made the agency famous. A crucial breakthrough came when ARPA managers learned of the concept of packet switching, conceived independently in the United Kingdom and the United States. The technique divided a data transfer into small chunks, called packets, which were separately addressed and sent to their destination and could travel over separate channels if necessary. That concept was contrary to all that AT&T had developed over the decades, but it offered many advantages over classical methods of communication, and remains the technical backbone of the Internet to this day.
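
The packet-switching technique described above can be sketched in a few lines of Python. This is an illustration only, not the ARPANET's actual packet format or protocol: the field names (`dest`, `seq`, `payload`), the destination label, and the packet size are assumptions, and shuffling the list merely stands in for packets arriving out of order after traveling over separate channels.

```python
import random

def packetize(message, size, dest):
    """Divide a message into separately addressed, numbered packets."""
    return [
        {"dest": dest, "seq": i, "payload": message[offset:offset + size]}
        for i, offset in enumerate(range(0, len(message), size))
    ]

def reassemble(packets):
    """Restore the original message, whatever order the packets arrived in."""
    ordered = sorted(packets, key=lambda p: p["seq"])
    return "".join(p["payload"] for p in ordered)

message = "packets may travel over separate channels"
packets = packetize(message, size=8, dest="host-B")  # "host-B" is a made-up address

# Simulate out-of-order arrival; the fixed seed keeps the run repeatable.
random.Random(0).shuffle(packets)

received = reassemble(packets)
print(received == message)
```

Because every packet carries its own address and sequence number, no dedicated end-to-end circuit has to be held open for the duration of the transfer, which is the point on which the scheme departed from classical telephone practice.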

The first computers of the “ARPANET” were linked to one another in 1969; by 1971 there were fifteen computers, many of them from the Digital Equipment Corporation, connected to one another. The following year, ARPA staged a demonstration at a Washington, D.C., conference. The ARPANET was not the sole ancestor of the Internet as we know it today: it was a military-sponsored network that lacked the social, political, and economic components that together make up the modern networked world. It did not even have e-mail, although that was added soon. The demonstration did show the feasibility of packet switching, overcoming considerable skepticism from established telecommunications engineers who believed that such a scheme was impractical. The rules for addressing and routing packets, which ARPA called protocols, worked. ARPA revised them in 1983 to a form that allowed the network to grow in scale. These protocols, called Transmission Control Protocol/Internet Protocol (TCP/IP), remain in use and are the technical foundation of the modern networked world.