5

The Microprocessor

By 1970, engineers working with semiconductor electronics recognized that the number of components placed on an integrated circuit was doubling about every year. Other metrics of computing performance were also increasing, and at exponential, not linear, rates. C. Gordon Bell of the Digital Equipment Corporation recalled that many engineers working in the field used graph paper on which they plotted time along the bottom axis and the logarithm of performance, processor speeds, price, size, or some other variable on the vertical axis. By using a logarithmic and not a linear scale, advances in the technology would appear as a straight line, whose slope was an indication of the time it took to double performance or memory capacity or processor speed. By plotting the trends in IC technology, an engineer could predict the day when one could fabricate a silicon chip with the same number of active components (about 5,000) as there were vacuum tubes and diodes in the UNIVAC: the first commercial computer marketed in the United States in 1951.1 A cartoon that appeared along with Gordon Moore’s famous 1965 paper on the doubling time of chip density showed a sales clerk demonstrating dictionary-sized “Handy Home Computers” next to “Notions” and “Cosmetics” in a department store.2
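The graph-paper reasoning above can be restated as arithmetic: on a semi-log plot the slope gives doublings per year, and its reciprocal is the doubling time. The following is a minimal sketch of that calculation, not a reconstruction of any engineer's actual chart. The function names and the two sample data points are hypothetical, chosen only for illustration; the 5,000-component target comes from the text.

```python
import math

def doubling_time(t0, n0, t1, n1):
    """Years per doubling implied by two (year, count) points on a semi-log plot."""
    slope = (math.log2(n1) - math.log2(n0)) / (t1 - t0)  # doublings per year
    return 1.0 / slope

def year_reaching(target, t0, n0, years_per_doubling):
    """Year at which the straight-line trend through (t0, n0) reaches `target`."""
    doublings_needed = math.log2(target / n0)
    return t0 + doublings_needed * years_per_doubling

# Hypothetical sample points (assumed, not taken from the text):
# 8 components in 1962 and 64 components in 1965.
period = doubling_time(1962, 8, 1965, 64)     # three doublings in three years
year = year_reaching(5000, 1965, 64, period)  # extrapolate to 5,000 components
print(f"doubling time: {period:.1f} years; 5,000 components around {year:.1f}")
```

With these assumed inputs the trend line doubles once a year and crosses 5,000 components a little after 1971. Different starting points or a slower doubling rate would shift that date, which is why the slope of the line mattered so much to the engineers who drew it.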

But even as Moore recognized, that did not make it obvious how to build a computer on a chip or even whether such a device was practical. That brings us back to the Model T problem that Henry Ford faced with mass-produced automobiles. For Ford, mass production lowered the cost of the Model T, but customers had to accept the model as it was produced (including its color, black), since to demand otherwise would disrupt the mass manufacturing process.3 Computer engineers called it the “commonality problem”: as chip density increased, the functions it performed were more specialized, and the likelihood that a particular logic chip would find common use among a wide variety of customers got smaller and smaller.4

A second issue was related to the intrinsic design of a computer-on-a-chip. Since the time of the von Neumann Report of 1945, computer engineers have spent a lot of their time designing the architecture of a computer: the number and structure of its internal storage registers, how it performed arithmetic, and its fundamental instruction set, for example.5 Minicomputer companies like Digital Equipment Corporation and Data General established themselves because their products’ architecture was superior to that offered by IBM and the mainframe industry. If Intel or another semiconductor company produced a single-chip computer, it would strip away one reason for those companies’ existence. IBM faced this issue as well: it was an IBM scientist who first used the term architecture as a description of the overall design of a computer. The success of its System/360 line came in large part from IBM’s application of microprogramming, an innovation in computer architecture that had previously been confined mainly to one-of-a-kind research computers.6

The chip manufacturers had already faced a simpler version of this argument: they produced chips that performed simple logic functions and gave decent performance, though that performance was inferior to custom-designed circuits that optimized each individual part. Custom-circuit designers could produce better circuits, but what was crucial was that the customer did not care. The reason was that the IC offered good enough performance at a dramatically lower price, it was more reliable, it was smaller, and it consumed far less power.7

It was in that context that Intel announced its 4004 chip, advertised in a 1971 trade journal as a “microprogrammable computer on a chip.” Intel was not alone; other companies, including an electronics division of the aerospace company Rockwell and Texas Instruments, also announced similar products a little later. As with the invention of the integrated circuit, the invention of the microprocessor has been contested. Credit is usually given to Intel and its engineers Marcian (“Ted”) Hoff, Stan Mazor, and Federico Faggin, with an important contribution from Masatoshi Shima, a representative from a Japanese calculator company that was to be the first customer. What made the 4004 and its immediate successors, the 8008 and 8080, successful was their ability to address the two objections mentioned above. Intel met the commonality objection by also introducing input–output and memory chips, which allowed customers to customize the 4004’s functions to fit a wide variety of applications. Intel met the architect’s objection by offering a system that was inexpensive and small and consumed little power. Intel also devoted resources to assist customers in adapting it to places where previously a custom-designed circuit was being used.

In recalling his role in the invention, Hoff described how impressed he had been by a small IBM transistorized computer called the 1620, intended for use by scientists. To save money, IBM stripped the computer’s instruction set down to an absurdly low level, yet it worked well and its users liked it. The computer did not even have logic to perform simple addition; instead, whenever it encountered an “add” instruction, it went to a memory location and fetched the sum from a set of precomputed values.8 That was the inspiration that led Hoff and his colleagues to design and fabricate the 4004 and its successors: an architecture that was just barely realizable in silicon, with good performance provided by coupling it with detailed instructions (called microcode) stored in read-only memory (ROM) or random-access memory (RAM) (see figure 5.1).

Figure 5.1

Patent for the microprocessor.
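The table-lookup addition that impressed Hoff in the IBM 1620 can be illustrated with a short sketch. It shows only the general idea of fetching precomputed sums from memory instead of computing them in logic. The table layout, the function name, and the use of decimal strings are assumptions made for illustration and do not reproduce the 1620's actual memory format or instruction set.

```python
# Precomputed table of single-digit sums, standing in for the values the
# machine kept in memory. Each entry maps a pair of decimal digits to
# (sum digit, carry). This layout is hypothetical.
ADD_TABLE = {
    (a, b): ((a + b) % 10, (a + b) // 10)
    for a in range(10)
    for b in range(10)
}

def add_by_lookup(x: str, y: str) -> str:
    """Add two non-negative decimal strings using only table lookups per digit."""
    width = max(len(x), len(y))
    x, y = x.zfill(width), y.zfill(width)
    carry = 0
    out = []
    # Work from the least significant digit to the most significant.
    for dx, dy in zip(reversed(x), reversed(y)):
        digit, carry_out = ADD_TABLE[(int(dx), int(dy))]
        if carry:
            # Fold in the incoming carry with a second lookup.
            digit, extra = ADD_TABLE[(digit, 1)]
            carry_out = max(carry_out, extra)
        out.append(str(digit))
        carry = carry_out
    if carry:
        out.append("1")
    return "".join(reversed(out))

print(add_by_lookup("1620", "4004"))
print(add_by_lookup("999", "1"))
```

The design trades logic for memory: the per-digit work is a fetch from a table instead of an adder circuit, which is the same bargain the paragraph above describes for microcode stored in ROM.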

The Personal Computer

Second only to the airplane, the microprocessor was the greatest invention of the twentieth century. Like all other great inventions, it was disruptive as much as beneficial. The benefits of having all the functionality of a general-purpose computer on a small and rugged chip are well known. But not everyone saw it that way. The first to be caught off guard, surprisingly, was Intel, where the microprocessor was invented. Intel marketed the invention to industrial customers and did not imagine that anyone would want to use it to build a computer for personal use. The company recognized that selling this device required a lot more assistance than was required for simpler devices. It designed development systems, which consisted of the microprocessor, some read-only and read-write memory chips, a power supply, some switches or a keypad to input numbers into the memory, and a few other circuits. It sold these kits to potential customers, hoping that they would use the kits to design embedded systems (say, for a process controller for a chemical or pharmaceutical plant), and then place large orders with Intel for subsequent chips. These development kits were in effect genuine computers and a few recognized that, but they were not marketed as such.

Hobbyists, ham radio operators, and others who were only marginally connected to the semiconductor industry thought otherwise. A few experimental kits were described in electronics hobby magazines, including 73 (for ham radio operators) and Radio Electronics. Ed Roberts, the head of an Albuquerque, New Mexico, supplier of electronics for amateur rocket enthusiasts, went a step further: he designed a computer that replicated the size and shape, and had nearly the same functionality, as one of the most popular minicomputers of the day, the Data General Nova, at a fraction of the cost. When Micro Instrumentation and Telemetry Systems (MITS) announced its “Altair” kit on the cover of the January 1975 issue of Popular Electronics, the floodgates opened (see figure 5.2).

Figure 5.2

The Altair personal computer. Credit: Smithsonian Institution.

The next two groups to be blindsided by the microprocessor were in the Boston region. The impact on the minicomputer companies of having the architecture of, say, a Data General minicomputer on a chip has already been mentioned. A second impact was in the development of advanced software. In 1975, Project MAC was well underway on the MIT campus with a multifaceted approach toward innovative ways of using mainframe computers interactively. A lot of their work centered around developing operating system software that could handle these tasks. A few miles west of the MIT campus, the research firm Bolt Beranek and Newman (BBN) was building the fledgling ARPANET, by then rapidly linking computers across the country (it was at BBN where the @ sign was adapted for e-mail a few years earlier). Yet when Paul Allen, a young engineer working at Honeywell, and Bill Gates, a Harvard undergraduate, saw the Popular Electronics article, they both left Cambridge and moved to Albuquerque, to found Microsoft, a company devoted to writing software for the Altair. Perhaps they did not know much about those other Cambridge projects, but at Harvard, Gates had had access to a state-of-the-art PDP-10 computer from the Digital Equipment Corporation, and it was on that PDP-10 computer that they developed the first software for the Altair in Albuquerque. The move to the west by these two young men was not isolated. In the following decades, the vanguard of computing would be found increasingly in Seattle (where Microsoft eventually relocated) and Silicon Valley (see figures 5.3 and 5.4).

Figure 5.3

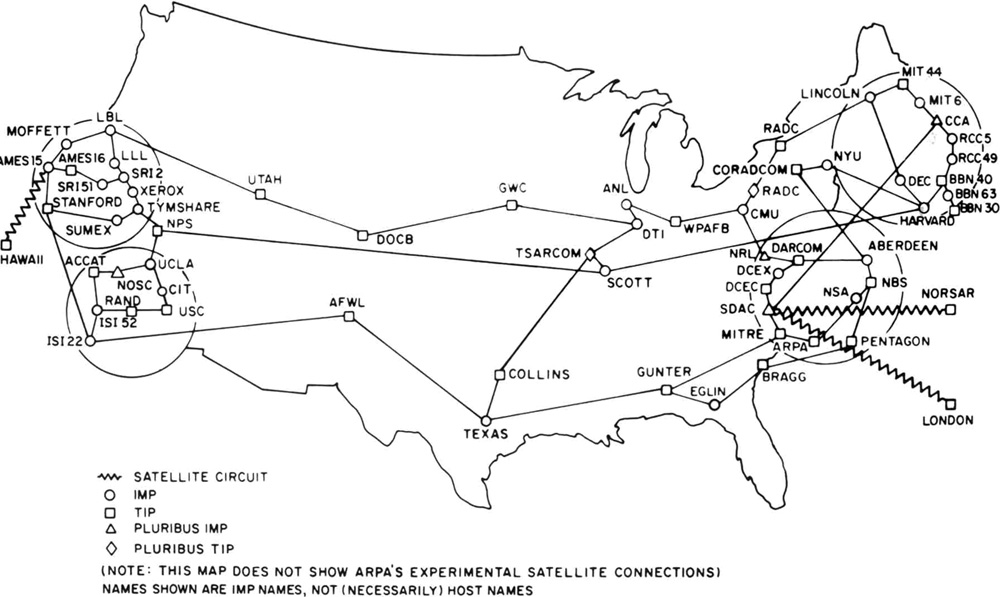

Map of the ARPANET, circa 1974. Notice the concentration in four regions: Boston, Washington, D.C., Silicon Valley, and southern California. (Credit: DARPA)

Figure 5.4

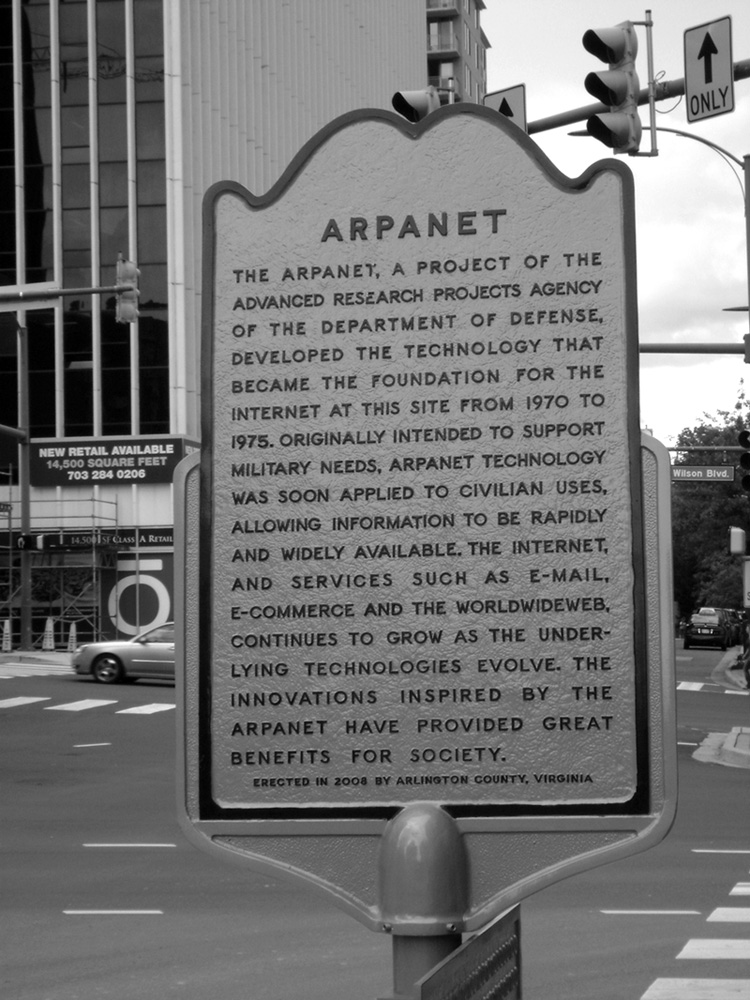

Historical marker, Wilson Boulevard, Arlington, Virginia, commemorating the location of ARPA offices, where the specifications for the ARPANET were developed in the early 1970s. The common perception of the Internet is that it resides in an ethereal space; in fact, its management and governance are located in the Washington, D.C., region, not far from the Pentagon. Photo by the author.

The Altair soon found itself competing with a number of microprocessor-based systems. Just as the availability of fast and reliable transistors after 1965 fueled a number of minicomputer companies, so too did the availability of microprocessors and associated memory chips fuel a personal computer firestorm. Some of them, like the IMSAI, were close copies of the Altair design, only with incremental improvements. Others used microprocessors offered by competitors to Intel. Among the most favored was a chip called the 6502, sold by MOS Technology (the term MOS referred to the type of circuit, metal-oxide-semiconductor, which lends itself to a high density of integration on a chip).9 The 6502 was introduced at a 1975 trade show and was being sold for only $25.00. Other personal systems used chips from Motorola and Zilog, whose Z-80 chip extended the instruction set of the Intel 8080 used in the Altair. Just as Intel built development systems to allow potential customers to become familiar with the microprocessor’s capabilities, so too did MOS Technology offer a single board system designed around the 6502. The KIM-1 was primitive, yet to the company’s surprise, it sold well to hobbyists and enthusiasts who were eager to learn more about this phenomenon.10 Radio Shack chose the Zilog chip for a computer, the TRS-80, which it sold in its stores, and a young Silicon Valley enthusiast named Steve Wozniak chose the 6502 for a system he was designing, later called the Apple 1.

The transformation of the computer from a room-sized ensemble of machinery to a handheld personal device is a paradox. On the one hand, it was the direct result of advances in solid-state electronics following the invention of the transistor in 1947 and, as such, an illustration of technological determinism: the driving of social change by technology. On the other hand, personal computing was unanticipated by computer engineers; instead, it was driven by idealistic visions of the 1960s-era counterculture. By that measure, personal computing was the antithesis of technological determinism. Both are correct. In Silicon Valley, young computer enthusiasts, many of them children of engineers working at local electronics or defense firms, formed the Homebrew Computer Club and met regularly to share ideas and individual designs for personal computers. The most famous of those computers was the Apple. It was the best known, and probably the best designed, but it was one of literally dozens of competing microprocessor-based systems from all over the United States and in Europe. Another legendary personal system that used the 6502 was the Commodore PET; its follow-on, the Commodore 64, used an advanced version of the chip and was one of the most popular of the first-generation personal computers. The chip was also found in video game consoles, special-purpose computers that enjoyed a huge wave of popularity in the early 1980s.

MITS struggled, but Apple, founded in 1976, did well under the leadership of Wozniak and his friend, Steve Jobs (a third founder, Ronald Gerald Wayne, soon sold his share of the company for a few thousand dollars, fearing financial liability if the company went under).11 The combination of Wozniak’s technical talents and Jobs’s vision of the limitless potential of personal computing set that company apart from the others. The company’s second consumer product, the Apple II, not only had an elegant design; it also was attractively packaged and professionally marketed. Apple’s financial success alerted those in Silicon Valley that microprocessors were suitable for more than just embedded or industrial uses. It is no coincidence that these computers could also play games, similar to what was offered by the consoles from companies like Atari. That was a function that was simply unavailable on a mainframe, for economic and social reasons.

Bill Gates and Paul Allen recognized immediately that the personal computer’s potential depended on the availability of software. Their first product for the Altair was a program, written in 8080 machine language, that allowed users to write programs in BASIC, a simple language developed at Dartmouth College for undergraduates to learn how to program. At Dartmouth, BASIC was implemented on a General Electric mainframe that was time-shared; the BASIC that Gates and Allen delivered for the Altair was to be used by the computer’s owner, with no sharing at all. That was a crucial distinction. Advocates of large time-shared or networked systems did not fully grasp why people wanted a computer of their own, a desire that drove the growth of computing for the following decades. The metaphor of a computer utility, inspired by electric power utilities, was seductive. When such a utility did come into existence, in the form of the World Wide Web carried over the Internet, its form differed from the 1960s vision in significant ways, both technical and social.

Other ARPA-funded researchers at MIT, Stanford, and elsewhere were working on artificial intelligence: the design of computer systems that understood natural language, recognized patterns, and drew logical inferences from masses of data. AI research was conducted on large systems, like the Digital Equipment Corporation PDP-10, and it typically used advanced specialized programming languages such as LISP. AI researchers believed that personal computers like the Altair or Apple II were ill suited for those applications. The Route 128 companies were making large profits on minicomputers, and they regarded the personal computers as too weak to threaten their product line. Neither group saw how quickly the ever-increasing computer power described by Moore’s law, coupled with the enthusiasm and fanaticism of hobbyists, would find a way around the deficiencies of the early personal computers.

While the Homebrew Computer Club’s ties to the counterculture of the San Francisco Bay Area became legendary, more traditional computer engineers were also recognizing the value of the microprocessor by the late 1970s.12 IBM’s mainframes were profitable, and the company was being challenged in the federal courts for antitrust violations. A small IBM group based in Florida, far from IBM’s New York headquarters, set out to develop a personal computer based around an Intel microprocessor. The IBM Personal Computer, or PC, was announced in 1981. It did not have much memory capacity, but in part because of the IBM name, it was a runaway success, penetrating the business world. Its operating system was supplied by Microsoft, which made a shrewd deal allowing it to market the operating system (known as MS-DOS) to other manufacturers. The deal catapulted Microsoft into the ranks of the industry’s wealthiest as other vendors brought out machines that were software-compatible clones of the IBM PC. Among the most successful were Compaq and Dell, although for a while, the market was flooded with competitors. In the 1970s, IBM had fought off a similar issue with competitors selling mainframe components that were, as they called them, “plug-compatible” with IBM’s line of products (so-called because a customer could unplug an IBM component such as a disk drive, plug in the competitor’s product, and the system would continue to work). This time IBM was less able to assert control over the clone manufacturers. Besides the deal with Microsoft, there was the obvious factor that most of the innards of the IBM PC, including its Intel microprocessor, were supplied by other companies. Had IBM not been in the midst of fighting off an antitrust suit, brought on in part by its fight with the plug-compatible manufacturers, it might have forestalled both Microsoft and the clone suppliers. It did not, with long-term implications for IBM, Microsoft, and the entire personal computer industry.

One reason for the success of both the IBM PC and the Apple II was a wide range of applications software that enabled owners of these systems to do things that were cumbersome to do on a mainframe. The first spreadsheet programs (VisiCalc for the Apple and Lotus 1-2-3 for the IBM PC) came from suppliers in Cambridge, Massachusetts; they were followed by software suppliers from all over the United States. Because of their interactivity, spreadsheet programs gave more direct and better control over simple financial and related data than even the best mainframes could give. From its base as the supplier of the operating system, Microsoft soon dominated the applications software business as well. And as with IBM, its dominance triggered antitrust actions against it from the U.S. Department of Justice. A second major application was word processing, again something not feasible on an expensive mainframe, even if time-shared, given the massive, uppercase-only printers typically used in a mainframe installation. For a time, word processing was offered by companies such as Wang or Lanier with specialized equipment tailored for the office environment. These were carefully designed to be operated by secretaries (typically women) or others with limited computer skills. The vendors deliberately avoided calling them “computers,” even though that was exactly what they were. As the line of IBM personal computers with high-quality printers matured and as reliable and easy-to-learn word processing software became available, this niche disappeared.

Xerox PARC

Apple’s success dominated the news about Silicon Valley in the late 1970s, but equally profound innovations were happening in the valley out of the limelight, at a laboratory set up by the Xerox Corporation. The Xerox Palo Alto Research Center (PARC) opened in 1970, at a time when dissent against the U.S. involvement in the war in Vietnam ended the freewheeling funding for computer research at the Defense Department. An amendment to a defense authorization bill in 1970, called the Mansfield amendment after Senator Mike Mansfield, who chaired the authorization committee, contained language that restricted defense funding to research that had a direct application to military needs. The members of that committee assumed that the National Science Foundation (NSF) would take over some of ARPA’s more advanced research, but that did not happen (although the NSF did take the lead in developing computer networking). The principal beneficiary of this legislation was Xerox: its Palo Alto lab was able to hire many of the top scientists previously funded by ARPA, and from that laboratory emerged innovations that defined the digital world: the windows metaphor, the local area network, the laser printer, the seamless integration of graphics and text on a screen. Xerox engineers developed a computer interface that used icons (symbols of actions) that the user selected with a mouse. The mouse had been invented nearby at the Stanford Research Institute by Douglas Engelbart, who was inspired to work on human-computer interaction after reading Vannevar Bush’s “As We May Think” article in the Atlantic.13 Some of these ideas of using what we now call a graphical user interface had been developed earlier at the RAND Corporation in southern California, but it was at Xerox PARC where all these ideas coalesced into a system, based around a computer of Xerox’s design, completed in 1973 and called the Alto.

Xerox was unable to translate those innovations into successful products, but an agreement with Steve Jobs, who visited the lab at the time, led to the interface’s finding its way to consumers through the Apple Macintosh, introduced in 1984. The Macintosh was followed by products from Microsoft that also were derived from Xerox, carried to Microsoft primarily by Charles Simonyi, a Xerox employee whom Microsoft hired. Xerox innovations have become so common that we take them for granted, especially the ability of word processors to duplicate on the screen what the printed page will look like: the so-called WYSIWYG, or “what you see is what you get,” feature (a phrase taken from a mostly forgotten television comedy Laugh In).

Consumers are familiar with these innovations as they see them every day. Even more significant to computing was the invention, by Xerox researchers Robert Metcalfe and David Boggs, of Ethernet.14 This was a method of connecting computers and workstations at the local level, such as an office or department on a college campus. By a careful mathematical analysis, in some ways similar to the notion of packet switching for the ARPANET, Ethernet allowed data transfers that were orders of magnitude faster than what had previously been available. Ethernet finally made practical what time-sharing had struggled for so many years to implement: a way of sharing computer resources, data, and programs without sacrificing performance. The technical design of Ethernet dovetailed with the advent of microprocessor-based personal computers and workstations to effect the change. No longer did the terminals have to be “dumb,” as they were marketed; now the terminals themselves had a lot of capability of their own, especially in their use of graphics.