I have used ELK for a long time, and it is part of my daily work. AWS Lambda logs are shipped to CloudWatch, but because my company uses ELK for centralized log management, I want to push all of the logs from CloudWatch to ELK.

Lambda logs can be shipped directly to Elasticsearch, or to Redis for Logstash to pick them up. A Logstash plugin is available that helps us ship the Lambda CloudWatch logs to ELK, and we will now look at how to configure it. We will use a Docker ELK image to set up ELK locally, connect to AWS CloudWatch through the Logstash plugin, and then push the logs to Elasticsearch. Let's go through the following steps:

- Get the Docker image for ELK and start a container, as shown in the following code. If you already have an ELK stack set up, you can skip this step:

$ docker pull sebp/elk

$ docker run --rm -it -p 5044:5044 -p 5601:5601 -p 9200:9200 -p 9300:9300 sebp/elk:latest

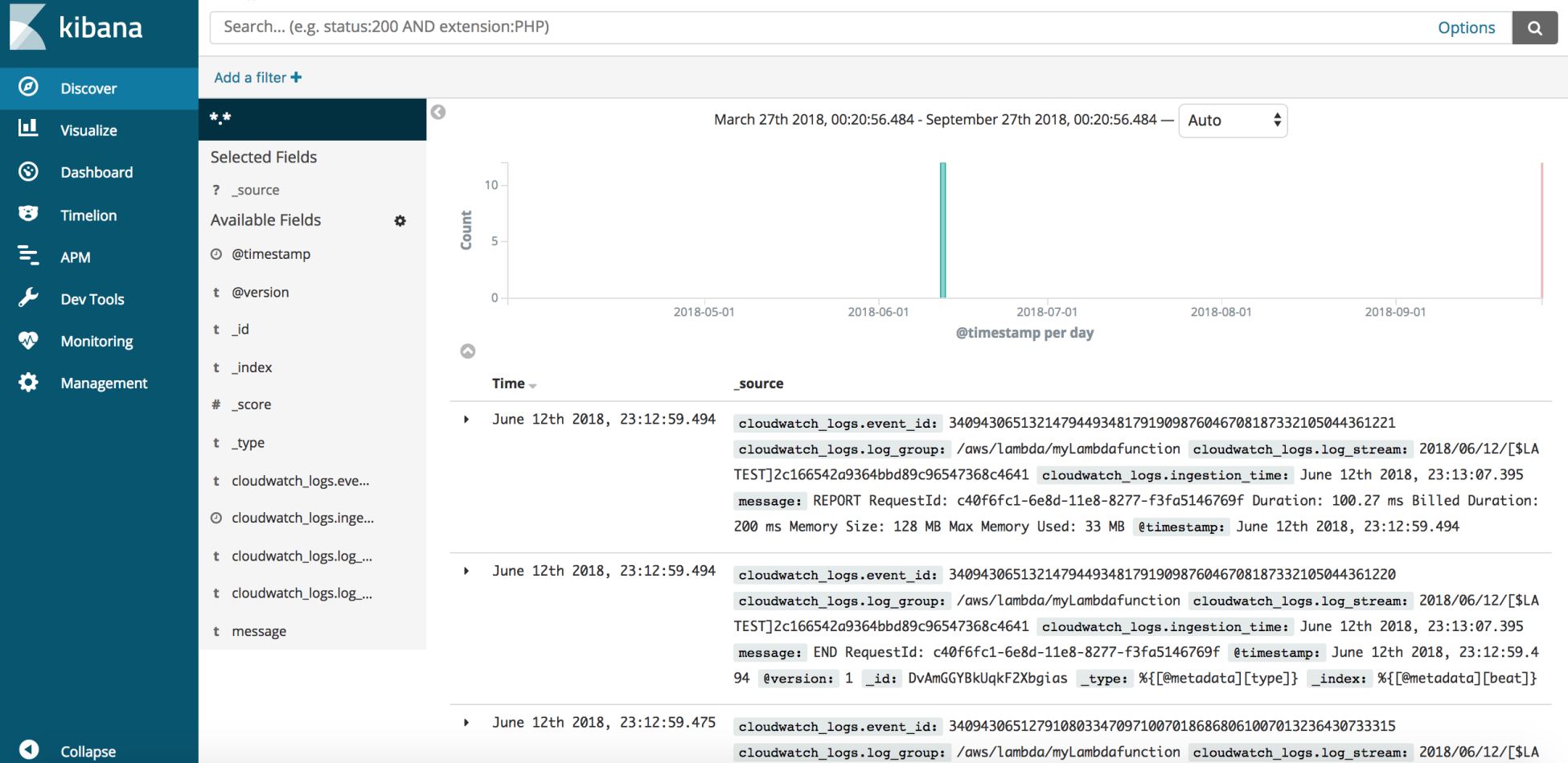

Now open http://localhost:5601/ in your browser, and you should see the Kibana dashboard.

- Install the logstash-input-cloudwatch_logs plugin. Open a shell in the Docker container that was created in the previous step (replace <container> with the container ID or name reported by docker ps) and install the plugin, as shown in the following code:

$ docker exec -it <container> bash

$ /opt/logstash/bin/logstash-plugin install logstash-input-cloudwatch_logs

Validating logstash-input-cloudwatch_logs

Installing logstash-input-cloudwatch_logs

Installation successful

- Once the plugin is successfully installed, we need to create a Logstash config file that ships the CloudWatch logs. Open an editor and create a file named cloud-watch-lambda.conf with the following configuration. Replace access_key_id and secret_access_key with the credentials of your AWS IAM user, and update log_group to match the log group of your Lambda function. I have added three grok filters:

- The first filter matches the generic log message, where we strip the timestamp and pull out the [lambda][request_id] field for indexing

- The second grok filter handles the START and END log messages

- The third filter handles the REPORT messages and gives us the most important fields: [lambda][duration], [lambda][billed_duration], [lambda][memory_size], and [lambda][memory_used]

input {
  cloudwatch_logs {
    log_group => "/aws/lambda/my-lambda"
    access_key_id => "AKIAXXXXXX"
    secret_access_key => "SECRET"
    type => "lambda"
  }
}

Save this file as cloud-watch-lambda.conf in the /etc/logstash/conf.d directory of the Docker container.
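To make the purpose of the three grok filters described above more concrete, here is a minimal Python sketch of the same idea. It uses plain regular expressions rather than Logstash grok. The line shapes it expects (a tab- or space-separated timestamp and request ID, and the usual START, END, and REPORT layouts) are assumptions about typical Lambda output, and the names `parse_lambda_line`, `GENERIC`, `START_END`, and `REPORT` are hypothetical helpers introduced only for this illustration.

```python
import re

# Assumed shape of a Lambda request ID (a UUID).
UUID = r"[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}"

# Filter 1 equivalent: "<ISO timestamp> <request id> <message>".
GENERIC = re.compile(
    rf"^(?P<timestamp>\d{{4}}-\d{{2}}-\d{{2}}T\S+)\s+"
    rf"(?P<request_id>{UUID})\s+(?P<message>.*)$",
    re.DOTALL,
)

# Filter 2 equivalent: START and END lines only carry the request ID.
START_END = re.compile(rf"^(?:START|END) RequestId: (?P<request_id>{UUID})")

# Filter 3 equivalent: REPORT lines carry duration and memory figures.
REPORT = re.compile(
    rf"^REPORT RequestId: (?P<request_id>{UUID})\s+"
    r"Duration: (?P<duration>[\d.]+) ms\s+"
    r"Billed Duration: (?P<billed_duration>[\d.]+) ms\s+"
    r"Memory Size: (?P<memory_size>\d+) MB\s+"
    r"Max Memory Used: (?P<memory_used>\d+) MB"
)


def parse_lambda_line(line):
    """Return the fields extracted from one log line, or {} if nothing matches."""
    m = REPORT.match(line)
    if m:
        return {
            "request_id": m["request_id"],
            "duration": float(m["duration"]),
            "billed_duration": float(m["billed_duration"]),
            "memory_size": int(m["memory_size"]),
            "memory_used": int(m["memory_used"]),
        }
    m = START_END.match(line)
    if m:
        return {"request_id": m["request_id"]}
    m = GENERIC.match(line)
    if m:
        return {
            "timestamp": m["timestamp"],
            "request_id": m["request_id"],
            "message": m["message"],
        }
    return {}


# Hypothetical sample line, written in the assumed REPORT layout.
sample = (
    "REPORT RequestId: 4f8c2b1e-1a2b-4c3d-9e8f-0a1b2c3d4e5f\t"
    "Duration: 2.55 ms\tBilled Duration: 100 ms\t"
    "Memory Size: 128 MB\tMax Memory Used: 18 MB"
)
print(parse_lambda_line(sample))
```

Trying the patterns against a few real lines copied from your own log group is a quick way to check the assumed layout before relying on the equivalent grok expressions in Logstash.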

- Now let's restart the Logstash service, as shown in the following code. Once this is done, you should be able to see that the logs have been pulled into our ELK container:

$ /etc/init.d/logstash restart

- Open http://localhost:5601 in the browser, and we should be able to see the logs streaming into ELK from CloudWatch. The results can be refined further with ELK filters and regular expressions.
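Besides checking in Kibana, the arrival of events can also be confirmed by querying Elasticsearch directly. The following is a minimal sketch that makes several assumptions: Elasticsearch is reachable without authentication on http://localhost:9200 (the port published by the docker run command earlier), and the events carry the type value "lambda" set in the input configuration. The function names `build_search_request` and `latest_lambda_events` are hypothetical.

```python
import json
import urllib.request


def build_search_request(base_url="http://localhost:9200", size=5):
    """Build a search request for the newest events whose type is "lambda"."""
    body = {
        "size": size,
        "query": {"match": {"type": "lambda"}},
        # unmapped_type keeps the sort from failing on indices that
        # have no @timestamp field.
        "sort": [{"@timestamp": {"order": "desc", "unmapped_type": "date"}}],
    }
    return urllib.request.Request(
        f"{base_url}/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def latest_lambda_events(base_url="http://localhost:9200", size=5):
    """Send the request and return the _source of each hit (needs a running stack)."""
    request = build_search_request(base_url, size)
    with urllib.request.urlopen(request, timeout=10) as response:
        payload = json.load(response)
    return [hit["_source"] for hit in payload["hits"]["hits"]]
```

Calling `latest_lambda_events()` while the container is running should return the most recent Lambda events as dictionaries. An empty list suggests that Logstash has not yet indexed anything from the log group.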