From the arrival of the Neanderthals in Europe through their replacement by anatomically modern humans and for millennia afterward, the food supply of any human community was limited to what the ecosystem offered. Paleolithic foragers could eat only what was there to be hunted, and cave art reflected their understanding of this fundamental fact. Not just the pattern of individual lives but the size and character of early settlements depended on foraging. Prehistorians reason that only small, mobile bands could sustain themselves by living directly off the land. Groups that were too large would be driven by hunger either to fragment or to starve. Sedentary groups of any size would soon exhaust the resources within reach and would have to move on or starve. Everything changed when farming developed. So dramatic was the change this invention brought about that it is often described as a Neolithic Revolution.

In recent decades new research into agricultural history has overturned the paradigm that many of us learned in school. We were taught that large-scale farming began in the Fertile Crescent when Mesopotamian chieftains consolidated their hold over multiple towns and created bureaucracies that coordinated the complex work of irrigating grain fields. Social hierarchies of specialists and multitudes of laborers, homogeneous crops of cereal grains—these were the origins of agriculture and civilization.1 This theory had its roots in nineteenth-century concerns and combined elements that preoccupied a broad spectrum of people. Focused as it was on Mesopotamia, the theory located the origins of agriculture and the origins of large-scale political organization in the same place, tying together accounts of the rise of domestication of crops and animals and the rise of the nation-state. The narrative of state formation, which was a major political preoccupation of post-Napoleonic Europe, was further linked with the origins of the coercive power of the community—that is to say, with the origins of war. For theorists imbued with the thought of that era, three distinct theoretical concerns were inextricably blended together. The history of cultivation and domestication, the rise of the nation-state, and the story of warfare are distinct, but nineteenth-century historiography joined them in a way that contemporary theorists must struggle to undo.

What prehistorians now believe is quite different. The agricultural revolution came about in fits and starts; it was by no means the creation of a single culture. Its great effect was achieved by the combination of scattered discoveries into a readily adaptable package of seeds, herds, and techniques of cultivation. This new history undermines the chronology that the earlier picture enshrined and breaks the links that nineteenth-century theory forged between domestication, state formation, and organized violence. Not every scholar has kept up with these developments. Some of the most influential writers on the Neolithic agricultural revolution, among them its harshest critics from both an ecological and an economic perspective, remain unaware of the new picture of the past that has emerged from the accumulated evidence of recent decades.2

While archaeologists and others were drawing this new picture of the agricultural revolution, researchers in other fields began to revise long-accepted views of the nutritional soundness of cultivated crops. What for generations had seemed to be a positive, progressive emergence from the dark uncertainties of the Paleolithic period was turned on its head. A utopian view of the Paleolithic is now far more common, along with a nagging sense that a lot of today’s problems can be traced to the Neolithic Revolution: the beginning of farming. I take issue with this view. This chapter chronicles both new thinking about the origins of agriculture and research centered on the nutritional controversy. In subsequent chapters, devoted more to culture than to the pragmatic conduct of agriculture, I chart the development of the First Nature view.

Jericho was the first archaeological site to indicate that the history of cultivation was more drawn out and more elusive than anyone had imagined. When excavators reached the earliest levels at the site, what they found contradicted everything they believed. Pottery had always been seen as a necessary part of the agricultural revolution. Theorists believed that it was required to store grain and oil and to carry water to houses and fields. Yet there was no pottery in the earliest levels at Jericho. The people of Jericho were sedentary and lived in houses surrounded by a high wall, but much of their diet came from hunting and foraging. Hunting gazelles and gathering wild plants went side by side with farming to produce a mixed economy halfway between that of a Paleolithic hunter-gatherer and a Neolithic farmer. The third feature of Jericho that confounded theory was the importance of trade to a society with no obvious social hierarchy. Theorists believed that long-distance trade, like urbanization, depended on large-scale agriculture and powerful rulers. Jericho showed trade in an entirely different light.

The ruins of ancient Jericho lie in a desert that is part of a geological feature known as a rift valley. It is an area where the large plates that underlie the continents are drifting apart. The Jordan Valley is part of an interconnected system that reaches into Africa. It runs north from the Red Sea, through the Dead Sea and the Sea of Galilee, to Syria and eastern Turkey. Easy passage through the Jordan Valley supported regional trade that was crucial to the city’s development. The long mountain chain that separates the Mediterranean coast from the rift creates a rain shadow that all but deprives the valley of moisture. Farming that depended directly on rainwater was impossible there. Still, the valley was not without water. The rain that fell in the mountains fed streams that flowed west toward the Mediterranean and others that flowed east down dry slopes into the fringes of the valley.3

Once rainwater reached the valley floor near Jericho, it flowed underground, where it nourished a desert spring called Ain es Sultan that provided water for the city year round. The spring, which continues to flow today, also offered a feature that was easy for archaeologists to overlook—namely, damp ground. Without irrigation or rainfall in the valley itself, the farmers of Jericho were able to cultivate grain in this very special and very limited environment. Once archaeologists recognized that cultivation at Jericho had depended on this unexpected resource, they began looking for similar environments.

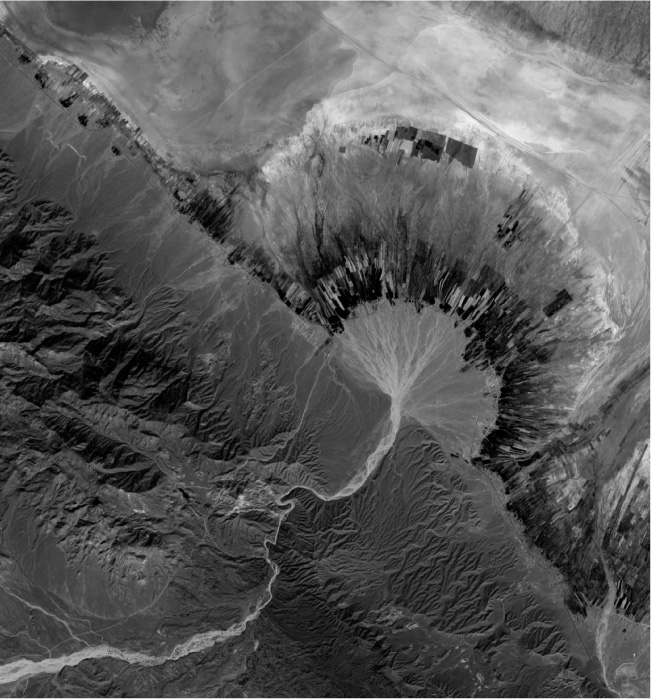

One of the most common landscape features in deserts is a fan-shaped deposit of soil at the mouth of a seasonal mountain stream. After the brief annual rains, these streams suddenly fill, and over a few days or weeks they transport not just water but enormous amounts of soil from slopes to the valley flatlands. Over time, these seasonal river courses, which are called wadis in Arabic, create fan-shaped deposits of mountain soil at their outlets. For a period of weeks or months after the water ceases to flow, the deep and rich soil that makes up these fans stays moist. Cultivated like the damp ground near the Jericho spring, they could be extremely productive.

Floods saturate the soil in spring, just at the moment that new growth can begin. The same inundations make yearly deposits of nutrient-rich soil while they sweep the fan clear of weeds and brush. Once the floods are finished, seeds can be broadcast on the wet soil or set into shallow pits or trenches traced with a stick. Soil moisture causes the seeds to sprout and continues to nourish the growing plants. Because these fields are continually reinvigorated by mineral-rich transported soils, they supported annual cultivation for hundreds or even thousands of years.4 On these sites, farming was sustainable, and its environmental impact was low.

The noted prehistorian Andrew Sherratt argues that the first agriculture in West Asia sprang up on sites of this kind. He calls this kind of agriculture “floodwater farming.” At Jericho and elsewhere, grain cultivation did not replace hunter-gatherer activities; cultivated grain supplemented the traditional diet. The grains that these special and unusual sites produced were, Sherratt maintains, a rare and therefore precious commodity. Since relatively few areas were suited to their production, for many hundreds of years grain was a luxury commodity rather than a staple and had a high barter value.

An alluvial fan is under cultivation today. (Photograph from NASA.)

Grain would have been traded for goods that the inhabitants of Jericho needed but did not produce locally. Among these goods was obsidian, a glasslike, durable volcanic rock that can be fractured to create exceedingly sharp-edged tools. Grain harvesting required sickles of some sort, and obsidian made keen blades. The early inhabitants of Jericho also traded for seashells from the Mediterranean. These more exotic shells supplemented the shells that could be gathered from the Dead Sea nearby. The inhabitants of Jericho offered grain in exchange for these goods, but also bitumen, a soft, sticky form of petroleum that welled from the earth near the site. Bitumen had a variety of uses. It served as a caulk and waterproof coating, and it was widely used as an adhesive to bind stone tool heads to wooden handles.

A lot of ecological diversity is packed into the Jordan Valley and its surrounding steppe and mountain habitats—a relatively small territory. The development of agriculture within this unique environment drew on this diversity. Transplanting grain from its native environment to the desert spring was the most obvious example of the benefits of connection. The movement of resources—grain and the knowledge of how to cultivate it, obsidian, bitumen—from one part of the region to another was also important. The exploitation of diversity and regional cooperation through trade were the keys to the process.5

Excavations at Abu Hureyra in Syria also revealed a Jericho-like mixture of hunter-gatherer lifestyles and emerging agriculture. The digging there was carried out in the early 1970s, and in 1974 the site was flooded when a dam was built on the Euphrates River. Prehistorians have continued to analyze the data obtained in these digs for forty years. The first occupation level at the site dates to 9500 BCE. At that early period, the only form of food production that can be documented in the archaeological record is hunting. Five hundred years later, there is evidence of plant gathering, and by 6400 BCE the local diet had expanded to include hunted gazelle, foraged plants, and some cultivated cereals. One thousand years later, the residents were growing peas and beans along with cereals.6

The food supply at the equally precocious Anatolian city Çatalhöyük was similar to one part of the diet that sustained Jericho. In the earliest phases of city life the meat of wild aurochs was the mainstay (the aurochs is the wild ancestor of the ox). Excavations in the 1960s also uncovered evidence of widespread and diverse trade.7 The quantity and quality of the imported goods that the city could afford pose the question of what it had to offer in exchange. Noting that the skulls and bones of aurochs preserved in the site became smaller over time, Sherratt and others have suggested that Çatalhöyük may have been the first place where wild aurochs were domesticated. Over generations, these wild cattle went through a series of genetic changes, becoming less robust and less aggressive. These useful beasts were what the city had to offer in exchange for imported goods. When domestication of cattle became a widespread practice, the city lost its commercial edge and simply vanished.

Located in the highlands of what is now Turkey, the abandoned city of Çatalhöyük was buried in two enormous mounds that cover an area of more than thirty acres.8 James Mellaart, the first excavator, identified ten layers of mud-brick cities piled one on top of the other and indeterminate layers beneath separating level X from virgin soil. Level X, the earliest distinguishable layer, has been dated to 6400 BCE. The highest level at the site dates to 5700 BCE. After that, the site was abandoned for five thousand years, then reoccupied in the late classical period.9

As Çatalhöyük emerged from the ground during the first excavations, it soon became clear that nothing like it had ever been seen before. (The sites that anticipate the architecture and culture of Çatalhöyük were excavated later.) The complex had been conceived as a single architectural entity and laid out in an asymmetrical grid. The houses had no doorways, and there were no streets. Entry to each house was through a hatch in the roof. The city was higher in the middle and lowest at the outer edge. The walls of the outermost houses formed the city boundary.

The degree of coordination among the individual housing units was high. Builders used wooden forms to create mud bricks from which every house was built. The forms were of uniform dimensions, so bricks throughout the city were of the same size. Houses could be bigger or smaller, but all were designed in the same way. From the roof hatch a ladder angled down to rest against the southern interior wall, where the hearth and the oven were placed. There were platforms of different sizes—probably for sleeping and resting—set into the floor against the north wall. In many houses, bodies have been discovered, buried beneath the floors. Males were buried under the easternmost platform and females under a platform to its west. The westernmost platform in a house could cover the remains of either men or women. These segregated burials suggest that the platforms were perhaps rigorously assigned to either men or women.10

Plaster-covered interior walls and successive layers of replastering suggest that the houses lasted on average about a hundred years before becoming unstable. Vertical buttresses, often painted red, braced some walls. Wooden beams, typically salvaged from abandoned houses, supported roofs. Since traffic within the town passed across the rooftops, there was no space between adjacent houses. Intermittent openings seem to have served as dumps for waste and debris.

Excavations at Jericho, Çatalhöyük, and Abu Hureyra uncovered signs of early farming and animal domestication.

While some houses were bigger than others, their essentially random placement within the city suggests to the excavators that their scale reflected family size rather than family status. No palace complex was discovered, and no quarter of the city appears to have been devoted to people of a superior social rank. The excavators also failed to find evidence of occupational specialties.11

Like the cave painters of the Paleolithic, the men and women who lived in Çatalhöyük used wall art to represent the world as they understood it. Scattered among the houses were buildings identified by the excavators as shrines. Structured like houses, the shrines were distinguished by their decoration. One of the most elaborate shrines was uncovered at level VII.9.12 The main room of the shrine seems designed to support multiple and complex cult activities. Its main decorations are sculpted aurochs’ heads. There are subdivisions of the walls, depressions and platforms on the floor, and multiple low entrances to secondary rooms. The east wall of the room, for example, is subdivided by two buttresses into three sections. Each of the buttresses supports a large idealized bull’s head crafted from clay plaster. A shelf runs the full length of the wall, and it supports a third, smaller horned bull’s head between two larger ones. There is a shallow depression in the floor slightly off center in front of these bull masks. A small secondary depression is next to it. A tiny opening in the wall under the shelf leads to a small adjoining room. A low doorway—typical of the sort that leads to household storage rooms—opens in the near corner of the south wall.

The opposite wall of the shrine is subdivided again into three sections. Three superimposed bull masks with widespread wavy horns decorate one of the buttresses. A single bull’s head with two sets of horns juts from the second buttress, and a third bull’s head is centered in the open space between them. There are no openings or floor depressions here. Two conventional low doors in the north wall open into a shallow second room.

In another shrine from the same level (VII.35) the repertoire of decoration is slightly different. Bulls’ heads are combined with idealized pairs of breasts arranged both horizontally and vertically on the wall. The nipples have been replaced by vultures’ beaks.13 One of the most highly decorated bull shrines was uncovered at the somewhat later level VI. There, multiple altars with sweeping bulls’ horns face the corners of a room where one of the buttresses is extended by a benchlike projection flanked by seven sets of bulls’ horns.14

The activities that took place in the shrines can only be imagined. Sherratt emphasized in his late work the continuity between the first phase of agriculture, domestic architecture, and a forager’s worldview.15 At Çatalhöyük settlers continued to depend on animals for their livelihood and passed fairly seamlessly from foraging to animal domestication. They continued to understand and symbolize their world through animal images. Their cults linked symbols of power, death, and fertility—animal images and images of women—in architectural settings. The vitality of the city and the power of animals were interdependent in their minds.

What became of all these communities? Abu Hureyra, Jericho, and Çatalhöyük were abandoned many millennia ago. It seems clear that resource depletion, a factor repeatedly invoked in modern critiques of ancient agricultural civilizations, was not the problem for any of them. The causes were not ecological but economic and technological. Jericho thrived on floodwater farming as long as domesticated grains were luxury trade goods. Low annual production made the city noncompetitive when new methods of farming made grain a commodity. Çatalhöyük grew rich on the obsidian trade, which failed when materials like fired clay and metal replaced stone. Its other source of wealth, a monopoly on the production of domestic cattle, was short-lived. These sites were abandoned because their success depended on products that lost their market and on farming and herding that were limited in scope. There is no evidence that they depleted the soils or the herds on which their livelihood depended.

For Sherratt, the eminent reviser of the early history of agriculture, the important factor is not domestication in and of itself. Grains transformed from a forager’s resource into a farmer’s staple were important but did not cause the Neolithic Revolution.16 That revolution rested on the combination of single domesticates into a larger pattern of cultivation. This combination depended on the geographical diversity of the Jordan Valley, where a variety of cultures exploiting different ecosystems traded goods and technologies. The resulting synergy produced and combined technological and cultural advances that together made the Neolithic Revolution.17

The Mediterranean founder crops, or “primary domesticates,” are few in number. They include two or three varieties of wheat along with barley, lentils, peas, chickpeas, and bitter vetch. Rye was cultivated at sites on the fringes of the Fertile Crescent, but it was not a large element in the regional diet. All these crops were descendants of wild grasses that foragers, like those living in the earliest period at Jericho and Abu Hureyra, had gathered for hundreds of years. Edged tools for cutting the stalks of wild grains and grinders for transforming their seed into meal existed long before the grasses became domesticated. Both technologically and temporally, the transition from a forager’s lifestyle to that of a cultivator was slow and incremental.

As foragers morphed into farmers, the wild plants they cultivated changed as well. Wild plants that are adapted to their environment have genetic profiles that match the climate and soil they depend on. When farmers cultivate these wild grasses in a new environment, they replace those natural conditions with artificial ones. The change in environment does not stop the genetic process, but it introduces new conditions that redirect it. Many traits that existed before remain essential to a crop’s success, but some features that helped the plant survive in the wild are not favored by cultivation, so over time these diminish or disappear altogether. Alterations of this kind are what make cultivated grains different from their wild progenitors and what make domestication traceable in the archaeological record.

In the wild, ripe seeds that fall from a grass germinate at different rates depending on the thickness of their husks. Some sprout during the next season when conditions are right, but others lie in the soil for years before germinating. This variability in the pace of germination allows wild grasses to hedge their bets. The next growing season might be a good one, or it might not. Thin-husked seeds that sprout then may not mature, but thicker-husked, slower-germinating seeds that can wait out one or more summers may survive to sprout later on. In a farmer’s field, however, seeds that do not sprout in the year they are planted rarely get the chance to produce offspring. The rhythm of sowing and harvesting favors quick-sprouting thin-husked grasses.

Thinner-husked, faster-germinating seeds are richer in starch, whereas thicker-husked seeds have more proteins and fatty acids. By favoring faster-germinating seeds, farmers produce domesticated grains that are starchier and lower in oils and proteins. Seed for seed, they are less nutritious than their wild forms. Domestication makes up for this drop in the nutritional value of a single seed by vastly increasing the available supply of grain, but the subtle switch from protein-based to starch-based nutrition poses metabolic problems for some humans.

Harvesting also shapes the crop. Wild plants must drop their seeds so that they can sprout, but with cultivated grasses, only those seeds that remain on the stalk at harvest time are gathered and reseeded. Grains that mature slowly are infertile when harvested early and do not sprout if sown as seed. Simply by sowing and harvesting, Neolithic farmers over many generations changed wild grasses into crops that were different from their wild forms.18

The thousands of modern varieties and hybrids of the Fertile Crescent founder crops feed much of the world today. Foods like wheat, olives, and wine are our strongest and most intimate link to the deep past of the Mediterranean. But as difficult as it might be to imagine, the world’s entire diet today remains rooted in a limited number of Neolithic events that occurred in different parts of the globe.19 The full range of founder crops from all the Neolithic centers feeds every person on earth. The biggest component of the contemporary world diet that owes its origins to the Mediterranean region is wheat. Although there are now many varieties of wheat, descendants of the earliest cultivars are still widely grown. Triticum dicoccum, or emmer wheat, is the domesticated descendant of a wild grass called Triticum dicoccoides. According to the food authority Alan Davidson, in the modern world it is grown primarily to feed animals, “although good bread and other baked items could be made from it,” as they undoubtedly were for many thousands of years.20 Triticum monococcum, or einkorn wheat, which many believe is the oldest variety of wheat in cultivation, still grows in poor soils in many parts of the Mediterranean littoral. Modern wheats would not flourish in this environment, but einkorn wheat is one of the most grasslike in its ability to tolerate drought and low levels of soil nutrients.

Today the most widely grown wheat is Triticum aestivum, spring or winter wheat. This variety is called a hard wheat not because its kernels are particularly tough but because its seeds contain a lot of the protein that forms gluten. Gluten binds the ingredients of bread together in an elastic mass that stretches around the bubbles of carbon dioxide exhaled by yeast in the loaf. Durum wheat, as its name suggests, actually does have hard kernels (durum is Latin for “hard”). Ground durum wheat produces semolina, the basis of couscous, the North African staple, and the preferred flour for making pasta.

Barley may have been collected and cultivated before wheat. Stores of wild barley gathered ten thousand years ago were uncovered by excavators in Syria. Barley became the main crop of the Fertile Crescent partly because of its tolerance for salty soils, and its cultivation spread both east and west. It grew in Spain seven thousand years ago, in India five thousand years ago, and in China four thousand years ago. Barley contains little gluten and is difficult to bake into yeasty loaves. It was boiled and eaten like oatmeal or baked into flatbreads. Some beer was made with barley. Today most barley is grown as animal feed, but it is still used in soups in the West. In Tibet, where the cold climate favors this hardy grain, roasted and ground barley is a primary food.

Most of the commodity foods of the modern world—foods that are mass-produced and unspecialized—are cereals or tubers. Legumes like peas and beans, which played a major role in early cultivation, are now only secondary food crops. Both farmers and dieticians know how unfortunate this is. Unlike cereals, which deplete the soil, legumes collect nitrogen—a vital soil nutrient—in nodules on their roots and restore it to the soil as they decompose. Legumes are nutritious in themselves, but their proteins also complement those in grains. Wheat contains all the major amino acids—the building blocks of protein—that humans need except lysine. Legumes are rich in lysine, so a diet that combines grains and legumes is nutritionally complete. Vegetarians recognize this fact, as do the millions worldwide whose diet rests on rice and beans or a similar combination. The founder legumes are the common or garden pea, the lentil, and the chickpea, along with another member of the pea family that people seldom eat today, bitter vetch.

Even though cereal farming was important in the Mediterranean basin, agriculture there came to be focused on a different suite of crops and crop technologies.21 The intensive and widespread cultivation of olives, grapes, and figs has been fundamental to Mediterranean agriculture since its first development in the late Neolithic. The widespread creation of terraced hillsides for the cultivation of these three crops has had a profound effect on the regional landscape.

The range of the olive tree virtually defines the limits of the Mediterranean region. Three categories of olive-related trees exist around the Mediterranean today, and their proliferation and variety made the job of finding the origin of the olive difficult. The oleaster is the indigenous species from which the cultivated olive was developed. Some modern oleasters, however, are actually the offspring of domestic olives—a kind of genetic return to the wild. During each ice age, the oleasters of the Mediterranean, like every other plant and animal species that survived, were forced into isolated refuges. Repeated glaciations and retreats led to the creation of distinct regional varieties of the plant. Given these complexities, it is no surprise that the genetic history of the olive tree is vexed. It appears to be the case that cultivated olives are closer genetically to the oleasters of the eastern Mediterranean than to those of the western part of the region. This suggests that the cultivated olive originated in the east and spread through the Mediterranean basin in much the same way as cereal crops.

The genus Ficus, to which the domestic fig belongs, thrives in tropical or semitropical climates worldwide. The historical domestic fig comes from a wild variety of West Asian origin called the caprifig. The botanical name of one domestic variety, Ficus carica, reflects the Greek belief that Caria in West Asia was the home of the fig. It is more likely that the city on the western coast of modern Turkey was only a way station on the pathway of the domestic fruit, not its point of origin.

Like the fig, the grape exists in many varieties worldwide. The particular variety that flourished in the Mediterranean, Vitis vinifera, the wine grape, is indigenous to areas east of modern Turkey and south of the Black Sea. Both genetic and archaeological evidence confirm the long-held view that grape cultivation began in this area and spread both around the Mediterranean toward Spain and eastward toward China. Recently the picture has been complicated by genetic studies suggesting that crosses with wild grapes may have occurred along the way that produced a measurable genetic difference between the grape varieties of the eastern and the western Mediterranean.22

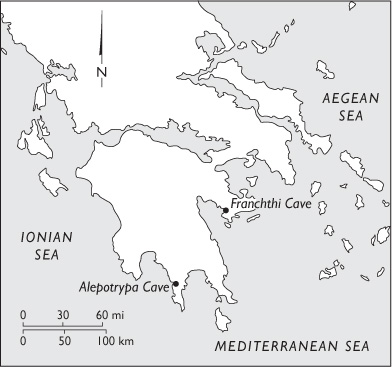

Scientists continue to argue over the exact routes that led to the near universal adoption of agriculture in West Asia, Europe, and North Africa. One of the few places in the Mediterranean basin where the transition from foraging to farming can be studied systematically is Franchthi Cave in southern Greece. A decade of excavations begun there in 1967 revealed an incredibly long history of repeated occupation. The first datable use of the cave occurred more than twenty thousand years ago. From then on, hunter-gatherers visited the cave from time to time. The bones of the animals they killed and ate show a gradual change in their quarry as the climate cooled, then warmed. Twenty thousand years ago, they ate more wild horses than anything else. By the end of the Paleolithic, some ten thousand years ago, red deer were their most important prey. They also foraged for wild plant foods. By about 11,000 BCE, they had discovered wild stands of pistachios and almonds and ate nuts along with wild vetch and lentils.23

Deer and small animals remained a major part of their diet in the post-glacial period. Fish from the Mediterranean played an increasing part, and the size of some of the fish they were catching suggests that they had boats that could take them into deep water. Pieces of obsidian from the island of Melos, which could only have come by sea, reinforce this notion. Wild oats and barley became a common part of the local diet, and stones to grind them became a common implement.

The Neolithic layers at the cave are sharply divided from the older remains beneath them. In the Neolithic layers the skeletons of domestic sheep and goats show up. Domesticated wheat, barley, and lentil abound, and a variety of new stone tools make their appearance. After a relatively short delay, pottery is present. When these tools and fruits of cultivation make their debut, evidence of the traditional diet vanishes. There are no more seeds from the wild varieties of oats, barley, lentils, and peas that had been staples of the foragers’ diet.

Franchthi Cave is at a crossroads on a major cultural highway. From this exceptionally well-documented and thoroughly published site, it is possible to get a sense of the revolution that passed through in Neolithic times. There is no question that the transition from foraging to cultivation was relatively swift. Nor is there any doubt that the particular grains, pottery, and domestic animals that suddenly show up in the Franchthi subsoil are just like the ones found to the east. The Neolithic Revolution was undoubtedly an easy sell at Franchthi. Unfortunately it is still hard to tell exactly how the change came about. In the continuity between wild and domesticated grains, some researchers see evidence that the inhabitants of the region turned from gathering to cultivating local grasses.24 For other specialists, the sudden appearance of the full Neolithic package strongly suggests, if not colonization, at least an undeniable influence from the east. Did traders in boats bring the knowledge of farming from the east coast of the Mediterranean to this spot? Or did the same boats bring colonists from the east equipped with all the multiple parts of the Neolithic package? DNA research, which has not been published for Franchthi, might resolve some of these questions.

Floodwater farming of the sort that sustained Jericho and Abu Hureyra is rarely possible. Alluvial fans and similar wet spots were and are few and far between in the arid Levant. Domestication of the kind that the residents of Çatalhöyük practiced was not adaptable to all climates either. Natural grasslands provided seasonal food for domestic cattle just as they did for wild cattle, but pasturing cows in other types of terrain was difficult. During the second phase of the agricultural revolution, what Andrew Sherratt called the “Secondary Products Revolution,” these problems were solved by the exploitation of new energy resources and the opening of new ecosystems to farming.

Both developments grew out of and depended on the millennial history of the first agricultural revolution, and both relied on the combination of tools and practices that characterized this epoch. The first-phase “Neolithic package” had bundled cereal cultivation and limited domestication with village architecture and the use of pottery, although not every site made use of every part of the suite of crops and technologies. The second phase added two elements that overcame the limits of floodwater farming and turned agriculture into an ever more widely exploitable resource. The plow and cart pulled by draft animals were one. The other was water management techniques, which made farming flexible and adaptable over a range of ecosystems. As agriculture developed, differences of climate and differences of ecology created a technological divide between cultivators who used groundwater resources—rivers, streams, springs, and lakes—and cultivators who depended on rainfall.

While tradition had long pointed to cereal cultivation as the basis of the Neolithic Revolution, Sherratt argued that cereal cultivation became widespread not because of any improvement in grain stock but because of a magnification of the power available to cultivators. Digging sticks were sufficient for seeding the relatively small areas that floodwater farmers exploited. The much larger areas that irrigation opened up for cultivation required a more powerful tool. The ard, sometimes called the scratch-plow, filled the bill. Formed from a crooked branch with a stubby spur to cut into the earth, the ard created a shallow channel where seeds could be sown.

Humans can pull or drive shallow plows, but animals do a much better job. They are heavier and more muscular, and their skeletons are better framed for pulling. Getting an animal to perform this or any task, however, was a formidable challenge. At first herders must have maintained an uneasy distance between themselves and the dangerous and excitable cattle that they managed to impound. Herding then played the role of modern canning or refrigeration: it kept the meat fresh until it could be used. As the tradition of herding continued, animals became smaller and more docile. Herders became more adept. Eventually it was possible to work more closely with the animals. The ox-powered plow can be heavier and dig deeper than any plow that humans can pull. It can be used to till large fields in the short period of time that seasonal conditions impose. And with an oxcart, the harvest is gathered and transported efficiently.

Pulling and carrying are not the only things that make domestic animals useful. Goats and sheep produce wool that can be spun into yarn and woven into cloth. In the modern world of synthetic fibers, this may seem inconsequential, but in the ancient world, the exploitation of wool expanded the range of garments people could wear and dramatically increased the range of ecosystems that could produce useful products for home consumption and for trade. Before sheep and goats were domesticated, linen was the only cloth manufactured in the Mediterranean. Linen is a lightweight, generally loose-textured cloth that makes clothes suited to warm climates, but it has real disadvantages. Linen is produced from flax, a cultivated plant that competes with food crops for field space and labor resources. Wool is easier to spin into thread than flax and produces heavier yarn and cloth with greater insulating qualities. New raw wool, rich in lanolin, sheds water. Unlike many other fibers, wool remains a good insulator even when it is soaking wet. Because wool makes warm clothing, it extends the range of habitable climates. And because the animals that produce wool, goats and sheep, can live happily in climates that have short growing seasons, raising them increases the range of habitats that can be productive without impinging on scarce arable land, as flax does.

The life of the shepherd is very different from that of the farmer. Farmers live in a village and tend nearby fields. When the fields are idle or fallow, they attend to other tasks that probably do not take them far from home. Shepherds, like traditional foragers, are mobile. The sheep and goats move from one pasture to another and may range over long distances.25 In many Mediterranean economies, flocks have for millennia migrated from low-altitude winter pastures to mountain meadows, where the animals fatten on grass available only during the short summer. This seasonal pattern, called transhumance, provided one of the background rhythms to Mediterranean life from the third century BCE to the twentieth century CE. Though the pattern is now almost extinct, vestiges of it can still be seen in central Italy, southern France, North Africa, and the Levant. Nomadic herders range even more widely. Exploiting the seasonal availability of forage in harsh climates, nomads travel to widely scattered sites. Unlike the pattern of transhumance, which is repeated every year, the nomad's route may trace a lifelong pathway with few repeats.

The activities of shepherds, nomads, and farmers combined to produce a regional economy, and the three groups lived in a symbiotic relationship that was maintained through trade. Their relationship duplicated the geographical relationship among climate zones and cultures that characterized the Jordan Valley and its surroundings. The relationship was easily adaptable to any mix of flatland for grain cultivation and highlands for pasturing. From its point of origin in a particular regional geography, it became a concept, a widely applied system of land use. As such, it was exported regionwide as part of the Neolithic Revolution.

Sherratt emphasizes milk as a significant secondary product of domestication. Though it might seem obvious that the earliest herders would have seized on milk as a nutritious and easily available food, this notion is unrealistic. Cows only produce milk when they have a calf to nourish. Where forage is poor, they can produce only enough milk to feed their offspring. The herder who forced the issue might obtain a little milk at the expense of a valuable calf. Even when there is excess milk, the job of collecting it may not be easy. The cow’s ability to express milk involves an involuntary response—the “let-down reflex”—that makes the milk available in the teat. Early images of milking show penned cows with calves nearby. Seeing a calf triggers the let-down reflex and makes the milk flow. Over generations, as cattle were selected for the abundance and richness of their milk, as well as its availability, milking became routine. Modern dairy cows produce enormous quantities of milk daily, and anyone who learns the knack can milk a cow.

Milk, then, was originally a seasonal product that was only available from calving time in the spring to sometime in autumn, when the calves were weaned and the cows’ milk dried up. Preserving milk for year-round consumption was desirable, and over time a number of techniques were discovered to make this possible. These techniques varied widely from region to region. The rich variety of preserved milk products that exist today is a survival of such techniques. In the Mediterranean region, where thousands of cheese varieties are still made, the raw materials include sheep, goat, buffalo, and cow’s milk. The cheeses made from this variable stock range in texture from rock hard to runny. Cheese may come in wheels that weigh hundreds of pounds or in disks or wedges weighing a few ounces. Climate has a strong effect on the composition and preservation of cheese. Mediterranean cheeses tend to be made of sheep or goat’s milk rather than cow’s milk because of the kind and extent of pasture. Cheeses that are soft and quick to form predominate. The region also produces other preserved milk products. Yogurt and kefir are probably the best known and most widely used.

Like meat, milk is rich in proteins; it also contains large amounts of calcium, along with the vitamin D that helps the body absorb calcium. Processing milk to make cheese or yogurt preserves most of the protein and calcium along with a percentage of the vitamin D. The material difference between processed and unprocessed milk is a surprising one. Milk is produced by mammals to nourish their young. After the young are weaned, their small intestines produce decreased amounts of the enzyme lactase, which breaks down the lactose in milk into easily metabolized sugars. By adulthood, many humans, like other mature mammals, have stopped producing this enzyme altogether. Without lactase they become “lactose intolerant.” Processing milk does away with a large percentage of the lactose, so cheese is more digestible for many than raw milk. In yogurt and kefir, bacterial cultures predigest the lactose.

Scientists have recently discovered the genes responsible for switching off lactase production in adults. This genetic switch that triggers lactose intolerance is widespread, especially in Asia and Africa. The lowest levels of lactose intolerance are found in northern Europe, where milk and milk products have formed an important part of the diet for millennia. Attempts to link the gene for lactase production with the advance of the Secondary Products Revolution have not, however, been successful.

Using the milk of domestic animals increased the food supply and made storing food possible. It also increased the efficiency of herding. Butchering a cow for meat yields a bonanza of calories for human use, so slaughtering a cow is like winning a lottery. Milking a cow over a number of years turns the lottery into an annuity. Milking not only harvests more calories for human use but recovers a larger percentage of the energy the cow herself has harvested from the ecosystem. Efficiency of this kind is relatively unimportant when cattle can get all their nourishment from uncultivated pastures. In some Mediterranean climates, however, winter forage is not available, and animals eat grain that has been cultivated and harvested. In that situation, it is extremely important that the farmer make the most efficient possible use of all the potential animal products. When cattle compete with humans for cultivated grain, the total calories produced may well shrink. Grain-feeding rather than pasturing cattle that are destined only to be slaughtered, an increasingly common modern practice, is incredibly wasteful for just this reason.

Other animals entered the domesticated community in different times and places. Dogs, which make animal herding and protection easier, began their partnership with humans at least fourteen thousand years ago, and much earlier if the snub-toed footprints at Chauvet Cave belonged to a domesticated dog. Cooperation between humans and dogs probably began when wolves followed human hunters or foraged for scraps on the edge of campsites. Dogs do not have to compete with humans for food; they are willing scavengers of discards and waste.

Cats, which have never been fully domesticated, probably began their association with humans when stores of wild or domesticated grain attracted rodents to villages. Cats entered village life in the Levant where agriculture began, and the domesticated cat, which has a single genetic origin, followed the same path as the expanding frontier of Neolithic agriculture.

Current research suggests that the ancestors of today’s chickens originally came from Southeast Asia. They were domesticated in China eight thousand years ago and reached the Mediterranean through central Russia sometime in the Neolithic. They were and remain an important source of food throughout the Mediterranean region. In the modern world, the great majority of chickens eat grain like every other domesticated animal. But just like dogs, chickens are content to scavenge for scraps of discarded food and scratch for grubs and worms like other birds. They do not have to compete with humans for food.26

The wild boars from which modern pigs descend were, like chickens, native to Southeast Asia. From there they spread across the Asian continent and into Europe. Recent DNA research on domestic pigs reveals a degree of genetic variability that suggests that pigs were domesticated in several different places.27 Though pigs are intelligent and as capable of learning as dogs, they are not herd or pack animals, so it must have been difficult to learn the techniques of driving them. Since pigs produce no valuable secondary products, they might seem to have been an impractical animal to domesticate. In the Middle Ages, pigs and dogs foraged together in city streets. This may have been true in the Neolithic as well, but their more important source of food throughout premodern history came from an ecosystem that no other domestic species could safely exploit. Wild pigs were native to forests, and domestic pigs found food in an environment where sheep and cattle would starve. Acorns and other nuts, along with grubs, roots, edible fungi, small animals, and carrion nourished pigs. While humans occasionally ate acorns, acorns were never a major source of human nutrition, so by and large, pigs extended the range of exploitable ecosystems rather than competing with humans for food, as they do today. Today pigs consume enormous amounts of cultivated grains and other manufactured foods, of which they return only a small percentage as usable calories. Like grain-fed chickens and cattle, pigs create an ecological deficit.

Neolithic people continued to forage and hunt, and they grew other crops that supplemented their diet and gave it variety. Considered as a whole, the human diet of the Mediterranean littoral was rich, varied, and nutritious. It reflected the interaction of multiple cultures—herding and farming—and relied on the cooperative use of products from multiple ecosystems. It is admirable as an intricate and carefully managed means of food production. It is equally admirable because agriculture as an industry today, its abuses notwithstanding, remains based on the suite of crops and techniques that were introduced in a few Neolithic events. In our day, when food literacy is becoming increasingly important, it is essential to remember that our appreciation and understanding of what nourishes us cannot be limited to the farm-to-table connection. We need to be aware, too, of how the crops we enjoy arrived on the farmer’s doorstep in the first place. Neolithic domestications brought them there.

Theorists and researchers long shared the belief that the Neolithic Revolution led to population increase and longer life. They took it for granted that agriculture and domestication had erased many of the dangers and uncertainties of a hunter-gatherer lifestyle. Conflicts with large prey and competition with other predators, the continual handling of weapons, and the high risk of an unsuccessful hunt suggest that life before agriculture was a difficult one. The English philosopher Thomas Hobbes described this era in history as one in which humankind lived in a state of constant apprehension. “In such condition there is no place for industry, because the fruit thereof is uncertain: and consequently no culture of the earth; no navigation, nor use of the commodities that may be imported by sea; no commodious building; no instruments of moving and removing such things as require much force; no knowledge of the face of the earth; no account of time; no arts; no letters; no society; and which is worst of all, continual fear, and danger of violent death; and the life of man, solitary, poor, nasty, brutish, and short.”28

For many reasons, scholars in the late twentieth century began to find Hobbes’s view unacceptable. The impression grew that the Paleolithic was something of a utopia and the Neolithic its antithesis and destroyer.29 Nutritionists point out that the kinds of food that the Neolithic Revolution made available were considerably different from the foods that humans and their hominid ancestors had eaten before cultivation. Cultivated grains and meat from domestic animals were higher in fats and sugars than foraged food. This trend toward fats and sugars has been dramatically accelerated in the contemporary diet, which contains large amounts of processed foods, but some effects would have been felt in the early Neolithic. Sugars in foods increase blood glucose levels and stimulate insulin overproduction, which may lead to insulin resistance. Diseases related to this syndrome include obesity, coronary heart disease, type 2 diabetes, hypertension, and other “diseases of civilization.”30 Changes in the Neolithic diet created vitamin and mineral deficiencies associated with eating a small variety of foods and caused an increase in food acidity. The balance between two essential electrolytes in the body, potassium and sodium, also changed as humans switched from foraging to cultivation. Introduced in the Neolithic and exacerbated by modern food production, these changes have created the characteristic patterns of modern mortality. What we die of, like what we live on, was shaped by the Neolithic Revolution.

In the archaeological literature it has become commonplace to see the Neolithic as generally characterized by “an overall degradation of the quality of human health.”31 Though this conclusion is based on research that has become increasingly precise and definitive in the past few decades, the amount and kind of change in human health have been overstated.

In 1958 members of the Greek Speleological Society explored Alepotrypa Cave in the southern Peloponnesus. Excavations began there in 1970 and continue to the present. The cave and the areas around it were occupied between seven thousand and fifty-two hundred years ago, until an earthquake sealed it off and preserved its archaeological remains. Artifacts and animal bones collected there point to a Neolithic lifestyle. Skeletal remains from a minimum of 160 persons have been found in the cave and meticulously studied.

The detailed picture of Neolithic health that the site paints is less dire than predicted, given the gloomy views of many in the archaeological community. It probably comes as no surprise that Neolithic life spans were, on average, shockingly brief by modern standards. The expectation of life at birth was under eighteen years. This dramatic figure, however, is misleading. If an average baby can hope to live only eighteen years, it is because a great many babies in such communities die in infancy or childhood. In fact, this population shows an “unusually high prevalence of child mortality.” By age ten, though, the picture looks quite different. For the child who survives, the horizon has expanded. The researcher concludes that “after the critical age of ten years, individuals can be expected to live to a mature age.” Still, the oldest individual in the sample died at age fifty, and the average age at death was twenty-nine.32

Franchthi Cave and Alepotrypa Cave provide glimpses of culture, diet, and health during the Neolithic.

As contemporary television programs show, skeletons can offer testimony about a life lived. Like worked stones, however, their range of expression is limited. What the skeletal remains from Alepotrypa represent are lives marked by the effects of Neolithic agriculture. Anemia was widespread and afflicted almost 60 percent of the population. In most cases, the anemia was mild; only a small fraction suffered severely. Iron deficiency is the most common cause of anemia, although rickets and scurvy leave similar marks on bones. Since cereal crops are low in iron, the deficiency is common in agricultural communities. There was some evidence for occasional halts in the growth of bones. Severe malnutrition can cause breaks in the pattern of bone growth. One failed crop can produce this effect.

Though the population was generally young, arthritis was common. Other skeletal evidence suggests prolonged stress. Those bones belonged to people who traveled long distances on foot, carried heavy loads, worked hard, often on their knees, and used their hands. Despite these hardships, there was little evidence of injury. The only indication of trauma came from the skulls of nine individuals. Each had small head fractures that were well healed by the time of death. To the researcher, these injuries suggested “interpersonal aggression and conflict but not lethal encounters…. Blunt objects such as stones might have been used to inflict this kind of trauma.”33

Teeth show the effects of Neolithic life most strongly. We might expect a diet rich in cereals to have little effect on hard tooth enamel, but oddly enough, the opposite is true. Grains may be soft, but the mortars and pestles that ground them were typically made of stone. As the grain was ground, the stone wore away, and the fine grit that eroded from it ended up in food. The teeth of the Alepotrypa farmers have shallow dish-shaped wear patterns on all their chewing surfaces. Otherwise, tooth health was remarkably good; there were few cavities and little evidence of infection.

The evidence from Alepotrypa confirms the general impression among archaeologists that human health and vigor decreased in the Neolithic period. The strongest impression that the data give, however, is that things could have been a great deal worse. Infant mortality must have been high, but there is little evidence of severe, prolonged anemia or chronic malnutrition among adults. Physical labor imposed its stresses, but crippling illness and severe trauma were not at all common. The researcher concludes that the evidence points to “a slight overall decline of health status with the transition to agriculture.”34

These conclusions take for granted that populations before the Neolithic would have been healthier, but skeletons of Paleolithic populations in the Old World remain scarce. Without that kind of background data, it really is impossible to say whether the Alepotrypa Cave data indicate a general decline in health, as most researchers assume, little change, or actual improvement. One set of research data, which has not received wide attention, does offer comparative information. Two Hungarian demographers looked at skeletal data for Paleolithic and Neolithic populations in a similar geographical setting. Their data suggested that life expectancy at the beginning of the Neolithic rose by about four years.35

The best general assessment of the impact of the Neolithic on human health is a measured one. Two factors need to be weighed. One is the enormous increase in the human population that the Neolithic created. The other is what appears to be a dip, though a slight one, in human health and an increase in endemic disease. The “diseases of civilization” have their remote origins in the Neolithic diet, but their prevalence today reflects the dramatic upswing in fats and sugars that characterizes the modern industrialized diet and the simultaneous stripping away of such traditional causes of death as infection. A judicious observer might reject Hobbes’s gloomy assessment but would have to concede that without the Neolithic foods on our tables today, there would be far fewer humans populating the world, and those few would be living a life that was far from utopian.