INTRODUCTION

‘This Marvellous Invention’

Of all mankind’s manifold creations, language must take pride of place. Other inventions – the wheel, agriculture, sliced bread – may have transformed our material existence, but the advent of language is what made us human. Compared to language, all other inventions pale in significance, since everything we have ever achieved depends on language and originates from it. Without language, we could never have embarked on our ascent to unparalleled power over all other animals, and even over nature itself.

But language is foremost not just because it came first. In its own right it is a tool of extraordinary sophistication, yet based on an idea of ingenious simplicity: ‘this marvellous invention of composing out of twenty-five or thirty sounds that infinite variety of expressions which, whilst having in themselves no likeness to what is in our mind, allow us to disclose to others its whole secret, and to make known to those who cannot penetrate it all that we imagine, and all the various stirrings of our soul’. This was how, in 1660, the renowned grammarians of the Port-Royal abbey near Versailles distilled the essence of language, and no one since has celebrated more eloquently the magnitude of its achievement. Even so, there is just one flaw in all these hymns of praise, for the homage to language’s unique accomplishment conceals a simple yet critical incongruity. Language is mankind’s greatest invention – except, of course, that it was never invented.

This apparent paradox is at the core of our fascination with language, and it holds many of its secrets. It is also what this book is about.

Language often seems so skilfully drafted that one can hardly imagine it as anything other than the perfected handiwork of a master craftsman. How else could this instrument make so much out of barely three dozen measly morsels of sound? In themselves, these configurations of the mouth – p, f, b, v, t, d, k, g, sh, a, e and so on – amount to nothing more than a few haphazard spits and splutters, random noises with no meaning, no ability to express, no power to explain. But run them through the cogs and wheels of the language machine, let it arrange them in some very special orders, and there is nothing that these meaningless streams of air cannot do: from sighing the interminable ennui of existence (‘not tonight, Josephine’) to unravelling the fundamental order of the universe (‘every body perseveres in its state of rest, or of uniform motion in a right line, unless it is compelled to change that state by forces impressed thereon’).

The most extraordinary thing about language, however, is that one doesn’t have to be a Napoleon or a Newton to set its wheels in motion. The language machine allows just about everybody – from pre-modern foragers in the subtropical savannah, to post-modern philosophers in the suburban sprawl – to tie these meaningless sounds together into an infinite variety of subtle senses, and all apparently without the slightest exertion. Yet it is precisely this deceptive ease which makes language a victim of its own success, since in everyday life its triumphs are usually taken for granted. The wheels of language run so smoothly that one rarely bothers to stop and think about all the resourcefulness and expertise that must have gone into making it tick. Language conceals its art.

Often, it is only the estrangement of foreign tongues, with their many exotic and outlandish features, that brings home the wonder of language’s design. One of the showiest stunts that some languages can pull off is an ability to build up words of breath-breaking length, and thus express in one word what English takes a whole sentence to say. The Turkish word şehirlileştiremediklerimizdensiniz, to take one example, means nothing less than ‘you are one of those whom we can’t turn into a town-dweller’. (In case you are wondering, this monstrosity really is one word, not merely many different words squashed together – most of its components cannot even stand up on their own.) And if that sounds like some one-off freak, then consider Sumerian, the language spoken on the banks of the Euphrates some 5,000 years ago by the people who invented writing and thus kick-started history. A Sumerian word like munintuma’a (‘when he had made it suitable for her’) might seem rather trim compared to the Turkish colossus above. What is so impressive about it, however, is not its lengthiness, but rather the reverse: the thrifty compactness of its construction. The word is made up of different ‘slots’, each corresponding to a particular portion of meaning. This sleek design allows single sounds to convey useful information, and in fact even the absence of a sound has been enlisted to express something specific. If you were to ask which bit in the Sumerian word corresponds to the pronoun ‘it’ in the English translation ‘when he had made it suitable for her’, then the answer would have to be … nothing. Mind you, a very particular kind of nothing: the nothing that stands in the empty slot in the middle. The technology is so fine-tuned, then, that even a non-sound, when carefully placed in a particular position, has been invested with a specific function. Who could possibly have come up with such a nifty contraption?

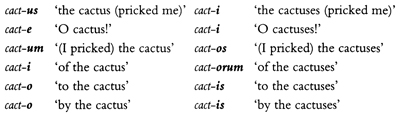

My own curiosity about such questions arose when, as a boy, I first came across a strange and complex structure in a foreign language, the Latin case system. As it happened, I was not particularly put out by the idea that learning a language involved memorizing lots of fiddly new words. But this Latin set-up presented a wholly unfamiliar concept, which looked intriguing but also rather daunting. In Latin, nouns don’t just have one form, but come in many different shapes and sizes. Whenever a noun is used, it must have an ending attached to it, which determines its precise role in the sentence. For instance, you use the word cactus when you say ‘the cactus pricked me’, but if you prick it, then you must remember to say cactum instead. When you are pricked ‘by the cactus’, you say cacto; but to pick the fruit ‘of the cactus’, you need to say cacti. And should you wish to address a cactus directly (‘O cactus, how sharp are thy prickles!’), then you would have to use yet another ending, cacte. Each word has up to six different such ‘cases’,* and each case has distinct endings for singular and plural. Just to give an idea of the complexity of this system, the set of endings for the noun cactus is given overleaf:

And as if this were not bad enough, the endings are not the same for all nouns. There are no fewer than five different groups of nouns, each with an entirely different set of such endings. So if, for instance, you wish to talk about a prickle instead, you have to memorize a different set of endings altogether.

While struggling to learn all the Latin case endings by heart, I developed pretty strong feelings towards the subject, but I wasn’t quite sure whether it was a matter more of love or of hate. On the one hand, the elegant mesh of meanings and forms made a powerful impression on me. Here was a remarkable structure, based on a simple yet inspired idea: using a little ending on the noun to determine its function in the sentence. This clever device makes Latin so concise that it can express gracefully in a few words what languages like English need longer sentences to say. On the other hand, the Latin case system also seemed both arbitrary and unnecessarily complicated. For one thing, why did there have to be so many different sets of endings for all the different groups of nouns? Why not just have one set of endings – one size to fit all? But more than anything, there was one question I could not get out of my mind: who could have dreamt up all these endings in the first place? And if they weren’t invented, how else could such an elaborate system of conventions ever have arisen?

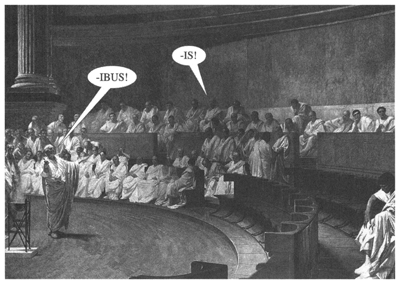

I had childish visions of the elders of ancient Rome, sitting in assembly one hot summer day and debating what the case endings should be. They first decide by vote that -orum is to be the plural ending of the ‘genitive’ case (‘of the cactuses’), and then they start arguing about the plural ending for the ‘dative’ case (‘to the cactuses’). One party opts for -is, but another passionately advocates -ibus. After heated debate, they finally agree to reach an amicable compromise. They decree that the nouns in the language will be divided into different groups, and that some nouns will have the ending -is, while others will take -ibus instead.

In the cold light of day, I somehow suspected that this wasn’t really a very likely scenario. Still, I couldn’t begin to imagine any plausible alternative which would explain where all these endings could have sprung from. If this intricate system of conventions had not been designed by some architect and given the go-ahead by a prehistoric assembly, then how else could it have come about?

Of course, I was not the first to be baffled by such problems. For as long as anyone can remember, the origins of language’s artful construction have engaged scholars’ minds and myth-makers’ imaginations. In earlier centuries, the answer to all these questions was made manifest by Scripture: like everything else in heaven and earth, language was invented, and the identity of the inventor explained its miraculous ingenuity. Language declared the glory of God, and its accomplishment showed his handiwork.

But if language was indeed divinely conceived and revealed to Adam fully formed, then how was one to account for its many less than perfect aspects? For one thing, why should mankind speak in so many different tongues, each one boasting its own formidable selection of complexities and irregularities? The Bible, of course, has an explanation even for these flaws. God quickly came to regret the tool that he had given mankind, for language had made people powerful, too powerful, and words had given them the imagination to lust for even more power. Their ambition knew no bounds, ‘and they said: go to, let us build us a city and a tower, whose top may reach unto heaven’. And so, to thwart their overweening pride, God scattered the people over the face of the earth, and confounded their languages. The messy multiplicity of languages could thus be explained as God’s punishment for human hubris.

The story of the Tower of Babel is a remarkable evocation of the power of language, and is surely a premonition of the excesses that this power has made possible. Taken literally, however, neither invention by divine fiat nor dispersal as a punishment for human folly seems at all likely today. But has anyone ever come up with a more convincing explanation?

In the nineteenth century, when the scientific study of language began in earnest, it seemed at first as if the solution would not be long in coming. Once linguists had subjected the history of language to systematic examination, and succeeded in understanding perhaps its most surprising trait, the incessant changes that affect its words, sounds and even structures over the years, they would surely find the key to all mysteries and discover how the whole edifice of linguistic conventions could have arisen. Alas, when linguists delved into the history of the European tongues, what they began to unearth was not how complex new structures grew, but rather how the old ones had collapsed, one on top of another. Just as one example, Latin’s mighty case system first fractured and then fell apart in the latter days of the language, when the endings on nouns were worn away and disappeared. A noun such as annus, ‘year’, which in classical Latin still had eight distinct endings for different cases in the singular and the plural (annus, anne, annum, anni, anno, annos, annorum, annis), ended up in the daughter language Italian with only two distinct forms intact: anno in the singular (with no differentiation of case) and anni in the plural. In another daughter language, French, the word has shrunk even further to an endingless an, and in the spoken language, not even the distinction between singular and plural has been maintained on the noun, since the singular an and the plural ans are usually pronounced the same way – something like {ã} (curly brackets are used here to mark approximate pronunciation).

And it is not only the descendants of Latin, and not only case systems, which have suffered such thorough disintegration. Ancient languages such as Sanskrit, Greek and Gothic flaunted not just highly complex case systems on nouns, but even more complex systems of endings on verbs, which were used to express a range of intricate nuances of meaning. But once again, most of these structures did not survive the passing of time, and fell apart in the modern descendants. It seemed that the deeper linguists dug into history, the more impressive was the make-up of words they encountered, but when they followed the movement of languages through time, the only processes that could be discerned were disintegration and collapse.

All the signs, then, seemed to point to some Golden Age lying somewhere in the twilight of prehistory (just before records began), when languages were graced with perfectly formed structures, especially with elaborate arrays of endings on words. But at some subsequent stage, and for some unknown reason, the forces of destruction were unleashed on the languages and began battering the carefully crafted edifices, wearing away all those endings. So, strangely enough, what linguists were uncovering only seemed to confirm the gist of the biblical account: God gave Adam a perfect language some 6,000 years ago, and since then, we have just been messing it up.

The depressingly one-sided nature of the changes in language left linguists in a rather desperate predicament, and gave rise to some equally desperate attempts at explanation. One influential theory contended that languages had been in the business of growing more complex structures only in the prehistoric era – that period which cannot be observed – because in those early days, nations were busy summoning all their strength for perfecting their language. As soon as a nation marched on to the stage of history, however, all its creative energy was expended on ‘history-making’ instead, so there was nothing left to spare for the onerous task of language-building. And thus it was that the forces of destruction attacked the nation’s language, and its structures gradually cracked and fell apart.

Was this tall story really the best that linguists could come up with? Surely a more plausible scenario would be that alongside the forces of destruction in language there must also be some creative and regenerative forces at work, natural processes which can shape and renew systems of conventions. After all, it is unlikely that those forces which had originally created the pristine prehistoric structures simply ceased to operate at some random point a few millennia ago, just because someone decided to start the stopwatch of history. So the forces of creation must still be somewhere around. But where? And why are they so much more difficult to spot than the all too evident forces of destruction?

It took a long time before linguists managed to show that the forces of creation are not confined to remote prehistory, but are alive and kicking even in modern languages. In fact, it is only in recent decades that linguists have begun to appreciate the full significance of these creative forces, and have amassed enough evidence from hundreds of languages around the world to allow us a deeper understanding of their ways. At last, linguists are now able to present a clearer picture of how imposing linguistic edifices can arise, and how intricate systems of grammatical conventions can develop quite of their own accord. So today, it is finally possible to get to grips with some of the questions which for so long had seemed so intractable.

* * *

This book will set out to unveil some of language’s secrets, and thereby attempt to dismantle the paradox of this great uninvented invention. Drawing on the recent discoveries of modern linguistics, I will try to expose the elusive forces of creation and thus reveal how the elaborate structure of language could have arisen. (The following chapter will describe in greater depth what ‘structure’ is – from meshes of endings on words to the rules of combining words into sentences – and show how it allows us to communicate unboundedly complex thoughts and ideas.) The ultimate aim, towards the end of the book, will be to embark on a fast-forward tour through the unfolding of language. Setting off from an early prehistoric age, when our ancestors only had names for some simple objects and actions, and only knew how to combine them into primitive utterances like ‘bring water’ or ‘throw spear’, we will trace the emergence of linguistic complexity and see how the extraordinary sophistication of today’s languages could gradually have evolved.

At first sight, this aim may seem much too ambitious, for how can anyone presume to know what went on in prehistoric times without indulging in make-believe? The actual written records we have for any language extend at most 5,000 years into the past, and the languages that are attested by that time are by no means ‘primitive’. (Just think of Sumerian, the earliest recorded language, with its cleverly designed sentence-words like munintuma’a, and with pretty much the full repertoire of complex features found in any language.) This means that the primitive stage that I have just referred to, and which can rather loosely be called the ‘me Tarzan’ stage, must lie long before records begin, deep in the prehistoric past. To make matters worse, no one even knows when complex languages first started to evolve (more on this later). So without any safe anchor in time, how can linguists ever hope to reconstruct what might have taken place in that remote period?

The crux of the answer is one of the fundamental insights of linguistics: the present is the key to the past. This tenet, which was borrowed from geology in the nineteenth century, bears the intimidating title ‘uniformitarianism’, but stands for an idea that is as simple as it is powerful: the forces that created the elaborate features of language cannot be confined to prehistory, but must be thriving even now, busy creating new structures in the languages of today. Perhaps surprisingly, then, the best way of unlocking the past is not always to peer at faded runes on ancient stones, but also to examine the languages of the present day.

All this does not mean, of course, that it is a trivial undertaking to uncover the creative forces in language even in today’s languages. Nevertheless, thanks to the discoveries that linguists have made in recent years, pursuing the sources of creation has become a challenge that is worth taking up, and here, in a nutshell, is how I propose to go about it.

The first chapter will give a clearer idea of what the ‘structure of language’ is all about, by sneaking behind the scenes of language and surveying some of the machinery that makes it tick. Then, having focused on the object of inquiry, we can start examining the transformations that languages undergo over time. The first challenge will be to understand why languages cannot remain static, why they change so radically through the years, and how they manage to do so without causing a total collapse in communication. Once the main motives for language’s perpetual restlessness have been outlined, the real business can begin – examining the processes of change themselves.

First to come under the magnifying glass will be the forces of destruction, for the devastation they wreak is perhaps the most conspicuous aspect of language’s volatility. And strangely enough, it will also emerge that these forces of destruction are instrumental in understanding linguistic creation and regeneration. Above all, they will be indispensable for solving a key question: the origin of the ‘raw materials’ for the structure of language. Where, for instance, could the whole paraphernalia of case endings (as in the Latin -us, -e, -orum, -ibus and so on) have come from? One thing is certain: in language, as in anything else, nothing comes from nothing. Only very rarely are words ‘invented’ out of the blue (the English word ‘blurb’ is reputedly one of the exceptions). Certainly, grammatical elements were not devised at a prehistoric assembly one summer day, nor did they rise from the brew of some alchemist’s cauldron. So they must have developed out of something that was already at hand. But what?

The answer may come as rather a surprise. The ultimate source of grammatical elements is nothing other than the most mundane everyday words, unassuming nouns and verbs like ‘head’ or ‘go’. Somehow, over the course of time, plain words like these can undergo drastic surgery, and turn into quite different beings altogether: case endings, prepositions, tense markers and the like. To discover how these metamorphoses take place, we’ll have to dig beneath the surface of language and expose some of its familiar aspects in an unfamiliar light. But for the moment, just to give a flavour of the sort of transformations we’ll encounter, think of the verb ‘go’ – surely one of the plainest and most unpretentious of words. In phrases such as ‘go away!’ or ‘she’s going to Basingstoke’, ‘go’ simply denotes movement from one place to another. But now take a look at these sentences:

Is the rain ever going to stop?

She’s going to think about it.

Here, ‘go’ has little to do with movement of any kind: the rain is not literally going anywhere to stop, in fact it has no plans to go anywhere at all, nor is anyone really ‘going’ anywhere to think. The phrase ‘going to’ merely indicates that the event will take place some time in the future. Indeed, ‘be going to’ can be replaced with ‘will’ in these examples, without changing the basic meaning in any way:

Will the rain ever stop?

She will think about it.

So what exactly is going on here? ‘Go’ started out in life as an entirely ordinary verb, with a straightforward meaning of movement. But somehow, the phrase ‘going to’ has acquired a completely different function, and has come to be used as a grammatical element, a marker of the future tense. In this role, the phrase ‘going to’ can even be shortened to ‘gonna’, at least in informal spoken language:

Is the rain ever gonna stop?

She’s gonna think about it.

But if you try the same contraction when ‘go’ is still used in the original meaning of movement, you’re gonna be disappointed. No matter how colloquial the style or how jazzy the setting, you simply cannot say ‘I’m gonna Basingstoke’. So ‘going to’ seems to have developed a kind of schizophrenic existence, since on the one hand it is still used in its original ‘normal’ sense (she’s going to Basingstoke), but on the other it has acquired an alter ego, one that has been transformed into an element of grammar. It has a different function, a different meaning, and has even acquired the possibility of a different pronunciation.

Of course, ‘gonna’ is only a very simple grammatical element – not much, you may feel, to write home about. But although ‘gonna’ may seem a rather slight example of ‘the structure of language’, worlds apart from grand architectures such as the Latin case system, the transformations that brought it about encapsulate many of the fundamental principles behind the creation of new grammatical elements. So when its antics have been exposed, they will lead the way to understanding how much more imposing edifices in language could have arisen.

Finally, once the principles of linguistic creation have begun to yield their secrets, and once the major forces that raise new grammatical structures have been revealed, it will be possible to synthesize all these findings into one ambitious thought-experiment, and project them on to the remote past. Towards the end of the book, I will invite you on a whistle-stop tour through the unfolding of language, starting from the primitive ‘me Tarzan’ stage, and ending up with the sophistication of languages in today’s world.

* * *

Before we can begin, however, there are two potential objections which need to be addressed. First, why did I say nothing about what might have happened before the ‘me Tarzan’ stage? Why does our story have to start so ‘late’ in the evolution of language, when there were already words around, rather than right at the beginning, millions of years ago, when the first hominids were coming down from the trees and uttering their first grunts? The reason why we can’t start any earlier is quite straightforward: the ‘me Tarzan’ stage is also the boundary of our knowledge. Once language already had words, it had become sufficiently similar to the present for sensible parallels to be drawn between then and now. For example, it is plausible to assume that the first ever grammatical elements arose in prehistory in much the same way as new grammatical elements develop in languages today. But it is not so easy to peer beyond the ‘me Tarzan’ stage, to a time when the first words were emerging, because we have neither contemporary parallels nor any other sources of evidence to go on. These days, there are no systems of communication which are in the process of evolving their first words. The closest parallel is probably the babbling of babies, but no one knows to what extent, if at all, the development of individual children’s linguistic abilities recapitulates the evolution of language in the human race. And clearly, there are no early hominids around nowadays on whom linguists can test their theories. All we have are a few hand-axes and some dry bones, and these say nothing about how language began. In fact, artefacts and fossils cannot even establish with any confidence when language started to develop. Nothing illustrates our present state of ignorance better than the range of estimates offered for when language might have emerged – so far, researchers have managed to narrow it down to anywhere between 40,000 and 1½ million years ago.

Some linguists believe that Homo erectus, some 1½ million years ago, already had a language that was rather similar to what I have called the ‘me Tarzan’ stage. The arguments they advance are that Homo erectus had a relatively large brain, and used primitive but fairly standardized stone tools, and probably also controlled the use of fire. This hypothesis may be true, of course, but it may well be wide of the mark. The use of tools certainly doesn’t require language: even chimpanzees use tools such as twigs to hunt termites or stones to crack nuts. What is more, chimps’ handling of stones is not an instinct, but a ‘culturally transmitted’ activity found only among certain groups. The skill is taught by mothers to their children, and this is done without relying on anything like a human language. Of course, even the most primitive tools of Homo erectus (flaked stone cores called ‘hand-axes’) are far more sophisticated than anything used by chimpanzees, but there is still no compelling reason why these flaked stones could not have been produced without language, and transmitted from generation to generation by imitation. Brain size is equally problematic as an indication for language, because ultimately, no one has any clue about exactly how much brain is needed for how much language. Moreover, the capacity for language may have been latent in the brain for millions of years, without actually being put to use. After all, even chimpanzees, when trained by humans, can be taught to communicate in a much more sophisticated way than they ever do naturally. So even if the brain of Homo erectus had the capacity for something resembling human language, there is no compelling reason to assume that the capacity was ever realized. The arguments for an early date are therefore fairly shaky.

But the arguments for a late date are pretty speculative too. Most scholars believe that human language (and by this I include the ‘me Tarzan’ stage) could not have emerged before Homo sapiens (that is, anatomically modern humans) arrived on the scene, around 150,000 years ago. Some arguments for this view rely on the shape and position of the larynx, which in earlier hominids was higher than in Homo sapiens and in consequence did not allow them to produce the full range of sounds that we can utter. According to some researchers, hominids prior to Homo sapiens could not, for instance, produce the vowel i {ee}. But ultimately, this does not say very much, since by all accounts, et es perfectle pesseble to have a thoroughle respectable language wethout the vowel i. Various researchers have proposed a much more recent date for the origin of language, and connect it with a so-called ‘explosion’ in arts and technology between 50,000 and 40,000 years ago. At this time, one starts finding unmistakable evidence of art from Eastern Africa, such as ostrich eggshells from Kenya fashioned into disc-shaped beads with a neat hole in the middle. Somewhat later, after 40,000 years ago, European cave paintings provide even more striking signs of artistic creativity. According to some linguists, it is only when there is evidence of such symbolic artefacts (and not just functional tools) that the use of ‘human language’ can be inferred, for after all, the quintessential quality of language is its symbolic nature, the communication with signs that mean something only by convention, not because they really sound like the object they refer to. There are also other tantalizing clues to the capability of our ancestors at around that time. Some time before 40,000 years ago, the first human settlers reached Australia, and since they must have had to build watercraft to get there, many researchers have claimed that these early colonizers would have needed to communicate fairly elaborate instructions.

Once again, however, a note of caution should be sounded. First, a steadily growing body of evidence seems to cast doubt on the ‘explosiveness’ of the explosion in arts and technology, and is pushing the date of the earliest symbolic artefacts further and further backwards. For example, researchers have recently found perforated shell-beads in a South African cave which appear to be clear signs of symbolic art from around 75,000 years ago. So ‘modern human behaviour’, as some archaeologists have labelled it, may have dawned much earlier than the supposed date of around 50,000 years ago, and may have developed more gradually than has sometimes been assumed.

Moreover, there is no necessary link between advances in art and technology and advances in language. To take an obvious example, the technological explosion we are experiencing today was certainly not inspired by an increase in the complexity of language, nor was any advance in language responsible for the industrial revolution, or for any other technological leap during the historical period. And there is an even stronger reason for caution. If technology was always an indication of linguistic prowess, then one would expect the simplest and most technologically challenged hunter-gatherer societies to have very simple, primitive languages. The reality, however, could not be more different. Small tribes with stone-age technology speak languages with structures that sometimes make Latin and Greek seem like child’s play. ‘When it comes to linguistic form, Plato walks with the Macedonian swineherd, Confucius with the head-hunting savage of Assam,’ as the American linguist Edward Sapir once declared. (Later on, I shall even argue that some aspects of language tend to be more complex in simpler societies.)

Needless to say, the lack of any reliable information about when and how speech first emerged has not prevented people from speculating. Quite the reverse – for centuries, it has been a favourite pastime of many distinguished thinkers to imagine how language first evolved in the human species. One of the most original theories was surely that of Frenchman Jean-Pierre Brisset, who in 1900 demonstrated how human language (that is to say, French) developed directly from the croaking of frogs. One day, as Brisset was observing frogs in a pond, one of them looked him straight in the eye and croaked ‘coac’. After some deliberation, Brisset realized that what the frog was saying was simply an abbreviated version of the question ‘quoi que tu dis?’ He thus proceeded to derive the whole of language from permutations and combinations of ‘coac coac’.

It must be admitted that more than a century on, standards of speculation have much improved. Researchers today can draw on advances in neurology and computer simulations to give their scenarios a more scientific bent. Nevertheless, despite such progress, the speculations remain no less speculative, as witnessed by the impressive range of theories circulating for how the first words emerged: from shouts and calls; from hand gestures and sign language; from the ability to imitate; from the ability to deceive; from grooming; from singing, dancing and rhythm; from chewing, sucking and licking; and from almost any other activity under the sun. The point is that as long as there is no evidence, all these scenarios remain ‘just so’ stories. They are usually fascinating, often entertaining, and sometimes even plausible – but still not much more than fantasy.

Of course, this means that our history of language must remain incomplete. But rather than lamenting what can never be known, we can explore the part that does lie within reach. Not only is it a substantial part, it is also pretty spectacular.

* * *

The second possible charge that could be raised against the plan of attack which I have outlined is potentially much more serious, and concerns the question of ‘innateness’: how much of language’s structure is already coded in our genes? Readers who are familiar with the debate over this issue might well wonder how exploring the processes of language change squares with the view – advanced over the last few decades in the work of Noam Chomsky and the influential research programme which he has inspired – that significant elements in the structure of language are specified in our genes. Linguists of the ‘innatist’ school believe that some of the fundamental rules of grammar are biologically pre-wired, and that babies’ brains are already equipped with a specific tool-kit for handling complex grammatical structures, so that they do not need to learn these structures when they acquire their mother-tongue.

Many people outside the field of linguistics are under the impression that there is an established consensus among linguists over the question of innateness. The reality, however, could not be more different. Let five linguists loose in a room and ask them to discuss innateness – chances are you will hear at least seven contradictory opinions, argued passionately and acrimoniously. The reason why there is so much disagreement is fairly simple: no one actually knows what exactly is hard-wired in the brain, and so no one really knows just how much of language is an instinct. (Usually, when something becomes known for a fact, there is little room left for fascinating controversy. There is no longer fierce debate, for instance, about whether the earth is round or flat, and whether it revolves around the sun or vice versa.) Of course, there are some basic facts about innateness that everyone agrees on, most importantly, perhaps, the remarkable ability of children to acquire any human language. Take a human baby from any part of the globe, and plonk it anywhere on earth, say in Indonesian Borneo, and within only a few years it will grow up to speak fluent and flawless Indonesian.

That this ability is unique to human babies is also clear. In Borneo, it is sadly still common practice to shoot female orang-utans and raise their babies as pets. These apes grow up in families, sometimes side by side with human babies of the same age, but the orang-utans never end up learning Indonesian. And despite popular myth, not even chimpanzees can learn a human language, although some chimpanzees in captivity have developed remarkable communicative skills. In the early 1980s a pygmy chimpanzee (or bonobo) called Kanzi made history by becoming the first ape to learn to communicate with humans without formal training. The baby Kanzi, born at the Language Research Center of the Georgia State University, Atlanta, used to play by his mother’s side during her training sessions, when researchers tried (rather unsuccessfully) to teach her to communicate by pointing at picture-symbols. The trainers ignored the baby because they thought he was still too young to learn, but unbeknownst to them, Kanzi was taking in more than his mother ever did, and as he grew up he went on to develop cognitive and communicative skills far surpassing any other ape before. As an adult, he is reported to be able to use over 200 different symbols, and to understand as many as 500 spoken words and even some very simple sentences. Yet although this Einstein of the chimp world has shown that apes can communicate far more intelligently than had ever been thought possible, and thus forced us to concede something of our splendid cognitive isolation, even Kanzi cannot string symbols together in anything resembling the complexity of a human language.

The human brain is unique in having the necessary hardware for mastering a human language – that much is uncontroversial. But the truism that we are innately equipped with what it takes to learn language doesn’t say very much beyond just that. Certainly, it does not reveal whether the specifics of grammar are already coded in the genes, or whether all that is innate is a very general ground-plan of cognition. And this is what the intense and often bitter controversy is all about. Ultimately, there must be just one truth behind this great furore – after all, in theory, the facts should all be verifiable. One day, perhaps, scientists will be able to scan and interpret the activity of the brain’s neurons with such accuracy that its hardware will become just as unmysterious as the shape of the earth. But please don’t hold your breath, because this is likely to take a little while. Despite remarkable advances in neurology, scientists are still very far from observing directly how any piece of abstract information such as a rule of grammar might be coded in the brain, either as ‘hardware’ (what is pre-wired) or ‘software’ (what is learnt). So it cannot be over-emphasized that when linguists argue passionately about what exactly is innate, they don’t base their claims on actual observations of the presence – or absence – of a certain grammatical rule in some baby’s neurons. This rather obvious point should be stressed, because readers outside the field of linguistics need to form a healthy disrespect for the arguments advanced on all sides of the debate. Uncontroversial facts are few and far between, and the claims and counter-claims are based mostly on indirect inferences and on subjective feelings of what seems a more ‘plausible’ explanation.

The most important of these battles of plausibility has been fought on grounds that are at some remove from the course of our historical exploration. The debate is known in linguistic circles as the ‘poverty of stimulus’ argument, and revolves around a perennial miracle: the speech that comes out of the mouth of babes and sucklings. How is it that children manage to acquire language with apparently so little difficulty? And how much of language can children really learn on the basis of the evidence they are exposed to? Chomsky and other linguists have argued that children manage to acquire language from scanty and insufficient evidence (in other words, from ‘poor stimulus’). After all, most children are not taught their mother-tongue systematically, and even more significantly, they are not exposed to ‘negative evidence’: their attention is rarely drawn to incorrect or ungrammatical sentences. And yet, not only do children manage to acquire the rules of their language, but there is a variety of errors that they don’t seem to make to start with. Chomsky claimed that since children could never have worked out all the correct rules purely from the evidence they were exposed to, the only plausible explanation for their remarkable success is that some rules of grammar were already hard-wired in their brain, and so they never had to learn them in the first place.

Other linguists, however, have proposed very different interpretations. Many have argued that children can learn more from the evidence they are exposed to than Chomsky had originally claimed, and that children receive much more stimulus than Chomsky had admitted. Others maintain that children don’t need to master many of the abstract rules that Chomsky postulated, because they can acquire a perfect knowledge of their language by learning much less abstract constructions. Finally, some linguists turn the argument on its head, and claim that the reason why children manage to learn the rules of their language from what appears to be scanty evidence is that language has evolved only those types of rules that can be inferred correctly on the basis of limited data.

The debate is still raging. But in what follows, the issue of learnability will not take centre stage, so it should be fairly easy to stay well clear of the crossfire on the front line. This psychological aspect of the ‘nature versus nurture’ controversy will not impinge directly on our historical exploration, so – at least until the cows come home – I will just regard the question as unresolved. (If you wish to embroil yourself in the details of the controversy, you can find suggestions for further reading in the notes.) Nor will the following pages be concerned with the biological question of the make-up of our brains. Instead, the aim will be to explore how elaborate conventions of communication can develop in human society. In other words, the subject of investigation will not be biological evolution, but rather the processes that are sometimes referred to as ‘cultural evolution’: the gradual emergence of codes of behaviour in society, which are passed down from generation to generation.

Nonetheless, it is inevitable that the question of innateness will hover somewhere in the background, and at least in one sense, I hope that exploring the paths of cultural evolution can make a positive contribution to the debate. The processes through which new linguistic structures emerge can offer a fresh perspective on what elements can plausibly be taken as pre-wired, and in particular, they can point to those areas in the structure of language for which there is no need to invoke innateness. The idea is fairly simple: it seems implausible that specific features in the structure of language are pre-wired in the brain if they could have developed only ‘recently’ (say within the last 100,000 years), and if their existence can be attributed to the natural forces of change that are steering languages even today. In other words, the details of language’s structure which can be put down to cultural evolution need not be coded in the genes (although the ability to learn and handle them must of course be innate). It thus seems implausible to me that the specifics of anything more sophisticated than the ‘me Tarzan’ stage, to which we’ll return in Chapter 7, need to be pre-wired.

In the pages that follow, I hope to make a convincing case for this view, not by investigating the plausibility or otherwise of certain genetic mutations in earlier hominids, nor by exploring the composition of chromosomes or the chemistry of neurons, but by looking at the evidence that language itself supplies in lavish abundance – in the written records of lost civilizations and in the spoken idiom on today’s streets. I invite you, therefore, to set off in pursuit of the elaborate conventions of communication, and discover how systems of sometimes breathtaking sophistication can arise through what appear to be the mundane and commonplace traits of everyday speech. But before we can begin, the object of the chase needs to be identified more clearly: the mysterious ‘structure of language’ – what it is, what it does, and how cleverly it goes about doing it.