Some customers configure all the servers to write their logs to a network share, NFS or otherwise. This setup can be made to work, but it is not ideal.

The advantages of this approach include:

- A forwarder does not need to be installed on each server that is writing its logs to the share

- Only the Splunk instance reading these logs needs rights to the logs

The disadvantages of this approach include:

- The network share can become overloaded and can become a bottleneck.

- If a single file has more than a few megabytes of unindexed data, the Splunk process will only read this one log until all the data is indexed. If there are multiple indexers in play, only one indexer will be receiving data from this forwarder. In a busy environment, the forwarder may fall behind.

- Multiple Splunk forwarder processes do not share information about what files have been read. This makes it very difficult to manage a failover for each forwarder process without a SAN.

- Splunk relies on the modification time to determine whether the new events have been written to a file. File metadata may not be updated as quickly on a share.

- A large directory structure will cause the Splunk process reading logs to use a lot of RAM and a large percentage of the CPU. A process to move away the old logs would be advisable so as to minimize the number of files that Splunk must track.

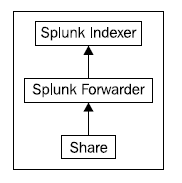

This setup often looks like the following diagram:

This configuration may look simple, but unfortunately, it does not scale easily.