Another option to interface with an external system is to run a custom alert action using the results of a saved search. Splunk provides a simple example in $SPLUNK_HOME/bin/scripts/echo.sh. Let's try it out and see what we get using the following steps:

- Create a saved search. For this test, use something cheap, such as the following search:

```
index=_internal | head 100 | stats count by sourcetype
```

- Schedule the search to run at a point in the future. I set it to run every five minutes just for this test.

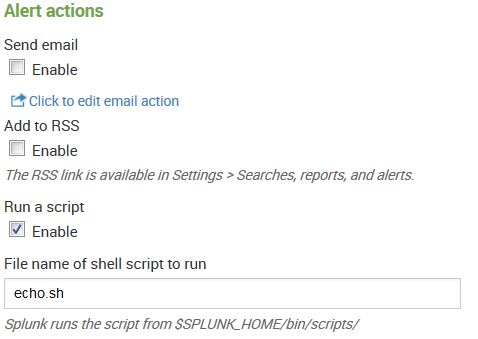

- Enable Run a script and enter echo.sh.

The script places the output into $SPLUNK_HOME/bin/scripts/echo_output.txt.
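To make the format of that file easier to follow, here is a rough Python analogue of what echo.sh does. It is not Splunk's script. It assumes echo.sh wraps each positional argument in single quotes and appends the session key line it reads from STDIN. The output file name echo_py_output.txt and the /opt/splunk fallback for SPLUNK_HOME are hypothetical.

```python
import os
import sys


def format_invocation(argv, stdin_line):
    """Single-quote each argument, then the session-key line read from STDIN."""
    parts = ["'%s'" % arg for arg in argv]
    parts.append("'%s'" % stdin_line.strip())
    return " ".join(parts)


def append_invocation(line, splunk_home):
    """Append one invocation per line to a hypothetical output file."""
    path = os.path.join(splunk_home, "bin", "scripts", "echo_py_output.txt")
    with open(path, "a") as out:
        out.write(line + "\n")


# Splunk passes $0..$8, so only act when the full argument list is present.
if __name__ == "__main__" and len(sys.argv) > 8:
    home = os.environ.get("SPLUNK_HOME", "/opt/splunk")  # fallback is an assumption
    append_invocation(format_invocation(sys.argv, sys.stdin.readline()), home)
```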

In my case, the output is as follows:

```
'/opt/splunk/bin/scripts/echo.sh' '4' 'index=_internal | head 100 | stats count by sourcetype' 'index=_internal | head 100 | stats count by sourcetype' 'testingAction' 'Saved Search [testingAction] always(4)' 'http://vlbmba.local:8000/app/search/@go?sid=scheduler__admin__search__testingAction_at_1352667600_2efa1666cc496da4' '' '/opt/splunk/var/run/splunk/dispatch/scheduler__admin__search__testingAction_at_1352667600_2efa1666cc496da4/results.csv.gz' 'sessionKey=7701c0e6449bf5a5f271c0abdbae6f7c'
```

Let's look at each argument in the preceding output:

- $0: The script path, '/opt/splunk/bin/scripts/echo.sh'

- $1: The number of events returned, '4'

- $2: The search terms, 'index=_internal | head 100 | stats count by sourcetype'

- $3: The full search string, 'index=_internal | head 100 | stats count by sourcetype'

- $4: The saved search's name, 'testingAction'

- $5: The reason for the action, 'Saved Search [testingAction] always(4)'

- $6: A link to the search results; the host is controlled in web.conf:

'http://vlbmba.local:8000/app/search/@go?sid=scheduler__admin__search__testingAction_at_1352667600_2efa1666cc496da4'

- $7: Deprecated; here it is an empty string, ''

- $8: The path to the raw results, which are always gzipped:

'/opt/splunk/var/run/splunk/dispatch/scheduler__admin__search__testingAction_at_1352667600_2efa1666cc496da4/results.csv.gz'

- STDIN: The session key under which the search ran:

'sessionKey=7701c0e6449bf5a5f271c0abdbae6f7c'
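A custom script is easier to maintain when it refers to these values by name rather than by position. The following is a minimal sketch, assuming Python 3 is available to run the script. The names AlertArgs and parse_alert_args are hypothetical and are not part of any Splunk API. The sketch maps $0 through $8 onto fields, skips the deprecated $7, and strips the sessionKey= prefix from the STDIN line.

```python
import sys
from dataclasses import dataclass


@dataclass
class AlertArgs:
    """Named view of the positional arguments a scripted alert receives."""
    script_path: str     # $0
    event_count: int     # $1
    search_terms: str    # $2
    full_search: str     # $3
    search_name: str     # $4
    trigger_reason: str  # $5
    results_link: str    # $6 ($7 is deprecated and not kept)
    results_file: str    # $8, a gzipped CSV
    session_key: str     # read from STDIN


def parse_alert_args(argv, stdin_line):
    """Build AlertArgs from sys.argv-style input and the first STDIN line."""
    if len(argv) < 9:
        raise ValueError("expected $0 through $8, got %d arguments" % len(argv))
    # STDIN carries a line such as "sessionKey=7701c0e6..."; keep only the value.
    _, _, key = stdin_line.strip().partition("=")
    return AlertArgs(
        script_path=argv[0],
        event_count=int(argv[1]),
        search_terms=argv[2],
        full_search=argv[3],
        search_name=argv[4],
        trigger_reason=argv[5],
        results_link=argv[6],
        results_file=argv[8],
        session_key=key,
    )


# Splunk passes $0..$8, so only act when the full argument list is present.
if __name__ == "__main__" and len(sys.argv) > 8:
    args = parse_alert_args(sys.argv, sys.stdin.readline())
    print(args.search_name, args.event_count, args.results_file)
```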

The typical use of scripted alerts is to send an event to a monitoring system. You could also archive these results for compliance reasons or import them into another system.

Let's make a fun example that copies the results to an archive directory and then calls a URL with curl. The script might look like the following:

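```sh
#!/bin/sh
# $8 is the path to results.csv.gz inside the job's dispatch directory
DIRPATH=$(dirname "$8")
# the dispatch directory name (the search ID) gives a unique file name
DIRNAME=$(basename "$DIRPATH")
DESTFILE="$DIRNAME.csv.gz"
cp "$8" "/mnt/archive/alert_action_example_output/$DESTFILE"
URL="http://mymonitoringsystem.mygreatcompany/open_ticket.cgi"
URL="$URL?name=$4&count=$1&filename=$DESTFILE"
echo "Calling $URL"
curl "$URL"
```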

You would then place your script in $SPLUNK_HOME/bin/scripts on the server that will execute the script and refer to the script by name in alert actions. If you have a distributed Splunk environment, the server that executes the scripts will be your search head.
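One weakness of the shell script is that $4 goes into the query string unencoded, so a saved search name containing spaces or an & produces a broken URL. The following is a minimal Python sketch of the same archive-and-notify steps that percent-encodes the query with urllib.parse.urlencode. The monitoring URL and archive directory are the placeholders from the shell example. The helper names archive_name and build_ticket_url are hypothetical.

```python
import os
import shutil
import sys
from urllib.parse import urlencode
from urllib.request import urlopen

# Placeholders copied from the shell example; adjust for your environment.
MONITOR_URL = "http://mymonitoringsystem.mygreatcompany/open_ticket.cgi"
ARCHIVE_DIR = "/mnt/archive/alert_action_example_output"


def archive_name(results_path):
    """Name the archived copy after its dispatch directory (the search ID)."""
    return os.path.basename(os.path.dirname(results_path)) + ".csv.gz"


def build_ticket_url(search_name, count, filename):
    """Percent-encode every query value so spaces or '&' cannot break the URL."""
    query = urlencode({"name": search_name, "count": count, "filename": filename})
    return MONITOR_URL + "?" + query


# Splunk passes $0..$8, so only act when the full argument list is present.
if __name__ == "__main__" and len(sys.argv) > 8:
    dest = archive_name(sys.argv[8])
    shutil.copy(sys.argv[8], os.path.join(ARCHIVE_DIR, dest))
    url = build_ticket_url(sys.argv[4], sys.argv[1], dest)
    print("Calling", url)
    urlopen(url).read()
```

Keeping the URL and file-name logic in small functions means you can check them by hand before the script ever runs as an alert.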

If you need to perform an action for each row of results, your script will need to open the results file. The following Python script loops over the rows in the gzipped results and, for each row, posts a request to a ticketing system that includes a JSON representation of the event:

```python
#!/usr/bin/env python3
import gzip
import json
import sys
from csv import DictReader
from urllib.parse import urlencode
from urllib.request import urlopen

# our ticket system URL
open_ticket_url = "http://ticketsystem.mygreatcompany/ticket"

# open the gzipped results in text mode so the CSV reader receives strings
with gzip.open(sys.argv[8], 'rt', newline='') as f:
    # create our CSV reader; each row becomes a dict keyed by field name
    reader = DictReader(f)
    for event in reader:
        fields = {'json': json.dumps(event),
                  'name': sys.argv[4],
                  'count': sys.argv[1]}
        # build the POST data; urlopen expects bytes
        data = urlencode(fields).encode('utf-8')
        # passing data makes the request a POST
        resp = urlopen(open_ticket_url, data)
        print(resp.read().decode('utf-8'))
```
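Before pointing the per-row script at a real ticketing system, it can help to see exactly what it would send. The following sketch, again assuming Python 3, uses the same reading logic but returns the encoded POST bodies instead of sending them. The helper name build_payloads and the dry_run.py file name are hypothetical.

```python
import csv
import gzip
import json
import sys
from urllib.parse import urlencode


def build_payloads(results_path, search_name, count):
    """Return the URL-encoded POST bodies the ticket script would send, one per row."""
    payloads = []
    # Text mode ("rt") hands str rows to the CSV reader.
    with gzip.open(results_path, "rt", newline="") as f:
        for event in csv.DictReader(f):
            fields = {"json": json.dumps(event), "name": search_name, "count": count}
            payloads.append(urlencode(fields))
    return payloads


# Example: python3 dry_run.py /path/to/results.csv.gz testingAction 4
if __name__ == "__main__" and len(sys.argv) == 4:
    for body in build_payloads(sys.argv[1], sys.argv[2], sys.argv[3]):
        print(body)
```

You can run this against a copy of a results.csv.gz from a dispatch directory and inspect the bodies without touching the ticketing system.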

Hopefully, these examples give you a starting point for your use case.