We’ve already written code in Case Study: Google NGrams, that can read the contents of the NGrams file, but if we want to sort it, we’re going to need to store the parsed content of the file in a data structure of some kind. It’ll need to track the word, year, and count of each line in the file. But to design this structure, we’ll need to have some understanding of the function that will consume it.

That function, qsort, has the following signature:

| | def qsort(data:Ptr[Byte], |

| | num:Int, |

| | size:Int, |

| | comparator:CFunctionPtr2[Ptr[Byte], Ptr[Byte], Int]) |

qsort is powerful, and it can sort almost anything, but it can be a bit cryptic. Like malloc, it uses Ptr[Byte] (or a void pointer in C) as a catch-all generic data type; it can sort anything we can represent as a pointer, and like with malloc, we’ll cast the data to Ptr[Byte] to make the types line up. Later on, you’ll learn how to properly configure the num, size, and comparator arguments to set up this process.

First, we need to figure out how to represent our NGram records as something that we can fit in a Ptr, and that C APIs will be able to make sense of.

If we were working in regular JVM Scala, and we wanted to design a data structure to hold our NGram items, we might use a simple case class like this:

| | case class NGram(word:String, count:Int, year:Int) |

Or if we wanted to streamline things even further, we could just use a tuple:

| | type NGram = Tuple3[String, Int, Int] |

Either way, for each line of the file, we’re going to need to create a data structure that contains the following:

In Scala Native, we can, of course, use case classes or tuples in regular code; the trouble is that because those objects are garbage-collected, we can’t represent them with pointers or pass them as arguments to C functions.

To model the same data in a C-friendly way, we’ll have to use a C-style data structure, called a struct. Structs combine many of the properties of strings and tuples with some of the advantages and disadvantages of C-style strings.

Instead, we’ll use Scala Native’s C interop capabilities to model the data as a struct. A struct is a C-style data structure that has some of the properties of a tuple or case class, as well as some of the use and storage characteristics of the CString type that you learned about in Chapter 1, The Basics: Input and Output. A struct consists of an ordered series of fields of different types, and all the fields have to be C-friendly Scala Native types like Byte, Int, Ptr, or another struct type.

Because all the fields have known sizes, and every struct instance is laid out the same, structs have the following useful properties:

The fields of a struct can be stored one after another in memory, just like characters in a string.

The position of any field is a static byte offset from the beginning of a struct.

All instances of a struct type have the exact same size, known at compile time.

A reference to a struct can be represented by a single pointer.

Structs can be be efficiently allocated in bulk.

Fortunately, Scala Native makes structs just as easy to define as a regular Scala class—we simply define a type alias for CStructN types, that work a lot like tuples. So, we can define a struct type analogous to our NGram type like this:

| | type NGramData = CStruct4[CString, Int, Int, Int] |

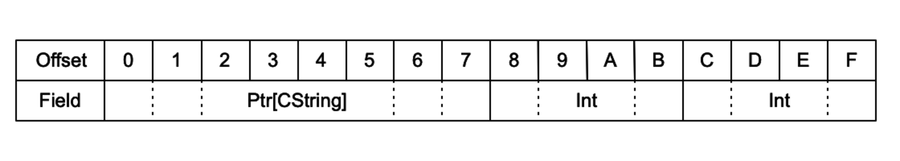

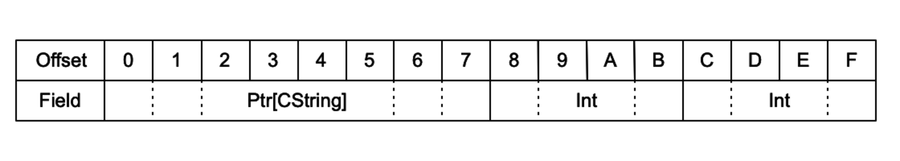

This defines NGramData to be a 16-byte wide region of memory, laid out like this:

Once we have a struct defined, we can use stackalloc or malloc to allocate space for it and get back a pointer to the uninitialized value, just like with a string. Scala Native has a bit of syntactic magic for working with the fields of structs; if we have a Ptr to a CStruct value, we can retrieve it’s member fields with ._1, ._2, and so on, just like a normal Scala tuple; but if we want the address of a field instead, we can get that with .at1, .at2, and so forth. This allows us to use dereference, assignment, or pointer arithmetic on whole structs or individual fields, but it can look a bit funny at first. To get a sense of it, let’s write a modified version of our line-parsing code from Case Study: Google NGrams. We’ll just pass in a Ptr[NGramData] for it to read into and get rid of the comparison logic, which we don’t need any more.

There’s one last catch. Because our NGramData struct contains a pointer to the CString, and not the CString content itself, we’ll still need to allocate space for the string content and store that Ptr[Byte] value within the struct field. Don’t worry; it’s easier than it sounds:

| | def parseLine(line_buffer:Ptr[Byte], data:Ptr[NGramData]):Unit = { |

| | val count = data.at2 |

| | val year = data.at3 |

| | val doc_count = data.at4 |

| | |

| | val sscanf_result = stdio.sscanf(line_buffer, c"%1023s %d %d %d\n", |

| | temp_word, year, count, doc_count) |

| | if (sscanf_result < 4) { |

| | throw new Exception("input error") |

| | } |

| | val word_length = strlen(temp_word) |

| | val new_string = malloc(word_length + 1) |

| | strncpy(new_string, temp_word, word_length + 1) |

| | data._1 = new_string |

| | } |

As you see here, we can store directly into the second, third, and fourth fields of our Ptr[NGramData] by fetching their addresses, and passing them directly into sscanf.

But to assign the string content we have to do things a little differently: we’ll read into a temporary buffer, then allocate some dedicated storage for our new string with malloc, and then carefully copy into the newly allocated memory with strncpy. After all that, though, the CString field in our NGramData struct is still uninitialized! Our struct only points to the new memory when we assign to the CString field once we assign into it with data._1 = new_string.

As a rule of thumb, it’s much more common to use the ._N form of field accessor than the .atN, for both assignment and dereference. But there will be a few points later in the book when .atN becomes very useful for working with intricate nested data structures. And as we’ve already seen, it’s a great fit for sscanf() in particular.

With this technique in our toolbelt, we’re now making serious headway into solving our sorting program. But we still need to devise a strategy for managing and growing our data as we read from our (very large!) input file.

At this point, introducing arrays feels a bit anticlimactic; we’ve already seen almost everything one can do with them. How so?

We’ve seen strings, which are a special class of byte arrays with unknown length.

We’ve used pointer arithmetic to manipulate addresses, which is how array access works.

We’ve used malloc and stackalloc to allocate strings and structs, which is how arrays are allocated.

In many ways, we’ve been working with arrays all along; but we should take this opportunity to review and consolidate what we know, so that we can plan for the amount of data we’ll be handling shortly.

An array is a data structure consisting of a series of items of the same type. Unlike arrays in higher-level languages, a Scala Native array can’t be dynamically resized, and access is unsafe—if you try to read or set an item past the end of the array, you’ll get nasty runtime errors. This happens because C and Scala Native represent arrays at runtime by their address only. Despite this, it’s generally necessary to track some additional metadata, such as the size of the array.

In addition, if we do need to increase the size of an array with realloc, we must take additional care. Because realloc invalidates pointers passed into it, we must make sure that no stale pointers to discarded memory escape into other data structures. And to reduce the number of expensive realloc calls, we’ll want to batch up our allocations periodically, in bulk, which means we’ll need to track the capacity of the array separately from the size.

To track all this vital data in an organized way, we can design a simple case class, along with some helper methods to streamline the more complex malloc and casting operations—something like this:

| | final case class WrappedArray[T](var data:Ptr[T], var used:Int, |

| | var capacity:Int) |

You may note here that I’m using a regular case class, for convenience. The WrappedArray object itself isn’t going to require high degrees of performance; rather, its inner data array will be used directly by client code.

Since this component is intended to be used solely for this application, I’m not going to stress about making it fully generic or universal. This also means that the helper functions we write to work with a WrappedArray[NGramData] will belong in the main object declaration of the program, and not in a WrappedArray companion object.

To initialize the array, we can write a helper function like this:

| | def makeWrappedArray(size:Int):WrappedArray[NGramData] = { |

| | val data = malloc(size * sizeof[NGramData]).asInstanceOf[Ptr[NGramData]] |

| | return WrappedArray[NGramData](data, 0, size) |

| | } |

And to resize the array, we can just reassign to the inner data field with realloc:

| | def growWrappedArray(array:WrappedArray[NGramData], size:Int):Unit = { |

| | val new_capacity = array.capacity + size |

| | val new_size = new_capacity * sizeof[NGramData] |

| | val new_data = realloc(array.data.asInstanceOf[Ptr[Byte]], new_size) |

| | array.data = new_data.asInstanceOf[Ptr[NGramData]] |

| | array.capacity = new_capacity |

| | } |

Best of all, this class is parameterized over the type of the array T, so we could use it to hold anything that we can represent with a pointer: structs, strings, integers, and so on. It should be perfect to hold our big slab of NGramData.

One more thing we should take care of before we move on is when we write a complex data structure like this, it’s usually best to also write a utility function to free all of the memory we’ve allocated when we’re done. This might not be strictly necessary for this program, since we are unlikely to have any opportunity to free memory before the program completes, but it could become useful later, and it’s not particularly complicated:

| | def freeArray(array:Ptr[NGramData], size:Int):Unit = { |

| | for (i <- 0 until size) { |

| | val item = array + i |

| | stdlib.free( item._1 ) |

| | } |

| | stdlib.free(array.asInstanceOf[Ptr[Byte]]) |

| | } |

The only subtlety here is that since the individual string arrays are stored in the larger array, we have to walk through and free each one before freeing array itself.