
Most Android devices will have a camera, since they are fairly commonplace on mobile devices these days. You, as an Android developer, can take advantage of the camera, for everything from snapping tourist photos to scanning barcodes. If you wish to let other apps do the “heavy lifting” for you, working with the camera can be fairly straightforward. If you want more control, you can work with the camera directly, though this control comes with greater complexity.

You can also record videos using the camera. Once again, you have the option of either using a third-party activity, or doing it yourself.

Understanding this chapter requires that you have read the core chapters,

particularly the material on implicit Intents. You also need

to read the chapters on the ContentProvider component, particularly

the coverage of FileProvider.

If your app needs a camera, by any of the means cited in this chapter, you should include a <uses-feature> element in the manifest indicating your requirements. However, you need to be fairly specific about your requirements here.

For example, the Nexus 7 (2012) has a camera… but only a front-facing camera. This facilitates apps like video chat. However, the android.hardware.camera feature implies that you need a high-resolution rear-facing camera, even though this is undocumented.

Hence, to work with the Nexus 7’s camera, you need to:

- Request the CAMERA permission (if you are using the Camera directly)
- Declare the android.hardware.camera feature as not required (android:required="false")
- Optionally require the android.hardware.camera.front feature (if your app definitely needs a front-facing camera)

At runtime, you would use hasSystemFeature() on PackageManager, or interrogate the Camera class for available cameras, to determine what you have access to.

Note that if you want to record audio when recording videos, you should also consider

the android.hardware.microphone feature.
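The runtime check described above can be sketched in plain Java. This is an illustration rather than code from the sample projects: `CameraFeatureSketch`, `Choice`, and `pick()` are hypothetical names, and the `Predicate<String>` stands in for `hasSystemFeature()` on `PackageManager` so that the sketch can run off-device. The two feature names are the values of the `FEATURE_CAMERA` and `FEATURE_CAMERA_FRONT` constants on `PackageManager`.

```java
import java.util.function.Predicate;

final class CameraFeatureSketch {
  // Same strings as PackageManager.FEATURE_CAMERA and FEATURE_CAMERA_FRONT
  static final String FEATURE_CAMERA = "android.hardware.camera";
  static final String FEATURE_CAMERA_FRONT = "android.hardware.camera.front";

  enum Choice { REAR, FRONT, NONE }

  // hasFeature is an assumption standing in for PackageManager.hasSystemFeature()
  static Choice pick(Predicate<String> hasFeature) {
    if (hasFeature.test(FEATURE_CAMERA)) {
      return Choice.REAR;   // a rear-facing camera is available
    }
    if (hasFeature.test(FEATURE_CAMERA_FRONT)) {
      return Choice.FRONT;  // e.g., a device with only a front-facing camera
    }
    return Choice.NONE;     // no camera that we can use
  }
}
```

On a device, you might call something like `CameraFeatureSketch.pick(getPackageManager()::hasSystemFeature)`. A device with only a front-facing camera, such as the Nexus 7 (2012), would yield `FRONT`.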

The easiest way to take a picture is to not take the picture yourself,

but let somebody else do it. The most common implementation of this

approach is to use an ACTION_IMAGE_CAPTURE Intent to bring up

the user’s default camera application, and let it take a picture on

your behalf.

In theory, this is fairly simple:

- Call startActivityForResult() on an ACTION_IMAGE_CAPTURE Intent
- If you supplied EXTRA_OUTPUT as an extra on the ACTION_IMAGE_CAPTURE Intent, a full-sized photo should be written to where you designated in EXTRA_OUTPUT

In practice, this gets complicated, in part because Android 7.0 is trying to get

rid of file schemes on Uri values. As a result, EXTRA_OUTPUT cannot point

to a file. Instead, it has to point to a ContentProvider, such as FileProvider.

To see this in use, take a look at the

Camera/FileProvider

sample project. This app will use system-supplied activities

to take a picture, then view the result, without actually implementing

any of its own UI.

Of course, we still need an activity, so our code can be launched by

the user. We just set it up with Theme.Translucent.NoTitleBar, so

no UI will be created for it:

<activity
  android:name="MainActivity"
  android:label="@string/app_name"
  android:theme="@android:style/Theme.Translucent.NoTitleBar">
  <intent-filter>
    <action android:name="android.intent.action.MAIN"/>
    <category android:name="android.intent.category.LAUNCHER"/>
  </intent-filter>
</activity>

Prior to Android 6.0, we did not need any permissions to use ACTION_IMAGE_CAPTURE.

That changed with Android 6.0, where we now need to hold the CAMERA

permission, if our targetSdkVersion is 23 or higher.

So, our manifest also has the <uses-permission> element for that camera

permission:

<uses-permission android:name="android.permission.CAMERA" />

But the CAMERA permission is a dangerous permission, one that we have

to request using runtime permissions in Android 6.0+. So, we need a bunch of

code to deal with that. In this project, we need that permission at the outset,

as our app cannot do anything if we do not have the permission. This helps to

reduce the code burden a bit.

This project uses a variation on the AbstractPermissionActivity profiled in

the material on runtime permissions.

Our MainActivity extends AbstractPermissionActivity and overrides

three methods. One is getDesiredPermissions(), which indicates that we

want the CAMERA permission and nothing else:

protected String[] getDesiredPermissions() {
  return(new String[] {Manifest.permission.CAMERA});
}

Another is onPermissionDenied(), which will be called if the user rejects

our requested permission. Here, we just show a Toast and otherwise go away

quietly:

protected void onPermissionDenied() {
  Toast
    .makeText(this, R.string.msg_sorry, Toast.LENGTH_LONG)
    .show();
  finish();
}

And, we implement onReady(), which is the replacement for onCreate()

(which is handled in AbstractPermissionActivity). onReady() will be called

when we have our permission, either because we already had it from before or

because the user just granted it. Here is where our main application logic

can begin.

Well, except for one more thing.

As noted earlier, Android 7.0 has a ban on file: Uri values, if your

targetSdkVersion is 24 or higher. In particular, you

cannot use a file: Uri in an Intent, whether as the “data” aspect

of the Intent or as the value of an extra.
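The rule above has two conditions: the device must be running Android 7.0 or higher, and the app must target API Level 24 or higher. A minimal sketch of that rule, with hypothetical names (`FileUriBanSketch`, `fileUriBanned()`), where `deviceSdk` plays the role of `Build.VERSION.SDK_INT`:

```java
final class FileUriBanSketch {
  static final int ANDROID_7_0 = 24; // API level of Android 7.0

  // deviceSdk: the API level of the device (Build.VERSION.SDK_INT on Android)
  // targetSdk: the app's targetSdkVersion
  static boolean fileUriBanned(int deviceSdk, int targetSdk) {
    // The ban only applies when both the device and the target are at 24+
    return deviceSdk >= ANDROID_7_0 && targetSdk >= ANDROID_7_0;
  }
}
```

An app with a targetSdkVersion of 23 is therefore unaffected even on an Android 7.0 device, at the cost of staying on an older target.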

The proper way to implement this is to use a ContentProvider, such

as a FileProvider, as is covered in

one of the chapters on providers. This is

a fair bit more complicated, and not all camera apps will work well

with a content: Uri, but our options are limited.

Our res/xml/provider_paths.xml metadata for the FileProvider indicates that we want to serve up the contents of the photos/ directory inside of getFilesDir(), with a Uri segment of /p/ mapping to that location:

<?xml version="1.0" encoding="utf-8"?>
<paths>
  <files-path
    name="p"
    path="photos" />
</paths>
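To make the mapping concrete, here is a rough plain-Java sketch of how a files-path rule turns a File location into a content: Uri of the form content://authority/name/relative-path. It is not FileProvider's actual implementation: `ProviderPathSketch` and `uriFor()` are hypothetical, the authority and directory in the usage note are placeholders, and the sketch skips the URL-encoding that a real provider would apply.

```java
import java.nio.file.Path;

final class ProviderPathSketch {
  // authority: the provider's authority string
  // name:      the name attribute from provider_paths.xml (here, "p")
  // root:      the directory that the rule serves (getFilesDir()/photos)
  // file:      the file to expose, which must live under root
  static String uriFor(String authority, String name, Path root, Path file) {
    if (!file.startsWith(root)) {
      // A file outside of every configured root cannot be served
      throw new IllegalArgumentException("File is not under the configured root");
    }

    StringBuilder uri =
      new StringBuilder("content://").append(authority).append('/').append(name);

    // Append each path segment below the root
    for (Path segment : root.relativize(file)) {
      uri.append('/').append(segment);
    }

    return uri.toString();
  }
}
```

With a root of /data/user/0/app/files/photos, a file named CameraContentDemo.jpeg in that directory, and an authority of com.example.provider, this yields content://com.example.provider/p/CameraContentDemo.jpeg. The actual filesystem location is hidden from whoever receives the Uri.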

Our manifest now has a <provider> element for our FileProvider

subclass, named LegacyCompatFileProvider:

<provider
  android:name="LegacyCompatFileProvider"
  android:authorities="${applicationId}.provider"
  android:exported="false"
  android:grantUriPermissions="true">
  <meta-data
    android:name="android.support.FILE_PROVIDER_PATHS"
    android:resource="@xml/provider_paths"/>
</provider>

That element:

- Points to our FileProvider subclass
- Uses the applicationId of this app as the basis of our authorities value, using a manifest placeholder
- Blocks access from other apps (android:exported="false") except where we explicitly grant permission in our Java code (android:grantUriPermissions="true")

LegacyCompatFileProvider is the same implementation as from the original discussion of FileProvider, using LegacyCompatCursorWrapper to help increase the odds that clients of this ContentProvider will behave properly:

package com.commonsware.android.camcon;

import android.database.Cursor;
import android.net.Uri;
import android.support.v4.content.FileProvider;
import com.commonsware.cwac.provider.LegacyCompatCursorWrapper;

public class LegacyCompatFileProvider extends FileProvider {
  @Override
  public Cursor query(Uri uri, String[] projection, String selection, String[] selectionArgs, String sortOrder) {
    return(new LegacyCompatCursorWrapper(super.query(uri, projection, selection, selectionArgs, sortOrder)));
  }
}

Now we can start the work of taking pictures.

At this point, we can start using the provider, to give us a Uri that we

can use with EXTRA_OUTPUT and an ACTION_IMAGE_CAPTURE Intent.

However, we need to grant permission to allow camera apps to write to our desired photo in this provider. Unfortunately, on Android 4.4 and below, this is a challenge. Plus, we have to think about configuration changes. All of that makes this process even more complicated.

Our onReady() method spends a lot of lines to eventually make that

startActivityForResult() call:

@Override
protected void onReady(Bundle savedInstanceState) {
  if (savedInstanceState==null) {
    output=new File(new File(getFilesDir(), PHOTOS), FILENAME);

    if (output.exists()) {
      output.delete();
    }
    else {
      output.getParentFile().mkdirs();
    }

    Intent i=new Intent(MediaStore.ACTION_IMAGE_CAPTURE);
    Uri outputUri=FileProvider.getUriForFile(this, AUTHORITY, output);

    i.putExtra(MediaStore.EXTRA_OUTPUT, outputUri);

    if (Build.VERSION.SDK_INT>=Build.VERSION_CODES.LOLLIPOP) {
      // Android 5.0+: the flag also covers the Uri in EXTRA_OUTPUT
      i.addFlags(Intent.FLAG_GRANT_WRITE_URI_PERMISSION);
    }
    else if (Build.VERSION.SDK_INT>=Build.VERSION_CODES.JELLY_BEAN) {
      // ClipData is available: the flag covers a Uri wrapped in a ClipData
      ClipData clip=
        ClipData.newUri(getContentResolver(), "A photo", outputUri);

      i.setClipData(clip);
      i.addFlags(Intent.FLAG_GRANT_WRITE_URI_PERMISSION);
    }
    else {
      // Older devices: grant write access to each candidate camera app
      List<ResolveInfo> resInfoList=
        getPackageManager()
          .queryIntentActivities(i, PackageManager.MATCH_DEFAULT_ONLY);

      for (ResolveInfo resolveInfo : resInfoList) {
        String packageName = resolveInfo.activityInfo.packageName;
        grantUriPermission(packageName, outputUri,
          Intent.FLAG_GRANT_WRITE_URI_PERMISSION);
      }
    }

    try {
      startActivityForResult(i, CONTENT_REQUEST);
    }
    catch (ActivityNotFoundException e) {
      Toast.makeText(this, R.string.msg_no_camera, Toast.LENGTH_LONG).show();
      finish();
    }
  }
  else {
    output=(File)savedInstanceState.getSerializable(EXTRA_FILENAME);
  }
}

When we are first run, our savedInstanceState Bundle will be null. If it

is not null, we know that we

are coming back from some prior invocation of this activity, and so we

do not need to call startActivityForResult() to take a picture.

First, we need a File pointing to where we want the photo to be stored.

We create a directory inside of getFilesDir() (named photos via the

PHOTOS constant), and in there identify a file (named CameraContentDemo.jpeg

via the FILENAME constant).

Then, we create the ACTION_IMAGE_CAPTURE Intent, use FileProvider.getUriForFile()

to get a Uri pointing to our desired File, then put that Uri in the

EXTRA_OUTPUT extra of the Intent.

Now, though, we have to grant permissions to be able to write to that Uri.

If we are on Android 5.0+, calling addFlags(FLAG_GRANT_WRITE_URI_PERMISSION)

not only affects the “data” aspect of the Intent, but also EXTRA_OUTPUT,

due to a bit of a hack that Google added to the Intent class. So, that scenario is simple.

The problem comes in with Android 4.4 and older devices, where

addFlags(FLAG_GRANT_WRITE_URI_PERMISSION) does not affect Uri values

passed in extras.

For Android 4.1 through 4.4 (the JELLY_BEAN check in the code, as setClipData() was added in API Level 16), we can use a trick: while flags skip over Intent extras, flags do apply to a ClipData that you attach to the Intent via setClipData(). Even though the camera app will never use this ClipData, by wrapping our Uri in a ClipData and attaching that to the Intent, our addFlags(FLAG_GRANT_WRITE_URI_PERMISSION) will affect that Uri. The fact that the camera app gets the Uri from EXTRA_OUTPUT, instead of from the ClipData, makes no difference.

For Android 4.0 and older devices, though, there is no means for us to simply indicate on the Intent itself that it is fine for the app handling our request to write to our Uri. Instead, we:

- Find all of the activities that can handle ACTION_IMAGE_CAPTURE, using PackageManager and queryIntentActivities()
- For each of those, call grantUriPermission(), inherited from Context, to allow the app to write to our Uri

This allows our Intent to succeed for any camera app… at least those

that properly handle content: Uri values.
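The three-way branch on API level can be summarized as a small decision function. This is only a sketch for illustration: `UriGrantSketch`, `Strategy`, and `strategyFor()` are hypothetical names, `sdkInt` plays the role of `Build.VERSION.SDK_INT`, and the constants 16 and 21 are the values of `Build.VERSION_CODES.JELLY_BEAN` and `Build.VERSION_CODES.LOLLIPOP`.

```java
final class UriGrantSketch {
  enum Strategy {
    FLAG_ON_INTENT,       // addFlags() alone covers EXTRA_OUTPUT
    CLIP_DATA_PLUS_FLAG,  // wrap the Uri in a ClipData, then addFlags()
    GRANT_PER_PACKAGE     // grantUriPermission() for each candidate app
  }

  static final int JELLY_BEAN = 16; // Android 4.1
  static final int LOLLIPOP = 21;   // Android 5.0

  // Mirrors the if/else-if/else chain in onReady()
  static Strategy strategyFor(int sdkInt) {
    if (sdkInt >= LOLLIPOP) {
      return Strategy.FLAG_ON_INTENT;
    }
    if (sdkInt >= JELLY_BEAN) {
      return Strategy.CLIP_DATA_PLUS_FLAG;
    }
    return Strategy.GRANT_PER_PACKAGE;
  }
}
```

For example, an Android 4.4 device (API Level 19) falls into the ClipData case, while an API Level 15 device needs the per-package grants.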

Finally, after all of that, we can call startActivityForResult(). However,

in case the user does not have a camera app, we wrap that call in a

try/catch block, watching for an ActivityNotFoundException.

In order to be able to save the File across configuration changes, we

stuff it in the saved instance state Bundle in onSaveInstanceState():

@Override
protected void onSaveInstanceState(Bundle outState) {
  super.onSaveInstanceState(outState);

  outState.putSerializable(EXTRA_FILENAME, output);
}

This is what allows us to pull that value back out in onReady().

Our onActivityResult() method then uses the same File,

creating an ACTION_VIEW Intent, pointing

at our output Uri, granting

read permission on that Uri, indicating the MIME type is image/jpeg, and starting

up an activity for that.

@Override
protected void onActivityResult(int requestCode, int resultCode,
                                Intent data) {
  if (requestCode == CONTENT_REQUEST) {
    if (resultCode == RESULT_OK) {
      Intent i=new Intent(Intent.ACTION_VIEW);
      Uri outputUri=FileProvider.getUriForFile(this, AUTHORITY, output);

      i.setDataAndType(outputUri, "image/jpeg");
      i.addFlags(Intent.FLAG_GRANT_READ_URI_PERMISSION);

      try {
        startActivity(i);
      }
      catch (ActivityNotFoundException e) {
        Toast.makeText(this, R.string.msg_no_viewer, Toast.LENGTH_LONG).show();
      }

      finish();
    }
  }
}

We do not have to fuss with the grantUriPermission() loop, as addFlags() has always granted permission to the “data” aspect of the Intent (our Uri).

We wrap the startActivity() call in another try/catch block, watching

for ActivityNotFoundException. Not all devices will have an image viewing

app that supports a content Uri. For example, stock Android 5.1 (e.g., on a

Nexus 4) will not have such an image viewer.

There are several downsides to this approach.

First, you have no control over the camera app itself. You do not even really know what app it is. You cannot dictate certain features that you would like (e.g., resolution, color effects). You simply blindly ask for a photo and get the result.

Also, since you do not know what the camera app is or behaves like, you cannot document that portion of your application’s flow very well. You can say things like “at this point, you can take a picture using your chosen camera app”, but that is about as specific as you can get.

As noted above, it is possible that your app’s process will be terminated while your

app is not in the foreground, because the user is taking a picture

using the third-party camera app. Whether or not this happens depends

on how much system RAM the camera app uses and what else is all going

on with the device. But, it does happen. Your app should be able

to cope with such things, just as we are doing with the saved instance

state Bundle. However, many developers do not expect their

process to be replaced between a call to startActivityForResult()

and the corresponding onActivityResult() callback.

Not every camera app will support a content Uri for the EXTRA_OUTPUT

value. In fact, Google’s own camera app did not do this until the summer of 2016.

With ACTION_VIEW, since the content Uri is in the “data” facet of the

Intent, the <intent-filter> elements in the manifest will ensure that

our Intent only goes to an activity that advertises support for content.

However, there is no equivalent of this for Uri values in extras. And so

we will launch the camera app, which then may crash because it does not like

our Uri, and our app does not really find out about the problem, other than

not getting RESULT_OK in onActivityResult().

Finally, some camera apps misbehave, returning odd results, such as a thumbnail-sized image rather than a max-resolution image. There is little you can do about this.

When you take a picture using an Android device, whether using ACTION_IMAGE_CAPTURE or working with the camera APIs directly, you may find that your picture turns out strange. For example, you might take a picture in portrait mode, then find that some image viewers will show you a portrait picture, while others show you a landscape picture with its contents rotated.

That is due to the way Android camera hardware encodes the JPEG images that it takes. The orientation that you take the picture in may not be the orientation of the result.

JPEG images can have EXIF tags. These represent metadata about the image itself. For example, if you hear that an image has been “geotagged”, that means that the image has EXIF tags that contain the latitude and longitude of where the picture was taken.

These tags are contained in the JPEG file but are in a separate section from the actual image data itself. Tools can read in the EXIF tags and use them for additional information for the user (e.g., an image viewer with an integrated map to show where the picture was taken).

One EXIF tag is the “orientation” tag. In effect, this tag is a message from whatever created the image (e.g., camera hardware) to whatever is showing the camera image, saying “could you please rotate this image for me? #kthxbye”.

In other words, the camera hardware is being lazy.

A lot of camera hardware is designed to take landscape images, particularly when using a rear-facing camera, as that is the traditional way that cameras were held by default, going back decades. In an ideal world, if the user took a portrait photo, the camera hardware would take a portrait picture. Or, at least, the camera hardware would take a landscape picture, but then rotate the image to be portrait before delivering the JPEG to whatever app requested the image.

Some camera hardware does just that.

However, other camera hardware leaves the image as a landscape image, regardless of how the device was held when the image was taken. Instead, the camera hardware will set the orientation tag to indicate how image viewers should rotate the image, to reflect what the image really should look like.

Of course, this would not be a problem if all image viewers paid attention

to the orientation tag. However, many do not, particularly on Android…

because BitmapFactory ignores all EXIF tags. As a result, you get

the unmodified image, instead of one rotated as the camera hardware requested.

And so, if you blindly load the image, it will show up without taking the orientation tag into account.
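To illustrate the point that EXIF tags live in their own section of the JPEG, apart from the image data, here is a rough plain-Java sketch that walks the JPEG segments, finds the EXIF block, and pulls out the orientation tag (tag 0x0112). It is for illustration only: `ExifOrientationSketch` is a hypothetical class, it only looks at the first tag directory, and it ignores less common marker layouts. A real app should use an ExifInterface implementation instead.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

final class ExifOrientationSketch {
  private static final byte[] EXIF_HEADER =
    "Exif\0\0".getBytes(StandardCharsets.US_ASCII);
  private static final int TAG_ORIENTATION = 0x0112;

  // Returns the orientation tag value, or -1 if none is found
  static int readOrientation(byte[] jpeg) {
    ByteBuffer buf = ByteBuffer.wrap(jpeg); // big-endian, as JPEG markers are

    if (buf.remaining() < 2 || (buf.getShort() & 0xFFFF) != 0xFFD8) {
      return -1; // no start-of-image marker, so not a JPEG
    }

    while (buf.remaining() >= 4) {
      int marker = buf.getShort() & 0xFFFF;
      int payload = (buf.getShort() & 0xFFFF) - 2; // length counts its own 2 bytes

      if (marker == 0xFFDA || payload < 0 || payload > buf.remaining()) {
        return -1; // reached the image data, or the segment is malformed
      }

      int start = buf.position();

      if (marker == 0xFFE1 && hasExifHeader(jpeg, start, payload)) {
        return readFromTiff(jpeg, start + EXIF_HEADER.length,
          payload - EXIF_HEADER.length);
      }

      buf.position(start + payload); // skip ahead to the next segment
    }

    return -1;
  }

  private static boolean hasExifHeader(byte[] data, int start, int payload) {
    if (payload < EXIF_HEADER.length) return false;

    for (int i = 0; i < EXIF_HEADER.length; i++) {
      if (data[start + i] != EXIF_HEADER[i]) return false;
    }

    return true;
  }

  // The EXIF payload is a small TIFF structure: a byte-order mark, an
  // offset to the first directory, then 12-byte tag entries
  private static int readFromTiff(byte[] data, int offset, int size) {
    if (size < 8) return -1;

    ByteBuffer tiff = ByteBuffer.wrap(data, offset, size).slice();

    if (tiff.get(0) == 'I' && tiff.get(1) == 'I') {
      tiff.order(ByteOrder.LITTLE_ENDIAN);
    }
    else if (tiff.get(0) != 'M' || tiff.get(1) != 'M') {
      return -1; // unknown byte order
    }

    int ifd = tiff.getInt(4);

    if (ifd < 8 || ifd > size - 2) return -1;

    int count = tiff.getShort(ifd) & 0xFFFF;

    for (int i = 0; i < count; i++) {
      int entry = ifd + 2 + 12 * i;

      if (entry + 12 > size) return -1;

      if ((tiff.getShort(entry) & 0xFFFF) == TAG_ORIENTATION) {
        return tiff.getShort(entry + 8) & 0xFFFF; // value sits inline
      }
    }

    return -1;
  }
}
```

Note that nothing in this code touches the pixels. That is why an image decoder can hand you a perfectly valid image while ignoring the orientation request entirely.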

If you want to take the orientation tag into account, you need to find

out the value of that tag. BitmapFactory will not help you here. However,

ExifInterface can… though which ExifInterface you use is important.

The android.media.ExifInterface class that Android used for years has security flaws. Android 7.0+ devices should all ship with a patched version. Some Android 6.0 devices might get a patch. Everything else will go unpatched, and if your app uses android.media.ExifInterface, your app may expose the user to security risks.

Fortunately, alternative ExifInterface implementations exist, that

not only avoid the security flaw, but also support InputStream

as well as File. That is only available on android.media.ExifInterface

starting with Android 7.0; older versions only supported a File, which

is awkward in modern Android development.

The simplest solution for most developers would be to use the exifinterface artifact from the Android Support Library:

dependencies {
  compile 'com.android.support:exifinterface:25.1.0'
}

This has the same API as does the Android 7.0+ edition of ExifInterface,

including InputStream support.

However, for whatever reason, the API that Google has elected to expose

through both of their supplied ExifInterface classes pales in comparison

to the EXIF classes that they have elsewhere,

such as the AOSP editions of the camera and gallery apps.

A version of this code is available

as an artifact

published by Alessandro Crugnola and is demonstrated in the next section.

If your EXIF needs are fairly limited, using the Google-supplied

ExifInterface classes is simple. But even for something as seemingly

simple as rotating an image, you need a more robust EXIF API.

The Camera/EXIFRotater

sample project contains three images in assets/, culled from

this GitHub repository

that supplements this article on the problems with EXIF orientation handling.

Specifically, we have images with orientation tag values of 3, 6, and

8, which are the most common ones that you will encounter.

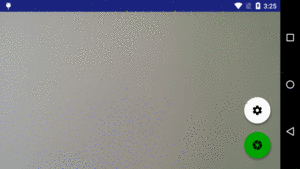

The objective of this app is to show one of those images in its original form and rotated in accordance with the EXIF orientation tag:

Figure 801: EXIF Rotater Sample App, with Original and Rotated Images

However, there are two product flavors in this project, reflecting

two different ways of getting that visual output: rotating the

ImageView and rotating the image itself. These are controlled via

a ROTATE_BITMAP value added to BuildConfig:

productFlavors {
  image {
    buildConfigField "boolean", "ROTATE_BITMAP", "false"
  }

  matrix {
    buildConfigField "boolean", "ROTATE_BITMAP", "true"
  }
}

The MainActivity kicks off an ImageLoadThread in onCreate().

That thread is responsible for loading (and, if appropriate, rotating)

the image. When that is done, the thread will post an ImageLoadedEvent

to an event bus (using greenrobot’s EventBus) to have the UI display

the image (and, if needed, rotate a copy of it):

private static class ImageLoadThread extends Thread {
  private final Context ctxt;

  ImageLoadThread(Context ctxt) {
    this.ctxt=ctxt.getApplicationContext();
  }

  @Override
  public void run() {
    AssetManager assets=ctxt.getAssets();

    try {
      InputStream is=assets.open(ASSET_NAME);
      ExifInterface exif=new ExifInterface();

      exif.readExif(is, ExifInterface.Options.OPTION_ALL);

      ExifTag tag=exif.getTag(ExifInterface.TAG_ORIENTATION);
      int orientation=(tag==null ? -1 : tag.getValueAsInt(-1));

      if (orientation==8 || orientation==3 || orientation==6) {
        is=assets.open(ASSET_NAME);

        Bitmap original=BitmapFactory.decodeStream(is);
        Bitmap rotated=null;

        if (BuildConfig.ROTATE_BITMAP) {
          rotated=rotateViaMatrix(original, orientation);
          exif.setTagValue(ExifInterface.TAG_ORIENTATION, 1);
          exif.removeCompressedThumbnail();

          File output=
            new File(ctxt.getExternalFilesDir(null), "rotated.jpg");

          exif.writeExif(rotated, output.getAbsolutePath(), 100);

          MediaScannerConnection.scanFile(ctxt,
            new String[]{output.getAbsolutePath()}, null, null);
        }

        EventBus
          .getDefault()
          .postSticky(new ImageLoadedEvent(original, rotated, orientation));
      }
    }
    catch (Exception e) {
      Log.e(getClass().getSimpleName(), "Exception processing image", e);
    }
  }
}

We first get an InputStream on the particular image from assets/

that we are to show (hard-coded as the ASSET_NAME constant).

We then create an ExifInterface, using the richer implementation from

the aforementioned artifact. This ExifInterface has a few versions

of readExif(), including one that can take our InputStream as input.

We can then get the orientation tag value via calls to getTag()

(to get an ExifTag for TAG_ORIENTATION), then getValueAsInt().

The latter method retrieves an integer tag value, with a supplied default

value if the tag exists but does not have an integer value.

However, it is also possible that the tag does not exist. In fact, many

JPEG images will lack this header, implying that the image is already

in the correct orientation. So, we use the ternary operator and

the default value to getValueAsInt() to get either the actual orientation

tag numeric value or -1 if, for any reason, we cannot get that value.

If the orientation is 3, 6, or 8, we will want to show the image.

So, we use BitmapFactory to load the image, via decodeStream().

If ROTATE_BITMAP is true, we do five things:

- Rotate the Bitmap itself using a Matrix, in the rotateViaMatrix() method:

static private Bitmap rotateViaMatrix(Bitmap original, int orientation) {
  Matrix matrix=new Matrix();

  matrix.setRotate(degreesForRotation(orientation));

  return(Bitmap.createBitmap(original, 0, 0, original.getWidth(),
    original.getHeight(), matrix, true));
}

- Set the orientation tag to 1, via setTagValue(), as the rotated image no longer needs further rotation
- Remove the thumbnail from the EXIF data, via removeCompressedThumbnail()
- Write the rotated Bitmap to a file on external storage, so we have a JPEG showing the rotated results yet including all of the original EXIF tags (excluding the orientation tag and thumbnail)
- Use MediaScannerConnection to scan this newly-created file, so it shows up in file managers, both on-device and on-desktop

If ROTATE_BITMAP is false, we instead handle the rotation in our onImageLoaded() method that is called when the ImageLoadedEvent is posted:

@Subscribe(sticky=true, threadMode=ThreadMode.MAIN)
public void onImageLoaded(ImageLoadedEvent event) {
  original.setImageBitmap(event.original);

  if (BuildConfig.ROTATE_BITMAP) {
    oriented.setImageBitmap(event.rotated);
  }
  else {
    oriented.setImageBitmap(event.original);
    oriented.setRotation(degreesForRotation(event.orientation));
  }
}

Rather than show the rotated image in the lower ImageView, we show

the original image, then rotate the ImageView.
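Both rotateViaMatrix() and onImageLoaded() rely on a degreesForRotation() helper that is not shown here. A minimal sketch of what such a helper might look like follows, assuming the standard meanings of the EXIF orientation values (3 is upside down, 6 needs a 90-degree clockwise turn, 8 needs a 270-degree clockwise turn) and that positive angles mean clockwise. The class name `OrientationDegreesSketch`, the `rotatedSize()` helper, and the 0 default are choices made for this sketch, not the sample's code.

```java
final class OrientationDegreesSketch {
  // Clockwise degrees needed to show the image upright, for the three
  // rotation-only orientation values covered in this section
  static int degreesForRotation(int orientation) {
    switch (orientation) {
      case 3:
        return 180;
      case 6:
        return 90;
      case 8:
        return 270;
      default:
        return 0; // no tag, or a value that this sketch does not handle
    }
  }

  // Quarter turns swap the width and height of the result
  static int[] rotatedSize(int width, int height, int orientation) {
    int degrees = degreesForRotation(orientation);

    if (degrees == 90 || degrees == 270) {
      return new int[] {height, width};
    }

    return new int[] {width, height};
  }
}
```

The size swap matters mostly when you rotate the Bitmap itself: a 4000x3000 landscape image with an orientation of 6 becomes a 3000x4000 portrait image, held in memory alongside the original until one of them is released.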

Which of these two approaches — rotate the ImageView or rotate the

image — is appropriate for you depends on your app. If all you need to

do is show the image properly to the user, rotating the ImageView

should be less memory-intensive. If, on the other hand, you need to save

the corrected image somewhere for later use, you will need to rotate

the image itself to make that correction.

Some devices have buggy firmware, where they do not rotate the image themselves nor set the orientation tag in the image. Instead, they just ignore the whole issue. For these devices, we have no way of distinguishing between “images that need to be rotated, but we do not know the orientation” and “images that are fine and do not need to be rotated”.

Your best option is to let the user manually request that the image be rotated (e.g., action bar “rotate” item).

If your objective is to scan a barcode, it is much simpler for you to integrate Barcode Scanner into your app than to roll it yourself.

Barcode Scanner, one of the most popular Android apps of all time, can scan a wide range of 1D and 2D barcode types. Its developers offer an integration library that you can add to your app to initiate a scan and get the results. The library will even lead the user to the Play Store to install Barcode Scanner if they do not already have the app.

One limitation is that while the ZXing team (the authors and maintainers of Barcode Scanner) makes the integration library available, they only do so in source form.

The sample project, Camera/ZXing, has a UI dominated by a “Scan!” button. Clicking the button invokes a doScan() method in our sample activity:

public void doScan(View v) {
  (new IntentIntegrator(this)).initiateScan();
}

This passes control to Barcode Scanner by means of the integration JAR and

the IntentIntegrator class. initiateScan() will validate that Barcode Scanner is

installed, then will start up the camera and scan for a barcode.

Once Barcode Scanner detects a barcode and decodes it, the activity invoked by

initiateScan() finishes, and control returns to you in onActivityResult() (as the

Barcode Scanner scanning activity was invoked via startActivityForResult()). There,

you can once again use IntentIntegrator to find out details of the scan, notably

the type of barcode and the encoded contents:

public void onActivityResult(int request, int result, Intent i) {
  IntentResult scan=IntentIntegrator.parseActivityResult(request,
                                                         result,
                                                         i);

  if (scan!=null) {
    format.setText(scan.getFormatName());
    contents.setText(scan.getContents());
  }
}

To use IntentIntegrator and IntentResult, the sample project has two

modules: the app/ module for the app, and a zxing/ module containing

those two classes (and a rump AndroidManifest.xml to make the build tools

happy). The app/ module depends upon the zxing module via

a compile project(':zxing') dependency directive.

Some notes:

- You should include a <uses-feature> element declaring that you need a camera, if your app cannot function without barcodes

Just as ACTION_IMAGE_CAPTURE can be used to have a third-party app supply you with still images, there is an ACTION_VIDEO_CAPTURE on MediaStore that can be used as an Intent action for asking a third-party app to capture a video for you. As with ACTION_IMAGE_CAPTURE, you use startActivityForResult() with ACTION_VIDEO_CAPTURE to find out when the video has been recorded.

There are two extras of note for ACTION_VIDEO_CAPTURE:

- MediaStore.EXTRA_OUTPUT, which indicates where the video should be written, and
- MediaStore.EXTRA_VIDEO_QUALITY, which should be an integer, either 0 for low quality/low size videos or 1 for high quality

If you elect to skip EXTRA_OUTPUT, the video will be written to the default directory for videos on the device (typically a “Movies” directory in the root of external storage), and the Uri you receive on the Intent in onActivityResult() will point to this file.

The impacts of skipping EXTRA_VIDEO_QUALITY are undocumented.

The

Media/VideoRecordIntent

sample project is a near-clone of the Camera/FileProvider sample from earlier in

this chapter. Instead of requesting a third-party app take a still image, though,

this sample requests that a third-party app record a video:

package com.commonsware.android.videorecord;

import android.app.Activity;
import android.content.Intent;
import android.content.pm.PackageManager;
import android.content.pm.ResolveInfo;
import android.net.Uri;
import android.os.Build;
import android.os.Bundle;
import android.provider.MediaStore;
import android.support.v4.content.FileProvider;
import java.io.File;
import java.util.List;

public class MainActivity extends Activity {
  private static final String EXTRA_FILENAME=
    BuildConfig.APPLICATION_ID+".EXTRA_FILENAME";
  private static final String AUTHORITY=
    BuildConfig.APPLICATION_ID+".provider";
  private static final String VIDEOS="videos";
  private static final String FILENAME="sample.mp4";
  private static final int REQUEST_ID=1337;
  private File output=null;
  private Uri outputUri=null;

  @Override
  public void onCreate(Bundle savedInstanceState) {
    super.onCreate(savedInstanceState);

    if (savedInstanceState==null) {
      output=new File(new File(getFilesDir(), VIDEOS), FILENAME);

      if (output.exists()) {
        output.delete();
      }
      else {
        output.getParentFile().mkdirs();
      }
    }
    else {
      output=(File)savedInstanceState.getSerializable(EXTRA_FILENAME);
    }

    outputUri=FileProvider.getUriForFile(this, AUTHORITY, output);

    if (savedInstanceState==null) {
      Intent i=new Intent(MediaStore.ACTION_VIDEO_CAPTURE);

      i.putExtra(MediaStore.EXTRA_OUTPUT, outputUri);
      i.putExtra(MediaStore.EXTRA_VIDEO_QUALITY, 1);

      if (Build.VERSION.SDK_INT>=Build.VERSION_CODES.LOLLIPOP) {
        i.addFlags(Intent.FLAG_GRANT_WRITE_URI_PERMISSION |
          Intent.FLAG_GRANT_READ_URI_PERMISSION);
      }
      else {
        List<ResolveInfo> resInfoList=
          getPackageManager()
            .queryIntentActivities(i, PackageManager.MATCH_DEFAULT_ONLY);

        for (ResolveInfo resolveInfo : resInfoList) {
          String packageName = resolveInfo.activityInfo.packageName;

          grantUriPermission(packageName, outputUri,
            Intent.FLAG_GRANT_WRITE_URI_PERMISSION |
              Intent.FLAG_GRANT_READ_URI_PERMISSION);
        }
      }

      startActivityForResult(i, REQUEST_ID);
    }
  }

  @Override
  protected void onSaveInstanceState(Bundle outState) {
    super.onSaveInstanceState(outState);

    outState.putSerializable(EXTRA_FILENAME, output);
  }

  @Override
  protected void onActivityResult(int requestCode, int resultCode,
                                  Intent data) {
    if (requestCode==REQUEST_ID && resultCode==RESULT_OK) {
      Intent view=
        new Intent(Intent.ACTION_VIEW)
          .setDataAndType(outputUri, "video/mp4")
          .addFlags(Intent.FLAG_GRANT_READ_URI_PERMISSION);

      startActivity(view);
      finish();
    }
  }
}

onCreate() of MainActivity starts by setting up a File object pointing to a sample.mp4 file in internal storage. If the file already exists, onCreate() deletes it; otherwise it ensures that the parent directory exists. We then go through much of the same headache that we did in the ACTION_IMAGE_CAPTURE scenario, creating a Uri for our FileProvider that points to our designated File and ensuring that the video-recording app has read/write access to our Uri, before finally calling startActivityForResult().

The call to startActivityForResult() will trigger the third-party app to record

the video. When control returns to MainActivity, onActivityResult()

creates an ACTION_VIEW Intent for the same Uri, then calls startActivity()

to request that some app play back the video.

And, as before, we hold onto the File object via the saved instance

state Bundle, and we only record the video if there is no such

saved instance state Bundle, in case there is a configuration change

causing our activity to be destroyed and recreated.

There is only one problem: this app is less likely to work on your device than did the ACTION_IMAGE_CAPTURE sample.

Camera apps need to be able to support content Uri values for EXTRA_OUTPUT

for both still images and video. However,

Google did not support this in their own camera app,

decreasing the likelihood that anyone else supports it.

You can elect to use a file Uri, pointing to a location on external storage. However, that will require you to keep your targetSdkVersion at 23 or lower. Once you go above that, file Uri values are banned in Intent objects on devices running Android 7.0 or higher.
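The rule described above amounts to a two-part check, which can be sketched in plain Java. This is a simplified model of the platform's default behavior, not framework code: the class and method names are hypothetical, and the constant 24 stands in for Build.VERSION_CODES.N so that the sketch compiles off-device. In a real app, the two inputs would come from getApplicationInfo().targetSdkVersion and Build.VERSION.SDK_INT.

```java
// Hypothetical helper modeling when a file Uri may still be placed in an Intent.
final class FileUriPolicy {
  // Stand-in for Build.VERSION_CODES.N (Android 7.0), so this compiles off-device.
  static final int API_N = 24;

  private FileUriPolicy() {}

  // The ban applies only when the app targets API 24+ AND the device
  // itself runs Android 7.0+; in every other combination, a file Uri
  // is still accepted.
  static boolean canUseFileUri(int targetSdkVersion, int deviceSdkInt) {
    return targetSdkVersion < API_N || deviceSdkInt < API_N;
  }
}
```

An app could consult a check like this to decide whether to fall back to a file Uri on external storage or stick with a FileProvider content Uri.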

CameraActivity Of Your Own

Relying upon third-party applications for taking pictures does introduce some challenges:

- Some camera apps handle ACTION_IMAGE_CAPTURE and ACTION_VIDEO_CAPTURE well… and others do not. Some might only ever give you a thumbnail, or some might not support all valid Uri values for writing out the output, and so on.
- BitmapFactory.

The alternative to relying upon a third-party app is to implement camera functionality within your own app. For that, you have three major options:

- The android.hardware.Camera API, added to Android way back in API Level 1, but marked as deprecated in API Level 21
- The android.hardware.camera2 API, added to Android in API Level 21 as a replacement for android.hardware.Camera, and therefore only useful on its own if your minSdkVersion is 21 or higher
- A third-party library that wraps one or both of those APIs

The author of this book has written two such libraries. One works but has a lot of compatibility issues.

Plus, that library relied upon the android.hardware.Camera API, and

device manufacturers may not test that API quite as much in the future,

given that it is now deprecated.

The replacement library is CWAC-Cam2.

The API for this library is designed to generally mimic ACTION_IMAGE_CAPTURE and ACTION_VIDEO_CAPTURE, making it easier for you to switch to this library, or even to offer support for both third-party camera apps (via ACTION_IMAGE_CAPTURE and ACTION_VIDEO_CAPTURE) and your own built-in camera support.

This section outlines how to use CWAC-Cam2. Note, though, that this library is very young and under active development, so there may be changes to this API that are newer than the prose in this section. Be sure to read the project documentation as well to confirm what is and is not supported.

The recipe for adding CWAC-Cam2 to your Android Studio project is

similar to the recipe used by other CWAC libraries: add the CWAC

repository, then add the artifact itself as a dependency. That involves

adding the following snippet to your module’s build.gradle file:

    repositories {
        maven {
            url "https://repo.commonsware.com.s3.amazonaws.com"
        }
    }

    dependencies {
        compile 'com.commonsware.cwac:cam2:0.1.+'
    }

If HTTPS is unavailable to you, you can downgrade the URL to HTTP.

The CameraActivity should be added to your manifest automatically, courtesy

of Gradle for Android’s manifest merger process.

To take still images, you create an Intent to launch the CameraActivity

and implement onActivityResult(), just as you would do with

ACTION_IMAGE_CAPTURE. However, CameraActivity provides an IntentBuilder

that makes it a bit easier to assemble the Intent with the features

that you want, as CameraActivity supports much more than the limited

roster of extras documented for ACTION_IMAGE_CAPTURE.

To create the Intent to pass to startActivityForResult() and take

the picture, create an instance of CameraActivity.IntentBuilder,

call zero or more configuration methods to describe the picture

that you want to take, then call build() to build the Intent.

By default, the Intent created by IntentBuilder will give you

a thumbnail version of the image. If you want to get a full-size

image written to some file, call to() on the IntentBuilder,

supplying a File or a Uri to write to. Note that since this activity

is in your app, you should be able to write the image to internal

storage if you so choose.

In addition:

- If you want MediaStore to index the newly-taken picture, call updateMediaStore() on the IntentBuilder.
- Call skipConfirm() on the IntentBuilder to skip the confirmation screen
- Call facing(CameraSelectionCriteria.Facing.FRONT) to start with the front-facing camera. Note that the activity will ignore your requested Facing value if there is no such camera.

So, for example, you could have the following code somewhere in one of your activities, to allow the user to take a picture:

    Intent i=new CameraActivity.IntentBuilder(this)
        .facing(CameraSelectionCriteria.Facing.FRONT)
        .to(new File(getFilesDir(), "picture.jpg"))
        .skipConfirm()
        .build();

    startActivityForResult(i, REQUEST_PICTURE);

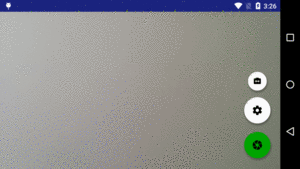

When the CameraActivity starts, the user is greeted with a large preview,

with a pair of floating buttons over the bottom right side:

Figure 802: CWAC-Cam2 CameraActivity

The green button will take a picture. The “settings” button above it is a floating action menu — when tapped, the menu exposes other smaller buttons for specific actions, such as switching between the rear-facing and front-facing cameras (where available):

Figure 803: CWAC-Cam2 CameraActivity, Showing Camera Switch Button

Tapping the green button takes the picture and returns control to your activity.

Handling the results of the startActivityForResult() call works

much like that for ACTION_IMAGE_CAPTURE. If the request code passed

to onActivityResult() is the one you supplied to the corresponding

startActivityForResult() call (e.g., REQUEST_PICTURE), check

the result code.

If the result code is Activity.RESULT_CANCELED, that means that the

user did not take a picture. It could be that the device does not

have a camera (use <uses-feature> elements to better control this)

or that the user declined to take a picture and pressed BACK to exit

the CameraActivity.

If the result code is Activity.RESULT_OK, and you did not call to() on the IntentBuilder, call data.getParcelableExtra("data") to get the thumbnail Bitmap of the picture taken by the user.

If the result code is Activity.RESULT_OK, and you did call to(), your image should be written to the location that you designated in that to() call. For convenience, this same value is returned in the Intent handed to onActivityResult(): call getData() on that Intent to get your Uri value.
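The decision tree described above can be sketched as a small, framework-free Java class, which keeps the branching logic testable off-device. Treat this as an illustration rather than part of CWAC-Cam2: the class, enum, and method names are hypothetical, REQUEST_PICTURE is an arbitrary request code, and the two result constants simply mirror the values of Activity.RESULT_OK and Activity.RESULT_CANCELED.

```java
// Hypothetical sketch: classify what onActivityResult() received from CameraActivity.
final class PictureResultSketch {
  // Mirror android.app.Activity.RESULT_OK and RESULT_CANCELED.
  static final int RESULT_OK = -1;
  static final int RESULT_CANCELED = 0;
  // Arbitrary request code, assumed to match the startActivityForResult() call.
  static final int REQUEST_PICTURE = 1337;

  enum Outcome { NOT_OURS, CANCELED, THUMBNAIL_IN_EXTRA, FULL_IMAGE_AT_URI, UNKNOWN }

  private PictureResultSketch() {}

  // calledTo: whether to() was called on the IntentBuilder for this request.
  static Outcome classify(int requestCode, int resultCode, boolean calledTo) {
    if (requestCode != REQUEST_PICTURE) {
      return Outcome.NOT_OURS; // some other startActivityForResult() call
    }
    if (resultCode == RESULT_CANCELED) {
      return Outcome.CANCELED; // no camera, or the user pressed BACK
    }
    if (resultCode == RESULT_OK) {
      // With to(): read the Uri via data.getData().
      // Without to(): read the Bitmap via data.getParcelableExtra("data").
      return calledTo ? Outcome.FULL_IMAGE_AT_URI : Outcome.THUMBNAIL_IN_EXTRA;
    }
    return Outcome.UNKNOWN;
  }
}
```

Inside a real onActivityResult(), you would switch on the returned Outcome and make the corresponding call on the data Intent.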

As seen earlier in this chapter, MediaStore offers ACTION_VIDEO_CAPTURE, as the video counterpart to ACTION_IMAGE_CAPTURE. CWAC-Cam2 also supports video capture, via the VideoRecorderActivity as a counterpart to the CameraActivity. The basic flow is the same: build the Intent, call startActivityForResult(), and deal with the video when the recording is complete.

VideoRecorderActivity has its own IntentBuilder that supports

many of the same methods as does CameraActivity.IntentBuilder, including

facing() and updateMediaStore(). It also supports the version

of the to() method that takes a File, but not one that takes a Uri,

due to limitations in the underlying video recording code. Note that to()

is required for VideoRecorderActivity, as videos are always written

to files.

It also offers a few new builder-style methods, including:

- quality(), into which you can pass Quality.HIGH or Quality.LOW. Quality.HIGH will aim to give you the best possible resolution, while Quality.LOW will aim to give you something low-resolution, suitable for stuff like MMS messages.
- sizeLimit(), which will aim to cap the video size at around the supplied size in bytes
- durationLimit(), which will aim to cap the video duration at around the supplied duration in seconds

So, you could have:

    Intent i=new VideoRecorderActivity.IntentBuilder(this)
        .facing(CameraSelectionCriteria.Facing.FRONT)
        .to(new File(getFilesDir(), "test.mp4"))
        .quality(VideoRecorderActivity.Quality.HIGH)
        .build();

    startActivityForResult(i, REQUEST_VIDEO);

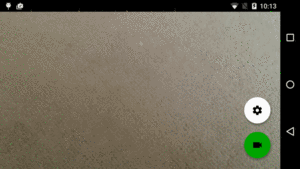

The VideoRecorderActivity UI is very similar to the CameraActivity

UI, with a pair of FABs:

Figure 804: CWAC-Cam2 VideoRecorderActivity

However, the main FAB is green and shows a video camera icon. Tapping

that begins recording, and the FAB switches to a red background with a

stop icon. Tapping the FAB again stops recording and control returns

to whatever activity had started the VideoRecorderActivity.

As with CameraActivity, handling the results of the startActivityForResult() call works

much like that for ACTION_VIDEO_CAPTURE. If the request code passed

to onActivityResult() is the one you supplied to the corresponding

startActivityForResult() call (e.g., REQUEST_VIDEO), check

the result code.

If the result code is Activity.RESULT_CANCELED, that means that the user did not record a video, either because the device does not have a camera or the user pressed BACK without recording a video.

If the result code is Activity.RESULT_OK, your video should be written to the location that you designated in your to() call on the builder. For convenience, this same value is returned in the Intent handed to onActivityResult(): call getData() on that Intent to get your Uri value.

Of course, you can bypass these third-party apps and libraries, electing instead to work directly with the camera if you so choose. This is very painful, as will be illustrated in the next chapter.