Whereas perception is very much concerned with making sense of the external environment, attentional processes lie at the interface between the external environment and our internal states (goals, expectations, and so on). The extent to which attention is driven by the environment (our attention being grabbed, so-called bottom-up) or our goals (our attention being sustained, so-called top-down) can vary according to the circumstances. In most cases both forces are in operation and attention can be construed as a cascade of bottom-up and top-down influences in which selection takes place.

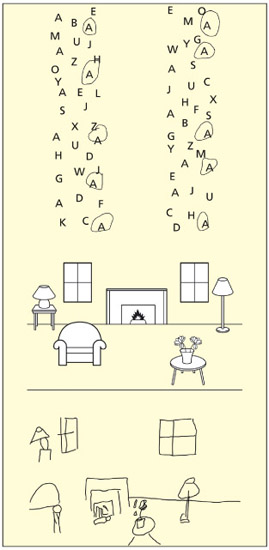

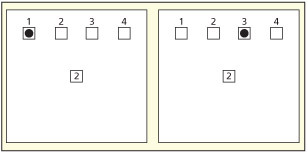

In change detection tasks, two different images alternate quickly (with a short blank in between). Participants often appear “blind” to changes in the image (here, the height of the wall) and this is linked to limitations in attentional capacity.

In terms of visual attention, one of the most pervasive metaphors is to think about attention in terms of a spotlight. The spotlight may highlight a particular location in space (e.g. if that location contains a salient object). It may move from one location to another (e.g. when searching) and it may even zoom in or out (La Berge, 1983). The locus of the attentional spotlight need not necessarily be the same as the locus of eye fixation. It is possible, for example, to look straight ahead while focusing attention to the left or right when metaphorically “looking out of the corner of one’s eyes.” However, there is a natural tendency for attention and eye fixation to go together because visual acuity (discriminating fine detail) is greatest at the point of fixation. Moving the focus of attention is termed orienting and is conventionally divided into covert orienting (moving attention without moving the eyes or head) and overt orienting (moving the eyes or head along with the focus of attention). It is important not to take the spotlight metaphor too literally. For example, there is evidence to suggest that attention can be split between two non-adjacent locations without incorporating the middle locations (Awh & Pashler, 2000). The most useful aspects of the spotlight metaphor are to emphasize the notion of limited capacity (not everything is illuminated), and to emphasize the typically spatial characteristics of attention. However, there are non-spatial attentional processes too as described later.

Attention has been likened to a spotlight that highlights certain information or a bottleneck in information processing. But how do we decide which information to select and which to ignore?

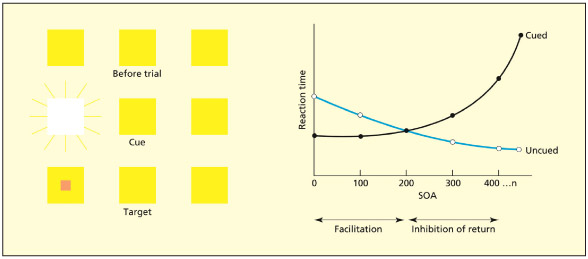

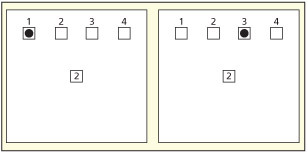

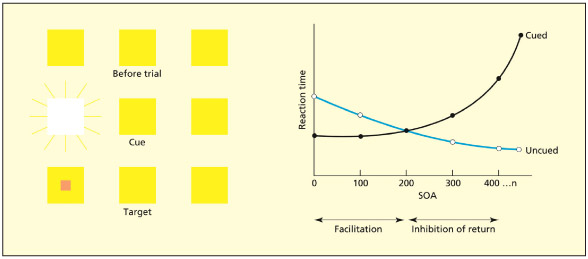

Posner described a classic study to illustrate that attention operates on a spatial basis (Posner, 1980; Posner & Cohen, 1984). The participants were presented with three boxes on the screen in different positions: left, central, and right. The task of the participant was simply to press a button when they detected a target in one of the boxes. On “catch trials” no target appeared. At a brief interval before the onset of the target, a cue, such as an increase in luminance (a flash), would also appear in one of the locations. The purpose of the cue was to summon attention to that location. On some trials the cue would be in the same box as the target and on others it would not. As such, the cue is completely uninformative with regard to the later position of the target. When the cue precedes the target by up to 150 ms, participants are significantly faster at detecting the target at that location. The cue captures the attentional spotlight and this facilitates subsequent perceptual processing at that location. At longer delays (above 300 ms) the reverse pattern is found: participants are slower at detecting a target in the same location as the cue. This can be explained by assuming that the spotlight initially shifts to the cued location, but if the target does not appear, attention shifts to another location (called “disengagement”). There is a processing cost in terms of reaction time associated with going back to the previously attended location. This is called inhibition of return.
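The timing pattern described above can be summarized in a minimal sketch. The function name, the box labels, and the returned strings are hypothetical. Only the two thresholds (up to 150 ms, above 300 ms) come from the text, which does not say what happens between them.

```python
from typing import Optional

FACILITATION_MAX_SOA_MS = 150   # "up to 150 ms" in the text
INHIBITION_MIN_SOA_MS = 300     # "above 300 ms" in the text


def predicted_cue_effect(cue_box: str, target_box: Optional[str], soa_ms: float) -> str:
    """Return the qualitative reaction-time effect described for one trial.

    cue_box / target_box are hypothetical labels such as "left" or "right";
    target_box is None on a catch trial (no target appears).
    """
    if target_box is None:
        return "catch trial (no response expected)"
    if cue_box != target_box:
        return "baseline (target at an uncued location)"
    if soa_ms <= FACILITATION_MAX_SOA_MS:
        return "facilitation (faster detection)"
    if soa_ms > INHIBITION_MIN_SOA_MS:
        return "inhibition of return (slower detection)"
    # The text gives no prediction for delays between the two thresholds.
    return "not specified in the text"


print(predicted_cue_effect("left", "left", soa_ms=100))
print(predicted_cue_effect("left", "left", soa_ms=500))
```

The sketch only labels trials. It does not model reaction times, which would need parameters that the text does not provide.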

Key Terms

Overt orienting

The movement of attention accompanied by movement of the eyes or body.

Inhibition of return

A slowing of reaction time associated with going back to a previously attended location.

Exogenous orienting

Attention that is externally guided by a stimulus.

Endogenous orienting

Attention that is guided by the goals of the perceiver.

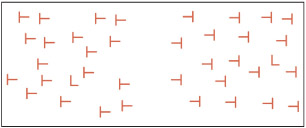

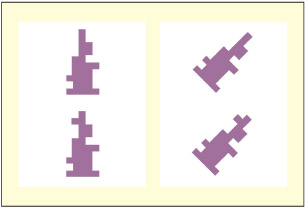

How does the spotlight know where to go? Who controls the spotlight? In the Posner spatial cueing task, the spotlight is attracted by a sudden change in the periphery. That is, attention is externally guided and bottom-up. This is referred to as exogenous orienting. However, it is also possible for attention to be guided, to some degree, by the goals of the perceiver. This is referred to as endogenous orienting. As an example of this, La Berge (1983) presented participants with words and varied the instructions. In one instance, they were asked to attend to the central letter and on another occasion they were asked to attend to the whole word. When attending to the central letter participants were faster at making judgments about that letter but not other letters in the word. In contrast, when asked to attend to the whole word they were faster at making judgments about all the letters. Thus, the attentional focus can be manipulated by the demands of the task (i.e. top-down). Another commonly used paradigm that uses endogenous attention is called visual search (Treisman, 1988). In visual search experiments, participants are asked to detect the presence or absence of a specified target object (e.g. the letter “F”) in an array of other distracting objects (e.g. the letters “E” and “T”). As discussed in more detail later, visual search is a good example of a mix of bottom-up processing (perceptual identification of objects and features) and top-down processing (holding in mind the target and endogenously driven orienting of attention).

Participants initially fixate at the central box. A brief cue then appears as a brightening of one of the peripheral boxes. After a delay (called the “stimulus onset asynchrony,” or SOA), the target then appears in the cued or uncued box. Participants are faster at detecting the target in the cued location if the target appears soon after the cue (facilitation) but are slower if the target appears much later (inhibition).

Key Term

Visual search

A task of detecting the presence or absence of a specified target object in an array of other distracting objects.

Do you see a face or a house? The ability to shift between these percepts is an example of object-based attention.

Examples of non-spatial attention mechanisms include object-based attention and time-based/temporal (not to be confused with temporal lobes) attentional processes. With regard to object-based attention, if two objects (e.g. a house and a face) are transparently superimposed in the same spatial location then participants can still selectively attend to one or the other. This has consequences for neural activity: the attended object is linked to a greater BOLD response in its corresponding brain region, given that extrastriate visual cortex contains regions that respond differently to different stimuli (O’Craven et al., 1999). So attending to a face will activate the fusiform face area, and attending to a house will activate the parahippocampal place area even though both objects are in the same spatial location. It also has consequences for cognition: for instance, the unattended object will be responded to more slowly if it now becomes task-relevant (Tipper, 1985). With regard to Posner-style cueing tasks, research has shown that inhibition of return is partly related to the spatial location itself and partly related to the object that happens to occupy that location (Tipper et al., 1991). If the object moves, then the inhibition can also move with the object rather than remaining entirely at the initial location.

Key Term

Attentional blink

An inability to report a target stimulus if it appears soon after another target stimulus.

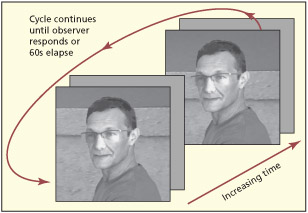

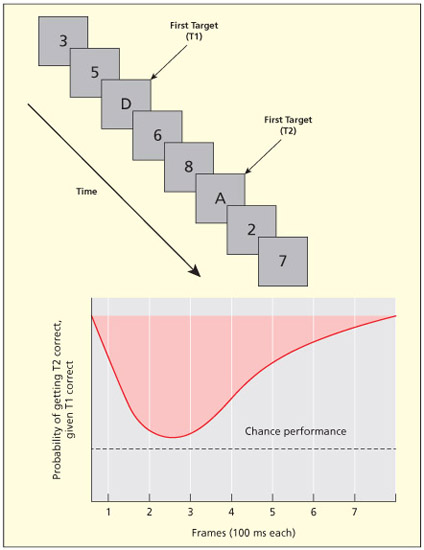

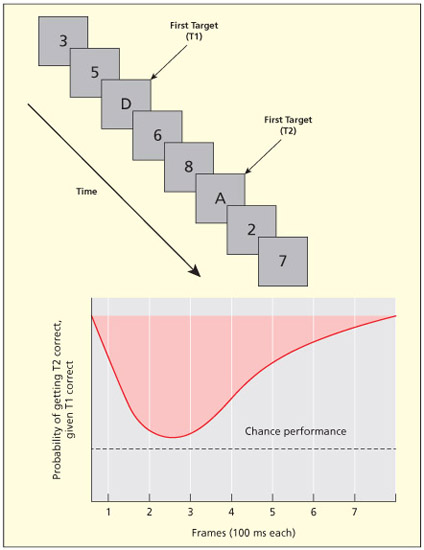

In the attentional blink paradigm, there is a fast presentation of stimuli and participants are asked to report which targets they saw (e.g. reporting letters among digits; “D and A” being the correct answer in this example). Participants fail to report the second target when it appears soon after an initial target. The initial target (T1) may take over our limited attentional capacity leading to an apparent “blindness” of a subsequent target (T2).

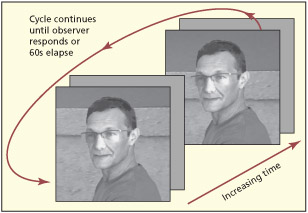

The best example of attention also operating in a temporal domain comes from the attentional blink (Dux & Marois, 2009; Raymond et al., 1992). In the attentional blink paradigm, a series of objects (e.g. letters) are presented in rapid succession (~10 per second) and in the same spatial location. The typical task is to report two targets that may appear anywhere within the stream which are referred to as T1 and T2 (e.g. white letters among black; or letters among digits). What is found is that participants are “blind” to the second target, T2, when it occurs soon after the first target, T1 (typically 2–3 items later). This is believed to reflect attention rather than perception because it is strongly modulated by the task. The effect is found when participants are instructed to attend to the first target but not when instructed to ignore it (Raymond et al., 1992).
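To make the timing concrete, here is a minimal sketch of the lag arithmetic. It assumes exactly 10 items per second (the text says approximately 10) and treats lags of 2 or 3 items as the typical blink window. The function names and the example stream are hypothetical.

```python
ITEMS_PER_SECOND = 10   # assumption: the text says "~10 per second"
BLINK_LAGS = (2, 3)     # "typically 2-3 items later" in the text


def t2_lag(stream, targets):
    """Return (lag in items, lag in ms) between the first two targets."""
    positions = [i for i, item in enumerate(stream) if item in targets]
    if len(positions) < 2:
        raise ValueError("stream must contain at least two targets")
    lag_items = positions[1] - positions[0]
    lag_ms = lag_items * 1000 / ITEMS_PER_SECOND
    return lag_items, lag_ms


def in_typical_blink_window(lag_items):
    """True if T2 follows T1 at a lag where the blink is typically reported."""
    return lag_items in BLINK_LAGS


# Letters among digits: T1 is "D" and T2 is "A".
stream = list("47D2A839")
lag_items, lag_ms = t2_lag(stream, targets={"D", "A"})
print(lag_items, lag_ms, in_typical_blink_window(lag_items))  # 2 200.0 True
```

Under these assumptions each item occupies 100 ms, so a blink at lags of 2 to 3 items corresponds to roughly 200 to 300 ms after T1.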

Key Terms

Lateral intraparietal area (LIP)

Contains neurons that respond to salient stimuli in the environment and are used to plan eye movements.

Saccade

A fast, ballistic movement of the eyes.

This section considers the role of the parietal lobes (and to a lesser degree the frontal lobes) in attention. The frontal lobes are considered in more detail elsewhere with regard to action selection (Chapter 8), working memory (Chapter 9), and executive functions (Chapter 14). The first part of this section considers mechanisms of spatial attention and how this relates to the notion of a “where” pathway. The second part of the section considers hemispheric asymmetries in function considering both spatial and non-spatial attention processes. The final part considers the interplay between perception, attention, and awareness.

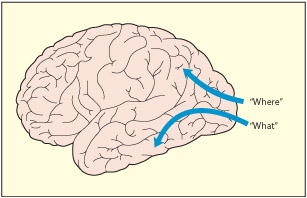

The “where” pathway, salience maps, and orienting of attention

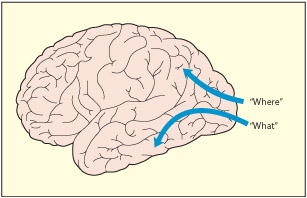

From early visual processing in the occipital cortex, two important pathways can be distinguished that may be specialized for different types of information (Ungerleider & Mishkin, 1982). A ventral route (or “what” pathway) leading into the temporal lobes may be concerned with identifying objects. In contrast, a dorsal route (or “where” pathway) leading into the parietal lobes may be specialized for locating objects in space. The dorsal route also has an important role to play in attention, spatial or otherwise. In addition, it guides action toward objects, and some researchers consider it a “how” pathway as well as a “where” pathway (Goodale & Milner, 1992).

Later stages of visual processing are broadly divided into two routes: a “what” pathway (or ventral stream) is involved in object perception and memory, whereas a “where” pathway (or dorsal stream) is involved in attending to and acting upon objects.

Single-cell recordings from the monkey parietal lobe provide important insights into the neural mechanisms of spatial attention. Bisley and Goldberg (2010) summarize evidence that a region in the posterior parietal lobe, termed LIP (lateral intraparietal area), is involved in attention. This region responds to external sensory stimuli (vision, sound) and is important for eliciting a particular kind of motor response (eye movements, termed saccades). Superficially then, it could be labeled as a sensorimotor association region. However, a closer inspection of its response properties reveals how it may play an important role in attention. First, this region does not respond to most visual stimuli, but rather has a sparse response profile such that it tends to respond to stimuli that are unexpected (e.g. abrupt, unpredictable onsets) or relevant to the task. When searching for a target in an array of objects (e.g. a red triangle), LIP neurons tend to respond more strongly when the target lands in their receptive field than when a distractor (e.g. a blue square) does (Gottlieb et al., 1998). So the response is not related to sensory stimulation per se. Moreover, sudden changes in luminance are a salient stimulus to these neurons (Balan & Gottlieb, 2009), analogous to how luminance changes drive attention in the Posner cueing task. As such, neurons in this region have characteristics associated with both exogenous and endogenous attention. It has been suggested that area LIP contains a salience map of space in which only the locations of the most behaviorally relevant stimuli are encoded (e.g. Itti & Koch, 2001). This is clearly reminiscent of the filter or spotlight metaphor of attention in cognitive models that selects only a subset of information in the environment.

Key Terms

Salience map

A spatial layout that emphasizes the most behaviorally relevant stimuli in the environment.

Remapping

Adjusting one set of spatial coordinates to be aligned with a different coordinate system.

In addition to representing the saliency of visual stimuli, neurons in LIP also respond to the current position of the eye (in fact, their responsiveness depends on the two sources of information being multiplied together). This information can be used to plan a saccade (i.e. overt orienting of attention). There is also evidence that these neurons may support covert orienting. Lesioning LIP in one hemisphere leads to slower visual search in the contralateral (not ipsilateral) visual field even in the absence of saccades (Wardak et al., 2004).
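The multiplicative combination can be illustrated with a toy sketch. The text states only that the two sources of information are multiplied. The Gaussian shape of the eye-position gain, its parameters, and all names below are hypothetical choices made for illustration, not measured properties of LIP neurons.

```python
import math


def eye_position_gain(eye_deg, preferred_eye_deg=10.0, width_deg=20.0):
    """Hypothetical gain in (0, 1] that peaks at a preferred eye position."""
    return math.exp(-((eye_deg - preferred_eye_deg) ** 2) / (2 * width_deg ** 2))


def lip_response(salience, eye_deg):
    """Toy response: stimulus salience scaled by the eye-position gain."""
    return salience * eye_position_gain(eye_deg)


# Same stimulus salience, two different eye positions.
print(lip_response(0.8, eye_deg=10.0))
print(lip_response(0.8, eye_deg=40.0))
# A stimulus with zero salience gives no response at any eye position.
print(lip_response(0.0, eye_deg=10.0))
```

One consequence of multiplying rather than adding is that eye position changes the size of the response to a salient stimulus without producing a response when nothing salient is present.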

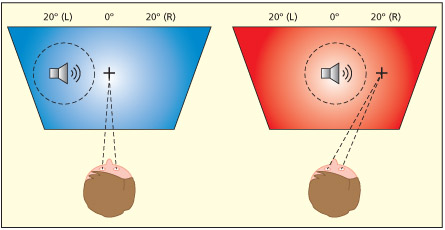

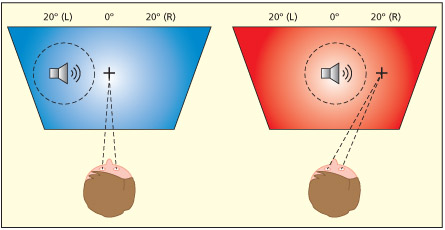

Spatial attention to sounds is also associated with activity in LIP neurons and this can also be used to plan saccades (Stricanne et al., 1996). Thus, this part of the brain is multi-sensory. In order to link sound and vision together on the same salience map it requires the different senses to be spatially aligned or remapped. This is because the location of sound is coded relative to the angle of the head/ears, but the location of vision is coded (at least initially) relative to the angle of the eyes. Some neurons in LIP transform sound locations to be relative to the eyes so they can be used to plan saccades, instead of relative to the head/ears (Stricanne et al., 1996).
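The remapping can be sketched as a one-line change of reference frame. This is a simplification that assumes a single horizontal angle (azimuth), a stationary head, and a sign convention in which negative values are to the left. The function name is hypothetical and the subtraction is an idealization rather than a description of how the neurons compute it.

```python
def head_to_eye_centered(sound_azimuth_head_deg, eye_azimuth_head_deg):
    """Re-express a sound's azimuth relative to the direction of gaze.

    Both inputs are angles relative to the head midline, in degrees.
    Negative values are to the left and positive values are to the right.
    """
    return sound_azimuth_head_deg - eye_azimuth_head_deg


# Sound 20 degrees left of the head midline, eyes looking straight ahead.
print(head_to_eye_centered(-20.0, 0.0))  # -20.0
# Sound straight ahead of the head, eyes looking 20 degrees to the right.
print(head_to_eye_centered(0.0, 20.0))   # -20.0
```

Both cases give the same eye-centered location (20 degrees to the left of fixation), so a neuron tuned to that eye-centered location would respond in both, even though the sound source is in a different place relative to the head.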

An example of an auditory neuron that responds to sounds that have been “remapped” into eye-centered coordinates. These neurons are found in brain regions such as LIP and the superior colliculus. This neuron responds to sounds about 20 degrees to the left of fixation irrespective of whether the sound source itself comes from the left (left figure) or centre of space (right figure). This enables orienting of the eyes to sounds.

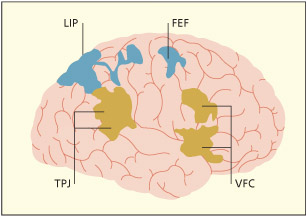

In humans, using fMRI, presenting an arrow (an endogenous cue for spatial orienting) is associated with brief activity in visual cortical regions followed by sustained activity in posterior parietal lobes (including the likely homologue of area LIP) and a frontal region called the frontal eye field, FEF (Corbetta et al., 2000). This activity occurs irrespective of whether the required response is one of covert orienting of attention, a saccade, or a pointing response (Astafiev et al., 2003). That is, it reflects a general orienting of attention. It may also not be spatially specific as a similar region is implicated in orienting attention between spatially superimposed objects (Serences et al., 2004). Bressler et al. (2008) examined the functional connectivity among this network during presentation of a preparatory orienting stimulus (the spoken words “left” or “right”) prior to a visual stimulus and concluded that, in this situation, the directionality of activation was top-down: that is, from frontal regions, to parietal regions, to visual occipital cortex. Of course, in other situations (an exogenous cue) it may operate in reverse.

Key Term

Frontal eye field (FEF)

Part of the frontal lobes responsible for voluntary movement of the eyes.

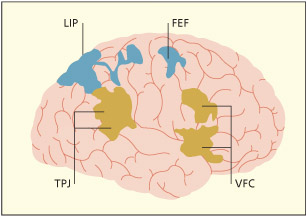

According to Corbetta and Shulman (2002), this is only one of two major attentional networks involving the parietal lobes. They suggest that the dorsal stream should be reconsidered as split into two: a dorso-dorsal branch and a ventro-dorsal branch (for a related proposal see Rizzolatti & Matelli, 2003). They conceptualize the role of the dorso-dorsal stream in attention as one of orienting within a salience map (as described above) and involving the LIP and FEF. By contrast, they regard the more ventro-dorsal branch as a “circuit breaker” that interrupts ongoing cognitive activity to direct attention outside of the current focus of processing. This attentional disengagement mechanism is assumed to involve the temporoparietal region (and ventral prefrontal cortex) and is considered to be more strongly right lateralized. For instance, activity in this region is found when detecting a target (but not when processing a spatial cue) whereas activity in the LIP region shows a strong response to the cue (Corbetta et al., 2000). Activity in the right temporoparietal region is enhanced when detecting an infrequent target particularly if it is presented at an unattended location (Arrington et al., 2000). Downar et al. (2000) found that several frontal areas as well as the temporoparietal junction (TPJ) were activated when participants were monitoring for a stimulus change, independently of whether the change occurred in auditory, visual or tactile stimuli.

Hemispheric differences in parietal lobe contributions to attention

Corbetta and Shulman (2002) have suggested that there are two main attention-related circuits involving the parietal lobes: a dorso-dorsal circuit (involving LIP) that is involved in attentional orienting within a salience map; and a more ventral circuit (involving right TPJ) that diverts attention away from its current focus.

The parietal lobes of the right and left hemispheres represent the full visual field (unlike early parts of visual cortex) but do so in a graded fashion that favors the contralateral side of space (Pouget & Driver, 2000). So the right parietal lobe shows a maximal responsiveness to stimuli on the far left side, a moderate responsiveness to the middle, and a weaker response to the far right side. The left parietal lobe shows the reverse profile. One consequence of this is that damage to the parietal lobes in one hemisphere leads to left-right spatially graded deficits in attention. For instance, damage to the right parietal lobe would lead to fewer attentional resources allocated to the far left side, moderate attentional resources to the midline, and near-normal attentional abilities on the right. This disorder is called hemispatial neglect, in which patients fail to attend to stimuli on the opposite side of space to the lesion. Neglect is normally far more severe following right hemisphere lesions, resulting in failure to attend to the left. This suggests that, in humans, there is likely to be a hemispheric asymmetry such that the right parietal lobe is more specialized for spatial attention than the left. Another possible way of conceptualising this is that the right parietal lobe makes a larger contribution to the construction of a salience map than the left side, resulting in a normal bias for the left side of space to be salient (a phenomenon termed pseudo-neglect, see BOX) and for the left side of space to be, therefore, particularly vulnerable to the effects of brain damage (neglect).
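The graded account can be illustrated with a toy model. Everything numerical here is an assumption made for illustration: the linear gradient, the floor of 0.2 for the weakest response, and the hemisphere weights of 1.2 (right) and 1.0 (left). The text specifies only the direction of each gradient and that the right hemisphere contributes more.

```python
FLOOR = 0.2  # hypothetical: weakest response is reduced, not zero


def hemisphere_response(x, hemisphere):
    """Graded response to horizontal position x in [-1 (far left), +1 (far right)]."""
    if hemisphere == "right":
        return FLOOR + (1 - FLOOR) * (1 - x) / 2   # strongest for far-left stimuli
    if hemisphere == "left":
        return FLOOR + (1 - FLOOR) * (1 + x) / 2   # strongest for far-right stimuli
    raise ValueError("hemisphere must be 'left' or 'right'")


def salience(x, right_weight=1.2, left_weight=1.0):
    """Toy salience: weighted sum of the two hemispheres' graded responses."""
    return (right_weight * hemisphere_response(x, "right")
            + left_weight * hemisphere_response(x, "left"))


positions = [-1.0, 0.0, 1.0]  # far left, midline, far right
print("intact:      ", [round(salience(x), 2) for x in positions])
print("right lesion:", [round(salience(x, right_weight=0.0), 2) for x in positions])
```

With these assumed values the intact model is slightly biased toward the left (as in pseudo-neglect), whereas removing the right-hemisphere contribution leaves a gradient that rises from left to right, with the largest loss on the far left. The sketch is not intended to capture why neglect is more severe after right-hemisphere than left-hemisphere lesions.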

Why Do Actors Make a Hidden Entrance from Stage Right?

The right parietal lobe of humans is generally considered to have a more dominant role in spatial attention than its left-hemisphere equivalent. One consequence of this is that right-hemisphere lesions have severe consequences for spatial attention, particularly for the left space (as in the condition of “neglect”). Another consequence of right-hemisphere spatial dominance is that, in a non-lesioned brain, there is over-attention to the left side of space (termed pseudo-neglect). For example, there is a general tendency for everyone to bisect lines more to the left of center (Bowers & Heilman, 1980). This phenomenon may explain why actors enter from stage right when they do not wish their entrance to be noticed (Dean, 1946). It may also explain why pictures are more likely to be given titles referring to objects on the left, and why the left side of pictures feels nearer than the right side of the same picture when flipped (Nelson & MacDonald, 1971). The light in paintings is more likely to come from the left side and people are faster at judging the direction of illumination when the source of light appears to come from the left (Sun & Perona, 1998). Moreover, we are less likely to bump into objects on the left than the right (Nicholls et al., 2007). Thus, there is a general leftwards attentional bias in us all.

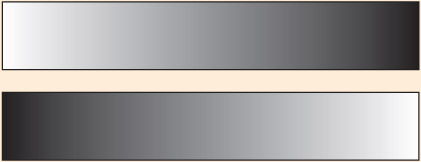

Which bar appears darker: the one on the top or the bottom? Most people perceive the bottom bar as being darker because of an attentional bias to the left caused by a right-hemisphere dominance for space/attention, even though the two images are identical mirror images.

Key Terms

Hemispatial neglect

A failure to attend to stimuli on the opposite side of space to a brain lesion.

Pseudo-neglect

Over-attention to the left side of space in a non-lesioned brain.

Parietal lobe lesions can also result in non-spatial deficits of attention. Husain et al. (1997) found that neglect patients had an unusually long “blind” period in the attentional blink task in which stimuli were presented centrally. Again, this can be construed in terms of both hemispheres making a contribution to the normal detection of salient stimuli (in this task, the second target in a rapidly changing stream). When one hemisphere is damaged then the attentional resource is depleted.

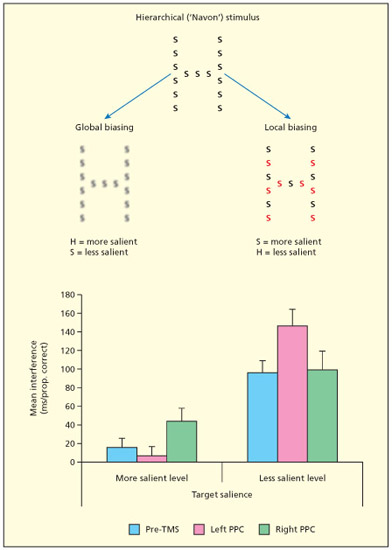

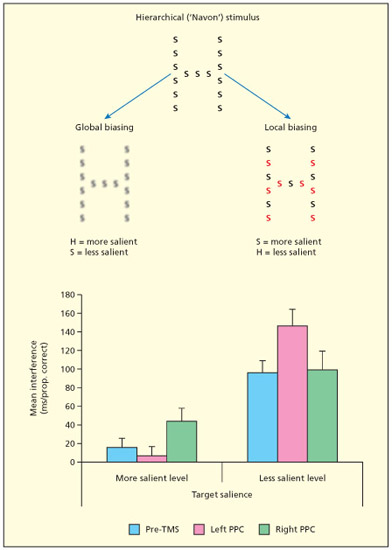

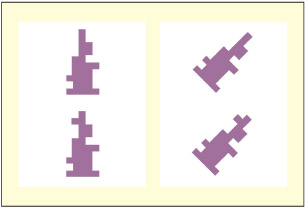

Having alternating colors makes local elements salient, but blurring the local elements makes the global shape more salient. TMS over the right posterior parietal cortex disrupts the ability to detect the more salient element (e.g. the local S in the right example, and the global “H” in the left example). TMS over the left posterior parietal cortex disrupts the ability to detect the less salient element (e.g. the global “H” in the right example, and the local S in the left example).

Spatial Attention Across the Senses—Ventriloquist and Rubber Hand Illusions

In ventriloquism, there is a conflict between the actual source of a sound (the ventriloquist him or herself) and the apparent source of the sound (the dummy). In this instance, the sound appears to come from the dummy because the dummy has associated lip movements whereas the lip movements of the ventriloquist are suppressed. In other words, the spatial location of the visual cue “captures” the location of the sound.

Why is it that sound tends to be captured by the visual stimulus but not vice versa? One explanation is that the ability to locate things in space is more accurate with vision than audition, so when there is a mismatch between them, the brain may default to the visual location. Witkin et al. (1952) found that sound localization was impaired in the presence of a visual cue in a conflicting location. Driver and Spence (1994) found that people are able to repeat back a speech stream (or “shadow”) more accurately when lip movements and the loudspeaker are on the same side of space than when they are on opposite sides.

In the brain, there are multi-sensory regions such as in the superior temporal sulcus and intraparietal sulcus that respond selectively to sound and vision when both occur at the same time or in the same location (Calvert, 2001). For instance, the superior temporal sulcus shows greater activity to synchronous audio-visual speech than asynchronous speech (Macaluso et al., 2004). However, when there is a spatial mismatch between the auditory and visual locations of synchronous speech then the right inferior parietal lobe is activated (Macaluso et al., 2004). This audio-visual spatial mismatch is found in the ventriloquist illusion and may involve the shifting or suppression of the heard location or, conversely, “grabbing” of spatial attention by the visual modality.

More bizarrely, there is an analogue of the ventriloquist effect in the tactile modality. Botvinick and Cohen (1998) placed each participant’s hand out of sight behind a screen and placed a rubber hand on the visible side of the screen. Watching the rubber hand stroked with a paintbrush while their own (unseen) hand was stroked with a paintbrush could induce a kind of “out of body” experience. Participants report curious sensations such as, “I felt as if the rubber hands were my hands.” In this instance, there is a conflict between the seen location of the (rubber) hand and felt bodily location of the real hand: the conflict is resolved by visual capture of the tactile sensation.

Mevorach and colleagues have proposed that the left and right parietal lobes have different roles in non-spatial attention: specifically the right hemisphere is considered important for attending to a salient stimulus, and the left hemisphere is important for suppressing a non-salient stimulus or “ignoring the elephant in the room” (Mevorach et al., 2010; Mevorach et al., 2006). Their non-spatial manipulation of saliency involved making certain elements of the display easier to perceive. For instance, a figure comprised of an “H” made up of small S’s can be altered so that either the “H” is more salient (blurring the S’s) or the S is more salient (using alternating colors). fMRI shows that the left intraparietal sulcus is involved when the task is to focus on non-salient features (and ignore salient ones)—such as finding the local S’s in a blurred global “H” (Mevorach et al., 2006). Disruption of this region using TMS (but not the right hemisphere region) interferes with the ability to do this task and disrupts the connectivity between this region and those in the occipital lobe that are presumably engaged in shape processing. By contrast, the right intraparietal cortex responds more when the task is to identify the salient features (and ignore the non-salient ones) and TMS to this region disrupts that task (Mevorach et al., 2006). What is presently unclear is how these sorts of non-spatial selection mechanisms relate to the spatially specific deficits seen in neglect. One possibility is that neglect comprises a variety of attention-related deficits, some that are spatially specific (the defining symptoms of neglect) and some that are not. Whatever the relationship to neglect, there is an emerging consensus that attention itself can be fractionated into different kinds of mechanisms (Riddoch et al., 2010).

The relationship between attention, perception, and awareness

The terms “attention,” “perception,” and “awareness” are all common in everyday usage although most of us would be pushed to give a good definition of them in either lay or scientific terms. To begin with, a simple working definition may suffice (although more detailed nuances will be introduced later). Attention is a mechanism for the selection of information. Awareness is an outcome (a conscious state) that is, in many theories, linked to that mechanism. Perception is the information that is selected from and, ultimately, forms the content of awareness. Needless to say it is possible to be aware of, and attend to, things that are not related to perception (e.g. one’s own thoughts and feelings) and it is assumed that broadly similar mechanisms operate here with, possibly, an additional prefrontal gating mechanism that switches the focus of attention between the external environment and inner thoughts (Burgess et al., 2007).

Considering first the relationship between perception and attention, a number of studies have explored what happens in brain regions responsible for perception (e.g. in the visual ventral stream) when a stimulus is attended versus unattended. In general, when an object (e.g. a face) or a perceptual feature (e.g. motion) is attended then there is increased activity, measured with fMRI, in brain regions that are involved in perceiving those stimuli relative to when they are unattended (Kanwisher & Wojciulik, 2000). This, however, also depends on how difficult the attention-demanding task is. For instance, Rees et al. (1997) instructed participants to attend to words (in a language-based task) and ignore visual motion in the periphery. The activity in the motion-sensitive area V5/MT was reduced when the language-based task was difficult compared with when it was easy. This is compatible with the notion of attention being a limited resource, with less of the resource available for (bottom-up) processing of irrelevant perceptual information when (top-down) task demands are high.

There is evidence that attention can affect activity in visual cortex (including in V1) even in the absence of a visual stimulus (Kastner et al., 1999). This increase appears to be related to attended locations rather than attended features such as color or motion (McMains et al., 2007). It is also related to attended modalities. Increases in BOLD activity are found in visual, auditory or tactile cortices when a stimulus is expected in that modality, together with decreases in the non-predicted modalities (Langner et al., 2011). This may reflect increased neural activity prior to the presentation of the stimulus. In monkeys it has been shown that neurons increase their spontaneous firing rate for an attended location even in the absence of a visual stimulus (Luck et al., 1997). In these examples in which no perceptual stimulation is present, it is clear that attention can sometimes operate in the absence of awareness of perceptual stimuli (Koch & Tsuchiya, 2007).

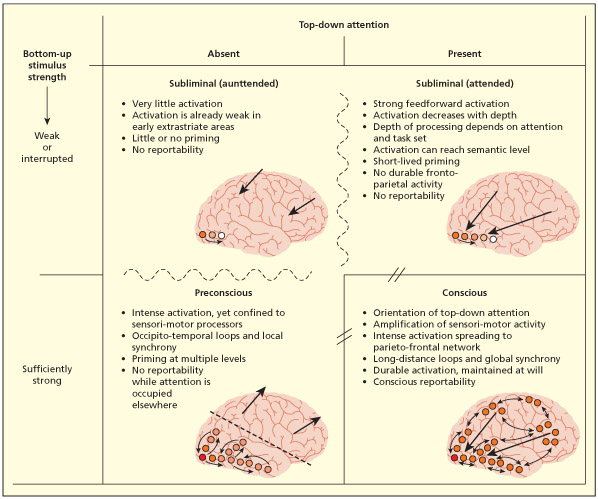

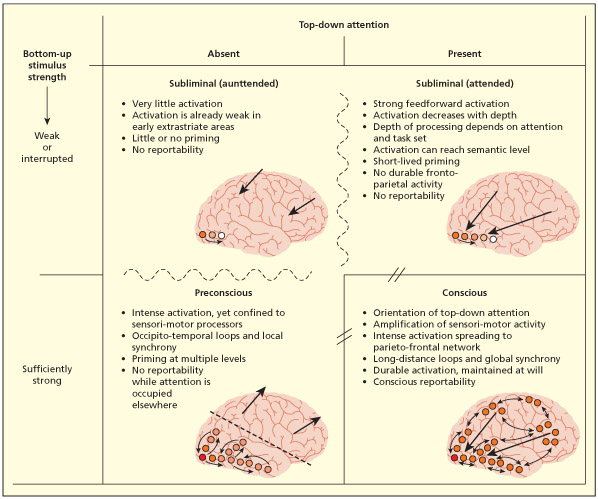

In the model of Dehaene et al. (2006), awareness is linked to top-down attention to a sufficiently strong sensory stimulus. This is associated with activity spreading to a frontal-parietal network. In contrast, non-aware conditions (e.g. attending to a very weak sensory signal, inattention to a strong sensory signal) are linked to varying levels of activity in sensory cortex alone.

The discussion above has centered on the links between perception and attention. What of the links between perception and awareness? Awareness, as typically defined, depends on participants’ ability to report on the presence of a stimulus. This can be studied by comparing stimuli that are perceived consciously versus those perceived unconsciously (e.g. presented too briefly to be reported). In such studies, conducted with fMRI, a typical pattern of brain activity has two main features: firstly, there is greater activity in regions involved in perception (e.g. ventral visual stream) when participants are aware of a stimulus than when they are unaware and, secondly, there is a spread of activity to distant brain regions (notably the frontal-parietal network) in the aware state (see Dehaene et al., 2006). It is this broadcasting of information that is often assumed to enable participants to report on, or act on, the perceived information. Moreover, the regions in this frontal-parietal network are those typically implicated in studies of attention, suggesting (to some researchers) that attention is the mechanism that gives rise to awareness of perception (Posner, 2012; Rees & Lavie, 2001). To give a concrete example, in the attentional blink paradigm activity in frontal and parietal regions discriminates between awareness versus unawareness of the second target (Kranczioch et al., 2005). It has been suggested that, in these circumstances, attentional selection generates an all-or-nothing outcome (something that is consciously seen or unseen) from information that is essentially continuous in nature, i.e. perceived to some degree (Vul et al., 2009).

Key Terms

Phenomenal consciousness

The “raw” feeling of a sensation, the content of awareness.

Access consciousness

The ability to report on the content of awareness.

This standard view of perception, attention and awareness is not universally accepted. One alternative viewpoint suggests that perceptual awareness can be sub-divided into two mechanisms: one relating to the experience of perceiving itself and one related to the reportability of that experience (Block, 2005; Lamme, 2010). These are often referred to as phenomenal consciousness and access consciousness respectively. In these models, the reportability of an experience is linked to the frontal-parietal network, but the actual experience of perceiving is assumed to lie within interactions in the perceptual processing network itself. For instance, there is evidence that the visibility of unattended stimuli is related solely to activity in the occipital lobe (Tse et al., 2005). In this view, attention is still related to awareness, but only to some aspects of awareness (i.e. its reportability).

Evaluation

This section has taken core ideas relating to the concept of attention (e.g. filtering irrelevant information, the spotlight metaphor, links to eye movements) and explained how these may be implemented in the brain. The parietal lobes have a key role because they interface between regions involved in executive control (top-down aspects of attention) and regions involved in perceptual processing (bottom-up aspects of attention). One of the emerging trends in the literature on attention, which has been driven largely by neuroscience evidence, is to consider attention in terms of separable but interacting component processes (e.g. orienting attention, disengaging attention, and so on). This is not surprising given that most other cognitive faculties (e.g. vision, memory) are now thought of in this way. However, it would be fair to say that there is less consensus over what the constituent components are (if any) in the attention domain. The next main section considers several specific models of attention that conform to the general principles discussed thus far.

This section considers in more detail three influential theories in the attention literature: the Feature Integration Theory proposed by Treisman and colleagues; Biased Competition Theory proposed by Desimone, Duncan, and colleagues; and the Premotor Theory of Rizzolatti and colleagues.

Feature integration theory

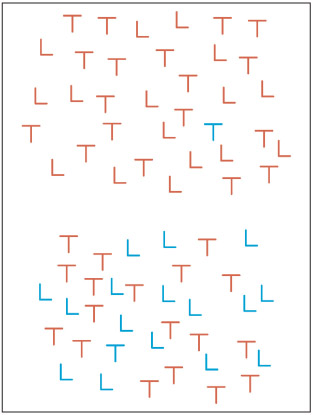

Feature integration theory (FIT) is a model of how attention selects perceptual objects and binds the different features of those objects (e.g. color and shape) into a reportable experience. Most of the evidence for it (and against it) has come from the visual search paradigm. Look at the two arrays of letters in the next figure. Your task is to try to find the blue “T” as quickly as possible.

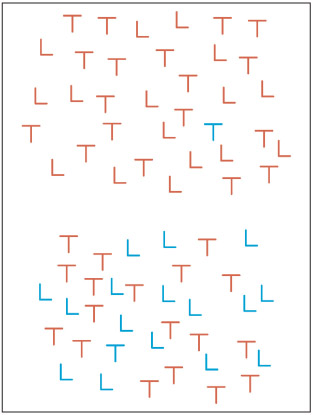

Try to find the blue “T” as quickly as possible. Why is one condition harder than the other? Feature-Integration Theory assumes that basic features are coded in parallel but that focused attention requires serial search. When the letter differs from others by a single feature, such as color, then it can be identified quickly by the initial stage of feature detection. When the letter differs from others by two or more features, then attention is needed to serially search.

Was the letter relatively easy to find in the first array, but hard to find in the second array? In the second case, did you feel as if you were searching each location in turn until you found it? In the first array, the target object (the blue “T”) differs in color from every distractor object in the array (red Ts and red Ls). The object can therefore be found from a simple inspection of the perceptual mechanism that supports color detection.

According to FIT, perceptual features such as color and shape are coded in parallel and prior to attention (Treisman, 1988; Treisman & Gelade, 1980). If an object does not share features with other objects in the array it appears to pop-out. In the second array, the distractors are made up of the same features that define the object. Thus, the object cannot be detected by inspecting the color module alone (because some distractors are blue) or by inspecting the shape module alone (because some distractors are T-shaped). To detect the target one needs to bring together information about several features (i.e. a conjunction of color and shape). Feature-Integration Theory assumes that this occurs by allocating spatial attention to the location of candidate objects. If the object turns out not to be the target, then the “spotlight” inspects the next candidate and so on in a serial fashion.

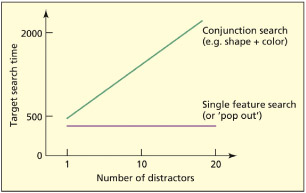

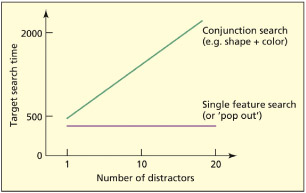

Typical data from a visual search experiment such as the one conducted by Treisman and Gelade (1980) is presented on p. 150. The dependent measure is the time taken to find the target (some arrays do not contain the target, but these data are not presented here). The variables manipulated were the number of distractors in the array and the type of distractor. When the target can only be found from a conjunction of features, there is a linearly increasing relationship between the number of distractors and time taken to complete the search. This is consistent with the notion that each candidate object must be serially inspected in turn. When a target can be found from only a single feature, it makes very little difference how many distractors are present, because it “pops out.” If attention is not properly deployed, then individual features may incorrectly combine. These are referred to as illusory conjunctions. For example, if displays of colored letters are presented briefly so that serial search with focal attention cannot take place, then participants may incorrectly say that they had seen a red “H” when in fact they had been presented with a blue “H” and a red “E” (Treisman & Schmidt, 1982). This supports the conclusion arising from FIT that attention needs to be deployed to combine features of the same object correctly.
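The contrast between the two search functions can be illustrated with a small simulation. This is only a sketch of the logic of FIT, not a model fitted to the Treisman and Gelade (1980) data: the function names and the timing parameters (`base_ms`, `per_item_ms`) are hypothetical values chosen for illustration, and the array size is counted as the target plus its distractors.

```python
import random


def search_time(set_size, conjunction, base_ms=400.0, per_item_ms=30.0, rng=None):
    """Simulated time (ms) to find a target that is present in the array.

    base_ms and per_item_ms are illustrative assumptions, not measured values.
    """
    if rng is None:
        rng = random.Random()
    if not conjunction:
        # Single-feature target: found by parallel feature detection ("pop-out"),
        # so the number of items in the array does not affect the time.
        return base_ms
    # Conjunction target: candidates are inspected one at a time in a random
    # order, and the search stops as soon as the target is reached.
    items_inspected = rng.randint(1, set_size)
    return base_ms + per_item_ms * items_inspected


def mean_search_time(set_size, conjunction, trials=2000, seed=0):
    """Average simulated search time over many target-present trials."""
    rng = random.Random(seed)
    total = sum(search_time(set_size, conjunction, rng=rng) for _ in range(trials))
    return total / trials


if __name__ == "__main__":
    for n in (5, 15, 30):
        print(n, mean_search_time(n, conjunction=False), mean_search_time(n, conjunction=True))
```

Under these assumptions the single-feature condition stays flat as the array grows, whereas the conjunction condition rises roughly linearly, because on average about half of the items are inspected before the target is found.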

Key Terms

Pop-out

The ability to detect an object among distractor objects in situations in which the number of distractors presented is unimportant.

Illusory conjunctions

A situation in which visual features of two different objects are incorrectly perceived as being associated with a single object.

According to FIT, when a target is defined by a conjunction of features, search becomes slower when there are more items, because the items are searched serially. When a target is defined by a single feature it may “pop out”; that is, the time taken to find it is not determined by the number of items in the array.

TMS applied over the parietal lobe slows conjunction searches but not single-feature searches (Ashbridge et al., 1997, 1999) and a functional imaging study has demonstrated parietal involvement in conjunction, but not single-feature searches (Corbetta et al., 1995). Patients with parietal lesions often show a high level of illusory conjunction errors with brief presentation (Friedman-Hill et al., 1995).

One difficulty with FIT is that there is no a priori way to define what constitutes a “feature.” Features tend to be defined in a post hoc manner according to whether they elicit pop-out. For instance, it is generally assumed that the features consist of lines (e.g. vertical line, horizontal line) rather than letters (i.e. clusters of lines). However, some evidence does not support this assumption. For example, searching for an “L” among Ts is hard if the “T” is rotated at 180 or 270 degrees, and easier if the “T” is rotated at 0 or 90 degrees (Duncan & Humphreys, 1989). This occurs even though the basic features (horizontal and vertical lines) are equally present in them all. Duncan and Humphreys (1989) suggest that most of the data that FIT attempts to explain can also be explained in terms of how easy it is to perceptually group objects together rather than in terms of parallel feature perception followed by serial attention. They found that it is not just the similarity between the target and distractor that is important, but also the similarity between different types of distractor. This implies that there is some feature binding prior to attention and this contradicts a basic assumption of FIT.

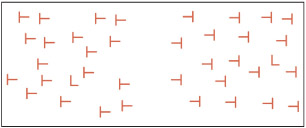

Searching for an “L” among Ts is hard if the “T” is rotated 180 degrees or 270 degrees (left), but easier if the “T” is rotated 0 degrees or 90 degrees (right). This suggests that features in visual search consist of more than oriented lines, or that some form of feature integration takes place without attention.

Another issue is whether simple feature searches (e.g. a single blue letter among red letters) really occur without attention as assumed by FIT. An alternative position is that all visual search requires attention even in the case of pop-out stimuli. Wolfe (2003) argues that pop-out is not preattentive but is simply a stimulus driven (exogenous) cue of attention.

Key Terms

Early selection

A theory of attention in which information is selected according to perceptual attributes.

Late selection

A theory of attention in which all incoming information is processed up to the level of meaning (semantics) before being selected for further processing.

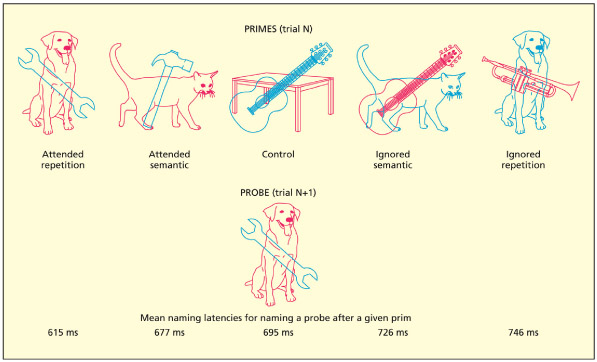

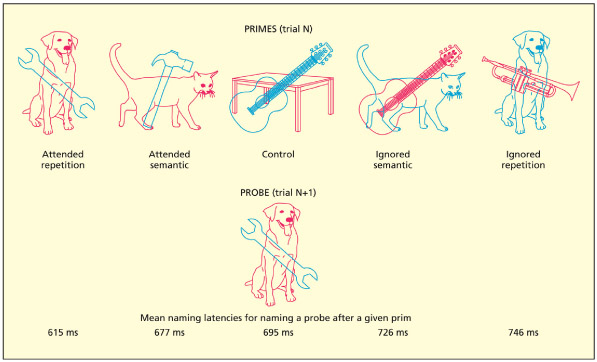

Finally, FIT is an example of what has been termed an early selection model of attention. Recall that the main reason for having attentional mechanisms is to select some information for further processing, at the expense of other information. According to early selection theories, information is selected according to perceptual attributes (e.g. color or pitch). This can be contrasted with late selection theories that assume that all incoming information is processed up to the level of meaning (semantics) before being selected for further processing. One of the most frequently cited examples of late selection is the negative priming effect (Tipper, 1985). In this figure, participants must name the red object and ignore the blue one. If the ignored object on trial N suddenly becomes the attended object on trial N+1, then participants are slower at naming it (called negative priming). The effect can also be found if the critical object is from the same semantic category. This suggests that the ignored object was, in fact, processed meaningfully rather than being excluded purely on the basis of its color as would be expected by early selection theories such as FIT.

In this example, participants must name the red object and ignore the blue one. If an ignored object becomes an attended object on the subsequent trial, then there is cost of processing, which is termed negative priming.

How can the evidence both for and against FIT be reconciled? The selection of objects for further processing may sometimes be early (i.e. based on perceptual features) and sometimes late (i.e. based on meaning), depending on the demands of the task. Lavie (1995) has shown that, when there is a high perceptual load (e.g. the large arrays typically used for visual search), then selection may be early, but in conditions of low load in which few objects are present (as in the negative priming task), then there is a capacity for all objects to be processed meaningfully consistent with late selection. Other findings have suggested that the process of feature binding may also operate at several levels (Humphreys et al., 2000), with some forms of binding occurring prior to attention. This could account for the distractor similarity effects of Duncan and Humphreys (1989).

Biased competition theory

Key Term

Negative priming

If an ignored object suddenly becomes the attended object, then participants are slower at processing it.

The biased competition theory of Desimone and Duncan (1995) draws more heavily from neuroscience than from cognitive psychology. It explicitly rejects a spotlight metaphor of attention (inherent, for instance, in Feature Integration Theory). Instead “attention is an emergent property of many neural mechanisms working to resolve competition for visual processing and control of behavior.” By “emergent property,” Desimone and Duncan imply that attention isn’t a dedicated module, but rather a broad set of mechanisms for reducing many inputs to limited outcomes and there is no clear division between attentive and preattentive stages. The Biased Competition Theory has been extended and updated by others in light of more recent evidence (Beck & Kastner, 2009; Knudsen, 2007).

One assumption of the model is that competition occurs at multiple stages rather than at some fixed bottleneck—i.e. neither early nor late selection but something more dynamic. This competition occurs first within the visual ventral stream itself in terms of the processing of visual features (colors, motion, etc.) and objects. For instance, single cell electrophysiology suggests that when two stimuli are presented in a single receptive field (e.g. two color patches presented in the receptive field of a neuron in V4) then the responsiveness of the neuron is less than the sum of its responsiveness to each stimulus in isolation (Luck et al., 1997). This is one way in which “competition” may be realized at the neural level.

In humans, the BOLD response in areas of the visual ventral stream to multiple stimuli presented together is less than the sum of its parts as determined by a control condition involving sequential presentation. This also depends on how spatially close together the different stimuli are (Kastner et al., 2001). Brain regions containing neurons with small receptive fields (e.g. V1) are only disrupted by competitors that are close by, but regions that have larger receptive fields (e.g. V4) are also disrupted by more distant competitors. As well as spatial proximity, the degree of competition depends on perceptual similarity of multiple stimuli within the field (Beck & Kastner, 2009). This may be the neural basis of early grouping effects and also pop-out. Certain perceptual representations may also tend to dominate in the competitive process by virtue of being familiar (e.g. spotting your partner in a crowd), or by virtue of being recently seen, and so on. Again, this does not require a special mechanism as such: it just requires that there is bias in the way these stimuli are represented that facilitates their selection (e.g. neurons fire more when expected or frequently encountered). Selection may also be biased by top-down signals. When a receptive field contains an experimentally defined target and an irrelevant distractor then the neural response resembles that to the target alone suggesting some filtering out of the distractor (Moran & Desimone, 1985).
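One simple way to picture these findings is to treat the response to a pair of stimuli as a weighted average of the responses to each stimulus alone, with attention shifting the weights toward the target. The sketch below is an assumption made for illustration, not the model proposed by Desimone and Duncan (1995); the function name, the `bias_a` parameter and the firing rates in the usage example are hypothetical.

```python
def pair_response(r_a, r_b, bias_a=0.5):
    """Response of one neuron to stimuli A and B shown together in its receptive field.

    r_a, r_b: responses (e.g. spikes per second) to A alone and to B alone.
    bias_a:   weight given to A in the competition (0.5 means no bias,
              1.0 means A wins the competition completely).
    The weighted-average rule is an illustrative assumption.
    """
    if not 0.0 <= bias_a <= 1.0:
        raise ValueError("bias_a must lie between 0 and 1")
    return bias_a * r_a + (1.0 - bias_a) * r_b


if __name__ == "__main__":
    alone_a, alone_b = 40.0, 10.0
    # No bias: the paired response falls below the sum of the two responses alone.
    print(pair_response(alone_a, alone_b))
    # Top-down bias toward A: the paired response approaches the response to A alone.
    print(pair_response(alone_a, alone_b, bias_a=0.9))
```

With positive responses, any weighted average is smaller than the sum of the two individual responses, which mirrors the sub-additive responses described above. Raising `bias_a` toward 1 mimics the filtering out of the distractor when a target is attended.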

Another key assumption of this theory is that attention is not deployed serially, but rather perceptual competition occurs in parallel. Serial processing, by contrast, is assumed to arise from competition at the response level rather than perceptual processing (e.g. from the fact that it is only possible to fixate one location at a time). (This idea is linked closely to the Premotor Theory of Attention discussed in the next section). Neurons recorded in monkey V4 during visual search tasks are activated in parallel (i.e. irrespective of whether it is being currently attended/fixated) whenever a target feature (e.g. color) falls in the receptive field (Bichot et al., 2005). This occurs for both simple feature (i.e. pop-out) and conjunction searches. However, there is also an enhanced response when the target is selected for a saccade suggesting serial processing linked to motor responses. Whereas Feature-Integration Theory assumes either parallel or serial search (depending on the nature of the targets), the Biased Competition Theory suggests both kinds of mechanisms act together.

The Biased Competition Model also accounts for spatial and non-spatial attention within the same model. The difference between spatial and non-spatial attention was originally assumed to be due to different anatomical origins of the biasing signals rather than reflecting different mechanisms per se. The posterior parietal cortex was thought to be the origin of spatial biases (e.g. effects of arrows in orienting attention) and prefrontal cortex was thought to code task-related biases (e.g. find the blue X). However, other research has suggested that the same frontal and parietal regions support both spatial and non-spatial search cues (Egner et al., 2008).

Key Terms

Extinction

In the context of attention, it refers to unawareness of a stimulus in the presence of competing stimuli.

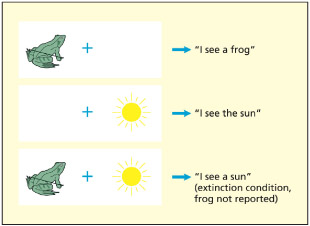

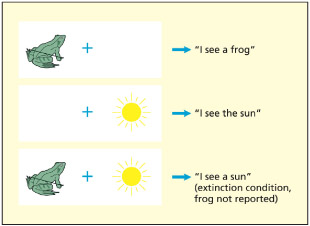

Damage to the parietal lobe not only produces neglect but may also lead to a curious symptom called extinction (Riddoch et al., 2010). When a single stimulus is presented briefly to either the left or the right of fixation, patients with parietal lesions tend to report it accurately. But when presented with two stimuli at the same time, the patient may report seeing the object on the right but not the object on the left (in the case of right parietal damage). Thus the patient has not lost the ability to see the left side of space, nor have they lost the ability to attend to the left side of space per se, but they have lost the ability to attend to (and be aware of) the left side of space when there is a competitor on the right.

Similar effects are found for the Posner-cueing task as a result of parietal lobe lesions (Posner & Petersen, 1990). Patients with right parietal lobe lesions are able to initially orient attention to either the left or right side of space as a result of a prestimulus flash of light on either the left or right. However, while they are able to shift attention from a cue on the left (their neglected side) to a target on the right (their “good” side), they are impaired in the reverse scenario (shifting from the “good” to the neglected side). While this could be explained by damage to a special attention mechanism relating to disengaging attention (Posner & Petersen, 1990), it could also be explained by biased competition: it is easy to orient to the “bad” side of space when competition is low (i.e. to the initial cue), but harder to orient to the “bad” side following a salient visual stimulus on the “good” side.

Neglect patients may fail to notice the stimulus on the left when two stimuli are briefly shown (called extinction), but notice it when it is shown in isolation. It suggests that attention depends on competition between stimuli.

The premotor theory of attention

The premotor theory of attention assumes that the orienting of attention is nothing more than preparation of motor actions (Rizzolatti et al., 1987, 1994). As such, it is primarily a theory of spatial attention. The theory encompasses both overt orienting, in which actual movement occurs, and covert orienting. The latter is assumed to reflect movement that is planned but not executed.

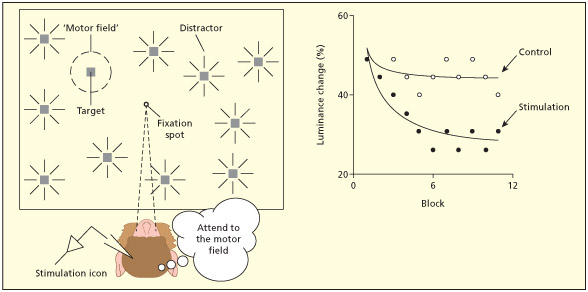

The initial evidence for the theory came from a spatial cueing task (Rizzolatti et al., 1987). The set-up used four spatial locations arranged left to right (1, 2, 3 and 4) and a centrally fixated square. Within the central square, a digit would appear that would indicate where a target was likely to appear (with 80 percent certainty). A flash would then appear in one of the four boxes and the participant simply had to press a button as soon as it was detected. Participants were slower when the cue was misleading, forcing them to shift attention. However, they found that costs in response times were not only related to whether attention had to shift per se, but also whether attention had to reverse in direction. So a shift of attention from position 2 (left) to position 1 (far left) had a small cost whereas a shift from position 2 (left) to a rightward location (position 3) had a larger cost (and similarly a shift from 3 to 4 was less costly than from 3 to 2). The same results were also found for vertical alignments of the four positions so the findings do not relate to processing differences across hemispheres. The basic finding is hard to reconcile with a simple “spotlight” account, because the spotlight is moved the same distance in both scenarios. They suggest instead that the pattern reflects the programming of eye movements (but not their execution as overt movements were not allowed). Specifically, a leftwards eye movement can be made to go further leftwards with minimal additional processing effort, but to change a leftwards movement to a rightwards movement requires a different motor program to be set up and the original one discarded.
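The logic of this explanation can be written down as a toy cost rule. The sketch below is not taken from Rizzolatti et al. (1987): the function name and the millisecond values are hypothetical, and fixation is assumed to sit midway between positions 2 and 3. It only encodes the idea that any shift away from the cued location carries a cost, and that an extra cost is added when the planned movement has to be reprogrammed in the opposite direction.

```python
def shift_cost_ms(cued, target, fixation=2.5, shift_ms=20.0, reprogram_ms=30.0):
    """Illustrative response-time cost (ms) when the target is not at the cued position.

    Positions are numbered 1 to 4 from left to right, with fixation at 2.5.
    shift_ms and reprogram_ms are assumed values, not experimental estimates.
    """
    if target == cued:
        # Valid cue: attention is already at the target, so there is no cost.
        return 0.0
    # The planned (unexecuted) eye movement runs from fixation toward the cue.
    # If the target lies on the same side of fixation, that plan only needs
    # adjusting; otherwise it must be discarded and a new one set up.
    same_direction = (cued - fixation) * (target - fixation) > 0
    if same_direction:
        return shift_ms
    return shift_ms + reprogram_ms


if __name__ == "__main__":
    print(shift_cost_ms(cued=2, target=1))  # same direction: smaller cost
    print(shift_cost_ms(cued=2, target=3))  # reversal: larger cost
```

In this sketch positions 1 and 3 are equally far from the cued position 2, yet the cost differs because only the shift to position 3 requires a change of direction. A pure distance-based spotlight rule would assign the two shifts the same cost.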

In the study of Rizzolatti et al. (1987), a centrally presented digit indicates where a target stimulus is likely to appear (in this case, position 2), but it may sometimes appear in an unattended location (as shown here, in positions 1 or 3). Although positions 1 and 3 are equidistant from the expected location, participants are faster at shifting attention to position 1 than position 3. Why might this be?

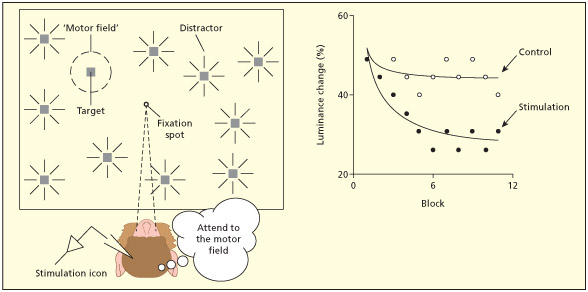

In Moore and Fallah (2001) the task of the animal was to press a lever when one of the stimuli “blinked” (a small change in luminance). They were better at doing the task when a part of the brain involved in generating eye movements was stimulated than in a no-stimulation control condition (even though no eye movements occurred), provided the light stimulus fell in the appropriate receptive field. This is consistent with the idea that attending to a region of space is like a virtual movement of the eyes.

The term “premotor” refers to the claim that attention is a preparatory motor act and is not referring to the premotor cortical region of the brain (discussed in Chapter 8). The theory does, however, make strong neuroanatomical predictions: namely that the neural substrates of attention should be the same as the neural substrates for motor preparation (particularly eye movements). As discussed previously, there is evidence to support this view from single-cell recordings (Bisley & Goldberg, 2010) and human fMRI (Nobre et al., 2000). There is also intriguing evidence from brain stimulation studies. Electrical stimulation of neurons in the frontal eye fields (FEF) of monkeys can elicit reliable eye movements to particular locations in space. Moore and Fallah (2001) identified such neurons and then stimulated them at a lower intensity such that no eye movements occurred (the animal continued to fixate centrally). However, the animals did show enhanced perceptual discrimination of a stimulus presented in the location where an eye movement would have occurred. This suggests that attention was deployed there and is consistent with the idea that covert orienting of attention is a non-executed movement plan.

Key Terms

Balint’s syndrome

A severe difficulty in spatial processing normally following bilateral lesions of parietal lobe; symptoms include simultanagnosia, optic ataxia, and optic apraxia.

Simultanagnosia

Inability to perceive more than one object at a time.

The premotor theory of attention has not been without criticism. Smith and Schenk (2012) argue that it fails as a general theory of attention and may only be valid in certain situations (e.g. exogenous orienting of attention to, say, flashes of light). For instance, patients with chronic lesions of the FEF have a saccadic deficit but no deficit of endogenous attention in covert orienting tasks involving, say, arrow cues (Smith et al., 2004).

Seeing One Object at a Time: Simultanagnosia and Balint’s Syndrome

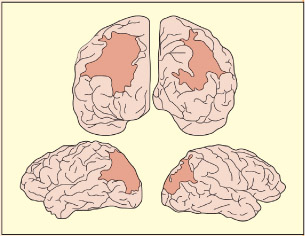

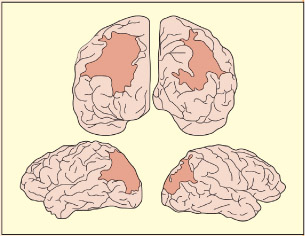

RM has extensive damage to both the left and right parietal lobes and severe difficulties in perceiving spatial relationships (top diagrams are viewed from the back of the brain; bottom diagrams are viewed from the side). RM was unable to locate objects verbally, or by reaching or pointing (Robertson et al., 1997). In contrast, his basic visual abilities were normal (normal 20/15 visual acuity, normal color vision, contrast sensitivity, etc.). He was impaired at locating sounds, too.

The idea that one could perceive an object but not its location is highly counterintuitive, because it falls outside of the realm of our own experiences. However, there is no reason why the functioning of the brain should conform to our intuitions. Patients with Balint’s syndrome (Balint, 1909, translated 1995) typically have damage to both the left and the right parietal lobes and have severe spatial disturbances. Patients with Balint’s syndrome may notice only one object at a time: this is termed simultanagnosia. For example, the patient may notice a window, then, all of a sudden, the window disappears and a necklace is seen, although it is unclear who is wearing it. In terms of the two visual streams idea, it is as if there is no “there” there (Robertson, 2004). Within the Biased Competition Theory it could be regarded as an extreme form of perceptual competition due to a limited spatial selection capacity. Within Feature Integration Theory, it can be construed as an inability to bind features to locations and, hence, to each other. Recall that if a blue “H” and a red “E” are presented very quickly to normal participants, then illusory conjunction errors may be reported (e.g. red “H”). Balint’s patients show these errors even when they are free to view objects for as long as they like (Friedman-Hill et al., 1995). In addition to simultanagnosia, patients typically have problems in using vision to guide hand actions (optic ataxia; considered in Chapter 8) and fail to make appropriate eye movements (optic apraxia).

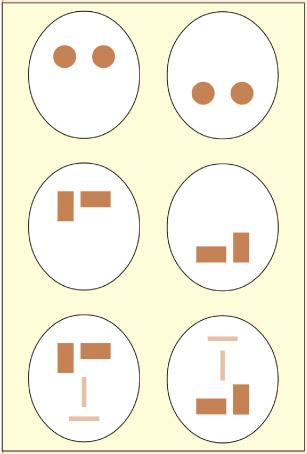

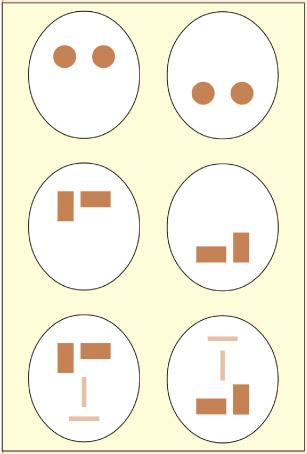

Under what circumstances is a face perceived as a whole or as a collection of parts? Patient GK can identify the location of the ovals when he is told that they are the eyes of a face, but not if he thinks of them just as circles inside an oval. The former judgment may use his intact ventral route for identifying faces/objects, whereas the latter may use the impaired dorsal route for appreciating the location of the circles relative to one another. GK was also better at making location judgments about the rectangles when other face-like features were added.

To say that Balint’s patients can recognize single objects leads to some potential ambiguities. Consider a face. Is a face a single object or a collection of several objects (eyes, nose, mouth, etc.) arranged in a particular spatial configuration? A number of factors appear to determine whether parts are grouped together or not. Humphreys et al. (2000) showed that, in their Balint’s patient, GK, parts are likely to be grouped into wholes if they share shape, if they share color, or if they are connected together. This suggests that some early feature binding is possible prior to attention. Another factor that determines grouping of parts into wholes is the familiarity of the stimulus and how a given stimulus is interpreted (so-called top-down influences). Shalev & Humphreys (2002) presented GK with the ambiguous stimuli on the left. When asked whether the two circles were at the top or bottom of the oval he was at chance (55 percent). He performed the task well (91 percent) when asked whether the eyes were at the top or bottom of the face.

Evaluation

Although there are many theories of attention, three prominent ones have been considered here. Feature Integration Theory and the Premotor Theory are necessarily limited in scope in that they are specifically theories of spatial attention, whereas the Biased Competition Theory has the advantage of offering a more general account. Feature Integration Theory has been successful in explaining much human behavioral data in visual search. Biased Competition Theory offers a more neuroscientific account of these data in which competition arises at multiple levels (e.g. perceptual crowding, and response competition) and attention is synonymous with the selection function of this overall system. The Premotor Theory offers an interesting explanation as to how attention can be considered as a combination of both “where” (spatial) and “how” (motor) functions of the dorsal stream.

Key Terms

Line bisection

A task involving judging the central point of a line.

Cancellation task

A variant of the visual search paradigm in which the patient must search for targets in an array, normally striking them through as they are found.

Patients with neglect (also called hemispatial neglect, visuospatial neglect or visual neglect) fail to attend to stimuli on the opposite side of space to their lesion— normally a right-sided lesion resulting in inattention to the left side of space.

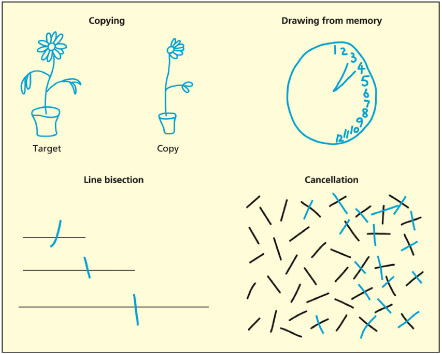

Characteristics of neglect

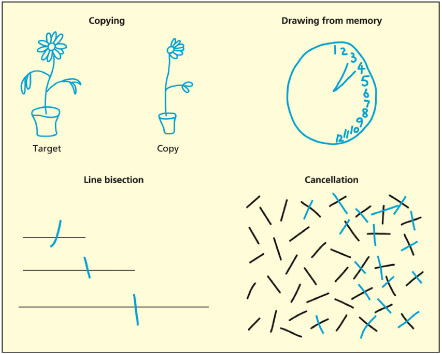

Different ways of assessing neglect include copying, drawing from memory, finding the center of a line (line bisection), and crossing out targets in an array (cancellation).

There are a number of common ways of testing for neglect. Patients may omit features from the left side when drawing or copying. In tests of line bisection, patients tend to misplace the center of the line toward the right (because they underestimate the extent of the left side). The bias in bisection is proportional to the length of the line (Marshall & Halligan, 1990). Cancellation tasks are a variant of the visual search paradigms already discussed, in which the patients must search for targets in an array (normally striking them through as they are found). They will typically not find the ones on the left. Some of these tasks may be passed by some neglect patients but failed by others, the reasons for which have only recently started to become clear (Halligan & Marshall, 1992; Wojciulik et al., 2001). In extreme cases, neglect patients may shave only half of their face or eat half of their food on the plate.
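The claim that the bisection bias is proportional to line length can be made concrete with a short calculation. This is a sketch only: the function names and the `bias_fraction` value are hypothetical and are not estimates from Marshall and Halligan (1990).

```python
def bisection_mark(line_length_mm, bias_fraction=0.1):
    """Position of the patient's mark, measured in mm from the left end of the line.

    bias_fraction is an assumed constant: the rightward displacement expressed
    as a fraction of the line length.
    """
    true_center = line_length_mm / 2.0
    return true_center + bias_fraction * line_length_mm


def rightward_error(line_length_mm, bias_fraction=0.1):
    """Signed distance (mm) between the patient's mark and the true center."""
    return bisection_mark(line_length_mm, bias_fraction) - line_length_mm / 2.0


if __name__ == "__main__":
    for length in (50, 100, 200):
        print(length, rightward_error(length))
```

Under this assumption, doubling the length of the line doubles the rightward error, which is what a proportional bias means in practice.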

Neglect is associated with lesions to the right inferior parietal lobe. This photo shows the region of highest overlap of the lesions of 14 patients.

Mort et al. (2003) examined the brain regions critical for producing neglect in 35 patients and concluded that the critical region was the right angular gyrus of the inferior parietal lobe, including the right temporoparietal junction (TPJ). Functional imaging studies of healthy participants performing line bisection also point to an involvement of this area in that particular task (Fink et al., 2000), as do the results from a TMS study (Fierro et al., 2000). While there is good consensus over the role of this region in neglect, it is not the only region that is implicated. For instance, Corbetta and Shulman (2011) argue that the right posterior parietal cortex, containing salience maps, may tend to be functionally deactivated (due to its connectivity with the damaged right TPJ) despite not being structurally damaged. Others have argued that neglect itself can be fractionated into different kinds of spatial processes with differing neural substrates, as considered later.

Neglect and the relationship between attention, perception, and awareness

It is important to stress that neglect is not a disorder of low-level visual perception. A number of lines of evidence support this conclusion. Functional imaging reveals that objects in the neglected visual field still activate visual regions in the occipital cortex (Rees et al., 2000). Stimuli presented in the neglected field can often be detected if attention is first cued to that side of space (Riddoch & Humphreys, 1983). This also argues against a low-level perceptual deficit. The situations in which neglect patients often fare worse are those requiring voluntary orienting to the neglected side and those situations in which there are several stimuli competing for attention. Although the primary deficit in neglect is related to attention, not perception, it does lead to deficits in awareness of the perceptual world.

Neglect is not just restricted to vision, but can apply to other senses as well. This is consistent with evidence presented earlier that the parietal lobes have multi-sensory characteristics. Pavani et al. (2002) have shown that neglect patients show a right-skewed bias in identifying the location of a sound (but note that they are not “deaf” to sounds on the left). Extinction can also cross sensory modalities. A tactile (or visual) sensation on the left will not be reported if accompanied by a visual (or tactile) stimulus on the right, but will be reported when presented in isolation (Mattingley et al., 1997).

Patients with neglect can be shown to process information in the neglected field to at least the level of object recognition. The ventral “what” route seems able to process information “silently” without entering awareness, whereas the dorsal “where” route to the parietal lobe is important for creating conscious experiences of the world around us. Vuilleumier et al. (2002b) presented brief pictures of objects in left, right, or both fields. When two pictures were presented simultaneously, the patients extinguished the one on the left and reported only the one on the right. When later shown the neglected stimuli, they claimed not to remember seeing them (a test of explicit memory). However, when later asked to identify a degraded picture of the object, their performance was facilitated, which suggests that the extinguished object was processed unconsciously. Other lines of evidence support this view. Marshall and Halligan (1988) presented a neglect patient with two depictions of a house that were identical on the non-neglected (right) side, but differed on the left side such that one of the two houses had flames coming from a left window. Although the patient claimed not to be able to perceive the difference between them, when forced to choose, the patient stated a preference for living in the house without the flames! This, again, points to the fact that the neglected information is implicitly coded to a level that supports meaningful judgments being made.

How Is A “Lack Of Awareness” In Neglect Different From Lack Of Awareness In Blindsight?

| Neglect | Blindsight |
| --- | --- |
| Lack of awareness is not restricted to vision and may be found for other sensory modalities | Lack of awareness is restricted to the visual modality |
| Whole objects may be processed implicitly | Implicit knowledge is restricted to basic visual discriminations (direction of motion; but see Marcel, 1998) |
| Lack of awareness can often be overcome by directing attention to neglected region | Lack of awareness not overcome by directing attention to “blind” region |
| Neglected patients often fail to voluntarily move their eyes into neglected region | Blindsight patients do move their eyes into “blind” region |
| Neglected region is egocentric | Blind region is retinocentric |

Different types of neglect and different types of space

Space, as far as the brain goes, is not a single continuous entity. A more helpful analogy is to think of the brain creating (and perhaps storing) different kinds of “maps.” Cognitive neuroscientists refer to different spatial reference frames to capture this notion. Each reference frame (“map”) may have its own center point (origin) and set of coordinates. Similarly there may be ways of linking one map to another—so-called remapping. It has already been described how neurons may remap the spatial position of sounds from a head-centered reference frame to an eye-centered reference frame (so-called retinocentric space). This enables sounds to trigger eye movements. The same can happen for other combinations: for instance, visual receptive fields may be remapped so that they are centered on the position of the hands rather than the position of the eyes (facilitating hand-eye coordination during manual actions). The parietal lobes can perform remapping because they receive postural information about the body as well as sensory information relating to sound, vision and touch (Pouget & Driver, 2000).
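The remapping of a sound from a head-centered to an eye-centered reference frame can be illustrated with a deliberately simplified sketch. It assumes a single horizontal dimension measured in degrees and a purely subtractive transformation; the function name and the example values are hypothetical and are not a model of how parietal neurons actually implement the computation.

```python
def head_to_eye_centered(azimuth_head_deg, eye_position_deg):
    """Remap a horizontal location from head-centered to eye-centered coordinates.

    azimuth_head_deg: location relative to the head midline (positive = right).
    eye_position_deg: direction of gaze relative to the head midline (positive = right).
    Simplifying assumption: the two frames differ only by the current eye position.
    """
    return azimuth_head_deg - eye_position_deg


# A sound 20 degrees to the right of the head midline, heard while the eyes
# are looking 15 degrees to the right, lies 5 degrees to the right of fixation.
print(head_to_eye_centered(20.0, 15.0))  # 5.0
```

The same head-centered location yields a different eye-centered location whenever the eyes move, which is why postural information (here, eye position) is needed to link one map to another.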

Key Terms

Egocentric space

A map of space coded relative to the position of the body.

Allocentric space

A map of space coding the locations of objects and places relative to each other.

The main clinical features of neglect tend to relate to egocentric space (reference frames centered on the body midline) and it is these kinds of spatial attentional disorders that are linked to brain damage to the right temporoparietal region (Hillis et al., 2005). However, neglect is also linked to other kinds of spatial reference frames, as outlined below. Although this could be conceptualized as losing particular kinds of spatial representations, another way of thinking about it is in terms of attention deficits created by disrupting competition at different levels of processing.

Perceptual versus representational neglect

Bisiach and Luzzatti (1978) established that neglect can occur for spatial mental images and not just for spatial representations derived directly from perception. Patients were asked to imagine standing in and facing a particular location in a town square that was familiar to them (the Piazza del Duomo, in Milan). They were then asked to describe the buildings that they saw in their “mind’s eye.” The patients often failed to mention buildings in the square to the left of the Duomo. Was this because of loss of spatial knowledge of the square or a failure to attend to it? To establish this, the patients were then asked to imagine themselves at the opposite end of the square, facing in, and describe the buildings. In this condition, the buildings that were on the left (and neglected) are now on the right and are reported, whereas the buildings that were on the right (and reported previously) are now on the left and get neglected. Thus, spatial knowledge of the square is not lost but is unavailable for report. Subsequent research has established that this so-called representational neglect forms a double dissociation with neglect of perceptual space (Bartolomeo, 2002; Denis et al., 2002). The brain appears to contain different spatial reference frames for mental imagery and for egocentric perceptual space. The hippocampus is often considered to store an allocentric map of space (the spatial relationship of different landmarks to each other, rather than relative to the observer), but the parietal lobes may be required for imagining it from a given viewpoint (Burgess, 2002).

The Piazza del Duomo in Milan featured in a classic neuropsychological study. When asked to imagine the square from one viewpoint, patients with neglect failed to report buildings on the left. When asked to imagine the square from the opposing viewpoint they still failed to report buildings on the left, even though these had been correctly reported on the previous occasion. It suggests a deficit in spatial attention rather than memory.

Near versus far space

Double dissociations exist between neglect of near space (Halligan & Marshall, 1991) and neglect of far space (Vuilleumier et al., 1998). This can be assessed by line bisection using a laser pen and stimuli in either near or far space, even equating for visual angles. Near space appears to be defined as “within reach,” but it can get stretched! Berti and Frassinetti (2000) report a patient with a neglect deficit in near space but not far space. When a long stick was used instead of a laser pointer, the “near” deficit was extended. This suggests that tools quite literally become fused with the body in terms of the way that the brain represents the space around us. This is consistent with single-cell recordings from animals suggesting that visual receptive fields for the arm get spatially stretched when the animal has been trained to use a rake tool (Iriki et al., 1996).
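To see what equating for visual angle involves, the standard geometric relationship between physical size, viewing distance, and visual angle can be worked through in a short sketch. The line length and the two viewing distances below are hypothetical values chosen for illustration; they are not taken from the studies cited.

```python
import math


def visual_angle_deg(size_cm, distance_cm):
    """Visual angle subtended by a line of a given length viewed head-on."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_for_angle_cm(angle_deg, distance_cm):
    """Line length needed to subtend a given visual angle at a given distance."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


# Hypothetical example: a 20 cm line within reach (50 cm away).
near_angle = visual_angle_deg(20.0, 50.0)
print(round(near_angle, 1))  # 22.6

# Length of a line beyond reach (200 cm away) that subtends the same angle.
far_length = size_for_angle_cm(near_angle, 200.0)
print(round(far_length, 1))  # 80.0
```

With the retinal image matched in this way, any difference in bisection performance between the two distances cannot be attributed to the size of the stimulus on the retina.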

Personal and peripersonal space

Patients might show neglect of their bodily space. This might manifest itself as a failure to groom the left of the body or failure to notice the position of the left limbs (Cocchini et al., 2001). This can be contrasted with patients who show neglect of the space outside their body, as shown in visual search type tasks, but not the body itself (Guariglia & Antonucci, 1992).

The orientation of the body and the orientation of the world can have independent effects on neglect, suggesting that these are also coded separately. Calvanio et al. (1987) displayed words in four quadrants of a computer screen for the patients to identify. When seated upright, patients showed left neglect. However, when lying down on their side (i.e. 90 degrees to upright), the situation was more complex. Performance was determined both relative to the left–right dimension of the room and the left–right dimension of the body.

The patient makes omission errors on the left side of objects irrespective of the object’s position in space.

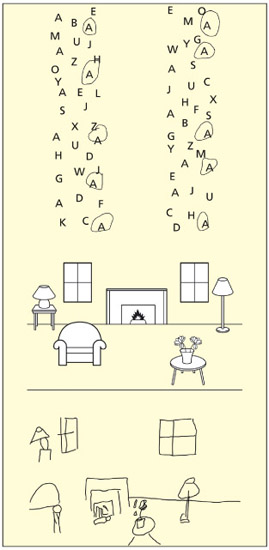

Within objects versus between objects (or object-based versus space-based)

Look at the accompanying figures (from Robertson, 2004). Note how the patient has attempted to draw all of the objects in the room (including those on the left) but has distorted or omitted the left parts of the objects. Similarly, the patient has failed to find the As on the left side of the two columns of letters even though the right side of the left column is further leftwards than the left side of the right column. This patient would probably be classed as having object-based neglect.
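The contrast between the two patterns of omission in the letter columns can be made concrete with a toy sketch. It assumes an idealized, all-or-none deficit and a hypothetical layout (positions in centimeters from the body midline, with each column treated as one object); real patients show graded and more variable performance.

```python
# Hypothetical layout: target letters in two columns. Each entry is
# (horizontal position in cm relative to the body midline, column name).
targets = [(-8, "left"), (-6, "left"), (-4, "left"), (-2, "left"),
           (2, "right"), (4, "right"), (6, "right"), (8, "right")]

# Horizontal center of each column (the "object" midline).
column_centers = {"left": -5, "right": 5}


def missed_space_based(targets, midline=0):
    """Targets omitted if everything left of the body midline is neglected."""
    return [x for x, _ in targets if x < midline]


def missed_object_based(targets, centers):
    """Targets omitted if the left half of each column is neglected."""
    return [x for x, column in targets if x < centers[column]]


print(missed_space_based(targets))                   # [-8, -6, -4, -2]
print(missed_object_based(targets, column_centers))  # [-8, -6, 2, 4]
```

In the object-based pattern, targets at -4 and -2 are found while targets at 2 and 4 are missed, even though the former lie further to the left in space. This is the signature described for the patient above.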

Are these objects the same or different? The critical difference lies on the left side of the object but, in the slanted condition, on the right side of space.

The object in question may be more dynamically defined according to the current spatial reference frame being attended. Driver and Halligan (1991) devised a task that pitted object-based coordinates against environmentally based ones. The task was to judge whether two meaningless objects were the same or not. On some occasions, the critical difference was on the left side of the object but on the right side of space, and the patient did indeed fail to spot such differences.

Written words are an interesting class of object, because they have an inherent left to right order of letters. Patients with left object-based neglect may make letter substitution errors in reading words and nonwords (e.g. reading “home” as “come”), whereas patients with space-based (or between object) neglect may read individual words correctly but fail to read whole words on the left of a page. In one unusual case, NG, the patient made neglect errors in reading words that were printed normally but also made identical errors when the words were printed vertically, printed in mirror image (so that the neglected part of the word was on the opposite side of space) and even when the letters were dictated aloud, one by one (Caramazza & Hillis, 1990a). This strongly suggests that it is the internal object frame that is neglected.

Neglect within objects is linked to brain damage in different regions than that associated with neglect of egocentric space; in particular, it seems to be linked to ventral stream lesions including to the white matter (Chechlacz et al., 2012). This raises the interesting possibility that this form of neglect represents a disconnection between object-based perceptual representations and more general mechanisms of attention.

Evaluation

Although the cardinal symptom of neglect is a lack of awareness of perceptual stimuli, neglect is best characterized as a disorder of attention rather than perception. This is because it tends to be multi-sensory in nature, the deficit is more pronounced when demands on attention are high (e.g. voluntary orienting, presence of competing stimuli), and there is evidence that neglected stimuli are perceived (albeit unconsciously and perhaps in less detail). However, neglect is a heterogeneous disorder and this may reflect the different ways in which space is represented in the brain. Basic attention processes (involving competition and selection) may operate across different spatial reference frames, giving rise to the different characteristics of neglect.