1

History of Quality

Giovanna Culot

1.1. What is Quality all About?

We have posed the same question many times when starting a new class on quality management: how can quality be defined? The answers – be they from undergraduates, master's students, or executives – tend to converge on a production-oriented understanding, with "conformity" and "specification" being the words that come up most frequently. Every now and then, someone tries out a different answer, bringing up ideas such as "product performance," "customer satisfaction," or even the "way you do your daily activities."

Conventional wisdom places quality in the field of operations management and related engineering disciplines. In reality, there is no single truth behind the concept. Certainly, many methods and techniques have been developed in connection with the challenge of manufacturing products without defects. It is also true, however, that quality is an overarching concept with applications both in the business environment and in everyday life.

The term "quality" is commonly used to mean a degree of excellence in a given product or activity, and most people would agree with that. Philosophers have argued that a more precise definition of quality is not possible: building on discussions initiated by Socrates, Plato, Aristotle, and other thinkers in Ancient Greece, quality is understood as a universal value that we learn to recognize only through the experience of being exposed to a succession of objects characterized by it (Buchanan, 1948; Pirsig, 1974).

In a business setting, such a general understanding is, however, not sufficient. Where does quality end and non-quality begin? Are there different degrees of quality? If so, is it possible to measure quality? These are only some of the questions that have been raised in academia and in industry, and the answers have been very different (Reeves & Bednar, 1994).

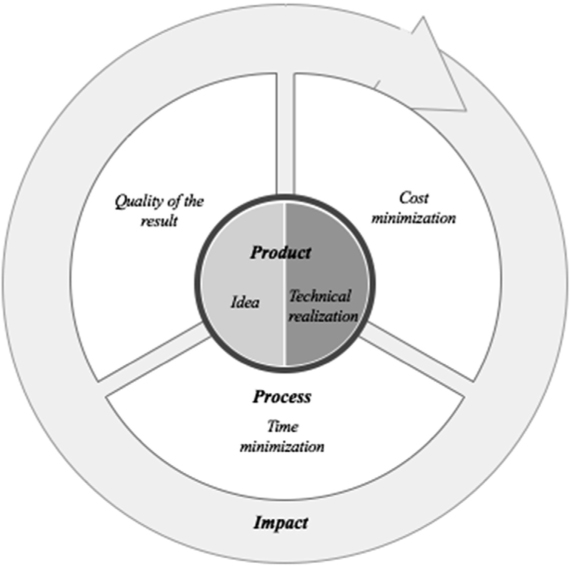

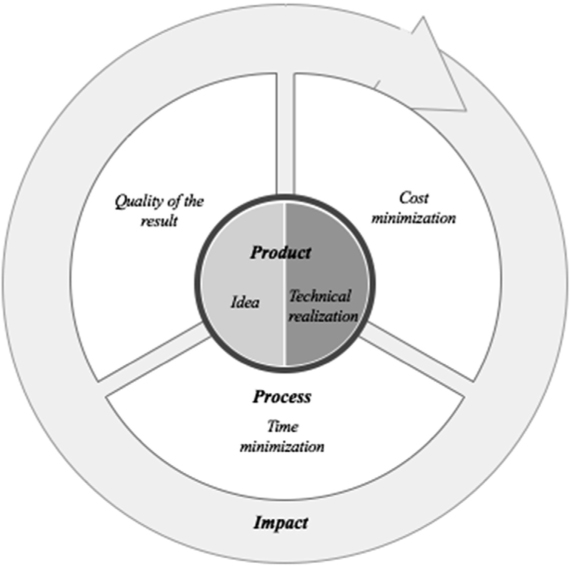

To begin with, quality might refer either to a product (or service) or to the process that generates it. In terms of product quality, there are multiple possible dimensions or elements (Garvin, 1984). Some of these elements refer to the quality of the technical realization of the product and are usually objective and measurable, such as conformance. Other elements are less objective, as customer preferences regarding them may vary: they can be measurable, such as product performance or durability, or purely qualitative, as in the case of aesthetics. This last set of elements can be understood as the degree to which the product (or its initial prototype) fits customer expectations, or the quality of the idea. A similar line of reasoning also applies to services.

In operations management, most attention has historically been placed on the quality of technical realization, described as the conformance of a product to a design or specification (Crosby, 1979; Gilmore, 1974), and on the cost of attaining it relative to the price the customer is willing to pay (Feigenbaum & Vallin, 1961). Conversely, economists and marketers have mainly focused on the quality of the idea, seen as a precondition for customer satisfaction. In this respect, the main questions have revolved around the characteristics or attributes a product should have (Abbot, 1955; Leffler, 1982), and their appraisal given different individual tastes or preferences (Kuehn & Day, 1962; Maynes, 1976; Brown & Dacin, 1997).

Several efforts have been made over the years to combine these two perspectives into all-encompassing conceptualizations and practical approaches. However, even nowadays, it is not unusual to see companies where this dialectic evolves into acrimonious meetings: designers, marketing, and sales executives on one side, production managers on the other. In fact, the relevance of the quality of the idea and of the quality of technical realization (and the understanding of their underlying elements) varies significantly within each company, depending on the responsibilities and goals of each function (e.g., product development, production, and sales), the stage in the product life-cycle, and, if present, the positioning of the different product lines. The definition of quality also varies significantly across companies, even in the same industry, based on their value proposition. There are many examples of this. In the piano industry, for instance, Steinway & Sons has built its reputation as quality leader on the uniqueness of the sound and style of each handcrafted product. Yamaha, on the other hand, has also developed a strong reputation for quality by emphasizing totally different dimensions, more related to reliability and conformance (Garvin, 1984).

The definition of quality should not, however, be limited to the final product. Here too, different dimensions of process quality have been investigated over the years. On the one hand, the question has been how to ensure the final quality of the result (Reeves & Bednar, 1994). On the other hand, a more comprehensive view has suggested that quality relates not only to process effectiveness (i.e., producing quality products) but also to process efficiency (i.e., cost and time minimization). Process quality has been investigated by several disciplines, including but not limited to operations management (e.g., Anderson et al., 1995; Flynn et al., 1994; Saraph et al., 1989) and organizational behavior (Ivancevich, Matteson, & Konopaske, 1990).

Finally, in recent years, quality has also been related to sustainability and to the effects (or, in economic jargon, externalities) of a company's decisions and activities on a broad set of stakeholders, including society, the environment, and future generations. This dimension is referred to here as quality of impact.

Overall, quality is a multi-faceted concept whose definition is complex and fundamentally context-dependent (Reeves & Bednar, 1994). Fig. 1 illustrates how the different meanings of quality explained above unfold into concentric circles. At the center is product quality in its two dimensions: quality of the idea (prototype or design) and quality of the technical realization (conformance). Around it lies process quality, in terms of effectiveness (quality of the result) and efficiency (time/cost minimization), which should be considered at both firm and supply-chain level. Finally, the outer circle embraces all previous dimensions of quality and represents the impact of the company and its products.

Today, we tend to have a comprehensive understanding of quality. Even though functional (in companies) and disciplinary (in academia) differences in focus and priorities remain, we are aware that a one-dimensional view of the topic is not sufficient. As this historical review will show, however, this has not been the case for most of modern history.

Fig. 1: The Different Meanings of Quality.

1.2. Approaching Quality in History

The history of quality extends over millennia, spanning different geographies and socio-political systems. Throughout the evolution of civilization, many of the methods and tools that form the foundations of the current approach to quality have been developed, such as quality warranties, standardization, interchangeability, inspections, and consumer protection laws. Already in ancient times, trained craftsmen not only provided clothing and tools, including equipment for armed forces, but also built roads, bridges, temples, and other masterpieces of design and construction, some of which endure to this day. Managing for quality is by no means a product of the modern Western world (Juran, 1995).

Industrialization was, however, a real game-changer: unlike in the past, a more fragmented division of labor and the use of machines made it virtually impossible to attribute responsibility for the quality of a product to a specific individual (Weckenmann, Akkasoglu, & Werner, 2015). New solutions had to be found, and this is where, experts believe, quality management as a discipline was born. This historical review also starts from here.

Since then, much progress has been made, and quality management practices have become mainstream. Many prominent thinkers have punctuated this evolution; however, a review of their publications alone would not give a realistic view of how quality has developed over the years. It is not about "inventions." It is rather about how and when these inventions were adopted in response to the challenges faced by companies, policy-makers, and individuals at any given time. On this basis, the following sections present the history of quality taking into account the changing context of international competition, customer expectations, and technical opportunities. Since the various approaches to quality were implemented in different timeframes according to country- or industry-specific situations, the five phases described below do not represent a linear evolution, but rather a chronological progression in which contrasting approaches, often in response to different challenges, may have coexisted.

1.3. Quality at the Time of the Industrial Revolution(s): Quality Inspection

In the age of craft production, there was a comprehensive understanding of the meaning of quality. Artisans, and merchants for their part, had strong ties to their local communities and often personal knowledge of their customers. Laws enforced the fulfillment of basic societal demands, whereas, at least since the Middle Ages, guilds promoted standards to ensure product quality, punishing any deviation as fraud. Honor and personal accountability complemented the typical approach of craftsmen to quality. As the precondition for profitable trade is to meet customers' expectations, and to do so efficiently in terms of time and cost, they also acknowledged that quality needed to be pursued at all levels, from product design to realization and delivery. Both aspects of product quality (the idea and its technical realization) were important, even though the latter was not strictly understood as conformance: details would make a product unique.

This picture changed dramatically with the advent of mechanization. First, between the end of the eighteenth and the beginning of the nineteenth century, steam power and the development of machine tools affected a first cluster of industries, mostly raw materials and semi-finished products, including textiles and iron making. One century later, in what is commonly known as the Second Industrial Revolution or Mass Production, electrification was introduced to a broader range of industries. New technological opportunities gave rise to a series of managerial innovations, first and foremost the implementation of the moving assembly line by Henry Ford in 1913 and the conceptualization of scientific management by Frederick W. Taylor (1911).

On the demand side, a new class of industrial workers was born. After the many drawbacks in terms of working conditions and living standards brought about by the First Industrial Revolution, the paradigm changed significantly after the 1910s, as workers were now ensured wages high enough to make them potential customers (De Grazia, 2005). In order to serve this growing customer base better than the competition, companies needed to provide goods fast, in volume, and at a low price.

In this scenario, the meaning of quality was entirely related to the product's technical realization, now decoupled from the quality of the idea. In an effort to reduce costs, and thus the price to the customer, companies pursued low product variety: customer needs were hardly considered, and product properties were defined by the will of the organizations (Weckenmann et al., 2015). The words of Henry Ford are famous in this respect: "Any customer can have a car painted any color that he wants so long as it is black."

The game was thus all played in production and related operations. Here, while the division of labor was not a new concept, mechanization and especially the moving assembly line had led to an increasing specialization of the workers, now focused on single repetitive tasks. Workers in such settings failed to see the contribution of their activities to the quality of the final product, and it was no longer possible for managers to trace individual accountability.

In order to ensure the quality of products and avoid complaints from customers, companies started performing quality inspection (QI) activities. Again, QI is not a new concept, being part of every kind of organized production, from Ancient China's handicraft industry and medieval guilds to acceptance inspections of raw materials and semi-finished products at the time of the First Industrial Revolution (Nassimbeni, Sartor, & Orzes, 2014). However, as production volumes grew to unprecedented levels, traditional QI could not keep pace with increasing labor productivity. Defective products were often delivered to customers and replaced with new ones only after complaints. A new, systematic approach to QI was needed in order to reduce cost and complexity. Many companies thus established a separate inspection department: following Taylor's (1911) recommendations, designated employees checked the quality of the products at the end of the production process in order to verify their compliance with specifications. If a defect was observed, the defective component was replaced. This was possible thanks to the interchangeability of parts, a practice piloted in Europe since the first years of the nineteenth century that became mainstream in the era of mass production: large volumes of individual parts were manufactured and tested within tolerance, ready to be assembled (and re-assembled).

Although QI still plays an important role in current quality practices, its stand-alone application led to several issues. Since the goal was to prevent defective products from leaving the factory, not to decrease the defect rate in producing them, there was usually no feedback loop to production managers or workers about failures, and no analysis was carried out of their root causes. Moreover, the repair or replacement of defective components at the end of the process resulted in high waste rates and significant losses of efficiency, as many production tasks had to be first done and then redone. In many cases, "hidden factories" operated to correct the output of the visible factory (Womack, Jones, & Ross, 1991).

As competition was becoming fiercer, a change of pace was needed to ensure that the quality of the final product was met at a reasonable cost.

1.4. From Inspection to Control: Quality After World War II

The years following World War II were marked by an unprecedented speed of economic recovery, combined with an impressive strength and scale of international cooperation. This particularly high and sustained growth was experienced in several regions, including the United States, Western Europe, and East Asia, so much so that expressions like "boom," "miracle," and "Golden Age" are often used to describe this period. Both demand and productivity were steadily increasing. In the United States, demographic growth and the rise of the middle class, coupled with easier access to consumer credit, gave rise to the phenomenon of "mass consumption" (Ciment, 2007). As a consequence of the Marshall Plan, US companies had secured access to European markets, where demand was also growing. Productivity benefited from the commercial adoption of a backlog of technological innovations developed between the two World Wars, including some automation technologies, and from overall infrastructural development.

In this context, the competitive levers were related to how cheap and how fast products could reach yet unserved consumers. Quality was still perceived as a residual dimension in the product, mainly related to its technical realization.

The hitherto dominant approach of QI presented several drawbacks in terms of effectiveness (problems were addressed only after they occurred) and cost (products were repaired at the end of the process, generating scrap and loss of efficiency). These drawbacks were also clear to Walter Shewhart, who in the 1920s, fresh from a doctorate in physics, joined Western Electric to help its engineers improve the conformance rate of the hardware produced for Bell Telephone. His solution, statistical process control (SPC), was to consider not only final product conformance, but also how variations in the production process affected the quality of the result. Building on mathematical and statistical theories, he concluded that (Shewhart, 1931):

The object of industry is to set up economic ways of satisfying human wants and in so doing to reduce everything possible to routines requiring a minimum amount of human effort. Through the use of the scientific method, extended to take account of modern statistical concepts, it has been found possible to set up limits within which the results of routine efforts must lie if they are to be economical. Deviations in the results of a routine process outside such limits indicate that the routine has broken down and will no longer be economical until the cause of trouble is removed.

In practice, the approach involved the use of control charts to detect any variation in the production process affecting product conformance. Samples were taken at various stages of the process, and the quality variable of interest was plotted on a chart. The pattern of the data points was analyzed with respect to the in-control mean of the parameter, using control limits set three standard deviations above and below it, with the aim of isolating variations assignable to a specific cause, as opposed to those that are naturally part of a process. Compliance of all products was statistically inferred from the sample.
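The three-sigma logic described above can be illustrated with a minimal sketch. It assumes a set of baseline measurements taken while the process is known to be in control, and, as a simplification, estimates the standard deviation directly from those measurements rather than with the range-based estimators used in practical Shewhart charts; it also omits the additional pattern ("run") rules often applied. The function names and the shaft-diameter data are hypothetical, chosen only for illustration.

```python
from statistics import mean, stdev


def control_limits(baseline):
    """Return (LCL, center line, UCL) from in-control baseline data.

    Simplification (assumption): sigma is the sample standard deviation of
    the baseline values, not the subgroup-range estimate of a classic chart.
    """
    center = mean(baseline)
    sigma = stdev(baseline)
    return center - 3 * sigma, center, center + 3 * sigma


def out_of_control(samples, lcl, ucl):
    """Return (index, value) for every sample falling outside the limits."""
    return [(i, x) for i, x in enumerate(samples) if x < lcl or x > ucl]


# Hypothetical shaft diameters (mm) from a process assumed to be in control.
baseline = [10.02, 9.98, 10.01, 9.99, 10.00, 10.03, 9.97, 10.01, 9.99, 10.00]
lcl, center, ucl = control_limits(baseline)

# New samples taken during production; 10.12 simulates an assignable cause.
new_samples = [10.01, 9.99, 10.12, 10.00]
for i, x in out_of_control(new_samples, lcl, ucl):
    print(f"sample {i}: {x} outside [{lcl:.3f}, {ucl:.3f}]")
```

With these illustrative numbers only the third new sample falls outside the limits, signaling a variation that, in Shewhart's terms, should be traced to a specific cause rather than attributed to the natural variability of the process.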

At the outbreak of World War II, SPC was adopted by the United States for military equipment manufacturing, but it spread to civilian industry only in the late 1940s. From then on, a variety of methodologies were developed under the umbrella of quality control (QC), including the Plan-Do-Check-Act cycle, a four-step management method made popular by W. Edwards Deming following his collaboration with Shewhart (Deming, 2000), and the application of the Pareto principle (also known as the 80/20 rule) in a business context, as suggested by Joseph M. Juran. At the same time, statistical design of experiments was introduced in industry to facilitate the efficient identification and adjustment of input parameters.

Even though QC represented a major step change with respect to QI in terms of cost and effectiveness (problems could be detected before the final product was made), it still had significant limitations due to its reactive and technically driven nature (a problem first had to be detected, and only then were corrective actions developed). Some American quality experts, first and foremost Deming and Juran, had already started working on possible solutions in the early 1950s. However, in a climate of overall economic prosperity, the business community considered QC sufficient to deliver the "just enough" level of quality required to serve a growing consumer base.

1.5. The 1960s: Different Markets and Different Approaches

Overall, the rapid economic expansion of the early post-war years largely reflected a process of “catch-up growth,” that is, reconstruction, industry reconversion, and war-time technology deployment (Eichengreen, 2006). Once these early opportunities were exhausted, new avenues in terms of innovation and efficiency needed to be pursued.

In the 1960s, in the United States, the quest for growth soon became an obsession because of rising international tension due to the Cold War (Ciment, 2007). However, quality was not seen as a means to fuel this growth. As far as the domestic market was concerned, for many consumer products, for example in the rapidly developing high-tech electronics sector, demand still exceeded supply. Companies operated under limited competitive pressure, as pre-existing corporations were merging into larger, more influential conglomerates. The situation was similar in Western European countries, as they followed the American productivity lead by emulating its technology and business organization. No major improvement in quality was needed to win consumers' preference.

In this timeframe, the main innovations in quality in the West came from the defense sector, through the mainstream adoption of wartime practices, in particular those related to quality assurance (QA). The impact was felt mainly in business-to-administration and business-to-business relations, with only a limited initial impact on the business-to-consumer segment.

The first QA experiments were made during World War II in the United States, where the Navy surveyed its most reliable manufacturers to compile a best-practice list, and in Britain, where the Ministry of Defense decided to implement a set of standards for the management of procedures and to inspect its suppliers for adherence. In both cases, the underlying assumption was that technical analyses were not sufficient to prevent defects: organizational practices also needed to be called into question. Standards and procedures were identified as the solution for a preventive approach to quality. Government procurement requirements were established first in defense, then in aerospace, energy, and telephony; this in turn contributed to the spread of QA along the respective supply chains. By the end of the 1960s, most large US corporations had a QA function in place (Weckenmann et al., 2015).

In practice, QA involved two steps: first, management standards ensuring product and process outcomes (i.e., a quality management system) were defined; second, inspections (i.e., audits) were performed. Initially, audits were carried out by company experts within the organization (first-party audits). Later, during the 1970s, faced with increasing product complexity, QA was extended to suppliers (external QA) in order to establish basic requirements to be used as the basis for contractual agreements.

As a side effect of external QA, innovative methodologies for preventive analysis developed in a military context also spread to the private sector, as in the case of Failure Mode and Effects Analysis, Fault Tree Analysis, and Event Tree Analysis.

QA complemented QC and QI practices in an overall approach that was more cost-effective. However, besides the time and resources absorbed by the auditing process, the meaning of quality was still limited to a product's technical realization; thus, the process was tackled only insofar as it contributed to ensuring the final quality result. This was not an issue in the regulated markets where QA originated, nor in subcontracting, as technical specifications were defined upfront by the client. Nor was it an issue in the growing, essentially domestic consumer market of the 1960s.

In the 1970s, the situation changed dramatically. In an economic context severely hit by the oil crisis and the collapse of the Bretton Woods system, US companies were rapidly losing market share to Japanese competitors, first in motor vehicles, later also in household appliances and electronics. Japanese products were more reliable, cheaper, and better performing than those manufactured by US-based companies, and were thus preferred by American consumers. The different path to quality taken by Japan since the end of the 1950s, total quality control (TQC), was paying off.

At the outbreak of World War II, most of Japan's capital, best managers, engineers, and materials were employed to sustain the country's imperial ambitions. Only residual resources were left for consumer exports, building the reputation of Japan as a low-quality, low-cost producer (Juran, 1993). After the end of the conflict, with industrial production having dropped to a little over 10% of pre-war levels, there was increasing awareness among the Japanese political and business leadership that a step change was needed in order to regain a meaningful position on the international chessboard. The United States also supported an early stabilization of the Japanese economy, seen as a precondition for preventing future remilitarization. As part of this effort, one of the leading American quality experts of the time, Deming, was invited to Japan in 1950 to lecture on QC. While he was in the country, he was also contacted by the newly established Japanese Union of Scientists and Engineers (JUSE) to speak directly to local business leaders. Four years later, the JUSE contacted Juran for the same purpose. Both thinkers discussed not only the state of the art of QC as it stood in the 1950s but also, starting from the limitations they acknowledged in the approach to quality at the time, their innovative ideas on management and organization. These ideas had largely gone unheard in the United States because they challenged the role of quality as a support function within the organization. In Juran's (1993) own words:

What I told the Japanese was what I had been telling audiences in the United States for years … I suggested the Japanese to find ways to institutionalize programs within their companies that would yield continuous quality improvement. That is exactly what they did.

These seminars jump-started an innovative approach to quality in Japan sustained by the role of the JUSE in involving foreign experts, developing local solutions and disseminating best practices within the business community. A fundamental step in this respect was the establishment in 1951 of the Deming Prize to reward companies for quality improvements.

The JUSE-led quality movement got a label as well as further inspiration from the work of another American, Armand V. Feigenbaum, who in 1961 coined the term TQC to describe the systematic integration of quality development, quality maintenance, and quality improvement efforts of the various groups in an organization (beyond production) in order to pursue customer satisfaction.

Besides the influence of American experts, many Japanese thinkers played a prominent role in shaping the foundations of Japanese TQC, later leaving their mark on the West as well in the 1980s and 1990s. This is the case with Kaoru Ishikawa (1985), credited as the father of the fishbone (cause-and-effect) diagram and the seven basic tools of quality management (Q7) as well as an evangelist of QC circles; Genichi Taguchi, famous for his loss function and the off-line QC approach (Taguchi, Chowdhury, & Wu, 2005); and Shigeo Shingo (1986), a leading expert on the Toyota Production System and theorist of zero QC and the poka-yoke system.

Overall, the Japanese approach was revolutionary in that it radically extended the meaning of quality. As far as the product is concerned, while conformance was still important (or even more important, as zero defects was the target), the quality of the idea became more relevant: defect-free products could still remain unsold, unless they created value for the customers at a price they were willing to pay. To this end, process quality was the key: all possible performance dimensions were now in scope for quality, including cost and time minimization.

In terms of impact on the organization, quality was no longer managed exclusively by a department or a function. It became a mindset (forma mentis) and a way of doing things (modus operandi) across the organization, involving all functions (production, marketing, sales, etc.) at all levels (from chief executive officers, CEOs, to line workers). No final target was set; instead, continuous improvement programs set the pace.

In a nutshell, following Ishikawa’s (1985) own definition, TQC is

to develop, design, produce and service a quality product that is most economical, most useful and always satisfactory for the consumer. To meet this goal, everyone in the company must participate in and promote quality control, including top executives, all division within the company and all employees.

The consequences of the different routes to quality taken in the United States (and in Europe) and in Japan came to light in the 1970s. Whereas American companies had not invested in upgrading their approaches, Japanese firms, eager to shed their reputation as producers of shoddy goods, entered the US market with a value proposition strongly centered on quality.

This caught US executives completely off guard. Every other possible explanation was offered to account for the Japanese success, for example, dumping, low wages, access to preferential financing, exploitation of suppliers, and improper government support. While some of these were relevant, quality was hardly mentioned. On the one hand, an objective evaluation was hindered by a strong bias against Japan, considered a second-tier producer. On the other hand, quality was generally not considered a competitive variable since, even in academia, it was perceived as a purely technical topic. American CEOs lacked the instruments on their dashboards, in terms of customer satisfaction, competitive quality, and performance analysis, to fully grasp the issue. Xerox is a good example (Juran, 1993). In the 1970s, its machines regularly malfunctioned or broke down. Executives, aware of the situation, created a top-notch service team to fix the machines. However, as they had no tools to measure customer satisfaction, they did not realize that their clients did not want timely repairs, but machines that did not break down in the first place. This situation set the stage for the entry of Japanese competitors.

Overall, as noted by Robert Cole (1999):

[…] it is hard to exaggerate the impact of the Japanese quality challenge upon American management. The Americans were truly blindsided by the strong Japanese attack on their prevailing understanding of competitive activity. Whole industries, like color TVs, were lost before U.S. managers even comprehended the major factors contributing to these outcomes. At the very beginning they simply denied quality as a competitive issue and denied the possibility that the Japanese had bested them in those dimensions of quality that mattered most to consumers. [...] Only after managers recognized the quality gap as a major competitive factor, could they take action to address this deficit. […] Only after they stopped blaming their own employees for the problem could they begin to address their own responsibilities.

Only in the 1980s did it become clear to US companies that, if they wanted to stay competitive, their approach to quality had to change.

1.6. The Development of the Western Quality Movement Since the Early 1980s

The Reagan era began with the goal of reaffirming American superiority both in international relations, by rolling back the influence of the Soviet Union, and in economic growth, by re-establishing the United States as the leading high-quality producer against the Japanese stronghold and the emerging export-oriented Four Asian Tigers (Hong Kong, Singapore, South Korea, and Taiwan). The question of the day, echoing a 1980 NBC broadcast that made history, was "If Japan Can … Why Can't We?" Teams of engineers and researchers were sent to Japan to understand what was happening. They came back with a new way of approaching quality (TQC) and production in general (the Toyota Production System).

As quality made front-page news, the American business community was (re)discovering the work of a number of thinkers (the so-called “quality gurus”) who had influenced the Japanese evolution, including the Americans Deming, Juran, Feigenbaum, and Crosby, as well as Japanese experts such as Ishikawa and Imai. Amid the growing excitement, many consulting companies also entered the debate, each promoting its own “unique” approach (Brown, 2013). Similar trends were also present in Europe and Australia.

Despite individual differences, the various models and principles that emerged in this phase are collectively known as total quality management (TQM). In essence, America was rebranding the Japanese TQC, which, incidentally, the JUSE renamed TQM at the beginning of the 1990s to show consistency with the increasingly popular Western label.

As the popularity of TQM grew, however, inflated expectations were often met with over-simplistic practical responses. Since the early 1980s, several companies had adopted analytical and visualization tools or some of the new organizational solutions (e.g., quality circles) without embracing the profound organizational and cultural changes needed (Dale & Lascelles, 2007). Responsibility for quality was usually delegated to middle management, while CEOs, who had the levers to intervene more radically, were not directly involved. Moreover, some cultural and context-related elements of the Japanese approach could hardly be replicated elsewhere. Against this backdrop, business leaders needed to be engaged in defining a sustainable “American way.”

An important step in this direction was the establishment in 1987 of the Malcolm Baldrige National Quality Award, an initiative of the US Congress managed by the National Institute of Standards and Technology. Following the example of the Deming Prize in Japan, companies were evaluated not only on their results, but also on how they managed quality. Seven dimensions were used: leadership, information and analysis, strategic quality planning, human resources utilization, quality assurance of products and services, quality results, and customer satisfaction. The goal was both relevant and ambitious. In the words of the late Secretary of Commerce Malcolm Baldrige (reported in Steeples, 1992, p. 9):

We have to encourage American executives to get out of their boardrooms and onto the factory floor to learn how their products are made and how they can be made better.

To this end, the award established a national framework for TQM and motivated organizations to find their own solutions around it. In particular, it succeeded in stimulating companies to attain excellence for the pride of achievement; recognizing outstanding companies to provide examples to others; establishing guidelines that business, governmental, and other organizations could use to evaluate and improve their own quality efforts; and providing information from winning companies on how to manage for superior quality (Congress of the United States of America, 1987). Preparing a Baldrige application was no simple task, as it required a large investment of money and time; nevertheless, the number of applications rose sharply in the early 1990s. Its philosophy and practices spread to many more companies, as firms also used the Baldrige framework for self-assessment without applying for the award.

Overall, by the end of the 1990s, the United States had shown the world its ability to recover lost ground by leveraging a new approach to quality. In Europe, over the same period, the evolution of quality was relatively uneven. TQM concepts had gained significant popularity there too, and in 1992, on the heels of the Baldrige Award, 14 leading companies (which had previously established the European Foundation for Quality Management) introduced the European Quality Award (Conti, 2007). However, while large companies were increasingly embracing this new vision of quality, many small and medium enterprises (SMEs) were being introduced to a completely different, and in many respects outdated, version of quality and became increasingly dissatisfied with it (Brown, 2013).

In fact, in 1987 the International Organization for Standardization (ISO) had published the first version of the ISO 9000 family of standards, and many firms, especially in Europe owing to strong calls for accreditation from the European institutions, found themselves in an apparent contradiction. On the one hand, the buzz around TQM was creating hype about the benefits of quality; on the other, the ISO version of quality was limited in its scope (conformance of the technical realization) and levers (aligning procedures rather than managing processes).

By definition, a standard does not “invent” anything new. It formalizes shared know-how and best practices into guidelines and requirements in a specific field. In the case of the ISO 9000 standards, that field was not TQM but external QA, as it had taken shape over the previous 20 years, starting from government procurement agencies and related business sectors (e.g., defense, energy, etc.). Here the issue was neither to fight back against Japanese players (national interest and high entry barriers in terms of technological expertise made these industries a difficult target) nor to understand customer expectations in terms of product quality (which were formalized in technical specifications), but to ensure smooth supplier development in terms of quality requirements. In the 1970s, government procurement standards had provided a first answer to the issue. However, as products became more complex and the number of specialized subcontractors grew, the cost of managing these standards rose exponentially: each buyer or procurement agency had to perform periodic inspections of its many suppliers, and, conversely, each supplier had to prove compliance with as many standards as it had customers or contracts. To make the whole process more cost-effective, several industry-specific standards (defense, nuclear plants, electronic components, etc.) had been developed. Furthermore, in response to a request from the British Ministry of Defence, the British Standards Institution published BS 5750 in 1979, the first quality management system standard applicable across industries. Its broad success right from the outset proved that a standardized approach to quality in supplier relationships was needed and paved the way for the formulation of the ISO 9000 standards in 1987.

The first edition’s focus on conformance reflected the standard’s origins in defense. Since then, ISO has issued several revisions (in 1994, 2000, 2008, and 2015) to ensure relevance and applicability; however, the underlying assumption about the meaning of quality did not shift until 2000, when a broader understanding (more in line with TQM) was finally embraced.

The ISO 9000 standards have been extremely important in institutionalizing the role of quality in the customer–supplier relationship and in disseminating some principles of quality management to a large number of companies, including SMEs. However, especially in their early days, as certification was used to enter a particular market, tender for government contracts, or serve large organizations, many companies developed a “certificate on the wall” syndrome that was not backed up by actual improvements in results (Brown, 2013). This, in turn, generated negative publicity around the concept of quality itself, calling for a deep reorientation of expectations and approaches in the following decade.

1.7. Quality at the Turn of the Millennium: A Polarization of Perspectives

The most important legacy of the 1990s for quality management lies in the lessons learned from its failures: once quality goes beyond a purely technical domain (as in QI or QC), there is no universal recipe for success.

At the turn of the millennium, many believed that quality was a “fallen star” (Dale, Zairi, Van der Wiele, & Williams, 2000). Alongside the many companies that had successfully adopted TQM models or ISO 9000 standards, a significant number of managers were increasingly disappointed with the returns on the time and resources invested in implementing them. The lack of success was probably due not to major flaws in the concept, but rather to the way organizations had introduced and used it: ISO 9000 as if a certificate alone could change the way a company operated, and TQM as a set of tools and practices to be applied once. The damage to the overall reputation of quality was magnified as Japan, the champion of the quality-driven approach to business, found itself in a severe economic recession after the bubble of the late 1980s burst. The whole Japanese Economic Miracle was now reinterpreted by revisionist critics, who suggested that a number of management myths, including quality, had been created around the country (Crawford, 1998).

As the concept’s popularity declined, many consulting companies moved away from quality-related services or rebranded them under new names. The same CEOs who had been bandying about a “quality strategy” now avoided the term, fearing it would be seen as an exhausted fad. Even quality awards progressively stripped out references to quality, becoming “business excellence models,” first in Europe (1999), then in the United States (2010).

Despite the disappointment of many scholars and experts in the quality movement, this change in terminology reflected the fact that the wider the scope of quality becomes, the less it can be understood in isolation. Within a company, quality is part of the way of doing business, across functions, processes, and hierarchical levels. From a conceptual point of view, its development shows significant interdependencies with advances in many other managerial disciplines and, more broadly, the social sciences, so much so that drawing a line between which concepts and methodologies belong to quality and which do not seems arbitrary.

So, what is quality all about? Since the late 1990s, the answers to this question, and thus the evolution of quality management, have been polarizing. On the one hand, in response to widespread criticism of the more overarching models, “classic” statistics-based quality has been reborn under the name “Six Sigma,” a methodology combining the basics of QC with continuous improvement practices. Pioneered by Motorola in the 1980s, it became popular only after General Electric adopted it in the mid-1990s. Six Sigma promotes a down-to-earth approach to quality: employees are trained in the methodology at different proficiency levels (or “belts”), teams of such employees are assigned well-defined projects that affect the organization’s bottom line, and Deming-like tools and problem-solving approaches are applied.

While Six Sigma took quality back to its operations management and engineering roots, the recent history of quality has also seen the opposite trend, with thinkers and professionals from various backgrounds joining the debate. The increasing identification of quality with “business excellence” brings all kinds of managerial disciplines into the picture, including organizational design, strategy, finance, and marketing.

Moreover, the fields of application of quality management have widened further over the past 20 years. Quality has grown in importance in the service sector as a consequence of increasing competition and the sector’s modernization. The topic has been treated especially from a marketing point of view, in terms of methodologies to identify gaps between customer expectations and service delivery (e.g., the SERVQUAL tool; Parasuraman, Zeithaml, & Berry, 1988) and of customer relationship management practices. More recently, quality has also become a topic for public administration, following calls for a more efficient use of public resources.

Finally, over the past 20 years the meaning of quality has shifted once more to encompass the impact dimension as well. By the early 2000s, several corporate scandals and concerns over globalization had given new impetus to corporate social responsibility (CSR) and related concepts, a discussion kick-started in the 1970s (Friedman, 1970). CSR, which again sits at the crossroads of many disciplines, seems a natural and progressive extension of quality in its broader sense, and is increasingly part of a quality practitioner’s competences (e.g., managing standards such as SA8000 for social accountability in the workplace and the ISO 14000 family of standards for environmental management) (Orzes et al., 2018; Orzes, Jia, Sartor, & Nassimbeni, 2017; Sartor, Orzes, Di Mauro, Ebrahimpour, & Nassimbeni, 2016; Sartor, Orzes, Touboulic, Culot, & Nassimbeni, 2019).

1.8. Summary and Outlook

The history of quality over the past 200 years has been characterized by “long periods of relative stability punctuated by short periods of turbulent change” (Juran, 1995), usually triggered by market dynamics (e.g., competition, customer expectations) or the advent of new technology. Many of these changes are essentially context-specific with respect to the country or the industry (or even the individual firm).

Overall, despite the many differences in when (and how) each new phase of quality manifested in practice, the evolution has been characterized by a shift from a narrow understanding of quality (compliance of the technical realization) to a broader one, which sees quality as a mindset and modus operandi for reaching the company’s goals in terms of profitability and sustainability. This, in turn, has led to a preventive (rather than reactive) and dynamic (rather than one-off) approach to quality, involving not just the quality department but, systemically, the whole company and its partners.

The next phase of quality is on the horizon. China, the world’s second-largest economy, has placed quality improvement high on the agenda of its industrial policies (State Council of China, 2015). Meanwhile, a new wave of technological innovation (the so-called Fourth Industrial Revolution) is shaping the economy and society. Its practical implications for quality are yet to be seen, but there is no doubt they will be substantial.

References

Abbott, L. (1955). Quality and competition. New York, NY: Columbia University Press.

Anderson, J. C., Rungtusanatham, M., Schroeder, R. G., & Devaraj, S. (1995). A path analytic model of a theory of quality management underlying the Deming management method: Preliminary empirical findings. Decision Sciences, 26(5), 637–658.

Brown, A. (2013). Quality: Where have we come from and what can we expect? The TQM Journal, 25(6), 585–596.

Brown, T. J., & Dacin, P. A. (1997). The company and the product: Corporate associations and consumer product responses. Journal of Marketing, 61(1), 68–84.

Buchanen, S. (1948). The portable Plato. New York, NY: The Viking Press.

Ciment, J. (2007). Postwar America: An encyclopedia of social, political, cultural, and economic history. New York, NY: M.E. Sharpe.

Cole, R. E. (1999). Managing quality fads. Oxford: Oxford University Press.

Congress of the United States of America. (1987). The Malcolm Baldrige National Quality Improvement Act. Retrieved from https://www.nist.gov/sites/default/files/documents/2017/05/09/Improvement_Act.pdf

Conti, T. (2007). A history and review of the European Quality Award Model. The TQM Journal, 19(2), 112–128.

Crawford, R. J. (1998, January–February). Reinterpreting the Japanese economic miracle. Harvard Business Review, 76(1), 179–184.

Crosby, P. B. (1979). Quality is free. New York, NY: McGraw-Hill.

Dale, B. G., & Lascelles, D. M. (2007). Levels of TQM adoption. In B. G. Dale (Ed.), Managing quality (5th ed., pp. 111–126). Oxford: Blackwell.

Dale, B. G., Zairi, M., Van der Wiele, A., & Williams, A. R. T. (2000). Quality is dead in Europe – Long live excellence – True or false? Measuring Business Excellence, 4(3), 4–10.

Deming, W. E. (1982). Out of the crisis. Cambridge, MA: Massachusetts Institute of Technology, Center for Advanced Educational Services.

Eichengreen, B. (2006). The European economy since 1945. Princeton, NJ: Princeton University Press.

Feigenbaum, A. V., & Vallin, A. (1961). Total quality control. New York, NY: McGraw-Hill.

Flynn, B. B., Schroeder, R. G., & Sakakibara, S. (1994). A framework for quality management research and an associated measurement instrument. Journal of Operations Management, 11(4), 339–366.

Friedman, M. (1970, September 13). The social responsibility of business is to increase its profits. The New York Times Magazine, p. 17.

Garvin, D. A. (1984, October). What does ‘product quality’ really mean? MIT Sloan Management Review, 26, 25–45.

Gilmore, H. L. (1974, June). Product conformance cost. Quality Progress, 7(6), 16–19.

Ishikawa, K. (1985). What is total quality control? The Japanese way. Upper Saddle River, NJ: Prentice Hall.

Ivancevich, J. M., Matteson, M. T., & Konopaske, R. (1990). Organizational behavior and management (9th ed.). New York, NY: McGraw-Hill.

Juran, J. M. (1993, August 15). What Japan taught us about quality. The Washington Post, p. H6. Retrieved from https://www.washingtonpost.com/archive/business/1993/08/15/what-japan-taught-us-about-quality/271f2822-b70d-4491-b942-4954caa710f8/?utm_term=.2c3196cc2ff3

Juran, J. M. (1995). A history of managing for quality. The evolution, trends and future directions of managing for quality. Milwaukee, WI: ASQC Quality Press.

Kuehn, A. A., & Day, R. L. (1962, November–December). Strategy of product quality. Harvard Business Review, 40(6), 100–110.

Leffler, K. B. (1982). Ambiguous changes in product quality. American Economic Review, 72(5), 956–967.

Maynes, E. S. (1976). The concept and measurement of product quality. In N. E. Terleckyj (Ed.), Household production and consumption (pp. 529–584). Cambridge, MA: National Bureau of Economic Research.

Mohr, L. A., Webb, D. J., & Harris, K. E. (2001). Do consumers expect companies to be socially responsible? The impact of corporate social responsibility on buying behavior. The Journal of Consumer Affairs, 35(1), 45–72.

Nassimbeni, G., Sartor, M., & Orzes, G. (2014). Countertrade: Compensatory requests to sell abroad. Journal for Global Business Advancement, 7(1), 69–87.

Orzes, G., Jia, F., Sartor, M., & Nassimbeni, G. (2017). Performance implications of SA8000 certification. International Journal of Operations and Production Management, 37(11), 1625–1653.

Orzes, G., Moretto, A. M., Ebrahimpour, M., Sartor, M., Moro, M., & Rossi, M. (2018). United Nations global compact: Literature review and theory-based research agenda. Journal of Cleaner Production, 177, 633–654.

Parasuraman, A., Zeithaml, V. A., & Berry, L. L. (1988). SERVQUAL: A multiple-item scale for measuring consumer perceptions of service quality. Journal of Retailing, 64(1), 12–40.

Piersing, R. M. (1974). Zen and the art of motorcycle maintenance: An inquiry into values. New York, NY: William Morrow.

Reeves, C. A., & Bednar, D. A. (1994). Defining quality: Alternatives and implications. The Academy of Management Review, 19(3), 419–445.

Saraph, J. V., Benson, P. G., & Schroeder, R. G. (1989). An instrument for measuring the critical factors of quality management. Decision Sciences, 20(4), 810–829.

Sartor, M., Orzes, G., Di Mauro, C., Ebrahimpour, M., & Nassimbeni, G. (2016). The SA8000 social certification standard: Literature review and theory-based research agenda. International Journal of Production Economics, 175, 164–181.

Sartor, M., Orzes, G., Touboulic, A., Culot, G., & Nassimbeni, G. (2019). ISO 14001 standard: Literature review and theory-based research agenda. Quality Management Journal, 26(1), 32–64.

Shewhart, W. A. (1931). Economic control of quality of manufactured products. New York, NY: D. Van Nostrand.

Shingo, S. (1986). Zero quality control: Source inspection and the poka-yoke system. Cambridge, MA: Productivity Press.

State Council of China. (2015). Made in China 2025. Retrieved from http://www.gov.cn/zhengce/content/2015-05/19/content_9784.htm

Steeples, M. M. (1992). The corporate guide to the Malcolm Baldrige National Quality Award: Proven strategies for building quality into your organization. Milwaukee, WI: ASQC Quality Press.

Taguchi, G., Chowdhury, S., & Wu, Y. (2005). Taguchi quality engineering handbook. Hoboken, NJ: John Wiley and Sons.

Taylor, F. W. (1911). Principles of scientific management. New York, NY: Harper & Brothers.

Weckenmann, A., Akkasoglu, G., & Werner, T. (2015). Quality management – History and trends. The TQM Journal, 27(3), 281–293.

Womack, J. P., Jones, D. T., & Roos, D. (1991). The machine that changed the world: The story of lean production. New York, NY: Harper Perennial.