Chapter 11. Visual Attention While Driving

Measures of Eye Movements Used in Driving Research

David Crundall and Geoffrey Underwood

University of Nottingham, Nottingham, UK

Vision is the predominant sensory modality used when driving, and as such it has received considerable interest from researchers who employ eye tracking devices to monitor where drivers look when engaged in on-road driving or when performing subcomponents of the driving task in the laboratory. But what measures provide the most reliable evidence or the greatest insight into the task of driving? Using examples from the research literature, this chapter examines some of the many eye movement measures that have been applied to driving research. Such measures include the probability of looking at a prespecified object, measures of glance duration, and measures of the spread of visual search. We conclude that no single measure can escape controversy, and all data must be viewed in the context in which they were collected. We argue for a multivariate approach to measuring eye movements in driving, combining many measures to obtain a holistic view of visual skills and strategies.

1. Introduction

Van Gompel, Fischer, Murray, and Hill (2007) introduced their book on eye movements with a historical perspective on the idea that the eyes provide access to the inner workings of the mind and brain. They quote De Laurens (1596) as referring to the eyes as “windowes (sic) of the mind” (p. 3). On this view, what the eye is looking at offers an indirect means of observing what the brain is processing. This link between the location of the eye in the visual world and the concomitant processing in the brain is most formally stated in Just and Carpenter's (1980) eye–mind assumption, which states that the eye remains fixated on an object until the brain has finished processing it. Various ancillary assumptions can be appended to this, such as the argument that the brain should not be processing any visual information that the eye is not looking at, and that whenever the eye is fixating an object, that object must be undergoing processing. If these assumptions hold, it is easy to see how valuable it would be for a psychologist to monitor the eye movements of individuals engaging in various tasks, including driving, which depends predominantly on the processing of visual information.

Unfortunately, the story is not so simple. Numerous studies demonstrate the ability of readers to process words parafoveally—that is, to process them without looking at them directly (Underwood & Everatt, 1992). Conversely, there is evidence that looking directly at an object or area of a scene does not guarantee that the viewer will process the information. Studies of change blindness have demonstrated that viewers may not notice a change made to an object in a visual scene even though they are looking at the object when the change occurs (Caplovitz, Fendrich, & Hughes, 2008). Indeed, whenever the mind wanders while reading a book, it is common to feel that one has read a sentence without actually processing what it meant, and drivers sometimes report not being aware of familiar sections of roadway that they have successfully negotiated. Despite the refutation of the strong version of the eye–mind assumption, the link between what the eye is looking at and what the viewer is thinking about is still very robust. Although Underwood and Everatt (1992) have presented several challenges to the eye–mind assumption, they acknowledge that these are all special cases in which the assumption can be shown to fail, and in general it is a safe working assumption that if someone is looking at something, then he or she is processing it. Modern reviews of eye tracking (Van Gompel et al., 2007) have demonstrated the appeal of this methodology as a means of better understanding how people approach and engage in a variety of tasks and situations.

We believe that the driving task is eminently suited to the application of eye tracking methodologies. The information that a driver uses is predominantly visual (Sivak, 1996), and a wide range of specific driving behaviors, from navigation to anticipation of hazardous events, are primarily dependent on the optimum deployment of attention through overt eye movements. Classic studies of road collision statistics have identified perceptual problems as a leading cause of traffic crashes (Lestina & Miller, 1994; Sabey & Staughton, 1975; Treat et al., 1979), and in-car observation of driver behavior preceding an actual crash supports the causal role of distraction and inattention (Klauer, Dingus, Neale, Sudweeks, & Ramsey, 2006). Several reviews of driving research have reached the same conclusion: When and where drivers look is of vital importance to driver safety (Lee, 2008; Underwood, 2007), and we need to record and interpret these eye movements in order to reduce death and injury on our roads (Shinar, 2008). Recording and interpreting eye movements has indeed been a valuable tool in driving research during the past 40 years, and we review many of these findings in this chapter. This methodology is likely to become even more important in the future with the development of transport simulators that allow the recording of eye movements in near-naturalistic situations while maintaining a high degree of experimental control over the environment. Considering the interest that has been and will be shown in recording drivers' eye movements, we believe that it is important to review the various measures that can be and have been captured in previous studies and to discuss how these different measures can be used to address different hypotheses. This chapter is not concerned with the benefits of one eye tracker over another (for a review of eye tracking methods, see Duchowski, 2007) but is restricted to the measures that might be recorded.

Essentially, eye movements consist of two primary events: fixations and saccades. Fixations are periods of relative stability, during which the eyes focus on something in the visual scene. Such fixations most often reflect the fact that the brain is processing the fixated information. Saccades are rapid, ballistic jumps of the eye that separate the fixations and serve to orient the focus of the eyes from one point of interest to another. No visual information is taken in during these rapid movements. Although we can reduce eye movements to these two components, there are numerous exceptions and different methods of capturing, combining, averaging, and analyzing these processes. This chapter provides an overview of some of these methods and also reviews the various studies that have employed these methods in their search for greater insight into the task of driving. We start with the most obvious of measures—assessing whether drivers actually look at elements of the road scene that might help prevent a collision.
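In practice, fixations and saccades are not recorded directly; they are derived from raw gaze samples by an event-detection algorithm, and the choice of algorithm and parameters is one of the methodological decisions alluded to above. The sketch below shows one common approach, velocity-threshold identification (I-VT), as a minimal illustration only. The sample format (time in ms, gaze position in degrees of visual angle), the 30 deg/s velocity threshold, and the 100-ms minimum fixation duration are assumptions chosen for the example, not parameters used in the studies reviewed here.

```python
import math
from dataclasses import dataclass


@dataclass
class Fixation:
    start_ms: float
    end_ms: float
    x: float  # mean horizontal gaze position (deg)
    y: float  # mean vertical gaze position (deg)

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def _close_run(run, fixations, min_duration_ms):
    """Store a run of slow samples as a fixation if it lasted long enough."""
    if len(run) < 2:
        return
    start, end = run[0][0], run[-1][0]
    if end - start >= min_duration_ms:
        xs = [s[1] for s in run]
        ys = [s[2] for s in run]
        fixations.append(Fixation(start, end, sum(xs) / len(xs), sum(ys) / len(ys)))


def classify_ivt(samples, velocity_threshold=30.0, min_duration_ms=100.0):
    """Velocity-threshold (I-VT) fixation identification (illustrative sketch).

    samples: time-ordered list of (t_ms, x_deg, y_deg) gaze samples.
    Consecutive samples whose point-to-point velocity (deg/s) is below
    velocity_threshold are grouped into one candidate fixation; faster
    movement is treated as saccadic and ends the current candidate.
    """
    fixations = []
    run = samples[:1]
    for prev, cur in zip(samples, samples[1:]):
        dt_s = (cur[0] - prev[0]) / 1000.0
        if dt_s <= 0:
            continue  # skip duplicate or out-of-order timestamps
        velocity = math.hypot(cur[1] - prev[1], cur[2] - prev[2]) / dt_s
        if velocity < velocity_threshold:
            run.append(cur)
        else:
            _close_run(run, fixations, min_duration_ms)
            run = [cur]
    _close_run(run, fixations, min_duration_ms)
    return fixations
```

Samples moving faster than the threshold separate one fixation from the next, and runs of slow samples shorter than the minimum duration are discarded rather than reported as fixations. Real systems typically add noise filtering and handling of blinks and track loss, which this sketch omits.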

2. Do Drivers Look at Critical Information?

Lee (2008) reviewed 50 years of research and concluded that collisions occur because drivers “fail to look at the right thing at the right time” (p. 525). We first consider how we should measure whether drivers “look at the right thing” before later considering how to measure whether they look “at the right time.”

The simplest conception of whether an individual has looked at a certain object in a scene is whether the eye coordinates recorded by an eye tracker are coincident with the world coordinates of the object. This can be calculated automatically for eye movements to static images, where the precise coordinates of an object are easily defined and related to the eye position coordinates. Many eye tracking software packages allow areas of interest (AOIs) to be generated for particular pictures, which allow automatic calculation of when individuals look at specific objects. These AOIs are regions of a visual image that are defined by two-dimensional (2-D) coordinates in the viewing plane and thus allow software to identify fixations that fall within their boundaries. However, there are two particular problems with this approach. First, the AOI is typically a regular geometric shape drawn on top of the image or stimulus (most often a rectangle). Unfortunately, real-world objects rarely fit into such shapes. Objects may have irregular outlines, or their 2-D shape may be distorted by the 3-D representation. Objects in real scenes also tend to be partially obscured or may themselves partially obscure other interesting stimuli. It is impossible to determine from 2-D data whether the participant is genuinely looking at the car ahead or whether he or she is looking through the windows of the car to identify any further traffic that might be obscured. The second, more pertinent, problem is that AOIs cannot be practically defined for unpredictable interactions on the road or in a driving simulator. Thus, if eye movements are recorded from an on-road vehicle, it is impractical to define the coordinates of a particular vehicle in the road ahead because the changing positions of both the target car and the participant's car would require the AOI coordinates to be updated constantly.
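A minimal sketch of the static, rectangular AOI test described above, assuming fixation locations and AOI bounds share the same image coordinate system (origin at top left, y increasing downward). The class and function names, and the example AOIs, are hypothetical. The rectangle inherits exactly the limitations discussed: it counts background pixels around an irregular object as hits, and it cannot distinguish looking at the car ahead from looking through its windows.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RectAOI:
    """Axis-aligned rectangular AOI in image coordinates (y increases downward)."""
    name: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def label_fixations(fixations, aois):
    """Return, for each (x, y) fixation, the name of the first AOI containing it, or None."""
    return [next((a.name for a in aois if a.contains(x, y)), None) for x, y in fixations]


if __name__ == "__main__":
    # Hypothetical AOIs on a 1024 x 768 still image of a road scene.
    car_ahead = RectAOI("car_ahead", 450, 380, 570, 460)
    sign = RectAOI("sign", 800, 150, 860, 230)
    print(label_fixations([(500, 400), (830, 200), (100, 700)], [car_ahead, sign]))
    # -> ['car_ahead', 'sign', None]
```

A fixation that falls inside overlapping AOIs is assigned to the first AOI in the list, so the ordering of AOIs is itself an analysis decision.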
Improvements in the software of at least one eye tracking system have extended the application of AOIs to video-based stimuli (where one can specify an AOI at several points during a video, and the software will interpolate the coordinates in between, creating a dynamic AOI); however, this procedure is limited by the predictability of the dynamic object that one wishes to track. For instance, it is relatively easy to interpolate the coordinates of an approaching car if the vehicle maintains its heading and speed: Drawing an AOI around the car when it first appears in the video and when it is last visible will allow the software to estimate the rate and direction of the AOI expansion as the vehicle approaches. Less predictable patterns require more experimenter-defined AOIs to allow more accurate prediction of how the AOIs change. At least with video-based stimuli, even if a large number of experimenter-defined AOIs were required to identify a single object, the calculations could then be applied across all participants watching the same video clips. With simulation and on-road eye tracking, however, this benefit is absent because the position and dynamic nature of visual targets will vary across participants. A requirement to define a large number of AOIs for every participant simply to assess whether drivers tend to look at a particular object would rapidly become impractical. Although it is theoretically feasible that a simulator could record coordinates of objects as they move through the virtual world, providing a personalized dynamic AOI (several research groups are pursuing this goal), we are unaware of any published articles that have used this methodology. 
Instead, researchers who are faced with eye movement data from dynamic stimuli (especially on-road eye tracking) must often perform a frame-by-frame analysis of video footage containing the dynamic stimuli (e.g., the external world in on-road tests, often recorded through a windscreen-mounted or head-mounted camera) with an overlaid cursor showing where the eye tracker estimates the participant was looking.
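The dynamic, interpolated AOI described above for video-based stimuli can be sketched as linear interpolation between experimenter-defined keyframes. This assumes each rectangle edge is interpolated linearly in time; commercial packages may use other schemes, and the function name and keyframe format here are hypothetical. For a vehicle that maintains its heading and speed, the first and last keyframes may suffice, although perspective makes the growth of its image only approximately linear; less predictable trajectories need more keyframes to keep the interpolated rectangle on the object.

```python
from bisect import bisect_right


def interpolate_aoi(keyframes, t):
    """Linearly interpolate a rectangular AOI between keyframes (illustrative sketch).

    keyframes: list of (time_s, (left, top, right, bottom)) sorted by time.
    Returns the rectangle at time t, or None outside the keyframed interval
    (e.g., before the vehicle appears or after it leaves the video).
    """
    times = [k[0] for k in keyframes]
    if not keyframes or t < times[0] or t > times[-1]:
        return None
    i = bisect_right(times, t)
    if i == len(times):  # t coincides with the last keyframe
        return keyframes[-1][1]
    t0, r0 = keyframes[i - 1]
    t1, r1 = keyframes[i]
    w = (t - t0) / (t1 - t0)  # fraction of the way from keyframe i-1 to keyframe i
    return tuple(a + w * (b - a) for a, b in zip(r0, r1))


if __name__ == "__main__":
    # Hypothetical keyframes: an approaching car drawn when it first appears
    # (t = 0 s) and when it is last visible (t = 2 s).
    keyframes = [(0.0, (400, 250, 420, 262)), (2.0, (300, 200, 500, 320))]
    print(interpolate_aoi(keyframes, 1.0))  # -> (350.0, 225.0, 460.0, 291.0)
```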

A study that employed this methodology was conducted by Pradhan et al. (2005). Three groups of drivers, of varying age and experience, drove through a series of simulated scenarios in which potential hazards might (but did not) occur. For instance, one scenario contained a line of bushes obscuring the entry to a pedestrian crossing. It was feasible that a pedestrian could have emerged from behind the hedge and entered the crossing in front of the participant's vehicle. Participants were assessed in regard to whether they looked at this hedge on approach to the pedestrian crossing, with the associated assumption that a glance at the hedge reflected the driver's concern that it might conceal a pedestrian. The results of the study demonstrated that novices “often completely fail to look at elements of a scenario that clearly need to be scanned in order to acquire information relevant to the assessment of a potential risk” (p. 851). In one particular scenario, only 10% of novices looked in the appropriate direction to check whether pedestrians might emerge from behind a parked truck onto a crossing. The more experienced drivers, by contrast, were significantly more likely to look at these a priori areas of the visual scene, which was considered indicative of safe behavior by the experimenters. Similar results were found by Borowsky, Shinar, and Oron-Gilad (2010), who compared young, inexperienced drivers to more experienced drivers. They noted instances in which the experienced drivers fixated areas in the scene that they considered important to hazard detection (e.g., vehicles merging from an adjoining road). In this particular instance, the young, inexperienced drivers tended to focus on the road ahead, apparently disregarding the hazard posed by merging vehicles. 
These results are important because they suggest why novice drivers are consistently overrepresented in crash statistics (Clarke et al., 2006; Organisation for Economic Co-operation and Development/European Conference of Ministers of Transport, 2006; Underwood, 2007; Underwood et al., 2007; Underwood et al., 2009). Several researchers have argued that novices have poor hazard perception skills (Horswill & McKenna, 2004), and the results of Pradhan et al. (2005) and Borowsky et al. (2010) ostensibly demonstrate that a failure to anticipate the locations from which hazards may emerge, and then to prioritize these locations for visual search, may be a major contributor to failures in hazard perception.
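At the group level, the binary glance measure used in studies such as these reduces to the proportion of participants who fixated a prespecified AOI at least once. A minimal sketch follows, assuming each participant's fixations have already been labeled with AOI names (for example, by frame-by-frame coding of the video). The data structure, labels, and example values are hypothetical and invented for illustration; they are not data from Pradhan et al. (2005) or Borowsky et al. (2010).

```python
def glance_probability(groups, aoi_name):
    """Proportion of participants in each group with at least one fixation on an AOI.

    groups: {group_name: {participant_id: [AOI label of each fixation]}}
    Each group must contain at least one participant.
    """
    result = {}
    for group, participants in groups.items():
        looked = sum(1 for labels in participants.values() if aoi_name in labels)
        result[group] = looked / len(participants)
    return result


if __name__ == "__main__":
    # Hypothetical labels for two groups of drivers (invented for illustration).
    groups = {
        "novice": {"p1": ["road_ahead"], "p2": ["road_ahead", "truck_edge"],
                   "p3": ["road_ahead"], "p4": ["mirror", "road_ahead"]},
        "experienced": {"p5": ["truck_edge"], "p6": ["road_ahead", "truck_edge"]},
    }
    print(glance_probability(groups, "truck_edge"))
    # -> {'novice': 0.25, 'experienced': 1.0}
```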

There is, however, a potential confound in the interpretation of simple glance measures that record only where the driver has looked. Often, they do not take into account any measure of duration, simply assuming that to look at an object is to process that object. However, extremely short fixations may not reflect sufficient processing time for a particular fixated object to be identified. It is common practice in eye movement research to filter out extremely short fixations from subsequent analyses because they are unlikely to reflect object processing (researchers often define a fixation as at least 100 ms of eye stability). Fixations shorter than 100 ms do occur, but they are more likely to reflect attempts to reorient visual search on the basis of global features in the whole scene rather than processing of what is at the point of fixation. However, the 100-ms cutoff is not a psychophysical threshold beyond which any fixation must have accessed the identity of the fixated object; it is instead a heuristic for accepting and rejecting data. We know that some objects take longer to process than others because they have a higher threshold for identification. This is most obvious in the research literature on eye movements during reading. Results have consistently shown that lower frequency words receive longer fixations (Liversedge & Findlay, 2000; Rayner, 1998), and this appears to translate to objects in scenes, with unexpected or inconsistent objects receiving longer fixations (Henderson & Hollingworth, 1999; Underwood et al., 2008). Even task-irrelevant objects may evoke longer fixation durations due to their novel or unexpected nature (Brockmole & Boot, 2009). This, in itself, does not pose a problem for the typical glance analyses seen in many driving studies (Pradhan et al., 2005), provided that all glances to target objects meet the required threshold duration. The problem arises when fixations are curtailed before the threshold is met.
This issue is detailed in the E-Z Reader model of reading (Reichle, Rayner, & Pollatsek, 2003). Reichle et al. posit two levels of lexical access when looking at a word in a sentence. The first level is the familiarity check, which is undertaken when the eye first lands on the word. If the word is identified as familiar (and therefore as having a low threshold for complete identification), then the oculomotor system begins to plan the next saccade in parallel with the second level of word processing, secure in the knowledge that the full identity of the word will be accessed before the saccade is triggered. If, however, the word does not pass the familiarity check, then the plan for the next saccade may be delayed. Even if the system has already begun to plan the next saccade, the plan can be canceled if the cancellation is issued quickly enough (during the labile stage of the saccadic planning process). If the reader realizes that the word is a difficult one to process only once the saccadic plan has reached the nonlabile stage, the eye movement will go ahead even though more attention needs to be devoted to the troublesome word. Thus, the eye will move away from the word before it is identified. This will often lead to a regressive saccade, in which the eye jumps back in the text in order to reprocess the tricky word.
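The labile/nonlabile logic described above can be caricatured as a three-way decision rule. The sketch below is a toy for exposition only: it is not the E-Z Reader model, it ignores the model's actual stages and timing parameters, and the function name, arguments, and millisecond values are hypothetical.

```python
def fixation_outcome(difficulty_flagged_ms, labile_end_ms):
    """Toy rule for how a fixation ends (NOT an implementation of E-Z Reader).

    difficulty_flagged_ms: time after fixation onset at which the system registers
        that the item needs more processing, or None if it is treated as familiar.
    labile_end_ms: time at which the already-started saccade program can no
        longer be canceled (the end of its labile stage).
    """
    if difficulty_flagged_ms is None:
        # Item treated as familiar: the planned saccade goes ahead.
        return "saccade proceeds as planned"
    if difficulty_flagged_ms < labile_end_ms:
        # Difficulty noticed while the program is still labile: cancel it.
        return "saccade canceled; fixation extended"
    # Difficulty noticed too late: the eye leaves and may come back later.
    return "saccade executes; regression likely"


if __name__ == "__main__":
    for flagged in (None, 80, 150):  # hypothetical timings, labile stage ends at 120 ms
        print(flagged, "->", fixation_outcome(flagged, labile_end_ms=120))
```

The first branch returns the same outcome whether the item was genuinely easy or was merely treated as familiar, so a fixation that ends this way leaves no trace in the eye movement record of whether identification actually succeeded.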

It is highly probable that something similar occurs with drivers' glances on the road. Consider, for instance, the most common cause of motorcycle collisions in the United Kingdom: a car driver pulling out from a side road into the path of an oncoming motorcycle (Clarke, Ward, Bartle, & Truman, 2007). The driver will check down the road to see if there is any conflicting traffic. If the driver's eyes land directly upon an approaching car, the familiarity check will probably be completed successfully, allowing the driver to plan the next saccade during level 2 processing. Information about this approaching vehicle (trajectory, speed, etc.) could then be integrated with information about traffic coming from the opposite direction, which may then identify a suitable gap in which to pull out. Even if the approaching car were displaying behavior that required closer attention, this should be flagged while the saccade program is still labile, allowing the subsequent saccade to be canceled and more attention to be given to the car.

The situation becomes more precarious, however, if the approaching vehicle is a motorcycle. Because motorcycles make up only 1% of UK traffic (Department for Transport, 2010), they are novel, low-frequency items that should therefore be associated with higher thresholds for target identification (and thus, just as with low-frequency words, would require longer fixations before identification is achieved). Even if the driver looks directly at the approaching motorcycle, the low cognitive and physical conspicuity of the target may lead the familiarity check to signal, wrongly, that the road is empty. Thus, the eye may move away from the motorcycle before the perceptual system has had time to process and identify the threat. Even if this happens, in fortunate cases the driver may still realize that there is an approaching motorcycle, albeit only after the saccade program has reached its nonlabile stage. Although the eyes move off the motorcycle before fully processing it, the driver may then have enough information to recognize the error and make a second look at the motorcycle, much as a reader makes a regressive saccade to a troublesome word. In particularly unfortunate cases, however, the driver may have no contradictory information to challenge the initial assumption that the road is empty, and the maneuver may proceed with dire consequences.

This is a classic case of a “look but fail to see” accident, in which drivers often report that they had looked in the appropriate direction but had completely failed to see the approaching motorcycle (Brown, 2002). There is even evidence that initial gaze durations upon approaching motorcycles at T-junctions can, in certain situations, be shorter than the corresponding gazes devoted to approaching cars. Considering that cars and motorcycles are the equivalent of high- and low-frequency words, respectively, we would expect the opposite pattern, with longer initial gazes on motorcycles. The short initial gazes on motorcycles were therefore argued to reflect initial fixations that were not long enough for drivers to realize exactly what they were looking at (Crundall, Crundall, Clarke, & Shahar, in press). To pursue the analogy between driving and reading further, a study of eye movements during reading found the equivalent of “look but fail to see” events (Ehrlich & Rayner, 1981). Readers were given the task of detecting misspellings in a paragraph of text, and quite often they would look at a misspelling but not report it. Interestingly, this was more likely to happen if the word was predictable: The readers saw what they expected to see, and perhaps drivers sometimes do the same.

In light of the possibility of “look but fail to see” errors, one can understand how perilous it might be to rely on a binary measure of whether a glance occurred or not. Instead, we argue that glance probability or frequency needs to be paired with a measure of glance duration. The following section considers the different measures of duration that can be used and what previous studies have shown regarding the sensitivity of these durations to different driving conditions.

3. Measures of Glance Duration

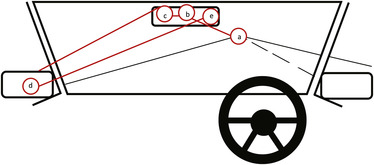

Several measures of glance duration are typically used in studies of reading, scene perception, and driving. The smallest unit of duration is the first fixation duration (FFD), which represents the time that the eye dwells in one place on an object for the first time. This is only applicable in relation to specific objects. For instance, it makes sense to consider the FFD on a pedestrian who steps out from behind a truck, but it is less useful to record the FFD on more general categories such as the “road ahead.” This initial fixation and subsequent fixations are often averaged to create a mean fixation duration (MFD), which can apply to both specific objects and general categories. A gaze differs from a fixation in that it can comprise several consecutive fixations on the same object. Depending on how large an object of interest is, it may be possible to make two separate fixations within the same object without saccading away from it (i.e., the eye moves to another location within the object). Only when a fixation lands outside the boundary of the object does the gaze end. Total dwell time (TDT) is simply the sum of all fixations on a specific object. Figure 11.1 shows a pictorial representation of how these measures are calculated.
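The measures in Figure 11.1 can be computed from a time-ordered list of fixations once each fixation has been assigned to an AOI. The sketch below follows the definitions given above (TDT as the sum of fixation durations on the object, a gaze as a run of consecutive fixations on it); it assumes AOI labeling has already been done, and the function name and labels are hypothetical. Some definitions of glance or dwell time also include the saccades into or within the object; this sketch counts fixation time only.

```python
from itertools import groupby
from statistics import mean


def duration_measures(fixations, target):
    """Glance-duration measures for one AOI (illustrative sketch).

    fixations: time-ordered list of (aoi_label, duration_ms) pairs.
    Returns FFD, all gaze durations (first gaze first), MFD, and TDT.
    """
    on_target = [d for label, d in fixations if label == target]
    if not on_target:
        return {"FFD": None, "gazes": [], "MFD": None, "TDT": 0}
    # A gaze is a run of consecutive fixations on the target; it ends as
    # soon as a fixation lands outside the target.
    gazes = [sum(d for _, d in run)
             for label, run in groupby(fixations, key=lambda f: f[0])
             if label == target]
    return {
        "FFD": on_target[0],     # first fixation on the target
        "gazes": gazes,          # gazes[0] is the first gaze duration
        "MFD": mean(on_target),  # mean fixation duration on the target
        "TDT": sum(on_target),   # total dwell time (fixation time only)
    }


if __name__ == "__main__":
    # Hypothetical sequence: road ahead, two fixations on a pedestrian,
    # back to the road, one more look at the pedestrian, then the mirror.
    seq = [("road", 300), ("pedestrian", 180), ("pedestrian", 220),
           ("road", 250), ("pedestrian", 140), ("mirror", 400)]
    print(duration_measures(seq, "pedestrian"))
```

In this example the pedestrian receives two gazes, so the first gaze duration and the TDT differ even though both are built from the same fixations.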

Typically, all of these measures reflect various levels of processing of the stimuli, although there are arguments for and against different measures. For instance, TDT represents the most stable measure of overt attention, presented either in absolute terms (provided that all participants had the same opportunity to fixate the object) or in percentage or ratio terms. Individual fixations, however, represent the most sensitive measure of processing demand, although they are more susceptible to slight variations in a multitude of potentially confounding factors.
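When TDT is reported in ratio terms, one option is to divide it by the time during which the object was available to be fixated, which removes the requirement that all participants had the same viewing opportunity. A minimal sketch, assuming the object's visibility window is known (for example, from video coding or simulator logs); the function name is hypothetical.

```python
def dwell_proportion(tdt_ms, visible_from_ms, visible_to_ms):
    """Express total dwell time as a proportion of the object's visible period.

    Normalizing by viewing opportunity allows comparison between participants
    who had different amounts of time to look at the object (e.g., because
    they approached it at different speeds in a simulator).
    """
    opportunity = visible_to_ms - visible_from_ms
    if opportunity <= 0:
        raise ValueError("object must be visible for a positive interval")
    return tdt_ms / opportunity


if __name__ == "__main__":
    # Hypothetical: 540 ms of dwell on an object visible from 1.0 s to 3.7 s.
    print(dwell_proportion(540, 1000, 3700))
```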

Henderson (1992) argues that more global measures of fixation time on an object, such as TDT, will include not just the time spent processing the target but also many post-identification processes, such as integrating the object into a situational model. On this basis, Henderson argues in favor of the FFD as the cleanest measure of initial processing demands, although within the driving domain this could give undue prominence to “look but fail to see” fixations, potentially leading researchers to underestimate the processing time required to successfully identify an object in the first fixation. Some researchers have gone further than Henderson: Kotowicz, Rutishauser, and Koch (2010) suggest that in simple visual search experiments, a fixation on the target might not be necessary to identify it. They found that participants could accurately report a target location with as little as 10 ms of fixation on the target before it was removed. They argue that the target is actually identified extrafoveally during the visual search, which then results in the saccade to the target location. The amount of time spent fixating the target does not increase target identification accuracy, but it does increase confidence in reporting the item as the target. We argue, however, that Kotowicz et al.’s findings are unlikely to transfer from a simple visual search task for a target among distracters to a complex driving situation. In simple visual search tasks, the targets are usually highly constrained in both appearance and location, the task tends not to require any secondary response, and the distracters are well defined. In driving, however, there are multiple task goals to monitor and a nonuniform background to interrogate.
Furthermore, it might be understandable why participants in some simple visual search experiments attempt to maximize their use of extrafoveal vision: Without any peripheral cues, participants are faced with using a random search strategy (or at least a strategy that is unrelated to the likely location of subsequent targets). When driving, however, people often use specific search strategies to scan the road ahead in anticipation of potential hazards that might occur. As discussed later, these strategies are built up from experience and from learning where in the driving scene certain information is available or where certain hazards might be likely to appear. Thus, drivers are unlikely to mobilize as many extrafoveal resources to direct their saccades as were participants in Kotowicz et al.’s (2010) simple visual search experiments.

The use of fixation and gaze durations in driving research has revealed some very consistent patterns. For instance, Chapman and Underwood (1998) and Underwood, Phelps, Wright, van Loon, and Galpin (2005) demonstrated that fixation durations tend to increase in the presence of specific hazards (e.g., the car ahead suddenly braking). We interpret this in the same way as the longer fixation durations evoked by low-frequency words or incongruent objects in pictures: Because hazards are relatively novel events, which are more difficult to predict and require additional processing, they require longer fixation durations. This is not to say that all long fixation durations on a hazard are indicative of high initial processing demands; as Henderson (1992) would argue, it is likely that longer fixations also reflect ongoing monitoring of the hazard, attempts to integrate it into a situational model, and concomitant memory processes. Regardless of the precise reason for the increased fixation lengths during hazards, it is clear that this is a form of attentional capture, in which the saccade to the next fixation location is delayed for longer than usual due to the additional processing that hazards entail.

Interestingly, there is an additional parallel to the reading literature: Not only do low-frequency, novel, and complex hazards (or words) demand longer fixations but also those individuals who are considered better at the primary task tend to have overall shorter fixations. In studies of eye movements in reading, it has been shown that reading age correlates with a reduction in fixation length on words (Rayner, 1998), and greater exposure to typically low-frequency words will lower the thresholds for those words relative to those of other readers. For instance, lawyers are likely to have shorter fixations on certain Latin phrases compared to readers from other professions. In the same way, it appears that greater experience in driving tends to lead to a reduction in the duration of certain types of fixations. Chapman and Underwood (1998) noted this when recording the eye movements of novice and experienced drivers while watching hazard perception video clips. Although the appearance of a hazard tended to increase the fixation durations of all participants, the experienced drivers had consistently shorter fixations than the novice drivers in all conditions, especially in the presence of a hazard. They argued that this was because the hazardous events are less novel to the experienced drivers: If they have previously been exposed to similar situations, their threshold for understanding the threat posed by a particular object should be lower. This experiential effect has been replicated in a simulator. Konstantopoulos, Crundall, and Chapman (2010) measured the eye movements of driving instructors and learner drivers while navigating a hazardous route through a virtual city in a medium fidelity simulator. They found the driving instructors to have shorter, more frequent fixations than the learner drivers, which supports the suggestion that experience and expertise improve one's ability to extract relevant driving information from a single fixation. 
A second finding of interest from the Konstantopoulos study was an increase in fixation durations during nighttime driving and driving through rain in the simulator. They argue that the nighttime and rain conditions decreased visibility, thereby increasing the difficulty of extracting information during individual fixations. Again, this parallels the reading research, which suggests that words that are more difficult to perceive will require longer fixations (Reingold & Rayner, 2006).

However, there are certain paradoxes in the fixation duration literature on driving that echo Henderson's (1992) concern that anything beyond the FFD is likely to reflect a host of post-identification processes. Whereas hazards tend to produce longer fixations, more complex driving scenes tend to reduce overall fixation length. For instance, Chapman and Underwood (1998) noted that video clips of complex urban settings tended to evoke significantly shorter fixations than did clips of rural settings. Similarly, on-road data suggest that more visually complex roadways tend to produce shorter, more frequent fixations than more sparsely populated roads, such as dual carriageways or single-carriageway rural roads (Crundall & Underwood, 1998). Typically, the urban and suburban roads that produce the shortest fixation durations have a greater number of potential distracters and potential hazards: Shop fronts, pedestrians, parked vehicles, road and informational signs, and roadside advertising all vie for the attention of drivers. This increase in visual complexity requires a higher sampling rate of visual search. In contrast, empty, undulating rural roads provide little to distract or interest the driver beyond the immediate road ahead. Although participants might occasionally search hedgerows for gates and emerging vehicles, the majority of their time will be spent looking as far down the road as possible (although how far one can comfortably look down the road varies from person to person for a variety of reasons, including driving experience and the extent of the extrafoveal region from which ambient information may be processed; Underwood et al., 2007). Thus, the long fixation durations seen during rural driving are not predominantly due to object processing or identification but are instead related to vigilance and monitoring.

In summary, the length of fixation durations provides an important addition to simple binary or frequency-based glance analyses. Unfortunately, the length of a fixation is affected by many factors (Henderson, 1992), and without a careful understanding of what is reflected in these duration measures, it is possible to draw erroneous conclusions. However, a number of clear patterns have emerged from a decade or more of studies. First, it seems that experience in the driving domain does tend to reduce fixation durations on average, most likely through a mixture of reduced thresholds for object or event identification and an increase in processing speed. Second, localized increases in demand, such as the appearance of a hazardous pedestrian stepping out from behind a parked vehicle, tend to increase fixation durations. Considering the priority given to these stimuli, this may be understandable. However, the fact that novice driver fixations are proportionally more affected by the appearance of a hazard raises the potential problem of attentional capture beyond what is required to process the hazard. This may have a negative impact on the driver's ability to process other objects or events that appear soon after the hazard. Third, a dispersed increase in general demand (i.e., more visually complex road scenes) provokes the opposite reaction to a localized increase in demand. Whereas hazards capture attention, urban and suburban scenes promote shorter and more frequent fixations in order to cope with the greater number of points of potential interest.

4. Measures of Spread

Although identifying what drivers look at, and for how long, has yielded a number of insights into driver vision and behavior, these measures give no indication of whether drivers adopt general scanning strategies when driving. Certainly, a number of experts in the field of driver training expound the view that wide and constant scanning is important for safe driving (Coyne, 1997; Mills, 2005) and warn against the “disastrous habit of fixating [in one place for too long]” (Haley, 2006, p. 112). Some of the earliest research also noted that novice drivers scan a smaller area of the visual scene (Mourant & Rockwell, 1972). Later research confirmed that new drivers scan the road in a curiously maladaptive way, tending to look straight ahead of them and failing to adjust their scanning to changes in driving conditions (Crundall & Underwood, 1998; Konstantopoulos et al., 2010). The measure of greatest interest in the study by Crundall and Underwood was the variance of fixation locations, with high variance indicating greater scanning. This measure is calculated separately for each axis from the fixation locations (in the eye tracking system's reference frame). For instance, a sample of 100 fixations provides 100 pairs of x and y coordinates. The variance, or standard deviation, of the x coordinates reflects the extent of search activity in the horizontal axis; likewise, the y coordinates yield a measure of the spread of search in the vertical axis.
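To make the calculation concrete, the following is a minimal Python sketch that takes a list of fixation coordinates and returns the horizontal and vertical spread. It assumes the coordinates are (x, y) pairs already expressed in the eye tracker's reference frame (pixels or degrees of visual angle). The function name `fixation_spread` and the use of the population standard deviation (`statistics.pstdev`) are illustrative choices, not the procedure of any particular study; the sample standard deviation or the variance would serve equally well if applied consistently across drivers.

```python
import statistics
from typing import Sequence, Tuple

Fixation = Tuple[float, float]  # (x, y) in the eye tracker's reference frame


def fixation_spread(fixations: Sequence[Fixation]) -> Tuple[float, float]:
    """Return the (horizontal, vertical) spread of search as standard deviations.

    Larger values indicate that fixations were distributed more widely
    along that axis, i.e. more extensive scanning.
    """
    if len(fixations) < 2:
        raise ValueError("need at least two fixations to estimate spread")
    xs = [x for x, _ in fixations]
    ys = [y for _, y in fixations]
    return statistics.pstdev(xs), statistics.pstdev(ys)


# Hypothetical fixations: wider horizontal than vertical excursions.
sample = [(-2.0, 1.0), (2.0, 1.0), (-2.0, -1.0), (2.0, -1.0)]
sd_x, sd_y = fixation_spread(sample)
print(sd_x, sd_y)  # 2.0 1.0
```

Computing each axis separately keeps horizontal scanning (the axis of most interest when monitoring traffic joining from the left and right) distinct from vertical movements such as glances down to the instruments.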

In the Crundall and Underwood (1998) study, new and experienced drivers traveled along a range of roads through countryside, suburban housing areas, and a demanding section of multilane highway, and their eye movements were recorded throughout the drive. On simple rural roads with few hazards, all drivers tended to look at the roadway ahead, but on a tricky multilane highway with traffic joining from both left and right, the experienced drivers increased their scanning, whereas the novices continued to look straight ahead. This is maladaptive, or insensitive, because merging traffic requires drivers to adjust their own speed and to be prepared to take avoiding action (an interpretation in accordance with the work of Borowsky et al., 2010). The experienced drivers showed situation awareness in that they looked around them to determine the trajectories of the proximal traffic. Why should the novice drivers fail to scan for hazards on the roads most likely to present them with dangers? Three explanations are considered here: (1) novices need to look at markings on the road (white lines, curbs, and barriers) in order to steer their vehicles; (2) novices have not yet automatized steering and speed control, the subskills required to coordinate the vehicle, and are thus unable to allocate mental resources to the task of monitoring other traffic; or (3) novices may opt not to look around them because they have a poor idea of the dangers present on these roads, that is, they have inadequate situation awareness (Gugerty, 1997; Horswill & McKenna, 2004; Underwood, 2007). Of course, these three explanations are not mutually exclusive. It is plausible that new drivers have difficulty maintaining their lane position, thinking about much other than controlling the vehicle, and forming a mental model of what other road users are doing and what they are likely to do next.
As drivers become more skilled in handling their vehicles, cognitive resources are released and can be allocated to other tasks such as hazard surveillance. With increased experience, novice drivers no longer need to concentrate on their engine speed when deciding on the moment to change gear or on the coordinated sequence of accelerator pedal release and clutch pedal depression when doing so. They are increasingly able to think about the behavior of the traffic around them while maintaining vehicle speed and position seemingly without thinking about these relatively low-level actions.

The three hypotheses emphasize steering control demands, vehicle control demands, and the driver's situation awareness. The first two hypotheses are closely related, steering control being a special case of the demands of vehicle control. Mourant and Rockwell (1972) demonstrated that novices tend to look at the roadway closer to the vehicle than do experienced drivers, perhaps suggesting that they have not yet learned to use peripheral vision for steering control (Land & Horwood, 1995) or that they are still learning the dynamics of perceptual-motor coordination. If they need to look at road markers in order to keep their vehicle in the center of their lane, then they will have limited scope for looking at other objects in the roadway. The second hypothesis extends this view of the demands of vehicle control: Central cognitive resources are occupied more generally, to the extent that the novice driver does not have the resources available to scan the road scene and thereby acquire new information about potential hazards. It has been established that varying the demand of the driving task causes variations in the acquisition of information. Recarte and Nunes (2000) reported that mirror checking is reduced as the mental load on the driver increases, and Underwood, Crundall, and Chapman (2002) found that mirror checking varied with driving experience, with experienced drivers being more selective in their choice of mirror during lane changing. Similarly, as driving demands increase, fewer fixations on mirrors and other nonessential objects are reported (Schweigert & Bubb, 2001). This evidence from studies of mirror inspection suggests that as driving demands increase, experienced drivers reallocate their cognitive resources and modify their intake of information about traffic in the roadway.

The third suggestion is that novices stereotypically look straight ahead when driving because they have inadequate situation awareness. Differences in search patterns associated with driving experience (specifically, the increased variance of fixation locations in more experienced drivers) would then be explained as a product of the knowledge base developed through previous traffic encounters. As drivers interact with other drivers and observe the behavior of other road users, they accumulate memories of events that happen on different kinds of roads and develop an awareness of how likely those events are. These situation-specific probabilities can help guide drivers through newly encountered environments if those environments are sufficiently similar to earlier circumstances for drivers to generalize their behavior (Shinoda, Hayhoe, & Shrivastava, 2001). Because of his or her limited exposure to varying roadway conditions, a new driver necessarily has an impoverished catalog of such events compared with that of an experienced driver. Perhaps the novices in Crundall and Underwood's (1998) eye tracking study scanned the multilane highway less extensively than the experienced drivers because they were unaware of the special dangers associated with this particular type of road. They may have had insufficient exposure to such roads to build a mental model of how other vehicles might behave. They would then be unable to predict where other vehicles would be a few seconds later, and they would not recognize the demands of negotiating interweaving lanes of traffic or the need to monitor not only the traffic ahead but also the lane-changing activity of traffic immediately to the rear.

We sought evidence to discriminate between the vehicle control and situation awareness hypotheses by recording the scanning behavior of drivers in a laboratory task that eliminated the need for vehicle control (Underwood, Chapman, Bowden, & Crundall, 2002). Drivers sat in the laboratory and watched film clips recorded from a car as it traveled along the roads used by Crundall and Underwood (1998). One advantage of this approach is that every driver saw the same traffic conditions, whereas in the original study traffic conditions inevitably varied from moment to moment and from driver to driver. The laboratory task was essentially one of observation and prediction: Participants made a key-press response whenever they saw an event that would cause a driver to take evasive action. This hazard detection task gave them a reason to monitor the video recordings carefully. The scanning behavior of new and experienced drivers was the principal interest, and their eye movements were recorded while they watched the films. If new drivers have restricted search patterns because their resources are allocated to vehicle control, then eliminating the vehicle control element of driving should produce a laboratory search pattern similar to that of an experienced driver. Take away the demands of maintaining vehicle speed and lane position, and resources should become available for scanning, but only if the need for scanning is understood. If, instead, the search patterns of new drivers result from a mental model that does not inform them of the particular hazards associated with multilane highways, then they would continue to restrict their scanning while watching the roadway video recordings in the viewing-only task. The results indicated that the two groups of drivers were thinking about the scene differently, even when their resources were not occupied by the demands of vehicle control.
Experienced drivers exhibited more extensive scanning when they watched more demanding sections of the roadway, whereas new drivers showed less sensitivity to changing traffic conditions. These eye tracking data support the hypothesis that new and experienced drivers inspect the roadway differently not because they differ in the mental resources left over from the task of vehicle control but, rather, because novice drivers have an impoverished mental model of what other drivers might do on demanding roadways. Other research supports the hypothesis of limited situation awareness in novice drivers. Jackson, Chapman, and Crundall (2009) asked drivers to predict events from driving video clips that were stopped and obscured at critical moments. They found that more experienced drivers were better able to predict the subsequent events, which they argued was akin to greater level 3 situation awareness (Endsley, 1995, 1999).

Although these measures of the spread of search have provided some consistent and replicable findings, they need to be used with caution. For instance, in Figure 11.2, panels a and b reflect the typical scan path that one might expect from an experienced driver and novice driver, respectively. Calculating the variance or standard deviation of the x-axis fixation coordinates would reveal a clear difference between the two. However, the two scan paths shown in panels c and d would be indistinguishable on the basis of this simple calculation of spread. Imagine two pedestrians on either side of the roadway: In panel c, the driver extensively scans one of the pedestrians before shifting to the other, whereas in panel d the driver constantly switches between the two pedestrians. From a commonsense viewpoint, we might consider panel d to reflect greater spread of search. Certainly, most driver-training experts would consider panel d to represent a safer search strategy than panel c (Mills, 2005). However, the calculation of the variance of locations will not discriminate between these two strategies because it cannot take into account the sequential nature of the fixations.

FIGURE 11.2
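The limitation described for panels c and d can be checked numerically. The sketch below is a toy illustration under assumed values, not data from Figure 11.2: two pedestrians are placed at hypothetical horizontal positions of -10 and +10 units (with 0 taken as the road ahead), and the same eight fixations are arranged either in two blocks, as in panel c, or in strict alternation, as in panel d. The standard deviation is identical for both orders, whereas a simple count of switches from one side of the road to the other (one possible order-sensitive measure, named here `count_side_switches` for illustration) separates them.

```python
import statistics
from typing import List


def count_side_switches(xs: List[float]) -> int:
    """Count consecutive fixation pairs that cross from one side of x = 0 to the other."""
    return sum(1 for a, b in zip(xs, xs[1:]) if (a < 0) != (b < 0))


# Hypothetical pedestrian positions on the horizontal axis.
LEFT, RIGHT = -10.0, 10.0

panel_c = [LEFT] * 4 + [RIGHT] * 4   # inspect one pedestrian, then the other
panel_d = [LEFT, RIGHT] * 4          # switch back and forth constantly

for name, xs in (("c", panel_c), ("d", panel_d)):
    print(name, statistics.pstdev(xs), count_side_switches(xs))
# c 10.0 1
# d 10.0 7
```

Because the variance is computed over the set of locations, any reordering of the same fixations leaves it unchanged; only a measure that looks at consecutive pairs can register the difference.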

A further issue with this measure is that it cannot discriminate between a decrease in the eccentricity of fixations to the left and right of the road ahead and a decrease in the frequency of these eccentric fixations. Thus, a reduction in the actual area of the visual scene that is scanned is indistinguishable from a reduction in the number of fixations away from the road ahead. In essence, a measure of spread is still informative about the extent to which drivers sample the visual scene, but it cannot separate out more subtle differences in visual strategy. However, spread measures can be used in conjunction with range measures (e.g., mean saccade length, or how far visual search extends into the scene; Crundall et al., in press) to provide a more detailed picture.
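The confound between eccentricity and frequency can also be shown with toy numbers. In the hypothetical sequences below (16 horizontal fixation positions each, with 0 representing the road ahead), one driver makes frequent but small excursions of 5 units, while the other makes only two excursions of 10 units. Both sequences yield the same standard deviation, but a range measure (here the horizontal extent, max minus min, used as a stand-in for the range measures mentioned above) and the proportion of off-center fixations tell them apart. The threshold of 1 unit for counting a fixation as eccentric is an arbitrary assumption.

```python
import statistics
from typing import Dict, List


def describe(xs: List[float], threshold: float = 1.0) -> Dict[str, float]:
    """Summarize horizontal search with a spread, a range, and a frequency measure."""
    return {
        "sd": statistics.pstdev(xs),                  # spread
        "extent": max(xs) - min(xs),                  # range of search
        "prop_eccentric": sum(abs(x) > threshold for x in xs) / len(xs),
    }


# Frequent, narrow excursions: every other fixation is 5 units off center.
narrow_frequent = [0.0, 5.0, 0.0, -5.0] * 4
# Rare, wide excursions: only 2 of 16 fixations leave the road ahead.
wide_rare = [0.0] * 7 + [10.0] + [0.0] * 7 + [-10.0]

for name, xs in (("narrow_frequent", narrow_frequent), ("wide_rare", wide_rare)):
    print(name, describe(xs))
```

Both sequences report a standard deviation of about 3.54, but extents of 10 and 20 and eccentric-fixation proportions of 0.5 and 0.125, respectively, which is the kind of dissociation that combining spread with range measures is meant to expose.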

The failure of measures of spread to take into account the sequential nature of the fixations can also be overcome through scan path analysis, which searches for statistical regularities in sequences of fixation locations using a transition matrix. Underwood, Chapman, Brocklehurst, Underwood, and Crundall (2003) recorded where drivers looked as a function of where they had looked immediately beforehand. Video recordings of fixations made in the Crundall and Underwood (1998) study were used as the input for identifying fixation scan paths, and driver differences in the inspection of different roadways were again the focus of interest. The most interesting differences between drivers were again seen when they traveled along the multilane highway with merging traffic, the most demanding section of the drive. The increased variance of experienced drivers in the earlier analysis was reflected in their scan paths, which showed very little consistency. Few two-fixation scan paths appeared regularly for these drivers, indicating that their fixation behavior was statistically unpredictable; this can be explained by moment-to-moment variations in traffic conditions prompting changes in fixations. As other vehicles appeared in the roadway or in the vehicle's mirrors, the experienced drivers inspected them and evaluated their trajectories. There was no consistency in the location of one fixation given where they had looked previously. The new drivers, on the other hand, showed a remarkable consistency that can be summarized by a simple generalization: Wherever they had looked previously, the next place they looked was the roadway straight ahead of them. Their fixation behavior was stereotyped and insensitive to the variations in traffic behavior seen on a highway with fast-moving vehicles regularly changing lanes, both ahead and to the rear.
The low variance of fixations recorded by Crundall and Underwood was a product of the new drivers repeatedly moving their eyes to inspect the roadway directly before them.
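A first-order transition matrix of the kind used in scan path analysis can be sketched in a few lines. The version below assumes that each fixation has already been coded into a named region of the scene; the labels such as "road_ahead" and "mirror_left" are hypothetical and are not the coding scheme of Underwood et al. (2003). The function counts how often each region is followed by each other region and normalizes every row into conditional probabilities, P(next region | current region). The toy novice sequence mimics the stereotyped pattern described above.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def transition_matrix(regions: List[str]) -> Dict[str, Dict[str, float]]:
    """Return P(next region | current region) estimated from consecutive fixations."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(regions, regions[1:]):
        counts[current][nxt] += 1
    matrix = {}
    for current, row in counts.items():
        total = sum(row.values())
        matrix[current] = {nxt: n / total for nxt, n in row.items()}
    return matrix


# Hypothetical coded fixation sequence for a novice on a merging section.
novice = ["road_ahead", "mirror_left", "road_ahead", "merging_car",
          "road_ahead", "road_ahead", "mirror_right", "road_ahead"]

for region, row in transition_matrix(novice).items():
    print(region, row)
```

In this toy sequence, every region other than the road ahead is followed by "road_ahead" with probability 1.0, which is the signature of the novice pattern; a driver whose next fixation depends on moment-to-moment traffic would instead produce rows with no single dominant successor. Only two-fixation transitions are counted, matching the two-fixation scan paths discussed above; longer sequences would require counting longer runs of regions.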

5. Conclusions

The use of eye tracking measures has greatly increased our understanding of how driving skills develop and what strategies drivers employ to ensure a safe journey. Eye movement analyses are now being applied to help understand specific accident types (e.g., spotting overtaking motorcycles; Shahar, van Loon, Clarke, & Crundall, in press) and are forming the basis of training interventions to decrease accident rates (Chapman et al., 2002; Pradhan et al., 2009). It is in these areas that the most exciting advances are being made. However, there are caveats that need to be mentioned.

First, we have noted in this chapter that eye movement measures, when taken in isolation, are open to errors of interpretation. Simply inferring safe driving on the basis of whether one has looked at a particular area of the scene (Pradhan et al., 2005) may not be sufficient because extremely short fixations may not be indicative of full processing (as with “look but fail to see” errors; Crundall et al., in press). Similarly, we noted that measures of spread may be unable to identify certain visual strategies. Thus, it seems that multiple measures of visual behavior should be taken to ensure that the potential confounds associated with one particular measure do not dominate the conclusions.

Second, we need to relate all measures back to the context in which they were collected. It is useful to relate measures of vision in driving to other fields of research such as reading, although it must always be borne in mind that the complex context of real driving is unlikely to be reproduced in an analogous laboratory task. Thus, although reading research provides us with a framework within which to interpret increased fixation durations in terms of processing difficulty, this analogy does not necessarily transfer to the rural road, where fixations on the focus of expansion can be extremely long. The number of variables that can influence the patterns of eye movements during driving seems too large to document, but that has not stopped attempts to do so. Indeed, over approximately the past 10 years, great strides have been made in our understanding of how various factors interact, although when drawing conclusions we must remember that there may be other aspects of the context that we have not accounted for.

Finally, when considering the potential for designing training interventions to modify eye movements, particularly in young and novice drivers, we must be aware of the developmental limitations on eye movement strategies. For instance, Mourant and Rockwell (1972) found that novice drivers look more at lane markings than do more experienced drivers; Land and Horwood (1995) demonstrated that experienced drivers still use the information provided by lane markers but do so through peripheral vision; and Crundall et al. (1999, 2002) demonstrated that inexperienced drivers have fewer resources devoted to peripheral vision than do more experienced drivers. Taken together, this body of work suggests that although lane markings are vital for maintaining the lateral position of the vehicle, inexperienced drivers do not necessarily have the resources available to devote to peripheral vision in order to extract lane marker information without fixating the markers directly. Thus, an intervention strategy that directly or indirectly trains inexperienced drivers to focus less on lane markings may have the unintended effect of impairing lane maintenance.

Despite these caveats, it is clear that studies of eye movements have provided considerable insights into the driving process and have achieved moderate success in rudimentary training situations (Chapman et al., 2002; Pradhan et al., 2005). As eye tracking technology continues to improve and costs are reduced, the use of these systems in future research will increase, and we hope that this brief discussion provides some ideas on how best to employ this technology for current and future uses.

References

Borowsky, A.; Shinar, D.; Oron-Gilad, T., Age, skill and hazard perception in driving, Accident Analysis and Prevention 42 (2010) 1240–1249.

Brockmole, J.R.; Boot, W.R., Should I stay or should I go? Attentional disengagement from visually unique and unexpected items at fixation, Journal of Experimental Psychology: Human Perception and Performance 35 (2009) 808–815.

Brown, I.D., A review of the “look but failed to see” accident causation factor, In: Behavioural Research in Road Safety XI (2002) Department for Transport, Local Government and the Regions, London.

Caplovitz, G.P.; Fendrich, R.; Hughes, H.C., Failures to see: Attentive blank stares revealed by change blindness, Consciousness and Cognition 17 (2008) 877–886.

Chapman, P.; Underwood, G., Visual search of driving situations: Danger and experience, Perception 27 (1998) 951–964.

Chapman, P.; Underwood, G.; Roberts, K., Visual search patterns in trained and untrained novice drivers, Transportation Research Part F: Traffic Psychology and Behaviour 5 (2002) 157–167.

Clarke, D.D.; Ward, P.; Bartle, C.; Truman, W., Young driver accidents in the UK: The influence of age, experience, and time of day, Accident Analysis and Prevention 38 (2006) 871–878.

Clarke, D.D.; Ward, P.; Bartle, C.; Truman, W., The role of motorcyclist and other driver behaviour in two types of serious accident in the UK, Accident Analysis and Prevention 39 (2007) 974–981.

Coyne, P., Roadcraft: The essential police driver's handbook. (1997) The Stationery Office, London.

Crundall, D.; Underwood, G., Effects of experience and processing demands on visual information acquisition in drivers, Ergonomics 41 (1998) 448–458.

Crundall, D.; Underwood, G.; Chapman, P., Driving experience and the functional field of view, Perception 28 (1999) 1075–1087.

Crundall, D.; Underwood, G.; Chapman, P., Attending to the peripheral world while driving, Applied Cognitive Psychology 16 (2002) 459–475.

Department for Transport, Road traffic by vehicle type, Great Britain: 1950–2009 (miles), http://www.dft.gov.uk/pgr/statistics/datatablespublications/roads/traffic/annual-volm/tra0101.xls (2010); Accessed November 11, 2010.

Department for Transport, Road traffic and speed statistics—2009, http://www.dft.gov.uk/pgr/statistics/datatablespublications/roads/traffic (2010); Accessed November 11, 2010.

Duchowski, A.T., Eye tracking methodology: Theory and practice. 2nd ed. (2007) Springer-Verlag, New York.

Ehrlich, S.E.; Rayner, K., Contextual effects on word perception and eye movements during reading, Journal of Verbal Learning and Verbal Behavior 20 (1981) 641–655.

Endsley, M.R., Toward a theory of situation awareness in dynamic systems, Human Factors 37 (1) (1995) 32–64.

Gugerty, L., Situation awareness during driving: Explicit and implicit knowledge in dynamic spatial memory, Journal of Experimental Psychology: Applied 3 (1997) 42–66.

Haley, S., Mind driving. (2006) Safety House, Croydon, UK.

Henderson, J.M., Identifying objects across saccades: Effects of extrafoveal preview and flanker object context, Journal of Experimental Psychology: Learning, Memory, and Cognition 18 (1992) 521–530.

Henderson, J.M.; Hollingworth, A., The role of fixation position in detecting scene changes across saccades, Psychological Science 10 (1999) 438–443.

Horswill, M.S.; McKenna, F.P., Drivers' hazard perception ability: Situation awareness on the road, In: (Editors: Banbury, S.; Tremblay, S.) A cognitive approach to situation awareness (2004) Ashgate, Aldershot, UK.

Jackson, A.L.; Chapman, P.; Crundall, D., What happens next? Predicting other road users' behaviour as a function of driving experience and processing time, Ergonomics 52 (2) (2009) 154–164.

Just, M.A.; Carpenter, P.A., A theory of reading: From eye fixations to comprehension, Psychological Review 87 (4) (1980) 329–354.

Klauer, S.G.; Dingus, T.A.; Neale, V.L.; Sudweeks, J.D.; Ramsey, D.J., The impact of driver inattention on near-crash/crash risk: An analysis using the 100-Car Naturalistic Driving Study data. (2006) National Highway Traffic Safety Administration, Washington, DC.

Konstantopoulos, P.; Crundall, D.; Chapman, P., Driver's visual attention as a function of driving experience and visibility. Using a driving simulator to explore visual search in day, night and rain driving, Accident Analysis and Prevention 42 (Special issue) (2010) 827–834.

Kotowicz, A.; Rutishauser, U.; Koch, C., Time course of target recognition in visual search, Frontiers in Human Neuroscience 4 (2010) 31.

Land, M.F.; Horwood, J., Which parts of the road guide steering? Nature 377 (1995) 339–340.

Lee, J.D., Fifty years of driving safety research, Human Factors 50 (2008) 521–528.

Lestina, D.C.; Miller, T.R., Characteristics of crash-involved younger drivers, In: 38th Annual proceedings of the Association for the Advancement of Automotive Medicine (1994) Association for the Advancement of Automotive Medicine, Des Plaines, IL, pp. 425–437.

Liversedge, S.P.; Findlay, J.M., Saccadic eye movements and cognition, Trends in Cognitive Sciences 4 (2000) 6–14.

Mills, K.C., Disciplined attention: How to improve your visual attention when you drive. (2005) Profile Press, Chapel Hill, NC.

Mourant, R.R.; Rockwell, T.H., Strategies of visual search by novice and experienced drivers, Human Factors 14 (1972) 325–335.

Organisation for Economic Co-operation and Development/European Conference of Ministers of Transport, Young drivers: The road to safety. (2006) Organisation for Economic Co-operation and Development, Paris.

Pradhan, A.K.; Hammel, K.R.; DeRamus, R.; Pollatsek, A.; Noyce, D.A.; Fisher, D.L., Using eye movements to evaluate effects of driver age on risk perception in a driving simulator, Human Factors 47 (2005) 840–852.

Pradhan, A.K.; Pollatsek, A.; Knodler, M.; Fisher, D.L., Can younger drivers be trained to scan for information that will reduce their risk in roadway traffic scenarios that are hard to identify as hazardous? Ergonomics 52 (2009) 657–673.

Rayner, K., Eye movements in reading and information processing: Twenty years of research, Psychological Bulletin 124 (1998) 372–422.

Recarte, M.A.; Nunes, L.M., Effects of verbal and spatial-imagery tasks on eye fixations while driving, Journal of Experimental Psychology: Applied 6 (2000) 31–43.

Reichle, E.D.; Rayner, K.; Pollatsek, A., The E-Z Reader model of eye movement control in reading: Comparisons to other models, Behavioral and Brain Sciences 26 (2003) 445–476.

Reingold, E.M.; Rayner, K., Examining the word identification stages hypothesized by the E-Z Reader model, Psychological Science 17 (2006) 742–746.

Schweigert, M.; Bubb, H., Eye movements, performance and interference when driving a car and performing secondary tasks. (2001, August) Paper presented at the Vision in Vehicles 9 conference, Brisbane, Australia.

Shinar, D., Looks are (almost) everything: Where drivers look to get information, Human Factors 50 (2008) 380–384.

Shinoda, H.; Hayhoe, M.M.; Shrivastava, A., What controls attention in natural environments? Vision Research 41 (2001) 3535–3545.

Sivak, M., The information that drivers use: Is it indeed 90% visual? Perception 25 (1996) 1081–1089.

Treat, J.R.; Tumbas, N.S.; McDonald, S.T.; Shinar, D.; Hume, R.D.; Mayer, R.E.; Stansifer, R.L.; Castellan, N.J., TRI-level study of the causes of traffic accidents: Final report. (Report No. DOT HS 805-085) (1979) U.S. Department of Transportation, National Highway Traffic Safety Administration, Washington, DC.

Underwood, G., Visual attention and the transition from novice to advanced driver, Ergonomics 50 (2007) 1235–1249.

Underwood, G.; Chapman, P.; Bowden, K.; Crundall, D., Visual search while driving: Skill and awareness during inspection of the scene, Transportation Research Part F: Traffic Psychology and Behaviour 5 (2002) 87–97.

Underwood, G.; Chapman, P.; Brocklehurst, N.; Underwood, J.; Crundall, D., Visual attention while driving: Sequences of eye fixations made by experienced and novice drivers, Ergonomics 46 (2003) 629–646.

Underwood, G.; Chapman, P.; Crundall, D., Experience and visual attention in driving, In: (Editor: Castro, C.) Human factors of visual and cognitive performance in driving (2009) CRC Press, Boca Raton, FL, pp. 89–116.

Underwood, G.; Crundall, D.; Chapman, P., Selective searching while driving: The role of experience in hazard detection and general surveillance, Ergonomics 45 (2002) 1–12.

Underwood, G.; Crundall, D.; Chapman, P., Cognition and driving, In: (Editor: Durso, F.) Handbook of applied cognition. 2nd ed. (2007) Wiley, New York, pp. 391–414.

Underwood, G.; Everatt, J., The role of eye movements in reading: Some limitations of the eye–mind assumption, In: (Editors: Chekaluk, E.; Llewellyn, K.R.) The role of eye movements in perception (1992) North-Holland, Amsterdam.

Underwood, G.; Phelps, N.; Wright, C.; van Loon, E.; Galpin, A., Eye fixation scanpaths of younger and older drivers in a hazard perception task, Ophthalmic and Physiological Optics 25 (2005) 346–356.

Underwood, G.; Templeman, E.; Lamming, L.; Foulsham, T., Is attention necessary for object identification? Evidence from eye movements during the inspection of real-world scenes, Consciousness and Cognition 17 (2008) 159–170.

Van Gompel, R.P.G.; Fischer, M.H.; Murray, W.S.; Hill, R.L., Eye-movement research: An overview of current and past developments, In: (Editors: van Gompel, R.P.G.; Fischer, M.H.; Murray, W.S.; Hill, R.L.) Eye movements: A window on mind and brain (2007) Elsevier, Oxford, pp. 1–28.