3. Sound

Roughly one million years ago, the seas retreated from the basin that surrounds modern-day Paris, leaving a ring of limestone deposits that had once been active coral reefs. Over time, the River Cure in Burgundy slowly carved its way through some of those limestone blocks, creating a network of caves and tunnels that would eventually be festooned with stalactites and stalagmites formed by rainwater and carbon dioxide. Archeological findings suggest that Neanderthals and early modern humans used the caves for shelter and ceremony for tens of thousands of years. In the early 1990s, an immense collection of ancient paintings was discovered on the walls of the cave complex in Arcy-sur-Cure: over a hundred images of bison, woolly mammoths, birds, fish—even, most hauntingly, the imprint of a child’s hand. Radiometric dating determined that the images were thirty thousand years old. Only the paintings at Chauvet, in southern France, are believed to be older.

For understandable reasons, cave paintings are conventionally cited as evidence of the primordial drive to represent the world in images. Eons before the invention of cinema, our ancestors would gather together in the firelit caverns and stare at flickering images on the wall. But in recent years, a new theory has emerged about the primitive use of the Burgundy caves, one focused not on the images of these underground passages, but rather on the sounds.

A few years after the paintings in Arcy-sur-Cure were discovered, a music ethnographer from the University of Paris named Iegor Reznikoff began studying the caves the way a bat would: by listening to the echoes and reverberations created in different parts of the cave complex. It had long been apparent that the Neanderthal images were clustered in specific parts of the cave, with some of the most ornate and dense images appearing more than a kilometer deep. Reznikoff determined that the paintings were consistently placed at the most acoustically interesting parts of the cave, the places where the reverberation was the most profound. If you make a loud sound standing beneath the images of Paleolithic animals at the far end of the Arcy-sur-Cure caves, you hear seven distinct echoes of your voice. The reverberation takes almost five seconds to die down after your vocal cords stop vibrating. Acoustically, the effect is not unlike the famous “wall of sound” technique that Phil Spector used on the 1960s records he produced for artists such as the Ronettes and Ike and Tina Turner. In Spector’s system, recorded sound was routed through a basement room filled with speakers and microphones that created a massive artificial echo. In Arcy-sur-Cure, the effect comes courtesy of the natural environment of the cave itself.

Reznikoff’s theory is that Neanderthal communities gathered beside the images they had painted, and they chanted or sang in some kind of shamanic ritual, using the reverberations of the cave to magically widen the sound of their voices. (Reznikoff also discovered small red dots painted at other sonically rich parts of the cave.) Our ancestors couldn’t record the sounds they experienced the way they recorded their visual experience of the world in paintings. But if Reznikoff is correct, those early humans were experimenting with a primitive form of sound engineering—amplifying and enhancing that most intoxicating of sounds: the human voice.

The drive to enhance, and ultimately reproduce, the human voice would in time pave the way for a series of social and technological breakthroughs: in communications and computation, politics and the arts. We readily accept the idea that science and technology have enhanced our vision to a remarkable extent: from spectacles to the Keck telescopes. But our vocal cords, vibrating in speech and in song, have also been massively augmented by artificial means. Our voices grew louder; they began traveling across wires laid on the ocean floor; they slipped the surly bonds of Earth and began bouncing off satellites. The essential revolutions in vision largely unfolded between the Renaissance and the Enlightenment: spectacles, microscopes, telescopes; seeing clearly, seeing very far, and seeing very close. The technologies of the voice did not arrive in full force until the late nineteenth century. When they did, they changed just about everything. But they didn’t begin with amplification. The first great breakthrough in our obsession with the human voice arrived in the simple act of writing it down.

—

FOR THOUSANDS OF YEARS after those Neanderthal singers gathered in the reverberant sections of the Burgundy caves, the idea of recording sound was as fanciful as counting fairies. Yes, over that period we refined the art of designing acoustic spaces to amplify our voices and our instruments: medieval cathedral design, after all, was as much about sound engineering as it was about creating epic visual experiences. But no one even bothered to imagine capturing sound directly. Sound was ethereal, not tangible. The best you could do was imitate sound with your own voice and instruments.

The dream of recording the human voice entered the adjacent possible only after two key developments: one from physics, the other from anatomy. From about 1500 on, scientists began to work under the assumption that sound traveled through the air in invisible waves. (Shortly thereafter they discovered that these waves traveled four times faster through water, a curious fact that wouldn’t turn out to be useful for another four centuries.) By the time of the Enlightenment, detailed books of anatomy had mapped the basic structure of the human ear, documenting the way sound waves were funneled through the auditory canal, triggering vibrations in the eardrum. In the 1850s, a Parisian printer named Édouard-Léon Scott de Martinville happened to stumble across one of these anatomy books, triggering a hobbyist’s interest in the biology and physics of sound.

Scott had also been a student of shorthand writing; he’d published a book on the history of stenography a few years before he began thinking about sound. At the time, stenography was the most advanced form of voice-recording technology in existence; no system could capture the spoken word with as much accuracy and speed as a trained stenographer. But as he looked at these detailed illustrations of the inner ear, a new thought began to take shape in Scott’s mind: perhaps the process of transcribing the human voice could be automated. Instead of a human writing down words, a machine could write sound waves.

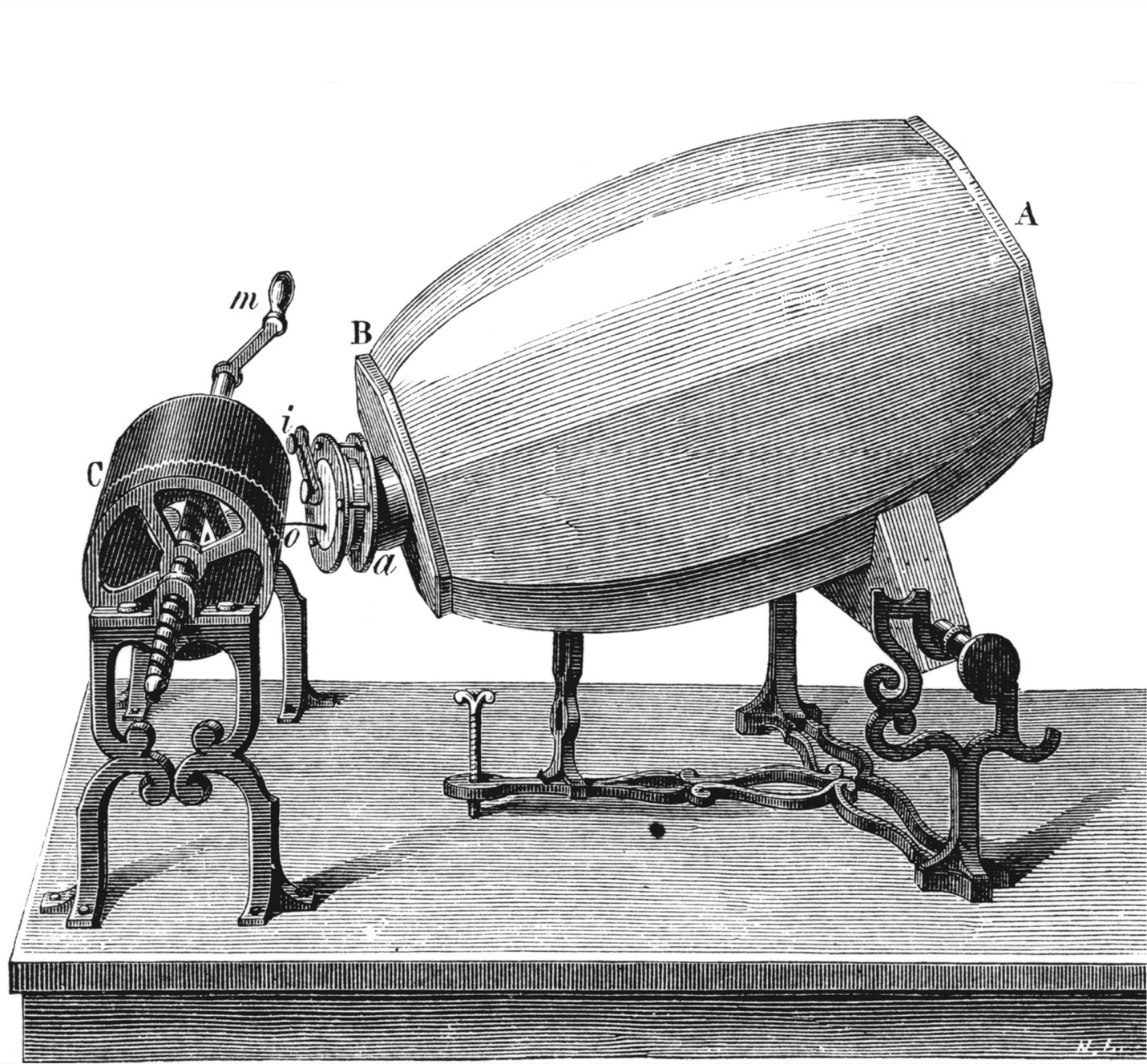

In March 1857, two decades before Thomas Edison would invent the phonograph, the French patent office awarded Scott a patent for a machine that recorded sound. Scott’s contraption funneled sound waves through a hornlike apparatus that ended with a membrane of parchment. Sound waves would trigger vibrations in the parchment, which would then be transmitted to a stylus made of pig’s bristle. The stylus would etch out the waves on a page darkened by the carbon of lampblack. He called his invention the “phonautograph”: the self-writing of sound.

In the annals of invention, there may be no more curious mix of farsightedness and myopia than the story of the phonautograph. On the one hand, Scott had managed to make a critical conceptual leap—that sound waves could be pulled out of the air and etched onto a recording medium—more than a decade before other inventors and scientists got around to it. (When you’re two decades ahead of Edison, you can be pretty sure you’re doing well for yourself.) But Scott’s invention was hamstrung by one crucial—even comical—limitation. He had invented the first sound recording device in history. But he forgot to include playback.

Édouard-Léon Scott de Martinville, French writer and inventor of the phonautograph

Actually, “forgot” is too strong a word. It seems obvious to us now that a device for recording sound should also include a feature where you can actually hear the recording. Inventing the phonautograph without including playback seems a bit like inventing the automobile but forgetting to include the bit where the wheels rotate. But that is because we are judging Scott’s work from the other side of the divide. The idea that machines could convey sound waves that had originated elsewhere was not at all an intuitive one; it wasn’t until Alexander Graham Bell began reproducing sound waves at the end of a telephone that playback became an obvious leap. In a sense, Scott had to look around two significant blind spots: the idea that sound could be recorded and the idea that those recordings could be converted back into sound waves. Scott managed to grasp the first, but he couldn’t make it all the way to the second. It wasn’t so much that he forgot or failed to make playback work; it was that the idea never even occurred to him.

If playback was never part of Scott’s plan, it is fair to ask exactly why he bothered to build the phonautograph in the first place. What good is a record player that doesn’t play records? Here we confront the double-edged sword of relying on governing metaphors, of borrowing ideas from other fields and applying them in a new context. Scott got to the idea of recording audio through the metaphor of stenography: write waves instead of words. That structuring metaphor enabled him to make the first leap, years ahead of his peers, but it also may have prevented him from making the second. Once words have been converted into the code of shorthand, the information captured there is decoded by a reader who understands the code. Scott thought the same would happen with his phonautograph. The machine would etch waveforms into the lampblack, each twitch of the stylus corresponding to some phoneme uttered by a human voice. And humans would learn to “read” those squiggles the way they had learned to read the squiggles of shorthand. In a sense, Scott wasn’t trying to invent an audio-recording device at all. He was trying to invent the ultimate transcription service—only you had to learn a whole new language in order to read the transcript.

It wasn’t that crazy an idea, looking back on it. Humans had proven to be unusually good at learning to recognize visual patterns; we internalize our alphabets so well we don’t even have to think about reading once we’ve learned how to do it. Why would sound waves, once you could get them on the page, be any different?

Sadly, the neural toolkit of human beings doesn’t seem to include the capacity for reading sound waves by sight. A hundred and fifty years have passed since Scott’s invention, and we have mastered the art and science of sound to a degree that would have astonished Scott. But not a single person among us has learned to visually parse the spoken words embedded in printed sound waves. It was a brilliant gamble, but ultimately a losing one. If we were going to decode recorded audio, we needed to convert it back to sound so we could do our decoding via the eardrum, not the retina.

We may not be waveform readers, but we’re not exactly slackers, either: during the century and a half that followed Scott’s invention, we did manage to invent a machine that could “read” the visual image of a waveform and convert it back into sound: namely, computers. Just a few years ago, a team of sound historians named David Giovannoni, Patrick Feaster, Meagan Hennessey, and Richard Martin discovered a trove of Scott’s phonautographs in the Academy of Sciences in Paris, including one from April 1860 that had been marvelously preserved. Giovannoni and his colleagues scanned the faint, erratic lines that had been first scratched into the lampblack when Lincoln was still alive. They converted that image into a digital waveform, then played it back through computer speakers.

At first, they thought they were hearing a woman’s voice, singing the French folk song “Au clair de la lune,” but later they realized they had been playing back the audio at double its recorded speed. When they dropped it down to the right tempo, a man’s voice appeared out of the crackle and hiss: Édouard-Léon Scott de Martinville warbling from the grave.

Understandably, the recording was not of the highest quality, even played at the right speed. For most of the clip, the random noise of the recording apparatus overwhelms Scott’s voice. But even this apparent failing underscores the historic importance of the recording. The strange hisses and decay of degraded audio signals would become commonplace to the twentieth-century ear. But these are not sounds that occur in nature. Sound waves dampen and echo and compress in natural environments. But they don’t break up into the chaos of mechanical noise. The sound of static is a modern sound. Scott captured it first, even if it took a century and a half to hear it.

But Scott’s blind spot would not prove to be a complete dead end. Fifteen years after his patent, another inventor was experimenting with the phonautograph, modifying Scott’s original design to include an actual ear from a cadaver in order to understand the acoustics better. Through his tinkering, he hit upon a method for both capturing and transmitting sound. His name was Alexander Graham Bell.

—

FOR SOME REASON, sound technology seems to induce a strange sort of deafness among its most advanced pioneers. Some new tool comes along to share or transmit sound in a new way, and again and again its inventor has a hard time imagining how the tool will eventually be used. When Thomas Edison completed Scott’s original project and invented the phonograph in 1877, he imagined it would regularly be used as a means of sending audio letters through the postal system. Individuals would record their missives on the phonograph’s wax scrolls, and then pop them into the mail, to be played back days later. Bell, in inventing the telephone, made what was effectively a mirror-image miscalculation: He envisioned one of the primary uses for the telephone to be as a medium for sharing live music. An orchestra or singer would sit on one end of the line, and listeners would sit back and enjoy the sound through the telephone speaker on the other. So, the two legendary inventors had it exactly reversed: people ended up using the phonograph to listen to music and using the telephone to communicate with friends.

As a form of media, the telephone most resembled the one-to-one networks of the postal service. In the age of mass media that would follow, new communications platforms would be inevitably drawn toward the model of big-media creators and a passive audience of consumers. The telephone system would be the one model for more intimate—one-to-one, not one-to-many—communications until e-mail arrived a hundred years later. The telephone’s consequences were immense and multifarious. International calls brought the world closer together, though the threads connecting us were thin until recently. The first transatlantic line that enabled ordinary citizens to call between North America and Europe was laid only in 1956. In the first configuration, the system allowed twenty-four simultaneous calls. That was the total bandwidth for a voice conversation between the two continents just fifty years ago: out of several hundred million voices, only two dozen conversations at a time. Interestingly, the most famous phone in the world—the “red phone” that provided a hotline between the White House and the Kremlin—was not a phone at all in its original incarnation. Created after the communications fiasco that almost brought us to nuclear war in the Cuban Missile Crisis, the hotline was actually a Teletype that enabled quick, secure messages to be sent between the powers. Voice calls were considered to be too risky, given the difficulties of real-time translation.

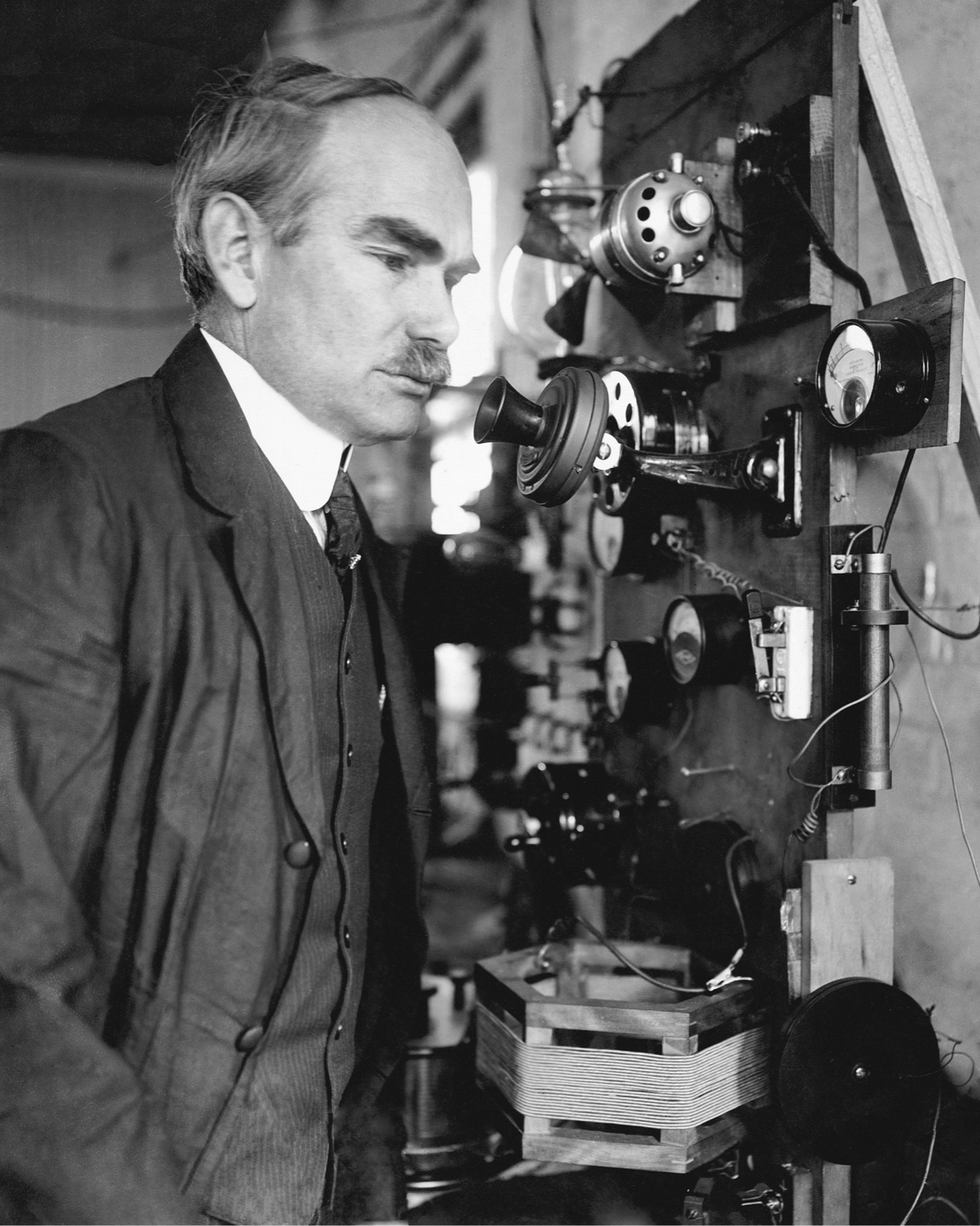

Inventor Alexander Graham Bell's laboratory in which he experimented with the transmission of sound by electricity, 1886.

The telephone enabled less obvious transformations as well. It popularized the modern meaning of the word “hello” (as a greeting that starts a conversation), transforming it into one of the most recognized words anywhere on earth. Telephone switchboards became one of the first inroads for women into the “professional” classes. (AT&T alone employed 250,000 women by the mid-forties.) An AT&T executive named John J. Carty argued in 1908 that the telephone had had as big an impact on the building of skyscrapers as the elevator:

It may sound ridiculous to say that Bell and his successors were the fathers of modern commercial architecture—of the skyscraper. But wait a minute. Take the Singer Building, the Flatiron Building, the Broad Exchange, the Trinity, or any of the giant office buildings. How many messages do you suppose go in and out of those buildings every day? Suppose there was no telephone and every message had to be carried by a personal messenger? How much room do you think the necessary elevators would leave for offices? Such structures would be an economic impossibility.

But perhaps the most significant legacy of the telephone lay in a strange and marvelous organization that grew out of it: Bell Labs, an organization that would play a critical role in creating almost every major technology of the twentieth century. Radios, vacuum tubes, transistors, televisions, solar cells, coaxial cables, laser beams, microprocessors, computers, cell phones, fiber optics: all these essential tools of modern life descend from ideas originally generated at Bell Labs. Not for nothing was it known as “the idea factory.” The interesting question about Bell Labs is not what it invented. (The answer to that is simple: just about everything.) The real question is why Bell Labs was able to create so much of the twentieth century. The definitive history of Bell Labs, Jon Gertner’s The Idea Factory, reveals the secret to the labs’ unrivaled success. It was not just the diversity of talent, and the tolerance of failure, and the willingness to make big bets, all of which were traits that Bell Labs shared with Edison’s famous lab at Menlo Park as well as other research labs around the world. What made Bell Labs fundamentally different had as much to do with antitrust law as with the geniuses it attracted.

From as early as 1913, AT&T had been battling the U.S. government over its monopoly control of the nation’s phone service. That it was, in fact, a monopoly was undeniable. If you were making a phone call in the United States at any point between 1930 and 1984, you were almost without exception using AT&T’s network. That monopoly power made the company immensely profitable, since it faced no significant competition. But for seventy years, AT&T managed to keep the regulators at bay by convincing them that the phone network was a “natural monopoly” and a necessary one. Analog phone circuits were simply too complicated to be run by a hodgepodge of competing firms; if Americans wanted to have a reliable phone network, it needed to be run by a single company. Eventually, the antitrust lawyers in the Justice Department worked out an intriguing compromise, settled officially in 1956. AT&T would be allowed to maintain its monopoly over phone service, but any patented invention that had originated in Bell Labs would have to be freely licensed to any American company that found it useful, and all new patents would have to be licensed for a modest fee. Effectively, the government said to AT&T that it could keep its profits, but it would have to give away its ideas in return.

It was a unique arrangement, one we are not likely to see again. The monopoly power gave the company a trust fund for research that was practically infinite, but every interesting idea that came out of that research could be immediately adopted by other firms. So much of the American success in postwar electronics—from transistors to computers to cell phones—ultimately dates back to that 1956 agreement. Thanks to the antitrust resolution, Bell Labs became one of the strangest hybrids in the history of capitalism: a vast profit machine generating new ideas that were, for all practical purposes, socialized. Americans had to pay a tithe to AT&T for their phone service, but the new innovations AT&T generated belonged to everyone.

—

ONE OF THE MOST TRANSFORMATIVE breakthroughs in the history of Bell Labs emerged in the years leading up to the 1956 agreement. For understandable reasons, it received almost no attention at the time; the revolution it would ultimately enable was almost half a century in the future, and its very existence was a state secret, almost as closely guarded as the Manhattan Project. But it was a milestone nonetheless, and once again, it began with the sound of the human voice.

The innovation that had created Bell Labs in the first place—Bell’s telephone—had ushered us across a crucial threshold in the history of technology: for the first time, some component of the physical world had been represented in electrical energy in a direct way. (The telegraph had converted man-made symbols into electricity, but sound belonged to nature as well as culture.) Someone spoke into a receiver, generating sound waves that became pulses of electricity that became sound waves again on the other end. Sound, in a way, was the first of our senses to be electrified. (Electricity helped us see the world more clearly thanks to the lightbulb during the same period, but it wouldn’t record or transmit what we saw for decades.) And once those sound waves became electric, they could travel vast distances at astonishing speeds.

But as magical as those electrical signals were, they were not infallible. Traveling from city to city over copper wires, they were vulnerable to decay, signal loss, noise. Amplifiers, as we will see, helped combat the problem, boosting signals as they traveled down the line. But the ultimate goal was a pure signal, some kind of perfect representation of the voice that wouldn’t degrade as it wound its way through the telephone network. Interestingly, the path that ultimately led to that goal began with a different objective: not keeping our voices pure, but keeping them secret.

During World War II, the legendary mathematician Alan Turing and Bell Labs’ A. B. Clark collaborated on a secure communications line, code-named SIGSALY, that converted the sound waves of human speech into mathematical expressions. SIGSALY recorded the sound wave twenty thousand times a second, capturing the amplitude and frequency of the wave at that moment. But that recording was not done by converting the wave into an electrical signal or a groove in a wax cylinder. Instead, it turned the information into numbers, encoded it in the binary language of zeroes and ones. “Recording,” in fact, was the wrong word for it. Using a term that would become common parlance among hip-hop and electronic musicians fifty years later, they called this process “sampling.” Effectively, they were taking snapshots of the sound wave twenty thousand times a second, only those snapshots were written out in zeroes and ones: digital, not analog.
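The idea of taking snapshots of a wave and writing them out in zeroes and ones can be sketched in a few lines of Python. This is only an illustration of sampling in general, not a reconstruction of SIGSALY's circuitry: the sample rate is the figure quoted above, while the 8-bit depth, the 440 Hz test tone, and the function names are assumptions made for the example.

```python
import math

SAMPLE_RATE = 20_000  # snapshots per second, the figure quoted in the text
BITS = 8              # assumed bit depth, chosen only for illustration


def sample_wave(freq_hz, duration_s, sample_rate=SAMPLE_RATE):
    """Take evenly spaced amplitude snapshots of a pure tone, each in [-1, 1]."""
    count = round(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate) for n in range(count)]


def quantize(amplitude, bits=BITS):
    """Map an amplitude in [-1, 1] to an integer level in [0, 2**bits - 1]."""
    levels = 2 ** bits - 1
    return round((amplitude + 1) / 2 * levels)


def to_binary(level, bits=BITS):
    """Write an integer level as a fixed-width string of zeroes and ones."""
    return format(level, f"0{bits}b")


# One millisecond of a 440 Hz tone becomes 20 eight-bit numbers.
samples = sample_wave(440, 0.001)
encoded = [to_binary(quantize(a)) for a in samples]
print(len(encoded), encoded[:3])
```

Each entry in `encoded` is one snapshot of the wave. Nothing about the list resembles a groove or a voltage any longer; it is simply a sequence of numbers.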

Working with digital samples made it much easier to transmit them securely: anyone looking for a traditional analog signal would just hear a blast of digital noise. (SIGSALY was code-named “Green Hornet” because the raw information sounded like a buzzing insect.) Digital signals could also be mathematically encrypted much more effectively than analog signals. While the Germans intercepted and recorded many hours of SIGSALY transmissions, they were never able to interpret them.
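Why numbers are easier to encrypt than waves can be shown with a generic technique: adding a secret random key to each sample and wrapping around at the number of levels. The sketch below is a textbook one-time-pad style scheme offered as an illustration; it is not a description of SIGSALY's actual mechanism, and the 256 levels and the function names are assumptions.

```python
import secrets

LEVELS = 256  # assumed number of quantization levels, for illustration only


def make_key(length, levels=LEVELS):
    """Generate one secret random value per sample; both ends must hold a copy."""
    return [secrets.randbelow(levels) for _ in range(length)]


def encrypt(samples, key, levels=LEVELS):
    """Add the key to each sample, wrapping around at the number of levels."""
    return [(s + k) % levels for s, k in zip(samples, key)]


def decrypt(cipher, key, levels=LEVELS):
    """Subtract the same key to recover the original samples exactly."""
    return [(c - k) % levels for c, k in zip(cipher, key)]


samples = [0, 128, 255, 17]
key = make_key(len(samples))
cipher = encrypt(samples, key)
print(decrypt(cipher, key) == samples)
```

Without the key, the values in `cipher` are indistinguishable from random noise. With it, the receiver recovers every sample exactly, something that is much harder to arrange when scrambling a continuous analog signal.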

Developed by a special division of the Army Signal Corps, and overseen by Bell Labs researchers, SIGSALY went into operation on July 15, 1943, with a historic transatlantic phone call between the Pentagon and London. At the outset of the call, before the conversation turned to the more pressing issues of military strategy, the president of Bell Labs, Dr. O. E. Buckley, offered some introductory remarks on the technological breakthrough that SIGSALY represented:

We are assembled today in Washington and London to open a new service, secret telephony. It is an event of importance in the conduct of the war that others here can appraise better than I. As a technical achievement, I should like to point out that it must be counted among the major advances in the art of telephony. Not only does it represent the achievement of a goal long sought—complete secrecy in radiotelephone transmission—but it represents the first practical application of new methods of telephone transmission that promise to have far-reaching effects.

If anything, Buckley underestimated the significance of those “new methods.” SIGSALY was not just a milestone in telephony. It was a watershed moment in the history of media and communications more generally: for the first time, our experiences were being digitized. The technology behind SIGSALY would continue to be useful in supplying secure lines of communication. But the truly disruptive force that it unleashed would come from another strange and wonderful property it possessed: digital copies could be perfect copies. With the right equipment, digital samples of sound could be transmitted and copied with perfect fidelity. So much of the turbulence of the modern media landscape—the reinvention of the music business that began with file-sharing services such as Napster, the rise of streaming media, and the breakdown of traditional television networks—dates back to the digital buzz of the Green Hornet. If the robot historians of the future had to mark one moment where the “digital age” began—the computational equivalent of the Fourth of July or Bastille Day—that transatlantic phone call in July 1943 would certainly rank high on the list. Once again, our drive to reproduce the sound of the human voice had expanded the adjacent possible. For the first time, our experience of the world was becoming digital.
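The claim that digital copies can be perfect copies can be illustrated with a small simulation. It is a sketch under stated assumptions: both kinds of copy pass through the same hypothetical noisy channel (up to 0.05 of random error per value), and the digital copier simply decides whether each received value is closer to 0 or to 1. The noise level, seed, and function names are invented for the example.

```python
import random


def analog_copy(signal, rng, noise=0.05):
    """An analog copy keeps whatever error the channel adds to each value."""
    return [v + rng.uniform(-noise, noise) for v in signal]


def digital_copy(bits, rng, noise=0.05):
    """A digital copy suffers the same noise, then snaps each value back to 0 or 1."""
    received = [b + rng.uniform(-noise, noise) for b in bits]
    return [1 if v > 0.5 else 0 for v in received]


rng = random.Random(1943)  # fixed seed so the run is repeatable
original_bits = [1, 0, 1, 1, 0, 0, 1, 0]
analog = [float(b) for b in original_bits]
digital = list(original_bits)

# Make a copy of a copy of a copy, one hundred generations deep.
for _ in range(100):
    analog = analog_copy(analog, rng)
    digital = digital_copy(digital, rng)

drift = max(abs(a - b) for a, b in zip(analog, original_bits))
print(digital == original_bits)  # the digital chain is unchanged
print(drift)                     # the analog chain has wandered from the original
```

Because the noise is far smaller than the gap between 0 and 1, the thresholding step erases it at every generation, so the hundredth digital copy matches the first. The analog chain has no such reset, and its errors accumulate from copy to copy.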

—

THE DIGITAL SAMPLES OF SIGSALY traveled across the Atlantic courtesy of another communications breakthrough that Bell Labs helped create: radio. Interestingly, while radio would eventually become a medium saturated with the sound of people talking or singing, it did not begin that way. The first functioning radio transmissions—created by Guglielmo Marconi and a number of other more-or-less simultaneous inventors in the last decades of the nineteenth century—were almost exclusively devoted to sending Morse code. (Marconi called his invention “wireless telegraphy.”) But once information began flowing through the airwaves, it was not long before the tinkerers and research labs began thinking of how to make spoken word and song part of the mix.

One of those tinkerers was Lee De Forest, one of the most brilliant and erratic inventors of the twentieth century. Working out of his home lab in Chicago, De Forest dreamed of combining Marconi’s wireless telegraph with Bell’s telephone. He began a series of experiments with a spark-gap transmitter, a device that created a bright, monotone pulse of electromagnetic energy that could be detected by antennae miles away, perfect for sending Morse code. One night, while De Forest was triggering a series of pulses, he noticed something strange happening across the room: every time he created a spark, the flame in his gas lamp turned white and increased in size. Somehow, De Forest thought, the electromagnetic pulse was intensifying the flame. That flickering gaslight planted a seed in De Forest’s head: somehow a gas could be used to amplify weak radio reception, perhaps making it strong enough to carry the more information-rich signal of spoken words and not just the staccato pulses of Morse code. He would later write, with typical grandiosity: “I discovered an Invisible Empire of the Air, intangible, yet solid as granite.”

After a few years of trial and error, De Forest settled on a gas-filled bulb containing three precisely configured electrodes designed to amplify incoming wireless signals. He called it the Audion. As a transmission device for the spoken word, the Audion was just powerful enough to transmit intelligible signals. In 1910, De Forest used an Audion-equipped radio device to make the first ever ship-to-shore broadcast of the human voice. But De Forest had much more ambitious plans for his device. He had imagined a world in which his wireless technology was used not just for military and business communications but also for mass enjoyment—and in particular, to make his great passion, opera, available to everyone. “I look forward to the day when opera may be brought into every home,” he told the New York Times, adding, somewhat less romantically, “Someday even advertising will be sent out over the wireless.”

On January 13, 1910, during a performance of Tosca by New York’s Metropolitan Opera, De Forest hooked up a telephone microphone in the hall to a transmitter on the roof to create the first live public radio broadcast. Arguably the most poetic of modern inventors, De Forest would later describe his vision for the broadcast: “The ether wave passing over the tallest towers and those who stand between are unaware of the silent voices which pass them on either side. . . . And when it speaks to him, the strains of some well-loved earthly melody, his wonder grows.”

Alas, this first broadcast did not trigger quite as much wonder as it did derision. De Forest invited hordes of reporters and VIPs to listen to the broadcast on his radio receivers dotted around the city. The signal strength was terrible, and listeners heard something closer to the Green Hornet’s unintelligible buzz than the strains of a well-loved earthly melody. The Times declared the whole adventure “a disaster.” De Forest was even sued by the U.S. attorney for fraud, accused of overselling the value of the Audion in wireless technology, and briefly incarcerated. Needing cash to pay his legal bills, De Forest sold the Audion patent at a bargain price to AT&T.

When the researchers at Bell Labs began investigating the Audion, they discovered something extraordinary: from the very beginning Lee De Forest had been flat-out wrong about most of what he was inventing. The increase in the gas flame had nothing to do with electromagnetic radiation. It was caused by sound waves from the loud noise of the spark. Gas didn’t detect and amplify a radio signal at all; in fact, it made the device less effective.

But somehow, lurking behind all of De Forest’s accumulation of errors, a beautiful idea was waiting to emerge. Over the next decade, engineers at Bell Labs and elsewhere modified his basic three-electrode design, removing the gas from the bulb so that it sealed a perfect vacuum, transforming it into both a transmitter and a receiver. The result was the vacuum tube, the first great breakthrough of the electronics revolution, a device that would boost the electrical signal of just about any technology that needed it. Television, radar, sound recording, guitar amplifiers, X-rays, microwave ovens, the “secret telephony” of SIGSALY, the first digital computers—all would rely on vacuum tubes. But the first mainstream technology to bring the vacuum tube into the home was radio. In a way, it was the realization of De Forest’s dream: an empire of air transmitting well-loved melodies into living rooms everywhere. And yet, once again, De Forest’s vision would be frustrated by actual events. The melodies that started playing through those magical devices were well-loved by just about everyone except De Forest himself.

—

RADIO BEGAN ITS LIFE as a two-way medium, a practice that continues to this day as ham radio: individual hobbyists talking to one another over the airwaves, occasionally eavesdropping on other conversations. But by the early 1920s, the broadcast model that would come to dominate the technology had evolved. Professional stations began delivering packaged news and entertainment to consumers who listened on radio receivers in their homes. Almost immediately, something entirely unexpected happened: the existence of a mass medium for sound unleashed a new kind of music on the United States, a music that had until then belonged almost exclusively to New Orleans, to the river towns of the American South, and to African-American neighborhoods in New York and Chicago. Almost overnight, radio made jazz a national phenomenon. Musicians such as Duke Ellington and Louis Armstrong became household names. Ellington’s band performed weekly national broadcasts from the Cotton Club in Harlem starting in the late 1920s; Armstrong became the first African-American to host his own national radio show shortly thereafter.

All of this horrified Lee De Forest, who wrote a characteristically baroque denunciation to the National Association of Broadcasters: “What have you done with my child, the radio broadcast? You have debased this child, dressed him in rags of ragtime, tatters of jive and boogie-woogie.” In fact, the technology that De Forest had helped invent was intrinsically better suited to jazz than it was to classical performances. Jazz punched through the compressed, tinny sound of early AM radio speakers; the vast dynamic range of a symphony was largely lost in translation. The blast of Satchmo’s trumpet played better on the radio than the subtleties of Schubert.

The collision of jazz and radio created, in effect, the first surge of a series of cultural waves that would wash over twentieth-century society. A new sound that has been slowly incubating in some small section of the world—New Orleans, in the case of jazz—finds its way onto the mass medium of radio, offending the grown-ups and electrifying the kids. The channel first carved out by jazz would subsequently be filled by rock ’n’ roll from Memphis, British pop from Liverpool, rap and hip-hop from South Central and Brooklyn. Something about radio and music seems to have encouraged this pattern, in a way that television or film did not: almost immediately after a national medium emerged for sharing music, subcultures of sound began flourishing on that medium. There were “underground” artists before radio—impoverished poets and painters—but radio helped create a template that would become commonplace: the underground artist who becomes an overnight celebrity.

With jazz, of course, there was a crucial additional element. The overnight celebrities were, for the most part, African-Americans: Ellington, Armstrong, Ella Fitzgerald, Billie Holiday. It was a profound breakthrough: for the first time, white America welcomed African-American culture into its living room, albeit through the speakers of an AM radio. The jazz stars gave white America an example of African-Americans becoming famous and wealthy and admired for their skills as entertainers rather than advocates. Of course, many of those musicians also became powerful advocates, in songs such as Billie Holiday’s “Strange Fruit,” with its bitter tale of a southern lynching. Radio signals had a kind of freedom to them that proved to be liberating in the real world. Those radio waves ignored the way in which society was segmented at that time: between black and white worlds, between different economic classes. The radio signals were color-blind. Like the Internet, they didn’t break down barriers as much as live in a world separate from them.

The birth of the civil rights movement was intimately bound up in the spread of jazz music throughout the United States. It was, for many Americans, the first cultural common ground between black and white America that had been largely created by African-Americans. That in itself was a great blow to segregation. Martin Luther King Jr. made the connection explicit in remarks he delivered at the Berlin Jazz Festival in 1964:

It is no wonder that so much of the search for identity among American Negroes was championed by Jazz musicians. Long before the modern essayists and scholars wrote of “racial identity” as a problem for a multi-racial world, musicians were returning to their roots to affirm that which was stirring within their souls. Much of the power of our Freedom Movement in the United States has come from this music. It has strengthened us with its sweet rhythms when courage began to fail. It has calmed us with its rich harmonies when spirits were down. And now, Jazz is exported to the world.

—

LIKE MANY POLITICAL FIGURES of the twentieth century, King was indebted to the vacuum tube for another reason. Shortly after De Forest and Bell Labs began using vacuum tubes to enable radio broadcasts, the technology was enlisted to amplify the human voice in more immediate settings: powering amplifiers attached to microphones, allowing people to speak or sing to massive crowds for the first time in history. Tube amplifiers finally allowed us to break free from the sound engineering that had prevailed since Neolithic times. We were no longer dependent on the reverberations of caves or cathedrals or opera houses to make our voices louder. Now electricity could do the work of echoes, but a thousand times more powerfully.

Amplification created an entirely new kind of political event: mass rallies oriented around individual speakers. Crowds had played a dominant role in political upheaval for the preceding century and a half; if there is an iconic image of revolution before the twentieth century, it’s the swarm of humanity taking the city streets in 1789 or 1848. But amplification took those teeming crowds and gave them a focal point: the voice of the leader reverberating through the plaza or stadium or park. Before tube amplifiers, the limits of our vocal cords made it difficult to speak to more than a thousand people at a time. (The elaborate vocal stylings of opera singing were in many ways designed to coax maximum projection out of the biological limitations of the voice.) But a microphone attached to multiple speakers extended the range of earshot by several orders of magnitude. No one recognized—and exploited—this new power more quickly than Adolf Hitler, whose Nuremberg rallies addressed more than a hundred thousand followers, all fixated on the amplified sound of the Führer’s voice. Remove the microphone and amplifier from the toolbox of twentieth-century technology and you remove one of that century’s defining forms of political organization, from Nuremberg to “I Have a Dream.”

Tube amplification enabled the musical equivalent of political rallies as well: the Beatles playing Shea Stadium, Woodstock, Live Aid. But the idiosyncrasies of vacuum-tube technology also had a more subtle effect on twentieth-century music—making it not just loud but also making it noisy.

It is hard for those of us who have lived in the postindustrial world our entire lives to understand just how much of a shock the sound of industrialization was to human ears a century or two ago. An entirely new symphony of discord suddenly entered the realm of everyday life, particularly in large cities: the crashing and clanging of metal on metal; the white-noise blast of the steam engine. The noise was, in many ways, as shocking as the crowds and the smells of the big cities. By the 1920s, as electrically amplified sounds began roaring alongside the rest of the urban tumult, organizations such as Manhattan’s Noise Abatement Society began advocating for a quieter metropolis. Sympathetic to the society’s mission, a Bell Labs engineer named Harvey Fletcher outfitted a truck with state-of-the-art sound equipment and a crew of Bell engineers, who drove slowly around New York City noise hot spots taking sound measurements. (The unit of measurement for sound volume—the decibel—came out of Fletcher’s research.) Fletcher and his team found that some city sounds—riveting and drilling in construction, the roar of the subway—were at the decibel threshold for auditory pain. At Cortlandt Street, known as “Radio Row,” the noise of storefronts showcasing the latest radio speakers was so loud it even drowned out the elevated train.

But while noise-abatement groups battled modern noise through regulations and public campaigns, another reaction emerged. Instead of being repelled by the sound, our ears began to find something beautiful in it. The routine experiences of everyday life had been effectively a training session for the aesthetics of noise since the early nineteenth century. But it was the vacuum tube that finally brought noise to the masses.

Starting in the 1950s, guitarists playing through tube amplifiers noticed that they could make an intriguing new kind of sound by overdriving the amp: a crunchy layer of noise on top of the notes generated by strumming the strings of the guitar itself. This was, technically speaking, the sound of the amplifier malfunctioning, distorting the sound it had been designed to reproduce. To most ears it sounded like something was broken with the equipment, but a small group of musicians began to hear something appealing in the sound. A handful of early rock ’n’ roll recordings in the 1950s features a modest amount of distortion on the guitar tracks, but the art of noise wouldn’t really take off until the sixties. In July 1960, a bassist named Grady Martin was recording a riff for a Marty Robbins song called “Don’t Worry” when his amplifier malfunctioned, creating a heavily distorted sound that we now call a “fuzz tone.” Initially Robbins wanted it removed from the song, but the producer persuaded him to keep it. “No one could figure out the sound because it sounded like a saxophone,” Robbins would say years later. “It sounded like a jet engine taking off. It had many different sounds.” Inspired by the strange, unplaceable noise of Martin’s riff, another band called the Ventures asked a friend to hack together a device that could add the fuzz effect deliberately. Within a year, there were commercial distortion boxes on the market; within three years Keith Richards was saturating the opening riff of “Satisfaction” with distortion, and the trademark sound of the sixties was born.
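What an overdriven amplifier does to a signal can be sketched numerically. In the toy model below, the amplifier multiplies the input by a gain and then flattens anything beyond a fixed ceiling, a simplification usually called hard clipping. Real tube amplifiers saturate more gradually, so this is only an approximation of the effect; the function names, gain values, and ceiling are hypothetical, chosen purely for illustration.

```python
import math

# Toy model of an overdriven amplifier (hard clipping).
# Assumption: real tube amps saturate more smoothly; this is only a sketch.


def overdrive(samples, gain, ceiling=1.0):
    """Amplify each sample, then flatten anything beyond +/- ceiling."""
    return [max(-ceiling, min(ceiling, gain * s)) for s in samples]


def sine_wave(cycles=1, points=16):
    """Evenly spaced samples of a unit-amplitude sine wave."""
    return [math.sin(2 * math.pi * cycles * i / points) for i in range(points)]


if __name__ == "__main__":
    note = sine_wave()
    clean = overdrive(note, gain=0.8)  # peaks stay under the ceiling
    fuzzy = overdrive(note, gain=5.0)  # peaks are sliced flat
    clipped = sum(1 for s in fuzzy if abs(s) == 1.0)
    print("loudest clean sample:", max(clean))
    print(clipped, "of", len(fuzzy), "overdriven samples hit the ceiling")
```

At low gain the wave passes through with its shape intact; at high gain its rounded peaks are cut into flat plateaus, and that squared-off waveform is, roughly speaking, the crunch that guitarists learned to love.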

A similar pattern developed with a novel—and initially unpleasant—sound that occurs when amplified speakers and microphones share the same physical space: the swirling, screeching noise of feedback. Distortion was a sound that had at least some aural similarity to those industrial sounds that had first emerged in the eighteenth century. (Hence the “jet engine” tone of Grady Martin’s bass line.) But feedback was an entirely new creature; it did not exist in any form until the invention of speakers and microphones roughly a century ago. Sound engineers would go to great lengths to eliminate feedback from recordings or concert settings, positioning microphones so they didn’t pick up signal from the speakers, and thus cause the infinite-loop screech of feedback. Yet once again, one man’s malfunction turned out to be another man’s music, as artists such as Jimi Hendrix or Led Zeppelin—and later punk experimentalists like Sonic Youth—embraced the sound in their recordings and performances. In a real sense, Hendrix was not just playing the guitar on those feedback-soaked recordings in the late 1960s, he was creating a new sound that drew upon the vibration of the guitar strings, the microphone-like pickups on the guitar itself, and the speakers, building on the complex and unpredictable interactions between those three technologies.

Sometimes cultural innovations come from using new technologies in unexpected ways. De Forest and Bell Labs weren’t trying to invent the mass rally when they came up with the first sketches of a vacuum tube, but it turned out to be easy to assemble mass rallies once you had amplification to share a single voice with that many people. But sometimes the innovation comes from a less likely approach: by deliberately exploiting the malfunctions, turning noise and error into a useful signal. Every genuinely new technology has a genuinely new way of breaking—and every now and then, those malfunctions open a new door in the adjacent possible. In the case of the vacuum tube, it trained our ears to enjoy a sound that would no doubt have made Lee De Forest recoil in horror. Sometimes the way a new technology breaks is almost as interesting as the way it works.

—

FROM THE NEANDERTHALS chanting in the Burgundy caves, to Édouard-Léon Scott de Martinville warbling into his phonautograph, to Duke Ellington broadcasting from the Cotton Club, the story of sound technology had always been about extending the range and intensity of our voices and our ears. But the most surprising twist of all would come just a century ago, when humans first realized that sound could be harnessed for something else: to help us see.

The use of light to signal the presence of dangerous shorelines to sailors is an ancient practice; the Lighthouse of Alexandria, constructed several centuries before the birth of Christ, was one of the original seven wonders of the world. But lighthouses perform poorly at precisely the point where they are needed the most: in stormy weather, where the light they transmit is obscured by fog and rain. Many lighthouses employed warning bells as an additional signal, but those too could be easily drowned out by the sound of a roaring sea. Yet sound waves turn out to have an intriguing physical property: under water, they travel four times faster than they do through the air, and they are largely undisturbed by the sonic chaos above sea level.

In 1901, a Boston-based firm called the Submarine Signal Company began manufacturing a system of communications tools that exploited this property of aquatic sound waves: underwater bells that chimed at regular intervals, and microphones specially designed for underwater reception called “hydrophones.” The SSC established more than a hundred stations around the world at particularly treacherous harbors or channels, where the underwater bells would warn vessels, equipped with the company’s hydrophones, that steered too close to the rocks or shoals. It was an ingenious system, but it had its limits. To begin with, it worked only in places where the SSC had installed warning bells. And it was entirely useless at detecting less predictable dangers: other ships, or icebergs.

The threat posed by icebergs to maritime travel became vividly apparent to the world in April 1912, when the Titanic foundered in the North Atlantic. Just a few days before the sinking, the Canadian inventor Reginald Fessenden had run across an engineer from the SSC at a train station, and after a quick chat, the two men agreed that Fessenden should come by the office to see the latest underwater signaling technologies. Fessenden had been a pioneer of wireless radio, responsible for both the first radio transmission of human speech and the first transatlantic two-way radio transmission of Morse code. That expertise had led the SSC to ask him to help them design their hydrophone system to better filter out the background noise of underwater acoustics. When news of the Titanic broke, just four days after his visit to the SSC, Fessenden was as shocked as the rest of the world, but unlike the rest of the world, he had an idea about how to prevent these tragedies in the future.

Fessenden’s first suggestion had been to replace the bells with a continuous, electric-powered tone that could also be used to transmit Morse code, borrowing from his experiences with wireless telegraphy. But as he tinkered with the possibilities, he realized the system could be much more ambitious. Instead of merely listening to sounds generated by specifically designed and installed warning posts, Fessenden’s device would generate its own sounds onboard the ship and listen to the echoes created as those new sounds bounced off objects in the water, much as dolphins use echolocation to navigate their way around the ocean. Borrowing the same principles that had attracted the cave chanters to the unusually reverberant sections of the Arcy-sur-Cure caves, Fessenden tuned the device so that it would resonate with only a small section of the frequency spectrum, right around 540 Hz, allowing it to ignore all the background noise of the aquatic environment. After calling it, somewhat disturbingly, his “vibrator” for a few months, he ultimately dubbed it the “Fessenden Oscillator.” It was a system for both sending and receiving underwater telegraphy, and the world’s first functional sonar device.
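The echo-ranging idea reduces to a single line of arithmetic: a pulse travels out to an obstacle and back, so the distance is half the round-trip time multiplied by the speed of sound. The sketch below is illustrative only and does not describe Fessenden’s actual apparatus; the function name and the speed figures (about 1,480 meters per second in seawater, about 343 in air) are assumptions, chosen as round textbook values.

```python
# Illustrative echo-ranging arithmetic; not Fessenden's actual design.
# Assumed round figures: sound travels ~1480 m/s in seawater, ~343 m/s in air.
SPEED_IN_SEAWATER_M_S = 1480.0
SPEED_IN_AIR_M_S = 343.0


def echo_distance_m(round_trip_seconds, speed_m_s=SPEED_IN_SEAWATER_M_S):
    """Distance to a reflecting object, given the echo's round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice: out to the object and back.
    return speed_m_s * round_trip_seconds / 2.0


if __name__ == "__main__":
    # Distance implied by an echo that returns two seconds after the pulse.
    print(echo_distance_m(2.0), "meters")
    # Ratio of the two assumed speeds, for comparison with "four times faster".
    print(round(SPEED_IN_SEAWATER_M_S / SPEED_IN_AIR_M_S, 1))
```

Under these assumed speeds, an echo that takes two seconds to return places the obstacle roughly a kilometer and a half away, which is why a ship listening for its own echoes can, in principle, learn about an iceberg well before a lookout sees it through fog.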

Once again, the timing of world-historical events underscored the need for Fessenden’s contraption. Just a year after he completed his first working prototype, World War I erupted. The German U-boats roaming the North Atlantic now posed an even greater threat to maritime travel than the Titanic’s iceberg. The threat was particularly acute for Fessenden, who as a Canadian citizen was a fervent patriot of the British Empire. (He also seems to have been a borderline racist, later advancing a theory in his memoirs about why “blond-haired men of English extraction” had been so central to modern innovation.) But the United States was still two years away from joining the war, and the executives at the SSC didn’t share his allegiance to the Union Jack. Faced with the financial risk of developing two revolutionary new technologies, the company decided to build and market the oscillator as a wireless telegraphy device exclusively.

Fessenden ultimately traveled on his own dollar all the way to Portsmouth, England, to try to persuade the Royal Navy to invest in his oscillator, but they too were dubious of this miracle invention. Fessenden would later write: “I pleaded with them to just let us open the box and show them what the apparatus was like.” But his pleas were ultimately ignored. Sonar would not become a standard component of naval warfare until World War II. By the armistice in 1918, upward of ten thousand lives had been lost to the U-boats. The British and, eventually, the Americans had experimented with countless offensive and defensive measures to ward off these submarine predators. But, ironically, the most valuable defensive weapon would have been a simple 540 Hz sound wave, bouncing off the hull of the attacker.

Radio developer Reginald Fessenden testing his invention, 1906

In the second half of the twentieth century, the principles of echolocation would be employed to do far more than detect icebergs and submarines. Fishing vessels—and amateur fishers—used variations of Fessenden’s oscillator to detect their catch. Scientists used sonar to explore the last great mysteries of our oceans, revealing hidden landscapes, natural resources, and fault lines. More than seventy years after the sinking of the Titanic inspired Reginald Fessenden to dream up the first sonar, a team of American and French researchers used sonar to discover the vessel on the Atlantic seabed, twelve thousand feet below the surface.

But Fessenden’s innovation had the most transformative effect on dry land, where ultrasound devices, using sound to see into a mother’s womb, revolutionized prenatal care, allowing today’s babies and their mothers to be routinely saved from complications that had been fatal less than a century ago. Fessenden had hoped his idea—using sound to see—might save lives; while he couldn’t persuade the authorities to put it to use in detecting U-boats, the oscillator did end up saving millions of lives, both at sea and in a place Fessenden would never have expected: the hospital.

Of course, ultrasound’s most familiar use involves determining the sex of a baby early in a pregnancy. We are accustomed now to think of information in binary terms: a zero or a one, a circuit connected or broken. But in all of life’s experiences, there are few binary crossroads like the sex of your unborn child. Are you going to have a girl or a boy? How many life-changing consequences flow out of that simple unit of information? Like many of us, my wife and I learned the gender of our children using ultrasound. We now have other, more accurate, means of determining the sex of a fetus, but we found our way to that knowledge first by bouncing sound waves off the growing body of our unborn child. Like the Neanderthals navigating the caves of Arcy-sur-Cure, echoes led the way.

There is, however, a dark side to that innovation. The introduction of ultrasound in countries such as China with a strong cultural preference for male offspring has led to a growing practice of sex-selective abortions. An extensive supply of ultrasound machines was introduced throughout China in the early 1980s, and while the government shortly thereafter officially banned the use of ultrasound to determine sex, the “back-door” use of the technology for sex selection is widespread. By the end of the decade, the sex ratio at birth in hospitals throughout China was almost 110 boys to every 100 girls, with some provinces reporting ratios as high as 118:100. This may be one of the most astonishing, and tragic, hummingbird effects in all of twentieth-century technology: someone builds a machine to listen to sound waves bouncing off icebergs, and a few generations later, millions of female fetuses are aborted thanks to that very same technology.

The skewed sex ratios of modern China contain several important lessons, setting aside the question of abortion itself, much less gender-based abortion. First, they are a reminder that no technological advance is purely positive in its effects: for every ship saved from an iceberg, there are countless pregnancies terminated because of a missing Y chromosome. The march of technology has its own internal logic, but the moral application of that technology is up to us. We can decide to use ultrasound to save lives or terminate them. (Even more challenging, we can use ultrasound to blur the very boundaries of life, detecting a heartbeat in a fetus that is only weeks old.) For the most part, the adjacencies of technological and scientific progress dictate what we can invent next. However smart you might be, you can’t invent an ultrasound before the discovery of sound waves. But what we decide to do with those inventions? That is a more complicated question, one that requires a different set of skills to answer.

But there’s another, more hopeful lesson in the story of sonar and ultrasound, which is how quickly our ingenuity is able to leap boundaries of conventional influence. Our ancestors first noticed the power of echo and reverberation to change the sonic properties of the human voice tens of thousands of years ago; for centuries we have used those properties to enhance the range and power of our vocal cords, from cathedrals to the Wall of Sound. But it’s hard to imagine anyone studying the physics of sound two hundred years ago predicting that those echoes would be used to track undersea weapons or determine the sex of an unborn child. What began with the most moving and intuitive sound to the human ear—the sound of our voices in song, in laughter, sharing news or gossip—has been transformed into the tools of both war and peace, death and life. Like those distorted wails of the tube amp, it is not always a happy sound. Yet, again and again, it turns out to have unsuspected resonance.