6. Light

Imagine some alien civilization viewing Earth from across the galaxies, looking for signs of intelligent life. For millions of years, there would be almost nothing to report: the daily flux of weather moving across the planet, the creep of glaciers spreading and retreating every hundred thousand years or so, the incremental drift of continents. But starting about a century ago, a momentous change would suddenly be visible: at night, the planet’s surface would glow with the streetlights of cities, first in the United States and Europe, then spreading steadily across the globe, growing in intensity. Viewed from space, the emergence of artificial lighting would arguably have been the single most significant change in the planet’s history since the Chicxulub asteroid collided with Earth sixty-five million years ago, coating the planet in a cloud of superheated ash and dust. From space, all the transformations that marked the rise of human civilization would be an afterthought: opposable thumbs, written language, the printing press—all these would pale beside the brilliance of Homo lumens.

Viewed from the surface of the earth, of course, the invention of artificial light had more rivals in terms of visible innovations, but its arrival marked a threshold point in human society. The night sky now shines six thousand times brighter than it did just 150 years ago. Artificial light has transformed the way we work and sleep, helped create global networks of communication, and may soon enable radical breakthroughs in energy production. The lightbulb is so bound up in the popular sense of innovation that it has become a metaphor for new ideas themselves: the “lightbulb” moment has replaced Archimedes’s eureka as the expression most likely to be invoked to celebrate a sudden conceptual leap.

One of the odd things about artificial light is how stagnant it was as a technology for centuries. This is particularly striking given that artificial light arrived via the very first technology, when humans originally mastered controlled fire more than a hundred thousand years ago. The Babylonians and Romans developed oil-based lamps, but that technology virtually disappeared during the (appropriately named) Dark Ages. For almost two thousand years, all the way to the dawn of the industrial age, the candle was the reigning solution for indoor lighting. Candles made from beeswax were highly prized but too expensive for anyone but the clergy or the aristocracy. Most people made do with tallow candles, which burned animal fat to produce a tolerable flicker, accompanied by a foul odor and thick smoke.

As our nursery rhymes remind us, candle-making was a popular vocation during this period. Parisian tax rolls from 1292 listed seventy-two “chandlers,” as they were called, doing business in the city. But most ordinary households made their own tallow candles, an arduous process that could go on for days: heating up containers of animal fat, and dipping wicks into them. In a diary entry from 1743, the president of Harvard noted that he had produced seventy-eight pounds of tallow candles in two days of work, a quantity that he managed to burn through two months later.

It’s not hard to imagine why people were willing to spend so much time manufacturing candles at home. Consider what life would have been like for a farmer in New England in 1700. In the winter months the sun goes down at five, followed by fifteen hours of darkness before it gets light again. And when that sun goes down, it’s pitch-black: there are no streetlights, flashlights, lightbulbs, fluorescents—even kerosene lamps haven’t been invented yet. There’s just a flickering glow of a fireplace, and the smoky burn of the tallow candle.

Those nights were so oppressive that scientists now believe our sleep patterns were radically different in the ages before ubiquitous night lighting. In 2001, the historian Roger Ekirch published a remarkable study that drew upon hundreds of diaries and instructional manuals to convincingly argue that humans had historically divided their long nights into two distinct sleep periods. When darkness fell, they would drift into “first sleep,” waking after four hours to snack, relieve themselves, have sex, or chat by the fire, before heading back for another four hours of “second sleep.” The lighting of the nineteenth century disrupted this ancient rhythm, by opening up a whole array of modern activities that could be performed after sunset: everything from theaters and restaurants to factory labor. Ekirch documents the way the ideal of a single, eight-hour block of continuous sleep was constructed by nineteenth-century customs, an adaptation to a dramatic change in the lighting environment of human settlements. Like all adaptations, its benefits carried inevitable costs: the middle-of-the-night insomnia that plagues millions of people around the world is not, technically speaking, a disorder, but rather the body’s natural sleep rhythms asserting themselves over the prescriptions of nineteenth-century convention. Those waking moments at three a.m. are a kind of jet lag caused by artificial light instead of air travel.

The flicker of the tallow candle had not been strong enough to transform our sleep patterns. To make a cultural change that momentous, you needed the steady bright glow of nineteenth-century lighting. By the end of the century, that light would come from the burning filaments of the electric lightbulb. But the first great advance in the century of light came from a source that seems macabre to us today: the skull of a fifty-ton marine mammal.

—

IT’S A STORY THAT BEGINS with a storm. Legend has it that sometime around 1712 a powerful nor’easter off the coast of Nantucket blew a ship captain named Hussey far out to sea. In the deep waters of the North Atlantic, he encountered one of Mother Nature’s most bizarre and intimidating creations: the sperm whale.

Hussey managed to harpoon the beast—though some skeptics think it simply washed ashore in the storm. Either way, when locals dissected the giant mammal, they discovered something utterly bizarre: inside the creature’s massive head, they found a cavity above the brain, filled with a white, oily substance. Thanks to its resemblance to seminal fluid, the whale oil came to be called “spermaceti.”

To this day, scientists are not entirely sure why sperm whales produce spermaceti in such vast quantities. (A mature sperm whale holds as much as five hundred gallons inside its skull.) Some believe the whales use the spermaceti for buoyancy; others believe it helps with the mammal’s echolocation system. New Englanders, however, quickly discovered another use for spermaceti: candles made from the substance produce a much stronger, whiter light than tallow candles, without the offensive smoke. By the second half of the eighteenth century, spermaceti candles had become the most prized form of artificial light in America and Europe.

In a 1751 letter, Ben Franklin described how much he enjoyed the way the new candles “afford a clear white Light; may be held in the Hand, even in hot Weather, without softening; that their Drops do not make Grease Spots like those from common Candles; that they last much longer, and need little or no Snuffing.” Spermaceti light quickly became an expensive habit for the well-to-do. George Washington estimated that he spent the equivalent of $15,000 a year in today’s currency burning spermaceti candles. The candle business became so lucrative that a group of manufacturers formed an organization called the United Company of Spermaceti Chandlers, conventionally known as the “Spermaceti Trust,” designed to keep competitors out of the business and force the whalers to keep their prices in check.

Despite the candle-making monopoly, significant economic rewards awaited anyone who managed to harpoon a sperm whale. The artificial light of the spermaceti candle triggered an explosion in the whaling industry, building out the beautiful seaside towns of Nantucket and Edgartown. But as elegant as these streets seem today, whaling was a dangerous and repulsive business. Thousands of lives were lost at sea chasing these majestic creatures, including from the notorious sinking of the Essex, which ultimately inspired Herman Melville’s masterpiece, Moby-Dick.

Extracting the spermaceti was almost as difficult as harpooning the whale itself. A hole would be carved in the side of the whale’s head, and men would crawl into the cavity above the brain—spending days inside the rotting carcass, scraping spermaceti out of the brain of the beast. It’s remarkable to think that only two hundred years ago, this was the reality of artificial light: if your great-great-great-grandfather wanted to read his book after dark, some poor soul had to crawl around in a whale’s head for an afternoon.

Spermaceti whale of the Southern Ocean. Hand-colored engraving from The Naturalist’s Library, Mammalia, Vol. 12, 1833–1843, by Sir William Jardine.

Somewhere on the order of three hundred thousand sperm whales were slaughtered in just a little more than a century. It is likely that the entire population would have been killed off had we not found a new source of oil for artificial light in the ground, introducing petroleum-based solutions such as the kerosene lamp and the gaslight. This is one of the stranger twists in the history of extinction: because humans discovered deposits of ancient plants buried deep below the surface of the earth, one of the ocean’s most extraordinary creatures was spared.

—

FOSSIL FUELS WOULD BECOME CENTRAL to almost all aspects of twentieth-century life, but their first commercial use revolved around light. These new lamps were twenty times brighter than any candle had ever been, and their superior brightness helped spark an explosion in magazine and newspaper publishing in the second half of the nineteenth century, as the dark hours after work became increasingly compatible with reading. But they also sparked literal explosions: thousands of people died each year in the fiery eruption of a reading light.

Despite these advances, artificial light remained extremely expensive by modern standards. In today’s society, light is comparatively cheap and abundant; 150 years ago, reading after dark was a luxury. The steady march of artificial light since then, from a rare and feeble technology to a ubiquitous and powerful one, gives us one map for the path of progress over that period. In the late 1990s, the Yale economist William D. Nordhaus published an ingenious study that charted that path in extraordinary detail, analyzing the true costs of artificial light over thousands of years of innovation.

When economic historians try to gauge the overall health of economies over time, average wages are usually one of the first places they start. Are people today making more money than they did in 1850? Of course, inflation makes those comparisons tricky: someone making ten dollars a day was upper-middle-class in nineteenth-century dollars. That’s why we have inflation tables that help us understand that ten dollars then is worth $160 in today’s currency. But inflation covers only part of the story. “During periods of major technological change,” Nordhaus argued, “the construction of accurate price indexes that capture the impact of new technologies on living standards is beyond the practical capability of official statistical agencies. The essential difficulty arises for the obvious but usually overlooked reason that most of the goods we consume today were not produced a century ago.” Even if you had $160 in 1850, you couldn’t buy a wax phonograph, not to mention an iPod. Economists and historians needed to factor not only the general value of a currency, but also some sense of what that currency could buy.

This is where Nordhaus proposed using the history of artificial light to illuminate the true purchasing power of wages over the centuries. The vehicles of artificial light vary dramatically over the years: from candles to LEDs. But the light they produce is a constant, a kind of anchor in the storm of rapid technological innovation. So Nordhaus proposed as his unit of measure the cost of producing one thousand “lumen-hours” of artificial light.

A tallow candle in 1800 would cost roughly forty cents per thousand lumen-hours. A fluorescent bulb in 1992, when Nordhaus originally compiled his research, cost a tenth of a cent for the same amount of light. That’s a four-hundred-fold increase in efficiency. But the story is even more dramatic when you compare those costs to the average wages from the period. If you worked for an hour at the average wage of 1800, you could buy yourself ten minutes of artificial light. With kerosene in 1880, the same hour of work would give you three hours of reading at night. Today, you can buy three hundred days of artificial light with an hour of wages.
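The arithmetic behind these comparisons can be checked with a short sketch. It uses only the figures quoted above; the variable names are illustrative, and reading "three hundred days" as three hundred full 24-hour days is an assumption, not something the text specifies.

```python
from fractions import Fraction

# Prices quoted in the text, in cents per thousand lumen-hours.
tallow_1800_cents = Fraction(40)
fluorescent_1992_cents = Fraction(1, 10)

# How many times cheaper the same amount of light became.
price_ratio = tallow_1800_cents / fluorescent_1992_cents

# Hours of light bought by one hour of average wages, as quoted in the text.
# Assumption: "three hundred days" means 300 full 24-hour days.
light_hours_per_wage_hour = {
    "tallow candle, 1800": Fraction(10, 60),  # ten minutes
    "kerosene, 1880": Fraction(3),            # three hours
    "today": Fraction(300 * 24),              # three hundred days
}

# Gain in light per hour of work, relative to the 1800 tallow candle.
baseline = light_hours_per_wage_hour["tallow candle, 1800"]
wage_gain = {
    era: hours / baseline for era, hours in light_hours_per_wage_hour.items()
}

print(f"Price per thousand lumen-hours fell {price_ratio}-fold")
for era, gain in wage_gain.items():
    print(f"{era}: {gain}x the light per hour of work")
```

Under that reading, the price of light fell four-hundred-fold, while the light bought by an hour of work rose eighteen-fold by 1880 and more than forty-thousand-fold by today. The gap between those two numbers is the part of the story that wages add to prices.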

Something extraordinary obviously happened between the days of tallow candles or kerosene lamps and today’s illuminated wonderland. That something was the electric lightbulb.

—

THE STRANGE THING about the electric lightbulb is that it has come to be synonymous with the “genius” theory of innovation—the single inventor inventing a single thing, in a moment of sudden inspiration—while the true story behind its creation actually makes the case for a completely different explanatory framework: the network/systems model of innovation. Yes, the lightbulb marked a threshold in the history of innovation, but for entirely different reasons. It would be pushing things to claim that the lightbulb was crowdsourced, but it is even more of a distortion to claim that a single man named Thomas Edison invented it.

The canonical story goes something like this: after a triumphant start to his career inventing the phonograph and the stock ticker, a thirty-one-year-old Edison takes a few months off to tour the American West—perhaps not coincidentally, a region that was significantly darker at night than the gaslit streets of New York and New Jersey. Two days after returning to his lab in Menlo Park, in August 1878, he draws three diagrams in his notebook and titles them “Electric Light.” By 1879, he files a patent application for an “electric lamp” that displays all the main characteristics of the lightbulb we know today. By the end of 1882, Edison’s company is powering electric light for the entire Pearl Street district in Lower Manhattan.

It’s a thrilling story of invention: the young wizard of Menlo Park has a flash of inspiration, and within a few years his idea is lighting up the world. The problem with this story is that people had been inventing incandescent light for eighty years before Edison turned his mind to it. A lightbulb involves three fundamental elements: some kind of filament that glows when an electric current runs through it, some mechanism to keep the filament from burning out too quickly, and a means of supplying electric power to start the reaction in the first place. In 1802, the British chemist Humphry Davy had attached a platinum filament to an early electric battery, causing it to burn brightly for a few minutes. By the 1840s, dozens of separate inventors were working on variations of the lightbulb. The first patent was issued in 1841 to an Englishman named Frederick de Moleyns. The historian Arthur A. Bright compiled a list of the lightbulb’s partial inventors, leading up to Edison’s ultimate triumph in the late 1870s.

At least half of the men on Bright’s list had hit upon the basic formula that Edison ultimately arrived at: a carbon filament, suspended in a vacuum to prevent oxidation, thus keeping the filament from burning up too quickly. In fact, when Edison finally began tinkering with electric light, he spent months working on a feedback system for regulating the flow of electricity to prevent melting, before finally abandoning that approach in favor of the vacuum—despite the fact that nearly half of his predecessors had already embraced the vacuum as the best environment for a sustained glow. The lightbulb was the kind of innovation that comes together over decades, in pieces. There was no lightbulb moment in the story of the lightbulb. By the time Edison flipped the switch at the Pearl Street station, a handful of other firms were already selling their own models of incandescent electric lamps. The British inventor Joseph Swan had begun lighting homes and theaters a year earlier. Edison invented the lightbulb the way Steve Jobs invented the MP3 player: he wasn’t the first, but he was the first to make something that took off in the marketplace.

So why does Edison get all the credit? It’s tempting to use the same backhanded compliment that many leveled against Steve Jobs: that he was a master of marketing and PR. It is true that Edison had a very tight relationship with the press at this point of his career. (On at least one occasion, he gave shares in his company to a journalist in exchange for better coverage.) Edison was also a master of what we would now call “vaporware”: He announced nonexistent products to scare off competitors. Just a few months after he had started work on electric light, he began telling reporters from New York papers that the problem had been solved, and that he was on the verge of launching a national system of magical electrical light. A system so simple, he said, “that a bootblack might understand it.”

Despite this bravado, the fact remained that the finest specimen of electric light in the Edison lab couldn’t last five minutes. But that didn’t stop him from inviting the press out to the Menlo Park lab to see his revolutionary lightbulb. Edison would bring each reporter in one at a time, flick the switch on a bulb, and let the reporter enjoy the light for three or four minutes before ushering him from the room. When asked how long his lightbulbs would last, he answered confidently: “Forever, almost.”

But for all this bluffing, Edison and his team did manage to ship a revolutionary and magical product, as the Apple marketing might have called the Edison lightbulb. Publicity and marketing will only get you so far. By 1882, Edison had produced a lightbulb that decisively outperformed its competitors, just as the iPod outperformed its MP3-player rivals in its early years.

In part, Edison’s “invention” of the lightbulb was less about a single big idea and more about sweating the details. (His famous quip about invention being one percent inspiration and ninety-nine percent perspiration certainly holds true for his adventures in artificial light.) Edison’s single most significant contribution to the electric lightbulb itself was arguably the carbonized bamboo filament he eventually settled on. Edison wasted at least a year trying to make platinum work as a filament, but it was too expensive and prone to melting. Once he abandoned platinum, Edison and his team tore through a veritable botanic garden of different materials: “celluloid, wood shavings (from boxwood, spruce, hickory, baywood, cedar, rosewood, and maple), punk, cork, flax, coconut hair and shell, and a variety of papers.” After a year of experimentation, bamboo emerged as the most durable substance, which set off one of the strangest chapters in the history of global commerce. Edison dispatched a series of Menlo Park emissaries to scour the globe for the most incandescent bamboo in the natural world. One representative paddled down two thousand miles of river in Brazil. Another headed to Cuba, where he was promptly struck down with yellow fever and died. A third representative named William Moore ventured to China and Japan, where he struck a deal with a local farmer for the strongest bamboo the Menlo Park wizards had encountered. The arrangement remained intact for many years, supplying the filaments that would illuminate rooms all over the world. Edison may not have invented the lightbulb, but he did inaugurate a tradition that would turn out to be vital to modern innovation: American electronics companies importing their component parts from Asia. The only difference is that, in Edison’s time, the Asian factory was a forest.

The other key ingredient to Edison’s success lay in the team he had assembled around him in Menlo Park, memorably known as the “muckers.” The muckers were strikingly diverse both in terms of professional expertise and nationality: the British mechanic Charles Batchelor, the Swiss machinist John Kruesi, the physicist and mathematician Francis Upton, and a dozen or so other draftsmen, chemists, and metalworkers. Because the Edison lightbulb was not so much a single invention as a bricolage of small improvements, the diversity of the team turned out to be an essential advantage for Edison. Solving the problem of the filament, for instance, required a scientific understanding of electrical resistance and oxidation that Upton provided, complementing Edison’s more untutored, intuitive style; and it was Batchelor’s mechanical improvisations that enabled them to test so many different candidates for the filament itself. Menlo Park marked the beginning of an organizational form that would come to prominence in the twentieth century: the cross-disciplinary research-and-development lab. In this sense, the transformative ideas and technologies that came out of places such as Bell Labs and Xerox-PARC have their roots in Edison’s workshop. Edison didn’t just invent technology; he invented an entire system for inventing, a system that would come to dominate twentieth-century industry.

Edison also helped inaugurate another tradition that would become vital to contemporary high-tech innovation: paying his employees in equity rather than just cash. In 1879, in the middle of the most frenetic research into the lightbulb, Edison offered Francis Upton stock worth 5 percent of the Edison Electric Light Company—though Upton would have to forswear his salary of $600 a year. Upton struggled over the decision, but ultimately decided to take the stock grant, over the objections of his more fiscally conservative father. By the end of the year, the ballooning value of Edison stock meant that his holdings were already worth $10,000, more than a million dollars in today’s currency. Not entirely graciously, Upton wrote to his father, “I cannot help laughing when I think how timid you were at home.”

By any measure, Edison was a true genius, a towering figure in nineteenth-century innovation. But as the story of the lightbulb makes clear, we have historically misunderstood that genius. His greatest achievement may have been the way he figured out how to make teams creative: assembling diverse skills in a work environment that valued experimentation and accepted failure, incentivizing the group with financial rewards that were aligned with the overall success of the organization, and building on ideas that originated elsewhere. “I am not overly impressed by the great names and reputations of those who might be trying to beat me to an invention. . . . It’s their ‘ideas’ that appeal to me,” Edison famously said. “I am quite correctly described as ‘more of a sponge than an inventor.’”

Early Edison carbon filament lamp, 1897

The lightbulb was the product of networked innovation, and so it is probably fitting that the reality of electric light ultimately turned out to be more of a network or system than a single entity. The true victory lap for Edison didn’t come with that bamboo filament glowing in a vacuum; it came with the lighting of the Pearl Street district two years later. To make that happen, you needed to invent lightbulbs, yes, but you also needed a reliable source of electric current, a system for distributing that current through a neighborhood, a mechanism for connecting individual lightbulbs to the grid, and a meter to gauge how much electricity each household was using. A lightbulb on its own is a curiosity piece, something to dazzle reporters with. What Edison and the muckers created was much bigger than that: a network of multiple innovations, all linked together to make the magic of electric light safe and affordable.

New York: Adapting the Brush Electric Light to the Illumination of the Streets, a Scene Near the Fifth Avenue Hotel.

Why should we care whether Edison invented the lightbulb as a lone genius or as part of a wider network? For starters, if the invention of the lightbulb is going to be a canonical story of how new technologies come into being, we might as well tell an accurate story. But it’s more than just a matter of getting the facts right, because there are social and political implications to these kinds of stories. We know that one key driver of progress and standards of living is technological innovation. We know that we want to encourage the trends that took us from ten minutes of artificial light on one hour’s wage to three hundred days. If we think that innovation comes from a lone genius inventing a new technology from scratch, that model naturally steers us toward certain policy decisions, like stronger patent protection. But if we think that innovation comes out of collaborative networks, then we want to support different policies and organizational forms: less rigid patent laws, open standards, employee participation in stock plans, cross-disciplinary connections. The lightbulb shines light on more than just our bedside reading; it helps us see more clearly the way new ideas come into being, and how to cultivate them as a society.

Artificial light turns out to have an even deeper connection to political values. Just six years after Edison lit the Pearl Street district, another maverick would push the envelope of light in a new direction, while walking the streets just a few blocks north of Edison’s illuminated wonderland. The muckers might have invented the system of electric light, but the next breakthrough in artificial light would come from a muckraker.

—

BURIED DEEP NEAR THE CENTER of the Great Pyramid of Giza lies a granite-faced cavity known as “the King’s Chamber.” The room contains only one object: an open rectangular box, sometimes called a “coffer,” carved out of red Aswan granite, chipped on one corner. The chamber’s name derives from the assumption that the coffer had been a sarcophagus that once contained the body of Khufu, the pharaoh who built the pyramid more than four thousand years ago. But a long line of maverick Egyptologists have suggested that the coffer had other uses. One still-circulating theory notes that the coffer possesses the exact dimensions that the Bible attributes to the original Ark of the Covenant, suggesting to some that the coffer once housed the legendary Ark itself.

In the fall of 1861, a visitor came to the King’s Chamber in the throes of an equally outlandish theory, this one revolving around a different Old Testament ark. The visitor was Charles Piazzi Smyth, who for the preceding fifteen years had served as the Royal Astronomer of Scotland, though he was a classic Victorian polymath with dozens of eclectic interests. Smyth had recently read a bizarre tome that contended that the pyramids had been originally built by the biblical Noah. Long an armchair Egyptologist, Smyth had grown so obsessed with the theory that he left his armchair in Edinburgh and headed off to Giza to do his own investigations firsthand. His detective work would ultimately lead to a bizarre stew of numerology and ancient history, published in a series of books and pamphlets over the coming years. Smyth’s detailed analyses of the pyramid’s structure convinced him that the builders had relied on a unit of measurement that was almost exactly equivalent to the modern British inch. Smyth interpreted this correspondence to be a sign that the inch itself was a holy measure, passed directly from God to Noah himself. This in turn gave Smyth the artillery he needed to attack the metric system that had begun creeping across the English Channel. The revelation of the Egyptian inch made it clear that the metric system was not just a symptom of malevolent French influence. It was also a betrayal of divine will.

Smyth’s scientific discoveries in the Great Pyramid may not have stood the test of time, or even kept Britain from going metric. Yet he still managed to make history in the King’s Chamber. Smyth brought the bulky and fragile tools of wet-plate photography (then state of the art) to Giza to document his findings. But the collodion-treated glass plates couldn’t capture a legible image in the King’s Chamber, even when the room was lit by torchlight. Photographers had tinkered with artificial lighting since the first daguerreotypes were printed in the 1830s, but almost all the solutions to date had produced unsatisfactory results. (Candles and gaslight were useless, obviously.) Early experiments heated a ball of quicklime (calcium oxide)—the “limelight” that would illuminate theater productions until the dawn of electric light—but limelit photographs suffered from harsh contrasts and ghostly white faces.

The failed experiments with artificial lighting meant that by the time Smyth set up his gear in the King’s Chamber, more than thirty years after the invention of the daguerreotype, the art of photography was still entirely dependent on natural sunlight, a resource that was not exactly abundant in the inner core of a massive pyramid. But Smyth had heard of recent experiments using wire made of magnesium—photographers who twisted the wire into a bow and set it ablaze before capturing their low-light image. The technique was promising, but the light was unstable and generated an unpleasant amount of dense fumes. Burning magnesium wire in a closed environment had a tendency to make ordinary portraits look as though they were composed in dense fog.

Smyth realized that what he needed in the King’s Chamber was something closer to a flash than a slow burn. And so—for the first time in history, as far as we know—he mixed magnesium with ordinary gunpowder, creating a controlled mini-explosion that illuminated the walls of the King’s Chamber for a split second, allowing him to record its secrets on his glass plates. Today, the tourists who pass through the Great Pyramid encounter signs that forbid the use of flash photography inside the vast structure. The signs do not mention that the Great Pyramid also marks the site where flash photography was invented.

Or at least, one of the sites where flash photography was invented. Just as with Edison’s lightbulb, the true story of flash photography’s origins is a more complicated, more networked affair. Big ideas coalesce out of smaller, incremental breakthroughs. Smyth may have been the first to conceive of the idea of combining magnesium with an oxygen-rich, combustible element, but flash photography itself didn’t become a mainstream practice for another two decades, when two German scientists, Adolf Miethe and Johannes Gaedicke, mixed fine magnesium powder with potassium chlorate, creating a much more stable concoction that allowed high-shutter-speed photographs in low-light conditions. They called it Blitzlicht—literally, “flash light.”

Word of Miethe and Gaedicke’s invention soon trickled out of Germany. In October 1887, a New York paper ran a four-line dispatch about Blitzlicht. It was hardly a front-page story; the vast majority of New Yorkers ignored it altogether. But the idea of flash photography set off a chain of associations in the mind of one reader—a police reporter and amateur photographer who stumbled across the article while having breakfast with his wife in Brooklyn. His name was Jacob Riis.

Charles Piazzi Smyth

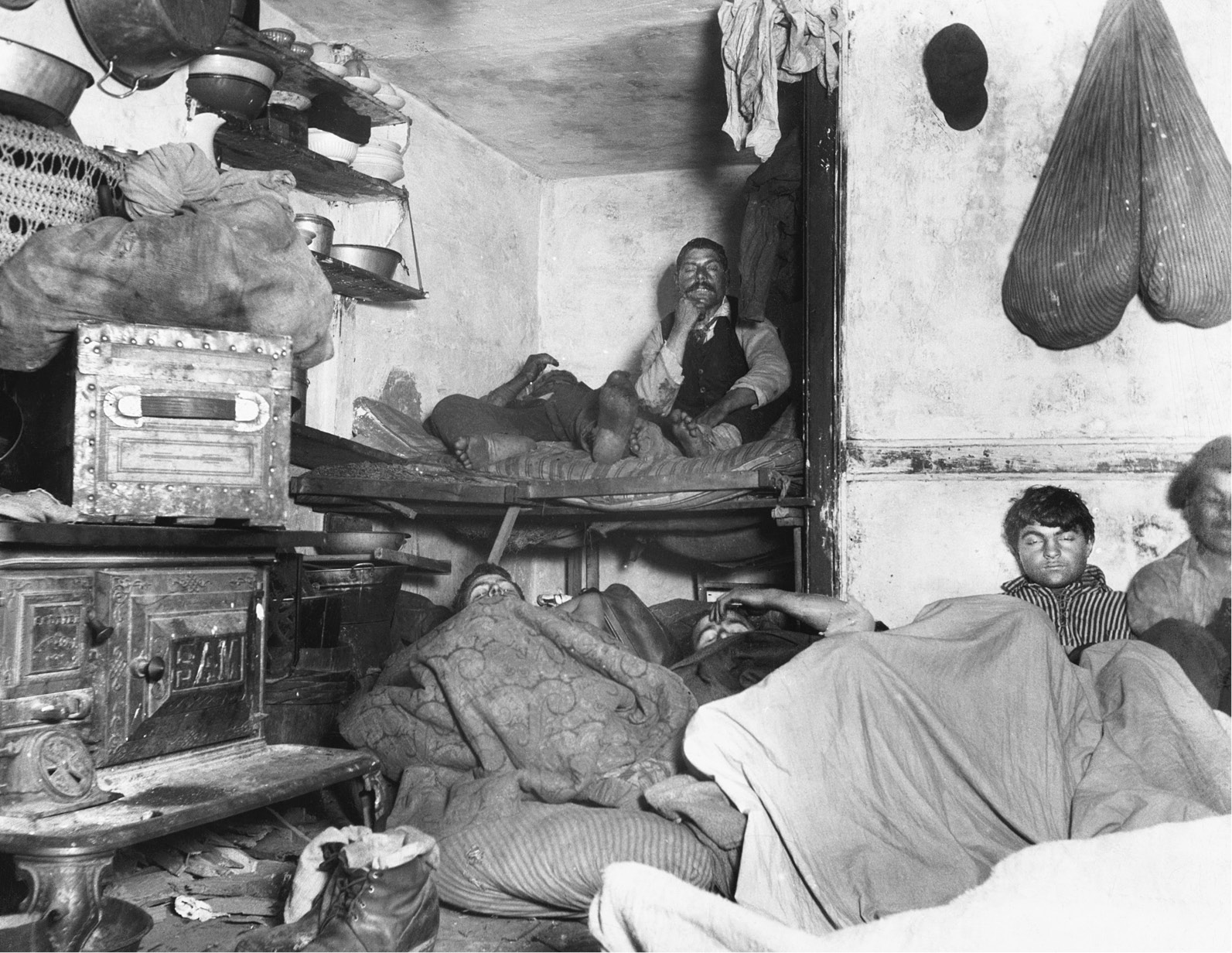

Then a thirty-eight-year-old Danish immigrant, Riis would ultimately enter the history books as one of the original muckrakers of the late nineteenth century, the man who did more to expose the squalor of tenement life—and inspire a progressive reform movement—than any other figure of the era. But until that breakfast in 1887, Riis’s attempts to shine light on the appalling conditions in the slums of Manhattan had failed to change public opinion in any meaningful way. A close confidant of then police commissioner Teddy Roosevelt, Riis had been exploring the depths of Five Points and other Manhattan hovels for years. With over half a million people living in only fifteen thousand tenements, sections of Manhattan were the most densely populated places on the planet. Riis was fond of taking late-night walks through the bleak alleyways on his way back home to Brooklyn from the police headquarters on Mulberry Street. “We used to go in the small hours of the morning,” he later recalled, “into the worst tenements to count noses and see if the law against overcrowding was violated, and the sights I saw there gripped my heart until I felt that I must tell of them, or burst, or turn anarchist, or something.”

Appalled by what he had discovered on his expeditions, Riis began writing about the mass tragedy of the tenements for local papers and national magazines such as Scribner’s and Harper’s Weekly. His written accounts of the shame of the cities belonged to a long tradition, dating back at least to Dickens’s horrified visit to New York in 1840. A number of exhaustive surveys of tenement depravity had been published over the years, with titles like “The Report of the Council of Hygiene and Public Health.” An entire genre of “sunshine and shadow” guidebooks to Five Points and its ilk flourished after the Civil War, offering curious visitors tips on exploring the seedy underbelly of big-city life, or at least exploring it vicariously from the safety of a small-town oasis. (The phrase “slumming it” originates with these tourist expeditions.) But despite stylistic differences, these texts shared one attribute: they had almost no effect on improving the actual living conditions of those slum dwellers.

Riis had long suspected that the problem with tenement reform—and urban poverty initiatives generally—was ultimately a problem of imagination. Unless you walked through the streets of Five Points after midnight, or descended into the dark recesses of interior apartments populated by multiple families at a time, you simply couldn’t imagine the conditions; they were too far removed from the day-to-day experience of most Americans, or at least most voting Americans. And so the political mandate to clean up the cities never quite amassed enough support to overcome the barriers of remote indifference.

Like other chroniclers of urban blight before him, Riis had experimented with illustrations that dramatized the devastating human cost of the tenements. But the line drawings invariably aestheticized the suffering; even the bleakest underground hovel looked almost quaint as an etching. Only photographs seemed to capture the reality with sufficient resolution to change hearts, but whenever Riis experimented with photography, he ran into the same impasse. Almost everything he wanted to photograph involved environments with minimal amounts of light. Indeed, the lack of even indirect sunlight in so many of the tenement flats was part of what made them so objectionable. This was Riis’s great stumbling block: as far as photography was concerned, the most important environments in the city—in fact, some of the most important new living quarters in the world—were literally invisible. They couldn’t be seen.

All of which should explain Jacob Riis’s epiphany at the breakfast table in 1887. Why trifle with line drawings when Blitzlicht could shine light in the darkness?

Within two weeks of that breakfast discovery, Riis assembled a team of amateur photographers (and a few curious police officers) to set off into the bowels of the darkened city—literally armed with Blitzlicht. (The flash was produced by firing a cartridge of the substance from a revolver.) More than a few denizens of Five Points found the shooting party hard to comprehend. As Riis would later put it: “The spectacle of half a dozen strange men invading a house in the midnight hour armed with big pistols which they shot off recklessly was hardly reassuring, however sugary our speech, and it was not to be wondered at if the tenants bolted through windows and down fire-escapes wherever we went.”

Before long, Riis replaced the revolver with a frying pan. The apparatus seemed more “home-like,” he claimed, and made his subjects feel more comfortable encountering the baffling new technology. (The simple act of being photographed was novelty enough for most of them.) It was still dangerous work; one small explosion in the frying pan nearly blinded Riis, and twice he set fire to his house while experimenting with the flash. But the images that emerged from those urban expeditions would ultimately change history. Using new halftone printing techniques, Riis published the photographs in his runaway bestseller, How the Other Half Lives, and traveled across the country giving lectures that were accompanied by magic-lantern images of Five Points and its previously invisible poverty. The convention of gathering together in a darkened room and watching illuminated images on a screen would become a ritual of fantasy and wish fulfillment in the twentieth century. But for many Americans, the first images they saw in those environments were ones of squalor and human suffering.

Riis’s books and lectures—and the riveting images they contained—helped create a massive shift in public opinion, and set the stage for one of the great periods of social reform in American history. Within a decade of their publication, Riis’s images built support for the New York State Tenement House Act of 1901, one of the first great reforms of the Progressive Era, which eliminated many of the appalling living conditions that Riis had documented. His work ignited a new tradition of muckraking that would ultimately improve the working conditions of factory floors as well. In a literal sense, illuminating the dark squalor of the tenements changed the map of urban centers around the world.

New York City: A shelter for immigrants in a Bayard Street tenement. Photo taken by Jacob Riis, 1888.

Here again we see the strange leaps of the hummingbird’s wing at play in social history, new inventions leading to consequences their creators never dreamed of. The utility of mixing magnesium and potassium chlorate seems straightforward enough: Blitzlicht meant that human beings could record images in dark environments more accurately than ever before. But that new capability also expanded the space of possibility for other ways of seeing. This is what Riis understood almost immediately. If you could see in the dark, if you could share that vision with strangers around the world thanks to the magic of photography, then the underworld of Five Points could, at long last, be seen in all its tragic reality. The dry, statistical accounts of “The Report of the Council of Hygiene and Public Health” would be replaced with actual human beings sharing physical space of devastating squalor.

The network of minds that invented flash photography—from the first tinkerers with limelight to Smyth to Miethe and Gaedicke—had deliberately set out with a clearly defined goal: to build a tool that would allow photographs to be taken in darkness. But like almost every important innovation in human history, that breakthrough created a platform that allowed other innovations in radically different fields. We like to organize the world into neat categories: photography goes here, politics there. But the history of Blitzlicht reminds us that ideas always travel in networks. They come into being through networks of collaboration, and once unleashed on the world, they set into motion changes that are rarely confined to single disciplines. One century’s attempt to invent flash photography transformed the lives of millions of city dwellers in the next century.

Riis’s vision should also serve as a corrective to the excesses of crude techno-determinism. It was virtually inevitable that someone would invent flash photography in the nineteenth century. (The simple fact that it was invented multiple times shows us that the time was ripe for the idea.) But there was nothing intrinsic to the technology that suggested it be used to illuminate the lives of the very people who could least afford to enjoy it. You could have reasonably predicted that the problem of photographing in low light would be “solved” by 1900. But no one would have predicted that its very first mainstream use would come in the form of a crusade against urban poverty. That twist belongs to Riis alone. The march of technology expands the space of possibility around us, but how we explore that space is up to us.

—

IN THE FALL OF 1968, the sixteen members of a graduate studio at the Yale School of Art and Architecture—three faculty and thirteen students—set off on a ten-day expedition to study urban design in the streets of an actual city. This in itself was nothing new: architecture students had been touring the ruins and the monuments of Rome or Paris or Brasília for as long as there have been architecture students. What made this group unusual is that they were leaving behind the Gothic charm of New Haven for a very different kind of city, one that happened to be growing faster than any of the old relics: Las Vegas. It was a city that looked nothing like the dense, concentrated tenements of Riis’s Manhattan. But like Riis, the Yale studio sensed that something new and significant was happening on the Vegas strip. Led by Robert Venturi and Denise Scott Brown, the husband-and-wife team who would become founders of postmodern architecture, the Yale studio had been drawn to the desert frontier by the novelty of Vegas, by the shock value they could elicit by taking it seriously, and by the sense that they were watching the future being born. But as much as anything, they had come to Vegas to see a new kind of light. They were drawn, postmodern moths to the flame, to neon.

1960s night scene in downtown Las Vegas, Nevada

While neon is technically considered one of the “rare gases,” it is actually ubiquitous in the earth’s atmosphere, just in very small quantities. Each time you take a breath, you are inhaling a tiny amount of neon, mixed in with all the nitrogen and oxygen that saturate breathable air. In the first years of the twentieth century, a French scientist named Georges Claude created a system for liquefying air, which enabled the production of large quantities of liquid nitrogen and oxygen. Processing these elements at industrial scale created an intriguing waste product: neon. Even though neon appears as only one part per 66,000 in ordinary air, Claude’s apparatus could produce one hundred liters of neon in a day’s work.

With so much neon lying around, Claude decided to see if it was good for anything, and so in proper mad-scientist fashion, he isolated the gas and passed an electrical current through it. Exposed to an electric charge, the gas glowed a vivid shade of red. (The technical term for this process is ionization.) Further experiments revealed that other rare gases such as argon and mercury vapor would produce different colors when electrified, and they were more than five times brighter than conventional incandescent light. Claude quickly patented his neon lights, and set up a display showcasing the invention in front of the Grand Palais in Paris. When demand surged for his product, he established a franchise business for his innovation, not unlike the model employed by McDonald’s and Kentucky Fried Chicken years later, and neon lights began to spread across the urban landscapes of Europe and the United States.

In the early 1920s, the electric glow of neon found its way to Tom Young, a British immigrant living in Utah who had started a small business hand-lettering signs. Young recognized that neon could be used for more than just colored light; with the gas enclosed in glass tubes, neon signs could spell out words much more easily than collections of lightbulbs. Licensing Claude’s invention, he set up a new business covering the American Southwest. Young realized that the soon-to-be-completed Hoover Dam would bring a vast new source of electricity to the desert, providing a current that could ionize an entire city of neon lights. He formed a new venture, the Young Electric Sign Company, or YESCO. Before long, he found himself building a sign for a new casino and hotel, The Boulders, that was opening in an obscure Nevada town named Las Vegas.

It was a chance collision—a new technology from France finding its way to a sign letterer in Utah—that would create one of the most iconic of twentieth-century urban experiences. Neon advertisements would become a defining feature of big-city centers around the world—think Times Square or Tokyo’s Shibuya Crossing. But no city embraced neon with the same unchecked enthusiasm that Las Vegas did, and most of those neon extravaganzas were designed, erected, and maintained by YESCO. “Las Vegas is the only city in the world whose skyline is made not of buildings . . . but signs,” Tom Wolfe wrote in the middle of the 1960s. “One can look at Las Vegas from a mile away on route 91 and see no buildings, no trees, only signs. But such signs! They tower. They revolve, they oscillate, they soar in shapes before which the existing vocabulary of art history is helpless.”

It was precisely that helplessness that brought Venturi and Brown to Vegas with their retinue of architecture students in the fall of 1968. Brown and Venturi had sensed that there was a new visual language emerging in that glittering desert oasis, one that didn’t fit well with the existing languages of modernist design. To begin with, Vegas had oriented itself around the vantage point of the automobile driver, cruising down Fremont Street or the strip: shop windows and sidewalk displays had given way to sixty-foot neon cowboys. The geometric seriousness of the Seagram Building or Brasília had given way to a playful anarchy: the Wild West of the gold rush thrust up against Olde English feudal designs, sitting next to cartoon arabesques, fronted by an endless stream of wedding chapels. “Allusion and comment, on the past or present or on our great commonplaces or old clichés, and inclusion of the everyday in the environment, sacred and profane—these are what are lacking in present-day Modern architecture,” Brown and Venturi wrote. “We can learn about them from Las Vegas as have other artists from their own profane and stylistic sources.”

That language of allusion and comment and cliché was written in neon. Brown and Venturi went so far as to map every single illuminated word visible on Fremont Street. “In the seventeenth century,” they wrote, “Rubens created a painting ‘factory’ wherein different workers specialized in drapery, foliage, or nudes. In Las Vegas, there is just such a sign ‘factory,’ the Young Electric Sign Company.” Until then, the symbolic frenzy of Vegas had belonged purely to the world of lowbrow commerce: garish signs pointing the way to gambling dens, or worse. But Brown and Venturi had seen something more interesting in all that detritus. As Georges Claude had experienced more than sixty years before, one person’s waste is another one’s treasure.

Think about these different strands: the atoms of a rare gas, unnoticed until 1898; a scientist and engineer tinkering with the waste product from his “liquid air”; an enterprising sign designer; and a city blooming implausibly in the desert. All these strands somehow converged to make Learning from Las Vegas—a book that architects and urban planners would study and debate for decades—even imaginable as an argument. No other book had as much influence on the postmodern style that would dominate art and architecture over the next two decades.

Learning from Las Vegas gives us a clear case study in how the long-zoom approach reveals elements that are ignored by history’s traditional explanatory frameworks: economic or art history, or the “lone genius” model of innovation. When you ask the question of why postmodernism came about as a movement, on some fundamental level the answer has to include Georges Claude and his hundred liters of neon. Claude’s innovation wasn’t the only cause, by any means, but, in an alternate universe somehow stripped of neon lights, the emergence of postmodern architecture would have in all likelihood followed a different path. The strange interaction between neon gas and electricity, the franchise model of licensing new technology—each served as part of the support structure that made it even possible to conceive of Learning from Las Vegas.

This might seem like yet another game of Six Degrees of Kevin Bacon: follow enough chains of causality and you can link postmodernism back to the building of the Great Wall of China or the extinction of the dinosaurs. But the neon-to-postmodernism connections are direct links: Claude creates neon light; Young brings it to Vegas, where Venturi and Brown decide to take its “revolving and oscillating” glow seriously for the first time. Yes, Venturi and Brown needed electricity, too, but just about everything needed electricity in the 1960s: the moon landing, the Velvet Underground, the “I Have a Dream” speech. By the same token, Venturi and Brown required the noble gases, too; the odds are pretty good that they needed oxygen to write Learning from Las Vegas. But it was the rare gas of neon that made their story unique.

—

IDEAS TRICKLE OUT OF SCIENCE, into the flow of commerce, where they drift into the less predictable eddies of art and philosophy. But sometimes they venture upstream: from aesthetic speculation into hard science. When H. G. Wells published his groundbreaking novel The War of the Worlds in 1898, he helped invent the genre of science fiction that would play such a prominent role in the popular imagination during the century that followed. But that book introduced a more specific item to the fledgling sci-fi canon: the “heat ray,” used by the invading Martians to destroy entire towns. “In some way,” Wells wrote of his technologically savvy aliens, “they are able to generate an intense heat in a chamber of practically absolute non-conductivity. This intense heat they project in a parallel beam against any object they choose, by means of a polished parabolic mirror of unknown composition, much as the parabolic mirror of a lighthouse projects a beam of light.”

The heat ray was one of those imagined concoctions that somehow get locked into the popular psyche. From Flash Gordon to Star Trek to Star Wars, weapons using concentrated beams of light became almost de rigueur in any sufficiently advanced future civilization. And yet, actual laser beams did not exist until the late 1950s, and didn’t become part of everyday life for another two decades after that. Not for the last time, the science-fiction authors were a step or two ahead of the scientists.

But the sci-fi crowd got one thing wrong, at least in the short term. There are no death rays, and the closest thing we have to Flash Gordon’s arsenal is laser tag. When lasers did finally enter our lives, they turned out to be lousy for weapons, but brilliant for something the sci-fi authors never imagined: figuring out the cost of a stick of chewing gum.

Like the lightbulb, the laser was not a single invention; instead, as the technology historian Jon Gertner puts it, “it was the result of a storm of inventions during the 1960s.” Its roots lie in research at Bell Labs and Hughes Aircraft and, most entertainingly, in the independent tinkering of physicist Gordon Gould, who memorably notarized his original design for the laser in a Manhattan candy store, and who went on to have a thirty-year legal battle over the laser patent (a battle he eventually won). A laser is a prodigiously concentrated beam, light’s normal chaos reduced down to a single, ordered frequency. “The laser is to ordinary light,” Bell Labs’ John Pierce once remarked, “as a broadcast signal is to static.”

Unlike the lightbulb, however, the early interest in the laser was not motivated by a clear vision of a consumer product. Researchers knew that the concentrated signal of the laser could be used to embed information more efficiently than could existing electrical wiring, but exactly how that bandwidth would be put to use was less evident. “When something as closely related to signaling and communication as this comes along,” Pierce explained at the time, “and something is new and little understood, and you have the people who can do something about it, you’d just better do it, and worry later just about the details of why you went into it.” Eventually, as we have already seen, laser technology would prove crucial to digital communications, thanks to its role in fiber optics. But the laser’s first critical application would appear at the checkout counter, with the emergence of bar-code scanners in the mid-1970s.

The idea of creating some kind of machine-readable code to identify products and prices had been floating around for nearly half a century. Inspired by the dashes and dots of Morse code, an inventor named Norman Joseph Woodland designed a visual code that resembled a bull’s-eye in the 1950s, but it required a five-hundred-watt bulb—almost ten times brighter than your average lightbulb—to read the code, and even then it wasn’t very accurate. Scanning a series of black-and-white symbols turned out to be the kind of job that lasers immediately excelled at, even in their infancy. By the early 1970s, just a few years after the first working lasers debuted, the modern system of bar codes—known as the Universal Product Code—emerged as the dominant standard. On June 26, 1974, a stick of chewing gum in a supermarket in Ohio became the first product in history to have its bar code scanned by a laser. The technology spread slowly: only one percent of stores had bar-code scanners as late as 1978. But today, almost everything you can buy has a bar code on it.

In 2012, an economics professor named Emek Basker published a paper that assessed the impact of bar-code scanning on the economy, documenting the spread of the technology through both mom-and-pop stores and big chains. Basker’s data confirmed the classic trade-offs of early adoption: most stores that integrated bar-code scanners in the early years didn’t see much benefit from them, since employees had to be trained to use the new technology, and many products didn’t have bar codes yet. Over time, however, the productivity gains became substantial, as bar codes became ubiquitous. But the most striking discovery in Basker’s research was this: The productivity gains from bar-code scanners were not evenly distributed. Big stores did much better than small stores.

There have always been inherent advantages to maintaining a large inventory of items in a store: the customer has more options to choose from, and items can be purchased in bulk from wholesalers for less money. But in the days before bar codes and other forms of computerized inventory-management tools, the benefits of housing a vast inventory were largely offset by the costs of keeping track of everything. If you kept a thousand items in stock instead of a hundred, you needed more people and time to figure out which sought-after items needed restocking and which were just sitting on the shelves taking up space. But bar codes and scanners greatly reduced the costs of maintaining a large inventory. The decades after the introduction of the bar-code scanner in the United States witnessed an explosion in the size of retail stores; with automated inventory management, chains were free to balloon into the epic big-box stores that now dominate retail shopping. Without bar-code scanning, the modern shopping landscape of Target and Best Buy and supermarkets the size of airport terminals would have had a much harder time coming into being. If there was a death ray in the history of the laser, it was the metaphoric one directed at the mom-and-pop, indie stores demolished by the big-box revolution.

—

WHILE THE EARLY SCI-FI FANS of War of the Worlds and Flash Gordon would be disappointed to see the mighty laser scanning packets of chewing gum—its brilliantly concentrated light harnessed for inventory management—their spirits would likely improve contemplating the National Ignition Facility, at the Lawrence Livermore Labs in Northern California, where scientists have built the world’s largest and highest-energy laser system. Artificial light began as simple illumination, helping us read and entertain ourselves after dark; before long it had been transformed into advertising and art and information. But at NIF, they are taking light full circle, using lasers to create a new source of energy based on nuclear fusion, re-creating the process that occurs naturally in the dense core of the sun, our original source of natural light.

Deep inside the NIF, near the “target chamber,” where the fusion takes place, a long hallway is decorated with what appears, at first glance, to be a series of identical Rothko paintings, each displaying eight large red squares the size of a dinner plate. There are 192 of them in total, each representing one of the lasers that simultaneously fire on a tiny bead of hydrogen in the ignition chamber. We are used to seeing lasers as a pinpoint of concentrated light, but at NIF, the lasers are more like cannonballs, almost two hundred of them summed together to create a beam of energy that would have made H. G. Wells proud.

The multibillion-dollar complex has all been engineered to execute discrete, microsecond-long events: firing the lasers at the hydrogen fuel while hundreds of sensors and high-speed cameras observe the activity. Inside the NIF, they refer to these events as “shots.” Each shot requires the meticulous orchestration of more than six hundred thousand controls. Each laser beam travels 1.5 kilometers guided by a series of lenses and mirrors, and combined they build in power until they reach 1.8 million joules of energy and five-hundred-trillion watts, all converging on a fuel source the size of a peppercorn. The lasers have to be positioned with a breathtaking accuracy, the equivalent of standing on the pitcher’s mound at AT&T Park in San Francisco and throwing a strike at Dodger Stadium in Los Angeles, some 350 miles away. Each microsecond pulse of light has, for its brief existence, a thousand times the amount of energy in America’s entire national grid.

When all of NIF’s energy slams into its millimeter-sized targets, unprecedented conditions are generated in the target materials—temperatures of more than a hundred million degrees, densities up to a hundred times the density of lead, and pressures more than a hundred billion times Earth’s atmospheric pressure. These conditions are similar to those inside stars, the cores of giant planets, and nuclear weapons—allowing NIF to create, in essence, a miniature star on Earth, fusing hydrogen atoms together and releasing a staggering amount of energy. For that fleeting moment, as the lasers compress the hydrogen, that fuel pellet is the hottest place in the solar system—hotter, even, than the core of the sun.

The goal of the NIF is not to create a death ray—or the ultimate bar-code scanner. The goal is to create a sustainable source of clean energy. In 2013, NIF announced that the device had for the first time generated net positive energy during several of its shots; by a slender margin, the fusion process required less energy than it created. It is still not enough to reproduce efficiently on a mass scale, but NIF scientists believe that with enough experimentation, they will eventually be able to use their lasers to compress the fuel pellet with almost perfect symmetry. At that point, we would have a potentially limitless source of energy to power all the lightbulbs and neon signs and bar-code scanners—not to mention computers and air-conditioners and electric cars—that modern life depends on.

Vaughn Draggoo inspects a huge target chamber at the National Ignition Facility in California, a future test site for light-induced nuclear fusion. Beams from 192 lasers will be aimed at a pellet of fusion fuel to produce a controlled thermonuclear blast (2001).

Those 192 lasers converging on the hydrogen pellet are a telling reminder of how far we have come in a remarkably short amount of time. Just two hundred years ago, the most advanced form of artificial light involved cutting up a whale on the deck of a boat in the middle of the ocean. Today we can use light to create an artificial sun on Earth, if only for a split second. No one knows if the NIF scientists will reach their goal of a clean, sustainable fusion-based energy source. Some might even see it as a fool’s errand, a glorified laser show that will never return more energy than it takes in. But setting off for a three-year voyage into the middle of the Pacific Ocean in search of eighty-foot sea mammals was every bit as crazy, and somehow that quest fueled our appetite for light for a century. Perhaps the visionaries at NIF—or another team of muckers somewhere in the world—will eventually do the same. One way or another, we are still chasing new light.