3.1 Introduction to Process Design

The design of micro production processes and systems faces significant challenges today, both economic and technological. In most industries, micro production is characterized by mass production, meaning very high lot sizes from several thousand (e.g. in medical technology) to literally billions (e.g. resistor end caps in the electronics industry). Moreover, such micro parts are often produced under severe cost pressure, with the market price for an individual part often being only a fraction of a € cent.

From an economic point of view, this means that the whole process chain has to be designed such that the output or production rate (e.g. measured in parts produced per minute) is maximized, whilst the defect rate (measured in ppm (parts per million) rejected) has to be minimized. From a technical point of view, these economic constraints are often very challenging, especially due to the specific characteristics of micro production with regard to materials, processes and products. Typical examples—in contrast to macro production—are a higher fragility of parts, scatter in and anisotropy of properties of the materials and parts produced, the highest tolerance requirements, and size effects.

In this context, and in order to deal with such challenges specific to micro production, an integrated “Micro-Process Planning and Analysis” (µ-ProPlAn) methodology and procedure with the required level of detail and complexity are suggested and implemented in software (Sect. 3.3). With this prototype software, the integrated planning of manufacturing, handling, and quality inspection at different levels of detail shall be enabled, reducing the overall effort and time for designing micro production processes, also pursuing a Simultaneous Engineering approach. Thus, three views with increasing levels of detail are provided: the process chain view, the material flow view, and the configuration view. The latter view is the closest to the part produced and the manufacturing process as such, and incorporates both qualitative and quantitative cause-effect networks to combine expert knowledge with statistical and learning-based data analysis methods. The challenge is that, between and within all views, complex interrelations occur, which are, for example, due to micro specific features such as high variances in properties. The µ-ProPlAn software prototype shall support process designers during all stages of planning from manufacturing process design to process configuration and the evaluation of process chain alternatives by analyzing and optimizing the underlying models, e.g. with respect to time- or cost-related parameters.

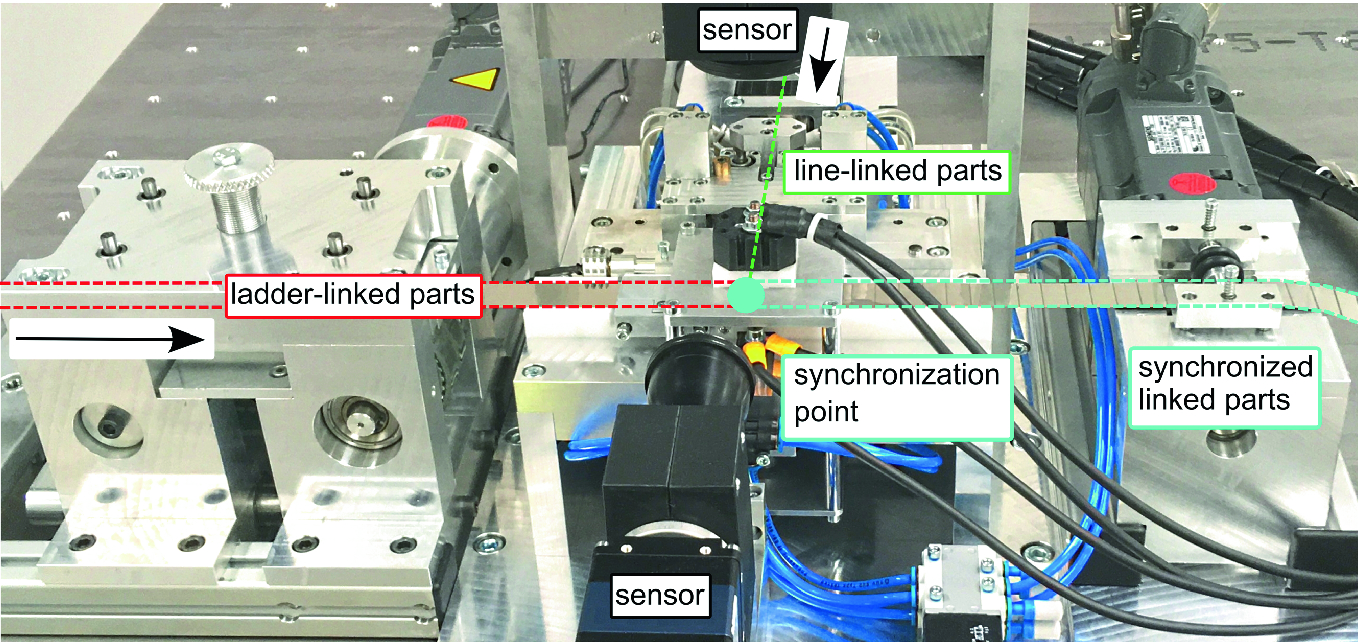

On a level closer to the actual micro manufacturing technology, in both bulk and sheet metal forming, the micro-specific challenges illustrated above have to be dealt with, too (Sect. 3.2). In order to improve the planning especially of handling and assembly operations and systems, a micro-specific enhancement of data exchange models on the basis of ISO 10303 is suggested. As the handling of individual micro parts is fairly difficult, producing, conveying and storing these parts in the form of linked parts (either line-linked, ladder-linked or comb-linked) is proposed and discussed. This reduces the effort for handling and storage (both long-term storage and highly dynamic buffering), but increases the effort for referencing parts with respect to their position and orientation at high production rates. Moreover, the force transfer between neighboring parts due to low stiffness, especially during forming, has to be considered, e.g. in the design of conveyors or (adaptive) guides, and can have an effect on part quality and accuracy. Understanding and modeling such scatter in accuracy can potentially allow the tolerance fields to be widened by methods such as selective assembly, thus minimizing waste. A prerequisite for this, however, is mastering appropriate strategies for synchronization.

Acknowledgements The editors and authors of this book would like to thank the Deutsche Forschungsgemeinschaft DFG (German Research Foundation) for the financial support of the SFB 747 “Mikrokaltumformen – Prozesse, Charakterisierung, Optimierung” (Collaborative Research Center “Micro Cold Forming – Processes, Characterization, Optimization”). We would also like to thank our members and project partners of the industrial working group as well as our international research partners for their successful cooperation.

3.2 Linked Parts for Micro Cold Forming Process Chains

Philipp Wilhelmi*, Ann-Kathrin Onken, Christian Schenck, Bernd Kuhfuss and Kirsten Tracht

Abstract The method of production as linked parts allows a significant increase of output rates compared to the production of loose micro parts. In the macro range, it is already applied to sheet metal forming, for example, in the production of structural parts in the automobile or aviation industry, but a simple downscaling is not appropriate. The goal of producing high volumes of high-quality parts requires micro-specific methods. A holistic concept is presented that considers both planning and production. The main aspects of planning are the design of linked parts and the production planning. Furthermore, an approach for widening the tolerance field of assemblies is transferred to the production of linked micro parts. This approach requires the consideration of all affected process chains during planning. Linking the parts enables them to be conveyed as a string and thereby simplifies handling, but also causes new challenges during production. Specific handling technologies are studied to overcome these challenges. Finally, a concept for the physical synchronization with regard to the investigation of tolerance field widening is presented.

Keywords Handling ∙ Assembly ∙ Production Planning

3.2.1 Introduction

Achieving high production rates is the key factor for a profitable industrial production of micro parts because of the typically low unit prices. Due to high tooling costs, this is especially relevant for cold forming. In addition to the small tolerances, fragility and sticking of the parts to each other or machine components make handling a major bottleneck in this context. This is mainly related to the surface-to-volume ratio, which increases with decreasing part size [Qin15]. The strength and weight of the parts depend on the volume, while the adhesion forces are related to the surface. Fantoni et al. have carried out extensive research in this field related to grasping [Fan14]. In the literature many different concepts for the handling of micro parts can be found, where grasping is involved at least for orientation or fixation, as presented in [Fle11], for example. However, most of these systems do not achieve the desired clock rates. Vibratory bowl feeders are the most common systems applied in the industrial environment so far [Kru09]. They are robust in their function and comparatively inexpensive, but only applicable for a limited range of types of micro parts and do not allow the production in fixed cycle times. The concept of production as linked parts, presented in [Kuh11], eliminates or strongly simplifies most of the speed-limiting handling operations like separation, grasping, releasing, orientation and fixation of individual parts. A linking structure keeps the parts interconnected and allows feeding of the parts as a string throughout the whole of the production stages. Examples of the industrial application of production as linked macro parts are mainly found in the manufacturing of sheet metal parts by punching, bending and deep drawing. The sheet is fed stepwise as a strip and processed by progressive dies. Lead frames for surface-mounted devices (SMD) or electrical connectors are produced as linked parts with features in the micro range. 
The sheet thickness for these applications is typically s ≥ 100 µm. In the MASMICRO project, the feeding and processing of thinner sheet material with progressive dies has been investigated [Qin08]. Merklein et al. considered a concept for massive forming as linked parts. Before the actual forming process, billets are formed from a metal strip (thickness: 1 mm ≤ s ≤ 3 mm) and remain therein [Mer12].
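The scaling argument made above (strength and weight follow the volume, adhesion follows the surface) can be illustrated with a minimal sketch. It assumes an idealized spherical part, which is a simplification chosen here for illustration and not a geometry prescribed by the cited works; for a sphere the surface-to-volume ratio reduces to 6/d.

```python
import math


def surface_to_volume_ratio(diameter_mm: float) -> float:
    """Surface-to-volume ratio of an ideal sphere in 1/mm (equals 6 / diameter)."""
    surface = math.pi * diameter_mm ** 2        # sphere surface: pi * d^2
    volume = math.pi * diameter_mm ** 3 / 6.0   # sphere volume: pi * d^3 / 6
    return surface / volume


if __name__ == "__main__":
    # Assumed example diameters: from the macro range down to the micro range.
    for d in (10.0, 1.0, 0.1):
        print(f"d = {d:5.1f} mm -> S/V = {surface_to_volume_ratio(d):6.1f} 1/mm")
```

Reducing the diameter by a factor of ten raises the ratio by the same factor, which is why surface-related adhesion effects gain weight relative to volume-related forces as parts shrink.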

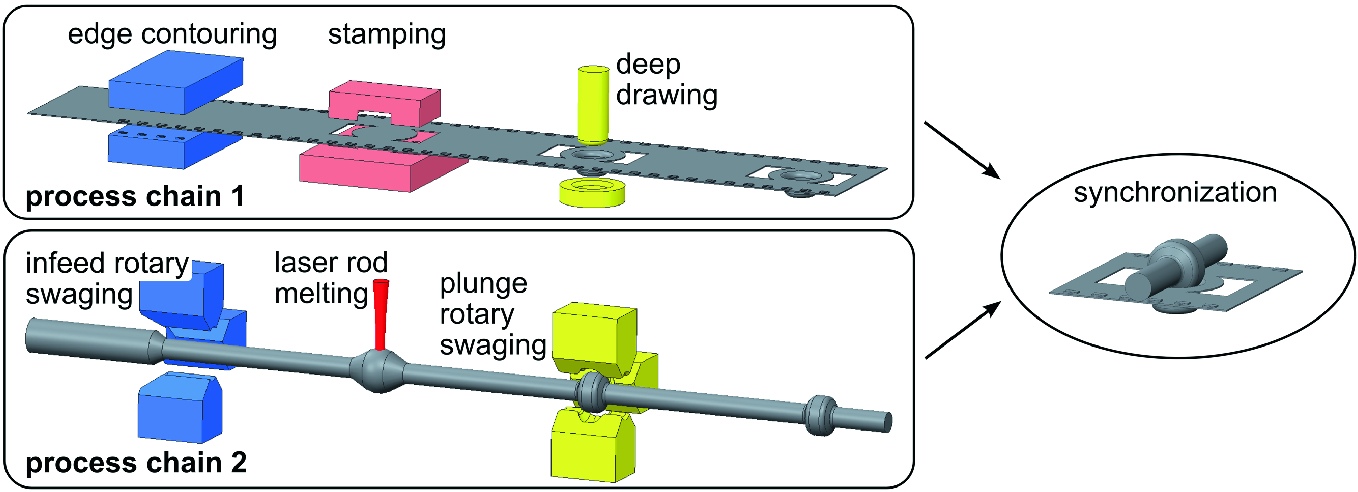

Considered process chains

In order to enable the mass production of different types of linked micro parts, two major fields of research are addressed. The first one is the planning of linked micro parts. In addition to the linking of the parts, micro-specific planning methods are required. The buffering of processes as well as the arrangement of the processes changes significantly, as shown in Sect. 3.2.2. The main focus of the second field of research is on the production of linked parts. Due to the linking of the parts, specific handling concepts are required. These are presented in Sect. 3.2.3 together with the synchronization of parts for building pre-assemblies.

3.2.2 Design and Production Planning of Linked Parts

The planning and control of processes is required to ensure economic production. For linked micro parts, the manufacturing and assembly of parts in high quantities and quality is influenced by the interconnection of the parts, the small dimensions, and the tight tolerances. Therefore, three subject areas are addressed in the following. In the first area, the design of the linked parts as well as a product model for deriving the data throughout the production stages are presented (see Sect. 3.2.2.1). These results are required for deciding which kinds of linked parts are applicable, and how to define the linking. Furthermore, the product model and the connection of processes are important for the second subject area. Because every manufacturing stage influences the dimensions of workpieces in a different way and the statistical distributions of the macro range are not applicable, micro-specific distributions are presented for the simulation of process chains, as well as recommendations for the arrangement of process steps for increasing the throughput (see Sect. 3.2.2.2). The third area involves the control of the production stages. In contrast to conventional approaches, the increase in the number of assembled parts is achieved by a design adjustment. The linking of the parts enables the consideration of trends that occur, for example, as a result of wear. The deviation of the geometrical properties is used for identifying assemblies and for feeding this information back to the design, as shown in Sect. 3.2.2.3.

3.2.2.1 Design and Product Model of Linked Parts

Example of line-linked spheres

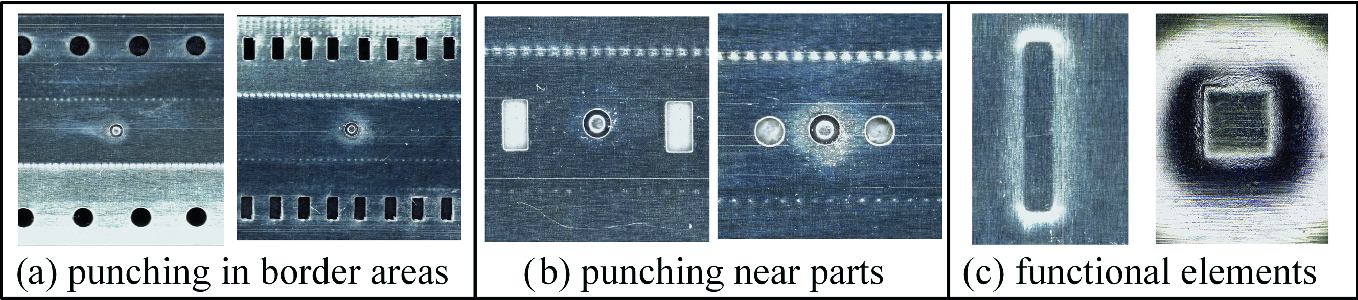

Examples of the design of the border area of ladder-linked parts for absorbing forces

Besides the transfer of forces, such as the accelerating forces, the design of linked parts could be used for providing further functions and assisting while manufacturing parts. Especially the border areas of the ladder-linked parts could be utilized for this [Wek14]. Examples of the utilization of the border areas are lateral guide elements, pleats to increase the local stiffness, reference points, elements to modify the feeding force transmission in a belt conveyor, and spacers to protect the parts when they are coiled for storage [Kuh14]. Functional punching and elements in the rim of ladder-linked parts are depicted in Fig. 3.3.

The linked parts are produced in multistage processes. For this reason, product data models for a standardized exchange of data are required. One possibility is the application of ISO 10303, known as STEP (“Standard for the Exchange of Product Model Data”). For this purpose, different methods, guidelines and application protocols are used. ISO 10303 is divided into classified series [ISO04]. Special areas are defined in the 200-series, such as AP 207, which defines a product data model for sheet metal die planning and design [ISO11]. Ladder-linked parts are made of sheet metal. For this reason, they are manufactured using sheet metal working processes and could be integrated into AP 207 [Tra12].

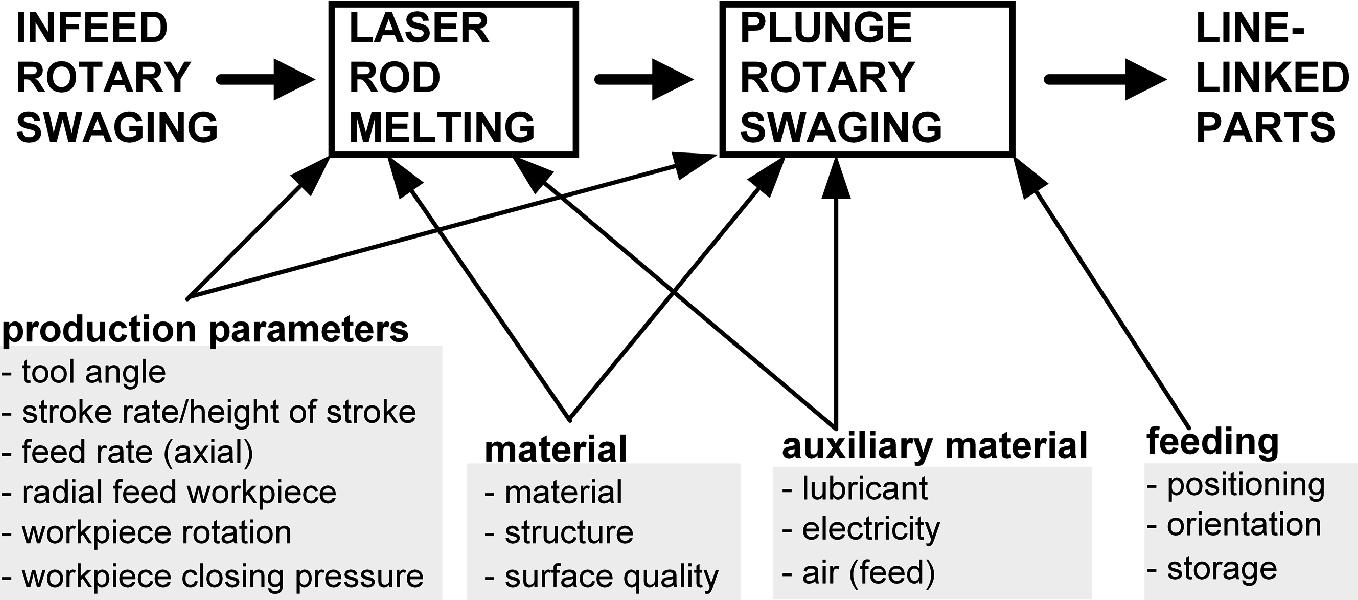

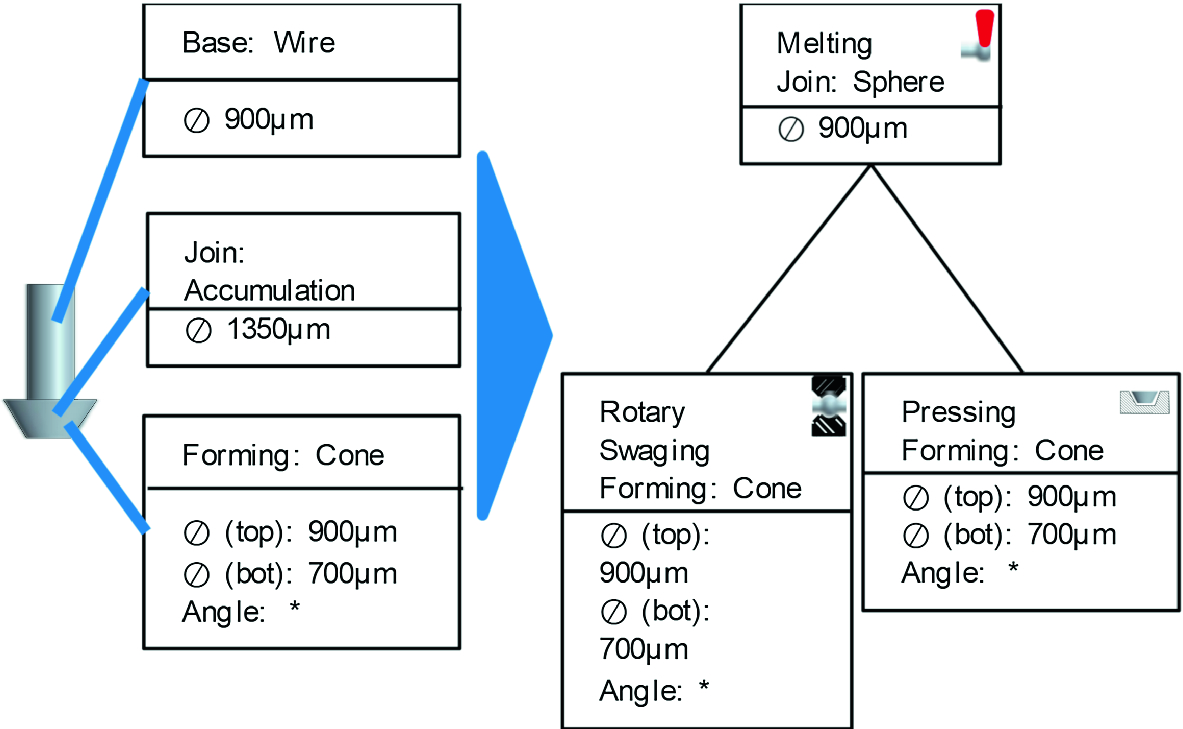

Information and data for plunge rotary swaging and laser melting

The identified application objects and their relations are the basis for the development of the AIM (Application Interpreted Model in ISO 10303 terminology) of the production process for linked parts. To complete the AIM, the geometry of the linked micro parts needs to be modularized. For this, it is especially important to summarize the piece part instances and the linkage part instances [Wek16].

3.2.2.2 Production Planning

For achieving high output rates while guaranteeing an adequate part quality, it is important to profoundly understand the whole production process with its interfaces, technologies, and process conditions [Vol04]. In the following, important aspects for planning the mass production of linked micro parts are introduced, based on the example of manufacturing and assembling linked spherical parts and cups.

The occurrence of disturbances or scrap parts requires recommendations for actions that can improve multi-stage processes. Due to the fact that the impact of defects in shape, dimensions, and surfaces is much higher than in macro production [Wek14a], micro-specific process properties have to be taken into account. Simulation studies are an important tool in the planning of manufacturing lines even in the micro range. For reliable simulation results, it is important to use micro-specific distributions during the simulation of micro manufacturing processes. Therefore, micro-specific statistical distributions of ladder-linked parts [Wek13] and line-linked parts [Wek13a] are considered.

Looking at micro cups, the deviation of the rib thickness and the variability of the rib altitude, for example, are used for measuring the quality of the cups. The distributions for ladder-linked parts are investigated taking into account the base material and the coating of the punching tool. In many cases, the Burr distribution is applicable [Wek13].

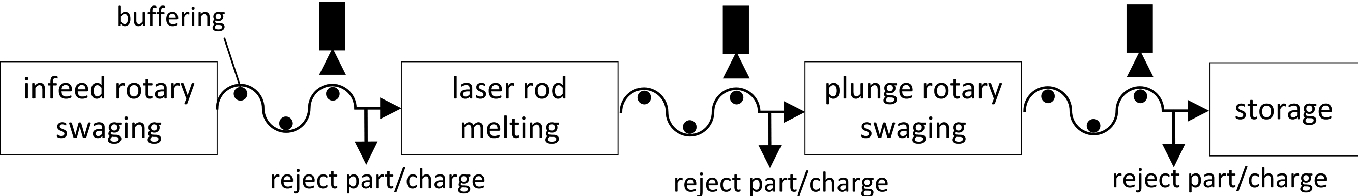

Simulation scenario of line-linked parts with buffering and rejecting

Due to the rigid linking of the parts, the processing steps are also rigidly linked. Therefore, the configuration of buffers has a major influence on the throughput of the system and balances the varying process times. Furthermore, scrap parts are also present in the linked parts. Therefore, it is important to evaluate whether the extraction of only one part or of a whole charge is to be recommended. Because the unit prices are comparatively lower than in the macro range, it could be more profitable to extract charges [Wek14a].

The distribution and the amount of scrap parts influence the way in which the processes have to be arranged to improve the production of linked parts. In the following, recommendations for process chains are presented that were derived by simulating the process chains of linked parts. In the case of a large amount of widespread scrap parts, it is best to extract only single parts instead of batches, because the probability of a batch containing no defective parts is low. The implementation of buffers has a positive impact on the output, because the buffers balance the processes. Especially when scrap parts are extracted, it is important to buffer these processes. The decision whether defective parts should be extracted or processed in further steps also depends on the number of defective parts. When there is a large number of scrap parts, the extraction of the scrap parts has a positive impact on the throughput [Wek14a]. For a further increase of the number of assembled good parts, the geometrical deviations, which are caused by the whole manufacturing line, need to be considered. An approach to the widening of the tolerance field is presented in the following section.
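The recommendation on single-part versus batch extraction can be made tangible with a short calculation. The sketch below assumes that defects occur independently with a constant defect rate, which is one possible reading of "widespread" scrap and is not taken from [Wek14a]; the function names and the example values are hypothetical.

```python
def prob_defect_free_batch(defect_rate: float, batch_size: int) -> float:
    """Probability that a batch contains no defective part (independent defects assumed)."""
    return (1.0 - defect_rate) ** batch_size


def expected_good_parts_lost(defect_rate: float, batch_size: int) -> float:
    """Expected number of good parts discarded per batch when every batch
    with at least one defect is extracted as a whole."""
    expected_good = batch_size * (1.0 - defect_rate)
    # Good parts survive only in batches that are completely defect-free.
    expected_good_kept = batch_size * prob_defect_free_batch(defect_rate, batch_size)
    return expected_good - expected_good_kept


if __name__ == "__main__":
    # Assumed example: batches of 50 linked parts at different defect rates.
    for rate in (0.001, 0.01, 0.05):
        p_ok = prob_defect_free_batch(rate, 50)
        lost = expected_good_parts_lost(rate, 50)
        print(f"defect rate {rate:.3f}: P(defect-free batch) = {p_ok:.3f}, "
              f"good parts lost per batch = {lost:.2f}")
```

With rising defect rates the share of defect-free batches falls quickly, so batch extraction discards an increasing number of good parts, in line with the recommendation to extract single parts in that case.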

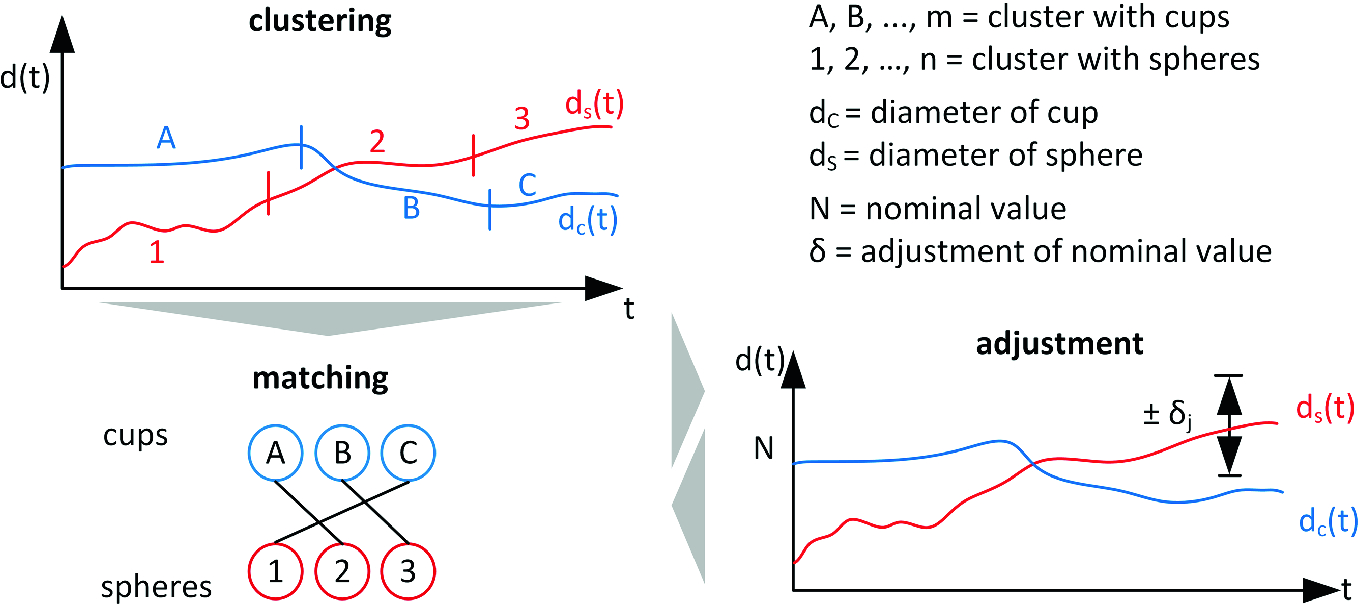

3.2.2.3 Tolerance Field Widening

The linking of the parts maintains the production order. This facilitates an investigation of the deviation of geometrical dimensions over the production time. The knowledge of these deviations enables a widening of the tolerance field by identifying sections that could be assembled according to the fit [Onk17]. This approach is similar to selective assembly, where parts are sorted and matched for assembly according to their geometric characteristics. Several approaches to selective assembly, like the identification of assembly batches [Raj11], and reducing surplus parts using genetic algorithms [Kum07], are known. The adaptation of the production based on selective assembly has also been focused on in research. Approaches for selective and adaptive production systems offer the possibility of adjusting the process parameters directly during production. Kayasa et al. offer a simulation-based evaluation of adaptive and selective production systems [Kay12]. Lanza et al. provide a method for real-time optimization in selective and adaptive production systems [Lan15].

Schemata of the tolerance field widening

Linked parts clustering [Tra18]

In the second step, the parts are matched under consideration of the fit. Because matching the two part types corresponds to a matching problem in a bipartite graph, matching algorithms for bipartite graphs like the Munkres algorithm could be used [Onk17]. For the matching of clusters with varying lot sizes, these algorithms must be extended by adding additional rows, because most of them require N × N matrices.
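The padding to an N × N matrix can be sketched as follows. This is a minimal illustration and not the implementation of [Onk17]: it uses a common potential-based formulation of the Munkres (Hungarian) algorithm, and the clearance-based fit criterion, the penalty value `BIG`, the function names and the diameters are assumptions made for the example.

```python
BIG = 1_000_000.0  # assumed penalty for infeasible or dummy pairings; must exceed any sum of real costs


def build_cost_matrix(spheres, cups, min_clr, max_clr):
    """Square cost matrix (rows: spheres, columns: cups), padded with dummy entries."""
    size = max(len(spheres), len(cups))
    target = (min_clr + max_clr) / 2.0
    cost = [[BIG] * size for _ in range(size)]  # dummy rows/columns keep the cost BIG
    for i, s in enumerate(spheres):
        for j, c in enumerate(cups):
            clearance = c - s
            if min_clr <= clearance <= max_clr:
                cost[i][j] = abs(clearance - target)  # prefer fits near the target clearance
    return cost


def hungarian(cost):
    """Minimum-cost assignment for a square matrix; returns assignment[row] = column."""
    n = len(cost)
    inf = float("inf")
    u, v = [0.0] * (n + 1), [0.0] * (n + 1)   # row and column potentials
    p, way = [0] * (n + 1), [0] * (n + 1)     # p[j]: row assigned to column j (1-based)
    for i in range(1, n + 1):
        p[0], j0 = i, 0
        minv, used = [inf] * (n + 1), [False] * (n + 1)
        while True:
            used[j0] = True
            i0, delta, j1 = p[j0], inf, 0
            for j in range(1, n + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            for j in range(n + 1):
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:
                break
        while j0 != 0:  # augment along the alternating path found above
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
    assignment = [-1] * n
    for j in range(1, n + 1):
        assignment[p[j] - 1] = j - 1
    return assignment


def match_parts(spheres, cups, min_clr, max_clr):
    """Return (sphere index, cup index) pairs that satisfy the fit."""
    cost = build_cost_matrix(spheres, cups, min_clr, max_clr)
    assignment = hungarian(cost)
    return [(i, j) for i, j in enumerate(assignment)
            if i < len(spheres) and j < len(cups) and cost[i][j] < BIG]


if __name__ == "__main__":
    # Two spheres, three cups (diameters in µm, assumed values); clearance must lie in [2, 6] µm.
    print(match_parts([400, 402], [404, 406, 410], 2, 6))
```

Because every infeasible or dummy pairing carries the same large penalty, minimizing the total cost first maximizes the number of feasible pairs and only then prefers clearances close to the middle of the fit range. Pairs assigned to a dummy row or column are simply reported as unmatched.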

As the third step of the tolerance field widening, the nominal value is adjusted. Because the development of the trends is known, an ideal nominal value can be identified, that is, one for which the relation between the two trends allows a large number of parts to be assembled. Therefore, after every adjustment of the nominal value, the number of pre-assemblies achieved is verified by another matching [Onk17]. The results of the tolerance field widening are influenced by the number of identified sections for matching and the trends occurring. Simulation results showed that the number of mountable parts increases with the number of sections. The results also show that, beyond a certain point, a further increase in the number of sections yields only a small increase in the number of pre-assemblies. Because micro parts have a comparatively low unit price, such a small increase of assemblies is insignificant. Every interruption for changing sections leads to an increase of handling operations and thus decreases the throughput, so that the cycle times for the manufacturing and assembly of the parts increase [Onk17a].
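The loop of adjusting the nominal value and re-verifying by matching can be sketched in a few lines. The example below is a simplified stand-in and not the procedure of [Onk17]: the nominal adjustment is modeled as a constant offset added to all cup diameters, the candidate offsets are searched exhaustively, and the matching is done by a greedy two-pointer pass over sorted diameters instead of the Munkres algorithm. All names and values are hypothetical.

```python
def count_pre_assemblies(spheres, cups, min_clr, max_clr):
    """Count sphere/cup pairs whose clearance (cup - sphere) lies in [min_clr, max_clr].

    Greedy pass over sorted diameters, used here as a simple substitute for a
    full bipartite matching.
    """
    s, c = sorted(spheres), sorted(cups)
    i = j = matched = 0
    while i < len(s) and j < len(c):
        clearance = c[j] - s[i]
        if clearance < min_clr:
            j += 1          # cup too small for this and every larger sphere
        elif clearance > max_clr:
            i += 1          # sphere too small for this and every larger cup
        else:
            matched += 1    # feasible fit: pair both parts
            i += 1
            j += 1
    return matched


def best_nominal_shift(spheres, cups, candidate_shifts, min_clr, max_clr):
    """Return (shift, pre-assemblies) for the first candidate shift with the most pairs."""
    best = None
    for shift in candidate_shifts:
        shifted_cups = [d + shift for d in cups]  # assumed effect of a nominal adjustment
        n = count_pre_assemblies(spheres, shifted_cups, min_clr, max_clr)
        if best is None or n > best[1]:
            best = (shift, n)
    return best


if __name__ == "__main__":
    # Assumed diameters in µm; required clearance between 2 µm and 4 µm.
    spheres = [400, 401, 402, 403]
    cups = [400, 401, 402, 403]
    print(best_nominal_shift(spheres, cups, range(0, 6), 2, 4))
```

In this assumed data set only two pairs fit without adjustment, while an offset of 2 µm on the cup nominal value lets all four pairs be assembled.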

3.2.3 Automated Production of Linked Micro Parts

By applying the method of production as linked parts, on the one hand, time-critical sub-functions of the handling task like the grasping of individual parts and part orientation are avoided. On the other hand, by linking the parts, new challenges arise that must be dealt with to achieve the desired high output rates. The positioning in the processing zone cannot necessarily be considered independent of the transport. Processing may cause interactions and affect neighboring parts. Further, when applying the linked parts method to the production of assemblies, different process chains need to be synchronized. To ensure a stable material flow at high cycle rates and at the same time adequate precision for the positioning in the single production stages, specific equipment is necessary.

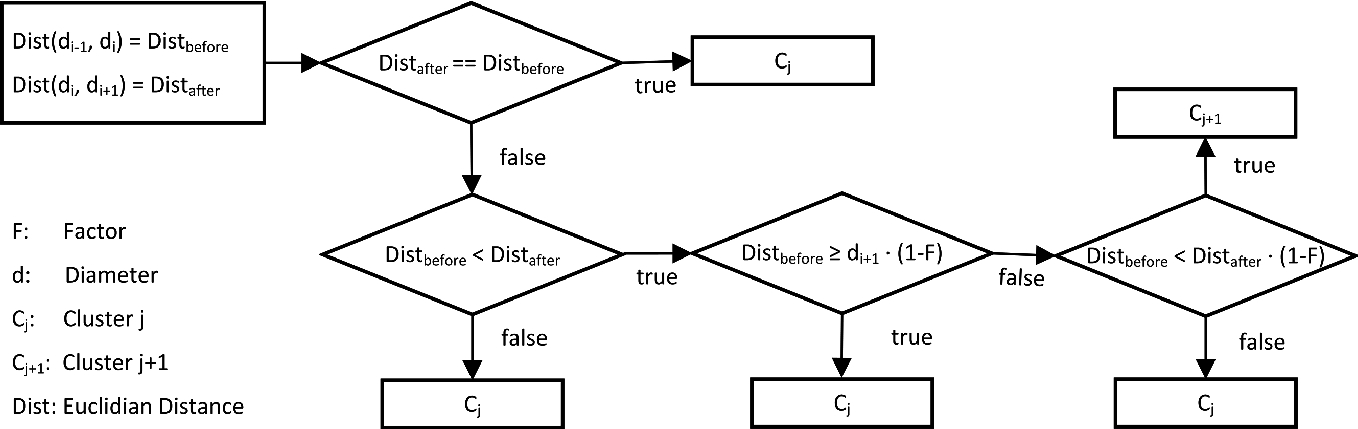

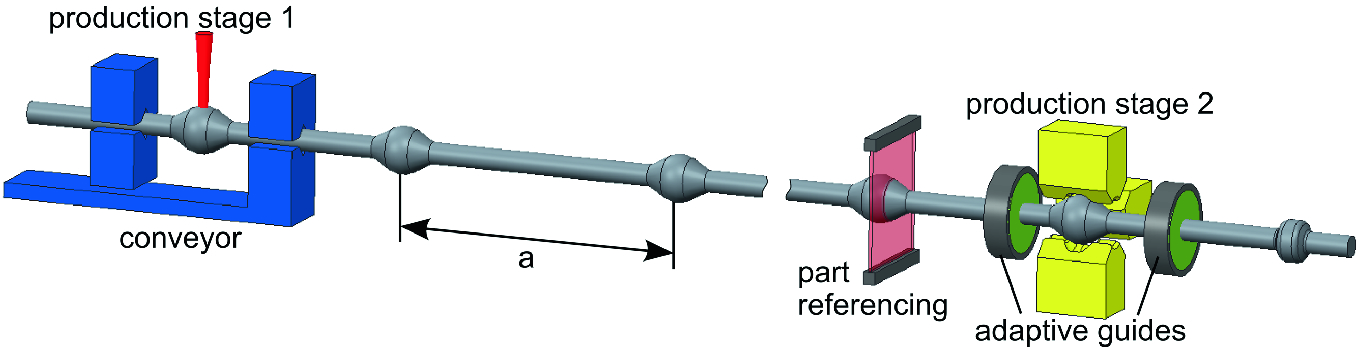

3.2.3.1 Handling Concept and Equipment

An approach for realizing a precise positioning in the macro range is to punch reference holes in the first production stage. In the following stages, pilot pins, which are integrated in the tools, realize a fine positioning. This approach, which is typically applied in the aforementioned example of production with progressive dies, presupposes that the general structure of the linked parts has a certain inherent stiffness and that it does not change during production. However, for the manufacturing of micro parts on the basis of thin sheet (h ≤ 100 µm) and for massive forming of line-linked parts, these requirements cannot be guaranteed. A further measure to simplify positioning, which is applied in progressive dies, is the integration of all production stages into a compound tool. This allows tight tolerances to be realized for the pitch between the single stages, which corresponds to the part distance a. In this way, a certain number of neighboring parts can be positioned and processed simultaneously. This is possible under the condition that all production stages have a similar character and can be actuated by a single press.

Multi-stage production of linked parts

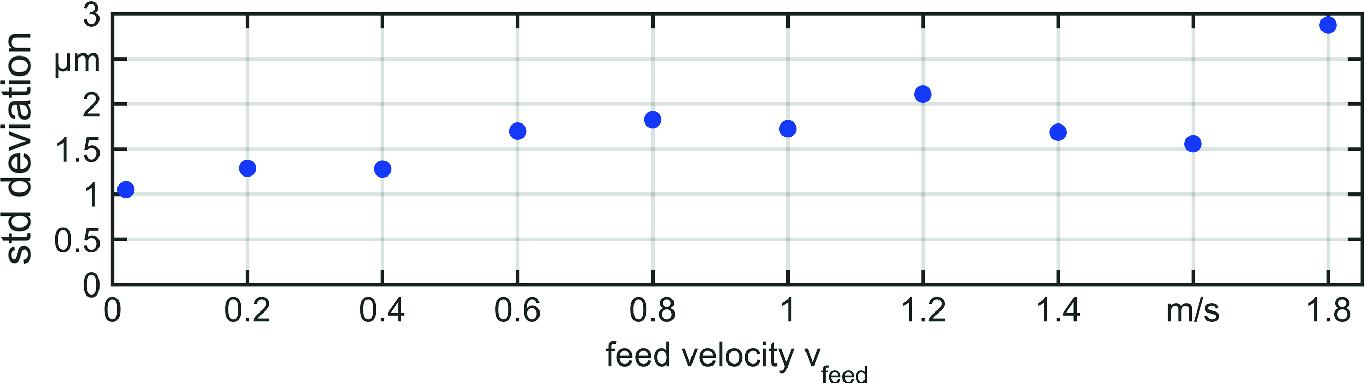

Standard deviation in dependency on velocity for part distance measurement with part referencing system (line camera), Δxtrigger = 50 µm

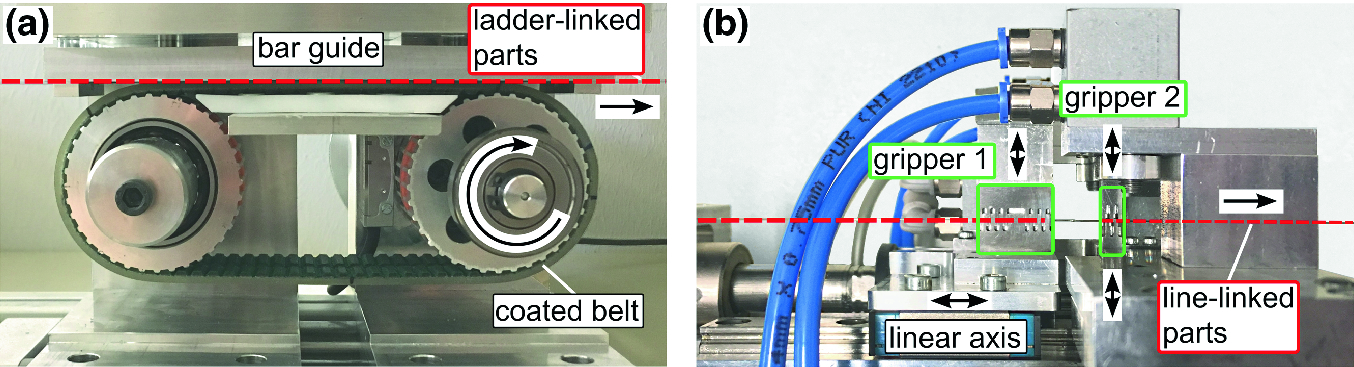

Linked parts conveyors: a belt conveyor, b feed axis with grippers

Apart from the positioning accuracy of the conveyor, the linked parts themselves influence the positioning. The low bending stiffness of the linked parts, which applies especially to thin sheet material, may lead to buckling and cause position deviations. In order to reduce this problem, the linked parts can be fed by pulling instead of pushing. Further, tensioning the linked parts with the help of a hysteresis brake or a second conveyor helps to solve this problem. Considering the accuracy of the feed axis with the grippers, small deviations are introduced as a result of the gripping. However, when assuming that no gripping is performed after referencing and the relevant part is directly positioned, the accuracy depends mainly on the feed axis and is in the sub-micrometer range. The belt conveyor has a large contact area between the linked parts and the coated belts. Thereby, high feed forces can be transmitted, and the local contact force may be reduced in order to avoid deformations. The rotational motion is converted into a linear feed by a friction drive. Consequently, different positional errors are introduced, for example, by the conversion of the motion measurement from rotation to linear shift or by the occurrence of slip. Considering this, the feed axis shows a better performance in terms of positioning accuracy. Nevertheless, the belt conveyor can achieve a positioning accuracy in the lower micrometer range. One means of increasing the positioning accuracy is a direct measurement of the shift of the linked parts. Due to the fragility of the parts, contactless measurement is necessary. A sensor based on speckle correlation was investigated for that purpose and shows promising results, especially with regard to the achievable resolutions [Blo16].

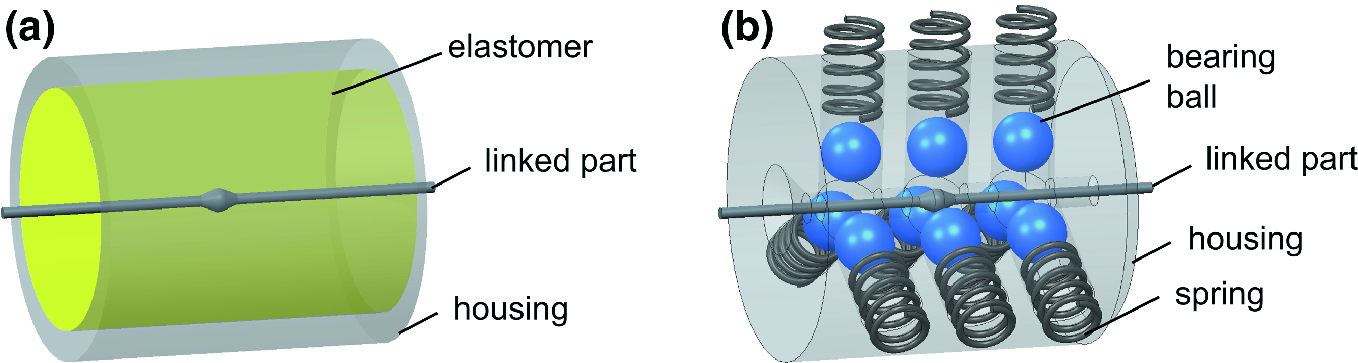

Adaptive guide concepts: a elastomer; b spring load, bearing balls

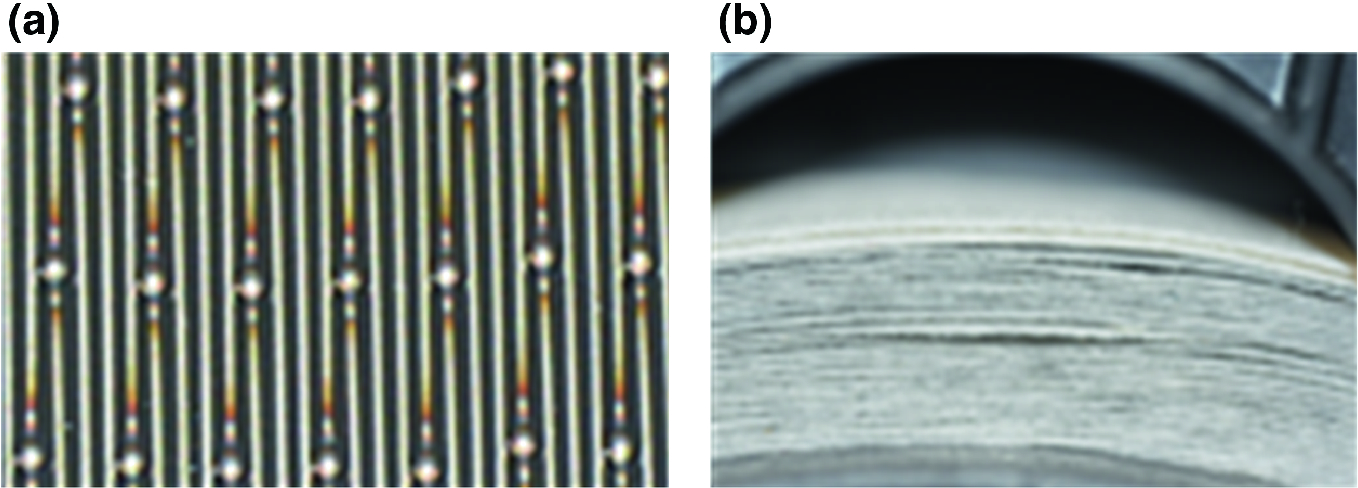

Storage of linked parts: a spooled line-linked parts, b ladder-linked parts spooled with protective layer

3.2.3.2 Effects Resulting from the Production as Linked Parts

There are different challenges that result from the linking of the parts. One is related to the fact that the feeder is applied for the transport, positioning and optional feeding during processing. Further, the structural connection leads to a transfer of forces between neighboring parts or the structure is changed by the processes. The concrete form of the effects that occur depends strongly on the manufacturing process applied.

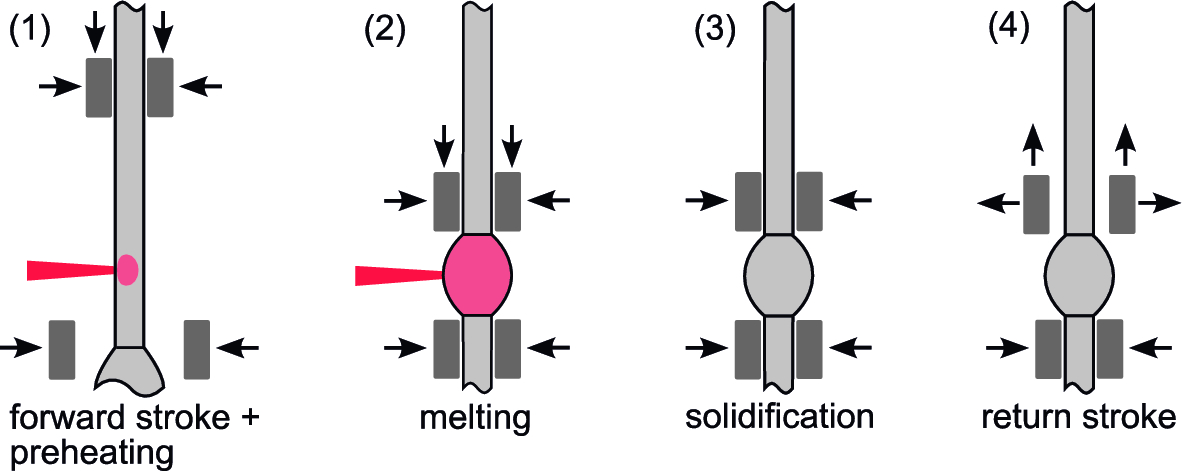

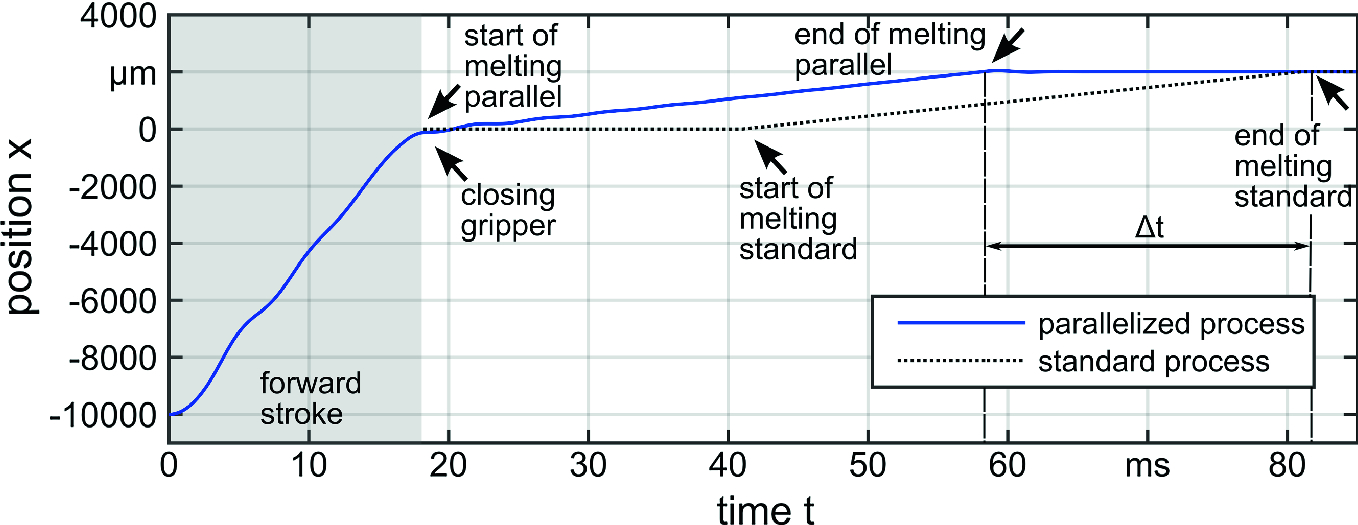

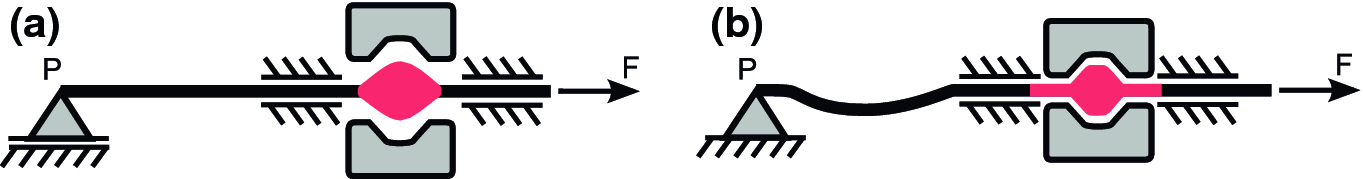

Process scheme of parallelized laser rod melting

Position measurement of parallelized process

Material flow during rotary swaging: a before processing, b during processing

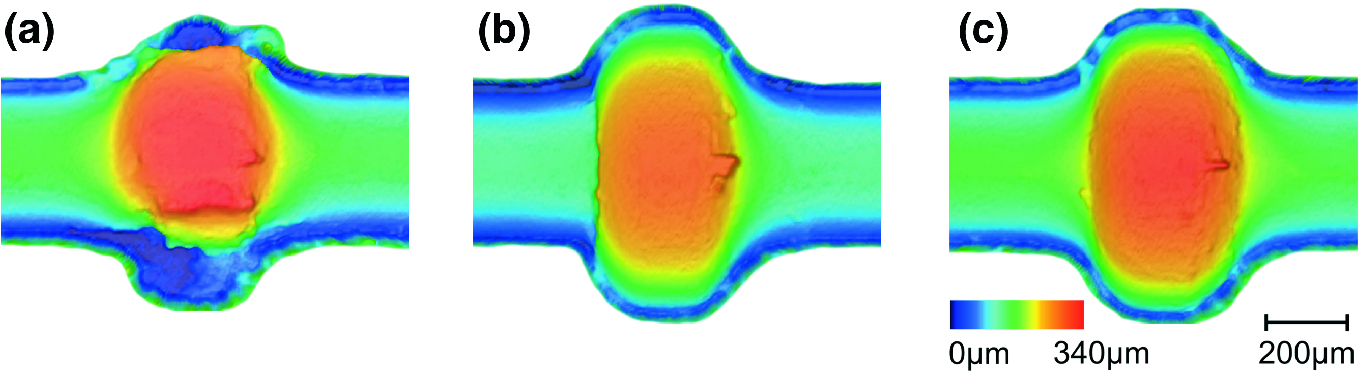

Forming results: a bad part, b asymmetric part, c symmetric part

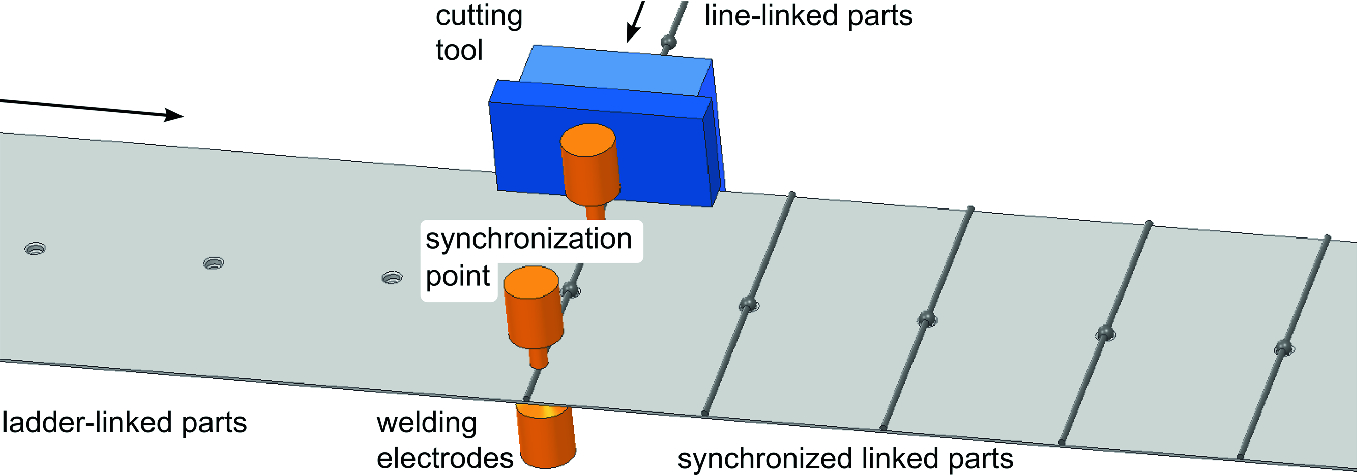

3.2.3.3 Synchronization of Linked Parts

directly joining both types of linked parts without separating them before;

separating the linked parts of both types and creating new linkage;

separating the linked parts of one type and joining them to the linked parts of the other type.

Basic function of the central module of the synchronization station

Complete setup—synchronization station


3.3 A Simultaneous Engineering Method for the Development of Process Chains in Micro Manufacturing

Daniel Rippel*, Michael Lütjen and Michael Freitag

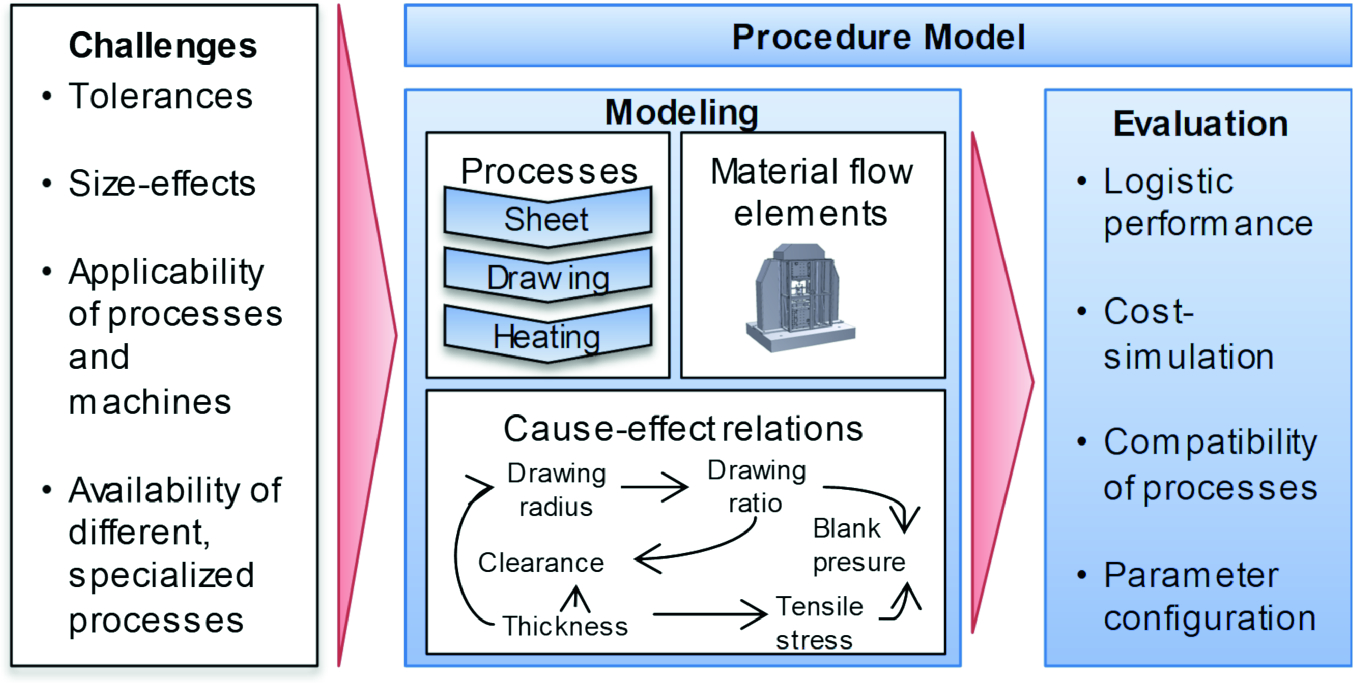

Abstract The occurrence of size-effects requires detailed planning and configuration of process chains in micro manufacturing to ensure an economic industrial production. Existing methods lack the required level of detail and complexity as well as the handling of partly missing data and information, which is required for the micro domain. Consequently, this chapter describes a methodology for the planning and configuration of processes in micro manufacturing that aims to circumvent the mentioned drawbacks of the existing approaches. The methodology itself provides tools and methods to model, configure and evaluate process chains in micro manufacturing. Whereas the process design allows the detailed specification of micro manufacturing processes, the configuration is performed by the application of so-called cause–effect networks. Such cause–effect networks depict the interrelationships between processes, machines, tools and workpieces by relevant parameters. Thereby expert knowledge is combined with methods from statistics and artificial intelligence to cope with the influences of size-effects and other uncertain effects.

Keywords Modeling ∙ Production planning ∙ Predictive Model

3.3.1 Introduction

During the last decades, the demand for metallic micro parts has continuously increased. On the one hand, these components have become increasingly smaller while on the other hand, their shape complexity and their functionality are constantly increasing. Major factors contributing to this development are the increasing number and complexity of applications for micro components as well as the increasing demand for such components in the growing markets of medical and consumer electronics [Mou13]. Besides the growing demand for Micro-Electro-Mechanical-Systems (MEMS), which are generally produced using methods from the semi-conductor industry, the demand for metallic micromechanical components is growing similarly. These micromechanical components are used as connectors for MEMS, casings, or as contacts. They cannot be manufactured using semi-conductor technology and are usually produced by applying processes from the areas of micro forming, micro injection, micro milling etc. [Han06]. In this context, cold forming processes constitute an option for the economic mass production of metallic micromechanical components, as these processes generally provide high throughput rates at comparably low energy and waste costs [DeG03].

The efficient industrial production of such components usually requires high throughput rates of up to several hundred parts per minute [Flo14], whereby very small tolerances have to be achieved. These tolerances result from the components’ small geometrical dimensions, which, by definition, are below one millimeter in at least two dimensions [Gei01]. Additionally, so-called size-effects can result in increasing uncertainties and unexpected process behaviors when processes, workpieces and tools originating from the macro domain are miniaturized [Vol08].

As a result, the planning and configuration of process chains is seen as a major factor of success for the industrial production of metallic micromechanical components [Afa12]. To cope with the occurrence of size-effects, companies require tools and methods for highly precise planning, covering the complete process chain. Thereby, they have to consider the interrelationships between processes, materials, tools and devices. In micro manufacturing, small variations in single parameters or characteristics can have a significant influence along the process chain and can finally impede the compliance with the respective tolerances [Rip14].

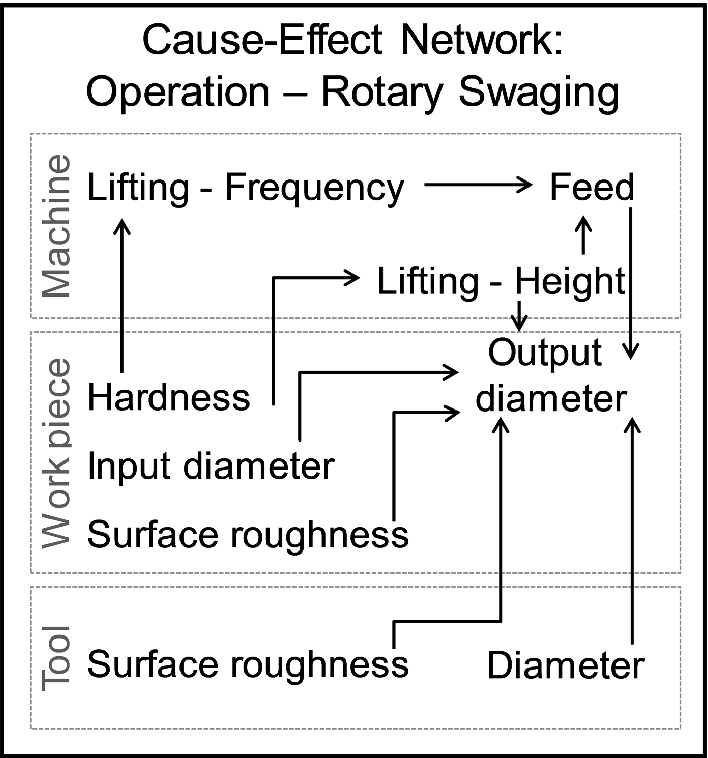

In order to facilitate the planning and configuration process, this chapter presents the “Micro-Process Planning and Analysis” (µ-ProPlAn) methodology, which was designed to provide process planners with a tool to plan and configure manufacturing process chains with the required level of detail. The methodology itself provides tools and methods to model, configure and evaluate process chains in micro manufacturing. Thereby, the configuration is performed by the application of so-called cause–effect networks, detailing the interrelationships between relevant process, machine, tool and workpiece parameters. These cause–effect networks combine expert knowledge with methods from the areas of statistics and artificial intelligence to cope with the influences of size-effects.
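The idea of combining a qualitative, expert-defined network with statistically fitted relations can be sketched as follows. This is an illustrative toy model and not the µ-ProPlAn implementation: the parameter names, the restriction to one cause per effect, the use of ordinary least-squares lines and the sample data are all assumptions made for the example.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Qualitative part: cause -> effect edges as an expert might define them
# (hypothetical parameter names, listed in causal order).
EDGES = [("punch_force", "cup_depth"), ("cup_depth", "wall_thickness")]


def fit_network(edges, samples):
    """Quantitative part: fit one model per edge from measured samples."""
    return {(cause, effect): fit_line(samples[cause], samples[effect])
            for cause, effect in edges}


def predict(edges, models, inputs):
    """Propagate input parameters through the network along the edges."""
    values = dict(inputs)
    for cause, effect in edges:
        slope, intercept = models[(cause, effect)]
        values[effect] = slope * values[cause] + intercept
    return values


if __name__ == "__main__":
    # Assumed experimental data in arbitrary units.
    samples = {
        "punch_force": [1.0, 2.0, 3.0],
        "cup_depth": [2.0, 4.0, 6.0],
        "wall_thickness": [5.0, 9.0, 13.0],
    }
    models = fit_network(EDGES, samples)
    print(predict(EDGES, models, {"punch_force": 4.0}))
```

The edge list carries the expert knowledge about which parameters influence each other, while the fitted models supply the quantitative relation. In practice the linear fit could be replaced by any regression or learning method suited to the available data.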

3.3.2 Process Planning in Micro Manufacturing

Within the literature, there are only very few approaches which allow the joint planning of process chains as well as the configuration of the processes involved. During the last few years, different articles have focused on the configuration of specific processes. Thereby, the configuration relies on detailed studies of the corresponding processes and is usually supported by very detailed physical models in the form of finite element simulations (e.g. [Afa12]). Another approach found in the literature focuses on the use of sample data (historical or experimental) as templates for the configuration of processes. Although these approaches enable a very precise configuration of a single process, the interrelations between different processes cannot be considered easily. Moreover, the construction of finite element simulations or the direct application of historical information requires a deep understanding of the processes, which is often unavailable due to size-effects or the novelty of processes.

Usually, classic methods like event-driven process chains, UML or simple flow charts are used in the context of process chain planning. Whereas these methods do not include the configuration of processes, Denkena et al. proposed an approach that indirectly addresses this topic [Den06]. This approach builds upon the modeling concept for process chains [Den11]. In this concept, a process chain consists of different process elements, which in turn consist of localized operations. These operations are interconnected by so-called technological interfaces that describe sets of pre- or post-conditions for each operation. While these interfaces can be configured manually, Denkena et al. also proposed the use of physical, numerical or empirical models to estimate the relationships between the pre- and post-conditions [Den06, Den14]. Although this approach enables the configuration of the processes, the design of these models again requires very detailed insights into the processes. Moreover, within the micro domain, a single model can quickly become unsuitable for capturing all relevant interrelations between process, machine, tool and workpiece parameters, particularly under the influence of size-effects, as its construction would require tremendous amounts of data.

3.3.3 Micro-Process Planning and Analysis (µ-ProPlAn)

The methodology “Micro-Process Planning and Analysis” (µ-ProPlAn) is being developed to support process designers in designing and configuring process chains (see [Rip17] for a more detailed description of the methodology). The modeling methodology µ-ProPlAn covers all phases from the process and material flow planning to the configuration and evaluation of these models [Rip14]. In this context, it enables the integrated planning of manufacturing, handling and quality inspection activities at different levels of detail. It consists of a modeling notation, a procedure model, and a set of methods and tools for the evaluation of the corresponding models. The evaluation covers methods to ensure the technical feasibility of the modeled process chains and production systems. Moreover, it includes a component to execute µ-ProPlAn models directly in terms of a material flow simulation to assess the modeled production system’s logistic performance.

Components of the µ-ProPlAn Methodology [Sch13]

3.3.3.1 Modeling View: Process Chains

In general, process chains enable the structured modeling and planning of business processes, and depict the logical and temporal order of manufacturing, handling and quality inspection processes. Each process consists of one or more operations. Operations transform a set of input variables into output variables. These variables are called technological interfaces and describe the specific technical properties of the processed workpiece before and after each operation [Den11]. For example, a hardening operation modifies the variable hardness, which serves as an input and an output variable for the operation. On an aggregated level, processes provide and require similar technological interfaces with regard to other preceding or succeeding processes.

The notion of process chains provides an abstraction from material flow related resources and thus from specific manufacturing techniques. The hierarchical composition of the process chain enables the planning of the overall process chain based on processes and parameters relevant to the final product. In order to integrate the classic process chain models with the other views, the technological interfaces are extended to include logistic parameters, e.g. process durations or costs. Additionally, the operations themselves are extended to hold references to model elements from the material flow view, further detailing which devices and resources are involved in performing each single operation. In this way, they directly provide the connection to the material flow modeling view.
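The structure described above can be illustrated with a small data model. The following is only a sketch under stated assumptions: the class names `Interface`, `Operation` and `Process`, the field names, and the units are hypothetical and are not taken from the µ-ProPlAn software.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass(frozen=True)
class Interface:
    """A technological interface: one named property of the workpiece."""
    name: str
    unit: str = ""


@dataclass
class Operation:
    """Transforms input interfaces into output interfaces."""
    name: str
    inputs: List[Interface]
    outputs: List[Interface]
    duration_s: float = 0.0   # logistic parameter (assumed unit: seconds)
    cost: float = 0.0         # logistic parameter (assumed unit: cost per part)
    # Names of material flow elements (machines, tools, workers); hypothetical.
    resources: List[str] = field(default_factory=list)


@dataclass
class Process:
    """A process aggregates one or more operations."""
    name: str
    operations: List[Operation]

    def required(self) -> Set[str]:
        """Interfaces that must be provided by preceding processes."""
        produced: Set[str] = set()
        needed: Set[str] = set()
        for op in self.operations:
            needed |= {i.name for i in op.inputs} - produced
            produced |= {o.name for o in op.outputs}
        return needed

    def provided(self) -> Set[str]:
        """Interfaces that this process offers to succeeding processes."""
        return {o.name for op in self.operations for o in op.outputs}

    def total_duration_s(self) -> float:
        return sum(op.duration_s for op in self.operations)


# Hardening reads and writes the same variable, as in the example in the text.
hardness = Interface("hardness", "HV")
hardening = Operation("hardening", [hardness], [hardness],
                      duration_s=30.0, resources=["furnace"])
heat_treatment = Process("heat treatment", [hardening])
```

Here the aggregated process requires and provides `hardness`, which mirrors how processes expose interfaces to their neighbours in the chain.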

3.3.3.2 Modeling View: Material Flow

| Machines | Tools, workers, supplies | Operations |
|---|---|---|
| • Downtime on failure • Downtime on maintenance • Maintenance intervals • Setup times and costs • Mean time between failures • Costs while processing • Costs while idle | • Investment costs • (Tool) Service time in operations • (Worker) Costs while processing | • Duration • Probability of causing defect • Probability of detecting defect • Probability of falsely detecting defect |

3.3.3.3 Modeling View: Configuration (Cause–Effect Networks)

Hierarchy of cause–effect networks—Example of composition of a rotary swaging operation from the networks of the corresponding machine, tool and workpiece (after [Rip14a])

The design of cause–effect networks is divided into two steps: qualitative modeling and quantification. The qualitative model of the network is created by collecting all relevant parameters and denoting their influences among each other (see Fig. 3.20). Thereby, the process expert can facilitate the creation of the networks by pointing out the most relevant parameters and by indicating the relationships. Another option to attain a qualitative cause–effect network is the application of data mining techniques. For example, if experimental or production data are available, an analysis of this data can deliver preliminary insights into the cause–effect relations.

The second step concerns the quantification of the cause–effect networks. The objective is to enable the propagation of different parametrizations throughout the network. For example, this propagation allows the estimation of the outcome of processes for different materials or machining strategies. In Fig. 3.20, the use of a different material for the forging tool will result in a different surface roughness, thus influencing the overall output diameter of the processes. Through quantifying the cause–effect relationships, it is possible to estimate the results of parameter changes for all connected parameters along complete process chains.
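The propagation of a parametrization through a quantified network can be sketched as an evaluation of the parameters in topological order. This is a minimal illustration, not the µ-ProPlAn implementation: the function `propagate`, the parameter names, and the linear relations with their coefficients are invented for the example.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def propagate(network, inputs):
    """Evaluate all parameters of a cause-effect network.

    network: dict mapping a parameter name to (parent names, function).
    inputs:  dict with fixed values for the root parameters.
    """
    graph = {name: parents for name, (parents, _) in network.items()}
    values = dict(inputs)
    # static_order() yields every parameter after all of its parents.
    for name in TopologicalSorter(graph).static_order():
        if name in values:
            continue  # given as input, nothing to compute
        parents, fn = network[name]
        values[name] = fn(*(values[p] for p in parents))
    return values


# Hypothetical relations: tool hardness -> tool roughness -> output diameter.
network = {
    "tool_roughness_um": (["tool_hardness_hv"], lambda h: 2.0 - 0.001 * h),
    "output_diameter_mm": (["feed_mm", "tool_roughness_um"],
                           lambda f, r: 1.0 + 0.05 * f + 0.01 * r),
}

result = propagate(network, {"tool_hardness_hv": 800.0, "feed_mm": 1.0})
```

Changing `tool_hardness_hv` (a stand-in for a different tool material) changes the roughness and, through it, the predicted diameter, which is the behaviour described for Fig. 3.20.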

3.3.3.4 Basic Quantification of Cause–Effect Networks

Regression and learning methods included in µ-ProPlAn [Rip14a]

| Method | Description |
|---|---|
| Linear regression | As a fundamental multivariate regression function, µ-ProPlAn offers the option to perform a linear regression on the data provided. Additionally, µ-ProPlAn offers functionality to linearize e.g. exponential or logarithmic data |
| Polynomial regression | In the case of univariate cause–effect relations, a polynomial regression can be conducted |
| Tree/Rule regressions (e.g. [Hol99]) | In contrast to the methods above, tree- or rule-based approaches do not result in a single analytic function. In general, they divide the search space into smaller segments for which a regression can be performed. Usually both methods use linear regressions for each segment |
| Locally weighted linear regression (LWL/LOESS) (e.g. [Fra03]) | LWL constitutes an abstract prediction model, which performs a locally weighted linear regression each time a prediction is requested. Thereby, a kernel function is used to weight adjacent data points and finally a linear regression is performed. This method particularly excels in interpolating missing data in between available data points |
| Support vector regression (e.g. [Pla98]) | Support vector machines are usually used as classifiers. Thereby, they learn models, which separate a set of data points into classes, maximizing the distance between each data point and the classification curve. The same method can be used for regression, particularly if the data provided contains strong variances |
| Artificial neural networks | A neural network usually consists of a number of layers, each containing a defined number of so-called perceptrons. The perceptrons of each layer are interconnected. During the supervised training of the network, these connections’ weights are adapted to recreate the desired output on the last layer |
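As an illustration of the locally weighted linear regression listed in the table, the following sketch handles the univariate case. It assumes a Gaussian kernel and a user-chosen `bandwidth`; both are assumptions made for this example, and the kernel actually used in µ-ProPlAn is not stated in the text.

```python
import math


def lwl_predict(xs, ys, x0, bandwidth):
    """Predict y at x0 by a weighted least-squares line fitted around x0."""
    # Gaussian kernel: points close to x0 receive weights near 1.
    w = [math.exp(-0.5 * ((x - x0) / bandwidth) ** 2) for x in xs]
    sw = sum(w)
    mx = sum(wi * x for wi, x in zip(w, xs)) / sw
    my = sum(wi * y for wi, y in zip(w, ys)) / sw
    sxx = sum(wi * (x - mx) ** 2 for wi, x in zip(w, xs))
    sxy = sum(wi * (x - mx) * (y - my) for wi, x, y in zip(w, xs, ys))
    # Fall back to the weighted mean if all weight sits on a single x value.
    slope = sxy / sxx if sxx > 0 else 0.0
    return my + slope * (x0 - mx)


# Interpolating between measured points of y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
y_mid = lwl_predict(xs, ys, 1.5, bandwidth=1.0)
```

Because a new line is fitted for every query point, no global function is stored; the model is the data plus the kernel, which is why the method is well suited to interpolation between available samples.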

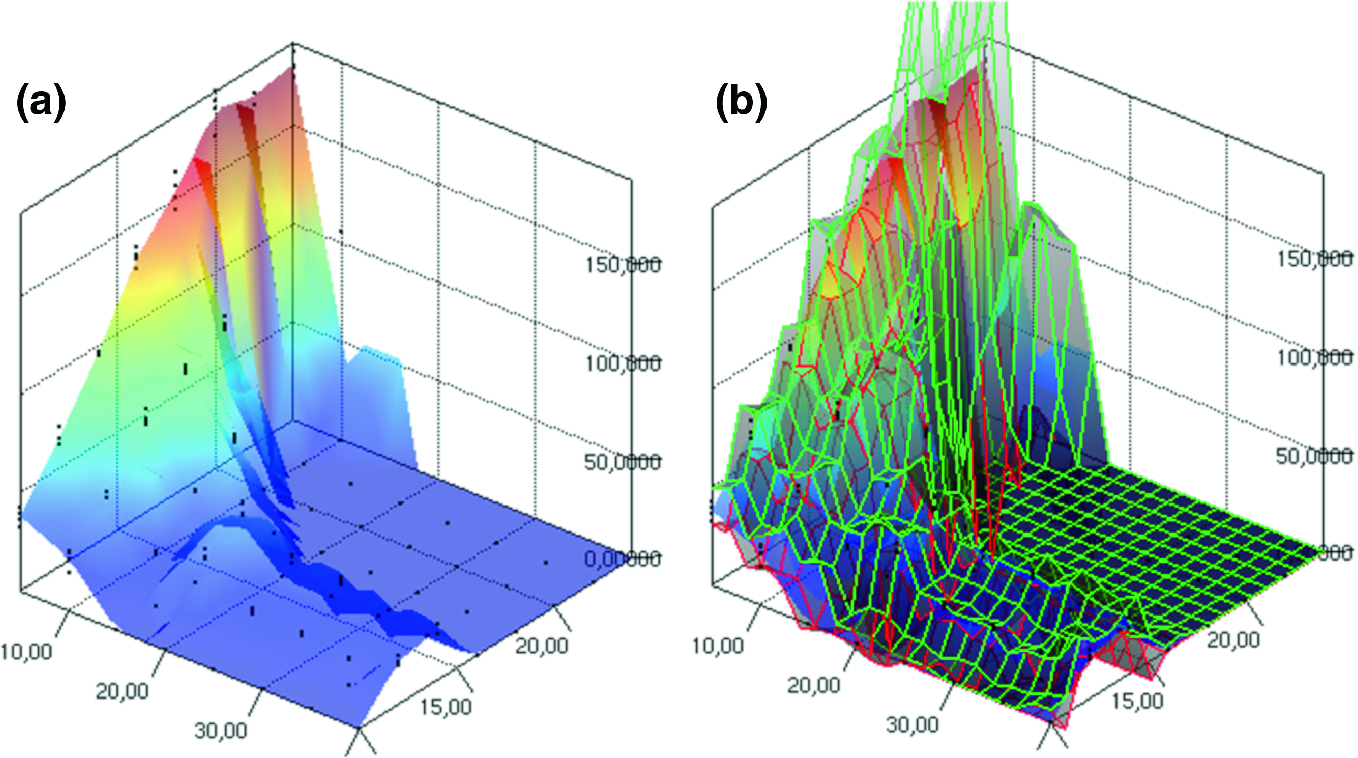

3.3.3.5 Characterization of Local Variances

While the application of the described methods enables the cause–effect networks to predict the estimated (mean) value of a parameter, they do not support the characterization and use of variances. Nevertheless, coping with these variances poses a major task for the configuration of process chains in micro manufacturing, in order to achieve a stable and efficient production. Moreover, the variance of a process can change for different parametrizations, requiring a localized approach. For example, when using a laser-chemical ablation process, the variance can change according to the flow rate of the chemicals. Therefore, µ-ProPlAn provides an estimated distribution as a prediction for a parameter’s value instead of a single mean value. Using these distributions, the process designer can e.g. try to select configurations with less variance, resulting in a more stable process/process chain, or assess the impact of less tolerated materials on the process chain.

Local calculation of the variance of each sampling point

In addition to the mean estimator, the local variance is computed for each point of the available sample data based on the corresponding standard deviation (σ). The method uses a set of experiments, each consisting of a number of independent variables (sampling points/configurations) x = (x1, x2,…) and a corresponding dependent variable (measurement). In the case of repeated measurements, each sampling point x corresponds to several values for the dependent variable, resulting in a vector yx where the number of values |yx| equals the number of measurements.

In a first step, the mean estimator μ(x) over all experiments is acquired using regression or learning techniques as described before. In a second step, the approach first determines a localized value for the standard deviation at each given point of the sampling data and then interpolates this information to enable a prediction of the variance for arbitrary points/configurations (σ(x)). The localized standard deviation for each sampling point is calculated as usual, whereby the target vector y and the source matrix X of size m × n, with n = |y| and m = |x|, are specifically constructed using a newly introduced parameter t to control the degree of locality. In general, the algorithm only tries to incorporate measurements for the single sampling point/configuration x. In cases where the number of repeated measurements yx for x is insufficient (|yx| < t), the algorithm incorporates additional sampling points into the calculation. On the one hand, small values for t ensure a very high locality, focusing on single sampling points or at least on very small areas around them. While a strong locality reduces the window size, it can negatively influence the estimation precision, as a precise estimation of the standard deviation requires a sufficient amount of data. On the other hand, a broader window size can usually yield better estimation results, while smoothing local changes in the standard deviation.
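The role of the locality parameter t can be sketched as follows. This is one possible reading of the description, not the published algorithm: it is assumed here that additional sampling points are added in order of Euclidean distance, that residuals are taken with respect to the mean estimator, and that the sample standard deviation (divisor n − 1) is used. The function name `local_std` is hypothetical.

```python
import math


def local_std(samples, mean_fn, t):
    """Local standard deviation per sampling point.

    samples: dict mapping a sampling point (tuple) to its repeated measurements.
    mean_fn: mean estimator, called with a sampling point.
    t:       minimum number of measurements used for each estimate.
    """
    points = list(samples)
    result = {}
    for x in points:
        # The point itself comes first, then its neighbours by distance.
        ordered = sorted(points, key=lambda p: math.dist(p, x))
        residuals = []
        for p in ordered:
            residuals += [y - mean_fn(p) for y in samples[p]]
            if len(residuals) >= t:
                break  # window is large enough
        n = len(residuals)
        var = sum(r * r for r in residuals) / (n - 1) if n > 1 else 0.0
        result[x] = math.sqrt(var)
    return result


# Invented data: the point (1.0,) has only one measurement.
samples = {(0.0,): [1.0, 3.0], (1.0,): [2.0], (5.0,): [10.0, 14.0]}
means = {(0.0,): 2.0, (1.0,): 2.0, (5.0,): 12.0}
sigma = local_std(samples, means.__getitem__, t=2)
```

With t = 2, the points (0.0,) and (5.0,) are estimated from their own measurements, whereas (1.0,) borrows the measurements of its nearest neighbour. Larger values of t widen this window and smooth the estimate.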

In the case of normally distributed values, this approach provides an accurate estimation of the overall standard deviation for each sampling point. Nevertheless, several practical applications require the estimation of skewed distributions. In this case, µ-ProPlAn estimates distinct standard deviations for both sides of the estimated mean value. To this end, each vector of repeated measurements yx is separated into two vectors, one containing all values smaller than the estimated mean value and the other containing all values greater than the estimated mean value for that given sampling point. The only exception is a value that is equal to the estimated mean: if such a measurement is available within the data, it is added to both vectors. Afterwards, the local standard deviation is calculated for each of these vectors as σUi(xi) and σLi(xi). This separation enables the estimation of different standard deviations for both sides of the curve at the cost of precision. Due to the reduction of the available data points, the window size will inevitably increase, resulting in a stronger smoothing for each sampling point. The only option to counteract this would be the inclusion of additional repeated measurements.
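The separation into a lower and an upper vector can be sketched in a few lines. The sketch assumes that each one-sided standard deviation is computed as the root mean squared deviation from the estimated mean; the text does not specify this detail, and the function name `split_std` is hypothetical.

```python
import math


def split_std(values, mean):
    """Return (sigma_lower, sigma_upper) around an estimated mean."""
    # Values equal to the mean are deliberately placed in both vectors.
    lower = [v for v in values if v <= mean]
    upper = [v for v in values if v >= mean]

    def std_about_mean(vs):
        if not vs:
            return 0.0
        return math.sqrt(sum((v - mean) ** 2 for v in vs) / len(vs))

    return std_about_mean(lower), std_about_mean(upper)


# Invented right-skewed measurements with an estimated mean of 3.0.
sigma_l, sigma_u = split_std([1.0, 2.0, 3.0, 3.0, 7.0], mean=3.0)
```

Each side is estimated from fewer values than the full vector, which illustrates why the split costs precision unless more repeated measurements are available.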

Interpolation for arbitrary points and example

Example of application on a three-dimensional problem. a The heat-map colored surface depicts the mean estimator. b Additional green and red grids depict the 95% confidence interval

In general, µ-ProPlAn uses these standard deviations to describe the stability of a process in terms of its variance and its adherence to tolerances. In addition, it includes a technique to generate probability distributions based on these values. If only a single standard deviation was estimated, or if σUi(xi) and σLi(xi) are close together, a normal distribution Ɲ(μ(x), σ(x)²) is generated. If they differ from each other, a Gumbel distribution (also known as extreme-value distribution) is generated. To this end, a large number of sampling points is generated using two normal distributions derived as ƝL(μ(x), σL(x)²) and ƝU(μ(x), σU(x)²).
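The generation of sampling points from the two normal distributions can be sketched as below. The sketch covers only the sampling step and makes two assumptions that are not stated in the text: each side of the mean is chosen with equal probability, and the lower and upper distributions contribute only values below and above the mean, respectively. The function name `sample_two_sided` is hypothetical; a skewed distribution such as the Gumbel distribution could afterwards be fitted to the returned values.

```python
import random


def sample_two_sided(mu, sigma_l, sigma_u, n, seed=0):
    """Draw n values around mu with different spreads below and above it."""
    rng = random.Random(seed)  # seeded for reproducibility
    values = []
    for _ in range(n):
        if rng.random() < 0.5:
            # Lower side: magnitude from N(0, sigma_l^2), mirrored below mu.
            values.append(mu - abs(rng.gauss(0.0, sigma_l)))
        else:
            # Upper side: magnitude from N(0, sigma_u^2), placed above mu.
            values.append(mu + abs(rng.gauss(0.0, sigma_u)))
    return values


points = sample_two_sided(mu=0.0, sigma_l=1.0, sigma_u=3.0, n=10000)
```

With a larger upper standard deviation, the sample mean shifts above μ and the resulting histogram is right-skewed.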

$$ C_{pk} = \min\left[ \frac{USL - \mu}{3\sigma}, \frac{\mu - LSL}{3\sigma} \right] $$
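The capability index in the equation above can be evaluated directly from a predicted mean and standard deviation. The following helper is a minimal sketch; the function name `cpk` and the numerical values are chosen for illustration only.

```python
def cpk(mu, sigma, lsl, usl):
    """Process capability index for a mean mu and standard deviation sigma.

    lsl and usl are the lower and upper specification limits.
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    return min((usl - mu) / (3 * sigma), (mu - lsl) / (3 * sigma))


# Invented example: mean 10.0, sigma 0.5, tolerance band from 8.0 to 13.0.
index = cpk(mu=10.0, sigma=0.5, lsl=8.0, usl=13.0)
```

In this example the lower limit is the closer one, so it determines the index: (10.0 − 8.0) / 1.5 ≈ 1.33.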

3.3.3.6 Simultaneous Engineering Procedure Model

To guide users through the process of model creation, a procedure model accompanies the methodology. This procedure model utilizes concepts of Simultaneous Engineering [Rip14]. In general, “Simultaneous Engineering describes an approach in which the different phases of new product development, from the first basic idea to the moment when the new product finally goes into production, are carried out in parallel.” [Sch02]. By parallelizing the development of the product and of the manufacturing processes, all characteristics and demands regarding the product’s complete life cycle can be taken into account early on. Ignoring such issues can lead to high costs in later stages of the product development or manufacturing [Pul11]. The application of Simultaneous Engineering techniques enables the early determination of these problems, which would usually only emerge during later stages of the production planning [Rei12].

The procedure model comprises three phases: process specification, configuration and analysis. The first two phases in particular should be conducted in parallel to the product development. Whenever the product design is extended or changed, these modifications should be integrated directly into the process chains.

The top-level process chain is designed during the process specification phase. First, the designer aligns all the required manufacturing processes with respect to the product design and defines the process interfaces. In a second step, the designer integrates quality inspection processes in between the manufacturing processes. As a last step of the specification, the designer integrates the required handling processes and operations. Some cases may require complex handling processes (e.g. the separation of single products or samples), while other cases can be satisfied by the integration of handling operations into existing processes. During the process configuration, suitable technologies for each process should be identified, as they determine or at least influence the process interfaces.

After defining the process chain and the corresponding interfaces, the designer configures the processes. Based on the selection of technologies, the designer integrates relevant production parameters into the model and describes the parameters’ interdependencies (cause–effect relations). For each parameter, the designer specifies tolerances, which describe the range of allowed parameter values, in order to guarantee the required output values. In a second step, the parameters are set, thus describing concrete values for all production-relevant parameters in the process chain. By first specifying the tolerances, the designer is able to select resources according to the requirements of the process. Consequently, the process is aligned to the product’s quality requirements.

The last phase of the procedure is the analysis. Partially, it can occur in parallel to the specification of the material flow. Whenever a parameter is set to a specific value, the impact on the complete process chain can be analyzed according to the modeled cause–effect relations. As a result, the impact on later processes can be estimated and, if necessary, the designer can select other technologies or resources and adapt the model accordingly. In cases where no available technology is suitable to satisfy the product’s requirements, modifications to the product design or the development of new technologies can be addressed. Due to the proposed parallel development of the product and the design of the manufacturing process, such incompatibilities can be detected early on in the development process and higher costs can be prevented. In the last step of the analysis, technically feasible process chains can be simulated, using a material flow simulation, to determine their logistic performance and their behavior. The designer can compare different setups or processes and select the best option. In particular, if certain tools or workstations have to be purchased, the simulation can facilitate the decision between options [Sch08].

3.3.3.7 Geometry-Oriented Modelling of Process Chains

The geometry-oriented process chain design aims to provide an alternative way of modeling and designing process chains in µ-ProPlAn. The procedure model described before assumes a manual modeling and evaluation of each alternative chain. The geometry-oriented approach focuses on additional annotations to already modeled operations, in order to select and combine suitable processes. Thereby, each operation is annotated with information on the processes’ capabilities and limitations. In addition, workpieces can be specified by their geometrical features. Using a constraint-based search, suitable processes can be selected, combined and evaluated automatically. For these annotations, additional parameters are introduced to the cause–effect networks. These describe which geometries can be achieved by a process. Basically, µ-ProPlAn is adapted twofold. First, processes need to be annotated with their type (what they do). Second, each process requires the annotation of which geometrical features can be achieved under which circumstances.

The type of a process describes its function according to the standards DIN 8580 and VDI 2860. For manufacturing processes, the type describes if it is a (primary) forming process, a separating or joining process, if it is a coating process or if it just modifies the workpiece characteristics without changing the geometry. For handling processes, the type determines e.g. if it is a positioning or conveying process. Besides the definition of the type (optical, tactile, acoustic), quality inspection processes additionally require information on whether the process is in-line or destructive and about its resolution and measurement uncertainties.

Example of the geometry based annotations and generated process chain alternative

By using these annotations, it becomes possible to apply constraint-based search algorithms to select suitable processes. During the modeling process, the designer specifies the hierarchical order of the base geometries and geometrical features required for the product. The software tool can search through all the stored processes within the model and select those that can achieve each feature. In the example in Fig. 3.22 there is only one alternative to join a sphere to a wire (melting). In contrast, there exist two alternatives to form this sphere into a cone. As the characteristics of each base geometry and each feature are expressed as parameters integrated into the processes’ cause–effect networks, the configuration of each alternative process chain can be performed in the usual way.
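The selection and combination of annotated processes can be sketched as a simple constraint check per feature, followed by a combination of the remaining candidates. The process names, the size limits, and the dictionary layout are invented for this example; only the situation (one joining alternative, two forming alternatives) follows the description of Fig. 3.22.

```python
from itertools import product


def find_chains(features, processes):
    """Return all process chains that can realize the features in order."""
    options = []
    for feat in features:
        fitting = [p["name"] for p in processes
                   if p["feature"] == feat["feature"]
                   and p["min_mm"] <= feat["size_mm"] <= p["max_mm"]]
        if not fitting:
            return []  # no stored process can achieve this feature
        options.append(fitting)
    # Every combination of one fitting process per feature is an alternative.
    return [list(chain) for chain in product(*options)]


# Hypothetical annotations: achievable feature and size range per process.
processes = [
    {"name": "melting", "feature": "sphere on wire", "min_mm": 0.1, "max_mm": 1.0},
    {"name": "forming_a", "feature": "cone", "min_mm": 0.2, "max_mm": 1.0},
    {"name": "forming_b", "feature": "cone", "min_mm": 0.3, "max_mm": 2.0},
    {"name": "forming_c", "feature": "cone", "min_mm": 1.0, "max_mm": 5.0},
]
features = [
    {"feature": "sphere on wire", "size_mm": 0.5},
    {"feature": "cone", "size_mm": 0.5},
]

chains = find_chains(features, processes)
```

The search yields two alternative chains, because `forming_c` violates the size constraint. Each alternative can then be configured through its cause–effect networks in the usual way.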

3.3.3.8 Analysis and Model Optimization

As mentioned before, µ-ProPlAn supports different methods to evaluate the process chain models using simulation-based and model-based evaluations. Technical characteristics (e.g. material or surface properties) or process capabilities can be estimated through the cause–effect networks, while logistic properties and the dynamic behavior of different alternatives can be estimated by transforming and simulating the different alternatives using the integrated discrete-event material flow simulation jasima [Hil12]. Using the respective results, the process designer can adapt the model and perform a manual optimization of different process chains, or compare different manufacturing scenarios. Nevertheless, a manual configuration of the processes can be time-consuming and prone to error. Therefore, µ-ProPlAn supports the configuration by providing methods to identify suitable values for input parameters, given a set of desired output values (e.g. for geometrical features), using meta-heuristics (i.e. a genetic search algorithm) and a pruned depth-first search algorithm. Both methods provide multiple settings, which are able to achieve the desired result within the specified tolerances. Moreover, Gralla et al. proposed a method to invert the cause–effect networks’ prediction models [Gra17] using techniques from the mathematical field of inverse modeling. Their results show that these inverted cause–effect networks, in combination with mathematical optimization techniques, deliver more reliable solutions in shorter times [Gra18].

The proposed methodology supports process designers during all stages, from the manufacturing process design through the process configuration to the evaluation of process chain alternatives. In combination with the software prototype, it can be applied to different topics, e.g. for cost assessments of different configurations [Rip14], for the evaluation of different machining strategies [Rip14a], for the process characterization and configuration [Rip17b], or even as an abstraction for physics-based finite-element simulations to enable fast and precise process planning [Rip18a]. In addition, the methods underlying the cause–effect networks can easily be extended to utilize time-related information, thus enabling a re-training of cause–effect networks to cope with a lack of initial training data [Rip18] or in the context of predictive maintenance [Rip17a].

Acknowledgements The editors and authors of this book would like to thank the Deutsche Forschungsgemeinschaft DFG (German Research Foundation) for the financial support of the SFB 747 “Mikrokaltumformen – Prozesse, Charakterisierung, Optimierung” (Collaborative Research Center “Micro Cold Forming – Processes, Characterization, Optimization”). We would also like to thank our members and project partners of the industrial working group as well as our international research partners for their successful cooperation.

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.