Appendix: Taxonomies of Stupidity and Wisdom

A Taxonomy of Stupidity

Bias blind spot: Our tendency to see others’ flaws, while being oblivious to the prejudices and errors in our own reasoning.

Cognitive miserliness: A tendency to base our decision making on intuition rather than analysis.

Contaminated mindware: An erroneous baseline knowledge that may then lead to further irrational behaviour. Someone who has been brought up to distrust scientific evidence may then be more susceptible to quack medicines and beliefs in the paranormal, for instance.

Dysrationalia: The mismatch between intelligence and rationality, as seen in the life story of Arthur Conan Doyle. This may be caused by cognitive miserliness or contaminated mindware.

Earned dogmatism: The sense that our self-perceived expertise has earned us the right to be closed-minded and to ignore other points of view.

Entrenchment: The process by which an expert’s ideas become rigid and fixed.

Fachidiot: Professional idiot. A German term to describe a one-track specialist who is an expert in their field but takes a blinkered approach to a multifaceted problem.

Fixed mindset: The belief that intelligence and talent are innate, and that exerting effort is a sign of weakness. Besides limiting our ability to learn, this attitude also seems to make us generally more closed-minded and intellectually arrogant.

Functional stupidity: A general reluctance to self-reflect, question our assumptions, and reason about the consequences of our actions. Although this may increase productivity in the short term (making it ‘functional’), it reduces creativity and critical thinking in the long term.

‘Hot’ cognition: Reactive, emotionally charged thinking that may give full rein to our biases. Potentially one source of Solomon’s paradox (see below).

Meta-forgetfulness: A form of intellectual arrogance. We fail to keep track of how much we know and how much we have forgotten; we assume that our current knowledge is the same as our peak knowledge. This is common among university graduates; years down the line, they believe that they understand the issues as well as they did when they took their final exams.

Mindlessness: A lack of attention and insight into our actions and the world around us. It is a particular issue in the way children are educated.

Moses illusion: A failure to spot contradictions in a text, due to its fluency and familiarity. For example, when answering the question, ‘How many animals of each kind did Moses take on the Ark?’, most people answer two, failing to notice that it was Noah, not Moses, who built the Ark. This kind of distraction is a common tactic for purveyors of misinformation and fake news.

Motivated reasoning: The unconscious tendency to apply our brainpower only when the conclusions will suit our predetermined goal. It may include the confirmation or myside bias (preferentially seeking and remembering information that suits our goal) and disconfirmation bias (the tendency to be especially sceptical about evidence that does not fit our goal). In politics, for instance, we are far more likely to critique evidence concerning an issue such as climate change if it does not fit with our existing worldview.

Peter principle: We are promoted based on our aptitude at our current job – not on our potential to fill the next role. This means that managers inevitably ‘rise to their level of incompetence’. Lacking the practical intelligence necessary to manage teams, they subsequently underperform. (Named after management theorist Laurence Peter.)

Pseudo-profound bullshit: Seemingly impressive assertions that are presented as true and meaningful but are actually vacuous under further consideration. Like the Moses illusion, we may accept their message due to a general lack of reflection.

Solomon’s paradox: Named after the ancient Israelite king, Solomon’s paradox describes our inability to reason wisely about our own lives, even if we demonstrate good judgement when faced with other people’s problems.

Strategic ignorance: Deliberately avoiding the chance to learn new information to avoid discomfort and to increase our productivity. At work, for instance, it can be beneficial not to question the long-term consequences of your actions, if that knowledge will interfere with the chances of promotion. These choices may be unconscious.

The too-much-talent effect: The unexpected failure of teams once their proportion of ‘star’ players reaches a certain threshold. See, for instance, the England football team in the Euro 2016 tournament.

A Taxonomy of Wisdom

Actively open-minded thinking: The deliberate pursuit of alternative viewpoints and evidence that may question our opinions.

Cognitive inoculation: A strategy to reduce biased reasoning by deliberately exposing ourselves to examples of flawed arguments.

Collective intelligence: A team’s ability to reason as one unit. Although it is very loosely connected to IQ, factors such as the social sensitivity of the team’s members seem to be far more important.

Desirable difficulties: A powerful concept in education: we actually learn better if our initial understanding is made harder, not easier. See also Growth mindset.

Emotional compass: A combination of interoception (sensitivity to bodily signals), emotion differentiation (the capacity to label your feelings in precise detail) and emotion regulation that together help us to avoid cognitive and affective biases.

Epistemic accuracy: Someone is epistemically accurate if their beliefs are supported by reason and factual evidence.

Epistemic curiosity: An inquisitive, interested, questioning attitude; a hunger for information. Not only does curiosity improve learning; the latest research shows that it also protects us from motivated reasoning and bias.

Foreign language effect: The surprising tendency to become more rational when speaking a second language.

Growth mindset: The belief that talents can be developed and trained. Although the early scientific research on mindset focused on its role in academic achievement, it is becoming increasingly clear that it may drive wiser decision making, by contributing to traits such as intellectual humility.

Intellectual humility: The capacity to accept the limits of our judgement and to try to compensate for our fallibility. Scientific research has revealed that this is a critical, but neglected, characteristic that determines much of our decision making and learning, and which may be particularly crucial for team leaders.

Mindfulness: The opposite of mindlessness. Although this can include meditative practice, it refers to a generally reflective and engaged state that avoids reactive, overly emotional responses to events and allows us to note and consider our intuitions more objectively. The term may also refer to an organisation’s risk management strategy (see Chapter 10).

Moral algebra: Benjamin Franklin’s strategy to weigh up the pros and cons of an argument, often over several days. By taking this slow and systematic approach, you may avoid issues such as the availability bias – our tendency to base judgements on the first information that comes to mind – allowing you to come to a wiser long-term solution to your problem.

Pre-mortem: Deliberately considering the worst-case scenario, and all the factors that may have contributed towards it, before making a decision. This is one of the most well-established ‘de-biasing’ strategies.

Reflective competence: The final stage of expertise, when we can pause and analyse our gut feelings, basing our decisions on both intuition and analysis. See also Mindfulness.

Socrates effect: A form of perspective taking, in which we imagine explaining our problem to a young child. The strategy appears to cool ‘hot’ cognition and to reduce biases and motivated reasoning.

Tolerance of ambiguity: A tendency to embrace uncertainty and nuance, rather than seeking immediate closure on the issue at hand.

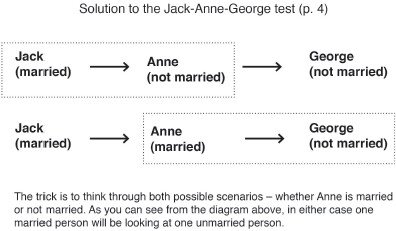

![]()