To see the results, click the generated Status model that was added to the stream automatically after the model was built.

The output window presents several observations about the model. Let's understand them in detail:

- You will see a Model Summary:

The summary shows what our target is and confirms that we ran a multilayer perceptron model. It also tells you why the model stopped: the error could not be decreased any further, which means that the model was no longer improving. The network has one hidden layer, and that hidden layer has seven neurons. We can also see that, on the training dataset, the overall accuracy was about 79%.
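The stopping reason can be pictured with a small sketch. The source does not describe the exact criterion that the software applies, so the rule below (stop at the first step where the error fails to improve by a minimum amount) is an assumption, and `stopping_step`, `min_improvement`, and the error values are hypothetical names and numbers chosen for illustration.

```python
def stopping_step(errors, min_improvement=1e-4):
    """Return the index of the first step whose error does not improve on the
    previous best by at least min_improvement, or None if every step improves."""
    best = float("inf")
    for step, error in enumerate(errors):
        if best - error < min_improvement:
            return step  # the error could not be decreased any further
        best = error
    return None


# Hypothetical training errors, one value per training cycle.
training_errors = [0.90, 0.60, 0.45, 0.41, 0.41, 0.40]
stopped_at = stopping_step(training_errors)
print(f"Training stops at step {stopped_at}")
```

A looser `min_improvement` stops training earlier; a value of zero stops only when the error fails to decrease at all.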

- Click on the next tab on the right side, the Predictor Importance tab:

This chart ranks the predictors by importance, so you can see which ones contribute the most to the predictions. In our case, the Speakers predictor tops the list. To see the importance of more predictors, drag the scale toward the left.
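A ranking like this one can be thought of as a set of relative scores sorted from largest to smallest. The sketch below only illustrates that idea: it does not reproduce how the software computes importance, and apart from Speakers, the predictor names and all of the raw scores are hypothetical.

```python
def rank_importance(scores):
    """Normalize raw importance scores so they sum to 1, then sort descending."""
    total = sum(scores.values())
    normalized = {name: value / total for name, value in scores.items()}
    return sorted(normalized.items(), key=lambda item: item[1], reverse=True)


# Hypothetical raw scores; only the name "Speakers" comes from the text.
raw_scores = {"predictor_b": 0.21, "Speakers": 0.42, "predictor_c": 0.07}
ranked = rank_importance(raw_scores)

for name, share in ranked:
    print(f"{name:12s} {share:.2f}")
```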

- Classification for Status is our next observation:

Here, we can see how accurately we're predicting each of the two groups in the training dataset; notice the overall percent correctly predicted. You can also switch to the cell-counts view from the Style tab at the bottom.
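The relationship between the cell-counts view and the percentages can be sketched in a few lines. The class labels and counts below are made up for illustration and are not taken from the model in this example.

```python
# Hypothetical cell counts: outer keys are observed groups,
# inner keys are predicted groups.
counts = {
    "group_a": {"group_a": 40, "group_b": 10},
    "group_b": {"group_a": 15, "group_b": 35},
}


def percent_correct_by_class(table):
    """Percentage of each observed group that was predicted correctly."""
    return {
        observed: 100.0 * row[observed] / sum(row.values())
        for observed, row in table.items()
    }


def overall_percent_correct(table):
    """Percentage of all records whose prediction matches the observed group."""
    correct = sum(row[observed] for observed, row in table.items())
    total = sum(sum(row.values()) for row in table.values())
    return 100.0 * correct / total


by_class = percent_correct_by_class(counts)
overall = overall_percent_correct(counts)
print(by_class, overall)
```

The overall figure is a weighted average of the per-group figures, so a large group that is predicted well can hide a small group that is predicted poorly.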

- Let's go down a little further and click on the next tab:

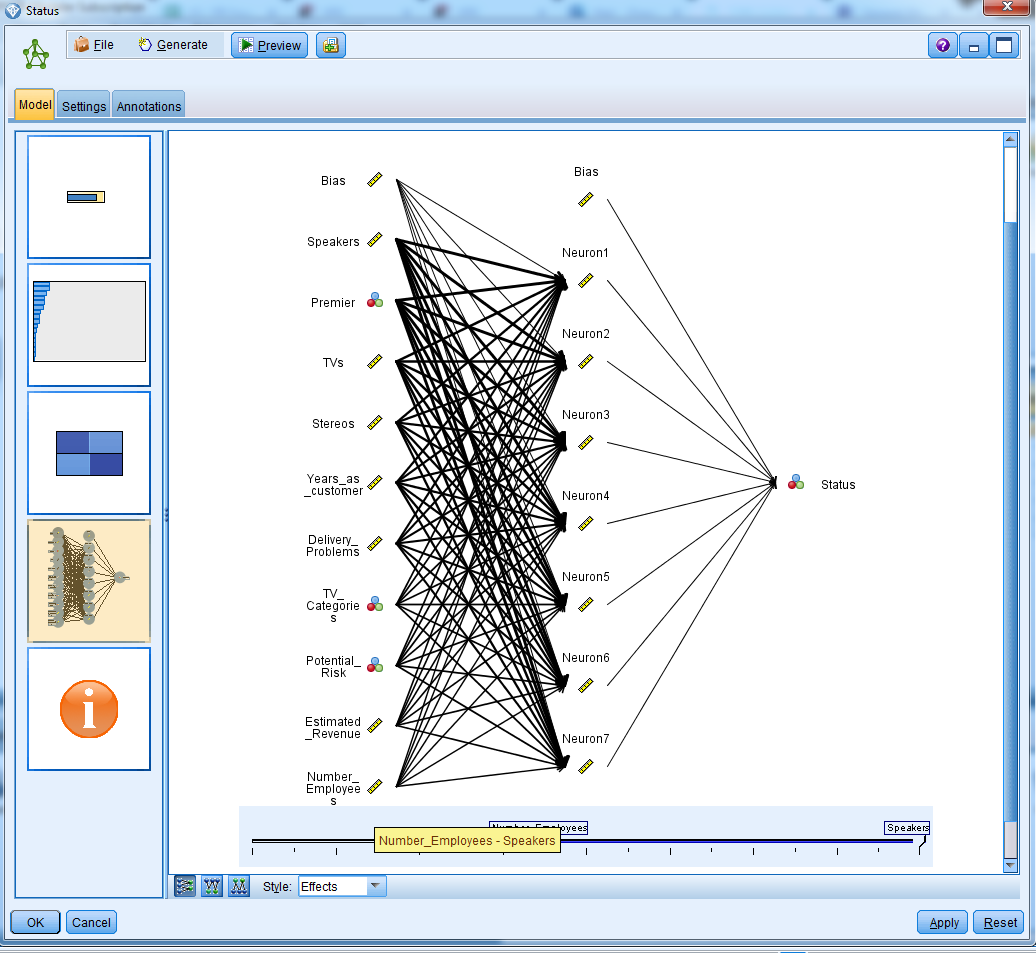

This shows the actual neural net model. We are predicting the Status field, and the diagram confirms what the summary reported: one hidden layer with seven neurons. You can see the connections from each of the predictors to the neurons in the hidden layer.

You can also switch the Style from Effects to Coefficients:

The thicker the line, the more important the predictor is in that equation, and you can even see the coefficients.
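To make the role of the coefficients concrete, here is a minimal sketch of a forward pass through a network with one hidden layer of seven neurons. The number of predictors, the weights, and the activation functions (tanh in the hidden layer, a sigmoid on the output) are assumptions made for illustration; they are not read from the model shown here.

```python
import math
import random


def forward(inputs, hidden_weights, hidden_biases, output_weights, output_bias):
    """One hidden layer with tanh units feeding a single sigmoid output unit."""
    hidden = [
        math.tanh(sum(w * x for w, x in zip(weights, inputs)) + bias)
        for weights, bias in zip(hidden_weights, hidden_biases)
    ]
    z = sum(w * h for w, h in zip(output_weights, hidden)) + output_bias
    return 1.0 / (1.0 + math.exp(-z))


N_PREDICTORS = 3  # hypothetical; the real model has its own predictor count
N_HIDDEN = 7      # seven hidden neurons, as in the model summary

rng = random.Random(0)  # fixed seed so the made-up coefficients are repeatable
hidden_weights = [
    [rng.uniform(-1, 1) for _ in range(N_PREDICTORS)] for _ in range(N_HIDDEN)
]
hidden_biases = [rng.uniform(-1, 1) for _ in range(N_HIDDEN)]
output_weights = [rng.uniform(-1, 1) for _ in range(N_HIDDEN)]
output_bias = rng.uniform(-1, 1)

probability = forward(
    [0.5, -1.2, 0.3], hidden_weights, hidden_biases, output_weights, output_bias
)
print(f"Predicted probability: {probability:.3f}")
```

Each entry in `hidden_weights` corresponds to one line between a predictor and a hidden neuron in the diagram; a coefficient with a larger absolute value has more influence on that neuron's output.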

- Click the final icon to get this information:

This just gives us information in terms of which field was our target and which ones are our predictors.

Close the window. Now we will see information about our training dataset. To do this, connect the generated model to the Table icon:

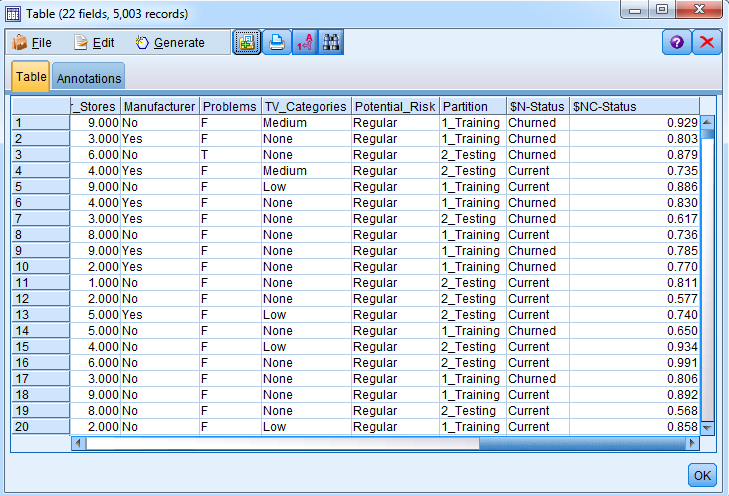

Then run the Table node:

The table contains two new fields: $N-Status, the prediction field, and $NC-Status, the confidence in that prediction. It includes the data for the training dataset as well as the testing dataset.
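One way to think about these two fields is sketched below. It assumes that the prediction is the group with the highest predicted probability and that the confidence is that probability; the source does not state how the confidence is calculated, and the group labels and probabilities are hypothetical.

```python
def score(class_probabilities):
    """Return (predicted group, confidence) from a dict of group probabilities.

    Assumption: confidence is the probability of the predicted group.
    """
    predicted = max(class_probabilities, key=class_probabilities.get)
    return predicted, class_probabilities[predicted]


# Hypothetical per-record probabilities for the two Status groups.
records = [
    {"group_a": 0.82, "group_b": 0.18},
    {"group_a": 0.35, "group_b": 0.65},
]

scored = []
for probabilities in records:
    label, confidence = score(probabilities)
    scored.append({"$N-Status": label, "$NC-Status": confidence})

for row in scored:
    print(row)
```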