9

DevOps Using Docker

By the end of Chapter 8, Designing Authentication and Authorization, we had a fairly sophisticated app. In Chapter 4, Automated Testing, CI, and Release to Production, I emphasized the need to ensure that every code push we create has passing tests, adheres to the coding standards, and is an executable artifact that team members can run tests against as we develop our application. By the end of Chapter 7, Creating a Router-First Line-of-Business App, you should have replicated the same CircleCI setup we implemented for the Local Weather app for LemonMart. If not, before we start building more complicated features for our Line-of-Business (LOB) app, go ahead and do this.

We live in an era of moving fast and breaking things. However, the latter part of that statement rarely works in an enterprise. You can choose to live on the edge and adopt the YOLO lifestyle, but this doesn't make good business sense.

Figure 9.1: A creative CLI option for a tool

Continuous Integration (CI) is critical to ensuring a quality deliverable by building and executing tests on every code push. Setting up a CI environment can be time-consuming and requires specialized knowledge of the tool being used. In Chapter 4, Automated Testing, CI, and Release to Production, we implemented GitHub flow with CircleCI integration. However, we manually deployed our app. To move fast without breaking things, we need to implement Continuous Deployment (CD) using DevOps best practices such as Infrastructure-as-Code (IaC), so we can verify the correctness of our running code more often.

In this chapter, we will go over a Docker-based approach to implement IaC that can be run on most CI services and cloud providers, allowing you to achieve repeatable builds and deployments from any CI environment to any cloud provider. Working with flexible tools, you will avoid overspecializing in one service and keep your configuration management skills relevant across different CI services.

This book leverages CircleCI as the CI server. Other notable CI servers are Jenkins, Azure DevOps, and the built-in mechanisms within GitLab and GitHub.

In this chapter, you will learn the following:

- DevOps and IaC

- Containerizing web apps using Docker

- Deploying containerized apps using Google Cloud Run

- CD to multiple cloud providers

- Advanced CI

- Code coverage reports

The following software is required to follow along with this chapter:

- Docker Desktop Community version 2+

- Docker Engine CE version 18+

- A Google Cloud Platform account

- A Coveralls account

The most up-to-date versions of the sample code for the book are on GitHub at the repository linked in the following list. The repository contains the final and completed version of the code. Each section contains information boxes to help direct you to the correct filename or branch on GitHub so that you can use them to verify your progress.

For the Chapter 9 examples based on local-weather-app, do the following:

- Clone the repo at https://github.com/duluca/local-weather-app.

- Execute npm install on the root folder to install dependencies.

- Use .circleci/config.ch9.yml to verify your config.yml implementation.

- To run the CircleCI Vercel Now configuration, execute git checkout deploy_Vercelnow. Refer to the pull request at https://github.com/duluca/local-weather-app/pull/50.

- To run the CircleCI GCloud configuration, execute git checkout deploy_cloudrun. Refer to the pull request at https://github.com/duluca/local-weather-app/pull/51.

Note that both branches leverage modified code to use the projects/ch6 code from the local-weather-app repo.

For the Chapter 9 examples based on lemon-mart, do the following:

- Clone the repo at https://github.com/duluca/lemon-mart.

- Use .circleci/config.ch9.yml and config.docker-integration.yml to verify your config.yml implementation.

- Execute npm install on the root folder to install dependencies.

- To run the CircleCI Docker integration configuration, execute git checkout docker-integration. Refer to the pull request at https://github.com/duluca/lemon-mart/pull/25.

Note that the docker-integration branch is slightly modified to use code from the projects/ch8 folder on the lemon-mart repo.

Beware that there may be slight differences in implementation between the code in the book and what's on GitHub because the ecosystem is ever-evolving. It is natural for the sample code to change over time. Also, on GitHub, expect to find corrections, fixes to support newer versions of libraries, or side-by-side implementations of multiple techniques for the reader to observe. The reader is only expected to implement the ideal solution recommended in the book. If you find errors or have questions, please create an issue or submit a pull request on GitHub for the benefit of all readers.

You can read more about updating Angular in Appendix C, Keeping Angular and Tools Evergreen. You can find this appendix online at https://static.packt-cdn.com/downloads/9781838648800_Appendix_C_Keeping_Angular_and_Tools_Evergreen.pdf or at https://expertlysimple.io/stay-evergreen.

Let's start by understanding what DevOps is.

DevOps

DevOps is the marriage of development and operations. In development, it is well established that code repositories like Git track every code change. In operations, there has long been a wide variety of techniques to track changes to environments, including scripts and various tools that aim to automate the provisioning of operating systems and servers.

Still, how many times have you heard the saying, "it works on my machine"? Developers often use that line as a joke. Still, it is often the case that software that works perfectly well on a test server ends up running into issues on a production server due to minor differences in configuration.

In Chapter 4, Automated Testing, CI, and Release to Production, we discussed how GitHub flow can enable us to create a value delivery stream. We always branch from master before making a change, require that change to go through our CI pipeline, and, once we're reasonably sure that our code works, merge back to the master branch. See the following diagram:

Figure 9.2: Branching and merging

Remember, your master branch should always be deployable, and you should frequently merge your work to the master branch.

Docker allows us to define the software and the specific configuration parameters that our code depends on in a declarative manner using a special file named a Dockerfile. Similarly, CircleCI allows us to define the configuration of our CI environment in a config.yml file. By storing our configuration in files, we are able to check the files in alongside our code. We can track changes using Git and require them to be verified by our CI pipeline. By storing the definition of our infrastructure in code, we achieve IaC. With IaC, we also achieve repeatable integration, so no matter what environment we run our infrastructure in, we should be able to stand up our full-stack app with a one-line command.

You may remember that in Chapter 1, Introduction to Angular and Its Concepts, we discussed how TypeScript covers the JavaScript Feature Gap. Similar to TypeScript, Docker covers the configuration gap, as demonstrated in the following diagram:

Figure 9.3: Covering the configuration gap

By using Docker, we can be reasonably sure that our code, which worked on our machine during testing, will work exactly the same way when we ship it.

In summary, with DevOps, we bring operations closer to development, where it is cheaper to make changes and resolve issues. So, DevOps is primarily a developer's responsibility, but it is also a way of thinking that the operations team must be willing to support. Let's dive deeper into Docker.

Containerizing web apps using Docker

Docker, which can be found at https://docker.io, is an open platform for developing, shipping, and running applications. Docker combines a lightweight container virtualization platform with workflows and tooling that help manage and deploy applications. The most obvious difference between Virtual Machines (VMs) and Docker containers is that VMs are usually dozens of gigabytes in size and require gigabytes of memory, whereas containers take up megabytes in terms of disk and memory size requirements. Furthermore, the Docker platform abstracts away host operating system (OS)-level configuration settings, so every piece of configuration that is needed to successfully run an application is encoded within a human-readable format.

Anatomy of a Dockerfile

A Dockerfile consists of four main parts:

- FROM – where we can inherit from Docker's minimal "scratch" image or a pre-existing image

- SETUP – where we configure software dependencies to our requirements

- COPY – where we copy our built code into the operating environment

- CMD – where we specify the commands that will bootstrap the operating environment

Bootstrap refers to a set of initial instructions that describe how a program loads or starts up.

Consider the following visualization of the anatomy of a Dockerfile:

Figure 9.4: Anatomy of a Dockerfile

A concrete representation of a Dockerfile is demonstrated in the following code:

Dockerfile

FROM duluca/minimal-nginx-web-server:1-alpine

COPY /dist/local-weather-app /var/www

CMD 'nginx'

You can map the FROM, COPY, and CMD parts of the script to the visualization. We inherit from the duluca/minimal-nginx-web-server image using the FROM command. Then, we copy the compiled result of our app from our development machine or build environment into the image using the COPY (or, alternatively, the ADD) command. Finally, we instruct the container to execute the nginx web server using the CMD (or, alternatively, the ENTRYPOINT) command.

Note that the preceding Dockerfile doesn't have a distinct SETUP part. SETUP doesn't map to an actual Dockerfile command but represents a collection of commands you can execute to set up your container. In this case, all the necessary setup was done by the base image, so there are no additional commands to run.
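To make the mapping between Dockerfile commands and the four conceptual parts more tangible, here is a minimal TypeScript sketch. The function names and the grouping of RUN, ENV, and EXPOSE under SETUP are assumptions made for illustration; Docker itself has no notion of these parts, and the sketch does not handle multi-line commands.

```typescript
// The four conceptual parts of a Dockerfile described in this section.
type DockerfilePart = "FROM" | "SETUP" | "COPY" | "CMD";

// Assumption: RUN, ENV, and EXPOSE are grouped under SETUP for illustration only.
function partOf(instruction: string): DockerfilePart {
  switch (instruction) {
    case "FROM":
      return "FROM";
    case "RUN":
    case "ENV":
    case "EXPOSE":
      return "SETUP";
    case "COPY":
    case "ADD":
      return "COPY";
    case "CMD":
    case "ENTRYPOINT":
      return "CMD";
    default:
      throw new Error("Unrecognized instruction: " + instruction);
  }
}

// Maps every non-empty, non-comment line of a Dockerfile to its part.
function classifyDockerfile(source: string): DockerfilePart[] {
  const parts: DockerfilePart[] = [];
  source.split("\n").forEach((rawLine) => {
    const line = rawLine.trim();
    if (line === "" || line.charAt(0) === "#") {
      return; // skip blank lines and comments
    }
    // The instruction is everything before the first whitespace character.
    const instruction = line.replace(/\s.*$/, "").toUpperCase();
    parts.push(partOf(instruction));
  });
  return parts;
}

const sample = [
  "FROM duluca/minimal-nginx-web-server:1-alpine",
  "COPY /dist/local-weather-app /var/www",
  "CMD 'nginx'",
].join("\n");

// No SETUP entry appears because the base image already did the setup.
console.log(classifyDockerfile(sample)); // [ 'FROM', 'COPY', 'CMD' ]
```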

Common Dockerfile commands are FROM, COPY, ADD, RUN, CMD, ENTRYPOINT, ENV, and EXPOSE. For the full Dockerfile reference, refer to https://docs.docker.com/engine/reference/builder/.

The Dockerfile describes a new image that inherits from an image named duluca/minimal-nginx-web-server. This is an image that I published on Docker Hub, which inherits from the nginx:alpine image, which itself inherits from the alpine image. The alpine image is a minimal Linux operating environment that is only 5 MB in size. The alpine image itself inherits from scratch, which is an empty image. See the inheritance hierarchy demonstrated in the following diagram:

Figure 9.5: Docker inheritance

The Dockerfile then copies the contents of the dist folder from your development environment into the container's www folder, as shown in the following diagram:

Figure 9.6: Copying code into a containerized web server

In this case, the parent image is configured with an nginx server to act as a web server to serve the content inside the www folder. At this point, our source code is accessible from the internet but lives inside layers of secure environments. Even if our app has a vulnerability of some kind, it would be tough for an attacker to harm the systems we are operating on. The following diagram demonstrates the layers of security that Docker provides:

Figure 9.7: Docker security

In summary, at the base layer we have our host OS, such as Windows or macOS, that runs the Docker runtime, which will be installed in the next section. The Docker runtime is capable of running self-contained Docker images, which are defined by the aforementioned Dockerfile. duluca/minimal-nginx-web-server is based on the lightweight Linux operating system, Alpine. Alpine is a completely pared-down version of Linux that doesn't come with any GUI, drivers, or even most of the CLI tools you may expect from a Linux system. As a result, the OS is only ~5 MB in size. We then inherit from the nginx image, which installs the web server, which itself is around a few megabytes in size. Finally, our custom nginx configuration is layered over the default image, resulting in a tiny ~7 MB image. The nginx server is configured to serve the contents of the /var/www folder. In the Dockerfile, we merely copy the contents of the /dist folder in our development environment and place it into the /var/www folder. We will later build and execute this image, which will run our Nginx web server containing the output of our dist folder. I have published a similar image named duluca/minimal-node-web-server, which clocks in at ~15 MB.

duluca/minimal-node-web-server can be more straightforward to work with, especially if you're not familiar with Nginx. It relies on an Express.js server to serve static content. Most cloud providers provide concrete examples using Node and Express, which can help you narrow down any errors. In addition, duluca/minimal-node-web-server has HTTPS redirection support baked into it. You can spend a lot of time trying to set up an nginx proxy to do the same thing, when all you need to do is set the environment variable ENFORCE_HTTPS in your Dockerfile. See the following sample Dockerfile:

Dockerfile

FROM duluca/minimal-node-web-server:lts-alpine

WORKDIR /usr/src/app

COPY dist/local-weather-app public

ENTRYPOINT [ "npm", "start" ]

ENV ENFORCE_HTTPS=xProto

You can read more about the options minimal-node-web-server provides at https://github.com/duluca/minimal-node-web-server.

As we've now seen, the beauty of Docker is that you can navigate to https://hub.docker.com, search for duluca/minimal-nginx-web-server or duluca/minimal-node-web-server, read its Dockerfile, and trace its origins all the way back to the original base image that is the foundation of the web server. I encourage you to vet every Docker image you use in this manner to understand what exactly it brings to the table for your needs. You may find it either overkill, or that it has features you never knew about that can make your life a lot easier.

Note that the sample Dockerfiles pull specific tags of their parent images: 1-alpine for duluca/minimal-nginx-web-server and lts-alpine for duluca/minimal-node-web-server. These are evergreen base packages that always contain the latest release of version 1 of Nginx and Alpine or an LTS release of Node. I have pipelines set up to automatically update both images when a new base image is published. So, whenever you pull these images, you will get the latest bug fixes and security patches.

Having an evergreen dependency tree removes the burden on you as the developer to go hunting down the latest available version of a Docker image. Alternatively, if you specify a version number, your images will not be subject to any potential breaking changes. However, it is better to remember to test your images after a new build than to never update your image and potentially deploy compromised software. After all, the web is ever-changing and will not slow down for you to keep your images up to date.
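The trade-off between evergreen and version-specific tags can be sketched in TypeScript as follows. The function names are hypothetical, and the rule that a tag beginning with a full major.minor.patch number counts as pinned is a simplifying assumption made for this example, not a Docker convention. The nginx:1.19.2-alpine reference is used purely as an illustration of a version-specific tag.

```typescript
type TagKind = "floating" | "pinned";

// Extracts the tag from an image reference such as "repo/name:tag".
// A colon that appears before the last slash belongs to a registry port.
function tagOf(imageRef: string): string {
  const lastSlash = imageRef.lastIndexOf("/");
  const lastColon = imageRef.lastIndexOf(":");
  return lastColon > lastSlash ? imageRef.slice(lastColon + 1) : "latest";
}

// Assumption: only tags that start with major.minor.patch are treated as pinned.
function classifyTag(tag: string): TagKind {
  return /^v?\d+\.\d+\.\d+/.test(tag) ? "pinned" : "floating";
}

const references = [
  "duluca/minimal-nginx-web-server:1-alpine",
  "duluca/minimal-node-web-server:lts-alpine",
  "nginx:1.19.2-alpine", // illustrative version-specific tag
];

references.forEach((ref) => {
  console.log(ref + " -> " + classifyTag(tagOf(ref)));
});
```

A floating tag picks up new builds on the next pull, which is what makes the base images above evergreen. Keep in mind that any tag can be re-published by its owner; only a digest reference identifies an image immutably.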

Just like npm packages, Docker can bring great convenience and value, but you must take care to understand the tools you are working with.

In Chapter 13, Highly Available Cloud Infrastructure on AWS, we are going to leverage the lower-footprint Docker image based on Nginx, duluca/minimal-nginx-web-server. If you're comfortable configuring nginx, this is the ideal choice.

Installing Docker

In order to be able to build and run containers, you must first install the Docker execution environment on your computer. Refer back to Chapter 2, Setting Up Your Development Environment, for instructions on installing Docker.

Setting up npm scripts for Docker

Now, let's configure some Docker scripts for your Angular apps that you can use to automate the building, testing, and publishing of your container. I have developed a set of scripts called npm scripts for Docker that work on Windows 10 and macOS. You can get the latest version of these scripts and automatically configure them in your project by executing the following code:

- Install the npm scripts for Docker task:

    $ npm i -g mrm-task-npm-docker

- Apply the npm scripts for Docker configuration:

    $ npx mrm npm-docker

After you execute the mrm scripts, we're ready to take a deep dive into the configuration settings using the Local Weather app as an example.

Build and publish an image to Docker Hub

Next, let's make sure that your project is configured correctly so we can containerize it, build an executable image, and publish it to Docker Hub, thereby allowing us to access it from any build environment. For this section, we will use the Local Weather app, which we last updated in Chapter 6, Forms, Observables, and Subjects:

This section uses the local-weather-app repo.

- Sign up for a Docker Hub account on https://hub.docker.com/.

- Create a public (free) repository for your application.

- In package.json, add or update the config property with the following configuration properties:

    package.json
    ...
    "config": {
      "imageRepo": "[namespace]/[repository]",
      "imageName": "custom_app_name",
      "imagePort": "0000",
      "internalContainerPort": "3000"
    },
    ...

  The namespace will be your Docker Hub username. You will define what your repository will be called during creation. An example image repository variable should look like duluca/localcast-weather. The image name is for easy identification of your container while using Docker commands such as docker ps. I will just call mine localcast-weather. The imagePort property will define which port should be used to expose your application from inside the container. Since we use port 5000 for development, pick a different one, like 8080. The internalContainerPort defines the port that your web server is mapped to. For Node servers, this will mostly be port 3000, and for Nginx servers, 80. Refer to the documentation of the base container you're using.

- Let's review the Docker scripts that were added to package.json by the mrm task from earlier. The following snippet is an annotated version of the scripts that explains each function.

  Note that with npm scripts, the pre and post keywords are used to execute helper scripts, respectively, before or after the execution of a given script. Scripts are intentionally broken into smaller pieces to make it easier to read and maintain them.

  The build script is as follows:

  Note that the following cross-conf-env command ensures that the script executes equally well in macOS, Linux, and Windows environments.

    package.json
    ...
    "scripts": {
      ...
      "predocker:build": "npm run build",
      "docker:build": "cross-conf-env docker image build . -t $npm_package_config_imageRepo:$npm_package_version",
      "postdocker:build": "npm run docker:tag",
      ...

  npm run docker:build will build your Angular application in the pre script, then build the Docker image using the docker image build command, and tag the image with a version number in the post script.

  In my project, the pre command builds my Angular application in prod mode and also runs a test to make sure that I have an optimized build with no failing tests.

  My pre command looks like:

    "predocker:build": "npm run build:prod && npm test -- --watch=false"

  The tag script is as follows:

    package.json
    ...
      "docker:tag": "cross-conf-env docker image tag $npm_package_config_imageRepo:$npm_package_version $npm_package_config_imageRepo:latest",
    ...

  npm run docker:tag will tag an already built Docker image using the version number from the version property in package.json and the latest tag.

  The stop script is as follows:

    package.json
    ...
      "docker:stop": "cross-conf-env docker stop $npm_package_config_imageName || true",
    ...

  npm run docker:stop will stop the container if it's currently running, so the run script can execute without errors.

  The run script is as follows:

  Note that the run-s and run-p commands ship with the npm-run-all package to synchronize or parallelize the execution of npm scripts.

    package.json
    ...
      "docker:run": "run-s -c docker:stop docker:runHelper",
      "docker:runHelper": "cross-conf-env docker run -e NODE_ENV=local --rm --name $npm_package_config_imageName -d -p $npm_package_config_imagePort:$npm_package_config_internalContainerPort $npm_package_config_imageRepo",
    ...

  npm run docker:run will stop the container if it is already running, and then run the newly built version of the image using the docker run command. Note that the imagePort property is used as the external port of the Docker image, which is mapped to the internal port of the image that the Node.js server listens to, port 3000.

  The publish script is as follows:

    package.json
    ...
      "predocker:publish": "echo Attention! Ensure `docker login` is correct.",
      "docker:publish": "cross-conf-env docker image push $npm_package_config_imageRepo:$npm_package_version",
      "postdocker:publish": "cross-conf-env docker image push $npm_package_config_imageRepo:latest",
    ...

  npm run docker:publish will publish a built image to the configured repository, in this case, Docker Hub, using the docker image push command. First, the versioned image is published, followed by one tagged with latest in post.

  The taillogs script is as follows:

    package.json
    ...
      "docker:taillogs": "cross-conf-env docker logs -f $npm_package_config_imageName",
    ...

  npm run docker:taillogs will display the internal console logs of a running Docker instance using the docker logs -f command, a very useful tool when debugging your Docker instance.

  The open script is as follows:

    package.json
    ...
      "docker:open": "sleep 2 && cross-conf-env open-cli http://localhost:$npm_package_config_imagePort",
    ...

  npm run docker:open will wait for 2 seconds and then launch the browser with the correct URL for your application using the imagePort property.

  The debug script is as follows:

    package.json
    ...
      "predocker:debug": "run-s docker:build docker:run",
      "docker:debug": "run-s -cs docker:open:win docker:open:mac docker:taillogs"
    },
    ...

  npm run docker:debug will build your image and run an instance of it in pre, open the browser, and then start displaying the internal logs of the container.

- Customize the pre-build script to build your Angular app in production mode and execute unit tests before building the image:

    package.json
    "build": "ng build",
    "build:prod": "ng build --prod",
    "predocker:build": "npm run build:prod && npm test -- --watch=false",

  Note that ng build is provided with the --prod argument, which achieves two things: the size of the app is optimized to be significantly smaller with Ahead-of-Time (AOT) compilation to increase runtime performance, and the configuration items defined in src/environments/environment.prod.ts are used.

  We are also modifying how npm test is executed, so the tests are run only once and the tool stops executing. The --watch=false option is provided to achieve this behavior, as opposed to the development-friendly default continuous execution behavior.

- Update src/environments/environment.prod.ts as follows, using your own appId from OpenWeather:

    export const environment = {
      production: true,
      appId: '01ff1xxxxxxxxxxxxxxxxxxxxx',
      username: 'localcast',
      baseUrl: 'https://',
      geonamesApi: 'secure',
    }

- Create a new file named Dockerfile with no file extensions in the project root.

- Implement or replace the contents of the Dockerfile, as shown here:

    Dockerfile
    FROM duluca/minimal-node-web-server:lts-alpine
    WORKDIR /usr/src/app
    COPY dist/local-weather-app public

  Be sure to inspect the contents of your dist folder to ensure you're copying the correct folder, which contains the index.html file at its root.

- Execute npm run predocker:build and make sure it runs without errors in the Terminal to ensure that your application changes have been successful.

- Execute npm run docker:build and make sure it runs without errors in the Terminal to ensure that your image builds successfully.

  While you can run any of the provided scripts individually, you really only need to remember two of them going forward:

  - npm run docker:debug will test, build, tag, run, tail, and launch your containerized app in a new browser window for testing.

  - npm run docker:publish will publish the image you just built and tested to the online Docker repository.

- Execute docker:debug in your Terminal:

    $ npm run docker:debug

  A successful docker:debug run should result in a new in-focus browser window with your application and the server logs being tailed in the Terminal, as follows:

    Current Environment: local.
    Server listening on port 3000 inside the container
    Attention: To access server, use http://localhost:EXTERNAL_PORT
    EXTERNAL_PORT is specified with 'docker run -p EXTERNAL_PORT:3000'. See 'package.json->imagePort' for the default port.
    GET / 304 2.194 ms - -
    GET /runtime-es2015.js 304 0.371 ms - -
    GET /polyfills-es2015.js 304 0.359 ms - -
    GET /styles-es2015.js 304 0.839 ms - -
    GET /vendor-es2015.js 304 0.789 ms - -
    GET /main-es2015.js 304 0.331 ms - -

  You should always run docker ps to check whether your image is running, when it was last updated, and whether it is clashing with any existing images claiming the same port.

- Execute docker:publish in your Terminal:

    $ npm run docker:publish

  You should observe a successful run in the Terminal window like this:

    The push refers to a repository [docker.io/duluca/localcast-weather]
    60f66aaaaa50: Pushed
    ...
    latest: digest: sha256:b680970d76769cf12cc48f37391d8a542fe226b66d9a6f8a7ac81ad77be4f58b size: 2827
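To see how the pieces of the npm scripts for Docker fit together, the following TypeScript sketch approximates two behaviors described in the steps above: the pre and post ordering that npm applies to a script, and the substitution of $npm_package_* variables that cross-conf-env makes work across platforms. This is a simplified model rather than how npm or cross-conf-env are implemented. The helper names and the 1.0.0 version number are assumptions for illustration; the config values are the examples used in this section.

```typescript
// Minimal shape of the package.json fields used by the Docker scripts.
interface PackageJson {
  version: string;
  config: { [key: string]: string };
  scripts: { [scriptName: string]: string };
}

// npm runs "pre<name>" before and "post<name>" after a script, when they exist.
function lifecycle(pkg: PackageJson, scriptName: string): string[] {
  return ["pre" + scriptName, scriptName, "post" + scriptName].filter(
    (candidate) => pkg.scripts[candidate] !== undefined
  );
}

// Substitutes $npm_package_config_* and $npm_package_version references.
// Unknown config keys are left untouched so that mistakes stay visible.
function expand(command: string, pkg: PackageJson): string {
  return command
    .replace(/\$npm_package_config_(\w+)/g, (match: string, key: string) => {
      const value = pkg.config[key];
      return value !== undefined ? value : match;
    })
    .replace(/\$npm_package_version/g, () => pkg.version);
}

const buildScript =
  "cross-conf-env docker image build . -t $npm_package_config_imageRepo:$npm_package_version";
const runHelperScript =
  "cross-conf-env docker run -e NODE_ENV=local --rm --name $npm_package_config_imageName -d " +
  "-p $npm_package_config_imagePort:$npm_package_config_internalContainerPort $npm_package_config_imageRepo";

const pkg: PackageJson = {
  version: "1.0.0", // hypothetical version number
  config: {
    imageRepo: "duluca/localcast-weather",
    imageName: "localcast-weather",
    imagePort: "8080",
    internalContainerPort: "3000",
  },
  scripts: {
    "predocker:build": "npm run build",
    "docker:build": buildScript,
    "postdocker:build": "npm run docker:tag",
    "docker:runHelper": runHelperScript,
  },
};

// Order in which npm executes the scripts for `npm run docker:build`.
console.log(lifecycle(pkg, "docker:build"));
// The image is tagged with the repository and the package version.
console.log(expand(buildScript, pkg));
// The external port 8080 is mapped to port 3000 inside the container.
console.log(expand(runHelperScript, pkg));
```

Reading the expanded docker run command makes the port settings easier to follow: the value before the colon in -p 8080:3000 is imagePort, the port you browse to on your machine, and the value after it is internalContainerPort, the port the web server listens on inside the container.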

Over time, your local Docker cache may grow to a significant size; for example, on my laptop, it's reached roughly 40 GB over two years. You can use the docker image prune and docker container prune commands to reduce the size of your cache. For more detailed information, refer to the documentation at https://docs.docker.com/config/pruning.

By defining a Dockerfile and scripting our use of it, we created living documentation in our code base. We have achieved DevOps and closed the configuration gap.

Make sure to containerize lemon-mart in the same way you've done with local-weather-app and verify your work by executing npm run docker:debug.

You may find it confusing to interact with npm scripts in general through the CLI. Let's look at VS Code's npm script support next.

NPM scripts in VS Code

VS Code provides support for npm scripts out of the box. In order to enable npm script explorer, open VS Code settings and ensure that the "npm.enableScriptExplorer": true property is present. Once you do, you will see an expandable title named NPM SCRIPTS in the Explorer pane, as highlighted with an arrow in the following screenshot:

Figure 9.8: NPM scripts in VS Code

You can click on any script to jump to the line that contains the script in package.json, or right-click and select Run to execute the script.

Let's look at an easier way to interact with Docker next.

Docker extensions in VS Code

Another way to interact with Docker images and containers is through VS Code. If you have installed the ms-azuretools.vscode-docker Docker extension from Microsoft, as suggested in Chapter 2, Setting Up Your Development Environment, you can identify the extension by the Docker logo on the left-hand navigation menu in VS Code, as circled in white in the following screenshot:

Figure 9.9: Docker extension in VS Code

Let's go through some of the functionality provided by the extension. Refer to the preceding screenshot and the numbered steps in the following list for a quick explanation:

- Images contain a list of all the container snapshots that exist on your system.

- Right-clicking on a Docker image brings up a context menu to run various operations on it, like Run, Push, and Tag.

- Containers list all executable Docker containers that exist on your system, which you can start, stop, or attach to.

- Registries display the registries that you're configured to connect to, such as Docker Hub or AWS Elastic Container Registry (AWS ECR).

While the extension makes it easier to interact with Docker, the npm scripts for Docker (which you configured using the mrm task) automate a lot of the chores related to building, tagging, and testing an image. They are both cross-platform and will work equally well in a CI environment.

The npm run docker:debug script automates a lot of chores to verify that you have a good image build!

Now let's see how we can deploy our containers to the cloud and later achieve CD.

Deploying a Dockerfile to the cloud

One of the advantages of using Docker is that we can deploy our containers to any number of operating environments, from personal PCs to servers and cloud providers. In any case, we would expect our container to function the same way. Let's deploy the LocalCast Weather app to Google Cloud Run.

Google Cloud Run

Google Cloud Run allows you to deploy arbitrary Docker containers and execute them on the Google Cloud Platform without any onerous overhead. Fully managed instances offer some free time; however, there's no free-forever version here. Please be mindful of any costs you may incur. Refer to https://cloud.google.com/run/pricing?hl=en_US for pricing.

Refer to Chapter 2, Setting Up Your Development Environment, for instructions on how to install gcloud.

This section uses the local-weather-app repo.

Let's configure gcloud so we can deploy a Dockerfile:

- Update your Dockerfile to override the ENTRYPOINT command:

    Dockerfile
    FROM duluca/minimal-node-web-server:lts-alpine
    WORKDIR /usr/src/app
    COPY dist/local-weather-app public
    ENTRYPOINT [ "npm", "start" ]

- Create a new gcloud project:

    $ gcloud projects create localcast-weather

  Remember to use your own project name!

- Navigate to https://console.cloud.google.com/.

- Locate your new project and select the Billing option from the sidebar, as shown in the following screenshot:

Figure 9.10: Billing options

- Follow the instructions to set up a billing account.

  If you see it, the Freemium account option will also work. Otherwise, you may choose to take advantage of free trial offers. However, it is a good idea to set a budget alert to be notified if you get charged over a certain amount per month. Find more info at https://cloud.google.com/billing/docs/how-to/modify-project.

- Create a .gcloudignore file and ignore everything but your Dockerfile and dist folder:

    .gcloudignore
    /*
    !Dockerfile
    !dist/

- Add a new npm script to build your Dockerfile in the cloud:

    package.json
    "scripts": {
      "gcloud:build": "gcloud builds submit --tag gcr.io/localcast-weather/localcast-weather --project localcast-weather",
      ...
    }

  Remember to use your own project name!

- Add another npm script to deploy your published container:

    package.json
    "scripts": {
      ...
      "gcloud:deploy": "gcloud run deploy --image gcr.io/localcast-weather/localcast-weather --platform managed --project localcast-weather --region us-east1"
    }

  Note that you should provide the region closest to your geographical location for the best possible experience.

- Build your Dockerfile as follows:

    $ npm run gcloud:build

  Before running this command, remember to build your application for prod. Whatever you have in your dist folder will get deployed.

  Note that on the initial run, you will be prompted to answer questions to configure your account for initial use. Select your account and project name correctly; otherwise, take the default options. The build command may fail during the first run. Sometimes it takes multiple runs for gcloud to warm up and successfully build your container.

- Once your container is published, deploy it using the following command:

    $ npm run gcloud:deploy

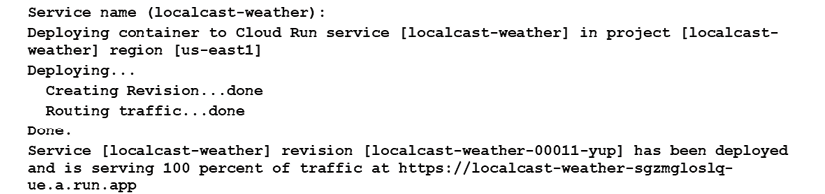

A successful deployment will look like the following:

Figure 9.11: A successful deployment

Congrats, you've just deployed your container on Google Cloud. You should be able to access your app using the URL in the Terminal output.
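If you are wondering why only the Dockerfile and the dist folder were uploaded, the three rules in .gcloudignore follow gitignore-style semantics, where the last matching rule wins and a leading ! re-includes an entry. The TypeScript sketch below is a deliberately simplified model that only evaluates top-level entries against the rule forms used in this chapter; it is not how gcloud is implemented, and the type and function names are assumptions for illustration.

```typescript
// A file or folder located directly in the project root.
interface Entry {
  entryName: string;
  isDirectory: boolean;
}

// Returns true when a top-level entry would be left out of the upload.
// Supported rule forms (simplified): "/*", "name", "name/", each optionally
// prefixed with "!" to negate. Later rules override earlier ones.
function isIgnored(entry: Entry, rules: string[]): boolean {
  let ignored = false;
  rules.forEach((rawRule) => {
    const negated = rawRule.charAt(0) === "!";
    let pattern = negated ? rawRule.slice(1) : rawRule;
    // A trailing slash restricts the rule to directories.
    const directoryOnly = pattern.charAt(pattern.length - 1) === "/";
    if (directoryOnly) {
      pattern = pattern.slice(0, -1);
    }
    // A leading slash anchors the rule to the project root.
    if (pattern.charAt(0) === "/") {
      pattern = pattern.slice(1);
    }
    const nameMatches = pattern === "*" || pattern === entry.entryName;
    if (nameMatches && (!directoryOnly || entry.isDirectory)) {
      ignored = !negated;
    }
  });
  return ignored;
}

const rules = ["/*", "!Dockerfile", "!dist/"];

const entries: Entry[] = [
  { entryName: "Dockerfile", isDirectory: false },
  { entryName: "dist", isDirectory: true },
  { entryName: "src", isDirectory: true },
  { entryName: "package.json", isDirectory: false },
];

entries.forEach((entry) => {
  const verdict = isIgnored(entry, rules) ? "ignored" : "uploaded";
  console.log(entry.entryName + ": " + verdict);
});
```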

As always, consider adding CLI commands as npm scripts to your project so that you can maintain a living documentation of your scripts. These scripts will also allow you to leverage pre and post scripts in npm, allowing you to automate the building of your application, your container, and the tagging process. So, the next time you need to deploy, you only need to run one command. I encourage you to seek inspiration from the npm scripts for Docker utility we set up earlier to create your own set of scripts for gcloud.
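Because the project name appears several times in the gcloud commands, it is easy to forget to replace one of them with your own. As a starting point for your own scripts, here is a small TypeScript sketch that derives both commands from a single set of values. The interface and function names are hypothetical; the command flags are the same ones used in the gcloud:build and gcloud:deploy scripts in this section.

```typescript
// Values that differ from project to project.
interface CloudRunTarget {
  projectId: string;
  imageName: string;
  region: string;
}

// The two commands used in this section, derived from one target.
interface GcloudCommands {
  build: string;
  deploy: string;
}

function gcloudCommands(target: CloudRunTarget): GcloudCommands {
  // Images live under gcr.io/<project>/<image>.
  const image = `gcr.io/${target.projectId}/${target.imageName}`;
  return {
    build: `gcloud builds submit --tag ${image} --project ${target.projectId}`,
    deploy:
      `gcloud run deploy --image ${image} --platform managed ` +
      `--project ${target.projectId} --region ${target.region}`,
  };
}

// Remember to use your own project name and the region closest to you.
const commands = gcloudCommands({
  projectId: "localcast-weather",
  imageName: "localcast-weather",
  region: "us-east1",
});

console.log(commands.build);
console.log(commands.deploy);
```

Keeping the project, image, and region in one place mirrors what the config block in package.json does for the Docker scripts.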

For more information and some sample projects, refer to https://cloud.google.com/run/docs/quickstarts/prebuilt-deploy and https://cloud.google.com/run/docs/quickstarts/build-and-deploy.

Configuring Docker with Cloud Run

In the previous section, we submitted our Dockerfile and dist folder to gcloud so that it can build our container for us. This is a convenient option that avoids some of the additional configuration steps. However, you can still leverage your Docker-based workflow to build and publish your container.

Let's configure Docker with gcloud:

- Set your default region:

    $ gcloud config set run/region us-east1

- Configure Docker with the gcloud container registry:

    $ gcloud auth configure-docker

- Tag your already built container with a gcloud hostname:

    $ docker tag duluca/localcast-weather:latest gcr.io/localcast-weather/localcast-weather:latest

  For detailed instructions on how to tag your image, refer to https://cloud.google.com/container-registry/docs/pushing-and-pulling.

- Publish your container to gcloud using Docker:

    $ docker push gcr.io/localcast-weather/localcast-weather:latest

- Execute the deploy command:

    $ gcloud run deploy --image gcr.io/localcast-weather/localcast-weather --platform managed --project localcast-weather

  During initial deployment, this command may appear to be stuck. Try again in 15 minutes or so.

- Follow the onscreen instructions to complete your deployment.

- Follow the URL displayed on screen to check that your app has been successfully deployed.
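The tag and push steps above can be wrapped in a pair of shell functions so that the registry hostname, project, and image name are composed in one place. This is a minimal sketch; `gcr_image_ref` and `publish_to_gcr` are hypothetical names, and the second function assumes Docker is installed and `gcloud auth configure-docker` has already been run.

```shell
#!/bin/sh
# Builds a Container Registry reference of the form gcr.io/<project>/<image>:<tag>.
gcr_image_ref() {
  project=$1
  image=$2
  tag=${3:-latest}
  echo "gcr.io/${project}/${image}:${tag}"
}

# Tags a local image with its gcr.io name and pushes it.
# Arguments: <local image> <project> <image> [tag]
publish_to_gcr() {
  local_image=$1
  target=$(gcr_image_ref "$2" "$3" "${4:-latest}")
  docker tag "$local_image" "$target" && docker push "$target"
}
```

For example, `publish_to_gcr duluca/localcast-weather:latest localcast-weather localcast-weather` runs the same tag and push commands shown in the steps.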

The preceding steps demonstrate a deployment technique that is similar to the one we will leverage when deploying to AWS ECS in Chapter 13, Highly Available Cloud Infrastructure on AWS.

For more information, refer to https://cloud.google.com/sdk/gcloud/reference/run/deploy. For the following few sections, we will be switching back to LemonMart.

Troubleshooting Cloud Run

To troubleshoot your gcloud commands, you can use the Google Cloud Platform Console at https://console.cloud.google.com/.

Under the Cloud Run menu, you can keep track of the containers you are running. If errors occur during your deployment, you may want to check the logs to see the messages created by your container. Refer to the following screenshot, which shows the logs from my localcast-weather deployment:

Figure 9.12: Cloud Run logs

To learn more about troubleshooting Cloud Run, refer to https://cloud.google.com/run/docs/troubleshooting.

Congratulations! You have mastered the fundamentals of working with Docker containers in your local development environment and pushing them to multiple registries and runtime environments in the cloud.

Continuous deployment

CD is the idea that code changes that successfully pass through your pipeline can be automatically deployed to a target environment. Although there are examples of continuously deploying to production, most enterprises prefer to target a dev environment. A gated approach is adopted to move the changes through the various stages of dev, test, staging, and finally production. CircleCI can facilitate gated deployment with approval workflows, which is covered later in this section.

In CircleCI, to deploy your image, we need to implement a deploy job. In this job, you can deploy to a multitude of targets such as Google Cloud Run, Docker Hub, Heroku, Azure, or AWS ECS. Integration with these targets will involve multiple steps. At a high level, these steps are as follows:

- Configure an orb for your target environment, which provides the CLI tools required to deploy your software.

- Store login credentials or access keys specific to the target environment as CircleCI environment variables.

- Build a container in the CI pipeline, if not using a platform-specific build command. Then use docker push to submit the resulting Docker image to the target platform's Docker registry.

- Execute a platform-specific deploy command to instruct the target to run the Docker image that was just pushed.

By using a Docker-based workflow, we achieve great amounts of flexibility in terms of systems and target environments we can use. The following diagram illustrates this point by highlighting the possible permutation of choices that are available to us:

Figure 9.13: n-to-n deployment

As you can see, in a containerized world, the possibilities are limitless. I will demonstrate how you can deploy to Google Cloud Run using containers and CI later in this chapter. Outside of Docker-based workflows, you can use purpose-built CLI tools to quickly deploy your app. Next, let's see how you can deploy your app to Vercel Now using CircleCI.

Deploying to Vercel Now using CircleCI

In Chapter 4, Automated Testing, CI, and Release to Production, we configured the LocalCast Weather app to build using CircleCI. We can enhance our CI pipeline to take the build output and optionally deploy it to Vercel Now.

Note that ZEIT Now rebranded to Vercel Now in 2020.

This section uses the local-weather-app repo. The config.yml file for this section is named .circleci/config.ch9.yml. You can also find a pull request that executes the .yml file from this chapter on CircleCI at https://github.com/duluca/local-weather-app/pull/50 using the branch deploy_Vercelnow.

Note that this branch has a modified configuration in config.yml and Dockerfile to use the projects/ch6 code from local-weather-app.

Let's update the config.yml file to add a new job named deploy. In the upcoming Workflows section, we will use this job to deploy a pipeline when approved:

- Create a token from your Vercel Now account.

- Add an environment variable to your CircleCI project named NOW_TOKEN and store your Vercel Now token as the value.

- In config.yml, update the build job with the new steps and add a new job named deploy:

    .circleci/config.yml
    ...
    jobs:
      build:
        ...
          - run:
              name: Move compiled app to workspace
              command: |
                set -exu
                mkdir -p /tmp/workspace/dist
                mv dist/local-weather-app /tmp/workspace/dist/
          - persist_to_workspace:
              root: /tmp/workspace
              paths:
                - dist/local-weather-app
      deploy:
        docker:
          - image: circleci/node:lts
        working_directory: ~/repo
        steps:
          - attach_workspace:
              at: /tmp/workspace
          - run: npx now --token $NOW_TOKEN --platform-version 2 --prod /tmp/workspace/dist/local-weather-app --confirm

  In the build job, after the build is complete, we add two new steps. First, we move the compiled app that's in the dist folder to a workspace and persist that workspace so we can use it later in another job. In a new job, named deploy, we attach the workspace and use npx to run the now command to deploy the dist folder. This is a straightforward process.

  Note that $NOW_TOKEN is the environment variable we stored on the CircleCI project.

- Implement a simple CircleCI workflow to continuously deploy the outcome of your build job:

    .circleci/config.yml
    ...
    workflows:
      version: 2
      build-test-and-deploy:
        jobs:
          - build
          - deploy:
              requires:
                - build

  Note that the deploy job waits for the build job to complete before it can execute.

- Ensure that your CI pipeline executed successfully by inspecting the test results:

Figure 9.14: Successful Vercel Now deployment of local-weather-app on the deploy_Vercelnow branch

Most CLI commands for cloud providers need to be installed in your pipeline to function. Since Vercel Now has an npm package, this is easy to do. CLI tools for AWS, Google Cloud, or Microsoft Azure need to be installed using tools such as brew or choco. Doing this manually in a CI environment is tedious. Next, we will cover orbs, which help solve this problem.

Deploying to GCloud using orbs

Orbs contain a set of configuration elements to encapsulate shareable behavior between CircleCI projects. CircleCI provides orbs that are developed by the maintainers of CLI tools. These orbs make it easy for you to add a CLI tool to your pipeline without having to set it up manually, with minimal configuration.

To work with orbs, your config.yml version number must be set to 2.1 and, in your CircleCI security settings, you must select the option to allow uncertified orbs.

The following are some orbs that you can use in your projects:

- circleci/aws-cli and circleci/aws-ecr provide you with the AWS CLI tool and help you to interact with AWS Elastic Container Registry (AWS ECR), performing tasks such as pushing your containers to AWS ECR.

- circleci/aws-ecs streamlines your CircleCI config to deploy your containers to AWS ECS.

- circleci/gcp-cli and circleci/gcp-gcr provide you with the GCloud CLI tool and access to Google Container Registry (GCR).

- circleci/gcp-cloud-run streamlines your CircleCI config to deploy your containers to Cloud Run.

- circleci/azure-cli and circleci/azure-acr provide you with the Azure CLI tools and access to Azure Container Registry (ACR).

Check out the Orb registry for more information on how to use these orbs at https://circleci.com/orbs/registry.

Now, let's configure the circleci/gcp-cloud-run orb with the Local Weather app so we can continuously deploy our app to GCloud, without having to manually install and configure the gcloud CLI tool on our CI server.

On the local-weather-app repo, you can find a pull request that executes the Cloud Run configuration from this step on CircleCI, at https://github.com/duluca/local-weather-app/pull/51, using the deploy_cloudrun branch.

Note that this branch has a modified configuration in config.yml and Dockerfile to use the projects/ch6 code from local-weather-app.

First, configure your CircleCI and GCloud accounts so you can deploy from a CI server. This is markedly different from deploying from your development machine, because the gcloud CLI tools automatically set up the necessary authentication configuration for you. Here, you will have to do this manually:

- In your CircleCI account settings, under the security section, ensure you allow execution of uncertified/unsigned orbs.

- In the CircleCI project settings, add an environment variable named GOOGLE_PROJECT_ID.

  If you used the same project ID as I did, this should be localcast-weather.

- Create a GCloud service account key for your project's existing service account.

  Creating a service account key will result in a JSON file. Do not check this file into your code repository. Do not share the contents of it over insecure communication channels such as email or SMS. Exposing the contents of this file means that any third party can access the GCloud resources that the key's permissions allow.

- Copy the contents of the JSON file to a CircleCI environment variable named GCLOUD_SERVICE_KEY.

- Add another environment variable named GOOGLE_COMPUTE_ZONE and set it to your preferred zone.

  I used us-east1.

- Update your config.yml file to add an orb named circleci/gcp-cloud-run:

    .circleci/config.yml
    version: 2.1
    orbs:
      cloudrun: circleci/gcp-cloud-run@1.0.2
    ...

- Next, implement a new job named deploy_cloudrun, leveraging orb features to initialize, build, deploy, and test our deployment:

    .circleci/config.yml
    ...
    deploy_cloudrun:
      docker:
        - image: 'cimg/base:stable'
      working_directory: ~/repo
      steps:
        - attach_workspace:
            at: /tmp/workspace
        - checkout
        - run:
            name: Copy built app to dist folder
            command: cp -avR /tmp/workspace/dist/ .
        - cloudrun/init
        - cloudrun/build:
            tag: 'gcr.io/${GOOGLE_PROJECT_ID}/test-${CIRCLE_SHA1}'
            source: ~/repo
        - cloudrun/deploy:
            image: 'gcr.io/${GOOGLE_PROJECT_ID}/test-${CIRCLE_SHA1}'
            platform: managed
            region: us-east1
            service-name: localcast-weather
            unauthenticated: true
        - run:
            name: Test managed deployed service.
            command: |
              GCP_API_RESULTS=$(curl -s "$GCP_DEPLOY_ENDPOINT")
              if ! echo "$GCP_API_RESULTS" | grep -nwo "LocalCast Weather"; then
                echo "Result is unexpected"
                echo 'Result: '
                curl -s "$GCP_DEPLOY_ENDPOINT"
                exit 1;
              fi

  We first load the dist folder from the build job. We then run cloudrun/init, so that the CLI tool can be initialized. With cloudrun/build, we build the Dockerfile at the root of our project, which automatically stores the result of our build in GCR. Then, cloudrun/deploy deploys the image we just built, taking our code live. In the last command, using the curl tool, we retrieve the index.html file of our website and check to see that it's properly deployed by searching for the LocalCast Weather string.

- Update your workflow to continuously deploy to gcloud:

    .circleci/config.yml
    ...
    workflows:
      version: 2
      build-test-and-deploy:
        jobs:
          - build
          - deploy_cloudrun:
              requires:
                - build

  Note that you can have multiple deploy jobs that simultaneously deploy to multiple targets.

- Ensure that your CI pipeline executed successfully by inspecting the test results:

Figure 9.15: Successful gcloud deployment of local-weather-app on the deploy_cloudrun branch
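The curl check at the end of the deploy_cloudrun job can also be kept as a reusable script, so you can run the same smoke test from your own machine. The following is a sketch under that assumption; `body_contains` and `smoke_test` are hypothetical names, and the URL is whatever your deployment printed.

```shell
#!/bin/sh
# Succeeds when the response body contains the expected marker text.
body_contains() {
  printf '%s' "$1" | grep -qF -- "$2"
}

# Fetches a URL and verifies that the marker text is present.
# Arguments: <url> <marker>
smoke_test() {
  url=$1
  marker=$2
  # -f fails on HTTP errors, -sS stays quiet but still reports failures
  body=$(curl -fsS "$url") || { echo "Request to $url failed" >&2; return 1; }
  if body_contains "$body" "$marker"; then
    echo "OK: found '$marker'"
  else
    echo "Unexpected response from $url" >&2
    return 1
  fi
}
```

For example, `smoke_test "$GCP_DEPLOY_ENDPOINT" "LocalCast Weather"` mirrors the check in the job and returns a non-zero exit code when the page is missing the expected string.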

CD works great for development and testing environments. However, it is usually desirable to have gated deployments, where a person must approve a deployment before it reaches a production environment. Next, let's see how you can implement this with CircleCI.

Gated CI workflows

In CircleCI, you can define a workflow to control how and when your jobs are executed. Consider the following configuration, given the jobs build and deploy:

.circleci/config.yml

    workflows:
      version: 2
      build-and-deploy:
        jobs:
          - build
          - hold:
              type: approval
              requires:
                - build
          - deploy:
              requires:
                - hold

First, the build job gets executed. Then, we introduce a special job named hold with type approval, which requires the build job to be successfully completed. Once this happens, the pipeline is put on hold. If or when a decision-maker approves the hold, then the deploy step can execute. Refer to the following screenshot to see what a hold looks like:

Figure 9.16: A hold in the pipeline

Consider a more sophisticated workflow, shown in the following code snippet, where the build and test steps are broken out into two separate jobs:

    workflows:
      version: 2
      build-test-and-approval-deploy:
        jobs:
          - build
          - test
          - hold:
              type: approval
              requires:
                - build
                - test
              filters:
                branches:
                  only: master
          - deploy:
              requires:
                - hold

In this case, the build and test jobs are executed in parallel. If we're on any branch other than master, this is where the pipeline stops, because the hold job is filtered out and the deploy job depends on it. Once the branch is merged with master, the pipeline is put on hold and a decision-maker has the option to deploy a particular build or not. This type of branch filtering ensures that only code that's been merged to master can be deployed, which is in line with GitHub flow.
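If you want a second line of defense inside the deploy job itself, a small guard script can refuse to continue on the wrong branch. This is a sketch rather than something the workflow requires; the function names are hypothetical, and it relies only on CIRCLE_BRANCH, the branch variable that CircleCI sets for each job.

```shell
#!/bin/sh
# Succeeds only when the given branch matches the deployable branch (default: master).
is_deployable_branch() {
  branch=$1
  deploy_branch=${2:-master}
  [ "$branch" = "$deploy_branch" ]
}

# Intended as the first command of a deploy step; stops the step on other branches.
guard_deploy() {
  if is_deployable_branch "${CIRCLE_BRANCH:-}"; then
    echo "Deploying from ${CIRCLE_BRANCH}"
  else
    echo "Skipping deploy: branch '${CIRCLE_BRANCH:-unknown}' is not deployable" >&2
    return 1
  fi
}
```

The workflow filter remains the primary gate; the guard only protects against a misconfigured or copied workflow.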

Next, we dive deeper into how you can customize Docker to fit your workflow and environments.

Advanced continuous integration

In Chapter 4, Automated Testing, CI, and Release to Production, we covered a basic CircleCI pipeline leveraging default features. Beyond the basic automation of unit test execution, one of the other goals of CI is to enable a consistent and repeatable environment to build, test, and generate deployable artifacts of your application with every code push. Before pushing some code, a developer should have a reasonable expectation that their build will pass; therefore, creating a reliable CI environment that automates commands that developers can also run in their local machines is paramount. To achieve this goal, we will build a custom build pipeline that can run on any OS without configuration or any variation in behavior.

This section uses the lemon-mart repo. Ensure that your project has been properly configured by executing npm run docker:debug as described earlier in the chapter.

Containerizing build environments

In order to ensure a consistent build environment across various OS platforms, developer machines, and CI environments, you may containerize your build environment. Note that there are at least half a dozen common CI tools currently in use. Learning the ins and outs of every tool is an almost impossible task to achieve.

Containerization of your build environment is an advanced concept that goes above and beyond what is currently expected of CI tools. However, containerization is a great way to standardize over 90% of your build infrastructure and can be executed in almost any CI environment. With this approach, the skills you learn and the build configuration you create become far more valuable, because both your knowledge and the tools you create become transferable and reusable.

There are many strategies to containerize your build environment with different levels of granularity and performance expectations. For the purpose of this book, we will focus on reusability and ease of use. Instead of creating a complicated, interdependent set of Docker images that may allow for more efficient fail-first and recovery paths, we will focus on a single and straightforward workflow. Newer versions of Docker have a great feature called multi-stage builds, which allow you to define a multi-image process in an easy-to-read manner and maintain a singular Dockerfile.

At the end of the process, you can extract an optimized container image as your deliverable artifact, shedding the complexity of the images used previously in the process.

As a reminder, your single Dockerfile would look like the following sample:

Dockerfile

FROM duluca/minimal-node-web-server:lts-alpine

WORKDIR /usr/src/app

COPY dist/lemon-mart public

Multi-stage Dockerfiles

Multi-stage builds work by using multiple FROM statements in a single Dockerfile, where each stage can perform a task and make any resources within its instance available to other stages. In a build environment, we can implement various build-related tasks as their own stages, and then copy the end result, such as the dist folder of an Angular build, to the final image, which contains a web server. In this case, we will implement three stages of images:

- Builder: Used to build a production version of your Angular app

- Tester: Used to run unit and e2e tests against headless Chrome instances

- Web server: The final result containing only the optimized production bits

Multi-stage builds require Docker version 17.05 or higher. To read more about multi-stage builds, read the documentation at https://docs.docker.com/develop/develop-images/multistage-build.

As the following diagram shows, the builder will build the application and the tester will execute the tests:

Figure 9.17: Multi-stage Dockerfile

The final image will be built using the outcome of the builder step.

Start by creating a new file to implement the multi-stage configuration, named integration.Dockerfile, at the root of your project.

Builder

The first stage is builder. We need a lightweight build environment that can ensure consistent builds across the board. For this purpose, I've created a sample Alpine-based Node build environment complete with the npm, bash, and Git tools. This minimal container is called duluca/minimal-node-build-env, which is based on node-alpine and can be found on Docker Hub at https://hub.docker.com/r/duluca/minimal-node-build-env. This image is about 10 times smaller than node.

The size of Docker images has a real impact on build times, since the CI server or your team members will spend extra time pulling a larger image. Choose the environment that best fits your needs.

Let's create a builder using a suitable base image:

- Ensure that you have the build:prod command in place in package.json:

    package.json
    "scripts": {
      "build:prod": "ng build --prod",
    }

- Inherit from a Node.js-based build environment, such as node:lts-alpine or duluca/minimal-node-build-env:lts-alpine.

- Implement your environment-specific build script in a new Dockerfile, named integration.Dockerfile, as shown:

    integration.Dockerfile
    FROM duluca/minimal-node-build-env:lts-alpine as builder

    ENV BUILDER_SRC_DIR=/usr/src

    # setup source code directory and copy source code
    WORKDIR $BUILDER_SRC_DIR
    COPY . .

    # install dependencies and build
    RUN npm ci
    RUN npm run style
    RUN npm run lint
    RUN npm run build:prod

CI environments will check out your source code from GitHub and place it in the current directory. So, copying the source code from the current working directory (CWD) using the dot notation should work, as it does in your local development environment. If you run into issues, refer to your CI provider's documentation.

Next, let's see how you can debug your Docker build.

Debugging build environments

Depending on your particular needs, your initial setup of the builder portion of the Dockerfile may be frustrating. To test out new commands or debug errors, you may need to directly interact with the build environment.

To interactively experiment and/or debug within the build environment, execute the following command:

$ docker run -it duluca/minimal-node-build-env:lts-alpine /bin/bash

You can test or debug commands within this temporary environment before baking them into your Dockerfile.
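When you need your project files inside that temporary environment, one option is to mount the current folder into the container. The function below is a sketch of that idea; `debug_build_env` is a hypothetical name, and mounting at /usr/src is an assumption chosen to match the BUILDER_SRC_DIR used in integration.Dockerfile.

```shell
#!/bin/sh
# Opens an interactive shell in the build image with the current folder
# mounted at /usr/src. --rm removes the container when you exit.
# Argument: [image] (defaults to the build environment used in this chapter)
debug_build_env() {
  image=${1:-duluca/minimal-node-build-env:lts-alpine}
  docker run -it --rm \
    -v "$(pwd)":/usr/src \
    -w /usr/src \
    "$image" /bin/bash
}
```

Keep in mind that a node_modules folder installed on your host OS may contain binaries that don't work on Alpine, so you may want to run npm ci again inside the container.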

Tester

The second stage is tester. By default, the Angular CLI generates a test configuration that is geared toward a development environment. This will not work in a CI environment; we must configure Angular to work against a headless browser that can execute without the assistance of a GPU, and we need a containerized environment to execute the tests in.

Angular testing tools are covered in Chapter 4, Automated Testing, CI, and Release to Production.

Configuring a headless browser for Angular

The protractor testing tool officially supports running against Chrome in headless mode. In order to execute Angular tests in a CI environment, you will need to configure your test runner, Karma, to run with a headless Chrome instance:

- Update karma.conf.js to include a new headless browser option:

    karma.conf.js
    ...
    browsers: ['Chrome', 'ChromiumHeadless', 'ChromiumNoSandbox'],
    customLaunchers: {
      ChromiumHeadless: {
        base: 'Chrome',
        flags: [
          '--headless',
          '--disable-gpu',
          // Without a remote debugging port, Google Chrome exits immediately.
          '--remote-debugging-port=9222',
        ],
        debug: true,
      },
      ChromiumNoSandbox: {
        base: 'ChromiumHeadless',
        flags: ['--no-sandbox', '--disable-translate', '--disable-extensions'],
      },
    },

  The ChromiumNoSandbox custom launcher encapsulates all the configuration elements needed for a good default setup.

- Update the protractor configuration to run in headless mode:

    e2e/protractor.conf.js
    ...
    capabilities: {
      browserName: 'chrome',
      chromeOptions: {
        args: [
          '--headless',
          '--disable-gpu',
          '--no-sandbox',
          '--disable-translate',
          '--disable-extensions',
          '--window-size=800,600',
        ],
      },
    },
    ...

  In order to test your application for responsive scenarios, you can use the --window-size option, as shown in the preceding snippet, to change the browser settings.

- Update the package.json scripts to select the new browser option in the production build scenarios:

    package.json
    "scripts": {
      ...
      "test": "ng test lemon-mart --browsers Chrome",
      "test:prod": "npm test -- --browsers ChromiumNoSandbox --watch=false"
      ...
    }

  Note that test:prod doesn't include npm run e2e. e2e tests are integration tests that take longer to execute, so think twice about including them as part of your critical build pipeline. e2e tests will not run on the lightweight testing environment mentioned in the next section, as they require more resources and time to execute.

Now, let's define the containerized testing environment.

Configuring our testing environment

For a lightweight testing environment, we will be leveraging an Alpine-based installation of the Chromium browser:

- Inherit from duluca/minimal-node-chromium:lts-alpine.

- Append the following configuration to integration.Dockerfile:

    integration.Dockerfile
    ...
    FROM duluca/minimal-node-chromium:lts-alpine as tester

    ENV BUILDER_SRC_DIR=/usr/src
    ENV TESTER_SRC_DIR=/usr/src

    WORKDIR $TESTER_SRC_DIR
    COPY --from=builder $BUILDER_SRC_DIR .

    # force update the webdriver, so it runs with latest version of Chrome
    RUN cd ./node_modules/protractor && npm i webdriver-manager@latest

    WORKDIR $TESTER_SRC_DIR
    RUN npm run test:prod

The preceding script will copy the production build from the builder stage and execute your test scripts in a predictable manner.

Web server

The third and final stage generates the container that will be your web server. Once this stage is complete, the prior stages will be discarded, and the end result will be an optimized sub-10 MB container:

- Append the following FROM statement at the end of the file to build the web server, but this time, COPY the production-ready code from builder, as shown in the following code snippet:

    integration.Dockerfile
    ...
    FROM duluca/minimal-nginx-web-server:1-alpine as webserver

    ENV BUILDER_SRC_DIR=/usr/src

    COPY --from=builder $BUILDER_SRC_DIR/dist/lemon-mart /var/www

    CMD 'nginx'

- Build and test your multi-stage Dockerfile:

    $ docker build -f integration.Dockerfile .

  Depending on your operating system, you may see Terminal errors. So long as the Docker image successfully builds in the end, you can safely ignore these errors. For reference purposes, when we later build this image on CircleCI, no errors are logged on the CI server.

- Save your script as a new npm script named build:integration, as shown:

    package.json
    "scripts": {
      ...
      "build:integration": "cross-conf-env docker image build -f integration.Dockerfile . -t $npm_package_config_imageRepo:latest",
      ...
    }

Great work! You've defined a custom build and test environment. Let's visualize the end result of our efforts as follows:

Figure 9.18: Multi-stage build environment results

By leveraging a multi-stage Dockerfile, we can define a customized build environment, and only ship the necessary bytes at the end of the process. In the preceding example, we are avoiding shipping 250+ MB of development dependencies to our production server and only delivering a 7 MB container that has a minimal memory footprint.
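To check these numbers for your own images, you can ask Docker for the size of a local image and convert it to megabytes. This is a minimal sketch with hypothetical function names; it assumes the image has already been built or pulled, and it uses decimal megabytes (1 MB = 1,000,000 bytes).

```shell
#!/bin/sh
# Converts a size in bytes to megabytes with one decimal place.
bytes_to_mb() {
  awk -v bytes="$1" 'BEGIN { printf "%.1f\n", bytes / 1000000 }'
}

# Prints the size of a local Docker image in MB.
# Argument: <image reference>, for example the tag you gave your build
image_size_mb() {
  bytes=$(docker image inspect --format '{{.Size}}' "$1") || return 1
  bytes_to_mb "$bytes"
}
```

Comparing the result for your builder base image with the result for the final web server image shows how much the multi-stage build leaves behind.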

Now, let's execute this containerized pipeline on CircleCI.

CircleCI container-in-container

In Chapter 4, Automated Testing, CI, and Release to Production, we created a relatively simple CircleCI file. Later on, we will repeat the same configuration for this project as well, but for now, we will be using a container-within-a-container setup leveraging the multi-stage Dockerfile we just created.

On the lemon-mart repo, the config.yml file for this section is named .circleci/config.docker-integration.yml. You can also find a pull request that executes the .yml file from this chapter on CircleCI at https://github.com/duluca/lemon-mart/pull/25 using the docker-integration branch.

Note that this build uses a modified integration.Dockerfile to use the projects/ch8 code from lemon-mart.

In your source code, create a folder named .circleci and add a file named config.yml:

.circleci/config.yml

    version: 2.1
    jobs:
      build:
        docker:
          - image: circleci/node:lts
        working_directory: ~/repo
        steps:
          - checkout
          - setup_remote_docker
          - run:
              name: Execute Pipeline (Build Source -> Test -> Build Web Server)
              command: |
                docker build -f integration.Dockerfile . -t lemon-mart:$CIRCLE_BRANCH
                mkdir -p docker-cache
                docker save lemon-mart:$CIRCLE_BRANCH | gzip > docker-cache/built-image.tar.gz
          - save_cache:
              key: built-image-{{ .BuildNum }}
              paths:
                - docker-cache
          - store_artifacts:
              path: docker-cache/built-image.tar.gz
              destination: built-image.tar.gz
    workflows:
      version: 2
      build-and-deploy:
        jobs:
          - build

In the preceding config.yml file, a workflow named build-and-deploy is defined, which contains a job named build. The job uses CircleCI's pre-built circleci/node:lts image.

The build job has five steps:

- checkout checks out the source code from GitHub.

- setup_remote_docker informs CircleCI to set up a Docker-within-Docker environment, so we can run containers within our pipeline.

- run executes the docker build -f integration.Dockerfile . command to initiate our custom build process, caches the resulting Alpine-based image, and tags it with $CIRCLE_BRANCH.

- save_cache saves the image we created in the cache, so it can be consumed by the next step.

- store_artifacts reads the created image from the cache and publishes the image as a build artifact, which can be downloaded from the web interface or used by another job to deploy it to a cloud environment.

After you sync your changes to GitHub, if everything goes well, you will have a passing green build. As shown in the following screenshot, this build was successful:

Figure 9.19: Green build on CircleCI using the lemon-mart docker-integration branch

Note that the tarred and gzipped image file size is 9.2 MB, which includes our web applications on top of the roughly 7 MB base image size.

At the moment, the CI server is running and executing our three-step pipeline. As you can see in the preceding screenshot, the build is producing a tarred and gzipped file of the web server image, named built-image.tar.gz. You can download this file from the Artifacts tab. However, we're not deploying the resulting image to a server.
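Once you have downloaded built-image.tar.gz, you can load it into your local Docker installation to inspect or run it. The helper below is a sketch with a hypothetical name; it relies on docker load accepting gzip-compressed archives directly.

```shell
#!/bin/sh
# Loads an image archive produced by `docker save ... | gzip` into local Docker.
# Argument: [archive path] (defaults to built-image.tar.gz in the current folder)
load_built_image() {
  archive=${1:-built-image.tar.gz}
  [ -f "$archive" ] || { echo "Archive not found: $archive" >&2; return 1; }
  docker load -i "$archive"
}
```

After loading, the image appears under the tag it was built with on the CI server, that is, lemon-mart followed by the branch name.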

You have now adequately mastered working with CircleCI. We will revisit this multi-stage Dockerfile to perform a deployment on AWS in Chapter 13, Highly Available Cloud Infrastructure on AWS.

Next, let's see how you can get a code coverage report from your Angular app and record the result in CircleCI.

Code coverage reports

A good way to understand the amount and the trends of unit test coverage for your Angular project is through a code coverage report.

In order to generate the report for your app, execute the following command from your project folder:

$ npx ng test --browsers ChromiumNoSandbox --watch=false --code-coverage

The resulting report will be created as an HTML file under a folder named coverage; execute the following command to view it in your browser:

$ npx http-server -c-1 -o -p 9875 ./coverage

Install http-server as a development dependency in your project.

Here's the folder-level sample coverage report generated by istanbul/nyc for LemonMart:

Figure 9.20: Istanbul code coverage report for LemonMart

You can drill down on a particular folder, such as src/app/auth, and get a file-level report, as shown here:

Figure 9.21: Istanbul code coverage report for src/app/auth

You can drill down further to get line-level coverage for a given file, such as cache.service.ts, as shown here:

Figure 9.22: Istanbul code coverage report for cache.service.ts

In the preceding screenshot, you can see that lines 5, 12, 17-18, and 21-22 are not covered by any test. The I icon denotes that the if path was not taken. We can increase our code coverage by implementing unit tests that exercise the functions that are contained within CacheService. As an exercise, the reader should attempt to at least cover one of these functions with a new unit test and observe the code coverage report change.

Code coverage in CI

Ideally, your CI server configuration should generate and host the code coverage report with every test run. You can then use code coverage as another code quality gate to prevent pull requests being merged if the new code is bringing down the overall code coverage percentage. This is a great way to reinforce the test-driven development (TDD) mindset.

You can use a service such as Coveralls, found at https://coveralls.io/, to implement your code coverage checks, which can embed your code coverage levels directly on a GitHub pull request.

Let's configure Coveralls for LemonMart:

On the lemon-mart repo, the config.yml file for this section is named .circleci/config.ch9.yml.

- In your CircleCI account settings, under the security section, ensure that you allow execution of uncertified/unsigned orbs.

- Register your GitHub project at https://coveralls.io/.

- Copy the repo token and store it as an environment variable in CircleCI named COVERALLS_REPO_TOKEN.

- Create a new branch before you make any code changes.

- Update karma.conf.js so it stores code coverage results under the coverage folder:

    karma.conf.js
    ...
    coverageIstanbulReporter: {
      dir: require('path').join(__dirname, 'coverage'),
      reports: ['html', 'lcovonly'],
      fixWebpackSourcePaths: true,
    },
    ...

- Update the .circleci/config.yml file with the Coveralls orb as shown:

    .circleci/config.yml
    version: 2.1
    orbs:
      coveralls: coveralls/coveralls@1.0.4

- Update the build job to store code coverage results and upload them to Coveralls:

    .circleci/config.yml
    jobs:
      build:
        ...
          - run: npm test -- --watch=false --code-coverage
          - run: npm run e2e
          - store_test_results:
              path: ./test_results
          - store_artifacts:
              path: ./coverage
          - coveralls/upload
          - run:
              name: Tar & Gzip compiled app
              command: tar zcf dist.tar.gz dist/lemon-mart
          - store_artifacts:
              path: dist.tar.gz

  Note that the orb automatically configures Coveralls for your account, so the coveralls/upload command can upload your code coverage results.

- Commit your changes to the branch and publish it.

- Create a pull request on GitHub using the branch.

- On the pull request, verify that you can see that Coveralls is reporting your project's code coverage, as shown:

Figure 9.23: Coveralls reporting code coverage

- Merge the pull request to your master branch.

Congratulations! Now, you can modify your branch protection rules to require that code coverage levels be above a certain percentage before a pull request can be merged to master.
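To make the idea of a percentage gate concrete, here is a minimal sketch of how a line coverage figure can be derived from an lcov report, the format produced by the lcovonly reporter. It relies on the LF (lines found) and LH (lines hit) entries of each record. The function names, the sample report, and the 80 percent threshold are illustrative assumptions and are not part of the LemonMart project or the Coveralls orb:

```typescript
// Computes overall line coverage (0-100) from the text of an lcov report
// by summing the LF (lines found) and LH (lines hit) entries of all records.
function lineCoverageFromLcov(lcov: string): number {
  let found = 0
  let hit = 0

  for (const rawLine of lcov.split('\n')) {
    const line = rawLine.trim()
    if (line.startsWith('LF:')) {
      found += Number(line.slice(3))
    } else if (line.startsWith('LH:')) {
      hit += Number(line.slice(3))
    }
  }

  // Treat a report without any line data as 0% so that a gate fails closed.
  return found === 0 ? 0 : (hit * 100) / found
}

// Hypothetical gate: true when coverage meets or exceeds the minimum.
function meetsThreshold(lcov: string, minimumPercent: number): boolean {
  return lineCoverageFromLcov(lcov) >= minimumPercent
}

// Illustrative report with two files: 8 of 10 and 4 of 10 lines hit.
const sampleReport = [
  'SF:src/app/a.ts',
  'LF:10',
  'LH:8',
  'end_of_record',
  'SF:src/app/b.ts',
  'LF:10',
  'LH:4',
  'end_of_record',
].join('\n')

console.log(lineCoverageFromLcov(sampleReport)) // 60
console.log(meetsThreshold(sampleReport, 80)) // false
```

In a CI step, you could read the generated report from the coverage folder and exit with a non-zero code when meetsThreshold returns false. A hosted service such as Coveralls performs the equivalent comparison for you and reports the result on the pull request.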

The LemonMart project at https://github.com/duluca/lemon-mart implements a full-featured config.yml file. This file also runs Cypress.io, a far more robust solution than Angular's e2e tool, in CircleCI. The Cypress orb can record test results and allow you to view them from your CircleCI pipeline.

Leveraging what you have learned in this chapter, you can incorporate the deploy scripts from LocalCast Weather for LemonMart and implement a gated deployment workflow.

Summary

In this chapter, you learned about DevOps and Docker. You containerized your web app, deployed a container to Google Cloud Run using CLI tools, and learned how to implement gated CI workflows. You used advanced CI techniques to build a container-based CI environment with a multi-stage Dockerfile. You also became familiar with orbs, workflows, and code coverage tools.

We leveraged CircleCI as a cloud-based CI service and highlighted the fact that you can deploy the outcome of your builds to all major cloud hosting providers. You have seen how you can achieve CD. We covered example deployments to Vercel Now and Google Cloud Run via CircleCI, allowing you to implement automated deployments.

With a robust CI/CD pipeline, you can share every iteration of your app with clients and team members and quickly deliver bug fixes or new features to your end users.

Exercise

- Add CircleCI and Coveralls badges to the README.md file on your code repository.

- Implement Cypress for e2e testing and run it in your CircleCI pipeline using the Cypress orb.

- Implement a Vercel Now deployment and a conditional workflow for the LemonMart app. You can find the resulting config.yml file on the lemon-mart repo, named .circleci/config.ch9.yml.

Further reading

- Dockerfile reference, 2020, https://docs.docker.com/engine/reference/builder/

- CircleCI orbs, 2020, https://circleci.com/orbs/

- Deploying container images, 2020, https://cloud.google.com/run/docs/deploying

- Creating and managing service account keys, 2020, https://cloud.google.com/iam/docs/creating-managing-service-account-keys#iam-service-account-keys-create-console

Questions

Answer the following questions as best as you can to ensure that you've understood the key concepts from this chapter without Googling. Do you need help answering the questions? See Appendix D, Self-Assessment Answers online at https://static.packt-cdn.com/downloads/9781838648800_Appendix_D_Self-Assessment_Answers.pdf or visit https://expertlysimple.io/angular-self-assessment.

- Explain the difference between a Docker image and a Docker container.

- What is the purpose of a CD pipeline?

- What is the benefit of CD?

- How do we cover the configuration gap?

- What does a CircleCI orb do?

- What are the benefits of using a multi-stage Dockerfile?

- How does a code coverage report help maintain the quality of your app?