Following the approach of the previous example, Minimal face API using OpenCV, we are going to create a deep learning API using OpenCV. More specifically, we will see how to create a deep learning cat detection API. The cat_detection_api project contains the web server application. The main.py script parses the requests and builds the response sent to the client. The code of this script is as follows:

# Import required packages:
from flask import Flask, request, jsonify
import urllib.request
from image_processing import ImageProcessing

app = Flask(__name__)
ip = ImageProcessing()

@app.errorhandler(400)
def bad_request(e):
    # Return the error code as well:
    return jsonify({"status": "not ok", "message": "this server could not understand your request"}), 400


@app.errorhandler(404)
def not_found(e):
    # Return the error code as well:
    return jsonify({"status": "not found", "message": "route not found"}), 404


@app.errorhandler(500)
def internal_error(e):
    # Return the error code as well:
    return jsonify({"status": "internal error", "message": "internal error occurred in server"}), 500

@app.route('/catfacedetection', methods=['GET', 'POST', 'PUT'])
def detect_cat_faces():
    if request.method == 'GET':
        # The image is fetched from the address given in the 'url' parameter:
        if request.args.get('url'):
            with urllib.request.urlopen(request.args.get('url')) as url:
                return jsonify({"status": "ok", "result": ip.cat_face_detection(url.read())}), 200
        else:
            return jsonify({"status": "bad request", "message": "Parameter url is not present"}), 400
    elif request.method == 'POST':
        # The image is read from the uploaded 'image' file:
        if request.files.get("image"):
            return jsonify({"status": "ok", "result": ip.cat_face_detection(request.files["image"].read())}), 200
        else:
            return jsonify({"status": "bad request", "message": "Parameter image is not present"}), 400
    else:
        return jsonify({"status": "failure", "message": "PUT method not supported for API"}), 405

@app.route('/catdetection', methods=['GET', 'POST', 'PUT'])
def detect_cats():
    if request.method == 'GET':
        # The image is fetched from the address given in the 'url' parameter:
        if request.args.get('url'):
            with urllib.request.urlopen(request.args.get('url')) as url:
                return jsonify({"status": "ok", "result": ip.cat_detection(url.read())}), 200
        else:
            return jsonify({"status": "bad request", "message": "Parameter url is not present"}), 400
    elif request.method == 'POST':
        # The image is read from the uploaded 'image' file:
        if request.files.get("image"):
            return jsonify({"status": "ok", "result": ip.cat_detection(request.files["image"].read())}), 200
        else:
            return jsonify({"status": "bad request", "message": "Parameter image is not present"}), 400
    else:
        return jsonify({"status": "failure", "message": "PUT method not supported for API"}), 405

if __name__ == "__main__":
    # Add parameter host='0.0.0.0' to run on your machine's IP address:
    app.run(host='0.0.0.0')

As you can see, we use the route() decorator to bind the detect_cat_faces() function to the /catfacedetection URL and the detect_cats() function to the /catdetection URL. We also use the jsonify() function to create the JSON representation of the given arguments with an application/json MIME type. This API supports both GET and POST requests; PUT requests reach the same functions but are answered with a 405 status code. In the main.py script, we also register error handlers by decorating functions with errorhandler(). Remember to also set the error code when returning the response to the client.
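The routing and error-handling pattern described above can be tried without OpenCV. The following is a minimal sketch, not the project's code: the /echo route and the probe() helper are hypothetical names introduced only for illustration, and Flask's built-in test client is used so that no server has to be started.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)


@app.errorhandler(404)
def not_found(e):
    # Return the JSON body together with the matching status code:
    return jsonify({"status": "not found", "message": "route not found"}), 404


# Hypothetical route that mirrors the structure of the detection routes:
@app.route('/echo', methods=['GET', 'POST'])
def echo():
    if request.method == 'GET':
        value = request.args.get('url')
        if value:
            return jsonify({"status": "ok", "result": value}), 200
        return jsonify({"status": "bad request", "message": "Parameter url is not present"}), 400
    return jsonify({"status": "ok", "result": "posted"}), 200


def probe(path):
    """Hypothetical helper: send a GET request through Flask's test client."""
    with app.test_client() as client:
        response = client.get(path)
        return response.status_code, response.get_json()


print(probe('/echo?url=abc'))
print(probe('/echo'))
print(probe('/missing'))
```

The three calls exercise the success path, the missing-parameter path, and the registered 404 handler, respectively. In each case, the status code travels as the second element of the returned tuple.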

The image processing is performed in the image_processing.py script, where the ImageProcessing class is coded. Only the cat_face_detection() and cat_detection() methods are shown here:

# Import required packages:
import cv2
import numpy as np


class ImageProcessing(object):
    def __init__(self):
        ...

    ...

    def cat_face_detection(self, image):
        # Decode the received bytes into an OpenCV image:
        image_array = np.asarray(bytearray(image), dtype=np.uint8)
        img_opencv = cv2.imdecode(image_array, -1)
        output = []
        gray = cv2.cvtColor(img_opencv, cv2.COLOR_BGR2GRAY)
        cats = self.cat_cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5, minSize=(25, 25))
        for cat in cats:
            # cat.tolist(): returns a copy of the array data as a Python list
            x, y, w, h = cat.tolist()
            # Store the box as [left, top, right, bottom]:
            face = {"box": [x, y, x + w, y + h]}
            output.append(face)
        return output

    def cat_detection(self, image):
        # Decode the received bytes into an OpenCV image:
        image_array = np.asarray(bytearray(image), dtype=np.uint8)
        img_opencv = cv2.imdecode(image_array, -1)
        # Create the blob with a size of (300, 300), mean subtraction values (127.5, 127.5, 127.5)
        # and also a scalefactor of 0.007843:
        blob = cv2.dnn.blobFromImage(img_opencv, 0.007843, (300, 300), (127.5, 127.5, 127.5))
        # Feed the input blob to the network, perform inference and get the output:
        self.net.setInput(blob)
        detections = self.net.forward()
        # Size of frame resize (300x300)
        dim = 300
        output = []
        # Process all detections:
        for i in range(detections.shape[2]):
            # Get the confidence of the prediction:
            confidence = detections[0, 0, i, 2]
            # Filter predictions by confidence:
            if confidence > 0.1:
                # Get the class label:
                class_id = int(detections[0, 0, i, 1])
                # Get the coordinates of the object location:
                left = int(detections[0, 0, i, 3] * dim)
                top = int(detections[0, 0, i, 4] * dim)
                right = int(detections[0, 0, i, 5] * dim)
                bottom = int(detections[0, 0, i, 6] * dim)
                # Factor for scale to original size of frame
                heightFactor = img_opencv.shape[0] / dim
                widthFactor = img_opencv.shape[1] / dim
                # Scale object detection to frame
                left = int(widthFactor * left)
                top = int(heightFactor * top)
                right = int(widthFactor * right)
                bottom = int(heightFactor * bottom)
                # Check if we have detected a cat:
                if self.classes[class_id] == 'cat':
                    cat = {"box": [left, top, right, bottom]}
                    output.append(cat)
        return output

As seen here, two methods are implemented. The cat_face_detection() method performs cat face detection using the OpenCV detectMultiScale() function.
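detectMultiScale() reports each detection as (x, y, w, h), while the API returns boxes as [left, top, right, bottom]. The conversion can be sketched in isolation as follows. The xywh_to_corners() helper and the sample rectangles are hypothetical and are used only to illustrate the arithmetic.

```python
def xywh_to_corners(rect):
    """Convert an (x, y, w, h) rectangle to [left, top, right, bottom]."""
    x, y, w, h = rect
    return [x, y, x + w, y + h]


# Made-up rectangles in the (x, y, w, h) layout:
rects = [(10, 20, 30, 40), (0, 0, 25, 25)]
boxes = [{"box": xywh_to_corners(rect)} for rect in rects]
print(boxes)
```

Returning both corners means the client can pass them directly to cv2.rectangle(), which expects the top-left and bottom-right points.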

The cat_detection() method performs cat detection using MobileNet SSD object detection, which was trained in the Caffe-SSD framework and can detect 20 classes. In this example, we are only interested in cats. Therefore, a detection is added to the output only if its class_id corresponds to the cat class. For more information about how to process detections and use pre-trained deep learning models, we recommend Chapter 12, Introduction to Deep Learning. The entire code can be found at https://github.com/PacktPublishing/Mastering-OpenCV-4-with-Python/blob/master/Chapter13/01-chapter-content/opencv_examples/cat_detection_api_axample/cat_detection_api/image_processing.py.
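The filtering and scaling logic of cat_detection() can be sketched without a network by working on plain rows of the form [image_id, class_id, confidence, left, top, right, bottom], with coordinates normalized to the range [0, 1]. This is a simplified illustration under stated assumptions. The filter_cats() helper is hypothetical, the CLASSES list is a shortened, assumed label ordering, the rows are made-up values, and the coordinates are scaled directly to the image size rather than through the intermediate 300x300 frame.

```python
# Assumed (shortened) label list; index 8 is taken to be 'cat':
CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat",
           "bottle", "bus", "car", "cat"]


def filter_cats(rows, image_width, image_height, min_confidence=0.1):
    """Keep confident 'cat' rows and scale their boxes to pixel coordinates."""
    output = []
    for _, class_id, confidence, left, top, right, bottom in rows:
        # Filter predictions by confidence:
        if confidence <= min_confidence:
            continue
        # Keep only the detections labeled as cats:
        if CLASSES[int(class_id)] != "cat":
            continue
        output.append({"box": [int(left * image_width),
                               int(top * image_height),
                               int(right * image_width),
                               int(bottom * image_height)]})
    return output


# Made-up detections for a 640x480 image:
rows = [
    [0, 8, 0.90, 0.25, 0.25, 0.75, 0.50],  # confident cat: kept
    [0, 7, 0.80, 0.00, 0.00, 0.50, 0.50],  # confident car: discarded
    [0, 8, 0.05, 0.25, 0.25, 0.50, 0.50],  # low-confidence cat: discarded
]
cats = filter_cats(rows, 640, 480)
print(cats)
```

Only the first row passes both checks, so the output contains a single box expressed in the pixel coordinates of the original image.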

To test this API, you can use the demo_request_drawing.py script, which is as follows:

# Import required packages:
import cv2
import numpy as np
import requests
from matplotlib import pyplot as plt


def show_img_with_matplotlib(color_img, title, pos):
    """Shows an image using matplotlib capabilities"""

    # Convert from BGR (OpenCV) to RGB (matplotlib):
    img_RGB = color_img[:, :, ::-1]

    ax = plt.subplot(1, 1, pos)
    plt.imshow(img_RGB)
    plt.title(title)
    plt.axis('off')

CAT_FACE_DETECTION_REST_API_URL = "http://localhost:5000/catfacedetection"
CAT_DETECTION_REST_API_URL = "http://localhost:5000/catdetection"
IMAGE_PATH = "cat.jpg"

# Load the image and construct the payload:
with open(IMAGE_PATH, "rb") as f:
    image = f.read()
payload = {"image": image}

# Convert the loaded image to the OpenCV format:
image_array = np.asarray(bytearray(image), dtype=np.uint8)
img_opencv = cv2.imdecode(image_array, -1)

# Submit the POST request:
r = requests.post(CAT_DETECTION_REST_API_URL, files=payload)

# See the response:
print("status code: {}".format(r.status_code))
print("headers: {}".format(r.headers))
print("content: {}".format(r.json()))

# Get JSON data from the response and get 'result':
json_data = r.json()
result = json_data['result']

# Draw cats in the OpenCV image:
for cat in result:
    left, top, right, bottom = cat['box']
    # To draw a rectangle, you need top-left corner and bottom-right corner of rectangle:
    cv2.rectangle(img_opencv, (left, top), (right, bottom), (0, 255, 0), 2)
    # Draw top-left corner and bottom-right corner (checking):
    cv2.circle(img_opencv, (left, top), 10, (0, 0, 255), -1)
    cv2.circle(img_opencv, (right, bottom), 10, (255, 0, 0), -1)

# Submit the POST request:
r = requests.post(CAT_FACE_DETECTION_REST_API_URL, files=payload)

# See the response:
print("status code: {}".format(r.status_code))
print("headers: {}".format(r.headers))
print("content: {}".format(r.json()))

# Get JSON data from the response and get 'result':
json_data = r.json()
result = json_data['result']

# Draw cat faces in the OpenCV image:
for face in result:
    left, top, right, bottom = face['box']
    # To draw a rectangle, you need top-left corner and bottom-right corner of rectangle:
    cv2.rectangle(img_opencv, (left, top), (right, bottom), (0, 255, 255), 2)
    # Draw top-left corner and bottom-right corner (checking):
    cv2.circle(img_opencv, (left, top), 10, (0, 0, 255), -1)
    cv2.circle(img_opencv, (right, bottom), 10, (255, 0, 0), -1)

# Create the dimensions of the figure and set title:
fig = plt.figure(figsize=(6, 7))
plt.suptitle("Using cat detection API", fontsize=14, fontweight='bold')
fig.patch.set_facecolor('silver')

# Show the output image
show_img_with_matplotlib(img_opencv, "cat detection", 1)

# Show the Figure:
plt.show()

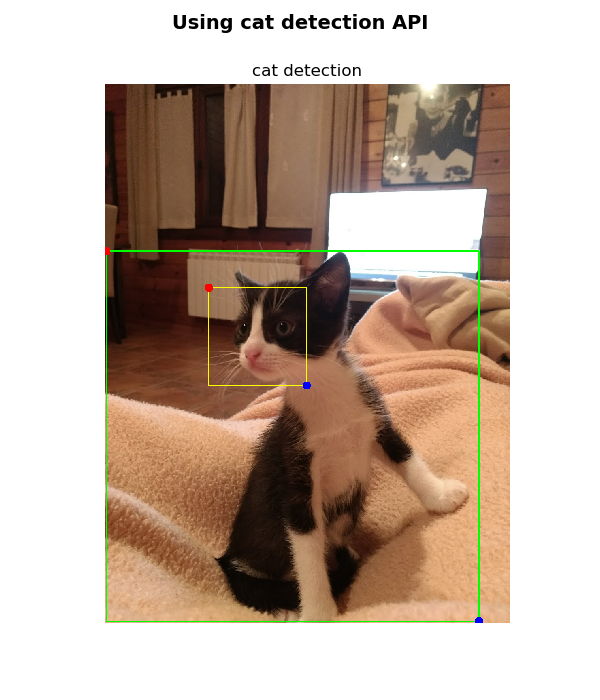

In the previous script, we perform two POST requests in order to detect both the cat faces and, also, the cats in the cat.jpg image. Additionally, we also parse the response from both requests and draw the results, which can be seen in the output of this script, as shown in the following screenshot:

As shown in the previous screenshot, both the cat face detection and the full-body cat detection are drawn.
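The demo script only exercises the POST path. Because both routes also accept GET requests with a url parameter, the request address for that path can be sketched as follows. The build_get_url() helper and the example.com image address are hypothetical and are used only for illustration. The sketch builds the address without sending any request.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

CAT_DETECTION_REST_API_URL = "http://localhost:5000/catdetection"


def build_get_url(endpoint, image_url):
    """Return the endpoint address with image_url passed as the 'url' parameter."""
    # urlencode() percent-encodes the image address so it survives as one value:
    return "{}?{}".format(endpoint, urlencode({"url": image_url}))


# Placeholder image address (an assumption, not a real test image):
request_url = build_get_url(CAT_DETECTION_REST_API_URL, "https://example.com/cat.jpg")
print(request_url)

# The server side recovers the original address from the query string:
print(parse_qs(urlsplit(request_url).query)["url"][0])
```

With the server running, the resulting address could be passed to requests.get(), and the JSON response would be parsed in the same way as in the POST case.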