The linear_regression_keras_training.py script trains a linear regression model. The first step is to create the data used to train and test the algorithm:

```python
import numpy as np

# Generate random data composed of 50 (N = 50) points:
N = 50
x = np.linspace(0, N, N)
y = 3 * x + np.random.uniform(-10, 10, N)
```
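Because the data follows y = 3x plus uniform noise in [-10, 10], the ideal fit is known in advance. As a sanity check that is not part of the original scripts, the closed-form least-squares solution from `np.polyfit` gives the slope and intercept the Keras model should approach. The fixed seed is an arbitrary assumption added only for reproducibility.

```python
import numpy as np

# Reproduce the data generation step (the seed is an arbitrary choice for reproducibility)
np.random.seed(0)
N = 50
x = np.linspace(0, N, N)
y = 3 * x + np.random.uniform(-10, 10, N)

# Closed-form least-squares fit of a degree-1 polynomial: returns [slope, intercept]
w_ls, b_ls = np.polyfit(x, y, 1)
print(f"least-squares slope: {w_ls:.3f}, intercept: {b_ls:.3f}")
```

With 50 points spread over [0, 50], the noise barely moves the slope away from 3, while the intercept is noticeably less certain.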

The next step is to create the model. To do so, we have created the create_model() function, as demonstrated in the following code snippet:

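```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Input
from tensorflow.keras.optimizers import Adam


def create_model():
    """Create the model using the Sequential API"""

    # Create a sequential model:
    model = Sequential()

    # Declare the input: one feature per sample
    model.add(Input(shape=(1,)))

    # All we need is a single connection, so we use a Dense layer with linear activation:
    model.add(Dense(units=1, activation="linear", kernel_initializer="uniform"))

    # Compile the model, defining mean squared error (MSE) as the loss
    # (current Keras versions use learning_rate instead of the deprecated lr argument):
    model.compile(optimizer=Adam(learning_rate=0.1), loss="mse")

    # Return the created model
    return model
```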

In Keras, the simplest type of model is the Sequential model, which can be seen as a linear stack of layers; it is the model type used in this example. For more complex architectures, the Keras functional API, which allows building arbitrary graphs of layers, can be used instead. With the Sequential model, we build the model by stacking layers using the model.add() method. In this example, we use a single dense (fully connected) layer with a linear activation function, so the model computes y = w * x + b. Next, we compile (configure) the model, defining the mean squared error (MSE) as the loss and using the Adam optimizer with a learning rate of 0.1.
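To make concrete what compiling with `loss='mse'` and `Adam(learning_rate=0.1)` asks the optimizer to do, the following NumPy sketch minimizes the MSE of y = w * x + b with the textbook Adam update. It is an illustration under simplifying assumptions, not Keras' implementation: it uses full-batch gradients (Keras `fit()` uses mini-batches of 32 by default), starts from zero instead of a uniform initializer, and `fit_linear_adam` is a hypothetical helper name. The beta and epsilon values mirror the Keras `Adam` defaults.

```python
import numpy as np


def mse(y_true, y_pred):
    """Mean squared error, the loss passed to compile() as 'mse'."""
    return np.mean((y_true - y_pred) ** 2)


def fit_linear_adam(x, y, learning_rate=0.1, steps=200,
                    beta_1=0.9, beta_2=0.999, epsilon=1e-7):
    """Hypothetical helper: fit y = w * x + b with full-batch Adam on the MSE loss."""
    params = np.zeros(2)  # [w, b], starting from zero for simplicity
    m = np.zeros(2)       # running mean of the gradient (first moment)
    v = np.zeros(2)       # running mean of the squared gradient (second moment)
    for t in range(1, steps + 1):
        error = params[0] * x + params[1] - y
        # Gradient of mean((w * x + b - y) ** 2) with respect to w and b
        grad = np.array([2 * np.mean(error * x), 2 * np.mean(error)])
        m = beta_1 * m + (1 - beta_1) * grad
        v = beta_2 * v + (1 - beta_2) * grad ** 2
        # Bias-corrected moment estimates
        m_hat = m / (1 - beta_1 ** t)
        v_hat = v / (1 - beta_2 ** t)
        params -= learning_rate * m_hat / (np.sqrt(v_hat) + epsilon)
    return params[0], params[1]


rng = np.random.default_rng(0)
N = 50
x = np.linspace(0, N, N)
y = 3 * x + rng.uniform(-10, 10, N)

w, b = fit_linear_adam(x, y)
print(f"w = {w:.3f}, b = {b:.3f}, mse = {mse(y, w * x + b):.3f}")
```

Adam scales each parameter's step by its own gradient history, which is why a fairly large learning rate such as 0.1 still behaves reasonably even though x is not normalized.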

At this point, we can create the model and train it by feeding it the data with the model.fit() method:

```python
linear_reg_model = create_model()
linear_reg_model.fit(x, y, epochs=100, validation_split=0.2, verbose=1)
```
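The Keras `fit()` documentation states that the `validation_split` fraction is taken from the last samples of `x` and `y`, before any shuffling. The sketch below reproduces that split by hand; `split_at` is an illustrative name, and the exact rounding Keras applies may differ between versions. Because `x` comes from `np.linspace`, it is sorted, so the validation set holds the largest x values and the validation loss effectively measures extrapolation.

```python
import numpy as np

N = 50
x = np.linspace(0, N, N)
validation_split = 0.2

# Validation samples come from the END of the arrays (illustrative re-implementation)
split_at = int(len(x) * (1 - validation_split))
x_train, x_val = x[:split_at], x[split_at:]

print(len(x_train), len(x_val))
print(f"training x range: {x_train.min():.1f} to {x_train.max():.1f}")
print(f"validation x range: {x_val.min():.1f} to {x_val.max():.1f}")
```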

After training, we can get the values of both w and b (the learned parameters), which are used to calculate the predictions:

```python
w_final, b_final = get_weights(linear_reg_model)
```

The get_weights() function returns the values for these parameters as follows:

```python
def get_weights(model):
    """Get weights of w and b"""
    w = model.get_weights()[0][0][0]
    b = model.get_weights()[1][0]
    return w, b
```
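The double and single indexing in `get_weights()` follow from the layout Keras uses for a `Dense` layer: `model.get_weights()` returns a list `[kernel, bias]`, where the kernel has shape `(input_dim, units)`, here `(1, 1)`, and the bias has shape `(units,)`, here `(1,)`. The sketch below stands in for that list with NumPy arrays; the numbers are made up for illustration.

```python
import numpy as np

# Stand-in for model.get_weights() on a Dense(units=1) layer with one input.
# The values are invented for illustration; only the shapes matter.
weights = [np.array([[2.95]], dtype=np.float32), np.array([0.4], dtype=np.float32)]

kernel, bias = weights
print(kernel.shape, bias.shape)  # (1, 1) (1,)

# Same indexing as get_weights(): unwrap the single scalar from each array
w = weights[0][0][0]
b = weights[1][0]
print(float(w), float(b))
```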

At this point, we can compute the predictions:

```python
# Calculate the predictions:
predictions = w_final * x + b_final
```
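Computing `w_final * x + b_final` by hand gives the same values the Dense layer produces, since a linear `Dense` layer computes `inputs @ kernel + bias`. The sketch below checks that equivalence in NumPy with illustrative parameter values (not learned ones). Note the shapes: the layer works on a column of shape `(N, 1)`, which is also the shape `model.predict()` returns for this model, whereas the scalar formula yields a flat array of shape `(N,)`.

```python
import numpy as np

N = 50
x = np.linspace(0, N, N)

# Illustrative parameter values (not learned ones)
kernel = np.array([[3.0]])  # shape (1, 1)
bias = np.array([0.5])      # shape (1,)

# What a Dense layer with linear activation computes: (N, 1) @ (1, 1) + (1,) -> (N, 1)
dense_output = x.reshape(-1, 1) @ kernel + bias

# The scalar formula used in the text, with w and b unwrapped from the arrays
w_final, b_final = kernel[0][0], bias[0]
predictions = w_final * x + b_final  # shape (N,)

print(np.allclose(dense_output.ravel(), predictions))  # True
```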

We can also save the model weights as follows:

```python
linear_reg_model.save_weights("my_model.h5")
```

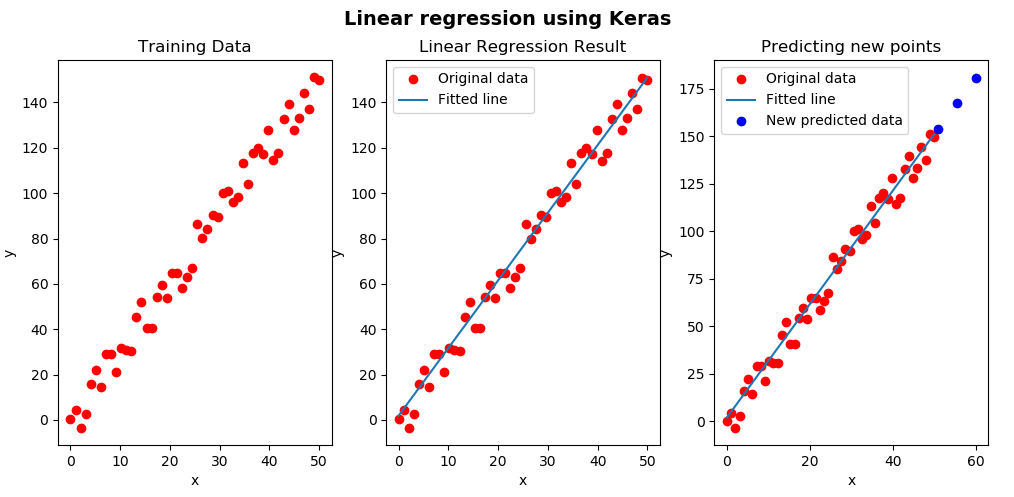

The output of this script can be seen in the following screenshot:

The previous screenshot shows both the training data (on the left) and the fitted line of the linear regression model (on the right).

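We can load the pre-trained weights to make predictions, as shown in the linear_regression_keras_testing.py script. Because load_weights() only restores parameter values, the model architecture must exist first (for example, created with create_model()); then the weights are loaded:

```python
linear_reg_model = create_model()
linear_reg_model.load_weights('my_model.h5')
```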

Using the get_weights() function, we can retrieve the learned parameters:

```python
w_final, b_final = get_weights(linear_reg_model)
```

At this point, we compute predictions for the training data (x) and for new input values (new_x):

```python
predictions = linear_reg_model.predict(x)
new_predictions = linear_reg_model.predict(new_x)
```

The final step is to show the results obtained, which can be seen in the following screenshot:

As shown in the previous screenshot, the pre-trained model has been used to make new predictions (blue points).