In the next example, we are going to see how to match the detected features. OpenCV provides two matchers, as follows:

- Brute-Force (BF) matcher: This matcher compares each descriptor in the first set against every descriptor in the second set and returns the match with the smallest distance.

- Fast Library for Approximate Nearest Neighbors (FLANN) matcher: This matcher works faster than the BF matcher for large datasets because it uses optimized algorithms for approximate nearest neighbor search instead of an exhaustive comparison.
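The brute-force idea described in the first item can be sketched in plain Python. This is only a conceptual illustration, not how OpenCV implements it: the function name brute_force_match, the toy 2D descriptors, and the use of Euclidean distance are all assumptions made for the example.

```python
import math


def brute_force_match(descriptors_a, descriptors_b):
    """For each descriptor in A, find its nearest neighbour in B.

    Returns a list of (index_a, index_b, distance) tuples.
    """
    matches = []
    for i, desc_a in enumerate(descriptors_a):
        # Compare against every descriptor in the second set ("brute force").
        distances = [math.dist(desc_a, desc_b) for desc_b in descriptors_b]
        # Keep only the candidate with the smallest distance.
        j = min(range(len(distances)), key=distances.__getitem__)
        matches.append((i, j, distances[j]))
    return matches


# Toy 2D descriptors (hypothetical values, chosen for illustration only).
set_a = [(0.0, 0.0), (5.0, 5.0)]
set_b = [(4.0, 6.0), (1.0, 0.0), (9.0, 9.0)]
matches = brute_force_match(set_a, set_b)
```

The cost grows with the product of the two set sizes, which is why an approximate method such as FLANN tends to pay off on large datasets.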

In the feature_matching.py script, we will use the BF matcher to see how to match the detected features. The first step is to detect the keypoints and compute the descriptors in both images:

orb = cv2.ORB_create()

keypoints_1, descriptors_1 = orb.detectAndCompute(image_query, None)

keypoints_2, descriptors_2 = orb.detectAndCompute(image_scene, None)

The next step is to create the BF matcher object using cv2.BFMatcher():

bf_matcher = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)

The first parameter, normType, sets the distance measurement to use, which is cv2.NORM_L2 by default. When using ORB descriptors (or other binary descriptors such as BRIEF or BRISK), the distance measurement to use is cv2.NORM_HAMMING. The second parameter, crossCheck (which is False by default), can be set to True in order to return only consistent pairs: a pair is kept only if each of the two features is the best match of the other. Once the matcher is created, the next step is to match the computed descriptors using the BFMatcher.match() method:
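To make the two parameters more concrete, the following is a minimal pure-Python sketch of Hamming distance and of the cross-check rule. The helper names (hamming, nearest, cross_checked_matches) and the 1-byte toy descriptors are assumptions for illustration; OpenCV's own implementation is different and far more efficient.

```python
def hamming(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length binary descriptors."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def nearest(query, candidates):
    """Index of the candidate with the smallest Hamming distance to query."""
    return min(range(len(candidates)), key=lambda j: hamming(query, candidates[j]))


def cross_checked_matches(set_a, set_b):
    """Keep (i, j, distance) only when i and j are each other's best match."""
    pairs = []
    for i, desc in enumerate(set_a):
        j = nearest(desc, set_b)
        # Cross-check: the best match of set_b[j] in set_a must be i again.
        if nearest(set_b[j], set_a) == i:
            pairs.append((i, j, hamming(desc, set_b[j])))
    return pairs


# Toy 1-byte descriptors (ORB descriptors are 32 bytes long by default).
set_a = [bytes([0b00001111]), bytes([0b00000111])]
set_b = [bytes([0b00001111]), bytes([0b11110000])]
pairs = cross_checked_matches(set_a, set_b)
```

In this toy data both descriptors in set_a pick the first descriptor in set_b as their nearest neighbour, but only the first one is picked back, so the cross-check discards the second pair.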

bf_matches = bf_matcher.match(descriptors_1, descriptors_2)

Here, descriptors_1 and descriptors_2 are the descriptors computed in the first step; the call returns the best matches between the two images. At this point, we can sort the matches in ascending order of their distance, so that the best matches come first:

bf_matches = sorted(bf_matches, key=lambda x: x.distance)
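Each element returned by match() is a cv2.DMatch object whose queryIdx and trainIdx attributes index into the first and second keypoint lists, respectively, and whose distance attribute is what the sort above uses. The sketch below shows how the sorted matches could be turned into pairs of pixel coordinates. To keep it runnable without images, it uses namedtuple stand-ins (Match and KeyPoint are hypothetical names that only mirror the attributes used here), and the coordinate and distance values are made up.

```python
from collections import namedtuple

# Hypothetical stand-ins mirroring the cv2.DMatch and cv2.KeyPoint attributes used below.
Match = namedtuple("Match", ["queryIdx", "trainIdx", "distance"])
KeyPoint = namedtuple("KeyPoint", ["pt"])

keypoints_1 = [KeyPoint((10.0, 20.0)), KeyPoint((30.0, 40.0))]
keypoints_2 = [KeyPoint((12.0, 21.0)), KeyPoint((33.0, 38.0))]
bf_matches = [Match(0, 0, 35.0), Match(1, 1, 12.0)]

# Same sort as in the text: smallest distance (best match) first.
bf_matches = sorted(bf_matches, key=lambda x: x.distance)

# For each match, look up the matched point in the first and second image.
point_pairs = [
    (keypoints_1[m.queryIdx].pt, keypoints_2[m.trainIdx].pt) for m in bf_matches
]
```

With real data, the same list comprehension should work unchanged on the keypoints and matches produced by the script.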

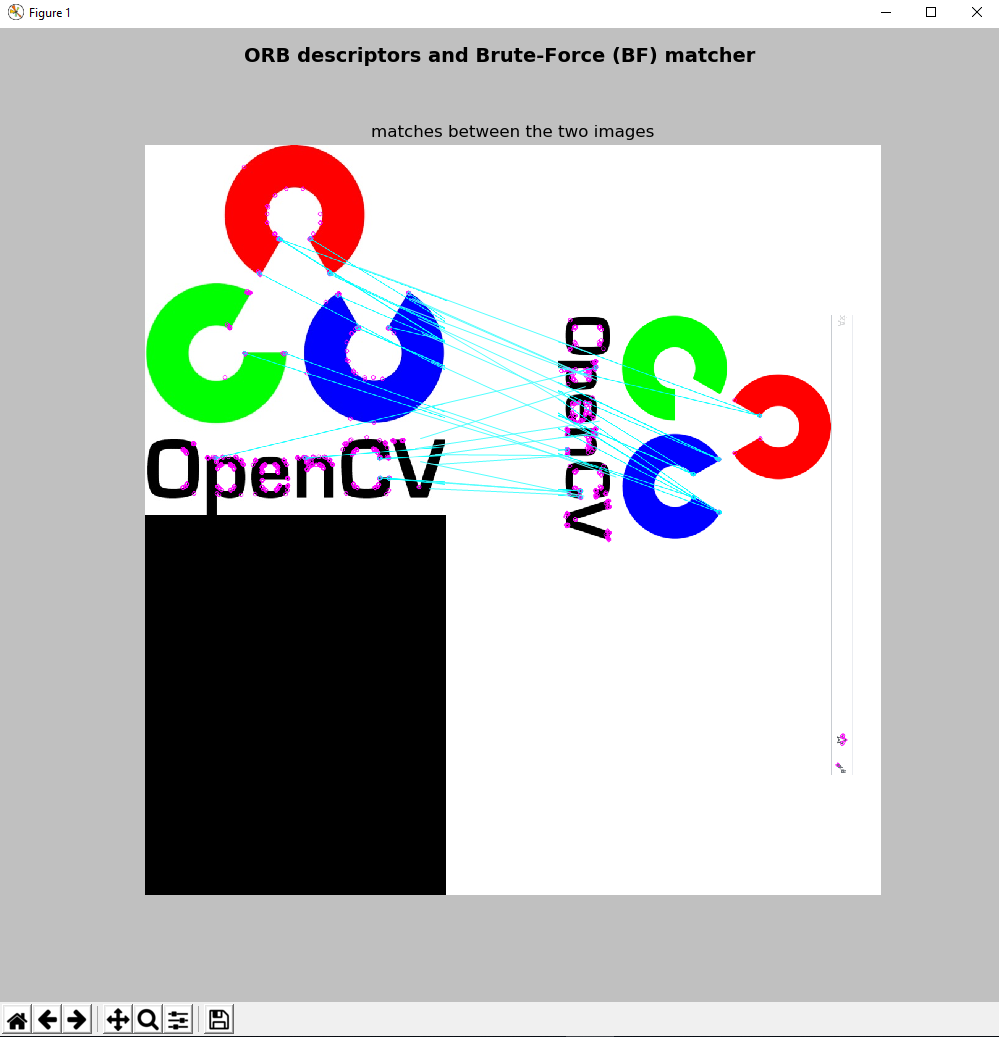

Finally, we can draw the matches using the cv2.drawMatches() function. In this case, only the first 20 matches (for the sake of visibility) are shown:

result = cv2.drawMatches(image_query, keypoints_1, image_scene, keypoints_2, bf_matches[:20], None, matchColor=(255, 255, 0), singlePointColor=(255, 0, 255), flags=0)

The cv2.drawMatches() function concatenates two images horizontally, and draws lines from the first to the second image showing the matches.

The output of the feature_matching.py script can be seen in the next screenshot:

As you can see in the previous screenshot, the matches between both images (image_query, image_scene) are drawn.
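Because cv2.drawMatches() places the second image to the right of the first, a keypoint from the second image appears on the output shifted horizontally by the width of the first image. The following sketch works out that geometry in plain Python; the function names and the example sizes are assumptions for illustration and are not part of the OpenCV API.

```python
def match_line_endpoints(pt_query, pt_scene, query_width):
    """Endpoints of the line joining a matched pair on the side-by-side canvas."""
    x1, y1 = pt_query
    x2, y2 = pt_scene
    # The scene image starts where the query image ends, so shift its x coordinate.
    return (round(x1), round(y1)), (round(x2 + query_width), round(y2))


def canvas_size(query_shape, scene_shape):
    """(height, width) of the canvas holding both images side by side."""
    (h1, w1), (h2, w2) = query_shape, scene_shape
    return max(h1, h2), w1 + w2


# Hypothetical sizes: a 240x320 query image and a 480x640 scene image.
start, end = match_line_endpoints((30.0, 40.0), (33.0, 38.0), query_width=320)
size = canvas_size((240, 320), (480, 640))
```

This offset is worth keeping in mind when reading coordinates off the result image, since points on the right-hand side are not in the scene image's own coordinate system.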