Chapter 5

Turing’s Fantastic Machine

It is possible to invent a single machine which can be used to compute any computable sequence.

– Alan Turing

Computers seem to have more in common with engineering than they do with maths, and when it comes to hardware or programming applications that’s certainly true. But the theory of computation – theoretical computer science – belongs very much within the realm of mathematics. Our trek through the weird maths of computers, to explore the outer limits of what it’s possible to compute, begins almost a century ago, well before the first electronic brains flickered into life.

In 1928, the German mathematician David Hilbert, renowned for challenging his peers with unsolved questions, posed what he called the Entscheidungsproblem or ‘decision problem’. This asked whether it’s always possible to find a step-by-step procedure to decide, in a finite time, if a given mathematical statement is true or not. Hilbert thought the answer would turn out to be ‘yes’ but, in less than a decade, that hope had been dashed.

The first blow came in a paper published in 1931 by the Austrian-born logician Kurt Gödel, whose work, which we’ll come across in more detail in the final chapter, was concerned with axiomatic systems – collections of rules, or axioms, taken to be self-evidently true – that can be used to derive theorems. Gödel showed that in any axiomatic system that’s logically consistent, and big enough to encompass all the rules of arithmetic, some things are bound to be true that can’t be proven to be true from within the system. What became known as Gödel’s incompleteness theorems meant that there’d always be some mathematical truths that were unprovable. This revelation came as a nasty shock to many but still left the door open on the question of the decidability of mathematical propositions, in other words, of finding a series of steps, or an algorithm, that was guaranteed to decide whether any given proposition was provable or not – and if provable, whether it was true or false. Soon, though, that door was to be slammed shut as well, in part due to a young Englishman, Alan Turing, who helped give the final verdict on the Entscheidungsproblem.

Turing’s life was a blend of triumph and tragedy – triumph because he was a genius who helped found computer science and shorten World War II, tragedy because of the way society treated homosexuals at the time. From an early age it was clear that Turing had remarkable talents in maths and science. These were in evidence at Sherborne School, in Dorset, which he began attending in 1926 at the age of 13. A fellow pupil was Christopher Morcom, another outstanding student, with whom Turing forged a deep friendship. The sudden death of Morcom in 1930 had a profound effect on Turing. He threw himself even more single-mindedly into his mathematical studies and acquired, because of the loss, a keen interest in the nature of mind and the possible survival of the spirit after death, a subject he thought might find a solution in quantum mechanics.

As an undergraduate at Cambridge, Turing took a course in logic during which he learned about the Entscheidungsproblem. He decided to focus on it as part of his graduate research having become convinced that Hilbert was wrong. There did not always exist, he believed, an algorithm for deciding whether or not a specific mathematical assertion could be proved. To tackle the decision problem, Turing needed a way of implementing algorithms in general: an idealised device that could carry out any logical set of instructions that was given to it. What he came up with was a purely abstract machine – he called it an a-machine (a for ‘automatic’), though it soon became known as a Turing machine – which he never intended should actually be built. Its design is purposely very basic and it would be painfully slow to operate. It’s meant to be just a mathematical model of a computing machine and could hardly be simpler.

A Turing machine consists of an indefinitely long tape divided into squares, on each of which may be a 1, a 0, or a blank, and a read/write head. The head scans one square at a time and performs an action based on the head’s internal state, the contents of the square, and the current instruction in its logbook or program. The instruction might be, for instance: ‘If you’re in state 18 and the square you’re looking at contains a 0 then change it to a 1, move the tape one square to the left, and switch into state 25.’

On the machine’s tape to start with is its input, in the form of a finite pattern of 1’s and 0’s. The read/write head is positioned over the first square of the input, say the leftmost, and follows the first instruction that it’s been given. Gradually, it works its way through the instruction list, or program, transforming the initial string of 1’s and 0’s on the tape into a different string, until, eventually, it comes to a halt. When the machine reaches this final state, what’s left on the tape is the output.

A very simple example would be adding one more to a row of n 1’s; in other words, turning n into n + 1. The input would be the string of 1’s followed by a blank square, or, in the special case where n = 0, just a blank square. The first instruction would tell the read/write head to start at the first non-blank square, or at any square if it were known that the tape was completely blank, and read what was on the square there. If it were a 1, the instruction would be to leave it unchanged and move one square to the right while remaining in the same state; if it were a blank, the instruction would be to write a 1 in that square and stop. Having added a 1 to the string, the head might be told to stop where it was or return to the start, possibly to repeat the whole process again and add one more to the total. Alternatively, a different state could be introduced when the read/write head is positioned at the final 1 and a new program of actions continued from there.

Some Turing machines might never stop, or never stop for a given input. For example, a Turing machine that’s instructed always to move to the right, whatever is in the squares it reads, will never stop, and it’s easy to see this in advance.

Turing then envisioned a specific kind of Turing machine, now known as a universal Turing machine. In theory this could run any possible program. The tape would consist of two distinct parts. One part would encode the program while the other held the input. The read/write head of a universal Turing machine would move between these parts and carry out the program’s instructions on the input. It’s such a simple device: an infinitely long tape that holds both the program to be run and the input/output, and a read/write head. It can carry out just six basic operations: read, write, move left, move right, change state, and halt. Yet, despite this simplicity, the universal Turing machine is astonishingly capable.

You probably own at least one computer. It could have any operating system – some version of Windows perhaps, or of Mac or Android, or some other system, such as Linux. Manufacturers like to point out the advantages and special features of their own operating systems that distinguish them from the competition. However, from a mathematical standpoint, given enough memory and time, all the different operating systems are identical. What’s more, they’re all equivalent to a universal Turing machine, which at first glance looks far too simple to be as powerful, in terms of capability although not efficiency, as any computer in existence.

The universal Turing machine led to a concept known as emulation. One computer can emulate another if it can run a program (known as an emulator!) that effectively turns it into that computer. For example, a computer running Mac OS can execute a program that makes it behave as if it were running Windows – although this takes a lot of memory and is slow to process. If such an emulation is possible, the two computers are mathematically equivalent.

A programmer can also quite easily write a program to enable any computer to emulate any specific Turing machine – including a universal Turing machine (again, assuming an unlimited amount of memory is available). By the same token, a universal Turing machine can emulate any other computer by running a suitable emulator. The bottom line is that all computers, granted enough memory, can run the same programs, although it may be necessary to code them in certain specific languages depending on how the system is set up.

There have even been various physical implementations that follow Turing’s original design. These have been done mainly either as an engineering exercise or to explain how simple computation works. A number have been built using Lego, including one from a single Lego Mindstorms NXT set. In contrast, the working model created by Wisconsin inventor Mike Davey ‘embodies the classic look and feel of the machine presented in Turing’s paper’, and is on long-term display at the Computer History Museum in Mountain View, California.

A Turing machine of the form originally conceived by Alan Turing, constructed by Mike Davey.

Turing’s actual purpose in coming up with his cunning device, as mentioned earlier, was to solve Hilbert’s decision problem, which he did in 1936 in a paper titled ‘On Computable Numbers with an Application to the Entscheidungsproblem’. A universal Turing machine may or may not stop given any input. Turing asked: is it possible to determine whether it stops or not? You might try running it indefinitely and see what happens. However, if it went on for a long time and you chose to give up at a certain point, you’d never know whether the Turing machine was going to stop right after that point or later on, or whether it would carry on forever. Of course, it’s possible to evaluate the outcome on a case-by-case basis, just as we can work out whether a simple Turing machine ever halts. But what Turing wanted to know was if there was a general algorithm that could decide the outcome – whether the machine stops or not – for all inputs. This is known as the halting problem, and Turing proved that no such algorithm exists. He then went on to show, in the final part of his paper, that this implies that the Entscheidungsproblem can’t be solved. We can be certain that no matter how ingenious a program is, it can never work out, in all cases, whether any other program will terminate.

A month before Turing’s landmark paper first appeared in print, the American logician Alonzo Church, who was Turing’s PhD supervisor, independently published a paper that reached the same conclusion but using a completely different approach called lambda calculus. Like a Turing machine, lambda calculus provides a universal model of computation but more from a programming language than a hardware point of view; it deals with ‘combinators’, which are essentially functions that act on other functions. Both Church and Turing, using their different methods, arrived at essentially the same result, which became known as the Church–Turing thesis. The gist of this is that something can be calculated or evaluated by human beings (ignoring the little matter of resource limitations) only if it’s computable by a Turing machine or a device that’s equivalent to a Turing machine. For something to be computable, it means that a Turing machine, given the program as input (encoded into binary), can run before ultimately terminating with the answer (similarly encoded) as output. A key implication of the Church–Turing thesis is that a general solution to the Entscheidungsproblem is impossible.

Although Turing invented his machine to solve a mathematical problem he effectively laid down a blueprint for the development of digital computers. All modern computers basically do what Turing machines do, and the concept is also used to measure the strength of computer instruction sets and programming languages. These are said to be Turing complete, and therefore at the pinnacle of computer program strengths, if they can be used to simulate any single-tape Turing machine.

No one has yet come up with a way of computing things that can do more than what a Turing machine does. Recent developments in quantum computers seem, at first sight, to offer a way of transcending the Turing machine’s powers. But, in fact, even a quantum computer, given enough time, can be emulated by any ordinary (classical) computer. For some types of problems, quantum computers may be much more efficient than any classical equivalent but, in the end, everything of which they’re capable can be done on the simple device that Turing envisioned. What this shows is that there are some things we can’t hope to compute and get a general answer that’s guaranteed to be accurate (although we may be able to do it on a case-by-case basis).

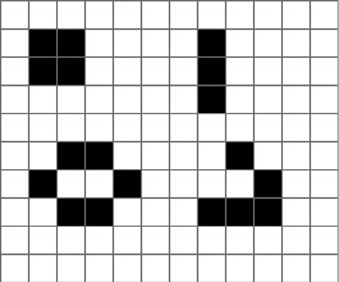

There are other things in maths that, superficially, look nothing like Turing machines, but turn out to be equivalent to them by emulation. One example is the Game of Life, devised by the English mathematician John Conway. The game came about because of Conway’s interest in a problem investigated in the 1940s by the American mathematician and computer pioneer John von Neumann. Could a hypothetical machine be contrived that could make exact copies of itself? Von Neumann found that it could by creating a mathematical model for such a machine using very complicated rules on a rectangular grid. Conway wondered if there was a much simpler way of proving the same result, and so arrived at the Game of Life. Conway’s game is played on an (in theory) infinite square grid of cells that can be coloured either black or white. Some starting pattern of black cells is laid down and then allowed to evolve according to two rules:

1. A black cell remains black if exactly 2 or 3 of its eight neighbours are black.

2. A white cell turns black if exactly 3 of its neighbours are black.

That’s all there is to it. Yet, despite the fact that a child could play it, Life has all the capability of a universal Turing machine – and therefore of any computer that has ever been built. Conway’s remarkable game was first brought to the attention of the wider world through Martin Gardner’s Mathematical Games column in the October 1970 edition of Scientific American. Gardner introduced his reader to some of the basic patterns in Life, such as the ‘block’, a single 2 by 2 black rectangle, which under the rules of the game never changes, and the ‘blinker’, a 1 by 3 black rectangle, which alternates between two states, one horizontal and the other vertical, keeping a fixed centre. The ‘glider’ is a 5-unit shape that moves diagonally by a distance of one square every 4 turns.

Four common Life patterns. On the left is a ‘block’ (top) and a ‘beehive’ (bottom). These are both ‘still lifes’, which means that they stay the same in each generation. The top-right pattern is a ‘blinker’, the most common oscillator, which returns to its starting position after a number of generations. In this case, it alternates between a vertical and horizontal form. The bottom-right pattern is a ‘glider’.

A ‘glider’, in four generations, moves diagonally by one cell.

Conway originally thought that no pattern that was chosen at the outset would grow indefinitely – that all patterns would eventually reach some stable or oscillating state, or die out altogether. In Gardner’s 1970 article on the game, Conway issued a challenge with a $50 reward for the first person who could either prove or disprove this conjecture. Within weeks the prize had been claimed by a team from the Massachusetts Institute of Technology led by mathematician and programmer Bill Gosper, one of the founders of the hacker community. The so-called Gosper glider gun, as part of its endless, repetitive activity, spits out a steady stream of gliders at a rate of one per 30 generations. As well as being fascinating to watch, this also turns out to be of great interest from a theoretical standpoint. Ultimately, the Gosper guns are crucial for building computers in Life because the streams of gliders they emit can be considered analogous to electrons in a computer. In a real computer, though, we need ways of controlling these streams in order to actually compute, which is where logic gates come in.

A logic gate is an electronic component that takes in one or more signals as an input and gives a signal as an output. It’s possible to build a computer from just one type of logic gate but using three types makes the task a lot easier. The gates in question are NOT, AND, and OR. A NOT gate outputs a high signal as an output if and only if it receives a low signal as an input. An AND gate outputs a high signal as an output if and only if both its inputs are high. An OR gate outputs a high signal if and only if at least one input is high. These gates can be combined to form circuits that can both process and store data.

An infinite circuit of logic gates can be used to simulate a Turing machine. In turn, logic gates can be simulated by patterns in Conway’s Game of Life – specifically, by using various combinations of Gosper glider guns. A stream of gliders issuing from one of these guns can represent a ‘high’ signal (a ‘1’), and an absence of gliders a ‘low’ signal (a ‘0’). Crucially, one glider can block another one because if two gliders meet in the right way, they annihilate each other. The final piece of the puzzle is something known as an ‘eater’, which is a simple configuration of seven black cells. An eater can absorb excess gliders, thereby preventing them from disrupting other parts of the pattern, while itself remaining unchanged. Combinations of Gosper glider guns and eaters are all it takes to simulate various logic gates, which can then be put together to simulate a complete Turing machine. So, remarkably, there’s nothing that even the world’s most powerful supercomputer can do that, given enough time, can’t be computed using the Game of Life. It’s also impossible to write a program that will predict the fate of any arbitrary Life pattern, as such a program would then be able to solve the halting problem. The Game of Life, like life itself, is unpredictable and full of surprises.

The modern field of computation theory is built on Turing’s ideas, but now also embraces another concept that Turing did not consider. In his famous 1936 paper, Turing was concerned only with the existence of algorithms not their efficiency. But in practice we also want our algorithms to be fast so that computers can solve problems as quickly as possible. Two algorithms might be equivalent – that is to say, they can both solve the same problem – but if one takes a second and the other a million years we’d obviously choose the former. The trouble with quantifying the speed of algorithms is that it depends on many factors, to do with both hardware and software. For example, different programming languages may result in different execution speeds for the same set of instructions. Computer scientists commonly use Big O (‘O’ for ‘order’) notation to quantify speed in relation to the size of the input (n). If a program runs in order n, or O(n) time, this means that the time taken for the program to run is roughly proportional to the size of the input. This is the case, for example, when adding two numbers together using decimal notation. Multiplying numbers, however, takes longer – O(n2) time.

If a program runs in something called polynomial time, it means that the time taken is no more than the input size raised to a fixed power. This is generally considered fast enough for most purposes. Of course, if the power were huge – say, the 100th power – the program would take far too long to run, but this hardly ever happens. One example of an algorithm with a reasonably high power is the Agrawal–Kayal–Saxena (AKS) algorithm, which can be used for testing whether a number is prime. This runs in O(n12) time so in most cases a different algorithm is used, which runs slower than polynomial time but is faster than the AKS algorithm for many practical values. In searching for new, very large primes, however, the AKS algorithm comes into its own.

Suppose we take a very simple-minded approach to determining whether or not a number, consisting of n digits, is prime. This would involve checking all the numbers from 2 up to the square root of the given number to see if they were factors. We can exploit a few shortcuts, such as skipping even numbers, but still the time taken to test for primality this way comes out to be O(√10n), or roughly O(3n). This is exponential time, which is manageable using a computer providing that n is fairly small. To test a 1-digit number for primality using this method involves three steps, which, assuming 1 quadrillion steps per second (a typical speed for a supercomputer), would take 3 femtoseconds (3 quadrillionths of a second). A 10-digit number would take about 60 picoseconds to check, and a 20-digit number about 3.5 microseconds. But running on exponential time, a program eventually gets hopelessly bogged down. A 70-digit number, using our primitive method, would take about 2.5 quintillion seconds to check, which is far longer than the present age of the universe. Fast algorithms prove their worth in such situations.

Using something like AKS, assuming the time taken is the twelfth power of the input size, a 70-digit number takes ‘only’ 14 million seconds, or 160 days, to check for primality. This is still a long time for a high-speed computer run, but is like the wink of an eye compared with the cosmic timescales demanded by an exponential algorithm. Polynomial algorithms may or may not be practical in reality, but exponential algorithms are certainly not practical when dealing with large inputs. Fortunately, there’s also a wide range of algorithms between the two, and it’s often the case that algorithms that are nearly polynomial work well enough in practice.

The Turing machines we’ve talked about so far have all had one important thing in common. The lists of rules – the algorithms – telling them what to do have always prescribed just one action to be carried out in any situation. Such Turing machines are said to be deterministic Turing machines (DTMs). When given an instruction, they mechanically follow that instruction: they cannot ‘choose’ between two different instructions. However, it’s also possible to conceive of another type, known as non-deterministic Turing machines (NTMs), which for any given state of the read/write head and any given input, allow more than one instruction to be carried out. NTMs are just thought experiments – it would be impossible to actually build one. For example, in its program an NTM might have both ‘If you are in state 19 and see a “1”, change it to a “0” and move one place to the right’ and ‘If you are in state 19 and see a “1”, leave it unchanged and move one place to the left.’ In this case, the internal state of the machine and the symbol being read on the tape don’t specify a unique action. The question then is: how does the machine know which action to take?

An NTM explores all the possibilities for solving a problem and then, at the end, chooses which, if any, is the correct answer. One way to think of this is that the machine is an exceptionally lucky guesser that always manages to pick the right solution. Another, and perhaps more reasonable, way to picture an NTM is as a device that gains in computational power as it goes along, so that whatever is thrown at it at each step of the calculation takes no longer to process than the previous step. The task in hand might be, for instance, to search a binary tree – an arrangement of data that, at each point or node, splits off into two more options. Suppose the object is to find a certain number, say 358, in the tree. The machine has to go down every possible route until it comes across this value. An ordinary Turing machine – a DTM – would have to go down every possible path through the tree, one after another, until it hit upon the target value. Because the number of branches increases exponentially, doubling at every level of the tree, the time taken to locate the node containing 358 would be hopelessly long unless, by good fortune, it didn’t lie too far down the tree. With an NTM available, however, the situation changes dramatically. As each level of the binary tree is reached the NTM can be imagined to double in processing speed, so that it searches every level of the tree in the same amount of time, no matter how many nodes there are.

In principle, everything an NTM can do, a DTM could also do, given enough time. But that ‘enough time’ is the catch. An NTM could do in polynomial time what would take a DTM exponential time. Too bad, we can’t ever actually build one. What these computers of the imagination let us do, however, is get to grips with one of the great unsolved problems of computer science and of mathematics as a whole: the so-called P versus NP problem. One million US dollars, from the Clay Mathematics Institute, awaits the first researcher to come up with a provably correct solution. P and NP are the names given to two sets of problems having different classes of complexity. Problems in set P (‘polynomial’) are those that can be solved by an algorithm running in polynomial time on an ordinary (deterministic) Turing machine. Problems in set NP (‘non-deterministic polynomial’) are those that we know how to solve in polynomial time if we had access to an NTM. (Factoring large numbers is one such problem. An NTM can search through the binary tree for the ‘right’ factor rapidly, in polynomial time, whereas a DTM has to search every single branch, taking exponential time.)What this means is that every problem in P is also in NP as an NTM can do anything an ordinary Turing machine can do in the same amount of time.

It seems reasonable to suppose that NP is bigger than P because it includes problems that are only known to be tractable on a Turing machine with super powers: one that’s enhanced by making it outrageously lucky or ridiculously fast. However, there is, as yet, no proof that a regular DTM cannot do everything an NTM can, even though it seems highly likely. To mathematicians, though, there’s a world of difference between reasonable supposition and certainty. Until it’s demonstrated otherwise it remains possible that someone will prove that the sets P and NP are equal, which is why it’s called the P versus NP problem. One million dollars is a handsome prize, but how could anyone ever claim it when it means proving (or disproving) that all NP problems are P? A small ray of hope is offered by certain problems in NP that are said to be NP-complete. NP-complete problems are remarkable in that if a polynomial algorithm could be found that would run on an ordinary Turing machine and solve any one of these problems, then it would follow that there’s a polynomial algorithm for every single problem in NP. In this case, P = NP would be true.

The first NP-complete problem was found by the American-Canadian computer scientist and mathematician Stephen Cook in 1971. Known as the Boolean satisfiability problem, or SAT, it can be expressed in terms of logic gates. It starts with an arrangement of arbitrarily many logic gates and inputs (but no feedback), and exactly one output. It then asks whether there’s some combination of inputs that will turn the output on. A solution could always be found, in principle, by testing every possible combination of inputs to the entire system, but this would be equivalent to an exponential algorithm. To show that P = NP, it would have to be shown that there was a faster – polynomial – way of getting the answer.

While SAT was the first NP-complete problem to be identified, it isn’t the most famous. That honour belongs to the Travelling Salesman problem, which had its origins in the middle part of the nineteenth century. A manual for travelling salesmen published in 1832 talked about the most effective way to tour locations in Germany and Switzerland. The first academic treatment came a decade or two later from the Irish physicist and mathematician William Hamilton and the Church of England minister and mathematician Thomas Kirkman. Suppose a salesman has to travel to a large number of cities and knows the distance, which doesn’t necessarily have to be by a straight route, between each pair of cities. The problem is to find the shortest path that visits all of the cities and returns to the start. Only in 1972 was it shown that the Travelling Salesman problem is NP-complete (meaning that a polynomial algorithm for this problem would prove P = NP), which explained why generations of mathematicians, latterly even using computers, had trouble finding optimal solutions for complicated routes.

The Travelling Salesman problem may be easy to grasp but it’s no easier to solve than any other NP-complete problem: they’re all as hard as each other. Mathematicians are tantalised by the fact that finding a polynomial algorithm for any NP-complete problem would prove that P = NP. Such a proof would have serious implications, including that there’d be a polynomial algorithm for cracking RSA – the method of encryption, described later on, that we rely upon on a daily basis, for example for banking. But in all likelihood none exists.

While non-deterministic Turing machines exist only in the mind, quantum computers, which are also potentially very powerful, are in the early stages of development. As their name suggests, they make use of some of the very strange goings-on in the realm of quantum mechanics and work, not with ordinary bits (binary digits), but with quantum bits, or ‘qubits’. A qubit, which can be as simple as an electron with an unknown spin, has two properties due to quantum effects that an ordinary bit in a computer does not. First, it may be in a superposition of states. This means a qubit may represent both a 1 and a 0 at the same time, and only resolves into one or the other when it’s observed. Another way of interpreting this is that the quantum computer, along with the rest of the universe, splits into two copies of itself, one of which has the bit 1 and the other the bit 0. Only when the qubit is measured does it, and the universe around it, coalesce to a specific value. The other curious property upon which quantum computers depend is entanglement. Two entangled qubits, although separated in space, are linked by what has been called ‘spooky action at a distance’ so that measuring one instantaneously affects the measurement for the other.

Professor Winfried Hensinger (left) and Dr Sebastian Weidt (right) working on a quantum computer prototype.

Quantum computers are computationally equivalent to Turing machines. But, as we’ve seen, there’s a difference between being able to compute something at all (given enough time) and being able to compute it efficiently. Anything a quantum computer can do (or will be able to do) is also achievable on a classical paper-tape Turing machine if we’re prepared to wait a few geological eras or more. Efficiency is a different matter altogether. For some types of problem, quantum computers are likely to be many times faster than today’s conventional computers, although, in terms of what is actually computable, all have exactly the same capability as Turing’s original design.

It’s tempting to think that quantum computers are the same as non-deterministic Turing machines, but this isn’t the case. Computationally, they’re equivalent, because non-deterministic Turing machines can’t surpass deterministic Turing machines in terms of what is computationally possible (you could program a DTM to simulate either of them). In terms of efficiency, however, quantum computers are suspected to be inferior to NTMs – not surprisingly, since NTMs are entirely devices of the imagination. In particular, it’s unlikely, although it remains to be seen, whether they’ll be able to solve NP-complete problems in polynomial time. One problem that has been solved in polynomial time with quantum computers, which was previously thought to have no such solution (assuming P = NP is false), is the factoring of large numbers. In 1994 the American applied mathematician Peter Shor found a quantum algorithm that makes use of the special properties of the problem. Unfortunately, similar techniques can’t be applied to other problems, such as those known to be NP-complete. If there’s any polynomial algorithm to solve an NP-complete problem with quantum computing, it will again have to exploit specific features of the situation.

Quantum computers, like most new upstart technologies, bring both hope and headaches. Among the latter is the possibility of cracking codes that were previously thought to be highly secure, mainly because, despite decades of research, no one has found any polynomial-time method of cracking them. Modern encryption methods are based on an algorithm known as RSA, which is an initialism of its inventors, Ron Rivest, Adi Shamir, and Leonard Adleman. Using the algorithm to encrypt data can be done very quickly and happens many times, every second of every day, during online data transactions. However, applying RSA in reverse, to decrypt data, is extremely slow, requiring exponential time, unless special information is provided. This asymmetry of speed and the need for special information explains why RSA is so effective. The way RSA works is that each person using the system has two keys, a public key and a private key. The public key allows encryption and can be known to everyone, whereas the private key enables decryption and is known only to the owner of the keys. Sending a message is easy because it involves just applying the algorithm with the public key. However, the message can be read only by the intended recipient, who knows the private key. It’s theoretically possible to work out the private key if given the public key but it depends on being able to factor huge numbers with hundreds of digits. If the keys are large enough, it would take all the computers in the world, working together far longer than the current age of the universe, to decrypt the messages we send daily during our banking and other confidential transactions. Quantum computers, however, threaten to change all this.

In 2001, Shor’s algorithm, a method of factoring numbers in polynomial time, was used with a 7-qubit computer to factor 15 into 3 × 5. A decade later, the factorisation of 21 was achieved by the same method. Both achievements may seem comically underwhelming, given that any child who knows their times tables could do the same without much delay. However, in 2014, a different technique in quantum computing was used to find the prime factors of much larger numbers, the biggest of which was 56,153. Even this may not seem very impressive when faced with trying to factorise numbers with hundreds of digits. But as quantum computers with more and more qubits become available, it’s only a matter of time before it becomes possible to crack, efficiently, all RSA ciphers. When this happens the current method of handling online transactions will no longer be secure and the banking industry, along with every other aspect of modern life that depends on the secure transfer of data, will be thrown into chaos. Perhaps it will be possible to develop a new encryption system based on an NP-hard problem: in other words, a problem at least as hard as an NP-complete problem, though not necessarily belonging to the NP set. NP-complete problems are very difficult to solve in the worst-case scenarios but good algorithms can usually be found in more typical cases. They would give an encryption method that is generally easy to crack, but with a small probability of being extremely difficult. What’s needed is something that is almost always extremely difficult, taking exponential time or longer, to crack. No encryption method like this has yet been discovered, though it remains a possibility. If quantum computers can’t crack NP-complete (and therefore NP-hard) problems, then if one were ever discovered we might have security again – at least for a while.

Most computer scientists suspect that P ≠ NP. It’s a belief bolstered by decades of research that have failed to find a single polynomial-time algorithm for solving any of the more than 3,000 significant known NP-complete problems. The failure-to-date argument, however, isn’t very convincing, especially in light of the unexpected proof of Fermat’s Last Theorem – a simply stated problem that took intensive effort and cutting-edge methods to resolve. Nor is it particularly convincing to believe that P ≠ NP purely on philosophical grounds. Theoretical computer scientist Scott Aaronson of MIT has said: ‘If P = NP, then the world would be a profoundly different place than we usually assume it to be. There would be no special value in “creative leaps”, no fundamental gap between solving a problem and recognising the solution once it’s found.’ However, both maths and science have shown themselves to be perfectly capable of blindsiding us and transforming our intellectual worldview almost overnight. If it did turn out that P = NP, then, to begin with, there might be little practical impact because a proof, if it existed, would most likely be non-constructive. In other words, although a proof might show that polynomial algorithms existed for NP-complete problems, it wouldn’t be able to actually describe any. For a while at least, our encrypted data would remain secure – though for how long would be uncertain once major mathematical efforts began in search of such an algorithm.

In any event, before the security of our data is threatened by any developments on the P versus NP problem or more efficient algorithms, quantum mechanics may come to our rescue. The field of quantum cryptography may result in a cipher that is completely unbreakable, no matter what decryption techniques are brought to bear on it. An example of a truly unbreakable cipher was found as long ago as 1886 and is known as the one-time pad. The key is a random sequence of letters that is as long as the message. The message is combined with the key by converting letters into numbers (A = 1, B = 2, and so on), adding the corresponding numbers for each letter of the message and key, subtracting 26 if the sum is greater than 26, and converting back to letters. This has been proven to be completely unbreakable. Even if someone had enough time to try out every combination, they’d be completely unable to tell the correct message apart from every possible incorrect message. The whole set-up, however, depends on the key being destroyed after use, because if it were reused then anyone who got hold of both encrypted messages would be able to decrypt them if they knew that the key had been reused. The keys must also be exchanged privately, as anyone who gained access to the key could instantly decrypt the supposedly secure message. One-time pads were once used by Soviet spies and kept in tiny books that were highly flammable to aid in their secure destruction, and are still used on the hotline between the presidents of the United States and Russia. But the need to exchange keys privately and securely is a big disadvantage and makes the method impractical for most purposes, such as online transactions.

Quantum mechanics promises to change all this. It relies on the fact that measuring a certain property of light particles, or photons, known as polarisation, affects the polarisation. (Polarisation describes the way in which the waves associated with photons vibrate at right angles to the direction of their travel.) The crucial fact is, if the polarisation is measured twice in the same direction, the result will be the same. One method of doing the measurement uses a type of filter called an orthogonal filter. If the light is polarised vertically or horizontally, it will pass through an orthogonal filter and retain its polarisation. If it starts off polarised any other way, the light will still pass through, but its polarisation will change to become either vertical or horizontal. Another method of doing the polarisation measurement is using a diagonal filter, which works in a similar way but with light vibrating midway between horizontal and vertical. The final components of the cryptography system are two more filters. One of these tests whether light that has passed through the orthogonal filter is horizontally or vertically polarised; the other performs the same test on light that’s gone through the diagonal filter, testing in which diagonal direction the light is polarised.

Suppose we wanted to send a random bit for use in a one-time pad. We’d send a photon through either the orthogonal or diagonal filter, chosen randomly, and then record whether it was polarised vertically or horizontally. We’d ask the recipient to do the same. They would then tell us which filter they’d used, and we would confirm which filter we’d used. If they were the same, this bit could be stored for later use in a one-time pad. If not, the bit would be thrown out and the process repeated. An eavesdropper wouldn’t be able to tell which filter was used until after that photon had passed through the system and they were no longer able to measure it. Moreover, since measuring the polarisation could change it, after we had enough bits we could compare a small number of them, discarding them afterwards. If they all matched, our channel could be assumed secure and the rest of the bits safely be used in a one-time pad. If not, it would demonstrate the presence of an eavesdropper and all of the bits would be rejected as useless. So, quantum cryptography not only protects a one-time pad from eavesdroppers, but can also detect that an attempt at eavesdropping took place – something beyond the capability of conventional cryptography.

Progress in quantum computing is now very rapid. In 2017, physicists at the University of Sussex published construction plans for future large-scale quantum computers, thereby making the design freely available to all. The Sussex blueprint shows how to avoid a problem, known as decoherence, that had blighted previous laboratory attempts to build devices with more than 10 or 15 qubits. It also describes some specific technologies that would help to make powerful quantum computers, with much larger numbers of qubits, a reality. These include the use of room-temperature ions (charged atoms), confined in traps, to serve as qubits, the application of electric fields to push the ions from one module of the system to another, and logic gates that are controlled by microwaves and variations in voltage. The Sussex team next intends to build a small prototype quantum computer. Meanwhile, other groups at Google, Microsoft, and various start-ups, such as IonQ, are pursuing their own schemes, based on the trapped ion approach, superconductivity, or (in Microsoft’s case) a design known as topological quantum computing. IBM has announced its intention to market a 50-qubit quantum computer ‘in the next few years’, and scientists are already looking ahead to the time when machines with millions or billions of qubits become a reality.

If Turing were alive today he would no doubt be involved in the latest developments in computation, including, very possibly, theoretical work on quantum computers. He would have avoided the primitive attitudes towards sexuality that were pervasive during his life and that surely contributed to his early death. But one thing that he would find unchanged is the concepts of algorithms and universal computation that, through his remarkable, and remarkably simple, machine, he played such a major part in developing.