Chapter 8

The Last Line of Defense

When Christine Urban reported on newspaper credibility in 1989, she gave equal weight to factual errors and mistakes in spelling or grammar as sources of public mistrust.1 In Chapter 5, we found support for her assertion that factual errors are important. Now we turn to spelling and grammar.

Copy editors were the last line of defense in protecting newspapers from error. They had more control over spelling and grammar than they did over factual error. Beyond verifying names and addresses, newspapers did not routinely fact-check their writers.2 A copy editor who lived in the community and knew it well might question a reporter's less intuitive assertions, verify a fact in the newspaper's archives, or even call a source to check the spelling of a name. But the main concern was with form.

In a 2003 survey, Frank Fee and I discovered that copy editors representing no more than 15 percent of daily newspaper circulation in the United States agreed with the statement, “My newspaper rewards copy editors who catch errors in the paper.” While catching errors before they appear in the paper is understandably the higher priority, the response suggests that copy editors were regarded more as pre-production processors than as quality monitors.

Spelling and grammar are important in literate societies because consistency makes the task of reading easier. Seeing a misspelled word in print can stop you in your tracks and break your concentration. For this reason, media go beyond the guidance found in dictionaries and create their own stricter set of standards. A dictionary is intended to describe how the language is actually used, and so it is tolerant of variant spellings and changes in usage. A style book prescribes much more specific rules for spelling and grammar. The basic style book in the newspaper business of the twentieth century was the one produced by the Associated Press. Since most major newspapers were its clients, it served as the default standard, and all the others were variations on it.

In 1989, Morgan David Arant and I realized that an electronic database could be employed for a task its designers never intended: finding errors in spelling and grammar and comparing their rates in different newspapers. Electronic archives were still a novelty, but we were able to search fifty-eight newspapers archived in the Data Times and VU/TEXT systems that were dominant at the time.

Our technique was simple. We searched for the following errors.

miniscule (instead of minuscule)

judgement (instead of judgment)

accomodate (instead of accommodate)

most unique (instead of unique)

The misspellings miniscule and judgement have become so common that dictionaries are starting to recognize them. Style books do not. The absence of the second “m” in accommodate is not allowed by dictionaries. And unique, by definition, is not modifiable by a superlative. A thing is either the only one of its kind or it is not.

To allow for differences in newspaper size and varying word frequencies, we expressed each misspelling or misuse as a proportion of the total uses, correct and incorrect. We found that “minuscule” was misspelled 20 percent of the time across all fifty-eight newspapers. The unwanted e in judgment and the missing m in accommodate accounted for 2 percent of all usages of each word. And the inappropriate most was attached to 1 percent of all uses of unique.
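The proportion described above is simple arithmetic. Here is a minimal sketch in Python, with made-up hit counts standing in for database search results; the function name and the numbers are illustrative assumptions, not figures from the study.

```python
def error_rate(wrong: int, right: int) -> float:
    """Share of all uses (correct plus incorrect) that are errors."""
    total = wrong + right
    if total == 0:
        raise ValueError("the word never appears in the archive")
    return wrong / total


# Hypothetical archive hit counts for one newspaper, not study data.
hits = {"miniscule": 20, "minuscule": 80}

rate = error_rate(hits["miniscule"], hits["minuscule"])
print(f"misspelled {rate:.0%} of the time")
```

Dividing by total uses rather than by issues or pages is what lets a small paper and a large one be compared on the same footing.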

Because databases were new, our sample then was limited to a six-month period in the first half of 1989. The four items were nicely intercorrelated, a sign that they measured some underlying factor that we chose to identify as editing accuracy.

To adjust for the differing frequency of the test words, we standardized the scores before ranking the newspapers. In other words, the error rates were expressed in terms of their standard deviations from the average across all fifty-eight newspapers.

The Ghost in the Newsroom

The best-edited of the fifty-eight papers was the Akron Beacon Journal, scoring nearly a full standard deviation above the mean. I called Dale Allen, the editor, to congratulate him and ask how that feat was managed. He attributed it to corporate culture.

“That's the residue of Jack Knight spending six months of the year here,” he said. “He hated stuff like that.” Knight had been dead for eight years. The culture that he created was persisting.3

The lowest-ranking paper on the list was The Annapolis Capital, seriously lagging at 2.6 standard deviations below the mean. Its explanation was equally simple: the newspaper had not yet invested in a spell-checker for its electronic editing system.

Most papers did use computer spell checking back then. Errors still got into the paper. How? The spell check is not the last operation before a story goes into the paper. Last-minute changes can involve the introduction of words and sentences between the spell check and making the plate for the printing press. Sometimes the copy editor will introduce the error.

When Arant and I reported our results, we warned future researchers that electronic librarians might forestall efforts like ours by fixing spelling errors in the archives. In most cases, that hasn't happened, although the Lexis system incorporates an automatic spelling fixer that hides obvious errors like accomodate.

To see how editing accuracy compares with reporting accuracy in making newspapers credible, I set out to replicate our methodology for the twenty-two newspapers for which Scott Maier and I had collected data on reporting accuracy. Easily accessible databases were available for twenty of them (The Detroit News and the Durham Herald were the exceptions), and I engaged a world-class newspaper librarian, Marion Paynter of The Charlotte Observer, to do the searching. She used Dialog for seventeen of them and Factiva for three.4

Instead of limiting the search to six months as Arant and I had done, we used the period 1995 to 2003, roughly the span for which I had been tracking circulation success.5

And a broader selection of errors was employed. Here is the list, along with the overall frequency of each:

Now it is true that these usages might appear legitimately, as, for example, in a story about misspelling. Or an exact quote from a news source who calls something “the most unique.” And the AP stylebook authorizes the use of underway as one word in those rare cases where it is used as an adjective before a noun in a nautical sense: “the underway flotilla.”6 But these instances are so rare that I judged their influence, if any, to be random.

The search of the twenty newspapers yielded a total of 250,158 word finds, of which 3.95 percent were errors. It's not clear how these results compare to the 1989 study. Minuscule was misspelled much less often this time (12 instead of 20 percent of all attempts), but there is no way to tell whether spelling has improved or whether the current sample of twenty newspapers is of better intrinsic quality than the previous sample of fifty-eight. Most unique appeared with about the same frequency in 1989, while judgment with the unwanted e was somewhat more common back then: 2.2 percent of all attempts compared to the current 1.7 percent.

The different samples at different times were close enough to give us some reason to believe that these are fairly stable measures, not something idiosyncratic and meaningless. It really is a way to measure editing quality.

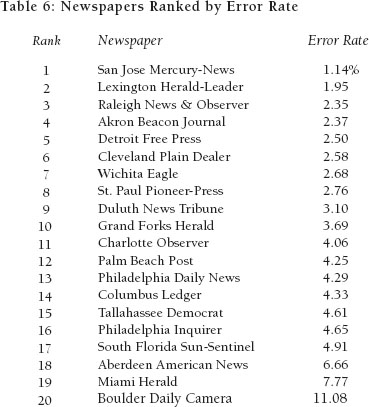

There are two ways to analyze these data. First, let's look at it from the reader perspective and just count the raw percentage of errors. The more often a spelling or usage is wrong or inconsistent, the greater the burden on the reader. So for our first look, here is the ranking of the twenty newspapers according to the number of errors as a percent of all uses. The best are listed first.

Here's what this means to a reader. If you were perusing a random issue of the Boulder Daily Camera from the study period, and you encountered one of the eight words, chances are better than one in ten that it would be wrong. At the San Jose Mercury News, the risk was closer to one in a hundred. That's variance.

But to really be interesting, variance has to correlate with something. If editing accuracy is an indicator of general newspaper quality, then it should predict all sorts of things, including reporting accuracy, credibility, circulation penetration, and robustness.

It didn't. Among all the relevant scatterplots and correlations, no interesting pattern turned up. Whatever readers want in a newspaper, spelling accuracy appeared not to be a primary concern. I can think of two possible reasons, one of which requires further exploration.

The first possibility is that the overall standard for newspaper editing in the United States is so high that readers feel no cause to complain about the exceptional minor lapse. Whether you write miniscule or minuscule, the reader is going to know what you mean. It might bring a few picky old journalism professors like me up short, but most readers won't notice, and those who will don't care.

Standardization in spelling is a relatively recent development. Colonial printers were content to get the phonetics right, and with information still a scarce good, readers were so happy to be getting it that the fine points of presentation didn't matter much. This laxness applied even to proper names. In searching my own family history, I found that I come from a long line of bad spellers because some of my eighteenth-and nineteenth-century ancestors freely interchanged the spellings of Meyer, Myer, Meier, and Maier. Sometimes different spellings referred to the same person in the same document.

So you could make an argument that spelling is not important. But we don't. One notably bad speller at the Miami Herald in the 1950s was the late Tom Lownes. His spelling was so bad that Al Neuharth, then the assistant managing editor, sat him down for a talk.

“Every newsroom needs one bad speller,” Neuharth said. “We need that person to hold up as an example for the rest. He can be a lesson for all the others. We can say to the staff, ‘Look at this person, a terrible speller, a sad case. You don't want to be like him.’ Having such a person is useful, and we appreciate it.”

As soon as Tom relaxed visibly, Al added:

“In this newsroom that person is Steve Trumbull. You learn to spell.”7

There is another possible explanation for the negative findings about the effect of bad spelling. Look at Table 5 again. Two of the most misspelled words, supersede and minuscule, have relatively rare occurrences. Unique is used far more than the others, but misuses are rare. By basing the evaluation of newspaper editing on raw frequency, we are giving disproportionate weight to unique and hardly any weight to the two most-abused words.

That's fine if effect on the reader is what we are looking for. But if we want to measure copy-editing skill, the eight test words should be given equal weight. A simple statistical trick, using standardized scores, makes that possible.
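The weighting problem can be seen in a small sketch. The counts below are invented purely to show the effect: one common word that is rarely wrong and one rare word that is often wrong. Averaging per-word rates is only one way to give words equal weight; it is shown here for contrast and is not the study's standardized-score method.

```python
# Hypothetical counts, chosen only to show the weighting effect.
counts = {
    "unique":    {"uses": 1000, "errors": 10},  # common word, rarely wrong
    "supersede": {"uses": 20,   "errors": 10},  # rare word, often wrong
}


def pooled_rate(counts):
    """All errors divided by all uses: frequent words dominate."""
    errors = sum(c["errors"] for c in counts.values())
    uses = sum(c["uses"] for c in counts.values())
    return errors / uses


def mean_word_rate(counts):
    """Average of the per-word error rates: every word counts equally."""
    rates = [c["errors"] / c["uses"] for c in counts.values()]
    return sum(rates) / len(rates)


print(f"pooled rate:   {pooled_rate(counts):.1%}")
print(f"per-word mean: {mean_word_rate(counts):.1%}")
```

With these invented numbers the pooled rate is about 2 percent, while the per-word mean is 25.5 percent, because the rare but badly abused word no longer disappears into the total.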

But first, let's pull one more trick out of the bag. Factor analysis is a tool invented for psychology to find the underlying elements influencing responses to a large number of test items. Applying it to these eight words reveals that they form two logically distinct groups.

The first group includes the words that are pure misspelling rather than matters of style and grammar: all right, minuscule, judgment, inoculate and supersede. Together, they form the cleanest test of spelling skill.

Two of the remaining three are more indicative of grammar than spelling issues: unique and consensus. For reasons that are not entirely clear, newspapers that got those wrong also tended to mess up under way. So let's treat those three in a separate index that measures grammar and style more than pure spelling.

By calculating the standardized scores (where the mean is zero and each newspaper's score is the number of standard deviations away from the mean— positive if above, negative if below), we give each item equal weight regardless of the number of times it turns up in the newspaper.
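A standardized-score index of this kind might be sketched as follows. The error rates are hypothetical, the function names are mine, and the use of the sample standard deviation is an assumption; the text does not say which form was used.

```python
from statistics import mean, stdev


def standardize(values):
    """Convert raw values to z-scores: (value - mean) / standard deviation."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def equal_weight_index(rates_by_word):
    """Average each paper's z-scores across the test words.

    rates_by_word maps a test word to a list of error rates, one per
    newspaper, with the newspapers in the same order for every word.
    """
    z_by_word = [standardize(rates) for rates in rates_by_word.values()]
    return [mean(paper_scores) for paper_scores in zip(*z_by_word)]


# Hypothetical error rates for three papers; not study data.
rates = {
    "minuscule": [0.05, 0.10, 0.30],
    "supersede": [0.10, 0.20, 0.60],
    "unique":    [0.005, 0.010, 0.030],
}

index = equal_weight_index(rates)
for paper, score in zip(["Paper A", "Paper B", "Paper C"], index):
    print(f"{paper}: {score:+.2f}")
```

Because every word is rescaled to a mean of zero and a standard deviation of one before averaging, a word that appears twenty times carries as much weight as one that appears a thousand times. A lower score here means fewer errors.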

Copy-Editing Skill

That leads to results less meaningful to readers, but it is a better test of editing skill. Perhaps it will be correlated with something interesting.

The rankings of the newspapers on these new dimensions are in Tables 7 and 8. Best-spelling editors were at the San Jose Mercury News, which is more than one standard deviation below the mean in the standardized error score.

Just by eyeballing the list, we can see that it correlates with something interesting: market size. Papers in larger markets are found near the top of the list and those in small places are closer to the bottom. Larger papers have more resources because of their economies of scale. They can afford to have more (and better) copy editors than smaller papers.

An empirical check is possible because of the availability of newsroom census data from the American Society of Newspaper Editors. Sure enough, there was a statistically significant correlation between number of copy editors and spelling accuracy. The number of copy editors explained 25 percent of the variation in spelling accuracy across the newspapers.8
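The link between a correlation and "variation explained" is just the square of the coefficient. The sketch below squares the R reported in note 8 and includes a plain Pearson correlation function for readers who want to try it on their own data; the function name is mine.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


reported_r = -0.497                    # from note 8
variance_explained = reported_r ** 2   # proportion of variation accounted for
print(f"{variance_explained:.1%}")
```

The negative sign says that error scores fall as the number of copy editors rises; squaring removes the sign and gives roughly a quarter of the variation.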

There were some interesting outliers. One pretty big newspaper with lots of copy editors ranked low on spelling accuracy. Another paper with less than half as many copy editors had one of the better spelling scores.

If partial correlation is used to take out the effect of market size, number of copy editors still has a strong effect that leans toward statistical significance.9 But when the direct effect of circulation size on spelling errors is sought— with number of copy editors controlled—there is no effect at all. So newspaper size was not really an issue except to the extent that it enabled the publisher to hire more copy editors.10
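For readers unfamiliar with partial correlation, the first-order version can be computed from three ordinary correlations. This is the standard textbook formula, not output from the study; the input values below are hypothetical.

```python
from math import sqrt


def partial_r(r_xy, r_xz, r_yz):
    """Correlation of x and y with the linear effect of z removed."""
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Hypothetical correlations, for illustration only:
#   x = spelling error score, y = number of copy editors, z = circulation
r_errors_editors = -0.50
r_errors_circ = -0.30
r_editors_circ = 0.60

print(round(partial_r(r_errors_editors, r_errors_circ, r_editors_circ), 3))
```

If the relationship between x and y survives once z is held constant, as in this made-up case, z is not the whole story.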

The ratio of copy editors to reporters, found to be important when we looked at math errors in Chapter 5, had no measurable effect on spelling accuracy. Yet, absolute size of the copy desk did have an effect, perhaps because it increases the interaction and reinforcing behavior of the copy editors. Perhaps the size increases the esprit de corps and morale. Or maybe it just means that copy editors have more time to spend on each story. (I'll have more to say about that.)

Spelling skill appears to be fairly stable over time. A before-after comparison is possible for twelve of the twenty newspapers because they were part of the Meyer-Arant list of fifty-eight in 1989. The standardized scores were positively correlated across the years, with 1989 results explaining 31 percent of the variation in the 1995–2003 period.11

Rankings among the twelve changed drastically for three papers. The Akron Beacon Journal fell eight places, from first to ninth, Jack Knight's ghost evidently having vacated the premises. The Charlotte Observer fell seven places, from fourth to eleventh. And The Miami Herald improved by six places, climbing from ninth to third.

All of the other newspapers on the list remained within one or two places of their 1989 rankings.

There is more to editing than spelling, of course. We turn now to the three often-misused words that were measured in addition to the five spelling examples. They are not highly intercorrelated, so there is not much of a case to be made that they are indicators of the same kind of editing skill. That needn't stop us from using them in an index because a newspaper is still better off getting them right. Using the standardized scores for success at avoiding underway, most unique, and general consensus, we got the following set of newspaper rankings.

There is some correlation with the spelling list, but not a lot.12 The San Jose Mercury News topped both lists. Could this have been a consequence of having Knight Ridder corporate headquarters in San Jose? The Miami Herald is inconsistent, ranking high on spelling but low on grammar. The Boulder Daily Camera is at the bottom by virtue of its compulsion to use general consensus. The word consensus appeared in the paper 627 times in the study period, and it was linked to “general” on 47 of those occasions.

Let's look now at the characteristics of the copy desk that might enhance or retard its ability to do good editing.

What is a copy desk? When Polly Paddock joined the desk at The Charlotte Observer in 2003, she provided a colorful definition: “a crack team of eagle-eyed editors who ferret out misspellings, errors of grammar and syntax, muddled thinking, potential libel and all other manner of messes that might otherwise find their way into the newspaper.”13

“Time Is Quality”

Copy editors don't get the glory and the bylines enjoyed by reporters, but their skills are in great demand, and their starting salaries are generally higher than what is needed to attract reporters. They don't meet as many interesting people, but they don't have to go out in bad weather, either. Their job can be frustrating because in addition to the list of responsibilities given by Paddock, the job of page composition, once part of the back shop, was moved by computer technology to the copy desk. That left many copy editors pressed for time. “To a publisher, time is money; to an editor, time is quality,” said John T. Russial in one of the early studies of the effects of newsroom pagination.14

To find out what copy editors thought of their jobs, Frank Fee, former copy desk chief of the Rochester Times-Union and now my colleague at Chapel Hill, designed a survey. I helped with the sample.

Surveys of newspaper personnel are tricky. If you make them representative of all newspapers, you get a lot of small papers because, after all, most newspapers are small. To get around this problem, many academic newspaper surveys are stratified, meaning they draw separately from groups of papers of different size.

Fee and I used a different strategy. We used what sampling guru Leslie Kish has called a “PPS” sample for “probability proportionate to size.” Each newspaper in the United States that is audited by the Audit Bureau of Circulations had a chance of being included that was proportionate to its total circulation size in 2002.15

This procedure means that the largest papers fell into the sample automatically, and it has the advantage of representing newspaper customers rather than the newspapers themselves. In other words, the editors in the sample represent the total audience of people who buy newspapers.16
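One common way to draw a PPS sample is systematic selection along the cumulative size total. The sketch below uses that method as an assumption; the text does not say which selection procedure Fee and I followed, and the circulation figures are invented.

```python
import random
from bisect import bisect_right
from itertools import accumulate


def pps_systematic(sizes, n, rng=None):
    """Pick n units with probability proportionate to size.

    Returns a list of indexes into sizes. A unit at least as large as
    the sampling interval is certain to be picked, possibly more than once.
    """
    rng = rng or random.Random()
    step = sum(sizes) / n             # sampling interval
    start = rng.random() * step       # random start in [0, step)
    cumulative = list(accumulate(sizes))
    picks = []
    for k in range(n):
        point = start + k * step
        idx = bisect_right(cumulative, point)
        picks.append(min(idx, len(sizes) - 1))  # guard against float rounding
    return picks


# Hypothetical circulations: one very large paper and four small ones.
circulation = [1000, 10, 10, 10, 10]
sample = pps_systematic(circulation, n=2, rng=random.Random(1))
print(sample)
```

In this toy list the large paper (index 0) is larger than the sampling interval, so it enters every sample, which mirrors the way the largest papers fell into our sample automatically.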

The last line of defense for quality in newspaper journalism was not a happy place in the early years of the twenty-first century. John Russial has noted that when computerized page composition moved work from the back shop to the copy desk, the newsroom did not get proportional extra staffing. Other responses to competition, including zoned editions and feeding copy to online editions, put still more pressure on the copy desk.

Fee knew from personal experience the kinds of things that bugged copy editors, and he included a long list of them in the questionnaire as an agree-disagree list. There was much more disagreement than agreement on the following. (Numbers show percent disagreement and agreement; they don't add to 100 because some responses were neutral.)

• My newspaper rewards copy editors for good story editing (Disagree by 68 to 10).

• My newspaper rewards copy editors who catch errors in the paper (Disagree by 63 to 14).

• Proportionate to their numbers in the newsroom, as many copy editors went to professional conventions and conferences last year as reporters (Disagree by 61 to 18).

• Copy editors at my paper have as much power as reporters to shape content and quality (Disagree by 47 to 30).

• Most copy editors feel appreciated at my newspaper (Disagree by 47 to 21).

• Copy editors at my paper are held in the same esteem as reporters (Disagree by 40 to 17).

These six items make the kind of scale that statisticians love because of their strong intercorrelation, a sign that the six items, despite their diverse content, are tapping the same underlying factor. We get to name it whatever we want, so let's call it “respect.” Copy editors who feel respected will have higher agreement scores on these items.17 The mean respect score was 2.37 on a scale where 1 is the minimum, 3 is neutral, and 5 is the maximum.
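The usual measure of how well a set of items hangs together is Cronbach's alpha, the statistic reported in note 17. A minimal sketch of the standard formula follows; the responses are invented, and only three items are used to keep the example short.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items holds one list of scores per questionnaire item, with the
    respondents in the same order in every list.
    """
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's sum
    item_variance = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))


# Hypothetical 1-to-5 responses from five editors on three items.
responses = [
    [1, 2, 2, 3, 4],
    [2, 2, 3, 3, 5],
    [1, 3, 2, 4, 4],
]
print(round(cronbach_alpha(responses), 3))
```

Alpha rises toward 1 as the items move together across respondents, which is what justifies adding them into a single score.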

Now the question we've all been waiting for. If copy editing makes a difference in the newspaper's business success, it stands to reason that those news organizations that treat their editors with respect will do better in resisting the long-term decline in circulation penetration.

They do, and the difference leans toward statistical significance.18 Newspapers whose copy editors scored a two or better on the five-point respect scale hung on to an additional 1.5 percentage points of home county penetration between the 2000 and 2003 ABC reports. (This is the difference after a few papers with extreme shifts due to unusual local situations or changes in the method of counting were eliminated.)19

To make this perfectly clear, here's the same information expressed in a different way.

In the period 2000–2003, the low-respect papers kept 96.8 percent of their home-county circulation penetration from one year to the next.

The high-respect papers kept 97.3 percent. That half-percentage point difference per year adds up mighty fast.
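To see how a small annual gap accumulates, the two retention rates can be compounded. The sketch assumes each rate holds constant over three year-to-year intervals, which is my simplification for illustration rather than a result from the survey.

```python
def retained_share(annual_retention: float, years: int) -> float:
    """Fraction of starting penetration left after compounding."""
    return annual_retention ** years


low = retained_share(0.968, 3)    # low-respect papers
high = retained_share(0.973, 3)   # high-respect papers

print(f"low-respect:  {low:.1%} of starting penetration")
print(f"high-respect: {high:.1%} of starting penetration")
print(f"gap:          {high - low:.1%}")
```

Under that assumption the low-respect papers keep roughly 90.7 percent of their starting penetration and the high-respect papers roughly 92.1 percent, and the gap keeps widening with every additional year.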

This leads us to wonder what causes copy editors to feel respected. The biggest factor uncovered in the survey was a light work load. Fifty-nine percent of the editors reported having processed fourteen or fewer stories in their last shift.

Their mean respect score was 2.43, well below the neutral point of 3.0. But it was worse for those who had to work more stories.

The harder-pressed copy editors had a collective respect score of 2.26, a difference that is significant in the traditional sense.20

And they were unhappier on a broad array of factors. They felt less respected by their newspapers' reporters, they saw fewer opportunities for professional development, they liked their bosses less, and they were less likely to feel rewarded for good work.

Here we have strong support for Russial's finding that the new technology-imposed demands on the copy desk created some pressure in newsrooms. The Fee survey indicates it is also creating some foul air. Even though this development was relatively new, its effect was already working its way down to the bottom line enough to show a clear hint of a difference. Attention should be paid.

1. Urban, Examining Our Credibility: Perspectives of the Public and the Press (Reston, Va.: American Society of Newspaper Editors, 1989), 5.

2. One exception was USA TODAY, which employed a fact-checker to vet the work of free-lancers who contributed to the editorial pages.

3. Philip Meyer and Morgan David Arant, “Use of an Electronic Database to Evaluate Newspaper Editorial Quality,” Journalism Quarterly 69:2 (Summer 1992), 447–54.

4. There was no visible systematic difference between the two systems. The three newspapers with Factiva archives were near the top, middle, and bottom in the rankings of editing accuracy.

5. The starting month was January 1995, with the following exceptions: Boulder Daily Camera, March 1995; Duluth News-Tribune, November 1995. All finished in November 2003.

6. Norm Goldstein, ed., AP Stylebook and Briefing on Media Law (New York: Associated Press, 2000), 255.

7. Tom Lownes, personal conversation, Miami, Fla., ca. 1959. Quoted from memory.

8. R = −.497, p = .026.

9. Partial R = −.423, p = .071, controlling for daily circulation in 1995.

10. Partial R = .149, p = .542. Note the sign change. With number of copy editors controlled, error increases (but not significantly) with circulation size.

11. R = .554, p = .062, which leans toward statistical significance. Rank-order correlation was also positive with Spearman's R at .503, p = .095. Referring to the range of .05 < p < .15 as a test value that “leans in a positive direction” has been suggested by John Tukey and is discussed in Robert P. Abelson, Statistics as Principled Argument (Hillsdale, N.J.: Lawrence Erlbaum Associates, 1995), 74–75.

12. Spearman's rank order correlation = .286, p = .209.

13. Polly Paddock, “Writing My Life's Next Chapter,” Charlotte Observer (July 20, 2003): 6H.

14. John Russial, “Pagination and the Newsroom: A Question of Time,” Newspaper Research Journal 15:1 (Winter 1994): 91–101. See also Doug Underwood, C. Anthony Giffard and Keith Stamm, “Computers and Editing: The Displacement Effect of Pagination Systems in the Newsroom,” Newspaper Research Journal 15:2 (Spring 1994): 116–27.

15. Kish, Survey Sampling (New York: Wiley Interscience, 1965), 217–53.

16. The basic sample had a list of 221 newspapers. We merged it with the membership list of the American Copy Editors Society (ACES) to get names of individuals. For newspapers with no ACES members, we wrote and phoned editors listed in the 2003 Editor & Publisher Yearbook and asked them to nominate three copy editors for the survey. With this procedure, we obtained names and addresses of copy editors for eighty percent of the newspapers in the sample.

The mail survey was conducted in the fall of 2003 and yielded a response rate of seventy-three percent of all those invited to participate. Of the original list of newspapers, seventy-six percent were represented in the final survey. To maintain the PPS feature, weights were added to give each of these newspapers the same representation whether the number of editors responding was one, two, or three. The final Ns were 337 editors and 169 newspapers.

17. Cronbach's Alpha for the six-item scale is .741.

18. Student's t = 1.810, equal variances assumed, p = .071.

19. Outliers and extreme values were identified using the SPSS Explore procedure. Seventeen newspapers were eliminated for this part of the analysis.

20. Student's t = 1.977, equal variances assumed, p = .049.