1

On Your Marks

In the Introduction we began our analysis of some of the mental processes that are used to read. For example, we said, “well, somehow you've got to recognize the letters on the page, and then figure out what word those letters signify.” That seems clear enough, but it will help if we back up a step and consider what reading is for. Cognitive psychologists often begin their study of a mental process by trying to understand the “why” before they tackle the “how.”

Vision scientist David Marr is often credited with this idea because he emphasized its importance so clearly, using this example.1 Suppose you want to know the mechanism inside a cash register, but you aren't allowed to tear it open. That's akin to being a psychologist trying to understand how the mind reads; you want to describe how something works, but you can't look inside. If we watched a cash register in operation, we might say things like “when a button is pushed, there's a beeping sound,” and “sometimes a drawer opens and the operator puts in cash or takes some out, or both,” and so on. Fine, but what's the purpose of the beeps and the drawer? What's the goal here?

If we watched the cash register in operation and paid attention to function (not just what we're seeing), we might make observations like “the order of purchases doesn't affect the total,” “if you buy something and then return it, you end up with the same amount of money,” and “if you pay for items individually or all at once, the cost is the same.” A sharp observer might derive some basic principles of arithmetic, as shown in Table 1.1.

Table 1.1. Watching a cash register. Observations of a cash register might lead to basic principles of arithmetic.

| Observation | Arithmetic expression | Principle |
| --- | --- | --- |
| The order of purchases doesn't affect the total | A + B + C = A + C + B = B + A + C, etc. | Commutativity |
| If you buy something and then return it, you end up with the same amount of money you started with | X – Y + Y = X | Negative numbers |
| If you pay for items individually or all at once, the cost is the same | (A) + (B) + (C) = (A + B + C) | Associativity |

Knowing that the purpose of a cash register is to implement principles of arithmetic puts our earlier observations—keys to be pushed, numerals displayed—in a different perspective. We know what these components of cash register operation contribute to.
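The three observations in Table 1.1 can be checked mechanically. What follows is only a minimal sketch: the prices are made up, amounts are held in cents so the sums stay exact, and the `total` function is a hypothetical stand-in for whatever the register does internally.

```python
from itertools import permutations


def total(prices):
    """Add up a sequence of purchase amounts (in cents)."""
    result = 0
    for price in prices:
        result += price
    return result


# Hypothetical prices, in cents.
a, b, c = 250, 1199, 75

# Observation 1: the order of purchases doesn't affect the total.
totals = {total(order) for order in permutations([a, b, c])}
assert len(totals) == 1

# Observation 2: buy something, then return it, and you are back
# where you started (X - Y + Y = X).
x, y = 5000, 1199
assert x - y + y == x

# Observation 3: paying for items one at a time costs the same
# as paying for them all at once.
separate = total([a]) + total([b]) + total([c])
together = total([a, b, c])
assert separate == together
```

None of the assertions mention buttons, beeps, or drawers. They describe what the machine is for, which is the level of description Marr's example is meant to highlight.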

Let's try that idea with reading. What is reading for? We read in order to understand thoughts: either someone else's thoughts, or our own thoughts from the past. That characterization of the function of reading highlights that another mental act had to precede it: the mental act of writing. So perhaps we should begin by thinking about the function of writing. I think I need milk, I write that thought on a note to myself, and later I read what I've written and I recover the thought again: I need milk. Writing is an extension of memory.

Researchers believe that this memory function was likely the impetus for the invention of writing. Writing was invented on at least three separate occasions: about 5,300 years ago in Mesopotamia, 3,400 years ago in China, and 2,700 years ago in Mesoamerica.2 In each case, it is probable that writing began as an accounting system. It was needed to keep records about grain storage, property boundaries, taxation, and other legal matters. Writing is more objective than memory—if you and I disagree about how much money I owe you, it's helpful to have a written record. Writing not only extends memory, it expands it. Creating new memories takes effort. It's much easier to create new written records.

Writing also serves a second, perhaps more consequential function: writing is an extension of speech. Speech allows the transmission of thought. The ability to communicate confers an enormous advantage because it allows me to benefit from your experience rather than having to learn something myself. Much better if you were to tell me to stay out of the river because the current is dangerous than for me to learn that through direct experience. Writing represents a qualitative leap over and above speech in terms of the opportunity it creates for sharing knowledge. Speech requires that speaker and listener be in the same place at the same time. Writing does not. Speech is ephemeral but writing is (in principle) permanent. Speech occurs in just one place, but writing is portable.

Francis Bacon wrote “Knowledge is power” in 1597, presumably after entertaining this thought. When I read his words, I think what Bacon thought, separated in time and space by more than 400 years and 3,500 miles. As poet James Russell Lowell put it, “books are the bees which carry the quickening pollen from one to another mind.”3

Let me remind you of the point of this discussion. We're trying to describe the function of writing as an entrée into our discussion of the mental process of reading. I'm suggesting that writing is meant to preserve one's own thoughts, and to transmit thoughts to others. So now we must ask, “how is writing designed, such that it enables the transmission of thoughts?”

How Writing Might Work

Suppose that we live in a culture without writing, and we encounter a need to transmit thoughts to others who are not present. What method of written communication would seem the most natural? Probably the drawing of pictures. For example, suppose I know that an especially aggressive ram frequents a particular place. I want to warn others, so I incise the image of a ram in a rock wall near where I've seen it before (Figure 1.1).

Figure 1.1. A pictograph of a ram.

Photo by David~O, Flickr, used under CC BY

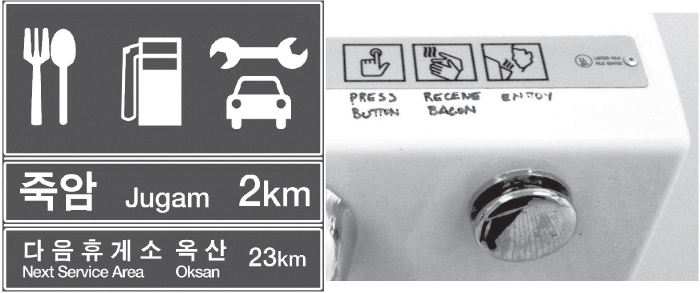

The drawing I've made is called a pictograph, a picture that carries meaning. Pictographs have real functional advantages. Writing them requires no training, and they are readily interpretable; no one is illiterate when it comes to pictographs. But pictographs do have serious drawbacks. First, their very advantage—they are readily interpreted without study—also brings a disadvantage—they are open to misinterpretation (Figure 1.2). My intended warning may be taken to mean Hey, there are lots of rams around here—good place to hunt!

Figure 1.2. The ambiguity of pictographs. The Korean highway sign offers fairly unambiguous pictographs: food, gas, auto repair. Some jokester has added text to the pictographs on the bathroom hand drier showing that they are ambiguous, even if the alternative interpretation is improbable.

© P.Cps1120a, via Wikimedia Commons: http://bit.ly/2a2QSYy; Press button receive bacon © Sebastian Kuntz, Flickr

Another problem is that some thoughts I want to communicate do not lend themselves to pictographs. The ram image would have been less ambiguous if I had put a picture representing danger next to it . . . but what image would represent danger? Or genius? Or possessives like mine or his? (When I want to signify a mental concept, that is, an idea someone is having, I'll use bold italics.)

The problem brings to mind the story Herodotus tells in The Histories concerning the sixth‐century BC conflict between the Persians and Scythians.4 The king of the Scythians sent the king of the Persians a mouse, a frog, a bird, and some arrows. What could such a message mean? The Persian king thought it was a message of capitulation: we surrender our land (mouse), water (frog), horses (which are swift like birds), and military power (arrows). One of his advisors disagreed, saying the message meant unless you can fly into the air (like a bird), hide in the ground (like a mouse), or hide in the water (like a frog), you will die from our arrows. The image of an object might represent the object itself, but when we use it to represent anything else, it is subject to misinterpretation. Pictographs won't do. (By the way, the Persian king was wrong; the Scythians attacked.)

I might turn instead to logographs—images that need not look like what they are intended to represent. For example, I could represent the idea mine by, say, a circle with a square inscribed within. I've sacrificed the immediate legibility of pictographs—you need some training to read the writing now. But I've gained specificity and I've gained flexibility. I can represent abstract ideas like danger and mine and surrender.

But this solution carries a substantial disadvantage. I have introduced the requirement that the writer (and the reader) have some training. They have to memorize the abstract symbols. Educated adults know at least 50,000 words, and memorizing 50,000 symbols is no small job. We could find ways to reduce the burden, for example, by creating logographs so that words with similar meanings could be matched to similar‐looking symbols, but we're still looking at a heavy burden of learning.

Furthermore, we are overlooking an enormous amount of vital grammatical machinery that conveys meaning. When we think about coding our thoughts into written symbols, it's natural to focus on nouns like ram, adjectives like aggressive, and verbs like run. But writing with only those symbols would be cramped Tarzan‐talk: “Ram here. Aggressive. You run.” We want to be able to convey other aspects of meaning like time (The ram is here vs. The ram was here), counterfactual states (The ram is here vs. If the ram were here), whether the aggression is habitual (That ram acts aggressively vs. That ram is acting aggressively), and whether or not I am referring to rams in general (That ram is an aggressive animal vs. A ram is an aggressive animal).

Couldn't I just create symbols for all that stuff? For example, when I wanted to indicate that something happened in the past I could, I don't know, draw a horizontal line over the symbol that functions as a verb. Here's the problem. Grammar is complex. So complex that an entire field of study—linguistics—is devoted to describing how it works, and that description remains incomplete. That's a wild fact to contemplate, considering that children learn to use grammar effortlessly when they learn to talk. No one has to drill them in the rule that past tense is usually indicated by adding ed to a verb. (I will use boldface to indicate spoken language, whether a simple sound or a whole word.) But children (or adults) can describe very few of these rules; we use them without being fully aware of them, just as we know how to stay upright on a bicycle but can't tell anyone just how we do it. That we find it so hard to describe the rules of grammar is likely an important reason that there is not a fully logographic writing system that captures a spoken language. (Westerners often think that modern Chinese is a logographic language; actually, characters may also represent a syllable of spoken Chinese.)

Sound and Meaning

Some of the very earliest writing systems (e.g., Sumerian cuneiform and Egyptian hieroglyphics) included a partial solution to the problem of conveying grammar. Some of the logographs would be used as symbols for sound. For example, the symbol for duck might also, in some contexts, be used to signify the sound d. That allowed writers to denote grammatical features like conjugation. It also allowed the spelling of proper names.5

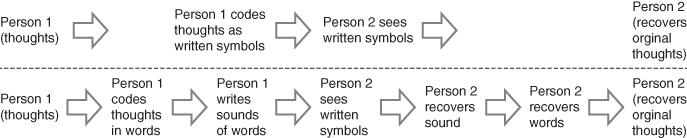

This strategy might have been a stepping stone to phonetic writing systems—systems in which symbols stand for sound, not meaning, such as how we use the Roman alphabet to correspond to the sounds of spoken English. More accurately, we should say mostly phonetic writing systems—all contain some logographs. For example, in English we use “$,” “&,” and emoticons such as “:‐).” (I'll use quotation marks to indicate writing as it would appear on the page.) Sound‐based systems have the enormous advantage of letting the writer use grammar unconsciously, just as we do when we speak. Writing is a code for what you say, not what you think (Figure 1.3). All known writing systems code the sound of spoken language.6

Figure 1.3. Writing is a code for what you say. The top row shows written communication that directly codes meaning. The bottom row shows written communication that codes thoughts into words, and then words into sound.

© Daniel Willingham

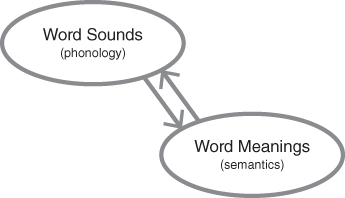

Here's another way to think about how reading works. Humans are born with the ability to learn spoken language with ease. Children don't need explicit instruction in vocabulary or syntax; exposure to a community of speakers is enough. So on the first day of school, before any reading instruction has begun, every child in the class has bicameral mental representations of words: they know the sound of a word (which scientists call phonology), and its meaning (which scientists call semantics) (Figure 1.4).

Figure 1.4. The relationship of word sound and meaning.

© Daniel Willingham

Notice that I've depicted the sound of words and the meaning of words as separate, but linked. How do we know they are separate? Maybe they are different aspects of a single entity in the mind, like a dictionary entry, which gives you the definition of the word and the pronunciation.

A lot of technical experiments indicate that sound and meaning are separate in the mind, but everyday examples will probably be enough to make this idea clear. We know meaning and sound are separate because you can know one without the other. For example, suppose you use the word quotidian. The word might sound familiar to me—I know I've heard it before—even if I don't know the meaning. The familiarity suggests I have some sound‐based representation of the word; it's not like you said pleeky, about which I might think that certainly could be a word, but it's not one I've ever heard. The opposite situation is also possible; there's a concept with which you're familiar, but you have no word associated with it. For example, everyone knows that people have a crease above their lips and below their nose, but few people have a memory entry for the sound of the word naming this anatomic feature, the philtrum.

We also know that sound and meaning are located in separate parts of the brain. Brain damage can compromise one without much affecting the other. Damage to part of the brain toward the front and on the left side can result in terrible difficulty in finding words; the patient knows what she wants to say but cannot remember the words to express it.7 It's the same feeling you have when you feel a word is on the tip of your tongue; you're trying to think of the name of the Pennsylvania Dutch breakfast food made with ground pork and cornmeal, and you know it's in your memory somewhere, you just can't quite find it.

But of course, most of the time, you can find it. If that word is in your memory, my providing the definition is very likely to make the sound of the word (scrapple) come to mind. And conversely, if someone says a word you know—market, for example—you automatically think of the word's meaning. So these mental representations—the sound and the meaning of a word—are separate, but linked; and the link is typically strong and works reliably.

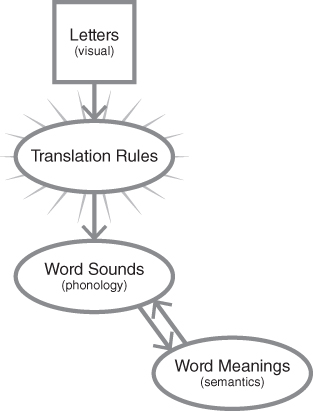

Reading, then, will build on this existing relationship between sound and meaning. It will entail adding some translation process from letters to the sound representations, which already have a robust association with meaning (Figure 1.5).

Figure 1.5. Letters, translation rules, sound, and meaning.

© Daniel Willingham
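The arrangement in Figure 1.5 can be sketched as two lookup tables chained together. This is only an illustration under loud assumptions: the informal respellings (not IPA), the glosses, and the names `SOUND_TO_MEANING`, `LETTERS_TO_SOUND`, `read_word`, and `name_concept` are all hypothetical, and a plain dictionary stands in for translation rules that are far richer in a real reader.

```python
from typing import Optional

# What a child already has before reading instruction: sounds linked to
# meanings. None marks a word that sounds familiar but has no known meaning.
SOUND_TO_MEANING = {
    "MAR-kit": "a place where goods are bought and sold",
    "SKRAP-ul": "breakfast food made with ground pork and cornmeal",
    "kwoh-TID-ee-un": None,
}

# What reading adds: a translation from letters to those existing sounds.
LETTERS_TO_SOUND = {
    "market": "MAR-kit",
    "scrapple": "SKRAP-ul",
    "quotidian": "kwoh-TID-ee-un",
}


def read_word(letters: str) -> Optional[str]:
    """Go from print to meaning by way of sound, never directly."""
    sound = LETTERS_TO_SOUND.get(letters)
    if sound is None:
        return None  # no translation available for this letter string
    return SOUND_TO_MEANING.get(sound)


def name_concept(meaning: str) -> Optional[str]:
    """Follow the link the other way: from a meaning to its sound."""
    for sound, known_meaning in SOUND_TO_MEANING.items():
        if known_meaning == meaning:
            return sound
    return None
```

In this toy version, `read_word("quotidian")` reaches a sound but no meaning, and `name_concept` returns `None` for a concept that has no stored sound, such as the crease above the lip. Those are the two cases used earlier to argue that sound and meaning are separate but linked.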

It's all very nice to say, “we'll code sound instead of meaning,” but it's not obvious how to do so. An architect of writing might first think of coding syllables because they are pretty easy for adults to distinguish. People can hear that daddy has two syllables: da and dee. So we create a symbol for da, another symbol for dee, one for ka, another for ko, and so on. Some writing systems (Cherokee, for example, and Japanese kana) use that strategy. But in English (and indeed, in most languages), there would still be a memorization problem. Spoken Japanese uses a relatively small number of syllables, fewer than 50. English has over 1,000! That's many fewer than the 50,000 symbols we were speculating that a logographic system might require, but it's still a lot of memorization.

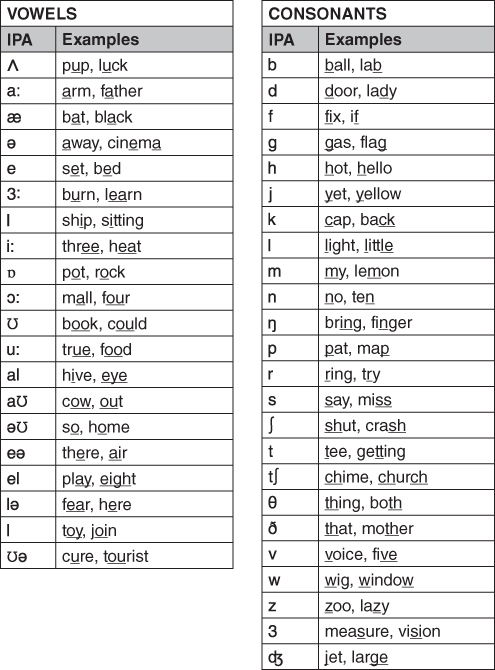

Instead of syllables, English uses an alphabetic system. That means each symbol corresponds to a speech sound, also called a phoneme. There are about 44 phonemes in English (Figure 1.6).

Figure 1.6. The phonemes used in American English. IPA stands for International Phonetic Alphabet.

© Anne Carlyle Lindsay

Now the memorization problem seems manageable—just 44 sounds and 26 letters! That's nothing!

I hope it is now clear to you why we took this side trip through an analysis of writing. Our initial question was “how does the mind read words?” Our analysis of writing gives us a much better idea of what “reading words” will actually involve. First, we must be able to visually distinguish one letter from another, to differentiate “b” from “p,” for example. Second, because writing codes sound, we must be able to hear the difference between bump and pump. Actually, it's not enough to be able to hear that they are different words. We must be able to describe that difference, to say that one word begins with the sound corresponding to the letter “b” and the other begins with the sound corresponding to the letter “p.” Third, we must know the mapping between the visual and auditory components, that is, how they match up. Reading brings challenges in all three processes, and we'll consider them in the next chapter.

References

- 1. Marr, D. (1982). Vision. New York: Freeman.

- 2. Robinson, A. (2007). The story of writing (2nd ed.). London: Thames & Hudson.

- 3. “Review of Kavanagh, a Tale by Henry Wadsworth Longfellow.” (1849, July). The North American Review, 69(144), 207.

- 4. Available at: http://perseus.uchicago.edu/perseus‐cgi/citequery3.pl?dbname=GreekFeb2011&query=Hdt.%204.131.2&getid=1/.

- 5. Schmandt‐Besserat, D., & Erard, M. (2008). Origins and forms of writing. In C. Bazerman (Ed.), Handbook of research on writing (pp. 7–22). New York: Erlbaum.

- 6. Perfetti, C. A. (2003). The universal grammar of reading. Scientific Studies of Reading, 7(1), 3–24. http://doi.org/10.1207/S1532799XSSR0701_02.

- 7. Dick, F., Bates, E., Wulfeck, B., Utman, J. A., Dronkers, N., & Gernsbacher, M. A. (2001). Language deficits, localization, and grammar: Evidence for a distributive model of language breakdown in aphasic patients and neurologically intact individuals. Psychological Review, 108(4), 759–788.

- 8. Bell, L. C., & Perfetti, C. A. (1994). Reading skill: Some adult comparisons. Journal of Educational Psychology, 86(2), 244–255; Gernsbacher, M. A., Varner, K. R., & Faust, M. E. (1990). Investigating differences in general comprehension skill. Journal of Experimental Psychology: Learning, Memory, and Cognition, 16(3), 430–445.

- 9. National Institute of Child Health and Human Development. (2000). National Reading Panel. Teaching children to read: An evidence‐based assessment of the scientific research literature on reading and its implications for reading instruction. Report of the subgroups. Washington, DC. Retrieved from www.nichd.nih.gov/research/supported/Pages/nrp.aspx/.