Code coverage is a measurement of what percentage of the code under test is executed by a test suite. When you run the tests for the Tasks project, some of the Tasks functionality is executed with every test, but not all of it. Code coverage tools are great for telling you which parts of the system are being completely missed by tests.

Coverage.py is the preferred Python tool for measuring code coverage. You’ll use it to check how much of the Tasks project code is exercised by the pytest test suite.

Before you use coverage.py, you need to install it. I’m also going to have you install a plugin called pytest-cov that will allow you to call coverage.py from pytest with some extra pytest options. Since coverage.py is one of the dependencies of pytest-cov, it is sufficient to install pytest-cov, which will pull in coverage.py:

| | $ pip install pytest-cov |

| | Collecting pytest-cov |

| | Using cached pytest_cov-2.5.1-py2.py3-none-any.whl |

| | Collecting coverage>=3.7.1 (from pytest-cov) |

| | Using cached coverage-4.4.1-cp36-cp36m-macosx_10_10_x86_64.whl |

| | ... |

| | Installing collected packages: coverage, pytest-cov |

| | Successfully installed coverage-4.4.1 pytest-cov-2.5.1 |
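If you want to confirm from Python that both packages landed in the active environment, something like the following sketch should work. It assumes Python 3.8 or newer (for `importlib.metadata`), and `installed_version` is a helper name invented for this example.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of a package, or None if absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


for name in ("pytest-cov", "coverage"):
    print(name, installed_version(name) or "not installed")
```

The versions you see will likely differ from the ones in the listing above, depending on when you install.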

Let’s run the coverage report on version 2 of Tasks. If you still have the first version of the Tasks project installed, uninstall it and install version 2:

| | $ pip uninstall tasks |

| | Uninstalling tasks-0.1.0: |

| | /path/to/venv/bin/tasks |

| | /path/to/venv/lib/python3.6/site-packages/tasks.egg-link |

| | Proceed (y/n)? y |

| | Successfully uninstalled tasks-0.1.0 |

| | $ cd /path/to/code/ch7/tasks_proj_v2 |

| | $ pip install -e . |

| | Obtaining file:///path/to/code/ch7/tasks_proj_v2 |

| | ... |

| | Installing collected packages: tasks |

| | Running setup.py develop for tasks |

| | Successfully installed tasks |

| | $ pip list |

| | ... |

| | tasks (0.1.1, /path/to/code/ch7/tasks_proj_v2/src) |

| | ... |

Now that the next version of Tasks is installed, we can run our baseline coverage report:

| | $ cd /path/to/code/ch7/tasks_proj_v2 |

| | $ pytest --cov=src |

| | ===================== test session starts ====================== |

| | plugins: mock-1.6.2, cov-2.5.1 |

| | collected 62 items |

| | |

| | tests/func/test_add.py ... |

| | tests/func/test_add_variety.py ............................ |

| | tests/func/test_add_variety2.py ............ |

| | tests/func/test_api_exceptions.py ......... |

| | tests/func/test_unique_id.py . |

| | tests/unit/test_cli.py ..... |

| | tests/unit/test_task.py .... |

| | |

| | ---------- coverage: platform darwin, python 3.6.2-final-0 ----------- |

| | Name Stmts Miss Cover |

| | -------------------------------------------------- |

| | src/tasks/__init__.py 2 0 100% |

| | src/tasks/api.py 79 22 72% |

| | src/tasks/cli.py 45 14 69% |

| | src/tasks/config.py 18 12 33% |

| | src/tasks/tasksdb_pymongo.py 74 74 0% |

| | src/tasks/tasksdb_tinydb.py 32 4 88% |

| | -------------------------------------------------- |

| | TOTAL 250 126 50% |

| | |

| | |

| | ================== 62 passed in 0.47 seconds =================== |

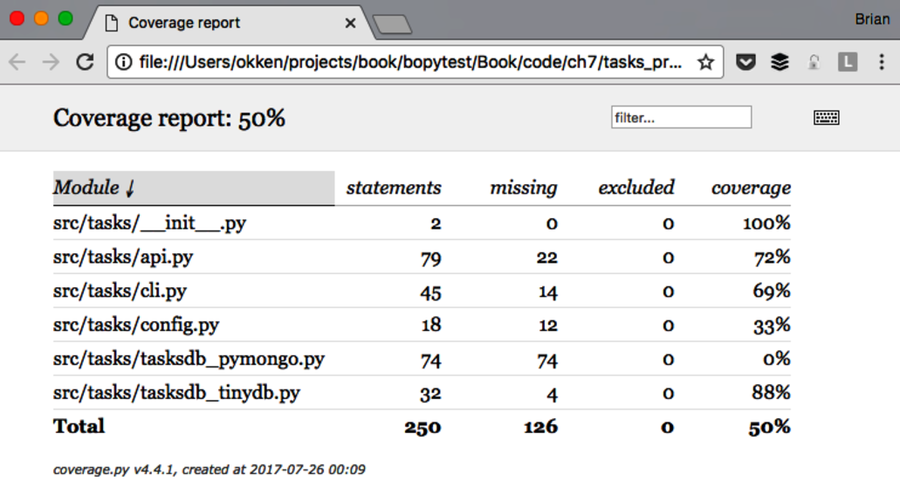

Since the current directory is tasks_proj_v2 and the source code under test is all within src, adding the option --cov=src limits the coverage report to that directory.

As you can see, some of the files have pretty low coverage, and one is at 0%. These are good reminders: tasksdb_pymongo.py is at 0% because we’ve turned off testing for MongoDB in this version, and several of the others are low as well. The project will definitely have to put tests in place for all of these areas before it’s ready for prime time.
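The Cover column in the report above follows directly from the Stmts and Miss columns. Here is a small sketch that recomputes it from the numbers in the listing. It uses plain rounding, which matches every row shown here; coverage.py's own display logic may treat edge cases differently.

```python
# (statements, missed) per file, copied from the report above.
report = {
    "src/tasks/__init__.py": (2, 0),
    "src/tasks/api.py": (79, 22),
    "src/tasks/cli.py": (45, 14),
    "src/tasks/config.py": (18, 12),
    "src/tasks/tasksdb_pymongo.py": (74, 74),
    "src/tasks/tasksdb_tinydb.py": (32, 4),
}


def percent_covered(stmts, miss):
    """Percentage of statements that were executed, as a whole number."""
    if stmts == 0:
        return 100  # nothing to cover
    return round(100 * (stmts - miss) / stmts)


for name, (stmts, miss) in report.items():
    print(f"{name:32} {stmts:5} {miss:5} {percent_covered(stmts, miss):4}%")

total_stmts = sum(stmts for stmts, _ in report.values())
total_miss = sum(miss for _, miss in report.values())
print(f"{'TOTAL':32} {total_stmts:5} {total_miss:5} "
      f"{percent_covered(total_stmts, total_miss):4}%")
```

Note that TOTAL is computed from the summed statement counts, not as an average of the per-file percentages.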

A couple of files I thought would have a higher coverage percentage are api.py and tasksdb_tinydb.py. Let’s look at tasksdb_tinydb.py and see what’s missing. I find the best way to do that is to use the HTML reports.

If you run pytest again and add --cov-report=html, an HTML report is generated:

| | $ pytest --cov=src --cov-report=html |

| | ===================== test session starts ====================== |

| | plugins: mock-1.6.2, cov-2.5.1 |

| | collected 62 items |

| | |

| | tests/func/test_add.py ... |

| | tests/func/test_add_variety.py ............................ |

| | tests/func/test_add_variety2.py ............ |

| | tests/func/test_api_exceptions.py ......... |

| | tests/func/test_unique_id.py . |

| | tests/unit/test_cli.py ..... |

| | tests/unit/test_task.py .... |

| | |

| | ---------- coverage: platform darwin, python 3.6.2-final-0 ----------- |

| | Coverage HTML written to dir htmlcov |

| | |

| | |

| | ================== 62 passed in 0.45 seconds =================== |
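If you would rather kick off these runs from a script (for a build step, say), one possible approach is to assemble the same command line and hand it to `subprocess`. This is only a sketch: `coverage_command` and `run_coverage` are hypothetical helper names, and it assumes pytest and pytest-cov are installed for the interpreter running the script.

```python
import subprocess
import sys


def coverage_command(source="src", html=False):
    """Build the pytest command line used in this section."""
    cmd = [sys.executable, "-m", "pytest", f"--cov={source}"]
    if html:
        # Ask pytest-cov for the HTML report as well.
        cmd.append("--cov-report=html")
    return cmd


def run_coverage(project_dir, html=False):
    """Run the tests with coverage from project_dir; return the exit code."""
    return subprocess.run(coverage_command(html=html), cwd=project_dir).returncode


# Example (not run here): run_coverage("/path/to/code/ch7/tasks_proj_v2", html=True)
print(" ".join(coverage_command(html=True)[1:]))
```

Running pytest as `python -m pytest` keeps the run tied to the same interpreter and virtual environment as the script.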

You can then open htmlcov/index.html in a browser, which shows the output in the following screen:

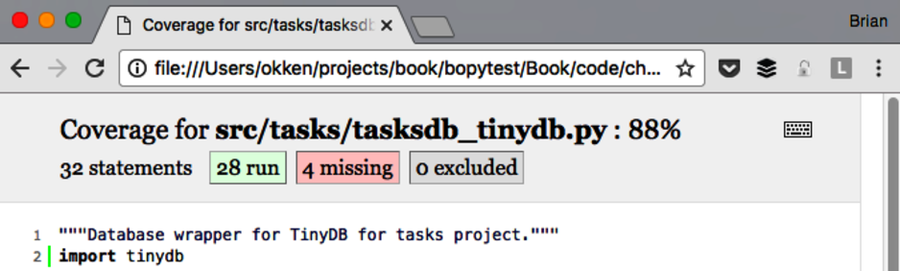
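If you prefer not to hunt for the file by hand, the standard `webbrowser` module can open the report for you. The sketch below assumes the default htmlcov output directory shown in the listing; `report_uri` and `open_report` are names made up for this example.

```python
import webbrowser
from pathlib import Path


def report_uri(html_dir="htmlcov"):
    """Return a file:// URI for the report's index page."""
    return (Path(html_dir) / "index.html").resolve().as_uri()


def open_report(html_dir="htmlcov"):
    """Open the HTML report in the default browser if it exists."""
    index = Path(html_dir) / "index.html"
    if not index.is_file():
        return False  # no report yet; run pytest with --cov-report=html first
    return webbrowser.open(report_uri(html_dir))


print(report_uri())
```

Call `open_report()` from the project directory after a run with --cov-report=html.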

Clicking on tasksdb_tinydb.py shows a report for the one file. The top of the report shows the percentage of lines covered, plus how many lines were covered and how many are missing, as shown in the following screen:

Scrolling down, you can see the missing lines, as shown in the next screen:

Even though this screen isn’t the complete page for this file, it’s enough to tell us that:

Great. We can put those on our testing to-do list, along with testing the config system.

While code coverage tools are extremely useful, striving for 100% coverage can be dangerous. When you see code that isn’t tested, it might mean a test is needed. But it also might mean that there’s some functionality of the system that isn’t needed and could be removed. Like all software development tools, code coverage analysis does not replace thinking.

Quite a few more options and features of both coverage.py and pytest-cov are available. More information can be found in the coverage.py[21] and pytest-cov[22] documentation.