The point is not that Motorola erred in its early development of Iridium or that TI had greater prescience in developing DSP. If you always knew ahead of time which new ideas would work for sure, you would invest in only those. But you don’t. That’s why great companies experiment with a lot of little things that might not pan out in the end. At the start of Iridium and DSP, both Motorola and TI wisely invested in small-scale experimentation and development, but TI, unlike Motorola, bet big only once it had the weight of accumulated empirical evidence on its side. Audacious goals stimulate progress, but big bets without empirical validation, or that fly in the face of mounting evidence, can bring companies down, unless they’re blessed with unusual luck. And luck is not a reliable strategy.

In 1985, a Motorola engineer vacationed in the Bahamas. His wife tried to keep in touch with her clients via cell phone (which had only recently been offered to consumers for the first time) but found herself stymied. This sparked an idea: why not create a grid of satellites that could ensure a crisp phone connection from any point on Earth? You may remember reading how New Zealand mountaineer Rob Hall died on Mount Everest in 1996 and how he bade farewell to his wife, thousands of miles away, as his life ebbed in the cold at 28,000 feet. His parting words, “Sleep well, my sweetheart. Please don’t worry too much,” riveted the world’s attention. Without a satellite phone link, Hall would not have been able to have that last conversation with his life partner. Motorola envisioned making this type of anywhere-on-Earth connection available to people everywhere with its bold venture called Iridium.74

Motorola’s second-generation chief executive Robert Galvin had assiduously avoided big discontinuous leaps, favoring instead a series of well-planned, empirically tested evolutionary steps in which new little things turned into new big things that replaced old big things, in a continuous cycle of renewal. Galvin saw Iridium as a small experiment that, if successful, could turn into a Very Big Thing. In the late 1980s, he allocated seed capital to prototype a low-orbiting satellite system. In 1991, Motorola spun out the Iridium project into a separate company, with Motorola as the largest shareholder, and continued to fund concept development. By 1996, Motorola had invested $537 million in the venture and had guaranteed $750 million in loan capacity on Iridium’s behalf, the combined amount exceeding Motorola’s entire profit for 1996.75

In their superb analysis “Learning from Corporate Mistakes: The Rise and Fall of Iridium,” Sydney Finkelstein and Shade H. Sanford demonstrate that the pivotal moment for Iridium came in 1996, not at its inception in the 1980s.76 In the technology-development stage prior to 1996, Iridium could have been suspended with relatively little loss. After that, it entered the launch phase. To go forward would require a greater investment than had been spent for all the development up to that point; after all, you can’t launch sixty-six satellites as a cheap experiment.

But by 1996, years after Galvin had retired (and years after he’d allocated seed capital), the case for Iridium had become much less compelling. Traditional cellular service now blanketed much of the globe, erasing much of Iridium’s unique value. If the Motorola engineer’s wife had tried to call her clients from vacation in 1996, odds are she would have found a good cell connection. Furthermore, the Iridium phones had significant disadvantages. A handset nearly the size of a brick that worked only outside (where you can get a direct ping to a satellite) proved less useful than a traditional cell phone. How many people would lug a brick halfway around the world, only to take the elevator to street level to make an expensive phone call, or ask a cab driver to stop in order to step onto a street corner to check in with the office? Iridium handsets cost $3,000, with calls running at $3 to $7 per minute, while cell phone charges continued to drop. Sure, people in remote places could benefit from Iridium, but remote places lacked the one thing Iridium needed: customers. There just aren’t that many people who need to call home from the South Pole or the top of Mount Everest.77

When the Motorola engineer came up with the idea for Iridium in 1985, few people envisioned cellular service’s nearly ubiquitous coverage. But by 1996, empirical evidence weighed against making the big launch. Meanwhile, Motorola had multiplied revenues fivefold, from $5 billion to $27 billion, fueled by its Stage 2–like commitment to double in size every five years (a goal put in place after Robert Galvin retired).78 Motorola hoped for a big hit with Iridium, and its 1997 annual report boasted, “With the development of the IRIDIUM® global personal communications system, Motorola has created a new industry.”79 And so, despite the mounting negative evidence, Iridium launched, and in 1998 went live for customers. The very next year Iridium filed for bankruptcy, defaulting on $1.5 billion in loans.80 Motorola’s 1999 proxy report recorded more than $2 billion in charges related to the Iridium program, which helped accelerate Motorola’s plummet toward Stage 4.81

MAKING BIG BETS IN THE FACE OF MOUNTING EVIDENCE TO THE CONTRARY

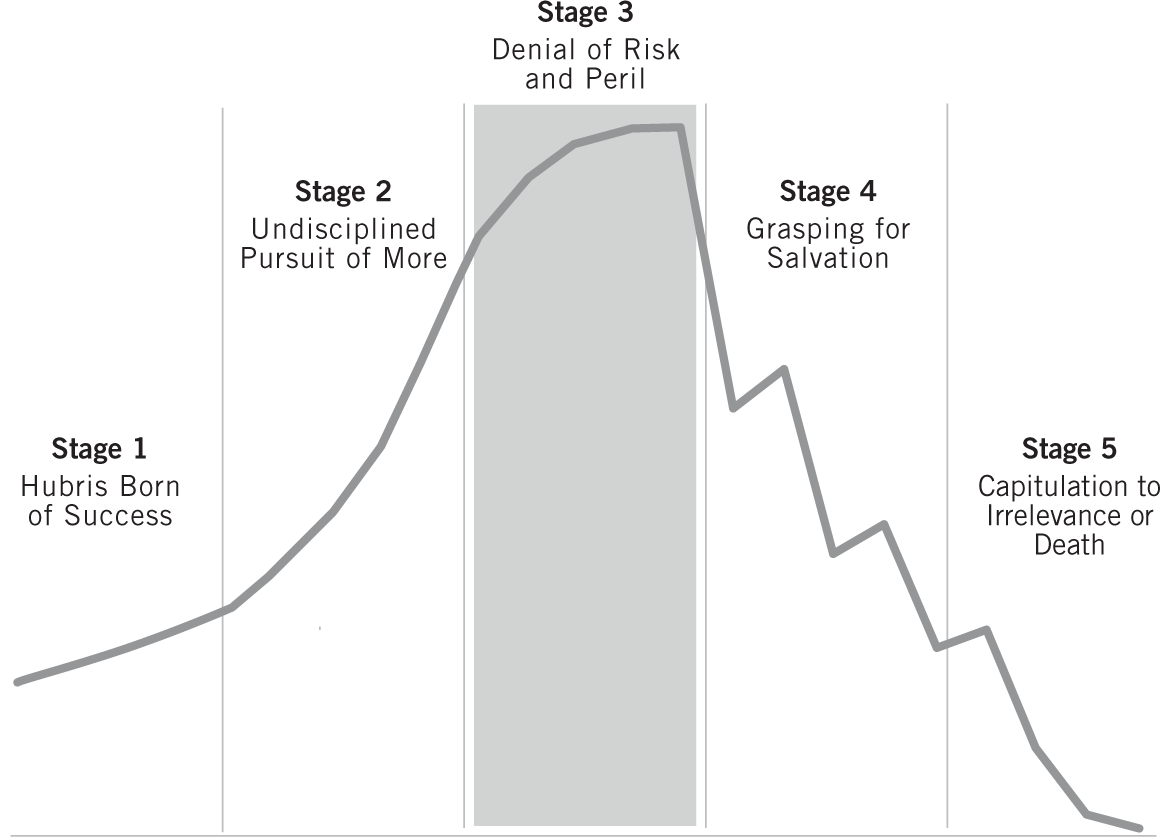

As companies move into Stage 3, we begin to see the cumulative effects of the previous stages. Stage 1 hubris leads to Stage 2 overreaching, which sets the company up for Stage 3, Denial of Risk and Peril. This describes what happened with Iridium. In contrast, let’s look at Texas Instruments (TI) and its gradual evolution to become the Intel of digital-signal processing, or DSP.

In the late 1970s, TI engineers came up with a great idea to help children learn to spell: an electronic toy that “spoke” words and then asked kids to type the word on a keypad. This was the genesis of Speak & Spell, the first consumer product to use DSP technology. (DSP chips enable analog chunks of data, such as voice, music, and video, to be crunched and reassembled like digital bits.) In 1979, TI made a tiny bet of $150,000 (less than one hundredth of one percent of 1979 revenues) to further investigate DSP, and by 1986, TI had garnered $6 million in revenues from DSP chips, hardly enough to justify a bet-the-company move but enough evidence to support its continued exploration of DSP. TI customers found new uses for DSP (e.g., modems, speech translation, and communications), and TI set up separate DSP business units.82 Then in 1993, TI scored a contract to create DSP chips for Nokia’s digital cell phones, and by 1997, it had DSP chips in more than twenty-two million phones.

And that’s when TI set the audacious goal to become the Intel of DSP. “When somebody says DSP,” said CEO Tom Engibous, “I want them to think of TI, just like they think of Intel when they say microprocessors.”83 In a bold stroke, he sold both TI’s defense and memory-chip businesses, having the guts to shrink the company to increase its focus on DSP. By 2004, TI had half of the rapidly growing $8 billion DSP market.84

Note that TI dared its big leap only after diligently turning the DSP flywheel for fifteen years. It didn’t bet big in 1978, when it had the Speak & Spell. It didn’t bet big in 1982, when it first put DSP on a single chip. It didn’t bet big in 1986, when it had only $6 million in DSP revenues. Engibous set a big, hairy goal, to be sure, but not one born of hubris or denial of risk. Drawing upon two decades of growing empirical evidence, he set the goal based on a firm foundation of proven success.

Now you might be thinking, “Okay, so just don’t ignore the evidence—just don’t launch an Iridium when the data is so clear—and we’ll avoid Stage 3.” But life doesn’t always present the facts with stark clarity; the situation can be confusing, noisy, unclear, open to interpretation. And in fact, the greatest danger comes not in ignoring clear and unassailable facts, but in misinterpreting ambiguous data in situations when you face severe or catastrophic consequences if the ambiguity resolves itself in a way that’s not in your favor. To illustrate, I’m going to digress to review the tale of a famous tragedy.

TAKING RISKS BELOW THE WATERLINE

On the afternoon of January 27, 1986, a NASA manager contacted engineers at Morton Thiokol, a subcontractor that provided rocket motors to NASA. The forecast for the Kennedy Space Center in Florida, where the space shuttle Challenger sat in preparation for a scheduled launch the next day, called for temperatures in the twenties during early morning hours of the 28th, with the launch-time temperature expected to remain below 30 degrees F. The NASA manager asked the Morton Thiokol engineers to consider the effect of cold weather on the solid-rocket motors, and the engineers quickly assembled to discuss a specific component called an O-ring. When rocket fuel ignites, the rubber-like O-rings seal joints—like putty in a crack—against searing hot gases that, if uncontained, could cause a catastrophic explosion.

The lowest launch temperature in all twenty-four previous shuttle launches had been 53 degrees, more than twenty degrees above the forecast for the next day’s scheduled launch, and the engineers had no conclusive data about what would happen to the O-rings at 25 or 30 degrees. They did have some data to suggest that colder temperatures harden O-rings, thereby increasing the time they’d take to seal. (Think of how much less malleable a rubber band becomes after a night in your freezer than it is at room temperature.) The engineers discussed their initial concerns and scheduled a teleconference with thirty-four people from NASA and Morton Thiokol for 8:15 p.m. Eastern.85

The teleconference began with nearly an hour of discussion, leading up to Morton Thiokol’s engineering conclusion that it could not recommend launch below 53 degrees. NASA engineers pointed out that the data were conflicting and inconclusive. Yes, the data clearly showed O-ring damage on launches below 60 degrees, but the data also showed O-ring damage on a 75-degree launch. “They did have a lot of conflicting data in there,” reflected a NASA engineer. “I can’t emphasize that enough.” Adding further confusion, Morton Thiokol hadn’t objected to previous flights that had projected launch temperatures below 53 degrees (none close to the twenties, to be sure, but lower than the now-stated 53-degree mark), which appeared inconsistent with its current recommendation. And even if the first O-ring were to fail, a redundant second O-ring was supposed to seal into place.

In her authoritative book The Challenger Launch Decision, sociologist Diane Vaughan demolishes the myth that NASA managers ignored unassailable data and launched a mission absolutely known to be unsafe. In fact, the conversations on the evening before launch reflected the confusion and shifting views of the participants. At one point, a NASA manager blurted, “My God, Thiokol, when do you want me to launch, next April?” But at another point on the same evening, NASA managers expressed reservations about the launch; a lead NASA engineer pleaded with his people not to let him make a mistake and stated, “I will not agree to launch against the contractor’s recommendation.” The deliberations lasted for nearly three hours. If the data had been clear, would they have needed a three-hour discussion? Data analyst extraordinaire Edward Tufte shows in his book Visual Explanations that if the engineers had plotted the data points in a compelling graphic, they might have seen a clear trend line: every launch below 66 degrees showed evidence of O-ring damage. But no one laid out the data in a clear and convincing visual manner, and the trend toward increased danger in colder temperatures remained obscured throughout the late-night teleconference debate. Summing up, the O-Ring Task Force chair noted, “We just didn’t have enough conclusive data to convince anyone.”

Convince anyone of what exactly? That’s the crux of the matter. Somehow, in all the dialogue, the decision frame had turned 180 degrees. Instead of framing the question, “Can you prove that it’s safe to launch?”—as had traditionally guided launch decisions—the frame inverted to “Can you prove that it’s unsafe to launch?” If they hadn’t made that all-important shift or if the data had been absolutely definitive, Challenger very likely would have remained on the launch pad until later in the day. After all, the downside of a disaster so totally dwarfed the downside of waiting a few hours that it would be difficult to argue for running such an unbalanced risk. If you’re a NASA manager concerned about your career, why would you push for a decision to launch if you saw a very high likelihood it would end in catastrophe? No rational person would do that. But the data were highly ambiguous and the decision criteria had changed. Unable to prove beyond a reasonable doubt that it was unsafe to launch, Morton Thiokol reversed its stance and voted to launch, faxing its confirmation to NASA shortly before midnight. At 11:38 the next morning, in 36-degree temperatures, an O-ring failed upon ignition, and 73 seconds later, Challenger exploded into a fireball. All seven crew members perished as remnants of Challenger fell nine miles into the ocean.

The Challenger story highlights a key lesson. When facing irreversible decisions that have significant, negative consequences if they go awry—what we might call “launch decisions”—the case for launch should require a preponderance of empirical evidence that it’s safe to do so. Had the burden of proof rested on the side of safety (“If we cannot prove beyond a reasonable doubt that it’s safe to launch, we delay”) rather than the other way around, Challenger might have been spared its tragedy.

Bill Gore, founder of W. L. Gore & Associates, articulated a helpful concept for decision making and risk taking, what he called the “waterline” principle. Think of being on a ship, and imagine that any decision gone bad will blow a hole in the side of the ship. If you blow a hole above the waterline (where the ship won’t take on water and possibly sink), you can patch the hole, learn from the experience, and sail on. But if you blow a hole below the waterline, you can find yourself facing gushers of water pouring in, pulling you toward the ocean floor.86 And if it’s a big enough hole, you might go down really fast, just like some of the financial-company catastrophes in 2008.

To be clear, great enterprises do make big bets, but they avoid big bets that could blow holes below the waterline. When making risky bets and decisions in the face of ambiguous or conflicting data, ask three questions:

1. What’s the upside, if events turn out well?

2. What’s the downside, if events go very badly?

3. Can you live with the downside? Truly?

Suppose you are on the side of a cliff with a potential storm bearing down, but you don’t know for sure how bad the storm will be or whether it will involve dangerous lightning. You have to decide: do we go up, or do we go down? Two climbers in Eldorado Canyon, Colorado, faced this scenario on a famous climb called the Naked Edge. A Colorado summer storm roiled in the distance, and they had to decide whether to continue with their planned outing for the day. Now think of the three questions. What’s the upside if the storm passes by uneventfully? They complete their planned ascent for the day. What’s the downside if the storm turns into a full-fledged fusillade of lightning while they’re sitting high on the exposed summit pitch? They can die. They chose to continue. They anchored into the top of the cliff, perched right out on the top of an exposed pinnacle, just as the storm rushed into the canyon. The ropes popped and buzzed with building electricity. Then—bang!—a lightning bolt hit the top climber, melting his metal gear and killing him instantly.87

Of course, probabilities play a role in this thinking. If the probability of events going terribly awry is, for all practical purposes, zero, or if it is small but stable, that leads to different decisions than if the probability is high, increasing, unstable, or highly ambiguous. (Otherwise, we would never get on a commercial airliner, never mind climb the Naked Edge or El Capitan.) The climbers on the Naked Edge saw an increasing probability of a bad storm in an asymmetric-risk scenario (minimal upside with catastrophic downside) yet went ahead anyway.

The 2008 financial crisis underscores how mismanaging these questions can destroy companies. As the housing market bubble grew, so did the probability of a real estate crash. What’s the upside of increasing leverage dramatically (in some cases 30 to 1, or more) and increasing exposure to mortgage-backed securities? More profit, if the weather remains clear and calm. What’s the downside if the entire housing market crashes and we enter one of the most perilous credit crises in history? Merrill Lynch sells out its independence to Bank of America. Fannie Mae gets taken over by the government. Bear Stearns flails and then disappears in a takeover. And Lehman Brothers fails outright, sending the financial markets into a liquidity crisis that sends the economy spiraling downward.

A CULTURE OF DENIAL

Of course, not every case of decline involves big launch decisions like Iridium, or lethal decisions like going for the summit on a dangerous rock climb. Companies can also gradually weaken, and as they move deeper into Stage 3, they begin to accumulate warning signs. They might see a decline in customer engagement, an erosion of inventory turns, a subtle decline in margins, a loss in pricing power, or any number of other indicators of growing mediocrity. What indicators should you most closely track? For businesses, our analysis suggests that any deterioration in gross margins, current ratio, or debt-to-equity ratio indicates an impending storm. Our financial analyses revealed that all eleven fallen companies showed a negative trend in at least one of these three variables as they moved toward Stage 4, yet we found little evidence of significant management concern and certainly not the productive paranoia they should have had about these trends. Customer loyalty and stakeholder engagement also deserve attention. And as we discussed in Stage 2, take heed of any decline in the proportion of right people in key seats.

As companies hurtle deeper into Stage 3, the inner workings of the leadership team can veer away from the behaviors we found on teams that built great companies. In the table “Leadership-Team Dynamics,” I’ve contrasted the leadership dynamics of companies on the way down with companies on the way up.

LEADERSHIP-TEAM DYNAMICS: ON THE WAY DOWN VERSUS ON THE WAY UP

| Teams on the Way Down | Teams on the Way Up |
| --- | --- |
| People shield those in power from grim facts, fearful of penalty and criticism for shining light on the harsh realities. | People bring forth unpleasant facts (“Come here, look, man, this is ugly”) to be discussed; leaders never criticize those who bring forth harsh realities. |
| People assert strong opinions without providing data, evidence, or a solid argument. | People bring data, evidence, logic, and solid arguments to the discussion. |
| The team leader has a very low questions-to-statements ratio, avoiding critical input and/or allowing sloppy reasoning and unsupported opinions. | The team leader employs a Socratic style, using a high questions-to-statements ratio, challenging people, and pushing for penetrating insight. |
| Team members acquiesce to a decision yet do not unify to make the decision successful, or worse, undermine the decision after the fact. | Team members unify behind a decision once made and work to make the decision succeed, even if they vigorously disagreed with the decision. |
| Team members seek as much credit as possible for themselves yet do not enjoy the confidence and admiration of their peers. | Each team member credits other people for success yet enjoys the confidence and admiration of his or her peers. |
| Team members argue to look smart or to improve their own interests rather than argue to find the best answers to support the overall cause. | Team members argue and debate, not to improve their personal position, but to find the best answers to support the overall cause. |
| The team conducts “autopsies with blame,” seeking culprits rather than wisdom. | The team conducts “autopsies without blame,” mining wisdom from painful experiences. |
| Team members often fail to deliver exceptional results, and blame other people or outside factors for setbacks, mistakes, and failures. | Each team member delivers exceptional results, yet in the event of a setback, each accepts full responsibility and learns from mistakes. |

One common behavior of late Stage 3, which often carries well into Stage 4, is for those in power to blame other people or external factors, or otherwise to explain away the data, rather than confront the frightening reality that the enterprise may be in serious trouble. As IBM began its historic fall in the late 1980s and early 1990s, it faced the onslaught of distributed computing that threatened its mainframe business. An executive who reported these disturbing trends to IBM senior leadership found himself chastised, a powerful IBM leader brushing his report aside with a dismissive sweep of the hand: “There must be something wrong with your data.” The young executive knew then that IBM would fall. “Doing a start-up seemed less risky than working in a climate of denial,” he later quipped about his decision to leave IBM to become an entrepreneur. IBM reorganized and reengineered, but it didn’t successfully address the perilous erosion of its position until it had fallen so far that it would be likened in 1992 to a dinosaur, soon to be extinct. In his historic turnaround of IBM (which we will discuss in subsequent pages), Louis V. Gerstner, Jr. confronted the harsh reality of IBM’s shortcomings head-on, challenging his team early in his tenure, “One hundred and twenty-five thousand IBMers are gone. … Who did it to them? Was it an act of God? These guys came in and beat us.”88

In this analysis, we found evidence of externalizing blame during the era of decline in seven of eleven cases. When Zenith hit a hard patch in the mid-1970s, its CEO pointed out the window to a range of factors: “Who could have predicted the Arabs could have gotten together on any subject? Who could have foreseen Watergate? The great inflation we had? . . . Then we were hit by a strike.”89 Zenith also began to blame “unfair” Japanese competition for eroding profits and declining market share. Even if the Japanese did compete unfairly (although the Justice Department did not act in response to Zenith’s pleas for help), Zenith’s response to the Japanese resembled that of the American auto industry in the same era: a failure to confront head-on the fact that the Japanese had learned how to lower costs and increase quality. Shortly thereafter, Zenith fell into Stage 4.

One final manifestation of denial deserves special attention: obsessive reorganization. By 1961, Scott Paper had built the most successful paper-based consumer products franchise in the world, with commanding positions in all manner of products, including napkins, towels, and tissue. Then P&G entered Scott’s territory for the first time, while other companies like Kimberly-Clark and Georgia-Pacific persistently encroached on Scott’s markets. P&G launched Bounty paper towels on the high end, while private label brands encircled Scott from below. From 1960 to 1971, Scott’s share of the paper-based consumer business fell from nearly half the market to a third.90 Then in 1971, P&G went national with its Charmin toilet tissue, a direct assault on one of Scott’s most important product lines.

And how did Scott respond?

By reorganizing.91

Scott restructured marketing and research, moving boxes around on the organizational chart, but failed to mount a vigorous response to Charmin for five years.92 Five years! Scott continued to restructure through the 1980s, at one point reorganizing three times in four years.93 With eroding market share in nearly every category, Scott Paper fell into Stage 4.94

Reorganizations and restructurings can create a false sense that you’re actually doing something productive. Companies are in the process of reorganizing themselves all the time; that’s the nature of institutional evolution. But when you begin to respond to data and warning signs with reorganization as a primary strategy, you may well be in denial. It’s a bit like responding to a severe heart condition or a cancer diagnosis by rearranging your living room.

There is no organizational utopia. All organizational structures have trade-offs, and every type of organization has inefficiencies. We have no evidence from our research that any one structure is ideal in all situations, and no form of reorganization can make risk and peril melt away.

MARKERS FOR STAGE 3

• AMPLIFY THE POSITIVE, DISCOUNT THE NEGATIVE: There is a tendency to discount or explain away negative data rather than presume that something is wrong with the company; leaders highlight and amplify external praise and publicity.

• BIG BETS AND BOLD GOALS WITHOUT EMPIRICAL VALIDATION: Leaders set audacious goals and/or make big bets that aren’t based on accumulated experience, or worse, that fly in the face of the facts.

• INCURRING HUGE DOWNSIDE RISK BASED ON AMBIGUOUS DATA: When faced with ambiguous data and decisions that have a potentially severe or catastrophic downside, leaders take a positive view of the data and run the risk of blowing a hole “below the waterline.”

• EROSION OF HEALTHY TEAM DYNAMICS: There is a marked decline in the quality and amount of dialogue and debate; there is a shift toward either consensus or dictatorial management rather than a process of argument and disagreement followed by unified commitment to execute decisions.

• EXTERNALIZING BLAME: Rather than accept full responsibility for setbacks and failures, leaders point to external factors or other people to affix blame.

• OBSESSIVE REORGANIZATIONS: Rather than confront the brutal realities, the enterprise chronically reorganizes; people are increasingly preoccupied with internal politics rather than external conditions.

• IMPERIOUS DETACHMENT: Those in power become more imperious and detached; symbols and perks of executive-class status amplify detachment; plush new office buildings may disconnect executives from daily life.