Backpropagation takes place once the feed-forward pass is complete. The name is short for "backward propagation of errors". In a neural network, this step computes the gradient of the error function (loss function) with respect to the weights. The word "backward" refers to the order of the gradient computation, which runs from the output of the network toward its input: the gradient for the final layer of weights is calculated first, and the gradient for the first layer of weights is calculated last.
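To make the backward ordering concrete, here is a small worked case under assumed notation that does not come from the text: a network with one hidden unit and one output unit, scalar weights $w_1$ and $w_2$, an activation function $\sigma$, and squared error on a single pair $(x, y)$.

$$
a = \sigma(w_1 x), \qquad \hat{y} = w_2 a, \qquad E = \tfrac{1}{2}(\hat{y} - y)^2
$$

$$
\frac{\partial E}{\partial w_2} = (\hat{y} - y)\, a, \qquad
\frac{\partial E}{\partial w_1} = (\hat{y} - y)\, w_2\, \sigma'(w_1 x)\, x
$$

The output error $(\hat{y} - y)$ appears in both gradients, so it can be computed once at the last layer and reused when moving back to the first layer.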

Backpropagation needs three elements:

- Dataset: A set of input-output pairs $(\vec{x}_i, \vec{y}_i)$, where $\vec{x}_i$ is the input and $\vec{y}_i$ is the output that we are expecting. A set of $N$ such pairs is denoted $X = \{(\vec{x}_1, \vec{y}_1), \dots, (\vec{x}_N, \vec{y}_N)\}$.
- Feed-forward network: Its parameters are collectively denoted $\theta$. The parameters of interest are $w_{ij}^k$, the weight between node $j$ in layer $l_k$ and node $i$ in layer $l_{k-1}$, and $b_i^k$, the bias for node $i$ in layer $l_{k-1}$. There are no connections between nodes in the same layer, and adjacent layers are fully connected.
- Loss function: $L(X, \theta)$, also written as the error function $E(X, \theta)$.
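The three elements can be sketched in plain Python as follows. This is only an illustration under several assumptions that are not in the text: the toy data values, the 2-2-1 layer sizes, the sigmoid activation, the mean-squared-error loss, and the names `feed_forward` and `loss` are all hypothetical choices.

```python
import math

# Dataset X: N input-output pairs (x_i, y_i). The values are made-up toy data.
X = [
    ([0.0, 0.0], [0.0]),
    ([0.0, 1.0], [1.0]),
    ([1.0, 0.0], [1.0]),
    ([1.0, 1.0], [0.0]),
]

# Parameters theta: one dict of weights and biases per layer.
# w[j][i] is the weight between node j of this layer and node i of the previous layer.
theta = [
    {"w": [[0.5, -0.4], [0.3, 0.8]], "b": [0.1, -0.1]},  # hidden layer, 2 nodes
    {"w": [[0.7, -0.6]], "b": [0.05]},                    # output layer, 1 node
]


def sigmoid(z):
    """Assumed activation function; the text does not name one."""
    return 1.0 / (1.0 + math.exp(-z))


def feed_forward(x, theta):
    """Propagate x layer by layer through a fully connected network."""
    activation = x
    for layer in theta:
        activation = [
            sigmoid(sum(w * a for w, a in zip(row, activation)) + b)
            for row, b in zip(layer["w"], layer["b"])
        ]
    return activation


def loss(X, theta):
    """Mean squared error over the N pairs, one possible choice of loss."""
    total = 0.0
    for x, y in X:
        y_hat = feed_forward(x, theta)
        total += sum((p - t) ** 2 for p, t in zip(y_hat, y))
    return total / (2 * len(X))


print(loss(X, theta))
```

Keeping the parameters in one structure mirrors the idea that $\theta$ stands for all weights and biases at once.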

Training a neural network with gradient descent requires the calculation of the gradient of the loss/error function E(X,θ) with respect to the weights  and biases

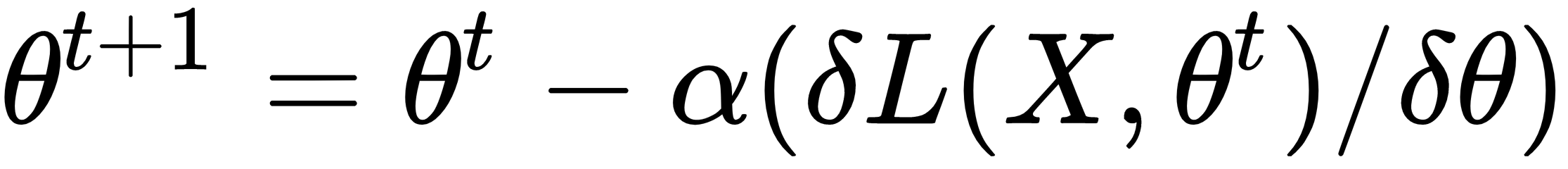

and biases  . Then, according to the learning rate α, each iteration of gradient descent updates the weights and biases collectively, denoted according to the following:

. Then, according to the learning rate α, each iteration of gradient descent updates the weights and biases collectively, denoted according to the following:

Here  denotes the parameters of the neural network at iteration in gradient descent.

denotes the parameters of the neural network at iteration in gradient descent.
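The update rule (new parameters equal the old parameters minus the learning rate times the gradient) can be sketched as below. To keep the example short, it uses a hypothetical toy loss whose gradient is known in closed form. In a real network, backpropagation would supply the gradient instead. The names `gradient_descent_step`, `toy_loss`, and `toy_grad`, the starting values, and the learning rate 0.1 are all assumptions for illustration.

```python
def gradient_descent_step(theta, grad, alpha):
    """One iteration: theta_next = theta - alpha * gradient, element by element."""
    return [p - alpha * g for p, g in zip(theta, grad)]


def toy_loss(theta):
    """Hypothetical stand-in for E(X, theta): squared distance from 3.0."""
    return sum((p - 3.0) ** 2 for p in theta)


def toy_grad(theta):
    """Analytic gradient of toy_loss with respect to each parameter."""
    return [2.0 * (p - 3.0) for p in theta]


theta = [0.0, 10.0]   # parameters at iteration t = 0 (arbitrary start)
alpha = 0.1           # learning rate (assumed value)
history = [toy_loss(theta)]

for t in range(50):
    theta = gradient_descent_step(theta, toy_grad(theta), alpha)
    history.append(toy_loss(theta))

print(theta, history[-1])
```

With this learning rate each step shrinks the distance to the minimum by a constant factor, so the recorded loss never increases. A learning rate that is too large would make the same loop diverge.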