Let's see how these equations are set up layer by layer, given that the training example set X is (x1, x2, x3) for the preceding network.

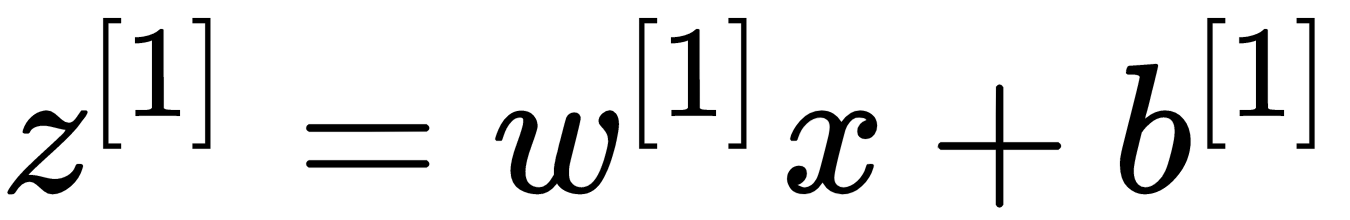

Let's see how the input equation works out for layer 1:

The activation function for layer 1 is as follows:

The input can also be expressed as follows:

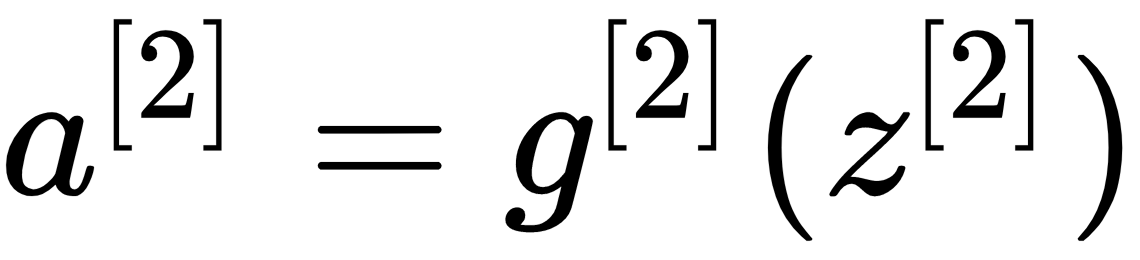

For layer 2, the input will be as follows:

The activation function that's applied here is as follows:

Similarly, for layer 3, the input is as follows:

The activation function for the third layer is as follows:

Finally, here's the input for the last layer:

This is its activation:

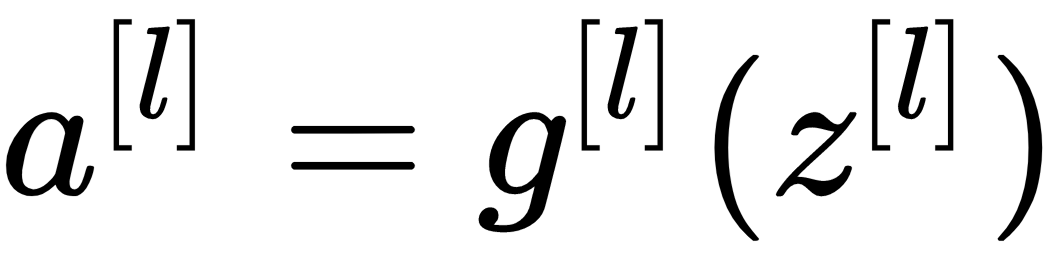
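The layer-wise steps above can be summarized in one place. The notation here is an assumption, chosen because it is widely used: $W^{[l]}$ and $b^{[l]}$ stand for the weight matrix and bias vector of layer $l$, $g^{[l]}$ for its activation function, $Z^{[l]}$ for the layer's input (pre-activation), and $A^{[l]}$ for its output. The last layer is taken to be layer 4.

$$
\begin{aligned}
Z^{[1]} &= W^{[1]} X + b^{[1]}, & A^{[1]} &= g^{[1]}\left(Z^{[1]}\right) \\
Z^{[2]} &= W^{[2]} A^{[1]} + b^{[2]}, & A^{[2]} &= g^{[2]}\left(Z^{[2]}\right) \\
Z^{[3]} &= W^{[3]} A^{[2]} + b^{[3]}, & A^{[3]} &= g^{[3]}\left(Z^{[3]}\right) \\
Z^{[4]} &= W^{[4]} A^{[3]} + b^{[4]}, & A^{[4]} &= g^{[4]}\left(Z^{[4]}\right)
\end{aligned}
$$

Each layer takes the previous layer's activation as its input, which is what makes a single general rule possible.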

Hence, the generalized forward propagation equation turns out to be as follows:
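In a commonly used notation (assumed here, with $A^{[0]} = X$ and $l$ running over the layers), the general step can be written as:

$$
Z^{[l]} = W^{[l]} A^{[l-1]} + b^{[l]}, \qquad A^{[l]} = g^{[l]}\left(Z^{[l]}\right)
$$

A minimal NumPy sketch of this recurrence follows. The layer sizes, the sigmoid activation in every layer, and the names `forward_propagation`, `weights`, and `biases` are illustrative assumptions rather than details of the network shown above. The input is assumed to have three features (x1, x2, x3), with one column per training example.

```python
import numpy as np


def sigmoid(z):
    """Element-wise logistic function, used here as an example activation g."""
    return 1.0 / (1.0 + np.exp(-z))


def forward_propagation(X, weights, biases, activation=sigmoid):
    """Apply Z[l] = W[l] @ A[l-1] + b[l], A[l] = g(Z[l]) for each layer.

    X has shape (n_features, m); weights[l] has shape (n_l, n_{l-1});
    biases[l] has shape (n_l, 1) and is broadcast across the m examples.
    Returns the final activation and the list [A0, A1, ..., AL].
    """
    A = X
    activations = [A]  # A[0] is the input itself
    for W, b in zip(weights, biases):
        Z = W @ A + b          # layer input (pre-activation)
        A = activation(Z)      # layer output
        activations.append(A)
    return A, activations


# Hypothetical architecture: 3 inputs, then four layers of sizes 4, 4, 2, 1.
rng = np.random.default_rng(0)
layer_sizes = [3, 4, 4, 2, 1]
weights = [
    rng.standard_normal((n_out, n_in)) * 0.1
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
]
biases = [np.zeros((n_out, 1)) for n_out in layer_sizes[1:]]

X = rng.standard_normal((3, 5))  # 3 features, 5 training examples
output, activations = forward_propagation(X, weights, biases)
print(output.shape)  # (1, 5)
```

Because the loop body is the same for every layer, adding or removing layers only changes the `weights` and `biases` lists, not the propagation code.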