A decision tree is a supervised learning technique that works on the divide-and-conquer approach. It can be used to address both classification and regression. The population undergoes a split into two or more homogeneous samples based on the most significant feature.

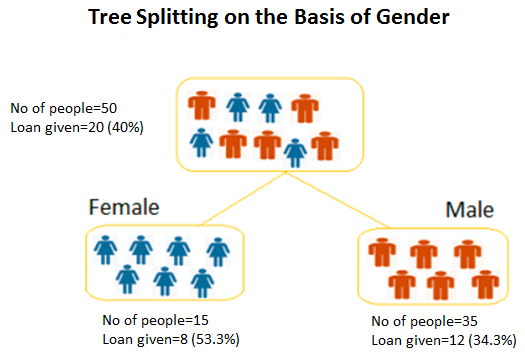

For example, let's say we have got a sample of people who applied for a loan from the bank. For this example, we will take the count as 50. Here, we have got three attributes, that is, gender, income, and the number of other loans held by the person, to predict whether to give them a loan or not.

We need to segment the people based on gender, income, and the number of other loans they hold and find out the most significant factor. This tends to create the most homogeneous set.

Let's take income first and try to create the segment based on it. The total number of people who applied for the loan is 50. Out of 50, the loan was awarded to 20 people. However, if we break this up by income, we can see that the breakup has been done by income <100,000 and >=100,000. This doesn't generate a homogeneous group. We can see that 40% of applicants (20) have been given a loan. Of the people whose income was less than 100,000, 30% of them managed to get the loan. Similarly, 46.67 % of people whose income was greater than or equal to 100,000 managed to get the loan. The following diagram shows the tree splitting on the basis of income:

Let's take up the number of loans now. Even this time around, we are not able to see the creation of a homogeneous group. The following diagram shows the tree splitting on the basis of the number of loans:

Let's get on with gender and see how it fares in terms of creating a homogeneous group. This turns out to be the homogeneous group. There were 15 who were female, out of which 53.3% got the loan. 34.3% of male also ended up getting the loan. The following diagram shows the tree splitting based on gender:

With the help of this, the most significant variable has been found. Now, we will dwell on how significant the variables are.

Before we do that, it's imperative for us to understand the terminology and nomenclature associated with the decision tree:

- Root Node: This stands for the whole population or dataset that undergoes a split into two or more homogeneous groups

- Decision Node: This is created when a node is divided into further subnodes

- Leaf Node: When there is no possibility of nodes splitting any further, that node is termed a leaf node or terminal node

- Branch: A subsection of the entire tree is called a branch or a Sub-tree: