Let me show you how the forward pass and the backward pass work with the help of an example.

We have a network with two layers (one hidden layer and one output layer). Every layer, including the input layer, has two nodes. The network also has bias nodes, as shown in the following diagram:

The notation used in the preceding diagram is as follows:

- IL: Input layer

- HL: Hidden layer

- OL: Output layer

- w: Weight

- B: Bias

We have values for all of the required fields. Let's feed the inputs into the network and see how they flow through it. The activation function used here is the sigmoid.

The input that's given to the first node of the hidden layer is as follows:

InputHL1 = w1*IL1 + w3*IL2 + B1

InputHL1 = (0.2*0.8) + (0.4*0.15) + 0.4 = 0.62

The input that's given to the second node of the hidden layer is as follows:

InputHL2 = w2*IL1 + w4*IL2 + B1

InputHL2 = (0.25*0.8) + (0.1*0.15) + 0.4 = 0.615
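The two weighted sums above can be checked with a few lines of Python. This is a minimal sketch that uses only the numbers from the worked example; the variable names are illustrative, and the pairing of each weight with its value is read off the substituted equations.

```python
# Values taken from the substituted equations in the worked example.
il1, il2 = 0.8, 0.15                    # inputs IL1 and IL2
w1, w2, w3, w4 = 0.2, 0.25, 0.4, 0.1    # input-to-hidden weights
b1 = 0.4                                # bias B1, used by both hidden nodes

# Weighted input to each hidden node: sum of (weight * input) plus the bias.
input_hl1 = w1 * il1 + w3 * il2 + b1
input_hl2 = w2 * il1 + w4 * il2 + b1

print(round(input_hl1, 6))  # 0.62
print(round(input_hl2, 6))  # 0.615
```

Rounding before printing hides the tiny floating-point error that the multiplications introduce.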

To find out the output, we will use our activation function, like so:

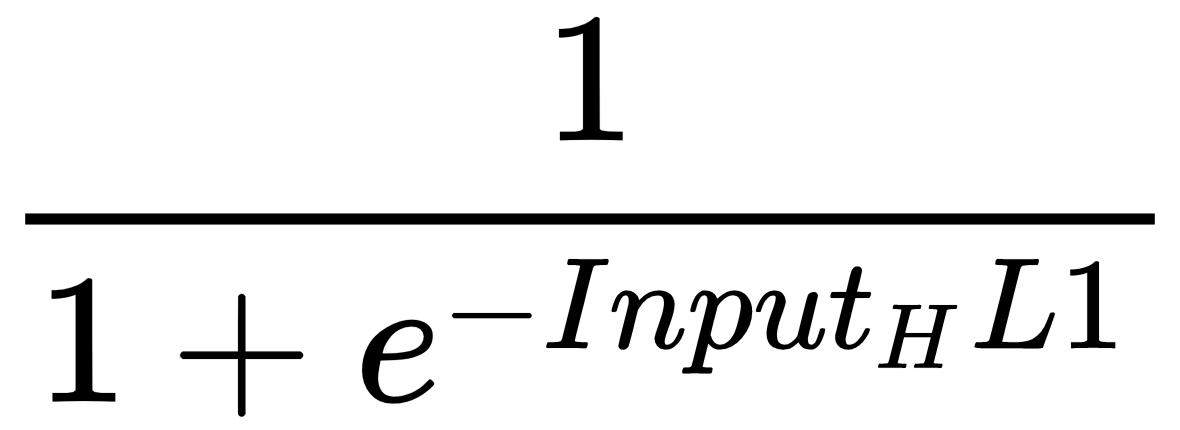

OutputHL1 = 1 / (1 + e^(-InputHL1)) = 1 / (1 + e^(-0.62)) = 0.650219

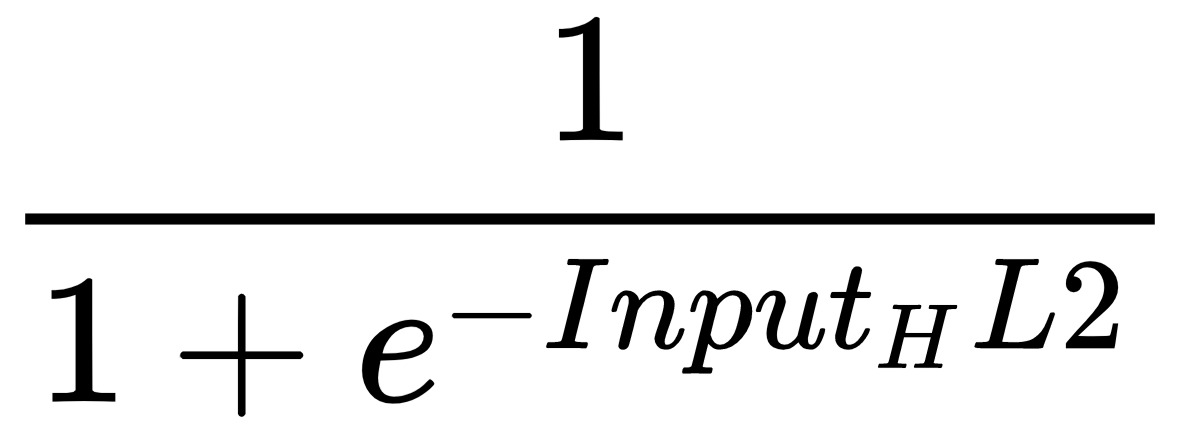

OutputHL2 = 1 / (1 + e^(-InputHL2)) = 1 / (1 + e^(-0.615)) = 0.649081
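The hidden-layer outputs can be reproduced with a short sketch of the sigmoid. The function name `sigmoid` is just a label chosen here, and the two inputs are the weighted sums computed earlier in the example.

```python
import math

def sigmoid(x):
    """Logistic sigmoid: 1 / (1 + e^(-x)); maps any real x into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

# Weighted inputs to the two hidden nodes, from the worked example.
output_hl1 = sigmoid(0.62)
output_hl2 = sigmoid(0.615)

print(f"{output_hl1:.6f}")  # 0.650219
print(f"{output_hl2:.6f}")  # 0.649081
```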

Now, these outputs will be passed on to the output layer as input. Let's calculate the value of the input for the nodes in the output layer:

InputOL1 = w5*OutputHL1 + w7*OutputHL2 + B2 = 0.804641

InputOL2 = w6*OutputHL1 + w8*OutputHL2 + B2 = 0.869606

Now, let's compute the output:

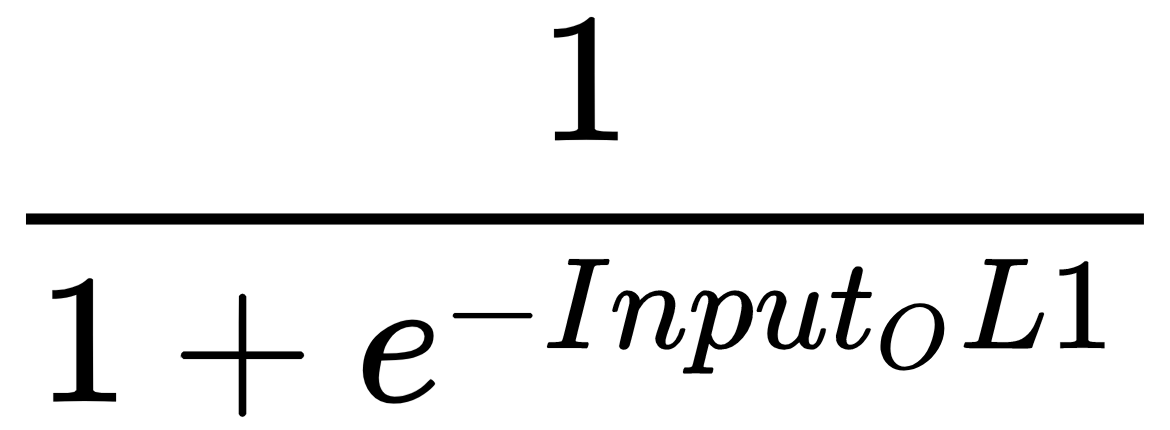

OutputOL1 = 1 / (1 + e^(-InputOL1)) = 1 / (1 + e^(-0.804641)) = 0.690966

OutputOL2 = 1 / (1 + e^(-InputOL2)) = 1 / (1 + e^(-0.869606)) = 0.704664
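The final step of the forward pass can be sketched the same way. The values of w5 to w8 and B2 appear only in the diagram, so this sketch starts from the output-layer inputs stated in the text instead of recomputing them; the names `sigmoid`, `output_layer_inputs`, and `output_layer_outputs` are illustrative.

```python
import math

def sigmoid(x):
    """Logistic sigmoid: 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))

# Weighted inputs to the output nodes, as stated in the text
# (the output-layer weights and bias themselves are not repeated here).
output_layer_inputs = [0.804641, 0.869606]

# Apply the activation to each node's weighted input.
output_layer_outputs = [sigmoid(x) for x in output_layer_inputs]

for value in output_layer_outputs:
    print(f"{value:.6f}")
# 0.690966
# 0.704664
```

These two numbers are the network's predictions for this input, and they are the values a backward pass would compare against the targets.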