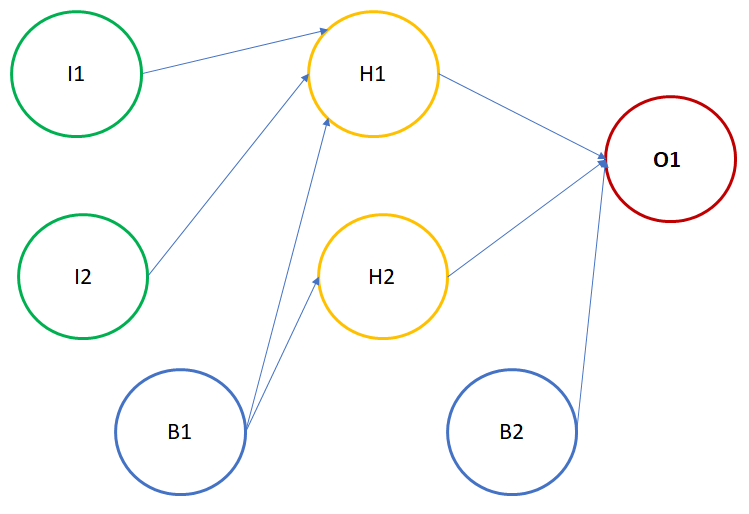

Now, let's look at a simple and shallow network:

Where:

- I1: Input neuron 1

- I2: Input neuron 2

- B1: Bias 1

- H1: Neuron 1 in hidden layer

- H2: Neuron 2 in hidden layer

- B2: Bias 2

- O1: Neuron at output layer

The final value is produced at the output neuron O1, which receives input from H1, H2, and B2. Since B2 is a bias neuron, its activation is always 1, but the activations of H1 and H2 must be calculated first. Computing them requires the activations of I1, I2, and B1. It may look as though H1 and H2 will have the same activation, since they receive the same inputs. That is not the case, because the weights on the connections into H1 differ from those into H2. Each connector between two neurons represents a weight.
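The calculation order described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the text does not give weight values or name an activation function, so the weights below are made up and the sigmoid is an assumed choice. The names `sigmoid`, `forward`, `hidden_weights`, and `output_weights` are hypothetical helpers, not part of any library.

```python
import math

def sigmoid(z):
    """Logistic activation (an assumed choice; the text does not name one)."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(i1, i2, hidden_weights, output_weights):
    """Return (h1, h2, o1) for the network with bias neurons B1 and B2."""
    b1 = b2 = 1.0  # bias neurons always have activation 1

    # H1 and H2 see the same inputs (I1, I2, B1), each through its own weights.
    w_i1, w_i2, w_b1 = hidden_weights["H1"]
    h1 = sigmoid(w_i1 * i1 + w_i2 * i2 + w_b1 * b1)
    w_i1, w_i2, w_b1 = hidden_weights["H2"]
    h2 = sigmoid(w_i1 * i1 + w_i2 * i2 + w_b1 * b1)

    # O1 combines H1, H2, and B2.
    w_h1, w_h2, w_b2 = output_weights
    o1 = sigmoid(w_h1 * h1 + w_h2 * h2 + w_b2 * b2)
    return h1, h2, o1

# Hypothetical weights, ordered (I1, I2, B1) for each hidden neuron.
hidden_weights = {"H1": (0.5, -0.3, 0.1), "H2": (-0.2, 0.8, 0.4)}
output_weights = (0.7, -0.6, 0.2)  # ordered (H1, H2, B2)

h1, h2, o1 = forward(1.0, 0.0, hidden_weights, output_weights)
print(f"H1={h1:.4f}  H2={h2:.4f}  O1={o1:.4f}")
```

With these illustrative weights, H1 and H2 produce different activations even though both receive I1, I2, and B1. Giving both hidden neurons identical weights would make their activations equal.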