CHAPTER 7

Ecosystem at Large: Hadoop with Apache Bigtop

In the modern world software is becoming more and more sophisticated all of the time. The main complexity, however, lies not in the algorithms or the tricky UI experience. It is hidden from the end user and resides in the back—in the relations and communications between different parts of a software solution commonly referred to as a software stack. Why are stacks so important, and what is so special about the Apache Hadoop data processing stack?

In this chapter you will be presented with materials to help you get a better grip of data processing stacks powered by the software that forms the foundation of all modern Apache Hadoop distributions. The chapter, by no means, is a complete text book on Apache Bigtop. Instead, we will put together a quick guide on the key features of the project, and explain how it is designed. There will be a collection of available resources that help you to grow your expertise with the ecosystem.

Bigtop is an Apache Foundation project aimed to help infrastructure engineers, data scientists, and application developers to develop and advance comprehensive packaging. This requires you to test and manage the configurations of the leading open source big data components. Right now, Bigtop supports a wide range of projects, including, but not limited to, Hadoop, HBase, Ignite, and Spark. Bigtop packages RPM and DEB formats, so that you can manage and maintain your data processing cluster. Bigtop includes mechanisms, images, and recipes for deploying Hadoop stack from zero to many supported operating systems, including Debian, Ubuntu, CentOS, Fedora, openSUSE and many others. Bigtop delivers tools and a framework for testing at various levels (packaging, platform, runtime, etc.) for both initial deployments as well as upgrade scenarios for the entire data platform, not just the individual components.

It's now time to dive into the details of this exciting project that truly spans every single corner of the modern data processing landscape.

BASICS CONCEPTS

Developers working on various applications should have a focal point where products from different teams get integrated into a final ecosystem of the software stack. In order to lower the mismatch between the storage layer and the log analysis subsystem, the APIs of the former have to be documented, supported, and stable. Developers of a log processing component can fix the version of the file system API by using Maven, Gradle, or another build and dependency management software. This approach will provide strong guarantees about compatibility at the API and binary levels.

The situation changes dramatically when both pieces of the software are deployed into a real datacenter with a configuration that is very different from one used on the developer's laptop. A lot of things will be different in the datacenter environment: kernel updates, operating system packages, disk partitioning, and more. Another variable sometimes unaccounted for is the build environment and process, tasked with producing binary artifacts for production deployment. Build servers can have stale or unclean caches. These will pollute a product's binaries with incorrect or outdated versions of the libraries, leading to different behavior in different deployment scenarios.

In many cases, software developers aren't aware about operational realities and deployment conditions where their software is being used. This leads to production releases that aren't directly consumable by the IT. My “favorite” example of this is when a large company's datacenters' operation team has a 23-step cheat-sheet on how to prepare an official release of a software application to make it deployable into a production environment.

IT professionals tasked with provisioning, deployment, and day-to-day operations of the production multi-tenant systems know how tedious and difficult it is to maintain, update, and upgrade parts of a software system including the application layer, RDBMS, web servers, and file storage. They no doubt know how non-trivial the task of changing the configuration on hundreds of computers is in one or more datacenters.

What is the “software stack” anyway? How can developers produce deployment-ready software? How can operation teams simplify configuration management and component maintenance complexities? Follow along as we explore the answers to all of these questions.

Software Stacks

A typical software stack contains a few components (usually more than two) combined together to create a complete platform so that no additional software is needed to support any applications. Applications are said to “run on” or “run on top of” the resulting platform. The most common stack examples includes Debian, LAMP, and OpenStack. In the world of data processing you should consider Hadoop-based stacks like Apache Bigtop™, as well as commercial distributions of Apache Hadoop™ based on the Bigtop: Amazon EMR. Hortonworks Data Platform is another such example.

Probably the most widely known data processing stack is Apache Hadoop™, composed of HDFS (storage layer) and MR (computation framework on top of distributed storage). This simple composition, however, is insufficient for modern day requirements. Nonetheless, Hadoop is often used as the foundation of advanced computational systems. As a result, a Hadoop-proper stack would be extended by other components such as Apache Hbase™, Apache Hive™, Apache Ambari™ (installation and management application), and so on. In the end, you might end up with something like what is shown in Table 7.1.

Table 7.1 Extended components make up a Hadoop proper stack

| hadoop | 2.7.1 |

| hbase | 0.98.12 |

| hive | 1.2.1 |

| ignite-hadoop | 1.5.0 |

| giraph | 1.1.0 |

| kafka | 0.8.1.1 |

| zeppelin | 0.5.5 |

The million dollar question is: How do you build, validate, deploy, and manage such a “simple” software stack? First let's talk about validation.

Test Stacks

Like the software stacks described above, you should be able to create a set of components with the sole aim of making sure that the software isn't dead on arrival and that it certainly delivers what was promised. So, by extension: A test stack contains a number of applications combined together to verify the viability of a software stack by running certain workloads, exposing components' integration points, and validating their compatibility with each other.

In the example above the test stack will include, but not be limited by, the integration testing applications, which ensures that Hbase v 0.98.16 works properly on top of Hadoop 2.7.1. It must also work with Hive 1.2.1, it must be able to use the underlying Hadoop Mapreduce, work with YARN (Hadoop resource navigator), and use Hbase 0.98.16 as the storage for external tables. Keep in mind that Zeppelin validation applications guarantee that data scientists' notebooks are properly built and configured to work with Hbase, Hive, and Ignite.

Works on My Laptop

If you aren't yet thinking “How in the world can all of these stacks be produced at all?” here's something to make you go “Hmm.” A typical modern data processing stack includes anywhere between 10 and 30+ different software components. Most of them, being an independent open source project, have their own release trains, schedules, and road maps.

Don't forget that you need to add a requirement to run this stack on a CentOS7 and Ubuntu 14.04 clusters using OpenJDK8, in both development and production configurations. Oh, and what about that yesteryear stack, which right now is still used by the analytical department? It now needs to be upgraded in Q3! Given all of this, the “works on my laptop” approach is no longer viable.

DEVELOPING A CUSTOM-TAILORED STACK

How can a software developer, a data scientist, or a commercial vendor go about the development, validation, and management of a complex system that might include versions of the components not yet available on the market? Let's explore what it takes to develop a software stack that satisfies your software and operation requirements.

Apache Bigtop: The History

Apache Bigtop (http://bigtop.apache.org) has a history of multiple incarnations and revisions. Back in 2004-2005, the creation and delivery of Sun Microsystems Java Enterprise Stack (JES) was done with a mix of Tinderbox CI along with a build manifest describing the stack's composition: common libraries, software components such as directory server, JDK, application server versions, and so on.

Another attempt to develop the stack framework concept further was taken at Sun Microsystems, and was aimed at managing the software stacks for enterprise storage servers. The modern storage isn't simply Just a Bunch of Disks, but rather an intricate combination of the hardware, operating system, and application software on top of it. The framework was tracking a lot more things, including system drivers, different versions of the operating system, JES, and more. It was, however, very much domain-specific, had a rigid DB schema, and a very implementation aware build system.

The penultimate incarnation, and effectively the ancestor of what we know today as Apache Bigtop, was developed to manage and support the production of the Hadoop 0.20.2xx software stack at the Yahoo! Hadoop team. With the advent of security, the combinatorial complexity blew up and it became impossible to manage it with the existing time and resource constraints. It was very much specific for Yahoo's internal packaging formats and operational infrastructure, but in hindsight you could see a lot of similarities with today's open source implementation, discussed later in this chapter.

The first version of modern Apache Bigtop was initially developed by the same engineers who implemented Yahoo!'s framework. This time it introduced the correct conceptualization of software and test stacks. It was properly abstracted from component builds (they are different, because the development teams are coming from different backgrounds), it has provided the integration testing framework capable of working in a distributed environment, and it has many other improvements. An early version of the deployment recipes and packaging was contributed by developers from Cloudera. By Spring 2011 it was submitted to the Apache Software Foundation for incubation and later became a top-level project.

Today, Apache Bigtop is employed by all commercial vendors of Apache Hadoop as the base framework for their distributions. This has a number of the benefits for end users, since all of the package layouts, configuration locations, and life-cycle management routings are done in the same way across different distros. The exception to the latter rule is introduced by Apache Ambari and some closed-source cluster managers, which use their own life-cycle control circuits, circumventing the standard Linux init.d protocol.

Apache Bigtop: The Concept and Philosophy

Conceptually, Bigtop is a combination of four subsystems serving different purposes:

- A stack composition manifest or Bill of Materials (BOM).

- The Gradle build system managing artifacts creation (packages), the development environment configuration, the execution of the integration tests, and some additional functions.

- Integration and smoke tests along with the testing framework called iTest.

- A deployment layer providing for seamless cluster deployment using binary artifacts and the configuration management of the provisioned cluster. Deployment is implemented via Puppet recipes. It helps to quickly deploy a fully functional distributed cluster, secured or non-secured, with a properly configured components' subset. The composition of the final stack is controlled by user input.

Apache Bigtop provides the means and toolkit for stack application developers, data scientists, and commercial vendors to have a predictable and fully controllable process to iteratively design, implement, and deliver a data processing stack. Philosophically, it represents the idea of empirical bake-in, versus a rational approach in the software development. Why are all of these complications needed, and why not to use unit and functional tests, you might ask?

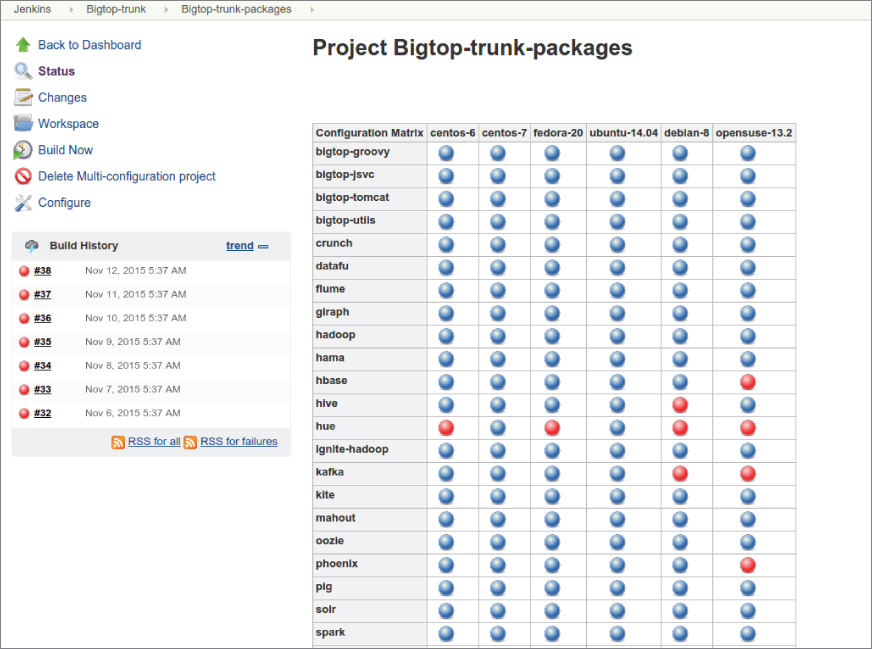

The complications are dictated by the nature of the environments where the stacks are designed to work. Many things can have an effect on how well the distributed system works. Permutations of kernel patch-levels and configurations could affect the stability. Versions of the runtime environment, network policies, and bandwidth allocation could directly cut into your software performance. Failures of the communication lines or services, and nuances of your own software configuration, could result in data corruption or total loss. In some situations a developer needs to do an A-B test on the stack where only a single property is getting changed, such as by applying a specific patch-set to Hbase and checking if the stack is still viable. In general, it is impossible to rationalize all of those variances: You only can guarantee that a particular composition of a software stack works in an X environment with Y configuration if you have a way to empirically validate and prove such a claim. At times an already released project has a backward incompatible change that was missed during the development, testing, and release process. This can be discovered by a full stack integration validation with Apache Bigtop (see Figure 7.1). Such findings always lead to consequent updates, thus fixing the issues for the end users.

Continuous integration tools like Jenkins and TeamCity have become a part of the day-to-day software engineering process. Naturally, using a continuous integration setup with Apache Bigtop shortens the development cycle, and improves the quality of the software by providing another tremendous benefit of the quick discovery of bugs. You can quickly glance at the current issues using information radiators like this: https://cwiki.apache.org/confluence/display/BIGTOP/Index.

Now let's proceed to a hands-on exercise of creating your own Apache data processing stack. All examples in this chapter are based on the latest (at the time of writing) Apache Bigtop 1.1 release candidate.

The Structure of the Project

At the top-level, Bigtop has a few important moving parts. Let's review some of them:

build.gradle: Represents the core of the build systempackages.gradle: Represents the core of the build systembigtop.bom: The default stack composition manifestbigtop_toolchain/:Sets up the development environmentsbigtop-test-framework/: A home of iTest, the integration testing frameworkbigtop-tests/: Contains all the code for integration and system tests along with the Maven build to configure the environment and run the tests against a clusterbigtop-deploy/: Has all the deployment code for distributed clusters, as well as virtual and container environmentsbigtop-packages/: Provides all the content needed for the creation of installable binary artifacts

Let's get into some more detail about some of them.

Meet the Build System

The Apache Bigtop build system uses Gradle (http://gradle.org/). The Bigtop source tree includes a gradlew wrapper script, and in order to start working with Bigtop you need JDK7 or later, and the cloned repo of the Bigtop:

git clone https://git-wip-us.apache.org/repos/asf/bigtop.gitYou can also fork this from the github.com mirror: http://github.com/apache/bigtop.git.

And now, to see the list of available tasks you can simply run:

cd bigtop

./gradlew tasksStack components can be built all at once:

./gradlew deb or ./gradlew rpmYou can also use an explicit selection:

./gradlew allclean hive-rpmThe Bigtop build is the center of most all of the activities and functionalities in the framework. It is used to create the development environment and the build binaries, to compile and run tests, to deploy project artifacts to a central repository, to build the project web-site, and to do many other things.

Bigtop has a way to specify inter-component dependencies in the stack so all upstream dependencies are automatically built first if needed. In the above example, if -Dbuildwithdeps=true is passed in the build time, Bigtop will first download and build Hadoop, and only then will it proceed with Hive. Hadoop, however, requires ZooKeeper, so its build will precede the creation of the Hadoop component. By default, however, Bigtop will only build the component that is explicitly specified.

Bigtop provides the functionality to generate local apt and yum repositories from existing packages. It allows a stack developer to quickly test freshly-built packages by pointing to the repo location in the local filesystem. This can be done by running:

./gradlew apt|yumA corresponding repository will be created using all of the DEB or RPM packages found under the top-level output/ directory.

At any point, to explore all of the standard tasks available for the end user, you can execute:

./gradlew tasksAnd now we are ready to check how to configure and work with the development environment, which is needed to build all of the highly complex data processing software, known as the Hadoop stack.

Toolchain and Development Environment

In order to create a stack of dozens of components, your system will need to be equipped with a lot of development tools. Keeping track of these requirements is a full-time job. Fortunately, the development needs for all of the supported platforms are readily provided by the Bigtop toolchain located under the bigtop_toolchain/ top-level directory. Bigtop is using Puppet not only for deployment, but also for its own needs, like setting up the development environment. You don't need to be a Puppet expert, however, to take advantage of it. Just make sure you have sudo rights and type this:

./gradlew toolchainThis will automatically install all of the packages for your system, including a correct version of the JDK needed for the stack components.

BOM Definition

Bigtop provides a Bill of Materials, or a BOM file, that expresses what components are included, their versions, the location of the source code and some other properties. The default BOM file name is bigtop.bom, and it describes the stack that will be created. BOM is using a simple self-documented DSL. Here's a typical component description:

'hbase' {

name = 'hbase'

relNotes = 'Apache Hbase'

version { base = '0.98.12'; pkg = base; release = 1 }

tarball { destination = "${name}-${version.base}.tar.gz"

source = "${name}-${version.base}-src.tar.gz"}

url { download_path = "/$name/$name-${version.base}/"

site = "${apache.APACHE_MIRROR}/${download_path}"

archive = "${apache.APACHE_ARCHIVE}/${download_path}"}

}As you can see, it is possible to use the already defined variables, pkg = base, from the same scope, or from other sections of the BOM:

download_path = "/$name/$name-${version.base}.The DSL processor will stop the build if any errors are detected.

The standard sources of the components are official Apache project releases. You can choose, however, to build a component from elsewhere by pointing to a different location of the source archive. The downloadable URL is automatically constructed as url.site/url.download_path/tarball.source. So, if you want to build Hbase from a GitHub repository using branch-1.2, change the definition to:

tarball { destination = "${name}-${version.base}.tar.gz"

source = "hbase-1.2.zip" }

url { download_path = "apache/hbase/archive"

site = "https://github.com/${download_path}"

archive = site }You can then run ./gradlew hbase-clean hbase-deb to produce a new set of binaries for Debian.

There's also a way to create component packages directly from a Git version control system. Please refer to README.md in the top level folder of the Bigtop source tree for more information about this capability. Further in the chapter, if the location of a file isn't specified explicitly, it can be found in the top-level folder.

DEPLOYMENT

Evidently, having all of the tools to develop and validate the stack only allows you to build the packages, which has little value if there's no way to deploy it and run some workloads. Generally, software stacks come with some means to install them (or deploy them in the case of distributed environments), and to manage and control their components and state. And of course Bigtop provides a couple of ways to provision your environment. The simplest one is to use the Bigtop provisioner, which we'll cover in more detail next. More complex cases might involve editing some configuration files, and running Puppet from the command line. We cover both cases for the benefit of people who manage clusters at their day jobs, and those who just need to quickly set up an environment to verify things they are developing. Let's start with a simple case.

Bigtop Provisioner

The Bigtop provisioner is a subsystem of the framework, which provides a convenient way to spin up a fully distributed Hadoop cluster using virtual machines or Docker containers. It can be found under the bigtop-deploy/vm directory of the project source tree. The most up-to date information about this deployment method can be found at: https://cwiki.apache.org/confluence/display/BIGTOP/Bigtop+Provisioner+User+Guide. We will, however, explain how it works here.

Provisioner uses Vagrant and makes cluster deployment to a virtual or containerized environment quite uniform. Try the following to see how easy it is:

- Start with

<BIGTOP_ROOT>/bigtop-deploy/vm/vagrant-puppet-dockervi vagrantconfig.yaml - Update the docker image name from

bigtop/deploy:centos-6orbigtop/deploy:debian-8and point the repository to https://cwiki.apache.org/confluence/display/BIGTOP/Index. Select the component you'd like to deploy: [hadoop, yarn, hbase] - And type the following:

./docker-hadoop.sh --create 3

The standard provisioner comes with a pre-defined configuration, and if you don't need anything special you can just use it. The provisioner is integrated into the build system:

./gradlew -Pnum_instances=3 docker-provisionerAssuming that your computer has Vagrant and Docker already installed, you will get a fully distributed cluster up and running as the result of the above command. You should be able to SSH into cluster nodes and perform the usual activities as expected. The provisioner script supports a few more commands, so refer to the top-level README.md for the most up-to-date information. To learn more about Hadoop provisioning with Docker we recommend the Evans Ye presentation on the topic available from http://is.gd/FRP1MG.

Master-less Puppet Deployment of a Cluster

For more complex cases of cluster provisioning, let's look into the deployment system. Bigtop Puppet recipes can be found under the bigtop-deploy/puppet directory of the project source tree. Let's see how Apache Bigtop allows you to quickly deploy a fully distributed software stack.

This is an advanced way of setting up a cluster, and unless you need to manage one on your own, you can skip the rest of this section and go directly to the “Integration Validation” section. A fully distributed deployment requires a few more steps compared to the Provisioner example above, but essentially with a few more commands so you can spin a cluster as big as you need, with optional High Availability for HDFS and/or YARN, and with or without security. Securing a Hadoop cluster includes standing and setting up your own KDC server, which isn't easy to do. As you saw in earlier chapters, Hadoop security is a difficult topic involving many variables, but combined with the security across the stack, it can quickly turn into a management nightmare. If you are interested in the topic please familiarize yourself with the presentation by Olaf Flebbe on “How to Deploy a Secure, Highly-Available Hadoop Platform.” The PDF slides can be downloaded from http://is.gd/awcCoD.

One of the requirements we have for the deployment mechanism is to be able to work under different operation environments. The specific host names and their roles might not be known, as in the case of a company-wide deployment system. This is why the implementation is done as a master-less dynamic system where all nodes have the same set of recipes, but different nodes receive their own configuration files. Once the groundwork is done, all nodes will simultaneously be brought into their specific states, resulting in a working cluster with as many nodes as needed. The deployment system collects node information using Puppet Hiera for lookup and collection modules, and juxtaposes it with the roles definitions from Bigtop recipes. Here's a high-level example of how it works:

- Bigtop provides a default topology template file bigtop-deploy/puppet/hieradata/site.yaml defining a few key parameters of the cluster such as

bigtop::hadoop_head_node,hadoop::hadoop_storage_dirs,hadoop_cluster_node::cluster_components, and optionally the list ofbigtop::roles. Important: the head node has to be set as a fully qualified domain name (FQDN); otherwise the node identification won't work. - By default, the roles mechanism is turned off. All nodes in the cluster are assigned a worker role, and the head node carries on the master role. So, the head node will run a NameNode process and Resource Manager process, if you deploy HDFS and YARN. The set of components is defined by

hadoop_cluster_node::cluster_components.If the list isn't set explicitly, all available packages will be installed and configured. - To take advantage of the roles, set

bigtop::roles_enabled: truein thesite.yamland specify the roles as per node. This, however, might lead to a need to manage separate configurations for different nodes, especially if your cluster topology is trivial. We will cover a possible way of handling this in the next section. The full list of roles per daemon can be found in the bigtop-deploy/puppet/manifests/cluster.pp manifest. - The complete list of configuration parameters and their default values can be found in the bigtop-deploy/puppet/hieradata/bigtop/cluster.yaml file.

Let's now proceed to the deployment itself. First, you need a set of nodes for your cluster. It's outside of the scope of this chapter and the book to go into every single detail of the hardware provisioning. If you're reading this book, however, you're probably already aware of tools like Foreman, EC2, or others. For the simplicity of this example we won't deal with role-based deployment, and we will leave it out as an exercise for the reader.

Let's say there are five nodes up and running Ubuntu 14.04, with the node[1-5].my.domain as their hostnames. Nodes will be carrying their functions as follows:

- node1 through node5 will be workers.

- node1 will serve as the head node.

- node5 will be handling the gateway functions.

The deployed stack will include HDFS, the mapred-app, the ignite-hadoop, and the Hive components. The set of the component is minimalistic, yet functional, and we'll be using it in the next chapter.

Let's start working with the node1.my.domain and clone the project Git repo under /work. According to that layout, site.yaml will have the following content:

bigtop::hadoop_head_node: "node1.my.domain"

bigtop::hadoop_gateway_node: "node5.my.domain"

hadoop::hadoop_storage_dirs:

- /data/1

- /data/2

hadoop_cluster_node::cluster_components:

- ignite_hadoop

- hive

bigtop::jdk_package_name: "openjdk-7-jre-headless"

bigtop::bigtop_repo_uri: \

"http://bigtop-repos.s3.amazonaws.com/releases/1.1.0/

ubuntu/14.04/x86_64"The latest versions of Bigtop have an ability to automatically detect and set the URL of the package repo for difference platforms, but I will leave it as it is here for better clarity.

Now we need to make sure that the all of the nodes have the same recipes and configurations. Because Puppet modifies the state of the system, it has to be executed under a privileged account such as root. It would be easier if you have a password-less SSH login between all of your nodes. Alternatively, you can manually enter the password when requested. To sync-up the project's content, simply rsync or otherwise distribute the content of the /work folder to all of the nodes in your cluster. In our experience, the best way to achieve this is by using pdsh and rsync. The following commands will do the trick. Be aware, though, that you need to specify the SSH user name and the path to the SSH key. Check with the rsync man page for more details.

export SSH_OPTS="ssh -p 22 -i /root/.ssh/id_dsa.pub -l root"

pdsh -w node[2-5].my.domain rsync $SSH_OPTS -avz –-delete node1.my.domain:/work /At this point, all of the nodes should have an identical /work folder. Puppet Hiera, however, must be able to read the configuration file and some other files from the workspace:

vi bigtop-deploy/puppet/hiera.yamlYou can then point datadir to the workspace, so the line looks like this:

:datadir: /work/bigtop-deploy/puppet/hieradata

cp bigtop-deploy/puppet/hiera.yaml /etc/puppet/hiera

pdsh -w node[2-5].my.domain rsynch $SSH_OPTS -avz –-delete

node1.my.domain:/etc/puppet/hiera.yaml /etc/puppet/The last preparation step is to make sure that all nodes have the required Puppet modules by running:

pdsh -w node[1-5].my.domain 'cd /work && \

puppet apply --modulepath="bigtop-deploy/puppet/modules" -e "include

bigtop_toolchain::puppet-modules"'And we are now ready for the deployment:

pdsh -w node[1-5].my.domain 'cd /work && \

puppet apply -d --modulepath="bigtop-deploy/puppet/modules:/etc/puppet/modules"

bigtop-deploy/puppet/manifests/site.pp'After a few minutes (your mileage might vary with different connection speeds) you should have a fully functional Hadoop cluster with a formatted HDFS. You will also have your user directories that are set with correct permissions, as well as other fully configured components with their services up and running. The node5.my.domain now has all client binaries and libraries working with cluster services. Enjoy!

Each release of Apache Bigtop comes with generated repo files for a variety of operating systems. The set for release 1.0.0 can be found at https://cwiki.apache.org/confluence/display/BIGTOP/Index. Similarly, release 1.1.0 will be published at https://dist.apache.org/repos/dist/release/bigtop/bigtop-1.1.0/repos/ once the release candidate is officially accepted.

Configuration Management with Puppet

As you can see, standing up a real distributed cluster is a bit more complex than a simple provisioner, but it is still trivial enough. You might end up with nodes built from different hardware batches with different amounts of RAM and/or hard drives. The software composition of the nodes might be dissimilar to each other, and carry their own function in the pipeline. So, a subset of the nodes might only carry Apache Kafka nodes and serve the logs collection, whereas another subset may be designated to the events stream processing using Apache Flink. These node configurations and packages would have to be maintained or updated on different schedules; some of the maintenance might require service restarts and some might not. Handling these intricacies is a full time job. That's why cluster orchestration is an important topic. The orchestration is quite different from management, but both terms are often and incorrectly used interchangeably. Without getting into too many details let's consider orchestration to be composed of architecture, tools, and a process to deliver a pre-defined service. Management, on the other hand, provides tools and information radiators for automation, monitoring, and control.

Hadoop vendors do provide some tooling to help with the management routine. These tools are available as free and open source, and as proprietary commercial tools as well. You should be able to easily identify them with a quick search, but we don't recommend focusing on those tools for three main reasons:

- They aren't compatible with the standard Linux

init.d(or systemd) life-cycle management, and they use their own custom ways of standing up, configuring, and managing cluster services. - The tools are all webUI-based and interactive, which makes scripting and automation around them either impossible or considerably more difficult.

- These tools have their custom implementations for system management, which pretty much disregards decades of operation experience put together by producers of Chef and Puppet. This is a very complex topic, and professionals are sticking to well-known frameworks, which are also well-integrated with provisional systems like Foreman.

These management tools, however, help to significantly lower the entry barrier for people not familiar with the software in question. They hide a lot of complexities and deliver a central console to observe and control system behavior. This of course has its own benefits.

So what can a professional system administrator or a DevOps engineer do? With Unix, the best result is achieved by putting together a set of smaller tools and utilities, each being responsible for a smaller piece of action. In our case, we should have a Version Control System (VCS) that is responsible for the versioning aspect of the cluster configuration, and Puppet to take care about the state management of it. It is wise to use a distributed VCS, given how all of the configuration changes must be propagated to multiple hosts. We use Git, but you can use Subversion or Mercurial if it suites you better.

The idea is pretty simple: Specific configurations have to be separated, and should be updated independently. A VCS branch mechanism fits here perfectly, with different group or role configurations living in their own branches. Now the configurations files, or their templates, as well as the versions of the software packages, can be independently managed by people with domain expertise, which are typically DevOps engineers. Once a stack update is validated in a testing environment, it can be easily pushed into production, by merging or explicitly picking certain configuration changes between branches. As soon as the change is pushed to the VCS server, all nodes can pick it up and consequently apply it in full isolation from each other. The state machine (Puppet or Chef) will automatically restart the services according to the given recipes. This process can be as fine grained as needed and can easily change without massive outages of the cluster. There's no single point of failure either, given how there isn't a single management host or service.

Here's a sketch of how it works, but please note that the installation and configuration of the Git servers is outside the scope of this chapter. This solution has essentially three parts: VCS, cron, and master-less Puppet. Each node in the cluster has a crontab entry to do the following:

cd /work

git pull origin/node-$ROLE-branch

puppet apply manifests/site.ppThe environment variable $ROLE might be set during the initial provisioning of the operating system or specified otherwise. The cron will execute the above set of commands as often as practical, keeping the nodes of the cluster in a coherent state per specified configurations.

INTEGRATION VALIDATION

Now that we have our desirable Hadoop-based cluster up and running, we should check if it is working as expected, and that all of the components can play nicely with each other. The best way to figure this out is by running some workloads that will not only check if separate parts of the clusters are working as expected, but will also be crossing the components' boundaries to make sure they are binary and API compatible. Bigtop has two ways to do this: via integration or smoke tests. Both kinds of tests can be written in any JVM language.

You can immediately spot how a lot of tests in the Bigtop, as well as the iTest framework, are written using the Groovy language. The main reason is because Groovy provides a unique mix of dynamic capabilities with a strong-typed language. Being a truly polyglot language, you don't have to worry much about arbitrary file extensions. Depending upon the problem at hand, you can just write your code in Java or Groovy. Groovy scripting is a very powerful tool, as you'll see in the later section discussing smoke tests. In fact, Groovy is quite deeply intertwined with the Bigtop build, the actual deployment, and the stack. Bigtop's build system, Gradle, is a type of Groovy DSL. We will use a Groovy script to format an HDFS file system and stuff the distributed cache with all of the expected libraries and files (bigtop-packages/src/common/hadoop/init-hcfs.groovy). Note how bigtop-groovy is a standard package of the Apache Bigtop stack.

Bigtop is currently in the almost completed transition from the Maven build system to Gradle. This proved to be more comprehensive and a better fit for the variety of the tasks needed to be managed in the Bigtop project. As a result, smoke tests are controlled by the Gradle build, whereas the old-fashioned integration tests are still relying on Maven. The latter is retrofitted into a top-level Gradle build, but full integration is not yet finished. Don't be alarmed—it is coming.

Once the transition is finished, instead of Maven modules as explained below, Bigtop will be using Gradle multi-project builds, although conceptually it won't change much for your users.

iTests and Validation Applications

All tests in Bigtop are considered to be first class citizens, similar to the production code. They aren't really tests, but rather validation applications tuned for the particular software stack. Each application has two parts to it:

- The application code can be found under test-artifacts/ along with its build environment where the target dependencies are set and particular APIs are exercised.

- Maven executors start the applications. These are simple Maven modules sitting under test-execution/ to perform the checking and setting of the environment where needed.

The Integration validation application can use the helper functionality provided by the Bigtop integration test framework (iTest). It can complement both an application and its executor. iTest is an extension to the standard JUnit v4, adding nice things like the ordered execution of tests, the ability to run tests directly from JAR files, and some other useful features. We won't, however, focus much on the iTest itself. If you are interested to learn more you can find all related information under the bigtop-test-framework/ top-level directory.

Now, tests or validation applications are much more fun. Let's first look under the hood of the development of integration application artifacts.

Stack Integration Test Development

Each validation application is represented by a Maven module. There are two parts in the development of any integration application: code changes and artifact deployment. But first, the new application has to be added to the test stack. As with any Maven project you need to create the module's structure under the bigtop-tests/test-artifacts/ folder and list it in the top-level bigtop-tests/test-artifacts/pom.xml. The module's Project Object Model (or POM in Maven-speak) will need to define all of the dependencies and resources it needs. The project's top-level POM has all of the component versions included in the stack, so in most cases modules should be able to simply list their needs in the <dependencies> section and versions will automatically be inherited via the parent POM.

If you look into the code of existing validation applications you will notice that they may be written as direct calls to component APIs such as Hadoop and HBase. Another possibility is to invoke command line utilities using the components that they themselves would provide. And the last, but not least, approach includes the mix of both. Some of the Hadoop or HBase tests can start calling platform APIs to bring the system into a certain state, followed up by an execution of either the Hadoop or Hbase CLI in order to check the viability of certain functionality.

Both approaches have their own merits and purposes. The validation applications working with a component API have a better chance of catching unexpected and incompatible changes. The programming interfaces tend to be finer grained yet they may not necessarily be immediately exposed in the user-facing functionality. As the result, a change at the API level might go unnoticed until the software is released to the customer. And testing aimed at the API level is especially important for publicly exposed integration layers where changes in a method semantic might not be immediately caught by the lower-level tests, yet it has all the potential to break the application contracts. Changes of this sort most likely aren't possible to test with a unit or functional test, because they might require complex setups impossible to mock or emulate. In this case, integration tests provide a valuable service for the application developers. This type of test is quite sensitive and tends to catch a lot of issues before the code hits the production clusters. On the flip side, there's a potential for a higher maintenance cost, and they might fail every now and then.

The second kind of Bigtop validation applications are most suitable for user-facing functionality involving command-line tooling like Hadoop, Hive, and Hbase. A good example of this kind is TestDFSCLI.java in a Hadoop module. The test uses an external definition of the Hadoop CLI command semantics and runs the Hadoop utility from the deployed cluster to validate if its functionality is as advertised. These tests tend to be more stable and carry less of a burden on the developer, but they justify the time spent on their implementation.

And now we are ready to begin with the development of a new validation application. You may need to run the new code against an existing system to make sure it performs as expected. For the applications validating APIs, all of the code needs should be satisfied by the build system, and it is easy to run and debug them in your favorite IDE. We recommend IntelliJ IDEA as the development tool of the highest caliber, but of course you can use something else.

Whenever an integration application artifact needs to be set up for the execution, you should be able to use the Maven deployment facility to install it either locally or to deploy it into a remote repository server. During the development, the local installation works for most cases. You can do it by running the following (for Hadoop tests):

./gradlew install-hadoopRun this from the project's top-level directory. This command will also cover the installation of all additional helper modules and POM files. All artifacts JARs will be pushed into the local Maven repo, and they are used to run the integration application. Similarly, you can deploy the artifacts to a remote repo server using the not-yet-gradelized command:

mvn deploy -f bigtop-tests/test-artifacts/hadoop/pom.xmlThis deployment will require you to configure your repo location and credentials using ˜/.m2/settings.xml. Please refer to the Maven documentation for further instructions.

If your test code relies on the client parts of a cluster application, you might need to deploy gateway bits into your development environment. A cleverly written executor module can be very handy by enforcing particular environmental constraints and by automatically constructing the classpath. This route relies on both steps of the development process—development and artifact deployment—as well as the use of the executor modules.

Before we look at the work flow, let's quickly examine an integration application executor. They can be found under test-execution. One for Hadoop is located and managed by smokes/hadoop/pom.xml. Like the counterparts over in the artifacts section, executors are implemented as Maven modules. Unlike the artifact modules, these involve more complex build logic using multiple plugins and the additional common module. The latter defines a number of system properties commonly used across most of the executors, such as test include and exclude patterns, the dynamic creation of the test lists derived from the artifact JARs, and so on.

The complete flow of an integration application development looks as follows:

- Develop the code of the application as in any other software development process.

- Whenever the application artifact or its executor needs to be shared with other teammates, use the Maven install/deploy feature to deliver them to a local or shared Maven repo as explained above.

- The application executor can be started with a command as follows:

mvn verify -f \ bigtop-tests/test-execution/smokes/hadoop/pom.xml - From the project's top-level folder, by default, all tests matching

**/Test*will be run. The executor's behavior can be controlled by supplying a few system properties:- -

Dorg.apache.maven-failsafe-plugin.testInclude=\ '**/IncludingTestsMask*'to run only a subset of tests- -

Dorg.apache.maven-failsafe-plugin.testExclude=\ '**/ExcludingTests*'to avoid running certain validation applications

- -

- Setting the log level to TRACE level is handy when you need to trace any issues with the code or the logic. This can be done by specifying

Dorg.apache.bigtop.itest.log4j.level=TRACEin the runtime.

The up-to-date information about how to deploy and run integration and system tests can be found at the project's Wiki page.

Validating the Stack

Running the integration application in the distributed environment is quite simple once the cluster is fully deployed and you're familiar with all the tooling described earlier. As a convenience, we recommend you run your integration applications from a node that isn't a part of the worker pool, such as a gateway node. The reason is pretty simple: The worker node might be sitting behind a firewall or get fully-loaded during the validation. In both cases it might be challenging to access it if you need to debug your code. Besides, the gateway node normally would have all of the client binaries and libraries, so the integration applications will have all of the bits readily available.

Another good reason to run the tests from a designated node is to have an easier integration with the Continuous Integration infrastructure. Taking Jenkins as an example, it is quite trivial to bring up a Jenkins slave, or run a container inside of an existing slave, provision it with Hadoop stack client packages, and a clone of the Bigtop repo. Once test runs are completed, Jenkins will collect the results and present them for further processing and analysis. Trying to achieve the same results using a regular cluster node might involve additional administrative efforts, as well as ways of combining the test results back in the CI server.

Per blueprints explained in the “Development” section, node5.my.domain is configured as the cluster gateway. It also has the Bigtop source repo under the /work directory. Once the steps to install the validation artifacts are completed, as shown in “Stack Integration Tests Development” above, the gateway node will possess all libraries and POM files in its local Maven repo. And now you're ready to validate the integrity of the cluster stack.

cd /work

./gradles install-hadoop

mvn verify -f bigtop-tests/test-execution/smoke/hadoop/pom.xmlThe above command will probably fail immediately with an angry message from Maven enforcer, telling you to set up certain environment variables. At the very least the following has to be set:

export HADOOP_HOME=/usr/lib/hadoop

export HADOOP_CONF_DIR=/etc/hadoop/confOnce the issue is dealt with it should be smooth sailing from here. If you want to validate other components as well, you can subsequently run:

mvn verify -f bigtop-tests/test-execution/smoke/hive/pom.xml

mvn verify -f \ bigtop-tests/test-execution/smoke/ignite-hadoop/pom.xmlOr you can simply run all of the available applications in the test stack with mvn verify. If the deployed stack has just a few components, many tests are likely to fail. When the run is completed, the results will be available under the components' target/ folders, as is customary with Maven executed tests.

Cluster Failure Tests

The Bigtop's iTest isn't a complete distributed integration test framework if it isn't providing a facility to introduce faulty events into normal operation of the system. This is commonly called fault injection and is similar to throwing a monkey wrench into a well-working mechanism. Indeed, iTest has that monkey wrench ready. iTest currently provides three types of distributed failure:

- Service termination failure

- Service restart failure

- Network shutdown failure

The fault injection framework requires SSH password-less access to the nodes where failures will be introduced, as well as password-less sudo on these nodes. The latter is needed for the test to manipulate the system events such as network interface failures, and service start/stop. For a more detailed write-up on how to write the cluster failure tests please refer to https://cwiki.apache.org/confluence/display/BIGTOP/Running+integration+and+system+tests#Runningintegrationandsystemtests-ClusterFailureTests.

The current fault injection framework could be improved and extended in a variety of ways. The Bigtop community is always on the lookout for new contributions to the project, including, but not limited by the patches, bug fixes, documentation improvements, and more.

Smoke the Stack

There's a reason why integration validating applications could be deployed as Maven artifacts. A test stack represents a particular state of the software stack. Freezing and releasing the corresponding state of the test stack has numerous benefits. One such benefit is to be able to repeat the validation of the software on any new deployment from the same set of binary artifacts. Let's say you're spinning up development clusters from Apache Bigtop v1.1. On every provision, before the cluster is handled to its end user, it should be quickly verified. One way to do it is by running the integration suite v1.1 from the previously published artifacts.

In a different use case, however, it might be desirable to repeatedly validate the functionality inside of a development cycle without doing any extra steps. Recently, the Bigtop community started working toward the simplification of the test system by introducing smoke tests. The main difference between this and the integration tests described earlier is that smokes can be run directly from the source code. Unlike the use case with integration application artifacts, no extra preparation and deployment steps are needed.

And indeed it is quite simple. Just switch to bigtop-tests/smoke-tests/ and run:

./gradlew clean test -Dsmoke.tests=ignite-hadoop,hive –infoThis will test ignite-hadoop and Hive deployments. A couple of things to keep in mind:

- Not all components are currently covered by the new smoke tests. There's an argument that perhaps even the existing integration validation applications should be converted into new smokes. But is hasn't been settled one way or another.

- Unless you're running smoke tests from the same branch or tag that was used to produce the components for the software stack, you might not be testing exactly what you expect. As in the case with two kinds of integration tests, the smokes could use the lower-level public APIs, or user-facing CLI tooling. The former case is more prone to failures if the targeted implementation keeps changing or is merely different. So perhaps more thorough version management discipline will have to be exercised by the user.

In general, new smoke tests are a real easy way to assess a cluster viability, verify the integration points, and stress or load the system. We certainly look forward to new development in this part of the project.

PUTTING IT ALL TOGETHER

Why should anyone be bothered with a framework to do things that anyone can perhaps build with a few keystrokes and some shell scripts? Or, what about a few lines of Python and Scala code? Let's quickly recapture what Bigtop covers and the key functionality it provides.

- Software stack composition allows a user to define a consistent presentation of a set of software components to deliver a complete platform solution or a service. This architecture increases the productivity and predictability of the software development by setting up a formal process and mechanisms at all levels of engineering organizations.

- Validation stack composition allows you to fasten together a variety of functional requirements delivered by the software stack via the provided integration test suites and feature-rich integration test framework.

- Standard Linux packaging represents an easy way to install and configure a vertical amount of software services with standard life-cycle management interfaces and configurations.

- A deployment and configuration management framework guarantees repeatable and controllable provisioning of software and validation stacks to achieve better levels of system orchestration and continuous delivery of the services.

These are four key principles of the Apache Bigtop framework. The community behind the project has invested decades of the combined experience in the system architecture and integration to deliver this top-notch industry standard facility to provide an easy way of dealing with daily data processing needs.

SUMMARY

Some people still might not be convinced that Apache Bigtop is the best thing since sliced bread. And they don't want to be burdened with all of the intricacies of a software stack development process. After all, not everybody wants to deal with system architectures and integration design. In this case, Apache Bigtop still can help you to build, manage, and improve the data processing pipeline.

The Bigtop community works very hard to regularly produce high quality releases of the Apache data processing stack, including the latest most stable releases of Apache projects. The last released version at the time of this writing is Bigtop 1.1. You can immediately start using it as was described in the “Deployment” section of this chapter.

Beyond that, you might find Bigtop's statistical modeling applications to be of a high value for data professionals. The source code and more information about it can be found in the bigtop-data-generators/ folder. The framework and the use-cases are explained in great detail in the recent presentation by RJ Nowling about “Synthetic Data Generation for Realistic Analytics” available from http://is.gd/wQ0riv.