CHAPTER 9

Consolidating Databases with the Oracle Exadata Database Machine

Regardless of economic conditions, IT is constantly feeling the pressure to optimize resources that they provide to the business. The senior management of your organization or company expects IT to be able to deploy and run new applications with agility, scale these applications while meeting or exceeding Service Level Agreements (SLAs), and do it all efficiently. The CIO is asked to significantly reduce IT costs but still remain receptive to new business functionality without disrupting users or their current applications.

That’s quite a tall order. One way that many companies reduce costs, improve return on investment, and meet future business needs is to standardize and simplify IT costs through consolidation of computer servers, storage, other hardware infrastructure, and software platforms. In this chapter, our primary focus is how you can use the Oracle Exadata Database Machine to deliver such solutions.

Why Consolidate?

Taking the consolidation path is not always simple. However, many IT analysts today call attention to production server utilization rates that are barely 10 percent where individual applications are dedicated to their own specific legacy hardware. Therein lies a golden opportunity for IT departments to support line-of-business objectives and transform their environment to drive out costs while improving utilization and performance and reducing management.

One example of a consolidation effort is the one that Oracle Corporation itself went through between 2002 and 2004 that resulted in tremendous costs savings and improved operating margins. During this time, Oracle consolidated 40 data centers down to 3 and moved from 52 applications to a single global E-Business Suite. In many respects, what Oracle did is one of the most difficult consolidation efforts to undertake, since it requires rationalization of applications, databases, hardware platforms, and strong top-down leadership that can overcome the political implications of such an effort.

Far more common is the consolidation of multiple overlapping databases to single databases or simply using the Oracle Exadata Database Machine as a single platform to host multiple Oracle databases. Since the Oracle Exadata Database Machine also contains storage and its own high-speed network, it also provides other forms of infrastructure consolidation.

The most obvious benefits to IT are usually associated with lower costs from reduced power consumption and a smaller data center footprint. But there are frequently other compelling benefits that should be explored as well. For example, speed of change management can greatly accelerate. A critical mass of IT skills is often easier to attain with fewer people since they focus on fewer technologies. Cost and complexity of integration should be dramatically reduced. Business benefits and time to value for deployment of new solutions can be achieved much faster.

Many organizations use such initiatives to transition IT into providing software and hardware infrastructure as a service. When deployed on corporate intranets behind firewalls to deliver high efficiency, high availability, and resources on demand (sometimes called elasticity), these are popularly described as “private clouds.” When deployed to the Internet, these deployment models are known as “public clouds.”

The Oracle Exadata Database Machine can help in a variety of ways to consolidate database deployment.

How the Oracle Exadata Database Machine Helps Consolidation

As described in earlier chapters, the Oracle Exadata Database Machine is a complete and integrated solution that provides database servers, storage servers, networking, and Oracle Database software and storage software. This optimal combination of Sun hardware coupled with software provides the performance and high-availability characteristics needed for consolidating Oracle Databases. Given the power and flexibility of the platform, it can enable consolidation of databases to a single machine that address workload types ranging from transaction processing to data warehouses.

The complexity you might introduce by moving such a wide variation in the types of databases to a single Oracle Exadata Database Machine depends on many factors. These factors can include the types and nature of any packaged applications, schema naming conventions and possible conflicts, and SLA and high availability requirements that could vary widely. Certainly, if you are considering moving both transaction processing and data warehousing databases to the same Oracle Exadata Database Machine, you should consider the consistency of their SLAs, whether you have an understanding of clear workload priorities across the databases, and whether you’ll have excess capacity that might address some unplanned demands.

Consolidation of mixed workloads to a single Oracle Exadata Database Machine is possible. But where there is wide variation in workloads and other requirements, some organizations may choose to standardize on the Oracle Exadata Database Machine but deploy different racks of the platform in different ways that can best address unique workload and availability demands.

Database Server Consolidation

As in sizing any database server platform, when consolidating database servers, you must plan around a specific set of current needs on a specific platform footprint. This section covers how you might define that footprint. We’ll examine the CPU and memory sizing considerations, I/O or throughput sizing, and overall system sizing considerations. We’ll also examine storage and network consolidation aspects of the platform.

CPU Sizing Considerations

Although the Oracle Exadata Database Machine contains the latest generation of Intel CPU chips and high-performance memory, you still need to be careful when sizing legacy databases that are CPU-constrained on another platform. This is especially true for applications deployed on a single monolithic SMP (symmetric multiprocessor) platform. The Oracle Exadata Database Machine X2-8 configurations that provide large SMP nodes and memory can be especially relevant for handling such workloads. The architecture of SMP is often described as scale-up because the only options for this type of architecture, when constrained by CPU limits, are

Tuning the application(s) Attempting to reduce CPU consumption by tuning Oracle SQL access paths through analysis of data structures and usage patterns by the application(s). This process involves investigation of SQL statements that may be causing excessive CPU usage, as well as selective denormalization, partitioning, compression, index reviews, and other schema adjustments that are typically used to improve access paths. Once these alterations have been made, the execution plan is frozen using a SQL Plan Baseline or a SQL Profile.

Tuning the application(s) Attempting to reduce CPU consumption by tuning Oracle SQL access paths through analysis of data structures and usage patterns by the application(s). This process involves investigation of SQL statements that may be causing excessive CPU usage, as well as selective denormalization, partitioning, compression, index reviews, and other schema adjustments that are typically used to improve access paths. Once these alterations have been made, the execution plan is frozen using a SQL Plan Baseline or a SQL Profile.

Upgrading existing CPUs Scaling up the platform by working with the hardware vendor to upgrade current system boards or adding/activating more CPUs where the machine has spare capacity.

Upgrading existing CPUs Scaling up the platform by working with the hardware vendor to upgrade current system boards or adding/activating more CPUs where the machine has spare capacity.

Buying a bigger machine The newer machine is likely to be relatively more expensive than adding CPUs to a current platform. These “fork-lift upgrades” can be expensive, especially as the new machines are normally sized for growth, and hence there can be a lot of initial capacity that is not used.

Buying a bigger machine The newer machine is likely to be relatively more expensive than adding CPUs to a current platform. These “fork-lift upgrades” can be expensive, especially as the new machines are normally sized for growth, and hence there can be a lot of initial capacity that is not used.

The Oracle Exadata Database Machine can provide scale-up within a single database server node, but also provides the capability for a scale-out approach. With a scale-out architecture, you can increase capacity by simply adding more database server nodes using Real Application Clusters (RAC) configurations to support the databases and their applications. This capability is consistent with Oracle’s grid computing architectural vision.

When considering scale-up implications, you should take into consideration the CPU differences between the existing CPU power of the database servers and those of the suggested Oracle Exadata Database Machine. Standardized CPU benchmarks are used in the industry to compare the relative CPU performance of different servers. Although not an ideal measure of system performance, they are still a useful gauge. For instance, a SPECint rate indicates a CPU’s integer processing power. Existing benchmarks that are published or unpublished, comparative system sizes, and proof of concept results can also be used to help system sizing.

In reality, Oracle looks at these performance results when sizing the system to support the required database servers while also including capacity to handle available application growth projections. The most reliable indicators are often comparative systems sizes and hence, it’s useful when the following metrics have already been discovered for the current production system:

Database version Different versions of the Oracle Database have different capabilities, and databases from other vendors can have even larger functionality gaps that can affect hardware sizing.

Database version Different versions of the Oracle Database have different capabilities, and databases from other vendors can have even larger functionality gaps that can affect hardware sizing.

Database options used Options such as compression can greatly influence storage required and partitioning can influence backup strategies.

Database options used Options such as compression can greatly influence storage required and partitioning can influence backup strategies.

Operating system Variations among Linux, Unix, Windows, Z/OS, etc., should be taken into account when sizing the new platform.

Operating system Variations among Linux, Unix, Windows, Z/OS, etc., should be taken into account when sizing the new platform.

Server hardware Model number and make, as well as differences in the previous platform generation, also affect go-forward sizing.

Server hardware Model number and make, as well as differences in the previous platform generation, also affect go-forward sizing.

Server CPUs Differences in the number of cores available to support the database and the processor speed are a consideration.

Server CPUs Differences in the number of cores available to support the database and the processor speed are a consideration.

Clustering method Sizing considerations can be influenced by previous clustering types, federated approaches used, and how the processors were leveraged.

Clustering method Sizing considerations can be influenced by previous clustering types, federated approaches used, and how the processors were leveraged.

Growth predictions The speed that the applications’ workloads are growing and the projected future growth and utilization rates.

Growth predictions The speed that the applications’ workloads are growing and the projected future growth and utilization rates.

Based on these metrics, it is possible to determine how many raw database servers and Oracle Exadata Database Machine racks are needed to provide equivalent CPU horsepower. When you gauge the effect of the CPU speeds provided by the Oracle Exadata Database Machine, keep in mind that CPUs on your legacy systems are almost always slower than current server CPUs in your Database Machine. The CPU sizing process is especially critical where the applications to be consolidated are CPU-bound.

Keep in mind, though, that CPU sizing will give you an idea of the Exadata configuration that can handle the workload, but the performance gains provided by the Exadata software, described in Chapter 3, will allow CPUs to perform more work, since some of their normal operations will be offloaded to the CPUs in the Exadata Storage Server.

Memory Sizing

Legacy databases and earlier versions of Oracle use varying amounts of database server main memory, as they were optimized for the hardware platforms’ memory capacities of the time. It is important to ensure that a new single consolidated database or all of the databases consolidated to the single Oracle Exadata Database Machine have access to at least the same amount of “usable” memory as before. As some legacy systems could be oversized on memory, it is also important to review the database usage reports to see the actual “peak” memory that is used by each database. When migrating from another database where structures such as the Oracle PGA do not exist, memory should be carefully sized to ensure that the applications can take best advantage of the new Oracle environment.

Concurrency rates also need to be examined to determine what the peak memory is for both dedicated and shared user database connections. Buffer sizes for programs that attach directly to the database server should be considered (e.g., for backup servers or application-dedicated server attached processes). Finally, database option memory usage, such as for RAC, should be factored in.

Although it sounds complicated, memory sizing is relatively straightforward, as many of the important numbers are often stated in vendor installation manuals (especially the Oracle Database installation manuals) and retrievable from monitoring tools and database reports. It might be noted that when collecting the average number of Oracle-connected users to determine concurrency, the memory usage for this task is trivial since these statistics are easily viewable from the Oracle AWR or Statspack reports, as well as being accessible from internal database V$ and X$ views. In terms of consolidation, you will have to figure memory usage that is an aggregation from the different servers where the soon-to-be consolidated application databases are running.

Most importantly, though, the Oracle Exadata Database Machine comes in a limited number of configurations, each with its own allocation of memory. The memory included in each configuration is designed to support the model in a balanced configuration. Since adding or subtracting memory from a configuration is not an option, checking on the memory sizing is a way to double check the sizing provided by a comparison of CPU horsepower.

I/O Sizing

Oracle’s best practices for Exadata Database Machine sizing is not to take into consideration possible I/O improvement from Smart Scan and to size the system for the maximum I/Os in terms of IOPS and throughput (GB/s). However, as an exercise, let’s examine what would need to be considered if this wasn’t the case. When looking at random I/O rates that need to be supported for physical reads, you should take into account the Exadata Smart Flash Cache I/Os provided by the Oracle Exadata Database Machine. For large sequential I/Os, you could also consider the impact of the use of Oracle Smart Scan Exadata software optimization and other I/O performance features such as storage indexes. In almost all cases, large sequential physical I/Os (table scans) can be offloaded to Smart Scans that take advantage of the memory and CPU power of the Oracle Exadata Database Machine storage server cells.

These offloaded operations have several effects—reducing the CPU requirements for the database servers, reducing the bandwidth requirements to send data to the database servers, and, in cases where storage indexes are used, actually reducing the I/O operations necessary to retrieve data from disks.

When you are considering database consolidation and I/O rates, you need to consider the following data points:

Database version and server hardware version, as discussed earlier.

Database version and server hardware version, as discussed earlier.

How the data is stored (e.g., NAS, SAN, DAS, or hybrids).

How the data is stored (e.g., NAS, SAN, DAS, or hybrids).

Storage array Make, model, capacity, performance, connection architecture to database servers.

Storage array Make, model, capacity, performance, connection architecture to database servers.

HBAs and HCAs The ability of the plumbing, that is, the ability of the storage networking and related database servers to transfer data. If you are going to consolidate many storage arrays and database servers on the Oracle Exadata Database Machine, you need a target that has lots of CPUs, wide pipes, and very fast server-to-storage connection points. The Oracle Exadata Database Machine has been designed with these in mind.

HBAs and HCAs The ability of the plumbing, that is, the ability of the storage networking and related database servers to transfer data. If you are going to consolidate many storage arrays and database servers on the Oracle Exadata Database Machine, you need a target that has lots of CPUs, wide pipes, and very fast server-to-storage connection points. The Oracle Exadata Database Machine has been designed with these in mind.

I/O system metrics MBPS, IOPS, peak rates, peak transfers, waits and queue lengths, and utilization rates. These metrics are all measures of disk and controller performance. IOPS refers to I/Os per second and is a useful measure of disk saturation. MBPS is short for megabytes per second and is a useful measure of the size of the disk transfer rate and the amount of data the disk can move. Peak rates and peak transfers are useful measures of maximum burst performance. These measurements can be useful where response times are critical because the system must also be able to handle peak rates without disrupting response times. Waits and queue lengths can be used to determine if the current I/O design is keeping up with its current usage. As disk waits and queue lengths increase, response times can suffer and the system can degrade. Utilization rates are useful to determine over and under capacity.

I/O system metrics MBPS, IOPS, peak rates, peak transfers, waits and queue lengths, and utilization rates. These metrics are all measures of disk and controller performance. IOPS refers to I/Os per second and is a useful measure of disk saturation. MBPS is short for megabytes per second and is a useful measure of the size of the disk transfer rate and the amount of data the disk can move. Peak rates and peak transfers are useful measures of maximum burst performance. These measurements can be useful where response times are critical because the system must also be able to handle peak rates without disrupting response times. Waits and queue lengths can be used to determine if the current I/O design is keeping up with its current usage. As disk waits and queue lengths increase, response times can suffer and the system can degrade. Utilization rates are useful to determine over and under capacity.

Cache sizes, utilization, and real performance rates.

Cache sizes, utilization, and real performance rates.

Database I/O performance metrics I/Os per partition, tablespace, I/O skewing, layout, I/Os per transaction, SQL, etc.

Database I/O performance metrics I/Os per partition, tablespace, I/O skewing, layout, I/Os per transaction, SQL, etc.

Normally, the current storage model and make and current utilization provide a good yardstick to understand how many equivalent storage cells are needed to exceed the current I/O performance. An offloaded Smart Scan utilizes the Exadata Storage Server CPUs in parallel, and as more and more unique Smart Scans and other offloaded activities such as encryption handling are being performed in parallel, it might be possible for the CPUs on the Exadata Storage Server to become saturated. If this happens, the system will automatically start to queue requests just like a normal server. If this type of condition could arise in a production environment, it is prudent to leverage I/O Resource Manager (IORM) or Database Resource Manager (DBRM) plans to control the prioritization of the applications and users.

System Sizing

When considering consolidation, all of the sizing guidelines for CPU, network, I/O, and memory are combined to form a comprehensive sizing methodology needed to determine the proper platform for your migration and consolidation of databases. Where packaged applications are being migrated, Oracle also bases sizing considerations on performance testing performed within the Oracle Solution Centers.

That said, complete system sizing can be more of an art than a science when all these pieces are combined. When consolidating databases and applications on the Oracle Exadata Database Machine, database performance might far exceed expectations to such a degree that the real limitation might be the number of middle-tier application servers. This can especially be true for large packaged applications. In such cases, you will be able to determine from the AWR reports that the database server is not waiting for anything the CPU utilization is very low. Increasing the number of transactions in the database server layer by increasing the number of connections from new middle tiers (or adjusting existing connection pools if the current middle tiers are not strained) will be needed to take full advantage of the processing power of the database server. In other cases, consolidating more applications that can access the Oracle database on the Oracle Exadata Database Machine can help ensure that the entire system is more equitably utilized.

Storage Consolidation

An Oracle Database deployed on legacy storage typically utilizes many different types of storage architectures, including NAS (Network Attached Storage), SAN (Storage Area Network), and DAS (Direct Attached Storage). You may have all these storage types deployed or hybrids of them. You might also have many different database versions within the infrastructure. Because of this, you might consider reviewing all existing deployed Oracle databases and consolidating all storage by deploying the Oracle Exadata Database Machine.

Storage consolidation is the result of a number of factors in the Exadata Database Machine. The Oracle Exadata Database Machine offers a choice of high-performance and high-capacity disk types. Combined with the various types of compression that the Oracle Database and Exadata Storage Server Software support, it is possible to store dozens of terabytes of data in a single rack and more where multiple racks are configured. Compression varies depending on such things as repetition of values in data sets, so capacity can vary. Most organizations size storage by first considering disk requirements when data is uncompressed and then use more conservative estimates of compression than might appear in the marketing literature.

The Oracle Exadata Database Machine’s IORM feature allows the I/O from many different databases to be prioritized efficiently when those I/O resources are oversubscribed, and assists in meeting or exceeding SLAs when performing storage consolidation.

Network Consolidation

An InfiniBand network within the Oracle Exadata Database Machine runs a low-latency, database-specific protocol between the database servers and storage servers called iDB. Two InfiniBand switches within the racks are provided for redundancy. This lightweight, high-performance network is used in transfers of data between the database servers and the storage servers and also handles database interconnect communications, including RAC interconnect traffic for extremely fast database server-to-database server communications. A third switch is provided in Half Racks and Full Racks to enable connections to additional like configurations of the Database Machine.

This high-performance network architecture also allows the Oracle Exadata Database Machine to connect to InfiniBand-based clients for backup, restore, and archive activities. Architecturally, this opens up some interesting options and allows flexibility to connect to other Oracle-integrated hardware and software platforms. For example, the InfiniBand is used as a high-speed connection from the Oracle Exadata Database Machine to Oracle Exalogic.

An Ethernet GbE switch is also provided in the Oracle Exadata Database Machine for administrative purposes. External user connections are generally into 10 GbE ports that reside in the database server nodes.

Workload Consolidation and Isolation

As mentioned earlier in the chapter, the Oracle Exadata Database Machine enables database consolidation and can scale database(s) across database servers using RAC. When you need more steam (CPU or memory) from your database server platform, RAC can support a growing shared database cluster by simply adding more database server nodes into the cluster. Adjusting the number of nodes in a RAC cluster is a dynamic online process, such as when upgrading from a Half Rack to a Full Rack of the Database Machine. This flexibility in configurations allows more wiggle room for consolidation sizing and capacity planning. You could consolidate databases onto a RAC One single database server node until you start to saturate the available resources, and then you can decide to spread RAC-enabled databases across a single or many Oracle Exadata Database Machine racks. This provides flexibility and power to business owners and IT departments that want to save money and gain performance through consolidation. Within RAC, the concepts of workload management (services, server pools, and instance caging) can be used to assist in database consolidation. The actual implementation of RAC on an Exadata Database Machine used for consolidation is discussed later in this chapter.

Services

Oracle first introduced the concept of database services in Oracle Database 10g. This facility creates a virtual target to which database applications can connect. You can target a single application to a single service, multiple applications, or a functional subset of an application. A service can be to an instance so that it resides on a single database server, or it can run simultaneously across multiple database servers or even multiple Database Machines.

The service provides a high-availability target and simplified construct that hides cluster complexities from the connecting database application. The application connects to a service that runs on the database servers and is automatically directed to a suitable database server to handle the transaction. When SQL executes in parallel, services limit which database servers can be involved in the parallel execution. For instance, any session connection to a service can only start parallel server processes on database servers that are associated with that service.

Once you define a service, a resource profile is automatically created that describes how Oracle’s Clusterware will manage that service, which instance the service will use as a failover target, and service dependencies between the instance and the database so that instances and services are started and stopped in the correct order.

The Oracle Database Resource Manager allows you to restrict resources that are used by the users who connect with that service to an instance. This is done by mapping a consumer group to a service so that the service users are members of the consumer group.

From a consolidation perspective, similar application transaction signatures can be mapped to the same service. For instance, you could create an order-taking service that could be used to receive orders from the database application. At the simplest level, you could create batch and data warehousing services and run these services on different database servers from the order-taking OLTP service.

Database Server Pools

When considering database consolidation, DBAs will typically have to understand which application databases require their own physical database servers and which can be consolidated onto single database servers. To assist in this process, Oracle Database server pools can be created, which logically divide up a cluster of database servers into database server pools, which were described as part of the implementation of Quality of Service in Chapter 8. Each pool can be defined by four attributes:

Server Pool Name

Server Pool Name

Min Specifies the minimum required number of database servers that should be run in the database server pool.

Min Specifies the minimum required number of database servers that should be run in the database server pool.

Max Specifies the maximum number of database servers that can be run in the database server pool.

Max Specifies the maximum number of database servers that can be run in the database server pool.

Imp Specifies the “importance” of each pool. The relative value of importance or priority can be used to determine database server assignment when resources are short, for instance, after database server failure.

Imp Specifies the “importance” of each pool. The relative value of importance or priority can be used to determine database server assignment when resources are short, for instance, after database server failure.

With a database server pool, the database server can only be allocated to a single pool at any one time. Database server pools can be automatically created by Database Configuration Assistant (DBCA) and managed by the Server Control Utility (SRVCTL) or Oracle Enterprise Manager. There are two main ways of doing a database server consolidation:

Administrator managed Specify where the database should run with a list of database server names. Then define which services will run on each database instance. You can define PREFERRED instances, which are the instances that normally run that service, and also can define other instances to support a service if the service’s preferred instance is not available. These instances are known as AVAILABLE instances.

Administrator managed Specify where the database should run with a list of database server names. Then define which services will run on each database instance. You can define PREFERRED instances, which are the instances that normally run that service, and also can define other instances to support a service if the service’s preferred instance is not available. These instances are known as AVAILABLE instances.

Policy managed Specify initial resource requirements based on the expected workload. Oracle will automatically start the required instances to support the workload. No direct association between a service and an instance is required. You can define this service as either UNIFORM so that it automatically runs on all instances in the database server pool or as SINGLETON so that it only runs on one instance in the database server pool. Oracle Clusterware will automatically start the service on a suitable database server within the database server pool.

Policy managed Specify initial resource requirements based on the expected workload. Oracle will automatically start the required instances to support the workload. No direct association between a service and an instance is required. You can define this service as either UNIFORM so that it automatically runs on all instances in the database server pool or as SINGLETON so that it only runs on one instance in the database server pool. Oracle Clusterware will automatically start the service on a suitable database server within the database server pool.

Workload Management

Once database servers have been consolidated onto the Oracle Exadata Database Machine, the manner of client connection needs to be considered. A Single Client Access Name (SCAN) provides a single, consistent name for client access to a RAC database cluster.

The advantage of SCAN is that additions or removals of servers from the cluster require no application client connect changes and it also allows the use of the EZConnect client and the simple Java Database Connectivity (JDBC) thin URL to access any database instance in the cluster, regardless of which database server it is currently running on. Previously, each node in the cluster required a separate entry in the TNSNAMES.ora file, but now, only one entry is required that utilizes the SCAN syntax and the correct DNS or GNS entries. For the orders cluster shown here, you can connect to any node in the cluster by using the orders-scan entry:

Sqlplus system/manager@orders-scan:1521/oe

Jdbc:oracle:thin:@orders-scan:1521/oe

It is recommended that clients connecting to the Oracle Exadata Database Machine leverage the Universal Connection Pool (UCP). The UCP is a Java-based connection pool that supports JDBC, the Lightweight Directory Access Protocol (LDAP), and Java EE Connector Architecture (JCA) connection types from any middle tier. The UCP has integration with database-side functionality such as fast connection failover, runtime connection load balancing, and RAC connection affinity.

The UCP connection affinity feature allows two types of affinity: web session and XA. With the UCP and the Database Machine, the first connection request from the middle tier is load balanced using hints from the Load Balancing Advisory (e.g., least used node). Additional requests for the duration of a web session or XA transaction are directed to the same instance on the same database server as the initial request. This can improve performance by ensuring that interinstance RAC communication is significantly reduced for transactions that access the same segments from the database.

Meeting and Exceeding SLAs

Once the calculations have been made on how many databases can be consolidated onto a Oracle Exadata Database Machine, the next step is to ensure that for each database, SLAs are met or exceeded. A single database on a single Oracle Exadata Database Machine doesn’t need to share anything except potentially the same InfiniBand network for the RAC interconnect and I/O traffic. However, as more databases are consolidated onto the same Oracle Exadata Database Machine, then I/O, memory, and CPU need to be shared if applications access these databases concurrently. Any resource-intensive database can significantly degrade the performance of the other databases unless steps are taken to enable resource management and isolation.

A number of techniques can be used to ensure SLAs are met or exceeded, and they can be used individually or they can be combined.

Instance Caging

Instance caging is the process by which a DBA can limit the physical cores that can be allocated to a database instance that runs on a particular database server. This is achieved by using the CPU_COUNT database parameter and the Oracle Database Resource Manager, which limits the amount of CPU that a particular database instance consumes. This technique is important from a consolidation perspective, because you do not want any particular database instance bringing a database server to its knees by using most of the CPU resources and affecting other database instances that might be running on that particular database server. The upper limit of CPU_COUNT can be adjusted to ensure that each instance cannot expend database server CPU cores that it is not authorized to access.

Enabling instance caging is a two-step process.

1. Set the value of CPU_COUNT. This dynamic parameter should be set to the maximum number of CPU cores (or logical hyperthread cores) that the database instance can utilize at any one time. For example, to set the parameter to 32, you would use the following syntax:

Alter system set cpu_count=32;

2. Enable the Oracle Resource Manager plan. The next step is to enable the Oracle Database Resource Manager plan that manages CPU. Any resource plan such as “DEFAULT_PLAN” or “DEFAULT_MAINTENANCE_PLAN” that uses CPU directives can be used. Alternatively, you can create your own resource plan, as shown in an example later in this chapter. The resource plan then needs to be enabled.

Alter system set resource_manager_plan ='DEFAULT_

MAINENANCE_PLAN';

Instance caging is now enabled.

How do you make sure that you are running correctly? The following query can determine if the CPU_COUNT has been set correctly:

Select value from v$parameter where name='cpu_count' and

(isdefault='FALSE' or ismodified !='FALSE');

This query should return the current value of CPU_COUNT if you have modified it; if you have not, it will return no rows.

Next, you need to determine if the right plan is active within the Database Resource Manager.

Select name from v$rsrc_plan where cpu_managed='ON' and is_top_

plan='TRUE';

If a row is returned, then the plan is active. Otherwise, either the Database Resource Manager is off or the Database Resource Manager is on but not managing the CPU.

Instance caging works by throttling database instance processes that require more CPUs than the instance is entitled to. The wait event “resmgr:cpu quantum” may appear in the AWR reports if this throttling becomes significant, after which point it may become necessary to increase CPU_COUNT or add another database server node via RAC dynamic cluster expansion. The v$rsrcmgrmetric_history view shows the amount of throttling and CPU consumption for each minute of the past hour.

From a consolidation perspective, there are two main methods of leveraging instance caging.

Highest SLA Database Instances

Highest SLA database instances are critical to a business and need to run without any adverse CPU effects from any other database instances that might be running on the same physical database server. The easiest option would be to consolidate each database instance onto its own physical database server, but this is likely to waste CPUs and result in low utilization, as the Oracle Exadata Database Machine CPUs are relatively powerful. In order to avoid low utilization rates on the database servers, it is possible to partition up a physical database server so that a single database instance on one physical database server will not affect the CPU of another database instance running on the same physical database server.

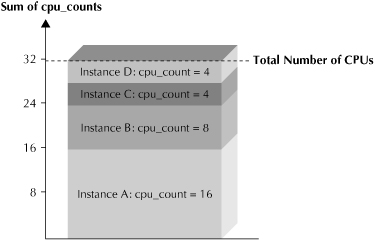

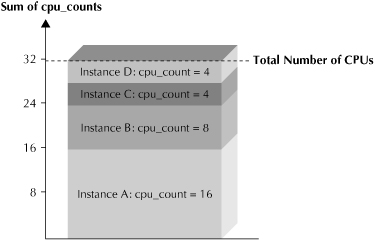

In Figure 9-1, we have partitioned up a single database server into four different CPU zones. Each zone runs a consolidated database instance. Each separate database instance has set its own CPU_COUNT, and the total CPU_COUNTs add up to the number of cores in the database server. This guarantees and limits the CPU consumption of each consolidated database instance and yet still meets any SLA goals related to dedicated CPUs. Later, we will see how to perform the same process for I/O.

Less Critical Database Instances

Less critical database instances are lower-priority database instances or test and development database instances that are used at different times and only need some processing power to be operational. Instance caging can be used to assure that any one database instance cannot spike and take resources from all the other running database instances.

FIGURE 9-1. Highest SLA example for a 32-way database server

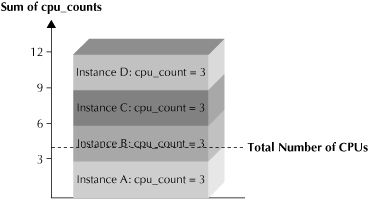

This is best explained with an example. Assume we have four database instances that have been consolidated onto a single database server. If any one of these database instances should spike its CPU resource usage, we don’t want it to consume the entire CPU on the database server. If we set CPU_COUNT = 3 for each of these four database instances, then each of them can never consume the entire database server CPU, but the Database Resource Manager can share the available CPU resources between them, as they are running concurrently.

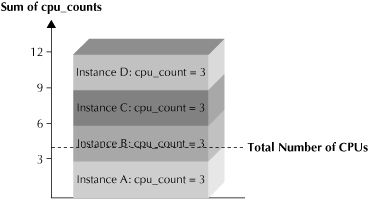

Figure 9-2 helps show how this would work in practice. Here, the database server has only four physical cores to keep things simple. We have assigned CPU_COUNT = 3 for each database instance and have effectively overprovisioned the database server. The maximum percentage of CPU that a single database instance can consume at any point in time is its own limit divided by the sum of the limits for all active database instances.

In this example, if all four database instances are active and CPU bound, then one instance will only be able to consume 25 percent of the CPU, since all instances are allocated three CPU cores and all instances are requesting the maximum amount of CPU. The percentage is determined by adding up the cores requested by a single instance (3) and then dividing by the number of all the requested cores [3/(3 + 3 + 3 + 3) = 3/12 = ¼], as a percentage (25 percent). If only two instances are active and CPU bound, then a third instance (not instance caged) will be able to consume 50 percent of the CPU ((3 + 3)/12 = 6/12 = 1/2 = 50%). Overprovisioning, or stacking and packing as many database instances as possible onto a single database server, will definitely reduce the idle CPU and wasted CPU, but instance caging will ensure that in the worse case possible, an instance can still acquire a predictable amount of CPU resources.

FIGURE 9-2. Less critical database instances consolidated onto a single database server

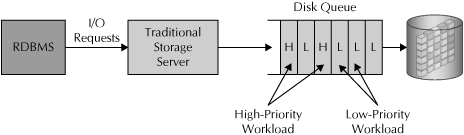

I/O Resource Manager (IORM)

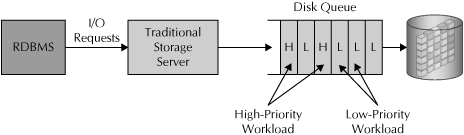

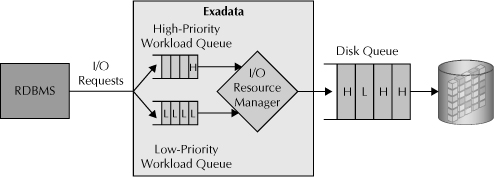

Many traditional storage devices are unable to effectively isolate I/O requests and therefore distribute I/O loads between high-priority and low-priority workloads. In Figure 9-3, a low-priority workload can overwhelm a storage device that is also supporting a high-priority workload. The scheduling algorithms within the storage array are hard to influence unless they can somehow be integrated with the application that is making the initial I/O request. Although the storage scheduling algorithm may be able to reorder requests on the disk queue, this is not the best solution for I/O load isolation.

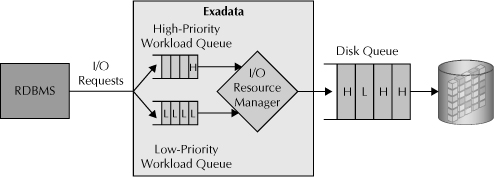

The Oracle Exadata Database Machine has a more advanced form of I/O scheduling, called the I/O Resource Manager or IORM (see Figure 9-4). This advanced scheduling, also described in Chapter 3, is possible because the storage layer understands that it is servicing one or more Oracle databases. This facility then allows a request to be queued if a higher-priority request needs to be serviced. This stops low-priority I/O requests from flooding the disk queue when both high- and low-priority requests are active. IORM can prioritize I/O requests from multiple consumer groups with multiple priority queues.

FIGURE 9-3. Traditional storage I/O scheduling

FIGURE 9-4. Database Machine I/O scheduling

You may be wondering if such disk scheduling intervention is expensive in terms of performance. Optimizations have been made to improve performance. If there is only one active workload or if the disks are not heavily utilized, then IORM does not manage the workload. IORM and the Oracle Database also ensure that Oracle background-process I/Os are scheduled appropriately. For instance, redo writes and control file accesses are always prioritized above other types of Oracle database I/Os.

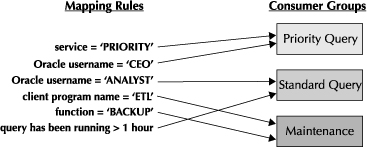

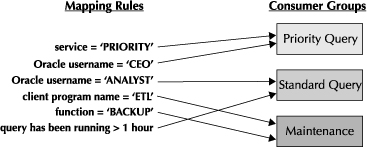

The first step in setting up IORM is to group user sessions that have similar performance objectives into a consumer group, as illustrated in Figure 9-5. A resource plan is then created that defines how I/O requests should be prioritized. Then, session attribute rules are created that dynamically map sessions to consumer groups. Consumer groups and resource plans are created using the Database Resource Manager.

FIGURE 9-5. IORM mapping

A resource plan is added that specifies how CPU and I/O resources are allocated among consumer groups for a specific database. We have already outlined how CPU can be limited using instance caging and the Database Resource Manager. Each consumer group in a resource plan contains a resource allocation directive, which consists of a percentage and a level (up to 8). Consumer groups at level 2 get resources that were available after all groups at level 1 received allocations up to their designated amounts. A level 3 consumer group would get resources that were not allocated or consumed at level 1 or level 2. This prioritization process continues until level 8. Resource plans can use percentages, priorities, or a combination of the two.

A resource plan can allocate I/O resources between different consumer groups in an individual database with an intradatabase plan, which was described in Chapter 3.

You might want to physically dedicate both CPU and I/O resources to a specific database instance or RAC clustered instances. This allocation can be achieved by using a single instance for a single database server and also allocating specific Oracle Exadata Database Machine storage server cells for a particular database ASM diskgroup. Each database would leverage its own ASM diskgroups and the diskgroups would never be on the same physical storage cells. Although an interesting physical isolation technique, it can lead to wasted resources and an inability for other databases to leverage resources like I/O that another database is not even using. In addition, each database would lose the ability to use the full bandwidth delivered by all Storage Server cells. A more efficient alternative method is to consider interdatabase I/O Resource Management.

Interdatabase I/O Resource Management allows the management of multiple databases that will be accessing the same Oracle Exadata Database Machine storage server cell. When you consolidate many database instances to a single database server or clustered database servers, you will have multiple databases that are accessing the same physical storage server cell. This means that the same principle of managing and prioritizing I/O requests to the Oracle Exadata Database Machine storage cells from different databases is needed to ensure that the I/O subsystem can be as efficient as possible without slowing down due to contention from conflicting I/O.

Interdatabase I/O management is configured using an interdatabase plan. This plan allocates how resources are shared among the database instances that are sharing cells. Only one interdatabase plan can be active on any server storage cell at any one time and it may only contain I/O resource directives. If an interdatabase plan is not specified, then all databases receive the same equal allocation of I/O from the storage cells.

If you are consolidating three separate database instances onto a single database server and you have already configured instance caging to lock down the CPU resources, you then will create an interdatabase plan to perform similar prioritization and isolation for the storage server cells. The Oracle Database Resource Manager has been enhanced to understand resources at the storage cell level and where user sessions can be mapped to particular consumer groups across a number of cells. Alternatively, interdatabase plans can be directed to individual database storage cells. Let’s examine these two methods and how they can be used for consolidation.

You can allocate I/O to specific database instances by leveraging a strict percentage share. For instance, if your database applications are OrderEntry, Reporting, and Marketing, each using its own database, you might want to allocate I/O resources for the cells containing those databases as 65 percent I/O to OrderEntry, 15 percent I/O to Reporting, and 10 percent to Marketing. The remaining 10 percent can be allocated as a pool to other database instances collectively. The percentage assigned is only used as a maximum if I/O resources are oversubscribed. If other groups are not using their allocation, then it will be redistributed to the other consumer groups in the plan-specified ratios. This example is shown here:

BEGIN

DBMS_RESOURCE_MANAGER.CREATE_PENDING_AREA();

DBMS_RESOURCE_MANAGER.CREATE_PLAN('CONSOLIDATION','Consolidation

plan for our three applications');

DBMS_RESOURCE_MANAGER.CREATE_CONSUMER_GROUP('ORDERENTRY',

'OrderEntry Database App');

DBMS_RESOURCE_MANAGER.CREATE_CONSUMER_GROUP('REPORTING','Reporting

Database App');

DBMS_RESOURCE_MANAGER.CREATE_CONSUMER_GROUP('MARKETING','Marketing

Database App');

DBMS_RESOURCE_MANAGER.CREATE_PLAN_

DIRECTIVE('CONSOLIDATION','ORDERENTRY','OrderEntry allocation',

MGMT_P1=>65);

BMS_RESOURCE_MANAGER.CREATE_PLAN_DIRECTIVE('CONSOLIDATION',

'REPORTING','Reporting allocation',MGMT_P1=>15);

DBMS_RESOURCE_MANAGER.CREATE_PLAN_DIRECTIVE('CONSOLIDATION',

'MARKETING','Marketing allocation',MGMT_P1=>10);

DBMS_RESOURCE_MANAGER.CREATE_PLAN_DIRECTIVE('CONSOLIDATION',

'OTHERS','Others allocation',MGMT_P1=>10);

DBMS_RESOURCE_MANAGER.SUBMIT_PENDING_AREA();

END;

/

In this example, we are calling the DBMS_RESOURCE_MANAGER PL/SQL package to create the CONSOLIDATION plan. Then we create consumer groups within that plan and then assign each a percentage of the I/O. The resource plan is now created but not active. In order to activate this plan, you will have to issue the following command:

ALTER SYSTEM SET RESOURCE_MANAGEMENT_PLAN='CONSOLIDATION';

Where you want to consolidate two significantly different I/O-intensive database instances onto the same physical storage cell, you can leverage an interdatabase plan on the Oracle Exadata Database Machine storage cells directly. In this example, you can consider an OLTP database instance that is highly sensitive to the latency of physical I/O requests. You will also consider a data warehousing database instance called DW that issues larger I/O requests but is more sensitive to throughput. In order to consolidate these two very different databases onto the same physical Oracle Exadata Database Machine storage cell, you must be able to prevent the DW from slowing down the OLTP database, yet allow the DW to open up on the storage cells when the OLTP database is no longer performing physical I/Os (because it is being satisfied from main memory or from the secondary flash memory area). Directly activating an interdatabase plan on the storage cells themselves will provide a solution to this common historic problem.

CellCLI> ALTER IORMPLAN -

dbPlan=( -

(name=OLTP,level=1,allocation=80), -

(name=DW,level=2,allocation=80), -

(name=other,level=3,allocation=100))

In this example, the OLTP database gets priority and can access 80 percent of the I/O resources. The DW can access 20 percent of the I/O resources when the OLTP database is actively running and using up its maximum allocation; otherwise, it can use 80 percent of OLTP’s unused allocation. Other databases can get any unused I/O at 100 percent, respectively.

Quality of Service Management

To better ensure that SLAs are met in real time and assure predictable performance for consolidated workloads, Oracle recommends quality of service (QoS) management. Oracle QoS is a policy-driven architecture that includes instrumentation of the entire Oracle stack, end-to-end work classification, real-time generation of correlated performance and resource wait data, identification of bottlenecks and their causes, incremental recommendations and impact projections, responses with just-in-time resource allocations, and protection of existing workloads from memory starvation.

Enterprise Manager simplifies QoS management by providing wizards and dashboards that support the policy-driven architecture. Policies are defined and enabled through a policy definition wizard. Bottlenecks and alerts are monitored through an applications performance dashboard. Reallocation of resources in solving problems takes into account expert system recommendations. Historical views of applications metrics are maintained for audit purposes. QoS is described in more detail in Chapter 8.

Consolidation Design

Now that you have all the various pieces that are required to consolidate database instances onto the Oracle Exadata Database Machine, you can consider an end-to-end consolidation design.

RAC Considerations

Oracle RAC provides tremendous consolidation benefits to database instances and the applications that connect to them. The ability to provide out-of-the-box high availability to applications, coupled with the benefit to scale inside a node (scale-up) or scale across the RAC cluster (scale-out) or both is unparalleled for everyday applications. When you are migrating multiple applications to the Oracle Exadata Database Machine, one of the key decisions you will have to make involves how you isolate instances and clusters. You could use one large RAC cluster for all consolidated applications, which would reduce management overhead and give you the option of allocation of resources through services, described in the next section. You could also choose to migrate each database to its own RAC cluster, which in many ways is the easier path, but which loses some flexibility in allocating resources and a smaller ration of availability with fewer nodes.

Along this same line, if you are buying a Full Rack configuration, you could choose to go with 2 heavy nodes in the X2-8 or 8 smaller nodes in the X2-2. The heavy nodes also come with more processors and more memory, which make them a more preferable target for OLTP workloads.

Finally, you could decide to not use RAC at all, but simply migrate stand-alone instances. This approach may seem like easiest approach, but you will sacrifice both availability and I/O bandwidth, since each individual instance will not benefit from multiple nodes.

There may be situations where scalability across multiple nodes is not seen as necessary. RAC One Node can be deployed on individual nodes as an alternative and provides enhanced application upgrade, maintenance, and mobility, as well as extremely fast failover for a database server node and other applications-related critical failure modes.

Workload-Based Consolidation

In workload-based consolidation of database instances, you attempt to group together applications that have similar resource profiles. For instance, applications that use roughly the same order of magnitude of I/O and CPU (say, 0 to 150 small IOPs at two cores each) can be consolidated together onto a similar number of RAC-enabled database server nodes.

Traditionally, OLTP was separated physically from data warehousing platforms, but by using IORM, this scenario might be accommodated despite such applications sometimes having wildly different sensitivity to I/O latency and throughput. Since OLTP applications are typically highly sensitive to CPU availability, you might want to use instance caging to ensure that OLTP applications always get priority for the CPU and I/O that they need. You will probably want to group together database instances onto database server nodes based on their similar workloads, priorities, and SLA levels of availability. That way, administration, patching, and security can be adjusted for higher priority applications that can run on physically separate database servers.

For extremely risk-adverse and security-sensitive environments, corporate policies may dictate that database instances must be physically separate and the same for the database storage cells that perform the I/O. This is still possible using the Oracle Exadata Database Machine, though it is usually not the optimal approach, since segregating these resources eliminates the possibility of the increased bandwidth and CPU resources being shared between different instances, as well as reducing availability options.

Time Zone-Based Consolidation

An important and often overlooked opportunity for consolidation is when multiple similar local databases service different time zones and locations. Consolidating database instances separately servicing different time zones onto common database servers can be enabled through Oracle’s granular security, control, and monitoring. Resource groups in Database Resource Manager can take into account the time of day, switching consumers from one group to another or, in cooperation with Oracle Scheduler jobs, switching resource plans based on times.

Another important consideration involves providing adequate time windows for patching. Although almost all maintenance operations for the Oracle Exadata Database Machine can be implemented through a process of rolling upgrades, there are always going to be some things that can potentially require some downtime or require running in a performance-degraded mode for an extended period. Of course, Oracle Data Guard or Oracle GoldenGate can be deployed to always ensure that a secondary Oracle Exadata Database Machine is available. If you cannot tolerate any performance degradation during the online day or any downtime, you might want to patch during a natural outage window (i.e., during the night when fewer users are online as opposed to during the active day).

By consolidating applications based on their end-user time zone, patching and maintenance can be scheduled during times that are not so busy and security can be enhanced significantly.

Overprovisioning Consolidation

You might decide to group lower-priority database instances and their respective application connections onto specific database servers. By overstuffing database instances like test, development, reporting, quality assurance, and installation onto specific database servers, you can maximize Oracle Exadata Database Machine utilization rates and reduce costs. In cases where the database instances can still run satisfactorily within a minimum CPU quantity, both instance caging and IORM can help ensure that worst-case scenario outcomes, such as all database instances spiking their resources at the same time, can still be managed with predictable performance.

Tight SLA Provisioning

If you decide to not overprovision database servers, you might strictly allocate CPUs through instance caging on a 1:1 basis until the entire database server CPUs are allocated to instances exclusively. IORM can be used to define and enforce I/O priority scheduling or percentage sharing. Such a consolidation plan ensures that the applications will always have exclusive access to the CPU and I/O priorities that they need to optimally run, but the risk is that some resources will go to waste if they are not actively being used. Many take this option, as their database instances and their applications are particularly sensitive to response times and delivering to the SLA is critically important.

Testing

This chapter has outlined some of the strategies you can use when using the Oracle Exadata Database Machine as a platform for consolidation. These are approaches which will give you some good information on which to base your plan. But nothing substitutes for testing how multiple applications will interact on the target platform. You can perform testing pro-actively by using a tool like Real Application Testing, which can capture actual workloads on one machine and replay then on another. You could use other load testing tools, or run systems in parallel during initial deployment. Any of these tactics will give you a better chance of success than simply trying a bunch of applications together and hoping it works—especially since the competition for resources between potentially conflicting applications can be extraordinarily complex in the real world.

For many people, the best way to implement consolidation is to start with the largest or most crucial application running alone on a Database Machine, and then migrating other applications to the platform, using tools like IORM and instance caging to allocate resources where appropriate. Although this approach may not limit your ability to properly predict the extent of consolidation on a Database Machine, it has the advantage of a greater likelihood of ongoing success and happier users.

Summary

This chapter provided an introduction to some of the unique aspects of consolidation projects building upon techniques for deploying single database solutions described elsewhere in this book. As you have seen, consolidation can take multiple forms on the Oracle Exadata Database Machine. Projected cost savings by reducing the number of servers and storage platforms and thereby reducing overall power usage, floor space required, or management personnel are likely driving you in this direction.

Given the options that you have in deploying and managing such an environment that were described here, you have a lot of flexibility and can be extremely successful. Careful planning is essential. Evaluate consolidation opportunities based on goals that you establish, such as achieving better processor or storage utilization, retirement of oldest platforms first, and/or improving performance of databases where faster delivery of data will have meaningful business impact. Meet or exceed SLAs by clearly mapping how you will manage, maintain, secure, and deliver solutions that are also highly available.

As with any project of this type, the best advice is to plan for eventual full deployment, but deploy incrementally. This will help you better mitigate risk in such projects. Of course, business users of individual applications and data warehouses may change their usage patterns when they discover the power of this platform. Addressing these changing demands in an incremental fashion is always the best road to success.

Consolidation can provide a significant return on investment. Besides providing cost savings, improved speed of deployment and simplified management can help your organization react to changes in business conditions and achieve top line business benefits sooner. However, some legacy platforms will be more difficult to include in such a consolidation strategy. As you plan your initiative, you should consider all of these aspects as you prioritize the platforms to include in your initial and longer term consolidation efforts.

Tuning the application(s) Attempting to reduce CPU consumption by tuning Oracle SQL access paths through analysis of data structures and usage patterns by the application(s). This process involves investigation of SQL statements that may be causing excessive CPU usage, as well as selective denormalization, partitioning, compression, index reviews, and other schema adjustments that are typically used to improve access paths. Once these alterations have been made, the execution plan is frozen using a SQL Plan Baseline or a SQL Profile.

Tuning the application(s) Attempting to reduce CPU consumption by tuning Oracle SQL access paths through analysis of data structures and usage patterns by the application(s). This process involves investigation of SQL statements that may be causing excessive CPU usage, as well as selective denormalization, partitioning, compression, index reviews, and other schema adjustments that are typically used to improve access paths. Once these alterations have been made, the execution plan is frozen using a SQL Plan Baseline or a SQL Profile.