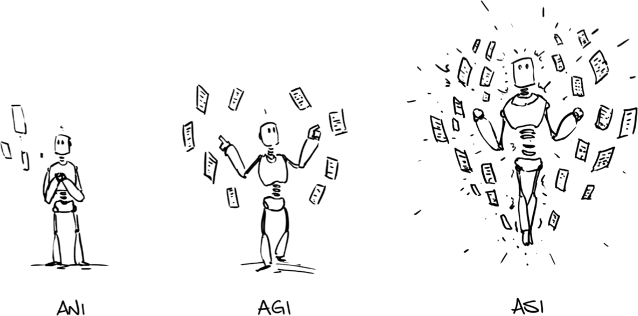

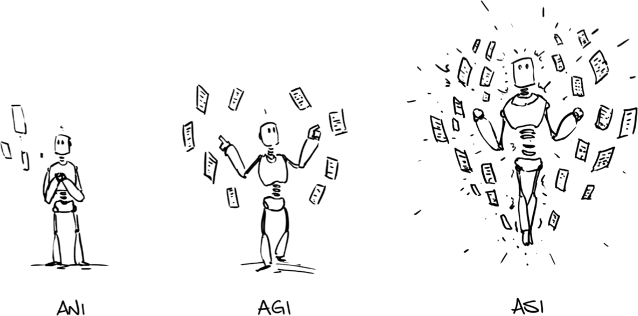

We’ve seen already that AI comes with a raft of terminology for the methods it uses to learn. More broadly, the various levels of AI fall into three common theoretical tiers with reference to human intelligence:

1. Artificial narrow intelligence (ANI)

AI is narrow in its abilities and is limited to learning in specific areas, but it can still perform those limited abilities incredibly well.

2. Artificial general intelligence (AGI)

AI can draw upon its varied learnings and effectively reach human-level intelligence.

3. Artificial super intelligence (ASI)

AI goes beyond general intelligence, and thus beyond human intelligence, and exponentially improves on its own into a form that far surpasses the intellect of our species.

These theoretical AI tiers are anthropomorphised here with a robot illustration. Let’s examine each in more detail.

Figure 4: Theoretical tiers of artificial intelligence

The most basic form, ANI, is also known as weak AI, but don’t be fooled – ANI has been and will continue to be used for some mind-melting, groundbreaking things. It can learn very narrowly defined tasks, up to and exceeding the capabilities of humans, usually with a view to making big steps in a single subset of abilities. ANI covers everything we’ve achieved in AI so far – mind-controlled smart wheelchairs; driverless vehicles; healthcare and triage, utilising data of the past to recognise potential diagnoses; prediction machines; extracting intention and context from natural language; learning to speak a language like chatbots and even translating between languages; machine vision detecting people and remembering them; and excelling at games such as chess or Jeopardy! or Go.

The reason these are all narrow in relation to humans is that even AlphaGo, with the incredible abilities it used to topple Lee Sedol in that groundbreaking five-game match, would have no idea how to read you a children’s book. And that’s because it was made to excel in a single subset of abilities, in this case the patterns and strategies in the game of Go. This is the tier we’re currently in, and all AI designs thus far fall under ANI. As our implementations improve, diversify, connect and converge, we will take critical steps towards the next tier, artificial general intelligence.

Also known as strong AI, this is human-level AI. For humans to achieve a general level of intelligence, they must be able to draw effectively on numerous varied abilities. In AGI this will be done to such a great level that programs will successfully operate on par with the human brain and intellect and, in effect, exhibit the full range of human cognitive abilities. This AI will show intellect, logic, intuition, adaptability, creativity, will beat you in Go while reading you a children’s book, and may even display emotions and empathy.

Empathy was what threw off the robots known as Replicants in Blade Runner. In one great scene an interrogator closely watches the response of a Replicant being questioned about a hypothetical situation that for humans would elicit an emotional and empathetic response. This is a take on the Turing test. The physical appearance of the humanoid is so lifelike to the human eye it is indistinguishable from a human, and its reasoning and answers are enough to trick most humans, hence the need for deep questioning. True AGI, however, will undoubtedly pass Turing test variations, no matter what level of questioning you throw at it. This tier is unlikely to last long, as an AGI would very likely be capable of improving upon itself quickly – moving towards the next tier, the ultimate tier, the tier that shatters all tiers ever in the history of tiers. Artificial super intelligence.

This is it. We’re now literally talking about the invention to rule all human inventions. Ever. Period. To reach this tier we will have passed the hypothetical point in time where AGI has evolved an ability to increase its own intelligence exponentially, far beyond human intelligence, including the brightest minds of our species. We call this the technological singularity. The point of no return. In other words, put on a few extra sets of those undies you’re wearing, because if things don’t get really, really good for us at this point, they’ll get really, really bad. One way or another, if ASI is made possible, the changes to humanity and civilisation as we know them will be so extreme it’s difficult to fathom. What we do know is that it will be uncontrollable and irreversible. We will have created a new alpha ‘species’.

We will no longer be able to simply pull the plug, find the kill switch or revert back to the information dark ages by taking out all satellites, servers and computing systems, the backbone of AI. None of this is a possibility, unless the ASI lets us – remember, by this stage it is not only smarter than our now primitive selves but it also has control of all connected technology – defence systems, vehicles, weapons, computers, rocket control systems, robots. We’re not going to have much left in our arsenal.

Humanity as we know it will completely transform in one way or another. We will have built god-like entities with intelligence, reasoning and problem-solving capabilities we can only dream of. If the values and goals of such an ASI are not aligned with our own, we will be merely an anthill in the way of whatever it aims to achieve. But if we continue with these important conversations and build in alignment between AI and humanity, it may take us to seemingly infinite new possibilities, helping us to really understand the universe and even find ways to traverse it, to live in sustainable harmony with our planet and our solar system, while also being set up with an ability to escape the confines of our earth with ease. If a utopian world is what we want, ASI could give us the blueprints to start creating. If quality immortality full of adventure is what we desire, ASI could show us such a possibility. If reaching the stars is what we seek, ASI could design the transportation or teleportation methods necessary. We would still be limited by resources, but we will understand how to harness more of what we have, while potentially requiring much less to operate – and so humanity could become more efficient. We may even merge with technology to help achieve this, becoming cyborg and bionic.

Of course, many problems seem to outstrip any theorised positives of this level of AI. Humans would likely be much more void of purpose if everything could be done and created by ASI, and resting on the hope that it would choose to help us is foolish. Humanity must actively shape the possibilities to come, whether we want to prevent elements of such technology or guide its advancement towards those beneficial for our existence.

For now, we are firmly placed in the weak ANI tier, moving rapidly towards strong AGI. Having said that, it’s difficult to know exactly where that point is, and truly definitive tests for AGI would need to be carefully devised and well-rounded to even be certain we’ve reached it. We could find an AGI that passes the Turing test with flying colours when interviewed by one human subject, while failing dismally with another. That’s because human interests, intelligence and capabilities are astoundingly vast and varied. And the truth is, AI can be built to simulate human emotions or intellect well enough to make people believe it might be conscious. That doesn’t mean it is.

This raises another profound philosophical dilemma. How do you know with certainty that any person other than yourself is conscious? You know you are living, that you experience the world around you, that you are human and that you are self-aware. How can you be certain anyone else you know is too and not just seemingly so? You can know it to be true, but you can’t yet prove it.

This kind of dilemma, only one where we’ll be more sceptical about the outcome, will start to occur with AI before we hit true human-level AGI. I predict we’ll see an artificial consciousness paradox, in which AI will be able to sufficiently dupe humans into believing, thinking and feeling that the AI experiences subjectively and lives with consciousness. It has the potential to effectively emulate all manner of feelings: fear, sadness, excitement, empathy, even love . . . without actually consciously experiencing them. But it will definitely be difficult to tell, and then we’ll have a similar dilemma to the one described above for humans. You can know these machines don’t actually feel, but it may be very difficult to prove it. So then what happens? Do we give these systems rights? Will they feel the need to have rights? We may find ourselves searching for tests like the one from the Blade Runner Replicant interrogation, searching for the presence of those small human nuances when put through emotional and empathetic reasoning – only AI doesn’t necessarily come in humanoid form, so this will definitely call for an advanced form of the Turing test.

One thing we do know is that AI is here to stay, no matter what forms it takes on in the future. A school of thought exists that we’re moving into an age where we ourselves will merge with AI, and to some extent even connect our minds to the internet. Either way, we’re consistently outsourcing more and more of our own cognitive processes to technology. If a greater level of intelligence were available to us on demand, how might that change your life and what would you go on to do with it?

An important factor is that even ANI designs may help us lead longer and better lives. AI that tracks a range of our personal data, including the kinds of health tech that are already on the rise, can monitor all the stuff that’s going on with us – depending on how much data we’re willing to give it to perform this duty. I’ve seen first-hand how AI can merge with health tech, DNA profiling and social media to provide powerful insights into our own health and our probability of ending up with various diseases and conditions.

I met one of the young co-founders of a company who used a form of virtual twinning technology (we’ll explore this further in Part V) to have a curated diet created for him to manage his AI-predicted predisposition to diabetes. He has not yet been diagnosed with this condition, and hopes he never will now he’s been given this powerful data-driven information. Insights like these will improve as more people enter such platforms and provide a greater range of base data for comparison.

Now this may seem quite confronting and a little unnerving in terms of data privacy. Many of us are not instantly keen to give away that amount of personal information about our lives. But what if the outcome could guarantee you an extra twenty quality years of life? Now how protective do you feel about that data?

This is all taking steps down the path towards a sci-fi–style period known as the posthuman era, but to get there we’re steadily moving into a transitional period, the transhuman era. This is the idea that we increasingly integrate our biology with technology, until we become one over time. These philosophical eras lead us towards redefining what it means to be human and charting our own next evolutionary steps. If we move so significantly past our current understanding and form of human – say, if we were to somehow completely digitise or quantise ourselves into immortal beings, transitioning our consciousness entirely outside of our own biology – we will by that point become posthumans. This is largely imaginative, philosophical, and very sci-fi as far as abstract concepts go. But given the advancements we’ve already witnessed, combined with exponential growth in technology and AI . . . well, nothing is impossible. I’m not telling you how to feel about it, I’m merely mentioning these as topics that could very well be relevant to our future humanity. I’ll leave it up to you to decide how you feel.

The truth is, AI is one of the many building blocks of technology. So will this new super force be one that leads to destruction and annihilation, or one that helps humanity thrive into the future, finding ways to fix our world and maybe even allowing us to reach the stars?

It’s a bold leap of the imagination into the future, trying to predict what this type of technology could be capable of. The ability of intelligent systems to assist in our understanding of where the future is headed is one of the great benefits AI provides. On my 2017 trip to Tibet, filming Living on the Roof of the World for Discovery Channel, I saw one astounding use of predictive analytics (using lots of data with the likes of statistics and AI to make predictions about the future) in research on the Tibetan glaciers. I trekked up to the glaciers with a team of scientists, our film crew, local Sherpas and guides. After a rough four hours, we finally reached the base of this one glacial site and, with chainsaws and tools, the scientists took samples. This has been done for a number of years at a range of sites, resulting in a massive storage bank of glacial ice samples, labelled with the various locations from which they were acquired. These are used to analyse how old the ice is at each site and determine whether the glaciers are increasing, stable or declining.

The way they calculate the age of the ice is to carefully drill into each sample, then extract and radiocarbon date the likes of plant matter held within. Why? Well, the older the ice on the top of the glacier, the more frequently ice has been melted off the top due to such factors as climate change. I even held a sample of ice core that had been dated at roughly 20,000 years old. Over time, these scientists have gathered masses of data on each of the Tibetan glacier sites. This large-scale project is very important because more than 46,000 glaciers on the Tibetan Plateau supply nearly 2 billion people with water. And they give birth to rivers that flow through China, India, Pakistan, Bangladesh, Burma, Laos, Thailand, Vietnam and Cambodia. These include such important waterways as the Yangtze, Mekong, Salween, Indus, Brahmaputra and Yellow rivers.

Patterns can be found in the data, and through predictive analysis, computer models can be created to make future projections and better understand the impact of the glacial retreat. If humanity harnesses its own intelligence alongside that of our artificial computing counterparts, we may mitigate great risk and even conflict. The analysis so far has found that the glaciers have been in major decline since the 1950s and that the ongoing effects could be catastrophic. Over the past 50 years, the temperature in this region has increased by about 1.3 degrees Celsius, three times the global average, and if this trend continues, it is believed that 40 per cent of the plateau’s glaciers could disappear by 2050, meaning these countries need to seriously consider alternative water sources before then.

In the past, wars have been fought over land and energy, but the next may happen over this dwindling resource as we see tensions rise, particularly between China and India. But intelligent analytics and predictions could possibly help find new solutions as well. Armed with this incredibly valuable knowledge, we could perhaps bring about much needed change to help alleviate the rate of decline, maybe even finding the major specific sources of temperature increase – one of which here has been found to be black carbon, an atmospheric pollutant. These very small particles are formed from the combustion of fossil fuels, biofuel and biomass, then slowly fall from the air and settle on the glacial ice. This soot, as it’s also known, absorbs solar radiation and contributes to heating and melting of the glaciers. The more we understand our impact on our planet, the faster we can act to prevent further disastrous repercussions.

Where AI may play a greater role as we move into the future is in looking further than individual examples and bringing them together more holistically to examine complex sets of flow-on effects from single decisions. It may help us gain a glimpse of the future through data, and assist in solving the problems it identifies. The fear with AI comes from the idea that as it gets more intelligent it will also become more cognitive and self-aware. My take on the advancement of AI is that there are many different types all advancing at different rates, and when compared to our own brain, these types will not hit ASI level or even AGI level at the same time.

So how do common sense and consciousness fare in the artificial domains? Well, these have not advanced as rapidly because, for many creators, their return benefit is simply not as easy to see. Humans can find immediate use cases for AI when it is still dependent upon our vision, our data and our tasks. We like knowing that we can contain such a technology and retain control over it. But in the wrong hands, the technology could be used by humans to assert control over populations of humans, and hence it becomes a new arms race. This is why we must think of ourselves as a single species and learn to thrive together. It has already happened in part. I’ve noticed the term ‘human’ used much more frequently in recent years, and I believe this is due to the perception of a growing, external threat – automation and AI. At the same time, we have also shown many signs of becoming more separatist across the world. Let’s not forget our humanity, our shared experience as a single species inhabiting this one planet we all call home.

Instead of thinking of AI as a threat, if we see it as a powerful tool, perhaps even something of a human-made prophet fuelled by data, then we could use it to plan long-term ways for humanity to flourish. It could assist us in unravelling ways to decode our own immunity and become more resistant to disease, an endeavour made ever more pressing given the devastation of the 2020 COVID-19 pandemic. If this were the case, and humans lived longer, healthier lives, AI could also be used to project the impact longer-living humans will have on the earth. Will short-term life extension lead to long-term disaster for our species, or will there be further flow-on benefits? Instead of fear, let’s continue cultivating hope and a desire to understand and act towards a better world.

When I was young I remember hearing about how Earth had a hole growing in the ozone layer, and that without the ozone layer we were sitting ducks for an unprotected onslaught of the sun’s relentless radiation. And we humans were causing that hole with something called CFCs, or chlorofluorocarbons, chemicals used in aerosol sprays, air-conditioners and refrigerators. The Montreal Protocol, an international treaty designed to protect our ozone layer, came about in 1987 to phase out the production and use of many of the responsible substances, starting with CFCs. As a result of this international agreement, and its widespread adoption and implementation, the hole in the ozone layer over Antarctica has been slowly recovering. These agreements take great vision, clarity and international collaboration to begin in the first place, and then to be upheld over the many years of implementation. The inspiring thing is, as this example shows, humanity is capable of it. It’s not the only time important international agreements with global reach have occurred, and I hope we’ll see many more – for the benefit of our one planet and the life it harbours.

If we do indeed reach the widely acknowledged idea of technological singularity, where intelligent systems move beyond humans in every way possible, including consciousness, then thinking we can retain control over it is ridiculous. Imagine giving chimpanzees, one of our species’ closest living relatives, a chance to create a cage that a group of humans cannot break out of. It’s inconceivable that they could succeed, because our intelligence and ability to solve problems will go well beyond any ideas and implementations of a cage chimps could come up with. Similarly, there is no chance we could ever cage or control an AI that has surpassed our own human intelligence. Instead of attempting to cage it, if we roll back development ahead of time in some areas of potential AGI intelligence – such as self-awareness, self-preservation and consciousness of existence – then what we may be left with is something amazing. We will need to have built in positive human value systems, so that the goals of AI are aligned with grand humanitarian visions of harmony between life and Earth and beyond. We may then have a humanity-preserving super-powerful solution-creating machine!

Think about it: the ability of computers to perform calculations combining complex mathematics, large numbers, calculus, arithmetic and statistics already goes well beyond human capability, so in essence some elements of AI have already well surpassed humans. Our computers didn’t suddenly get up and try to wipe out humans because they calculated that we are inferior mathematicians. In fact, they have no comprehension or understanding of mathematics, because cognitive abilities have not been built into them. As a result, humans can harness our computers to run calculations we cannot do ourselves. They are a tool we use towards achieving our dreams.

In a similar way, we could build amazing problem-solving AI that could examine many possible solutions and innovations humans could not otherwise conceive of, and even predict the flow-on positives and negatives of each solution. If this were to be made in a similar way that we can ask a machine to master a game like Go without it having any real comprehension of what it all means or a true understanding of its own existence and the world around it, then this will undoubtedly become a useful invention for our species. Since realising the importance of such technology I’ve been set on a path to contribute to steering the directions we take with AI and helping others to understand and shape its impacts.

The funny thing is, we haven’t yet figured out exactly what consciousness is, but it’s clearly significantly more complicated than inputs, processing, calculations and outputs. We are special types of beings. Evolved to adapt, to harness the elements, to create tools, to communicate, to collaborate. If we cannot figure out what consciousness is, how could we possibly create an artificial version of it? What I find is, the more we advance our technologies, the more we understand ourselves – through new tools to measure and analyse, and through general reflection upon each advancement. Ultimately, we’re always learning more about what it means to be human.

We do, however, find it more difficult to imagine the impact these agents could have if freed of our shackles to create their own goals and strategies, even innovating through self-critique and criticism. This is the idea of transcendence. The hope and aim for AI is that we instil good morals, ethics and values before ever getting to a point where we could lose control. This is something we must never stop planning and preparing for, making sure people have a say on what gets coded into these machines as an underlying purpose system – an approach acknowledging Asimov’s laws, but perhaps more quantifiable.

In contrast to the predictions of doom and gloom, we could utilise AI as a tool to help humanity thrive into the future. I believe we don’t need to worry too much about getting AI to even emulate consciousness, but instead harness the technology for prediction, automation, efficiency, sustainability, disaster recovery and even prevention, problem-solving and solution-creating capabilities for the benefit of humanity. If we continue to advance AI, it needs to work within the value system we design. This may mean designing AI without striving to achieve artificial consciousness and without building in the capacity, desire or need for AI to go against its in-built rules and boundaries. We should also look to prevent AI making decisions and acting on taking human life without any accountability. These are starting points that will ensure we don’t lose control of these creations.

If AI can augment us beyond our capabilities, this might be the sweet spot. In combination with bionics, it may enhance our inherent unexplored abilities. It may gift us ways of reaching the stars and exploring the far reaches of our universe. And given our current level of outsourcing of our own cognitive abilities to the likes of mildly intelligent smartphone apps and home assistants, our ability to adapt to these big changes could mean AI increasingly becomes a part of us.

A cognitive extension.

We may become one and the same.

The big thing to realise is that creativity, innovation and human experience will flourish in these times of AI and automation. So in this age of rising automation, we should remember the wonderful things about being human. Our conscious experience that breathes meaning and appreciation into our world. Without it, this incredible planet, the wondrous night sky full of stars and life itself, wouldn’t be appreciated at all. These powerful technological tools should only be used to improve our humanity, and that is what we must strive towards. Your brain and body are an interconnected superhighway, your mind a natural-built supercomputer, your life a near completely impossible reality, your ability to love a truly magical gift and your consciousness a thing so unique we don’t know of anything like it in the entire universe. And that, my friend, is a beautiful thing.

Transcendence may create the next great evolution.

Will AI transcend its boundaries of current inability,

or will we give it the ability to comprehend?

Will we humans transcend our biological limitations

and finite lifespans before AI takes over?

Will we instil purpose, morals, ethics, humanity?

Or will we, perhaps, become one,

and transcend together?