It’s 2007 and I have finally made it to working on smart wheelchairs for my undergraduate thesis and my PhD. I work on two different robot wheelchairs: the first, named SAM (for Semi-Autonomous Machine), for half a year, and the second, more advanced one, TIM (for Thought-controlled Intelligent Machine), for about five years. SAM gives me my first insights into the many challenges that come with taking control of a wheelchair through a computer. The chair is connected to the big tube computer monitor on my work desk in the Centre for Health Technologies at UTS. I’m trialling design after design in my code to give my programs the ability to drive the wheel motors and take control of SAM. But this proves much more difficult than anticipated. Each time I attempt a new test, with my hand on the emergency stop button, nothing happens. So I grab some lunch, throw the code into debug mode so I can find out what’s going wrong and where, and munch into my sandwich as I lean back in my seat.

Little do I know that debug mode will actually get rid of the very thing preventing my wheelchair from running: interrupts. Basically, my code isn’t given enough time to get all the way through its calculations and send the result to the motors before it is interrupted and starts again. Now, with this barrier removed, and me leaning back in my chair completely unaware of it, SAM suddenly takes off, pulling the monitor off the table. I lunge for the screen, catching it before it smashes on the ground, and immediately look up towards the runaway chair . . .

BAM!

Straight into a wall, smashing a hole in it and bending both footrests. Agh! I’m going to need to get those repaired, I think. On the plus side, it works! Time to give it the ability to see, so that it can avoid these types of accidents.

The more I make my way through the world of technology, coming up with ideas and designs, the more I realise the undeniable influence nature has had on many of today’s technologies. From the sensors that allow robots to perceive their environments (in other words, to convert real-world information into digital representations), to their mechanical physics and movements, to the intelligent algorithms that drive their abilities, much has been gained from nature. Having had millions if not billions of years to get things right, nature provides a wealth of inspiration for many facets of technological innovation.

Mathematical principles similar to those used in robotics are extremely useful, and in some cases necessary, for a more complete understanding of the human body and of how we interact with our environment and each other. They can also help us learn from nature and keep improving our own technologies. Our sensory technology is modelled on things in nature: our own senses, as well as the unique ways other creatures in the animal kingdom perceive their environments. But instead of feeding the data to a brain as electrochemical signals, which is how our own systems operate, technological sensory systems usually convert the information they sense from the real world into digital streams of ones and zeros. These are then interpreted by the most common computing ‘brains’: central processing units (CPUs) and graphics processing units (GPUs).

We use cameras practically every day now that they come conveniently built into the devices we carry everywhere. Originally designed to capture moments in light, cameras have evolved considerably over the generations, as has our understanding of eyes and vision systems in nature. The more we’ve learnt about our eyes and those of other living creatures, the further the abilities of cameras have advanced. Take stereoscopic cameras, which are modelled on our own pair of eyes. We have binocular vision, meaning we see everything from two slightly different perspectives. We don’t have two eyes just because it’s more aesthetically pleasing than having one (though if we were all Cyclopes we might think two eyes were strange), nor do we have both as a redundant backup in case we lose one. Our two eyes actually help give us three-dimensional (3D) vision of the world.

You may have seen the Simpsons Halloween episode ‘Treehouse of Horror VI’, where Homer gets stuck in a 3D virtual world, and the concept is explained perfectly by the resident scientist, Professor Frink. In his overly geeky voice he draws on a blackboard and says, ‘Here is an ordinary square.’ Police Chief Wiggum responds, ‘Whoa, whoa, slow down, egghead!’

‘But suppose we extend the square beyond the two dimensions of our universe,’ the boffin continues, ‘along the hypothetical z-axis there. This forms a three-dimensional object known as a cube . . .’

I love this. As our universe isn’t a 2D cartoon, the z-axis is a very real one for us. Defined in the Cartesian coordinate system (another inventive contribution by René Descartes), it allows our reality to exist in three dimensions: the x-axis, the y-axis and the z-axis. So how do we (and some robots) see in 3D? Each of our two eyes provides something closer to a 2D image, but because they bring in two slightly shifted views of our environment simultaneously (at exactly the same time), together they help us see in 3D through a concept known as parallax. To see this process in action, hold your index finger up in front of your nose (at the distance you would hold a drink between sips). Close one eye, then switch which eye is closed. You’ll notice a change, as if the finger jumps position when you switch eyes. Alternatively, with both eyes open, focus on any object beyond your finger and you should instantly notice two slightly shifted, ghostly versions of the same finger. This is parallax. It’s caused by our two different viewpoints of the same object, and it’s an important principle in understanding 3D vision in humans and other animals. It also plays a huge role in the way many technologies (including robots) perceive their environments.

Your brain also has ways to figure out how far away an object is. One of these is comparing the horizontal displacement between the two perceived ghostly fingers, which is known as disparity. The closer your finger gets to your face, the further apart the two images appear (the greater the disparity). Your brain uses this, together with how far it has to cross your eyes to focus on the finger, to work out how far away the finger is.
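For cameras rather than eyes, the link between disparity and distance can be written down directly. Below is a minimal Python sketch, assuming two identical, parallel cameras whose images have been aligned row-for-row (rectified), using the standard pinhole relation Z = f × B / d, where f is the focal length in pixels, B the baseline (the gap between the cameras) and d the disparity in pixels. The helper name, the 700-pixel focal length and the 6.5 cm baseline are hypothetical example values, and this is not a claim about how the brain itself does the calculation.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance (in metres) to a point seen by two parallel, rectified cameras.

    Pinhole-camera relation: Z = f * B / d. The larger the disparity d,
    the closer the point, just as the ghostly fingers drift further apart
    as the finger moves towards your face.
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point infinitely far away.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera: 700 px focal length, lenses 6.5 cm apart (roughly eye spacing).
FOCAL_PX = 700.0
BASELINE_M = 0.065

for d in (10, 50, 150):
    print(f"disparity {d:>3} px -> depth {depth_from_disparity(FOCAL_PX, BASELINE_M, d):.2f} m")
```

Here a disparity of 10 pixels maps to about 4.55 m and 150 pixels to about 0.30 m. Because depth is inversely proportional to disparity, this kind of stereo depth is precise up close and increasingly coarse far away.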

This idea was hacked long ago for 3D movies, where you are in effect watching two different movies at the same time, which is why they can make you feel sick if the alignment is off. Glasses are usually involved: initially with red and blue lenses (the video playing was also in red and blue, and each coloured lens blocked the image drawn in the other colour), and later with polarised lenses. Either way, the glasses let your left eye see only the video meant for your left eye, and your right eye see only the video meant for your right eye. If filmmakers wanted to make an object, like a biting dinosaur, come out of the screen, all they had to do was horizontally displace the dinosaur between the left and right images, and your brain would fill in the rest. The further apart the two images, the closer your brain tells you the object is. Over time, filmmakers found that if an object appeared too close to the viewer, you would naturally cross your eyes to converge on it, which made it lose its effect. So limits were placed on how far objects could appear to come out of the screen. It’s an amazing illusion that demonstrates our understanding of how our eyes and brain construct our 3D reality.
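To make the red-and-blue trick concrete, here is a toy Python sketch of building a red/blue anaglyph frame from a left and a right image. It assumes the red lens sits over the left eye and the blue lens over the right (a common convention, not a universal one). The red lens passes only red light, so the left eye sees the red channel, which is taken from the left image; the blue lens passes only blue light, so the right eye sees the blue channel, taken from the right image. The function name and the tiny one-row images are hypothetical stand-ins for real video frames.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (red, green, blue), each 0-255
Image = List[List[Pixel]]      # rows of pixels


def make_anaglyph(left: Image, right: Image) -> Image:
    """Merge a stereo pair into one red/blue anaglyph frame.

    Red channel  <- left image (seen through the red lens on the left eye).
    Blue channel <- right image (seen through the blue lens on the right eye).
    Green is dropped, since neither lens passes it in this simple scheme.
    """
    return [
        [(lp[0], 0, rp[2]) for lp, rp in zip(left_row, right_row)]
        for left_row, right_row in zip(left, right)
    ]


BLACK, WHITE = (0, 0, 0), (255, 255, 255)
WIDTH = 6

# A one-pixel "dinosaur", shifted horizontally between the two views. Placing the
# left-eye copy to the right of the right-eye copy (crossed disparity) makes it
# appear in front of the screen; a bigger shift makes it appear closer.
left_view = [[WHITE if x == 4 else BLACK for x in range(WIDTH)]]
right_view = [[WHITE if x == 2 else BLACK for x in range(WIDTH)]]

frame = make_anaglyph(left_view, right_view)
print(frame)
```

The resulting frame holds a red pixel at x = 4 and a blue pixel at x = 2. Through the glasses, each eye sees only its own copy, and the brain fuses the two into one object floating in front of the screen.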

But what if you have one eye closed, say because you’re a pirate wearing an eye patch, or you only have one eye? Do you lose your depth perception? The answer is yes and no. You lose the parallax effect of two eyes simultaneously focusing on an object, but your brain is so clever that it has built up many other methods of figuring out how far away objects are and of localising yourself. For example, we automatically compare an object’s perceived size with the size we know it to be, to gauge its distance from us: if a skyscraper looks very small, say, our brain knows it’s probably a long way away. Our brain can also pick up on the tiny changes in our eyes as we shift focus between things close to us and things far away.

But there’s another small trick we inherently know, usually without ever having thought about it. It’s what owls do to work out the distance to their prey before they swoop: they bob their heads around. We sometimes do this too, moving our head or even our entire body left and right to see around objects a bit and figure out where they are in our environment. We tend to do this when the relatively small separation between our two eyes isn’t enough for us to judge the distance to an object. It’s another form of parallax, known as motion parallax, where we build up different perspectives on objects by moving. Objects that are closer to us move further across our field of view than objects in the distance. Instead of seeing an object simultaneously from two different perspectives, you’re gathering multiple perspectives over time. Yup, just like an owl! Give it a try, you owl-person you.
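The owl’s head-bob can be put into numbers with a little trigonometry. This minimal Python sketch assumes the viewer sidesteps a distance b while an object sits straight ahead at distance Z, so the object’s direction swings by atan(b / Z). The helper name, the 10 cm head movement and the three distances are hypothetical values chosen for illustration.

```python
import math


def parallax_shift_deg(head_move_m: float, distance_m: float) -> float:
    """Angle (degrees) through which a stationary object, initially straight
    ahead at distance_m, appears to swing when the viewer moves sideways by
    head_move_m. Nearer objects swing through larger angles."""
    return math.degrees(math.atan2(head_move_m, distance_m))


HEAD_BOB_M = 0.10  # hypothetical 10 cm sideways bob

for distance in (0.5, 5.0, 50.0):
    shift = parallax_shift_deg(HEAD_BOB_M, distance)
    print(f"object at {distance:>4} m shifts by {shift:.2f} degrees")
```

The object half a metre away swings through roughly 11 degrees, while the one 50 metres away barely moves (about 0.1 degrees). That difference is the cue a bobbing head, or a moving robot camera, can use to rank objects by distance.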

Our two slightly different perspectives, or moving our head when only one eye is taking in light, also help us overcome blind spots. These are very small areas in our visual field that are obscured from within our own eyes (I’m not talking here about an overtaking car that isn’t visible in your side mirror). Each blind spot sits where the optic nerve fibres pass through the retina at the back of the eye. There are no light-sensitive cells in that spot, so it leaves a small gap in the visual field of each eye. To test this out, use the letters R and L below.

R L

First close or cover your left eye and look at the letter ‘R’ with your right eye. Depending on how far your face is from the page, you should still be able to see the ‘L’, blurry of course but still there. Keep your focus on the ‘R’ and move the page closer or further away until the letter ‘L’ completely disappears from your vision (this should happen when your face is about three times as far from the page as the letters ‘R’ and ‘L’ are from each other). Likewise, you can close your right eye, look at the letter ‘L’ with your left eye, and move the page until the ‘R’ disappears. If, however, you open both eyes in either of these positions, the missing letter reappears, as it becomes visible to the eye that was closed. This is why we rarely notice that our blind spots are even there. Much of our visual perception relies on the combined vision from both eyes being processed in unison by our brain.

Let’s return to the lab in 2007 and my work on SAM. For nearly a year I’ve been working on stereoscopic cameras to give SAM 3D vision. I’m using a pair of cameras inspired by our very own eyes, placed next to each other with a short distance between them. I program the system to analyse their data using the sum of absolute differences (SAD) correlation algorithm. The two simultaneous 2D images can be used to calculate the disparity of individual objects between them, and thus how far away from the cameras a given object is. The result is a low-resolution 3D point cloud, indicating where all the pixels the cameras have collected sit in 3D space. Think of it like a dark room you can move around in, with each point in the cloud represented by a suspended, illuminated grain of coloured sand.
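The sum of absolute differences idea can be sketched in a few lines. For each small window in the left image, we slide along the same row of the right image, add up the absolute brightness differences at each candidate shift, and keep the shift (disparity) with the smallest total. This is a minimal, unoptimised Python sketch, not the code that ran on SAM: it assumes greyscale images stored as lists of rows and already rectified so that matching points share a row, and the function names, window size, maximum disparity and synthetic test images are all hypothetical.

```python
from typing import List

Image = List[List[int]]  # greyscale, rows of pixel intensities


def sad_cost(left: Image, right: Image, row: int, col: int, d: int, half: int) -> int:
    """Sum of absolute differences between the window centred at (row, col)
    in the left image and the window shifted d pixels left in the right image."""
    total = 0
    for dy in range(-half, half + 1):
        for dx in range(-half, half + 1):
            total += abs(left[row + dy][col + dx] - right[row + dy][col + dx - d])
    return total


def disparity_map(left: Image, right: Image, max_disp: int = 4, half: int = 1) -> Image:
    """Winner-takes-all block matching: at each pixel, pick the disparity
    0..max_disp with the lowest SAD cost. Border pixels are left at 0."""
    height, width = len(left), len(left[0])
    out = [[0] * width for _ in range(height)]
    for r in range(half, height - half):
        for c in range(half + max_disp, width - half):
            costs = [sad_cost(left, right, r, c, d, half) for d in range(max_disp + 1)]
            out[r][c] = costs.index(min(costs))
    return out


# Synthetic texture that does not repeat within the search range. The left view
# is the right view shifted 2 pixels to the right, so interior pixels should
# come out with disparity 2.
HEIGHT, WIDTH, TRUE_SHIFT = 5, 12, 2
right_img = [[(r * 7 + c * 13) % 17 for c in range(WIDTH)] for r in range(HEIGHT)]
left_img = [[(r * 7 + (c - TRUE_SHIFT) * 13) % 17 for c in range(WIDTH)] for r in range(HEIGHT)]

for row in disparity_map(left_img, right_img):
    print(row)
```

Practical stereo systems add refinements such as sub-pixel interpolation, checking that left-to-right and right-to-left matches agree, and discarding textureless regions where SAD has no clear minimum. Each surviving disparity can then be turned into a distance using the camera’s focal length and baseline, giving one point of the 3D point cloud.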

Later that year, working on my mind-controlled wheelchair, TIM, I couple stereoscopic vision with spherical cameras to create a system that can see everything around it in 360 degrees. This is unheard of at this point in time. I have to figure out an effective method of combining the data from these two different camera systems to give the smart wheelchair a great way of observing and navigating unknown environments, as well as avoiding unpredictable objects and people along the way. As with many great engineering designs, I look to systems in nature for inspiration. I initially think eagle vision would be awesome, but quickly realise a bird of prey might not be the way to go. Predators tend to have vision honed for the attack, whereas I am looking for something defensive, so that my wheelchair avoids collisions and creates safe pathways of travel for the operator. Looking through a range of veterinary journals for information on various vision systems, I finally stumble upon the perfect biological inspiration – horses!

As it turns out, equine vision is very effective. With elongated pupils and eyes placed towards the sides of their heads, horses can see in 3D in front of them thanks to binocular overlap (as we do), but can also see much of the environment around them. Even with their heads lowered, they can watch what’s going on around them, which is vital given they can spend up to 70 per cent of their time grazing. Although the vision around the sides is not 3D, they only need it to detect predators, so they can leg it right outta there if and when they do.

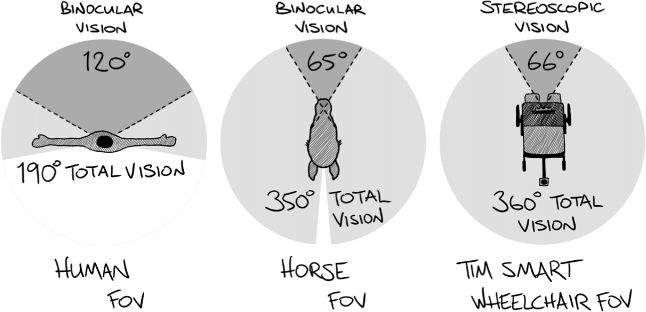

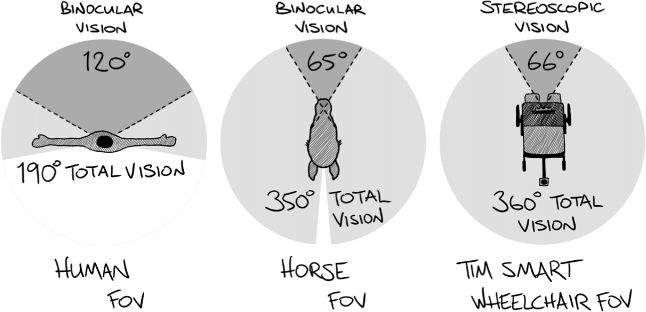

Human eyes have about 120 degrees of binocular field of view (FOV), the width of view we can see with both left and right eyes at the same time, and about 190 degrees of total FOV. If we look straight ahead and stretch our arms out to both sides, we should only just be able to see both sets of fingers at the same time if we wiggle them. Interestingly, though, because we’re seeing them with the edges of our vision, they’ll look almost colourless, as the outer edges of our retinas have very few of the cone cells that detect colour.

Horses have their eyes towards the sides of their heads so they have a smaller binocular FOV than us – 65 degrees – but a much greater total FOV of close to 350 degrees, which is not far off the full 360 degrees. This means that when horses are looking forward they can pretty much see everything except their own arse! Told you it was an effective vision system.

So I model the wheelchair’s vision on this system while removing the blind area behind, resulting in a 60-degree binocular FOV and a 360-degree total FOV. And so an equine-inspired vision system becomes the eyes of my smart wheelchair.

Figure 6: Fields of view for a human, a horse and the TIM smart wheelchair
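The angles above can be split into three zones: seen by both eyes (or cameras), seen by only one, and not seen at all. This small Python sketch does that bookkeeping, assuming the binocular zone sits entirely inside the total FOV and that the rest of the circle is blind. The function name is hypothetical, and the figures are the approximate values quoted above.

```python
def fov_breakdown(total_deg: float, binocular_deg: float) -> dict:
    """Split a horizontal field of view into binocular, single-view and blind zones."""
    if not 0 <= binocular_deg <= total_deg <= 360:
        raise ValueError("expected 0 <= binocular <= total <= 360")
    return {
        "binocular": binocular_deg,                    # 3D from two overlapping views
        "monocular_only": total_deg - binocular_deg,   # seen by one eye/camera only
        "blind": 360 - total_deg,                      # not seen at all
    }


for name, total, binocular in [("human", 190, 120), ("horse", 350, 65), ("TIM", 360, 60)]:
    print(name, fov_breakdown(total, binocular))
```

The horse’s blind zone comes out at about 10 degrees, the sliver directly behind it, while TIM’s is zero by design.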

Another concept from nature that’s built into many of today’s devices is the time-of-flight principle. Humans, bats and dolphins all use it to estimate distances. Think about those moments when you’ve yelled in a large empty space or from a cliff. The greater the distance the sound has to travel before it bounces off something and returns, the longer it takes to hear the echo of your own voice yelling ‘COO-EE’ or whatever call you make. This is the time-of-flight principle in play. On a cliff, the sound you yell travels all the way to the nearest cliff wall facing back at you, hits it, bounces off and travels all the way back to you. Because of this distance, you might wait a few seconds to hear the echo.

That time to the echo can easily be used to determine the distance, because we know the speed of sound: in air at around 20°C it’s about 343 metres per second, or roughly 1235 kilometres per hour (it varies a little with temperature). If we multiply the speed of sound by the time taken, then halve the result because the sound has travelled the same distance there and back again, we find the distance to the cliff the sound is bouncing off:

distance to object = ½ × echo time × speed of sound
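The echo formula translates directly into code. This is a minimal Python sketch, assuming sound travelling through air at about 343 m/s (roughly 20°C); the helper name and the three-second echo are hypothetical examples.

```python
SPEED_OF_SOUND_M_S = 343.0  # in air at roughly 20 °C; varies with temperature


def echo_distance_m(echo_time_s: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance to a reflecting surface from the round-trip echo time.

    The sound travels there and back, so the one-way distance is half of
    speed x time.
    """
    return 0.5 * echo_time_s * speed_m_s


print(f"3 s echo -> cliff about {echo_distance_m(3.0):.1f} m away")
print(f"343 m/s is about {round(SPEED_OF_SOUND_M_S * 3.6)} km/h")  # 1 m/s = 3.6 km/h
```

This prints a distance of 514.5 m and a speed of 1235 km/h, so a cliff face around half a kilometre away would return your ‘COO-EE’ about three seconds later.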

Many bats use this exact approach to perceive their environment, making noises and listening to the echoes, in a process we call echolocation. This process inspired engineers to create a range of modern sensors, including sonar sensors (you can hear the clicks, or pings, of sound they send out) and ultrasonic sensors (the pings are above our hearing range), which help robotic devices figure out where objects around them are located. They’re also used on the back of many of today’s vehicles as reversing sensors. Incredibly, some humans have even developed the ability to use echolocation to, in effect, see through sound. I met one such person in my first ABC documentary, Becoming Superhuman.

Chris has been completely blind since birth. From a young age he developed the ability to echolocate, largely thanks to the way our brains can rewire themselves, a wonder known as neuroplasticity. Its discovery is one of the most important breakthroughs in modern science for understanding our brains, showing that they can change continuously throughout our lives, though children’s brains are more plastic than adults’. These changes are physiological, occurring as the brain adapts to interactions, learning, environment, thoughts and emotions, and even damage and trauma.

The way Chris echolocates is an amazing display of neuroplasticity. How he perceives the objects around him is closer to the echolocation of bats, and more advanced than most ultrasonic or sonar sensors today. It even gives us clues as to how the technology can be improved upon for future uses. Chris makes sharp clicks with his mouth and listens to the echoes with a seemingly superhuman sensitivity of hearing. Not only can he work out from the echo how far away objects are, which is everything I was hoping to witness upon meeting him, but he can also infer information about what he’s bouncing sound off, which I found completely astonishing.

Chris tells me that, judging by the quality of the echo, everything sounds different (concrete, plants, trees, humans), so he has a very good idea not only of where objects are placed in his environment but also of what they are. When objects are too far away for his mouth clicks, he uses a cane, giving it a quick pound on the ground to make a louder crack. We were standing a whole 25 metres away from a building he was facing when he demonstrated this. He cracked the cane on the ground and listened, then repeated it and listened again. His next words were simply awesome: ‘Okay, it’s 25 metres to the wall ahead, but if you look up it’s 35 metres to where the building comes out from the wall,’ even telling me that the windows and shades up there sound really cool. He was spot on. I couldn’t help but laugh in amazement.
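Running the echo formula backwards shows how small the timing differences in Chris’s demonstration were. This Python sketch assumes sound at about 343 m/s and simply computes the round-trip delay for the 25-metre wall and the 35-metre overhang. The helper name is hypothetical, and the sketch says nothing about how Chris’s brain actually processes the echoes, which is far richer than a single timing measurement.

```python
SPEED_OF_SOUND_M_S = 343.0  # in air at roughly 20 °C


def echo_delay_s(distance_m: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Round-trip time for a sound to reach a surface distance_m away and return."""
    return 2 * distance_m / speed_m_s


wall = echo_delay_s(25)      # the wall ahead
overhang = echo_delay_s(35)  # where the building juts out above
print(f"wall: {wall * 1000:.0f} ms, overhang: {overhang * 1000:.0f} ms, "
      f"gap: {(overhang - wall) * 1000:.0f} ms")
```

This prints `wall: 146 ms, overhang: 204 ms, gap: 58 ms`. The two echoes arrive less than a tenth of a second apart, yet Chris could separate them and place each one.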

This same time-of-flight principle is the basis for lidar, which is much, much faster than sonar and ultrasonic sensors because it bounces light rather than sound, and the speed of light is nearly 300 million metres per second, about 874,000 times the speed of sound. Lidar does, however, have trouble seeing windows, as the light tends to pass right through. It’s also expensive compared to ultrasonic sensors. But every sensor comes with its own set of advantages and disadvantages; no sensor is perfect. This is why many robots today use sensor fusion: they’re designed with many different types of sensors, such as lidar and ultrasonic, that work together to tackle many scenarios.
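To see what ‘much, much faster’ means in practice, this Python sketch compares round-trip times for sound and light over the same 25 metres. It assumes 343 m/s for sound and uses the exact vacuum speed of light (light in air is only about 0.03 per cent slower). The helper name, the distance and the one-nanosecond timing error are illustrative choices.

```python
SPEED_OF_SOUND_M_S = 343.0           # air at roughly 20 °C
SPEED_OF_LIGHT_M_S = 299_792_458.0   # exact value in a vacuum


def round_trip_s(distance_m: float, speed_m_s: float) -> float:
    """Time for a pulse to travel to a target and back."""
    return 2 * distance_m / speed_m_s


sound = round_trip_s(25, SPEED_OF_SOUND_M_S)
light = round_trip_s(25, SPEED_OF_LIGHT_M_S)
print(f"sound: {sound * 1e3:.1f} ms, light: {light * 1e9:.1f} ns")
print(f"light is roughly {SPEED_OF_LIGHT_M_S / SPEED_OF_SOUND_M_S:,.0f} times faster")

# Because light is so fast, timing precision matters: a 1 ns timing error
# shifts a lidar range estimate by half of (speed of light x 1 ns), about 15 cm.
print(f"1 ns error -> {0.5 * 1e-9 * SPEED_OF_LIGHT_M_S * 100:.0f} cm")
```

A lidar pulse returns from 25 metres in under 170 nanoseconds, versus roughly 146 milliseconds for a sonar ping. That is why lidar can take so many measurements so quickly, and also why it needs very precise timing electronics.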

Driverless vehicles are a good example of this. They are built with many different types of sensors because there are so many different tasks they need to complete and situations they need to adapt to. A driverless car might have cameras with AI that can see in colour and recognise objects such as traffic lights, people, lane markings and street signs; radar for fast and accurate detection of other cars on the road around it; lidar for fast 360-degree mapping of the surrounding environment, including gutters, trees and other solid obstacles; and ultrasonic sensors (as most new cars already have for reversing) to detect lower obstacles close to the car that the other main sensors have no line of sight on.
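One of the simplest ways to fuse overlapping range readings is an inverse-variance weighted average. Each sensor’s reading is weighted by how much we trust it, so a precise lidar reading counts for more than a noisier ultrasonic one, and if one sensor drops out (say, lidar looking at a window) the others still give an answer. This is a minimal Python sketch under strong assumptions: independent, roughly Gaussian sensor errors with known standard deviations, all measuring the same distance. The function name and noise figures are hypothetical, and real driverless cars use far more elaborate fusion, typically Kalman filters and their relatives (whose update step reduces to this weighting for a single, unchanging quantity).

```python
from typing import List, Optional, Tuple

Reading = Tuple[float, float]  # (measured distance in m, standard deviation in m)


def fuse_ranges(readings: List[Optional[Reading]]) -> Tuple[float, float]:
    """Inverse-variance weighted average of independent range readings.

    Returns (fused distance, fused standard deviation). Sensors that failed
    to return a reading are passed as None and simply skipped.
    """
    valid = [r for r in readings if r is not None]
    if not valid:
        raise ValueError("no sensor returned a reading")
    weights = [1.0 / (sd ** 2) for _, sd in valid]
    total_weight = sum(weights)
    fused = sum(w * dist for w, (dist, _) in zip(weights, valid)) / total_weight
    return fused, (1.0 / total_weight) ** 0.5


# Hypothetical readings of the same obstacle.
lidar = (2.00, 0.02)       # precise
ultrasonic = (2.10, 0.10)  # noisier

print(fuse_ranges([lidar, ultrasonic]))  # leans towards the lidar value
print(fuse_ranges([None, ultrasonic]))   # lidar saw through a window: fall back
```

The fused estimate lands at about 2.004 m, close to the lidar reading, and its standard deviation comes out just below the lidar’s 0.02 m, so combining sensors leaves the estimate slightly more certain than either sensor alone.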

Be it a robot, sensor, algorithm, system configuration or mechanical operation, many of our technological advancements have been inspired by the wonders of nature. Perhaps our next advancements might even help restore our natural world. So the next time you’re looking for boundary-pushing inspiration, have a look at what nature has already invented for us. It has had far more time to perfect it than you or I can even comprehend.

With millions if not billions of years

of creating, changing, updating, evolving,

nature inspires our most sophisticated inventions.

So let’s guide those inventions to return the favour,

and use them to repair our natural world.