2 SENSORY SUBSTITUTION

In the early 1800s, neurology began to flourish as researchers investigated the brain and formulated new theories about how its structure and function are related to behavior and mental functions.

During the first half of the century the field was dominated by phrenology, a pseudoscientific discipline that attempted to determine people’s mental traits from skull measurements. This approach eventually fell into disrepute, giving way to another theory called the localization of cerebral function, according to which the brain is composed of discrete anatomical areas, each specialized to perform a specific function.

Subsequent work identified the sensory and motor regions of the brain, revealing not only that they are responsible for feeling and moving, respectively, but that these regions are always located in the same part of the brain. And so, when modern neuroscience was born, around the turn of the twentieth century, the idea that the cerebral cortex is composed of discrete regions specialized for language, touch, vision, and so on had already taken firm root.

With time, however, evidence began to emerge that the cortex is in fact highly plastic, and that the so-called modular organization of the brain is not set in stone. Much of this evidence comes from studies of blind and deaf people, whose brains have been completely deprived of a certain type of sensory input. Such work clearly shows that these cortical areas are not as specialized as we once thought—for example, the visual and auditory regions of the cortex can not only process information from other sense organs, but they can also contribute to non-sensory processes such as language.


From Phrenology to the Localization of Cerebral Function

Phrenology was founded by the great anatomist Franz Joseph Gall, who stated that he first formulated his ideas at nine years of age. As a schoolchild, Gall had noticed that a classmate with a superior memory for words also had bulging eyes, and believed that the two characteristics appeared together in others. “Although I had no preliminary knowledge, I was seized with the idea that eyes thus formed were the mark of an excellent memory,” he wrote. “Later on… I said to myself; if memory shows itself by a physical characteristic, why not other features? And this gave me the first incentive for all my researches.”

Gall began lecturing about phrenology in 1796, a year after graduating from medical school, and first published his theory in 1808. He came to believe that the region above the eyes was devoted to the “Faculty of Attending to and Distinguishing Words, Recollections of Words, or Verbal Memory.” Later on, he documented the cases of two men who could not recall the names of relatives and friends as a result of sword injuries above the eye, which he took as confirmation of the early observations he had made at school.

He believed that “Destructiveness” resides above the ear, because this region was prominent in another schoolchild he knew, who was “fond of torturing animals,” and in an apothecary who went on to become an executioner. He localized “Acquisitiveness” to another region slightly further back, because that region seemed to be disproportionately large in the pickpockets he had met; and “Ideality” to a region he believed to be prominent in statues of poets, writers, and other great thinkers, the area of the head they rubbed while writing.

Gall collected some 400 skulls throughout his career, including those of public intellectuals and psychopaths, and his theory was based almost exclusively on measurements he took from them. Overall, he claimed to have localized 27 mental faculties, and argued that 19 of them—including courage and the senses of space and color—could also be demonstrated in animals, whereas others—such as wisdom, passion, and a sense of satire—were unique to humans.

Though they faced criticism all along, the phrenologists remained influential up to the mid-nineteenth century. Their methods were eventually discredited as unscientific, however—Gall and his colleagues had “cherry-picked” their evidence, discarding any that was inconsistent with their theory—and so, by the 1870s the localization theory had become widely accepted, largely as the result of clinical investigations involving patients with brain damage.

In 1861, a French physician named Pierre Paul Broca described a handful of stroke patients who had been admitted to the hospital where he worked, all of whom had lost the ability to speak. Upon their death, Broca examined their brains, and noted that all of them were damaged in the same region of the left frontal lobe. Thirteen years later, in 1874, the German pathologist Karl Wernicke described another group of stroke patients, who had lost the ability to understand spoken language due to damage affecting a region of the left temporal lobe.

Others found yet more evidence for the localization of cerebral function. Notably, the physiologists Gustav Fritsch and Eduard Hitzig electrically stimulated and selectively destroyed parts of animals’ brains; in doing so they localized the primary motor cortex to the precentral gyrus, and confirmed that this strip of brain tissue in each hemisphere controls movements of the opposite side of the body. But it was largely due to Broca’s work that the cortical localization theory gained widespread acceptance.1

The Brain Mappers

By the time modern neuroscience was born around the turn of the twentieth century, the idea that the cerebral cortex is composed of discrete anatomical regions with specialized functions was already firmly established. Even so, more evidence emerged in the early part of the twentieth century, and so the concept became further entrenched.

At around this time, a German neuroanatomist named Korbinian Brodmann began examining the microscopic structure of the human brain, and noticed that he could distinguish between different parts according to how the cells are organized in each. On this basis, Brodmann divided the cerebral cortex into 52 regions and assigned a number to each. Brodmann’s system of neuroanatomical classification is still used to this day—Brodmann’s areas 1, 2, and 3 make up the primary somatosensory cortex, which is located in the postcentral gyrus and receives touch information from the skin surface; Brodmann’s area 4 is the primary motor cortex; and Brodmann’s area 17 is the primary visual cortex.

In the 1920s, the Canadian neurosurgeon Wilder Penfield pioneered a technique to electrically stimulate the brains of conscious epilepsy patients, in order to determine the location of the abnormal brain tissue causing their seizures. Epilepsy can usually be treated effectively with anticonvulsant medication, but for the minority of patients who are unresponsive to drugs, surgery may be performed as a last resort, to remove the abnormal tissue and alleviate the debilitating seizures.

The brain is an extremely complex organ, and neurosurgery always runs the risk of causing collateral damage to areas involved in important functions such as language and movement. To avoid such damage, Penfield deliberately kept his patients conscious while he electrically stimulated the cortex, so that they could report their experiences back to him. When he stimulated the postcentral gyrus, for example, patients described feeling a touch sensation on some part of their body; stimulation of the precentral gyrus caused muscles in the corresponding part of the body to twitch; and stimulation of parts of the left frontal lobe interfered with the ability to speak. In this way he could delineate the boundaries of the abnormal tissue and remove it without inflicting damage on the surrounding tissue.

Penfield operated on approximately 400 patients, and in the process mapped the primary motor and somatosensory areas to the pre- and postcentral gyrus, respectively. He found that both strips of brain tissue are organized topographically, such that adjacent body parts are represented in adjacent regions of brain tissue (with a few minor exceptions); and that not all body parts are represented equally in the brain: the vast majority of the primary motor and somatosensory cortices are devoted to the face and hands, which are the most articulable and sensitive parts of the body.

Penfield summarized these important discoveries in homunculus (“little man”) diagrams drawn up by his secretary. These drawings illustrated the organization of the primary motor and somatosensory cortices and the proportion of their tissues devoted to each body part, and were subsequently adapted into well-known three-dimensional models.2

Sensory Substitution

Early evidence that this localization of brain function is not fixed came from studies performed in the late 1960s by Paul Bach-y-Rita, who built a device that enabled blind people to “see” with their sense of touch. The device consisted of a modified dentist’s chair, fitted with 400 large vibrating pins arranged in a 20 by 20 array in the backrest, and connected to a bulky video camera mounted on a tripod behind it.

Bach-y-Rita recruited a handful of blind people to test the apparatus, including a psychologist who had lost his sight at the age of four. To use it, the subjects simply sat in the chair and slowly moved the camera from side to side with a handle. As they did so, the image from the camera was converted into a pattern of vibrations on the array of pins in the backrest.
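The conversion from camera image to pin pattern can be pictured with a rough sketch. The Python below is only an illustration under stated assumptions, not a description of Bach-y-Rita’s actual circuitry: it assumes a grayscale image given as rows of brightness values between 0 and 1, averages the brightness over the patch of the image that falls on each pin, and switches a pin on when that average reaches a cutoff. The function name `image_to_pin_pattern`, the block averaging, and the `threshold` parameter are all hypothetical choices made for the example.

```python
def image_to_pin_pattern(image, grid=20, threshold=0.5):
    """Reduce a grayscale image to a grid x grid on/off pin pattern.

    `image` is a list of rows, each a list of brightness values in [0, 1].
    Averaging and thresholding are illustrative assumptions, not the
    documented behavior of the original device.
    """
    height = len(image)
    width = len(image[0])
    pattern = []
    for gy in range(grid):
        # Rows of the image that map onto this row of pins.
        y0 = gy * height // grid
        y1 = max((gy + 1) * height // grid, y0 + 1)
        pin_row = []
        for gx in range(grid):
            # Columns of the image that map onto this pin.
            x0 = gx * width // grid
            x1 = max((gx + 1) * width // grid, x0 + 1)
            block = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            mean_brightness = sum(block) / len(block)
            # A pin vibrates when its patch of the image is bright enough.
            pin_row.append(mean_brightness >= threshold)
        pattern.append(pin_row)
    return pattern


if __name__ == "__main__":
    # A 40 x 40 test image containing one bright vertical bar.
    bar = [[1.0 if 18 <= x < 22 else 0.0 for x in range(40)] for _ in range(40)]
    for pin_row in image_to_pin_pattern(bar):
        print("".join("#" if on else "." for on in pin_row))
```

In this sketch a vertical bar in the scene becomes a vertical line of active pins, which is the kind of simple pattern the subjects first learned to discriminate.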

With extensive training, the subjects learned to use the touch sensations to interpret visual scenes accurately. After about an hour of training, they could discriminate vertical, horizontal, diagonal, and curved lines, and then went on to recognize shapes. After about 10 hours or more of training, all of them could recognize common household objects, discern shadows and perspective, and even identify other people from their facial features.3

Bach-y-Rita argued that this ability was due to “cross-modal” mechanisms, whereby information that is normally conveyed by one sense, such as vision, is somehow transformed and conveyed by another, such as touch or sound. Since then, researchers have documented numerous examples of cross-modal plasticity, using modern techniques such as functional magnetic resonance imaging (fMRI), which records brain activity, and transcranial magnetic stimulation (TMS), which temporarily disrupts it.

Brain imaging studies reveal that the primary visual cortex is activated when blind people read braille, which requires fine motor control and touch discrimination to recognize the patterns of raised dots. This activation is associated with increased activity in downstream visual regions involved in shape recognition and with reduced activity in the somatosensory area, compared to sighted people. The same pattern is found not only in people who were born blind and those who lost their sight at an early age, but also in those who went blind later in life.

Interference with visual cortical activity, for example by the use of TMS, impedes touch perception in blind people but not in sighted controls, confirming that the activity in the visual cortex is indeed related to the processing of touch information, rather than merely coincidental.

Blind people can also learn to navigate by echolocation, by making clicking sounds with their tongue or tapping sounds with their feet, and using information in the returning echoes to perceive physical aspects of their surroundings. This requires huge amounts of training, but those who become adept at it can use echolocation to perform extremely complex actions that most of us could not imagine doing without sight, such as playing video games or riding a bike. And when blind people echolocate, the sound information is processed in visual rather than auditory parts of the brain.4,5

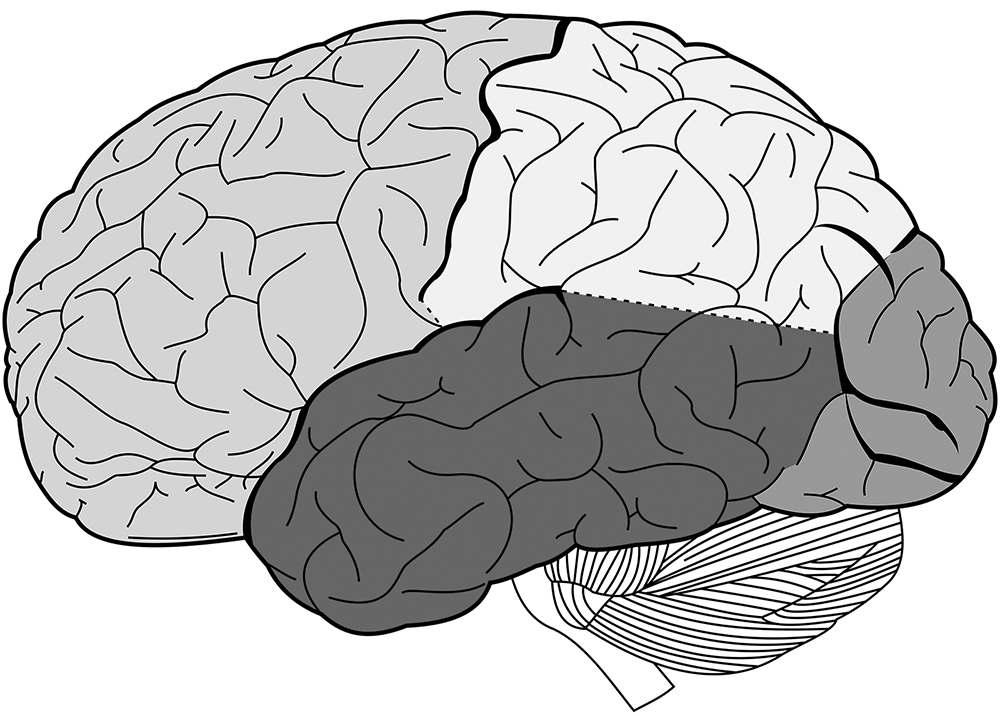

Figure 2 The lobes of the brain. Clockwise from left: frontal lobe, parietal lobe, occipital lobe, temporal lobe.

The visual system is often divided into two distinct pathways that run in parallel through the occipital lobe at the back of the brain—an upper stream that processes spatial information (the “where” pathway) and a lower one involved in object recognition (the “what” pathway). This organization seems to be preserved in the blind: when blind people learn to echolocate, the upper part of the visual cortex is activated when they are locating objects, and the lower part when they are identifying them.6

Thus, when deprived of the sensory inputs it normally receives, the visual cortex can switch roles and process other types of sensory information. Even more remarkably, it can adapt in such a way as to perform other, nonsensory functions, such as language. The same kind of brain scanning experiments show that this brain region is activated when blind people generate verbs, listen to spoken language, and perform verbal memory and high-level verbal processing tasks.

Blind people outperform sighted subjects on these tasks, and the extent of activation in their visual cortices is closely correlated with their performance in verbal memory tests. These studies also show that reading braille preferentially activates the front end of the visual cortex, whereas language activates the back region. Some find that the left visual cortex becomes more active than the right during language tasks, possibly because language centers are usually located in the left hemisphere. And just as interfering with visual cortical activity disrupts blind people’s ability to process touch sensations and understand braille, so too does it impair their performance on verbal memory tasks.7

The brains of deaf people also show major plastic changes. In hearing people, sound information from the ears is processed by the auditory cortices in the temporal lobes. In people who are born deaf, however, these same brain areas are activated in response to visual stimuli. Deaf people also appear to have enhanced peripheral vision. This is linked to an increase in the overall area of the optic disc, where fibers of the optic nerve exit the eye on their way to the brain, and to thickening around its edges; it also suggests that the “where” stream of the visual pathway is stronger.

Neuroplasticity in deaf people is not confined to the visual and auditory systems. Using diffusion tensor imaging (DTI) to visualize brain connectivity, researchers have found that deafness is associated with major changes in long-range neural pathways, especially those between sensory areas of the cerebral cortex and a subcortical structure called the thalamus.

The thalamus has many important functions, particularly in relaying information from the sense organs to the appropriate cortical region and in regulating the flow of information between different regions of the cortex. Deaf people exhibit changes in the microscopic structure of thalamus-cortex connections in every lobe of the brain, when compared to hearing people. Thus, deafness appears to induce brain-wide plastic changes that profoundly alter the way in which information flows through the brain.8

With advances in technology, sensory substitution devices have come a long way from Bach-y-Rita’s cumbersome contraption. Rather than using them only as experimental tools, many research groups are now developing these devices as prosthetics that help blind and deaf people compensate for their sensory loss, and in June 2015 one such device was approved for use by the United States Food and Drug Administration (FDA). The BrainPort V100 is essentially a miniaturized version of Bach-y-Rita’s apparatus, consisting of a video camera mounted onto a pair of sunglasses, and a 20 by 20 array of electrodes fitted onto a small, flat piece of plastic that is placed in the mouth. Computer software translates visual images from the camera, and transmits them to the electrodes, so that they are perceived as a pattern of tingling sensations on the tongue. In tests, about 70% of blind people learn to use the device to recognize objects after about a year of training.

Cross-Modal Processing and Multisensory Integration

As studies of blindness and deafness show, the cerebral cortex has a remarkable capacity for plasticity, and the localization of brain function is not as strict as the neurologists of the nineteenth century believed it to be. Regions that are normally specialized to perform a specific function can switch roles and process other kinds of information, and the visual cortex in particular has been shown to be capable of performing a variety of nonvisual functions.


Under normal circumstances, the brain’s sensory pathways are not entirely separate, but are interconnected and so can interact and influence each other in various ways. And while most primary sensory areas specialize in processing information from one particular sense organ, most of their downstream partners are so-called association areas, which combine various types of information in a process called multisensory integration.

Cross-modal processing and multisensory integration are important aspects of normal brain function, as the McGurk effect demonstrates. The McGurk effect is a powerful illusion that arises when there is a discrepancy between what we see and what we hear: the best-known example is a film clip of someone saying the syllable “ga,” dubbed with a voice saying “ba,” which is perceived as “da.” This consistent error clearly shows that vision and hearing interact and that the interaction normally aids our perception of speech.

Some researchers now argue that sensory substitution shares characteristics of, and is an artificial form of, a neurological condition called synesthesia, in which sensory information of one type gives rise to percepts in another sensory modality.9 For example, the physicist Richard Feynman was a grapheme-color synesthete, for whom each letter of the alphabet elicited the sensation of a specific color, so that he saw colored letters when he looked at equations. The artist Wassily Kandinsky had another form of synesthesia. He experienced sound sensations in response to colors, and once said that he tried to create the visual equivalent of a Beethoven symphony in his paintings.

Once thought to be extremely rare, synesthesia is now believed to be relatively common, and may be experienced by one in every hundred people, or more. More than 40% of synesthetes have a relative with the condition, indicating that genetics plays a big role. With training, however, non-synesthetes can learn to associate letters with colors or sounds, so that they evoke synesthetic experiences, and it is likely that this learning also occurs as a result of cross-modal plasticity.

Exactly how cross-modal plasticity arises is still unclear, but it probably involves a number of processes. During development, neural connections form somewhat haphazardly, and are then pruned back in response to sensory experiences that refine and fine-tune them (see chapter 3). Normally, most cross-modal connections are eliminated, but some remain in place for multisensory processing. Cross-modal plasticity may involve the “unmasking” of existing cross-modal connections and pathways that had been dormant, or the formation of entirely new ones, or both. Synesthesia may occur because of similar mechanisms, and the genes associated with it may play a role in preventing proper pruning of cross-modal pathways during brain development.

The question of how regions of the cerebral cortex become specialized to perform a particular function is particularly intriguing. Specialization is likely to occur as a result of both genetic and environmental factors. Cells in a given region are likely to activate specific combinations of genes that predispose them to perform a particular function, based on exactly where they are located and the connections they form. This blueprint can then be built on as sensory information sculpts the developing circuitry, or modified as necessary in the absence of one kind of information or another. Such a picture is supported by a 2014 study, which showed that deleting a single gene could respecify the identity of neurons in the primary somatosensory cortex of adult mice so that those cells processed information from other sensory modalities.10