9

TOWARD A SCIENCE OF COMPANIES

Companies, like people and households, are fundamental elements of the socioeconomic life of cities and states. Innovation, wealth generation, entrepreneurship, and job creation are all manifested through the formation and growth of businesses, firms, and corporations, all of which I shall refer to generically as companies. Companies dominate the economy. For example, the total worth of all publicly traded companies in the United States—technically referred to as their total market capitalization—is more than $21 trillion, which is more than 15 percent larger than the entire GDP. The value and annual sales of any of the very largest companies, such as Walmart, Shell, Exxon, Amazon, Google, and Microsoft, approach half a trillion dollars ($500 billion), implying that a relatively small number of them have the lion’s share of the total market.

Given our previous revelations concerning the rank and size frequency distributions of personal incomes (Pareto’s law) and cities (Zipf’s law), it will come as no great surprise to learn that this lopsidedness is a reflection of a similar power law distribution for the ranking of companies in terms of their market capitalization or annual sales.1 This was already shown in Figure 41 in chapter 8. Thus there are very few extremely large companies, but an enormous number of very small ones, with all of those in between following a simple systematic power law distribution. So while there are almost 30 million independent businesses in the United States, most of which are private with very few employees, there are only about four thousand publicly traded companies, and these constitute the bulk of economic activity.

Given this observation it is natural to ask, as we did for cities and organisms, whether companies scale in terms of their measurable metrics such as their sales, assets, expenses, and profits. Do companies manifest systematic regularities that transcend their size, individuality, and business sector? And if so, could there possibly be a quantitative, predictive science of companies paralleling the science of cities that was developed in previous chapters? Is it possible to understand the general quantitative features of their life histories, how they grow, mature, and eventually die?

As with cities there is an extensive body of literature on companies stretching back to Adam Smith and the founding of modern economics. Much of the work is qualitative, often gathered through case studies of specific companies or business sectors, from which the general dynamical and organizational features of companies are intuited. Historically, companies have been viewed as the necessary agents that organize people to work collaboratively to take advantage of economies of scale, thereby reducing the transaction costs of production or services between the manufacturer or provider and the consumer. The drive to minimize costs so as to maximize profits and gain greater market share has been extraordinarily successful in creating the modern market economy by providing goods and services at affordable prices to vast numbers of people. Despite all of its pitfalls, abuses, and negative unintended consequences, this free market credo has been instrumental in creating an unprecedented standard of living across the globe. It is potentially a harsh and simplistic vision that often ignores quality and, more important, the role of explicit corporate social responsibility as a basic complementary component for why companies exist beyond the primitive drive to maximize profits and compensation.

Most of the literature on companies has been developed from the vantage points of economics, finance, law, and organizational studies, though in more recent years ideas from ecology and evolutionary biology have begun to gain prominence. There is also a large popular literature written by successful investors and CEOs revealing the secrets of their own success, which they tend to extrapolate to explain and prescribe what makes some companies successful and others failures. To varying degrees, all of these provide insights into the nature, dynamics, and structure of companies, but none brings a broad scientific perspective to the problem in the sense I have been using in this book.2

The mechanisms that have traditionally been suggested for understanding companies can be divided into three broad categories: transaction costs, organizational structure, and competition in the marketplace. Although these are interrelated they have very often been treated separately. In the language of the framework developed in previous chapters these can be expressed as follows: (1) Minimizing transaction costs reflects economies of scale driven by an optimization principle, such as maximizing profits. (2) Organizational structure is the network system within a company that conveys information, resources, and capital to support, sustain, and grow the enterprise. (3) Competition results in the evolutionary pressures and selection processes inherent in the ecology of the marketplace.

Automobiles, computers, ballpoint pens, and insurance portfolios cannot be produced on a grand scale without creating a complex organizational structure, which must be adaptive if it is going to survive in a competitive market. As in cities, this necessitates the integration of energy, resources, and capital—the metabolism of the company—with the exchange of information in order to fuel innovation and creativity. In this sense, companies at all scales are classic complex adaptive systems and it is this framework, rooted in the scaling paradigm, that I want to explore. To what extent can a quantitative, mechanistic theory for understanding their growth, longevity, and organization be developed that is complementary to traditional ways of viewing them?

There have been surprisingly few studies on the nature of companies using large data sets spanning the entire spectrum of economic activities and company histories. Most of these have been carried out by researchers inspired by ideas from complex systems, a good example of which is the discovery that the size distribution of companies follows a systematic Zipf-like power law (shown in Figure 41). This insight was made by the computational social scientist Robert Axtell, who was trained in public policy and computer science at Carnegie Mellon University, where he came under the influence of the great polymath Herbert Simon, whom I mentioned earlier.

Axtell, who is at George Mason University in Virginia and is also on the external faculty of the Santa Fe Institute, is a leading expert in agent-based modeling, which is a computational technique used for simulating systems composed of huge numbers of components.3 Basically, the strategy involves postulating simple rules governing the interactions between individual constituent agents, which could be companies, cities, or people, coupled with an algorithm that specifies how they evolve in time and letting the resulting system run on a computer. More sophisticated versions include rules for learning, adaptation, and even reproduction so as to model more realistic evolutionary processes.
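The strategy of simple rules plus an update algorithm can be illustrated with a toy example. This is only a minimal sketch and not Axtell's model: the rule that a worker joins the firm of another randomly chosen worker, the function name `simulate_firms`, and every parameter value are assumptions chosen for illustration.

```python
import random
from collections import Counter

def simulate_firms(n_workers=2000, n_steps=20000, p_new_firm=0.05, seed=0):
    """Toy agent-based model in which workers repeatedly switch firms.

    Rule (an illustrative assumption): at each step one random worker either
    founds a new firm (probability p_new_firm) or joins the firm of another
    randomly chosen worker, so larger firms attract proportionally more hires.
    """
    rng = random.Random(seed)
    firm_of = list(range(n_workers))   # every worker starts in their own firm
    next_firm_id = n_workers
    for _ in range(n_steps):
        worker = rng.randrange(n_workers)
        if rng.random() < p_new_firm:
            firm_of[worker] = next_firm_id      # found a brand-new firm
            next_firm_id += 1
        else:
            other = rng.randrange(n_workers)
            firm_of[worker] = firm_of[other]    # join someone else's firm
    return Counter(firm_of)   # firm id -> number of employees

# Firm sizes ranked from largest to smallest.
sizes = sorted(simulate_firms().values(), reverse=True)
```

Ranking the resulting sizes from largest to smallest gives the kind of rank-size list whose shape can then be compared with real data.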

With the development of powerful computers, agent-based modeling has become a standard tool for gaining insight into many problems in ecological and social systems such as modeling the structure of terrorist organizations, the Internet, traffic patterns, stock-market behavior, pandemics, ecosystem dynamics, and business strategies. Over the past few years Axtell has used agent-based modeling to try to simulate the entire ecosystem of companies in the United States, encompassing more than six million companies and 120 million workers. This ambitious project relies heavily on census data both as input to constrain the simulation and to test its outcomes.

More recently, he has teamed up with other prominent members of the SFI community, including Doyne Farmer, now a professor at Oxford, and John Geanakoplos, a well-known economist at Yale, to extend this project to try to simulate the entire economy. This is truly ambitious, requiring enormous amounts of input data on everything from financial transactions and industrial production to real estate, government spending, taxes, business investments, foreign trade and investments, and even consumer behavior. The hope is that such an integrated simulation of the whole economy could provide a realistic test bed for evaluating different strategies for economic stimulus, such as whether to reduce taxes or increase public spending; and perhaps most important, to be able to predict tipping points or forecast imminent crises so as to avoid potential recessions or even eventual collapse.4

It is sobering that no such detailed model for how the economy actually works exists and that policy is typically determined by relatively localized, sometimes intuitive, ideas of how it should work. Very little of the thinking explicitly acknowledges that the economy is an ever-evolving complex adaptive system and that deconstructing its multitudinous interdependent components into finer and finer semiautonomous subsystems can lead to misleading, even dangerous, conclusions as testified by the history of economic forecasting. Like long-term weather forecasting, this is a notoriously difficult challenge, and to be fair to economists we should recognize that they are pretty good at forecasting the relatively short term, provided the system remains stable. Traditional economic theory relies heavily on the economy remaining in an approximate equilibrium state. The serious challenge is to be able to predict outlying events, major transitions, critical points, and devastating economic hurricanes and tornadoes, where economists’ record has mostly been pretty dismal.

Nassim Taleb, author of the best-selling, highly influential book The Black Swan, has been particularly harsh on economists despite, or maybe because of, having been trained in business and finance.5 He has held positions at several distinguished universities including New York University and Oxford and has focused on the importance of coming to terms with outlying events and developing a deeper understanding of risk. He has been brutally outspoken in his condemnation of classical economic thinking with hyperbolic comments such as: “Years ago, I noticed one thing about economics, and that is that economists didn’t get anything right.” He has even called for the Nobel Memorial Prize in economics to be withdrawn, saying that the damage from economic theories can be devastating. I may disagree with some of Taleb’s ideas and polemics but it’s important and healthy to have such outspoken mavericks challenging the orthodoxy, especially when it’s had such a poor record and its proclamations have major implications for our lives.

The great virtue of agent-based modeling is its potential for providing an alternative framework for addressing some of these big issues by treating the entire system as an integrated entity rather than as a sum of its idealized bits and pieces. It recognizes up front that the economy is typically not in equilibrium but is an evolving system with emergent properties that result from the underlying interactions between its multiple constituent parts.

It does, however, have some serious shortcomings. To begin with, a crucial input is the specification of the rules for how agents behave, interact, and make decisions, and in many cases this has to be based on intuition rather than on fundamental knowledge or principles. Furthermore, it is often very difficult to interpret the results of a detailed simulation and determine the causal relationships between different components and subunits of the system. It can therefore be unclear what the important drivers are that determine specific outputs versus those that are consequences of general principles common to all such systems. In its extreme version the underlying philosophy of agent-based modeling is antithetical to the traditional scientific framework, where the primary challenge is to reduce huge numbers of seemingly disparate and disconnected observations down to a few basic general principles or laws: as in biology, where the principle of natural selection applies to all organisms from cells to whales, or in physics, where Newton’s laws apply to all motion from automobiles to planets. In contrast, the aim of agent-based modeling is to construct an almost one-to-one mapping of each specific system. General laws and principles that constrain its structure and dynamics play a secondary role. For example, in simulating a specific company, every individual worker, administrator, transaction, sale, cost, et cetera is in effect included and each company consequently treated as a separate, almost unique entity, typically without explicit regard either to its systematic behavior or its relationship to the bigger picture.

Clearly, both approaches are needed: the generality and parsimony of “universal” laws and systematic behavior reflecting the big picture and dominant forces shaping general behavior, coupled with and informed by detailed modeling reflecting the individuality and uniqueness of each company. In the case of cities, scaling laws revealed that 80 to 90 percent of their measurable characteristics are determined from just knowing their population size, with the remaining 10 to 20 percent being a measure of their individuality and uniqueness, which can be understood only from detailed studies that incorporate local historical, geographical, and cultural characteristics. It is in this spirit that I now want to explore to what extent this framework can be used to reveal emergent laws obeyed by companies.

1. IS WALMART A SCALED-UP BIG JOE’S LUMBER AND GOOGLE A GREAT BIG BEAR?

The financial services company Standard & Poor’s, best known for its stock market index of U.S.-based companies, the S&P 500, provides a valuable database of all publicly traded companies going back to 1950 with summaries of their financial statements and balance sheets. It’s called Compustat. Unlike analogous databases for organisms and cities, this database does not come for free. S&P wants about $50,000 for access to it. That may be chicken feed to most investors, corporations, and business schools for whom it is intended, but for us mere academics it’s a lot of money, equivalent to the annual salary of a postdoc. Unfortunately, when we organized the ISCOM project to study companies from a scaling perspective we didn’t have that kind of money available, so the study of companies had to go on the back burner and the focus of the project was instead directed to cities, where data came for free.

The city work proved to be much more exciting and productive than I for one had foreseen, and it took much longer than anticipated to return to thinking about companies with the kind of focus the subject deserved, even after we had eventually gained access to the Compustat database via explorative funding from the National Science Foundation. Partly because of this, the analysis and the theoretical framework are less developed than for cities. Nevertheless, significant headway has been made and a coherent picture has emerged to provide the foundations of a coarse-grained science of companies.

The modern concept of a company and the kind of rapid market turnover we see today in which most companies don’t survive for very long has been around for only the last couple of hundred years at most. This is a much shorter time period than the many hundreds or even thousands of years that cities and urban systems have been evolving and in marked contrast to the billions of years that biological life has been thriving. Consequently, there has been much less time for the market forces that act on companies to reach the kind of meta-stable configuration manifested in the systematic scaling laws obeyed by cities and organisms.

As explained in earlier chapters, scaling laws are a consequence of the optimization of the network structures that sustain these various systems resulting from the continuous feedback mechanisms inherent in natural selection and the “survival of the fittest.” In the case of cities, we would therefore expect the emergent scaling laws to exhibit much greater variance around idealized power laws than organisms do, because the time over which evolutionary forces have acted is so much shorter. Comparing fits to scaling in the two cases, such as Figure 1 for animal metabolic rates versus Figure 3 for the patent production in cities, confirms this prediction: there is a consistently larger spread around the fits for cities than for organisms. Extrapolating this to companies where “evolutionary” timescales are even shorter suggests that if they do indeed scale, there should be an even greater spread in the data around idealized scaling curves than for cities and organisms.

The Compustat data set used for the analysis consists of all 28,853 companies that were traded on U.S. markets in the sixty years between 1950 and 2009. The database includes standard accounting measures such as the number of employees, total sales, assets, expenses, and liabilities, each broken down into subcategories that include interest expenses, investments, inventory, depreciation, and so on. The flow diagram shown below indicates how all of these are interrelated.

It was constructed by Marcus Hamilton, a young anthropologist whom we had hired as a postdoc to help lead this effort. Even as a student Marcus had a mission in life: to make anthropology and archaeology more quantitative, computational, and mechanistic. For good reasons these fields have been among the least of the social sciences in appreciating this perspective, so Marcus has had a tough journey. But for us he was perfect. After getting his doctorate he worked with Jim Brown on global sustainability issues from an ecological and anthropological viewpoint before joining us at SFI. He has pioneered some fascinating work on trying to understand hunter-gatherer societies from our scaling perspective and together with José Lobo and me has been developing a theory for how and why our hunter-gatherer ancestors made the crucial transition to sedentary communities that eventually led to city formation. Together with José and Marcus, I recently coauthored a paper that was published in a leading anthropology journal—one of the crowning achievements of my career!

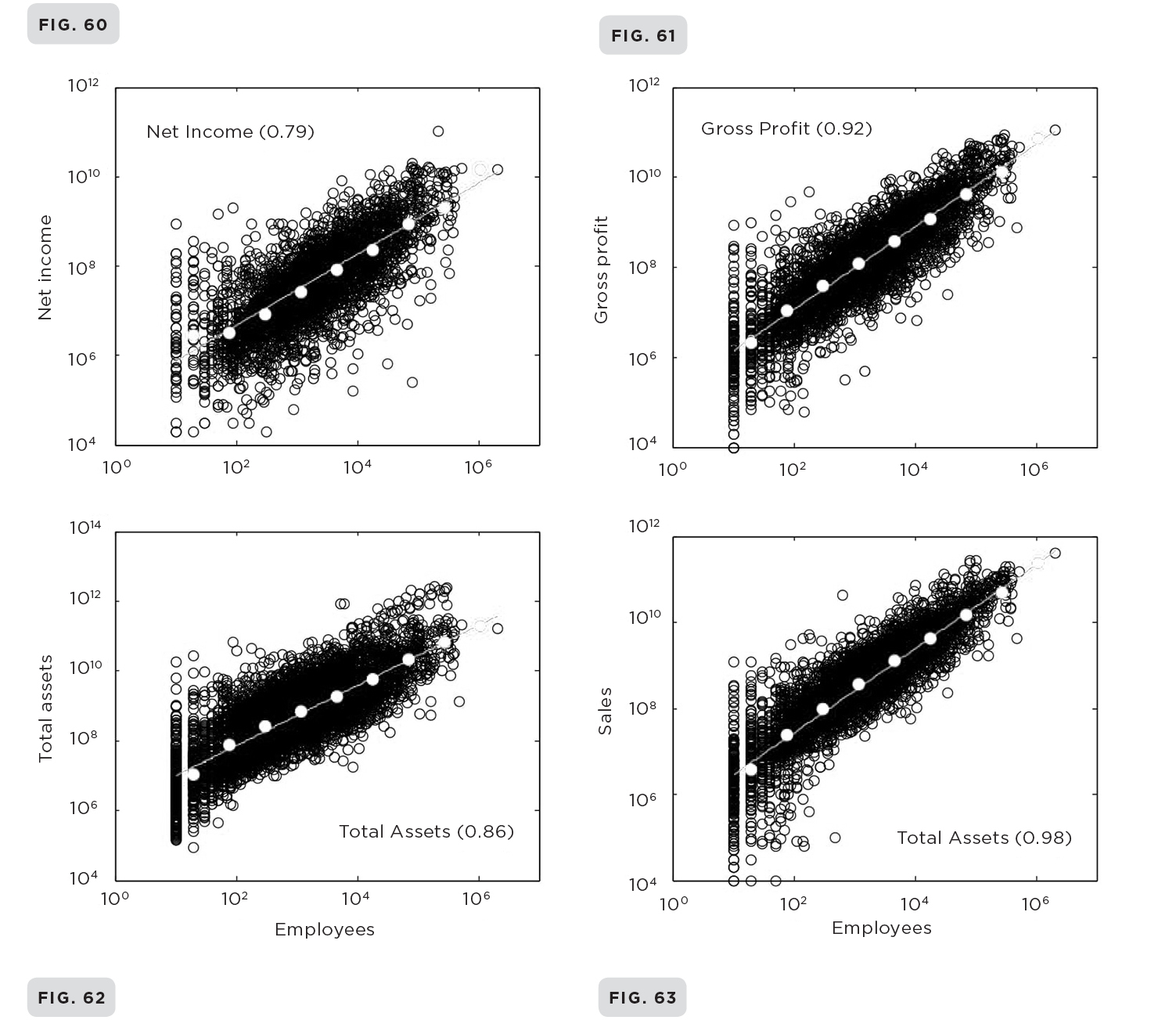

The initial results and conclusions of our investigation into the scaling of companies are very compelling. They provide a powerful basis for developing an understanding of their generic structure and life histories. Figures 60–63 show the sales, income, profit, and assets of all 28,853 companies plotted logarithmically against their number of employees. These are the dominant financial characteristics of any company and are standard measures of their fiscal health and dynamics. As these graphs clearly demonstrate, companies do indeed scale following simple power laws and as anticipated they do so with a much greater spread around their average behavior than for either cities or organisms. So in this statistical sense, companies are approximately scaled, self-similar versions of one another: Walmart is an approximately scaled-up version of a much smaller, modest-size company. Even after taking this greater variance into account, this scaling result reveals remarkable regularities in the size and dynamics of companies and is quite surprising given the tremendous variety of different business sectors, locations, and ages.

Before expounding further on this, it’s instructive to examine how scaling regularities are extracted from big data sets having a large variance such as these. A standard strategy is to bin the data into a series of equal intervals much like a histogram, and then take the average within each interval. This effectively averages over the fluctuations and reduces the large number of data points to a relatively small number, which is just the number of bins used to divide up the entire interval. The number of employees ranges by a factor of more than a million from the very smallest, mostly young companies with few employees up to giants like Walmart with more than a million. To illustrate the procedure, the data in Figures 60–63 have been binned into eight equal intervals each covering a single order of magnitude. Thus, the first bin includes all companies with fewer than 10 employees, the second all those with 10 to 100, the third all those with 100 to 1,000, and so on, the last bin containing all those with more than a million employees.

Income, profit, assets, and sales for all 28,853 publicly traded companies in the United States from 1950 to 2009 plotted logarithmically against their number of employees showing sublinear scaling with a substantial variance. The dotted line represents the result of the binning procedure explained in the text.

The six points resulting from averaging over each bin are shown as gray dots in the graph. They represent a highly coarse-grained reduction of the data, and as you can see follow a very good straight line supporting the idea that underlying the statistical spread is an idealized power law. Because the size and number of bins used is arbitrary, we could just as well have divided up the entire interval into ten, fifty, or one hundred bins rather than just eight, and tested whether the straight line remains robust against increasingly finer resolutions of the data. It does. Although binning is not a rigorous mathematical procedure, the stability of obtaining approximately the same straight-line fit using different resolutions lends strong support to the hypothesis that on average companies are self-similar and satisfy power law scaling. The graph in Figure 4 at the opening of the book is in fact the result of this binning procedure, as is the graph in Figure 41 taken from Axtell’s work on showing that companies follow Zipf’s law. These results strongly suggest that companies, like cities and organisms, obey universal dynamics that transcend their individuality and uniqueness and that a coarse-grained science of companies is conceivable.
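The binning procedure can be sketched in a few lines. This is an illustrative reconstruction rather than the code used for the analysis: the data are synthetic, the helper names `bin_by_decade` and `fit_line` are hypothetical, and averaging the logarithms within each bin is an assumption about how the average is taken.

```python
import math
import random
from collections import defaultdict

def bin_by_decade(employees, values):
    """Group points into order-of-magnitude bins of employee count and
    return one (mean log10 employees, mean log10 value) point per bin."""
    bins = defaultdict(list)
    for n, v in zip(employees, values):
        bins[math.floor(math.log10(n))].append((math.log10(n), math.log10(v)))
    points = []
    for decade in sorted(bins):
        xs, ys = zip(*bins[decade])
        points.append((sum(xs) / len(xs), sum(ys) / len(ys)))
    return points

def fit_line(points):
    """Ordinary least-squares slope and intercept through the binned points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Synthetic stand-in for company data: a power law with exponent 0.9
# multiplied by large log-normal scatter. All numbers here are made up.
rng = random.Random(1)
true_exponent = 0.9
employees = [10 ** rng.uniform(0, 6) for _ in range(20000)]
sales = [1e5 * n ** true_exponent * 10 ** rng.gauss(0, 0.5) for n in employees]

binned = bin_by_decade(employees, sales)
slope, intercept = fit_line(binned)   # slope estimates the scaling exponent
```

On a log-log plot the slope of the fitted line is the scaling exponent, so a slope below one corresponds to sublinear scaling. Repeating the fit with narrower bins is one way to check that the estimate is stable.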

Additional evidence supporting this discovery came from an unlikely source, namely the Chinese stock market. In 2012 Zhang Jiang, a young faculty member in the School of Systems Science at Beijing Normal University, joined our collaboration. Jake, as he is known to most of us, had visited SFI in 2010 and became enthusiastic about getting involved in the company project. He had access to a database similar to Compustat covering all Chinese companies participating in their emerging stock market. Following the collapse of the Cultural Revolution and the rise to power of Deng Xiaoping, economic reform led to the reestablishment of a securities market in China, and at the end of 1991, the Shanghai Stock Exchange opened for business.

When Jake analyzed the data, he found to our great satisfaction that Chinese companies scaled in a similar fashion to U.S. companies, as can be seen in Figures 64–67. This, however, came as something of a surprise considering that the Chinese market had been in operation for less than fifteen years. Apparently, in a vigorous fast-track setting, competitive “free” market dynamics are sufficiently potent for systematic trends to begin to emerge relatively quickly. This is no doubt related to the extraordinarily rapid pace at which the Chinese stock market and its overall economy have grown in such a short time. The Shanghai exchange is already the fifth largest in the world and in Asia is second only to Hong Kong’s. Its total market capitalization is $3.5 trillion, compared with more than $21 trillion for the New York Stock Exchange and $7 trillion for Hong Kong’s.

Comparison of the scaling of U.S. companies with those of China showing a similar behavior.

2. THE MYTH OF OPEN-ENDED GROWTH

A crucial aspect of the scaling of companies is that many of their key metrics scale sublinearly like organisms rather than superlinearly like cities. This suggests that companies are more like organisms than cities and are dominated by a version of economies of scale rather than by increasing returns and innovation. This has profound implications for their life history and in particular for their growth and mortality. As we saw in chapter 4, sublinear scaling in biology leads to bounded growth and a finite life span, whereas in chapter 8 we saw that the superlinear scaling of cities (and of economies) leads to open-ended growth.

Their sublinear scaling therefore suggests that companies also eventually stop growing and ultimately die, hardly the image that many CEOs would cherish. It’s actually not quite as simple as that because the prediction for the growth of companies is more subtle than just a simple extrapolation from biology. To explain this I am going to present a simplified version of how the general theory applies to companies focusing on the essential features that determine their growth and mortality.

The sustained growth of a company is ultimately fueled by its profits (or net income), where these are defined as the difference between sales (or total income) and total expenses; expenses include salaries, costs, interest payments, and so on. To continue growth over a prolonged period, companies must eventually return a profit, part of which is sometimes used to pay dividends to shareholders. Together with other investors, they in turn may buy additional stocks and bonds to help support the future health and growth of the company. However, to understand their generic behavior it is more transparent to ignore dividends and investments, which are primarily important for smaller, younger companies, and concentrate on profits, which are the dominant driver of growth for larger ones.

As we’ve seen, growth in both organisms and cities is fueled by the difference between metabolism and maintenance. Using that language, the total income (or sales) of a company can be thought of as its “metabolism” while expenses can be thought of as its “maintenance” costs. In biology, metabolic rate scales sublinearly with size, so as organisms increase in size the supply of energy cannot keep up with the maintenance demands of cells, leading to the eventual cessation of growth. On the other hand, the social metabolic rate in cities scales superlinearly, so as cities grow the creation of social capital increasingly outpaces the demands of maintenance, leading to faster and faster open-ended growth.

So how does this dynamic play out in companies? Intriguingly, companies manifest yet another variation on this general theme by following a path that sits at the cusp between organisms and cities. Their effective metabolic rate is neither sub- nor superlinear but falls right in the middle by being linear. This is illustrated in Figures 63 and 64, where sales are plotted logarithmically against the number of employees showing a best fit with a slope very close to one. Expenses, on the other hand, scale in a more complicated fashion: they start out sublinearly but, as companies become larger, eventually transition to becoming approximately linear. Consequently, the difference between sales and expenses, which is the driver of growth, also eventually scales approximately linearly.

This is good news because, mathematically, linear scaling leads to exponential growth and this is what all companies strive for. Furthermore, this also shows why, on average, the economy continues to expand at an exponential rate because the overall performance of the market is effectively an average over the growth performances of all its individual participating companies. Although this may be good news for the overall economy, it sets a major challenge for each individual company because each one has to keep up with an exponentially expanding market. So even if a company is growing exponentially (the good news), this may not be sufficient for it to survive unless its expansion rate is at least that of the market (the bad news). This primitive version of the “survival of the fittest” for companies is the essence of the free market economy.
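The step from linear scaling to exponential growth, and the reason it may still not be enough, can be made explicit with a short sketch. The symbols are assumptions introduced for illustration and are not notation from the analysis: $N$ is a measure of a company's size, $a$ its growth rate, $M$ the size of the overall market, and $r$ the market's growth rate. Suppose the profit available for growth is simply proportional to size.

```latex
\begin{align}
\frac{dN}{dt} &= a N
  &&\Longrightarrow\quad N(t) = N_0\, e^{a t}, \\
M(t) &= M_0\, e^{r t}
  &&\Longrightarrow\quad \frac{N(t)}{M(t)} = \frac{N_0}{M_0}\, e^{(a - r)\, t}.
\end{align}
```

The ratio in the second line shrinks whenever $a < r$, so a company can grow exponentially in absolute terms and still lose ground to the market.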

More good news is that the nonlinear scaling of maintenance expenses in younger companies, buoyed by investments and the ability to borrow large amounts relative to their size, fuels their rapid growth. Consequently, the idealized growth curve of companies has characteristics in common with classic sigmoidal growth in biology in that it starts out relatively rapidly but slows down as companies become larger and maintenance expenses transition to becoming linear. However, unlike biology, whose maintenance costs do not transition to linearity, companies do not cease growing but continue to grow exponentially, though at a more modest rate.

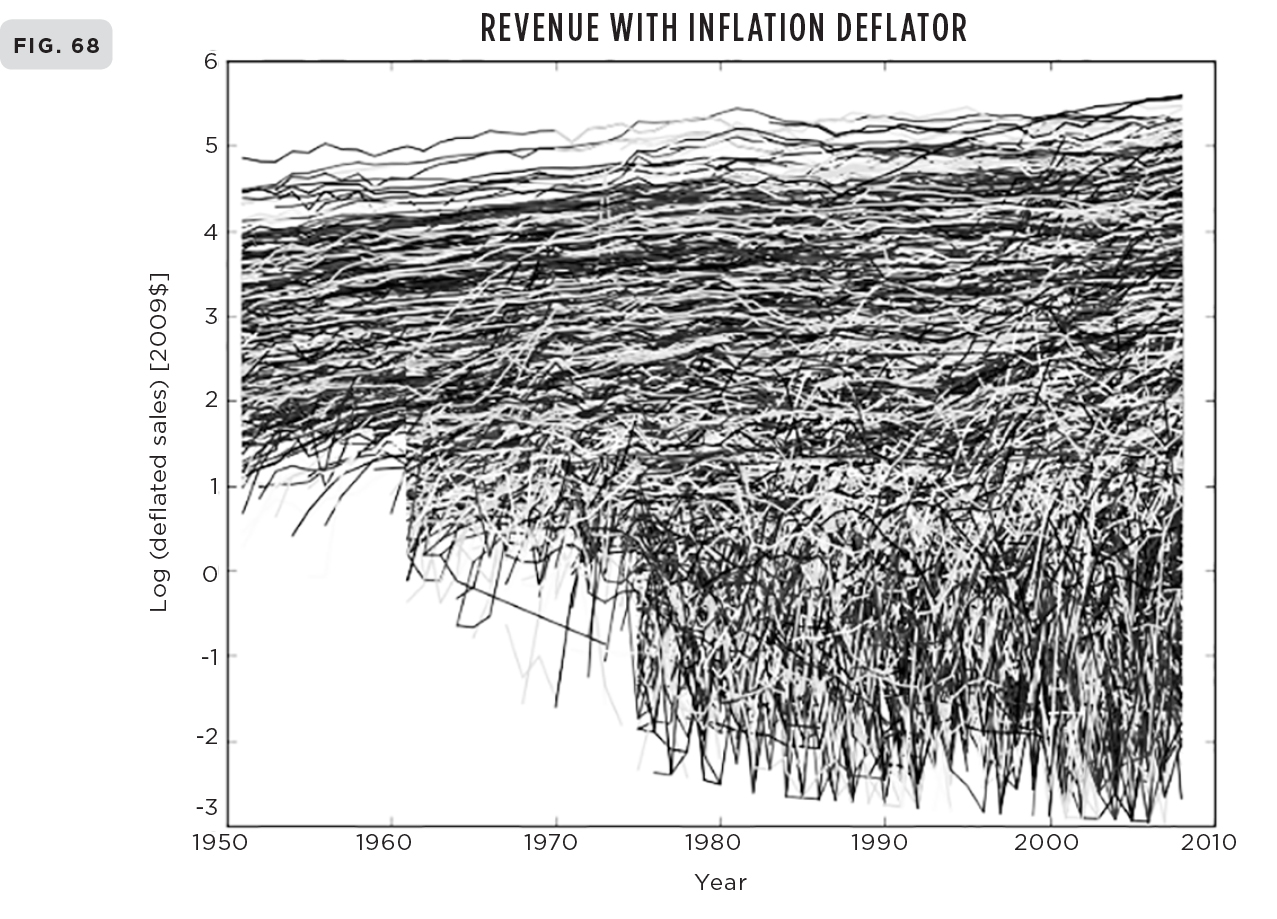

Let’s see how this scenario compares with data. Figure 68 is a wonderful graph showing the growth of sales for all 28,853 companies in the Compustat data set plotted together in real calendar time, adjusted for inflation. To get all of them onto a single manageable graph, the vertical axis representing sales is logarithmic. Despite being a “spaghetti” plot, the graph is surprisingly illuminating. The overall trend is clear: as predicted, many young companies shoot out of the starter’s block and grow rapidly before slowing down, while older, more mature ones that have survived continue growing but at a much slower rate. Furthermore, the upward trends of these older, slower-growing companies all follow an approximate straight line with similar shallow slopes. On this semilogarithmic plot, where the vertical axis (sales) is logarithmic but the horizontal one (time) is linear, a straight line means mathematically that sales are growing exponentially with time. Thus, on average, all surviving companies eventually settle down to a steady but slow exponential growth, as predicted.
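To see why a straight line on a semilogarithmic plot means exponential growth, one can fit the logarithm of sales against time and read the growth rate off the slope. The following is a minimal sketch on made-up numbers; the function name `exponential_growth_rate` and the 3 percent rate are assumptions for illustration only.

```python
import math

def exponential_growth_rate(years, sales):
    """Least-squares slope of ln(sales) against time.

    If sales grow as S0 * exp(g * t), then ln(sales) is a straight line
    in t and its slope is the growth rate g per year.
    """
    logs = [math.log(s) for s in sales]
    n = len(years)
    mean_t = sum(years) / n
    mean_y = sum(logs) / n
    sxx = sum((t - mean_t) ** 2 for t in years)
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(years, logs))
    return sxy / sxx

# A hypothetical mature company growing at a steady 3 percent a year.
years = list(range(1950, 2010))
sales = [50.0 * math.exp(0.03 * (year - 1950)) for year in years]
rate = exponential_growth_rate(years, sales)
```

A shallow slope on such a plot therefore corresponds to a small but steady percentage growth rate.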

This is very encouraging, but there’s a potential pitfall that becomes apparent when the growth of each company is measured relative to the growth of the overall market. In that case, as can be clearly seen in Figure 70 where the overall growth of the market has been factored out, all large mature companies have stopped growing. Their growth curves when corrected for both inflation and the expansion of the market now look just like typical sigmoidal growth curves of organisms in which growth ceases at maturity, as illustrated in Figures 15–18 of chapter 4. This close similarity with the growth of organisms when viewed in this way provides a natural segue into whether this similarity extends to mortality and whether, like us, all companies are destined to die.
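One simple way to factor out the growth of the market is to express each company's sales as a fraction of total sales in the same year. This is a sketch under that assumption; the exact normalization behind the figure is not specified here, and the function name and toy numbers are hypothetical.

```python
def relative_to_market(sales_by_company):
    """Divide each company's yearly sales by the market total for that year."""
    years = sorted({year for series in sales_by_company.values() for year in series})
    market_total = {
        year: sum(series.get(year, 0.0) for series in sales_by_company.values())
        for year in years
    }
    return {
        name: {year: value / market_total[year] for year, value in series.items()}
        for name, series in sales_by_company.items()
    }

# Toy market: one mature company growing at exactly the market rate.
years = range(2000, 2010)
toy_market = {
    "Mature": {y: 100.0 * 1.03 ** (y - 2000) for y in years},
    "Everyone else": {y: 900.0 * 1.03 ** (y - 2000) for y in years},
}
shares = relative_to_market(toy_market)
```

In this toy market the mature company's sales rise every year, yet its share stays fixed at one tenth, which is the sense in which a company growing only as fast as the market has stopped growing.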

3. THE SURPRISING SIMPLICITY OF COMPANY MORTALITY

After growing rapidly in their youth, almost all companies with sales over about $10 million end up floating on top of the ripples of the stock market. Of these, many operate with their metaphorical noses just above the surface. This is a precarious situation because if a big wave comes along they may well drown. Even with profits growing exponentially, let alone if they are suffering losses, companies become vulnerable if they are unable to keep up with the growth of the market. This is greatly exacerbated if a company is not sufficiently robust to withstand the continual ups and downs inherent in the market as well as in its own finances. A sizable fluctuation in the market or some unexpected external perturbation or shock at the wrong time can be devastating to a company whose sales and expenses are finely balanced. This may lead to contraction and decline from which a company might recover, but in severe cases it can be devastating and lead to its demise.

(68) “Spaghetti” graph showing the growth of sales for all 28,853 publicly traded companies plotted together in real time and adjusted for inflation; notice the rapid “hockey stick” rise of small, younger companies versus the relative slow growth of larger, mature ones. (69) Growth curves of a sampling of the oldest and largest companies showing their relatively slow growth; also shown is Walmart, which is a much younger company but whose sales leveled off to similar values after a rapid rise.

(70) “Spaghetti” graph showing the growth of sales for all 28,853 publicly traded companies relative to the expansion of the overall market plotted together in real time, corrected for inflation. When adjusted for the expansion of the market, the largest companies cease growing.

This sequence of events probably sounds familiar because it’s not so very different from the process that leads to our own death. We, too, are finely balanced between metabolism and maintenance costs, a condition biologists refer to as homeostasis. The gradual buildup of unrepaired damage resulting from the wear and tear inherent in the process of living makes us less resilient and increasingly vulnerable to fluctuations and perturbations as we age. A case of the flu or pneumonia or a heart attack or stroke that might have been handled in youth and throughout midlife is often fatal once we have reached “old age.” Ultimately, we reach a stage where even a small perturbation such as a minor cold or heart flutter can lead to death.

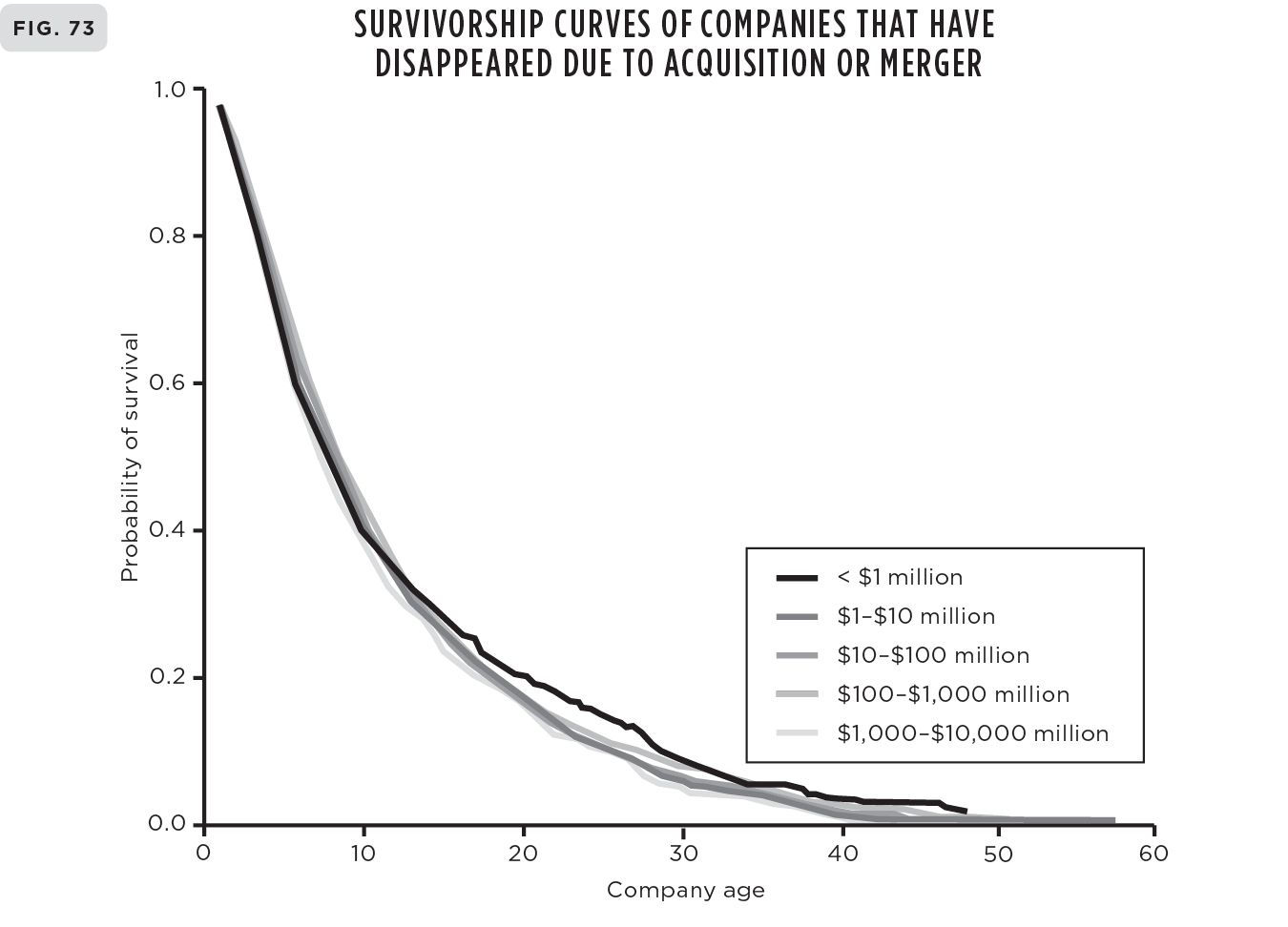

While this image provides a useful metaphor for the mortality of companies, it represents only part of the picture. To dig a little deeper we must first define what we mean by death of a company because many of them disappear by mergers or acquisitions rather than by liquidating or going bankrupt. A useful definition is to use sales as the indicator of a company’s viability, the idea being that if it’s metabolizing then it’s alive. Thus birth is defined as the time when a company first reports sales and death as the time when it ceases to do so. With this definition companies may die through a variety of processes: they may split, merge, or liquidate as economic and technological conditions change. While liquidation is often responsible for the death of companies, a much more common cause is their disappearance through mergers and acquisitions.

Of the 28,853 companies that have traded on U.S. markets since 1950, 22,469 (78 percent) had died by 2009. Of these 45 percent were acquired by or merged with other companies, while only about 9 percent went bankrupt or were liquidated; 3 percent privatized, 0.5 percent underwent leveraged buyouts, 0.5 percent went through reverse acquisitions, and the remainder disappeared for “other reasons.”

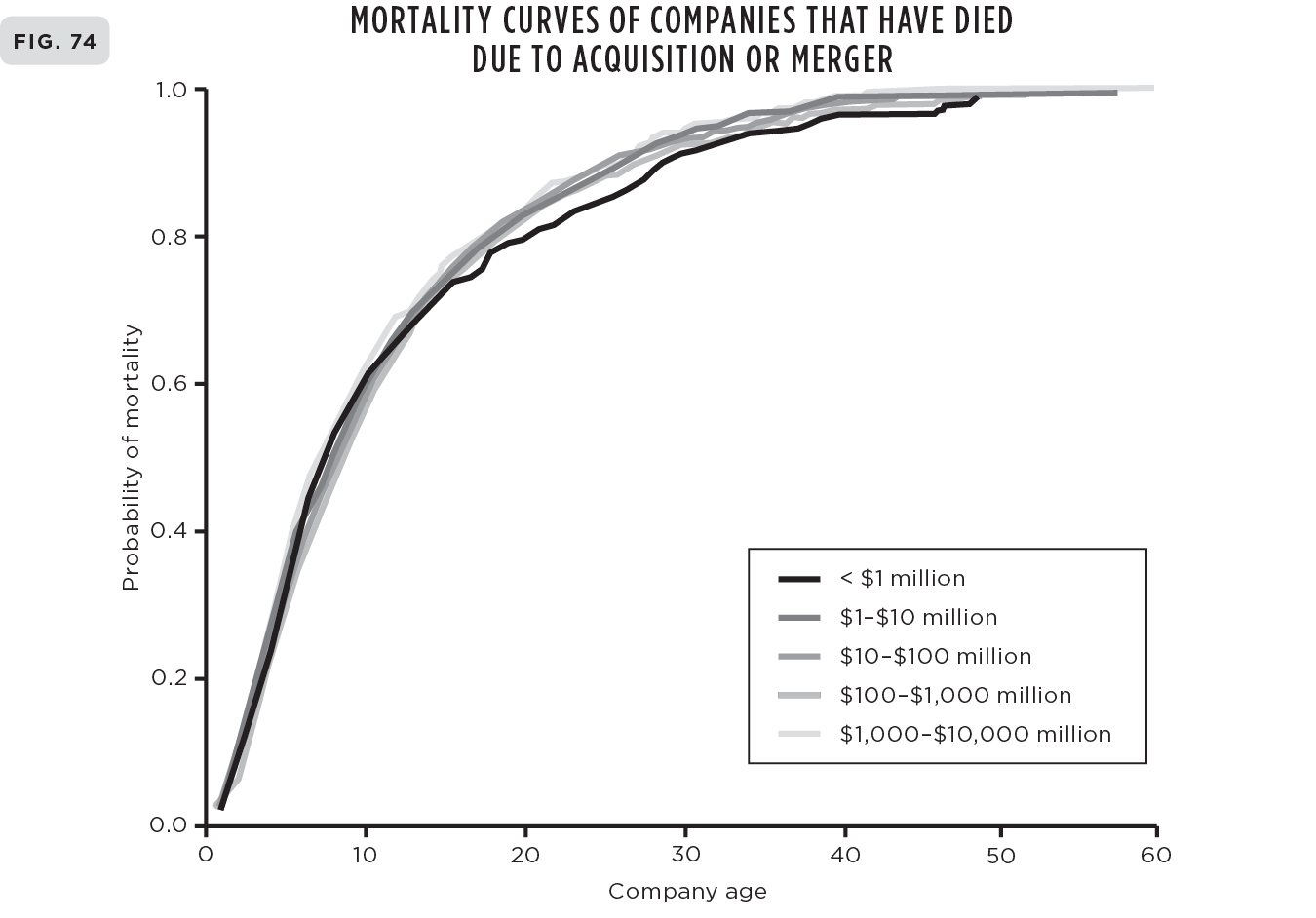

Figures 71–74 show the survivorship and mortality curves for companies that were born and died within the period covered by the data set (1950–2009) as a function of how long they lived.6 The curves have been separated into bankruptcies and liquidations on the one hand and into acquisitions and mergers on the other, and then further deconstructed according to the size of their sales. As can clearly be seen, the general structure of these curves is almost the same regardless of how the data are sliced, even when companies are separated into individual business sectors. In all cases the number of survivors falls rapidly immediately following their initial public offering, with fewer than 5 percent remaining alive after thirty years. Similarly, the mortality curves show that the number that have died reaches almost 100 percent within fifty years, with almost half of them having already disappeared in less than ten. It’s tough being a company! The survival curves are well approximated by a simple exponential as shown in Figure 75, where the number of companies that have survived is plotted logarithmically versus their age; plotted this way, exponentials appear as straight lines.

You might have thought that these results would depend sensitively on whether death occurred via mergers and acquisitions rather than from bankruptcies and liquidations. However, as you can see, they both follow very similar exponential survival curves with only slightly different values for their mortality. One might also have expected the results to depend on which business sector a company is in. The dynamics and competitive market forces would seem to be quite different, for instance, in the energy sector compared with IT, transportation, or finance. Surprisingly, however, all business sectors show similar characteristic exponential survival curves with similar timescales: no matter which sector or what the stated cause is, only about half of the companies survive for more than ten years.

This is consistent with an analysis showing that companies scale in approximately the same way when broken down into separate business categories. Within each sector, power laws are obtained having exponents close to those found for the entire cohort of companies in the scaling plots presented earlier in this chapter. In other words, the general dynamics and overall life history of companies are effectively independent of the business sector in which they operate. This strongly suggests that there is indeed a universal dynamic at play that determines their coarse-grained behavior, independent of their commercial activity or whether they are eventually going to go bankrupt or merge with or be bought by another company. In a word, this strongly supports the idea of a quantitative science of companies.

(71–74) Survivorship and mortality curves for U.S. publicly traded companies between 1950 and 2009 separated into bankruptcies and liquidations and acquisitions and mergers, and further deconstructed into different-size classes according to sales. Note how little variation there is among them. (75) Number of companies (N) plotted logarithmically versus their life span (t) showing classic exponential decay and therefore a constant mortality rate, as indicated by the straight line.

This is really quite amazing. After all, when we think of the birth, death, and general life history of companies as they struggle to establish and maintain themselves in the marketplace dealing with the vagaries, uncertainties, and unpredictability of economic life and the myriad specific decisions and accidents that led to the successes and failures that preceded their death, it’s hard to believe that collectively they were following such simple general rules. This revelation echoes the surprise that organisms, ecosystems, and cities are likewise subject to generic constraints, despite the apparent uniqueness and individuality of their life histories.

Exponential survival curves similar to those exhibited by companies are manifested in many other collective systems such as bacterial colonies, animals and plants, and even the decay of radioactive materials. It is also believed that the mortality of prehistoric humans followed these curves before they became sedentary social creatures reaping the benefits derived from community structures and social organization. Our modern survival curve has evolved from being a classic exponential to developing a long plateau stretching over fifty years, as illustrated in Figure 25 in chapter 4, showing that we now live much longer on average than our hunter-gatherer forebears even though our maximum life span has remained pretty much what it always was.

What is the special property of exponentials that they describe the decay of so many disparate systems? It’s simply that they arise whenever the death rate at any given time is directly proportional to the number that are still alive. This is equivalent to saying that the percentage of survivors that die within equal slices of time at any age remains the same. A simple example will make this clear: taking one year as the time slice, this says that the percentage of five-year-old companies that die before they reach six years old is the same as the percentage of fifty-year-old companies that die before they reach fifty-one. In other words: the risk of a company’s dying does not depend on its age or size.
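The argument of this paragraph can be written compactly. In the sketch below, $N(t)$ is the number of companies still alive at age $t$ (the quantities plotted in Figure 75), while $N_0$ for the initial number and $\lambda$ for the constant mortality rate are symbols introduced here purely for illustration:

$$
\frac{dN}{dt} = -\lambda N
\quad\Longrightarrow\quad
N(t) = N_0\, e^{-\lambda t},
\qquad
\ln N(t) = \ln N_0 - \lambda t .
$$

The last form is why the curve appears as a straight line when $N$ is plotted logarithmically against $t$. The fraction of survivors that die within a time slice $\Delta t$ is then

$$
\frac{N(t) - N(t+\Delta t)}{N(t)} = 1 - e^{-\lambda\,\Delta t},
$$

which contains no $t$ at all: under this idealization the percentage lost between ages five and six is the same as that lost between fifty and fifty-one.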

A lingering issue of possible concern is that the data cover only sixty years, so companies older than this are automatically excluded. Actually, it’s worse than this because the analysis includes only those companies that were born and died in the time window between 1950 and 2009, thereby excluding all those that were born before 1950 and/or were still alive in 2009. This could clearly lead to a systematic bias in the estimates of life expectancy. A more complete analysis therefore needs to include these so-called censored companies, whose life spans are at least as long as and likely longer than the period over which they appear in the data set. This actually involves a sizable number of companies: in the sixty years covered, 6,873 firms were still alive at the end of the window in 2009. Fortunately, there is a well-established sophisticated methodology, called survival analysis, that has been developed precisely for addressing this issue.

Survival analysis was developed in medicine in order to estimate survival probabilities for patients who have undergone therapeutic interventions under test conditions. These tests have necessarily to be conducted over a limited time period, leading to the problem we face here, namely, that many subjects die after the test period has ended. The technique commonly used, called the Kaplan-Meier estimator, employs the entire data set and optimizes probabilities assuming that each death event is statistically independent of every other death.7
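To make the idea concrete, here is a minimal sketch of the estimator in its standard product-limit form. It is an illustration only, not the code used in the study; the function name `kaplan_meier` and the toy cohort are hypothetical and are not Compustat data. A censored company is one that was still alive when observation stopped, so it counts among those at risk up to that time but never contributes a death.

```python
from collections import Counter

def kaplan_meier(durations, observed):
    """Return [(t, S(t))] at each time where at least one death was observed.

    durations: years each company was followed
    observed:  True if a death was seen at that time, False if censored
               (still alive when the observation window closed)
    """
    deaths = Counter(t for t, d in zip(durations, observed) if d)
    exits = Counter(durations)   # deaths and censorings both leave the risk set
    at_risk = len(durations)
    survival = 1.0
    curve = []
    for t in sorted(exits):
        d = deaths.get(t, 0)
        if d:
            # Multiply by the fraction of those at risk that survive time t
            survival *= 1.0 - d / at_risk
            curve.append((t, survival))
        at_risk -= exits[t]
    return curve

# Hypothetical toy cohort (illustrative numbers only)
durations = [2, 3, 3, 5, 8, 8, 12, 15]
observed = [True, True, False, True, True, False, True, False]

for t, s in kaplan_meier(durations, observed):
    print(f"t = {t:>2} years  S(t) = {s:.3f}")
```

Simply discarding the censored companies would shrink the pool at risk and so overstate mortality; keeping them at risk until they leave observation is what allows the whole data set to be used.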

A detailed analysis using this technique was carried out for the complete cohort of companies in the Compustat data set, including all those that were previously censored, with the result that there was only a modest change from the previous censored estimates. The half-life of U.S. publicly traded companies was found to be close to 10.5 years, meaning that half of all companies that began trading in any given year have disappeared in 10.5 years.
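A half-life translates directly into a constant yearly mortality rate if one assumes the survival curve is a pure exponential. The short sketch below does that conversion for the 10.5-year figure; the names `rate` and `surviving_fraction` are illustrative, and the idealized curve is an assumption rather than a reproduction of the published fit.

```python
import math

HALF_LIFE_YEARS = 10.5  # figure quoted in the text

# Constant mortality rate implied by an exponential survival curve
rate = math.log(2) / HALF_LIFE_YEARS

def surviving_fraction(age_years):
    """Fraction of a cohort still alive at a given age, assuming pure exponential decay."""
    return math.exp(-rate * age_years)

# Fraction of the companies alive at the start of a year that die during it
annual_death_fraction = 1 - surviving_fraction(1)

print(f"mortality rate: {rate:.3f} per year")
print(f"fraction dying in any one year: {annual_death_fraction:.1%}")
for age in (10.5, 21, 31.5):
    print(f"alive at {age} years: {surviving_fraction(age):.3f}")
```

Under this assumption a little over 6 percent of the surviving companies disappear each year, whatever their age, and the cohort halves every 10.5 years.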

Most of the hard work on this was done by an undergraduate intern, Madeleine Daepp, who joined us under the aegis of a wonderful program called Research Experience for Undergraduates (REU) funded primarily by the NSF. This provides support for undergraduates to spend a summer working in real-life research at institutions across the entire spectrum of scientific activity. At SFI we usually have about ten such bright young minds on-site, all of whom are treated as equal members of the institute and who work closely with individual researchers. It’s a great experience for both us and them. Madeleine was a junior in mathematics at Washington University in St. Louis when she joined us and worked under the direct supervision of Marcus Hamilton. It’s hard to complete such a project from scratch in just ten weeks, so Madeleine returned several times over the ensuing three years before the work was eventually completed and a successful paper published. I was delighted to learn just recently that she has been accepted to the PhD program in urban planning at MIT, which is one of the best in the world. I expect to hear great things of her in the future.

The survival analysis technique used for dealing with “incomplete observations” such as we have here was invented in 1958 by two statisticians, Edward Kaplan and Paul Meier. It has since been extended to areas outside of medicine and used to estimate, for example, how long people can expect to remain unemployed after a job loss or how long it takes for machine parts to fail. Amusingly, Kaplan and Meier each submitted similar but independent papers to the prestigious Journal of the American Statistical Association for publication, and a wise editor persuaded them to combine them into a single paper. This has since been cited in other scholarly papers more than 34,000 times, which is an extremely large number for an academic paper. For instance, Stephen Hawking’s most famous paper, “Particle Creation by Black Holes,” has been cited fewer than 5,000 times. Depending on the field, most papers are fortunate to receive even 25 citations. Several of my own that I thought were pretty damn good have been cited fewer than 10 times, which is pretty discouraging, although I am a coauthor of two of the most cited papers in ecology, each having more than 3,000 citations.

4. REQUIESCANT IN PACE

Although there are significant differences, it’s hard not to be struck by how similar the growth and death of companies and organisms are when viewed through the lens of scaling—and how dissimilar they both are to cities. Companies are surprisingly biological and from an evolutionary perspective their mortality is an important ingredient for generating innovative vitality resulting from “creative destruction” and “the survival of the fittest.” Just as all organisms must die in order that the new and novel may blossom, so it is that all companies disappear or morph to allow new innovative variations to flourish: better to have the excitement and innovation of a Google or Tesla than the stagnation of a geriatric IBM or General Motors. This is the underlying culture of the free market system.

The great turnover of companies and especially the continual churning of mergers and acquisitions are integral to the market process. And of course this means that the Googles and Teslas, which may seem invincible now, will themselves eventually fade away and disappear. From this point of view we should not lament the passing of any company, since it’s an essential component of economic life; we should only mourn and be concerned about the fate of the people who often suffer when companies disappear, whether they are the workers, management, or even the owners. If only we could tame the potential brutality and greed of the survival of the fittest and soften some of its more egregious consequences by formulating a magic algorithm for how to balance the classic tension between regulation, government intervention, and uncontrolled rampant capitalism. This tension painfully played itself out during the 2008 financial crisis, when certain incompetent if not duplicitous corporations were deemed “too big to fail” and we witnessed the struggle between the death throes of corporations that probably should have died and the desire to save jobs and protect the lives of workers.

It may be a platitude, but it’s nevertheless true that nothing stays the same. Both Standard & Poor’s and the business magazine Fortune construct ongoing lists of the five hundred most successful companies, and there is a certain prestige associated with being named on both of these lists. Richard Foster, who was for twenty-two years a director and senior partner of the well-known business consultants McKinsey & Company, analyzed the tenure of companies on these lists and discovered that it has been regularly decreasing over the past sixty years. For example, he found that in 1958 a company could expect to stay on the S&P 500 for about sixty-one years, whereas today it’s more like eighteen. Of the Fortune 500 companies in 1955, only sixty-one were still on the list in 2014. That’s only a 12 percent survival rate, the other 88 percent having gone bankrupt, merged, or fallen from the list because of underperformance. More poignant, perhaps, is that most of those on the list in 1955 are unrecognizable and completely forgotten today; how many remember Armstrong Rubber or Pacific Vegetable Oil?

In 2000 Foster wrote an influential best-seller on business, aptly titled Creative Destruction.8 He was much taken by the ideas on complexity being developed at the Santa Fe Institute, so much so that he joined the board of trustees and persuaded McKinsey to fund a professorship in finance which was held by Doyne Farmer. I got to know him when I first engaged with SFI in the late 1990s. He was convinced that the scaling and network ideas that we had been developing in biology could provide major insights into how companies function. He pointed out that there were no quantitative, mechanistic theories of companies, and that because they are very often compared to organisms, this approach might provide a novel way of developing such a theory. He generously offered to give me access to the large McKinsey database on companies and to help support a postdoc to carry out the research. I was still at Los Alamos at the time, engaged with running high energy physics and knew even less about companies than I do now. Furthermore, the research in biology was still at quite an early stage and I was not convinced that it could readily be extended to companies, so despite being flattered by the offer, I did not pursue it. In retrospect that was probably the right decision at the time, but it speaks volumes about Dick Foster that he had the foresight to see that the scaling approach might provide a useful basis for understanding companies. It took more than a decade of extensive work on organisms, ecosystems, and cities before we were in a position to address Dick’s challenge.

Unfortunately, it’s not a straightforward exercise to relate the observations regarding the tenure of companies on the S&P and Fortune 500 lists to their actual life spans without a detailed analysis that takes into account their age and whether or not they died. Nevertheless, the findings dramatically illustrate the fragility of seemingly powerful companies and are also a striking example of the speeding up of socioeconomic life.

The survival analyses tell us that there should be very few very old companies. An extrapolation of the theory and data predicts that the probability of a company’s lasting for one hundred years is only about forty-five in a million, and for it to last two hundred years it’s a minuscule one in a billion. These numbers should not be taken too seriously, but they do give us a sense of the scale of long-term survivability and provide an interesting insight into the characteristics of companies that have remained viable for hundreds of years. There are at least 100 million companies in the world, so if they all obey similar dynamics, then one would expect only about 4,500 to survive for a hundred years, but none for two hundred. However, it is well known that there are lots of companies, especially in Japan and Europe, that have lived for hundreds of years. Unfortunately, there are no comprehensive data sets or any systematic statistical analyses of these remarkable outliers, though there are plenty of anecdotal stories. Nevertheless, we can learn something instructive about the aging of companies from the general characteristics of these very long-lived outliers.
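The expected counts quoted above are a one-line multiplication, sketched below using only the figures given in the text. The final step is a consistency check that rests on an assumption: if the mortality rate really is constant, surviving two hundred years is like surviving one hundred years twice in a row, so the two-hundred-year probability should be roughly the square of the hundred-year one. All variable names are illustrative.

```python
# Figures quoted in the text
P_100_YEARS = 45e-6        # "forty-five in a million"
P_200_YEARS = 1e-9         # "one in a billion"
WORLD_COMPANIES = 100e6    # "at least 100 million"

expected_at_100 = WORLD_COMPANIES * P_100_YEARS
expected_at_200 = WORLD_COMPANIES * P_200_YEARS

# Assumption: constant mortality rate, so P(200) is roughly P(100) squared
p_200_if_exponential = P_100_YEARS ** 2

print(f"expected companies reaching 100 years: {expected_at_100:,.0f}")
print(f"expected companies reaching 200 years: {expected_at_200:.1f}")
print(f"P(100) squared: {p_200_if_exponential:.2e}")
```

The expected number of two-hundred-year-old companies comes out as a fraction of a single company, which is the sense in which the extrapolation predicts none. Squaring the hundred-year probability gives about two in a billion, the same order of magnitude as the quoted figure.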

Most of them are of relatively modest size, operating in highly specialized niche markets, such as ancient inns, wineries, breweries, confectioners, restaurants, and the like. These have quite a different character from the kinds of companies we have been considering in the Compustat data set and the S&P and Fortune 500 lists. In contrast to most of those, these outliers have survived not by diversifying or innovating but by continuing to produce a perceived high-quality product for a small, dedicated clientele. Many have maintained their viability through reputation and consistency and have barely grown. Interestingly, most of them are Japanese. According to the Bank of Korea, of the 5,586 companies that were more than two hundred years old in 2008, over half (3,146 to be precise) were Japanese, 837 German, 222 Dutch, and 196 French. Furthermore, 90 percent of those that were more than one hundred years old had fewer than three hundred employees.

There are some wonderful examples of these geriatric survivors. For instance, the oldest shoemaker in Germany is the Eduard Meier company, founded in Munich in 1596, which became purveyors to the Bavarian aristocracy. It still has only a single store that sells, though no longer makes, quality upscale shoes. The oldest hotel in the world according to Guinness World Records is Nishiyama Onsen Keiunkan in Hayakawa, Japan, which was founded in 705. It has been in the same family for fifty-two generations and even in its modern incarnation has only thirty-seven rooms. Its main attraction seems to be its hot springs. The world’s oldest company was purported to be Kongo Gumi, founded in Osaka, Japan, in 578. It was also a family business going back many generations, but after almost 1,500 years of continuously being in business it went into liquidation in 2006 and was purchased by the Takamatsu Corporation. And what was the niche market that Kongo Gumi cornered for 1,429 years? Building beautiful Buddhist temples. But sadly, with the changes in Japanese culture following the Second World War the demand for temples dried up and Kongo Gumi was unable to adapt fast enough.

5. WHY COMPANIES DIE, BUT CITIES DON’T

The power of scaling is that it can potentially reveal the underlying principles that determine the dominant behavior of highly complex systems. For organisms and cities this fruitfully led to a network-based theory for quantitatively understanding the principal features of their dynamics and structure, from which many of their salient properties could be understood. In both cases we know quite a bit about their network structures, whether they are circulatory systems, road networks, or social systems. On the other hand, despite an extensive literature on the subject, we know much less about the network structures of companies other than that they are for the most part hierarchical. Standard company organizational charts are typically top-down, having a treelike structure superficially suggestive of a classic self-similar fractal. That would explain why companies manifest power law scaling.

Unfortunately, however, we don’t have extensive quantitative data on these organizational networks comparable to what we have for cities and organisms. For instance, we don’t typically know how many people are functioning at each level, how much of the company’s finances and resources are flowing between them, and how much information they are exchanging. And even if some of this were available, we need to have it across the entire size range of companies. Furthermore, it is not at all clear that the “official” organizational charts of companies represent the actuality of what the real operational network structures are. Who is really communicating with whom, how often are they doing it, how much do they exchange, and so on? What is really needed is access to all of the company’s communication channels, such as the phone calls, the e-mails, the meetings, et cetera, quantified analogously to the cell phone data we used for helping to develop a science of cities. It’s unlikely such comprehensive data exist and even less likely that we would ever gain ready access to them. Companies are quite wary of exposing themselves to outside investigators unless they are paying them exorbitant consultant fees, presumably so that they can maintain control. But if you want to understand how a company really functions, or want to develop a serious science of companies, then this is the kind of data that is ultimately needed.

Consequently, we don’t have a well-developed mechanistic framework analogous to the network-based theory for organisms and to a lesser extent cities for analytically understanding the dynamics and structure of companies and, in particular, for calculating the values of their exponents. Nevertheless, just as we have been able to construct a theory of their growth trajectories, we can address the question of their mortality by extrapolating from what we have already learned.

I emphasized earlier that most companies operate close to a critical point where sales and expenses are finely balanced, making them potentially vulnerable to fluctuations and perturbations. A major shock at the wrong time can lead to their demise. Younger companies, which are buffered against this by an initial capital endowment, become particularly vulnerable once this initial infusion is expended if they are unable to turn a significant profit. This is sometimes referred to as the liability of adolescence.

The fact that companies scale sublinearly, rather than superlinearly like cities, suggests that they epitomize the triumph of economies of scale over innovation and idea creation. Companies typically operate as highly constrained top-down organizations that strive to increase efficiency of production and minimize operational costs so as to maximize profits. In contrast, cities embody the triumph of innovation over the hegemony of economies of scale. Cities aren’t, of course, driven by a profit motive and have the luxury of being able to balance their books by raising taxes. They operate in a much more distributed fashion, with power spread across multiple organizational structures from mayors and councils to businesses and citizen action groups. No single group has absolute control. As such, they exude an almost laissez-faire, freewheeling ambience relative to companies, taking advantage of the innovative benefits of social interactions whether good, bad, or ugly. Despite their apparent bumbling inefficiencies, cities are places of action and agents of change relative to companies, which by and large usually project an image of stasis unless they are young.

To achieve greater efficiency in the pursuit of greater market share and increased profits, companies stereotypically add more rules, regulations, protocols, and procedures at increasingly finer levels of organization, resulting in the increased bureaucratic control that is typically needed to administer, manage, and oversee their execution. This is often accomplished at the expense of innovation and R&D (research and development), which should be major components of a company’s insurance policy for its long-term future and survivability. It’s difficult to obtain meaningful data on “innovation” in companies because it’s not straightforward to quantify. Innovation is not necessarily synonymous with R&D, especially as there are significant tax advantages in labeling all sorts of extraneous activities as R&D expenses. Nevertheless, from analyzing the Compustat data set we found that the relative amount allocated to R&D systematically decreases as company size increases, suggesting that support for innovation does not keep up with bureaucratic and administrative expenses as companies expand.

The increasing accumulation of rules and constraints is often accompanied by stagnating relationships with consumers and suppliers that lead companies to become less agile and more rigid and therefore less able to respond to significant change. In cities we saw that one very important hallmark is that they become ever more diverse as they grow. Their spectrum of business and economic activity is incessantly expanding as new sectors develop and new opportunities present themselves. In this sense cities are prototypically multidimensional, and this is strongly correlated with their superlinear scaling, open-ended growth, and expanding social networks—and a crucial component of their resilience, sustainability, and seeming immortality.

While the dimensionality of cities is continually expanding, the dimensionality of companies typically contracts from birth through adolescence, eventually stagnating or even further contracting as they mature and move into old age. When a company is still young and competing for a place in the market, there is a youthful excitement and enthusiasm as new products are developed and ideas bubble up, some crazy and unrealistic, some grandiose and visionary. But market forces are at work so that only a few of these are successful as the company gains a foothold and an identity. As it grows, the feedback mechanisms inherent in the market lead to a narrowing of its product space and inevitably to greater specialization. The great challenge for companies is how to balance the positive feedback from market forces, which strongly encourage staying with “tried and true” products, against the long-term strategic need to develop new areas and commodities that may be risky and won’t give immediate return.

Most companies tend to be shortsighted, conservative, and not very supportive of innovative or risky ideas, happy to stay almost entirely with their major successes while the going is good because these “guarantee” short-term returns. Consequently, they tend toward becoming more and more unidimensional. This reduction in diversity coupled with the predicament described earlier in which companies sit near a critical point is a classic indicator of reduced resilience and a recipe for eventual disaster. By the time a company realizes its condition it is often too late. Reconfiguring and reinventing become increasingly difficult and expensive. So when a large enough unanticipated fluctuation, perturbation, or shock comes along the company becomes seriously at risk and ripe for a takeover, buyout, or simply going belly-up. In a word, it is, as the Mafiosi put it, il bacio della morte—the kiss of death.9