6

“What” and “Where” in Visual Attention: Evidence from the Neglect Syndrome

Martha J. Farah, Marcie A. Wallace and Shaun P. Vecera

Department of Psychology, Carnegie-Mellon University, Pittsburgh, Pennsylvania, USA

Attention and Representation

Psychologists often speak of “allocating attention to stimuli”, but in fact this manner of speaking obscures an important fact about attention. Attention is not allocated to stimuli, but to internal representations of stimuli. Although it may seem pedantic to emphasise such an obvious point, doing so alerts us to the central role of representation in any theory of attention. One cannot explain attention without having specified the nature of the visual representations on which it operates.

The goal of this chapter is to characterise the representations of visual stimuli to which attention is allocated. Two general alternatives will be considered. The first is that attention is allocated to regions of an array-format representation of the visual field. According to this view, when some subset of the contents of the visual field is selected for further, attention-demanding perceptual processing, the attended subset is spatially delimited. In other words, stimuli are selected a location at a time. The second alternative is that attention is allocated to a representation that makes explicit the objects in the visual field, but not necessarily their locations, and the attended subset is therefore some integral number of objects. According to this alternative, stimuli are selected an object at a time.

In this chapter, we will review what is known about the representation of locations and objects in visual attention from studies of normal subjects and parietal-damaged subjects. The latter include unilaterally damaged subjects, who have neglect, and bilaterally damaged subjects, who have a disorder known as “simultanagnosia”.

Attention to Locations and Objects: A Brief Review of Evidence from Normal Subjects

Evidence for Location-Based Attention

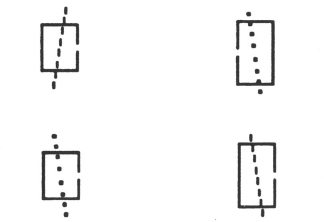

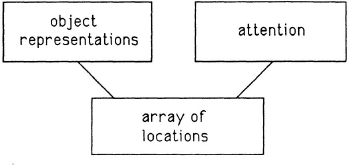

Most research on visual attention in normal subjects has focused on selection of stimuli by spatial location. Visual attention is often compared to a spotlight (e.g. Posner, Snyder, & Davidson, 1980), which is moved across locations in the visual field. One common task used in studying spatial attention is the simple reaction time paradigm developed by Posner and colleagues, in which cues and targets appear to the left and right of fixation as shown in Fig. 6.1. The cue consists of a brightening of the box surrounding the target location and the target itself is a plus sign inside the box. The subject’s task is to respond as quickly as possible once the target appears. The cues precede the targets, and occur either at the same location as the target (a “valid” cue) or at the other location (an “invalid” cue). Subjects respond more quickly to validly than invalidly cued targets, and this difference has been interpreted as an attentional effect. Specifically, when a target is invalidly cued, attention must be disengaged from the location of the cue, moved to the location of the target, and re-engaged there before the subject can complete a response. In contrast, when the target is validly cued, attention is already engaged at the target’s location, and responses are therefore faster (see Posner & Cohen, 1984).

Fig. 6.1. Two sequences of stimulus displays from the simple reaction time paradigm used by Posner and colleagues to demonstrate spatially selective attention. Part A shows a validly cued trial, in which the cue (brightening of one box) occurs on the same side of space as the target (asterisk). Part B shows an invalidly cued trial, in which the cue and target occur in different spatial locations.
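The validity effect described above is simply a difference between two mean reaction times. The short Python sketch below computes it from a list of trials. The function name `validity_effect` and the reaction times are hypothetical, chosen only for illustration, and are not data from Posner's experiments.

```python
from statistics import mean

# Hypothetical trials: (cue type, reaction time in ms). Illustrative only.
trials = [
    ("valid", 250), ("valid", 260), ("valid", 255),
    ("invalid", 290), ("invalid", 300), ("invalid", 295),
]

def validity_effect(trials):
    """Mean RT on invalidly cued trials minus mean RT on validly cued trials.

    A positive value means validly cued targets were detected faster,
    the difference attributed to disengaging, moving and re-engaging
    attention on invalid trials.
    """
    valid = [rt for cue, rt in trials if cue == "valid"]
    invalid = [rt for cue, rt in trials if cue == "invalid"]
    return mean(invalid) - mean(valid)

print(validity_effect(trials))
```

For these invented values the validity effect is 40 ms; a value near zero would indicate that the cue had no spatially selective effect.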

The hypothesis that attention is shifted across a representation of locations in the visual field also finds striking support in an experiment by Shulman, Remington and McLean (1979). Their subjects received cues and targets at far peripheral locations, but a probe event could occur between the cue and the target at an intermediate peripheral location. When subjects were cued to a far peripheral location and a probe appeared at the intermediate location, processing of the probe was facilitated before facilitation at the cued far peripheral location reached its maximum. These results fit with the idea that if attention moves through space from point A to point B, it passes through the intermediate points.

Another representative result supporting spatially allocated visual attention was reported by Hoffman and Nelson (1981). Their subjects performed two tasks: a letter search task, in which they determined which of two target letters appeared in one of four spatial locations, and an orientation discrimination task, in which they determined the orientation of a small U-shaped figure. Hoffman and Nelson found that, when the letters were correctly identified, the orientation of the U-shaped figure was better discriminated when it was adjacent to the target letter than when it was not. These results support the hypothesis that attention is allocated to stimuli as a function of their location in visual space.

Evidence for Object-Based Attention

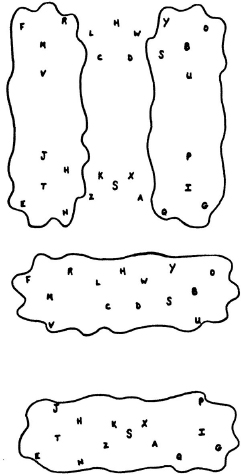

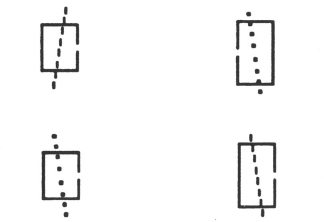

In addition to the many results supporting the view that attention is allocated to representations of spatial locations, there are other results suggesting that it is allocated to representations of objects, independent of their spatial location. Perhaps the clearest evidence for object-based attention comes from Duncan (1984). Duncan briefly presented subjects with superimposed boxes and lines, like the ones shown in Fig. 6.2. Each of the two objects could vary on two dimensions: the box could be either short or tall and have a gap on the left or right, and the line could be tilted clockwise or counterclockwise and be either dotted or dashed. The critical finding was that when the subjects were required to make two decisions about the stimuli, they were more accurate when both decisions were about the same object. For example, the subjects were more accurate at reporting the box’s size and side of gap than at, say, reporting the box’s height and the line’s texture. This finding fits with the notion that attention is allocated to objects per se: it would be more efficient to attend to a single object representation than either to attend to two object representations simultaneously or to attend to one representation and then the other.

Fig. 6.2. Examples of stimuli used by Duncan (1984) to demonstrate that attention is object-based.

To summarise the findings with normal subjects, there appears to be evidence of both location-based and object-based attention. However, the findings in support of one type of attention could also be explained by the alternative hypothesis. In general, being in the same location is highly correlated with being on the same object and vice versa. This raises the possibility that there is just one type of visual attention, either location-based or object-based. For example, in the type of display shown in Fig. 6.1, the cue and target might be seen as forming a single object (a box with a plus inside) when the cue occurs on the same side of space as the target, whereas a box on one side of space and a plus on the other would not be grouped together as a single object. The tendency of the visual system to group stimuli at adjacent spatial locations into objects (the Gestalt principle of grouping by proximity) could thus be used to explain the effects of spatial location on the allocation of attention in terms of object-based attention. Similarly, evidence for object-based attention could be interpreted in terms of spatial attention. For example, Duncan discusses the possibility that the objects in his experiment may have been perceived as being at different depths, so that shifting attention between objects may in fact have involved shifting attention to a different depth location. It is, of course, also possible that both types of attention exist. The current state of research with normal subjects does not allow us to decide among these alternatives. Let us now turn to the neuropsychological evidence on this issue.

Attention to Locations and Objects after Unilateral Parietal Damage

Unilateral posterior parietal damage often results in the neglect syndrome, in which patients may fail to attend to the contralesional side of space, particularly when ipsilesional stimuli are present. The neglect syndrome provides clear evidence for a location-based visual attention system, in that what does and does not get attention depends on location, specifically how far to the left or right a stimulus is. Patients with right parietal damage, for example, tend to neglect the left to a greater extent than the right, no matter where the boundaries of perceived objects lie. This fundamentally spatial limitation of attention cannot be explained in terms of object-based attentional processes.

It is, of course, possible that visual attention has both location-based components and object-based components. The following experiments test the possibility that there is an object-based component of attention contributing to neglect patients’ performance, as well as the more obvious location-based component.

Object-based Attention in Neglect

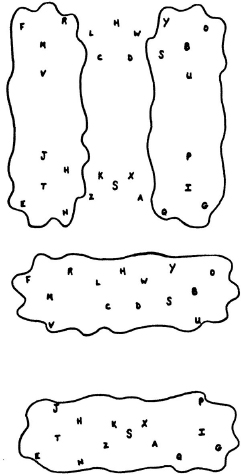

We set out to discover whether or not object representations play a role in the distribution of attention in neglect. Eight right parietal-damaged patients with left neglect were given a visual search task, in which they had to name all of the letters they could see in a scattered array. Figure 6.3 shows the two types of stimuli that were used. The patients were simply asked to read as many letters as they could see, and to tell us when they were finished. Note that the blob objects are completely irrelevant to the task. Of course, they are perceived by at least some levels of the visual system, and so the question is what, if anything, that does to the distribution of attention over the stimulus field.

Fig. 6.3. Examples of stimuli used to demonstrate an object-based component to visual attention in neglect patients.

If attention is object-based as well as location-based, then there will be a tendency for entire blobs to be either attended or unattended, in addition to the tendency for the right to be attended and the left to be unattended. This leads to different predictions for performance with the horizontal and vertical blobs. Each horizontal blob will be at least partially attended, because it extends into the right hemifield. On the hypothesis that attention is allocated to entire objects, even the left sides of the horizontal blobs will then receive some additional, object-based attention. The hypothesis of object-based attention therefore predicts that more attention will be allocated to the left when the blobs are horizontal and straddle the two sides of space than when they are vertical and each is contained within one side. Note that the letters and their locations are perfectly matched for all pairs of horizontal and vertical blobs, so any difference in performance cannot be due to differences in the location-based allocation of attention.

We examined two measures of the distribution of attention in the search task. The simplest is the number of letters correctly reported from the leftmost third of the displays. Consistent with the object-based hypothesis, all but one subject read more letters on the left when the blobs were horizontal and therefore straddled the left and right hemifields. One might wonder whether this measure reflects the effects of the blobs on the spatial distribution of attention over the display per se, or instead their effects on the search strategy that subjects adopted given a rightward (i.e. location-based) attentional bias that is not itself affected by the blobs. That is, the subjects might generally have started on the right side of both kinds of display and then allowed their search path to be guided by the boundaries of the blobs, which would lead them to read more letters on the left with the horizontal blobs than with the vertical blobs. Our second measure allows us to rule this possibility out: we examined how often the subjects started their searches on the left. Consistent with an effect of the blobs on the distribution of attention at the beginning of each visual search, all of the subjects started reading letters on the left more often with the horizontal blobs than with the vertical blobs. The results of this experiment suggest that objects do affect the distribution of attention in neglect, and imply that visual attention is object-based as well as location-based. These results do not tell us much about how the visual attention system identifies objects, beyond the low-level figure-ground segregation processes required to parse the displays shown in Fig. 6.3 into blob objects. Does knowledge also play a role in determining what the visual attention system takes to be an object?
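The two measures used in this experiment can be made concrete with a small scoring sketch. It assumes a hypothetical trial format (`SearchTrial`) that records the horizontal position of each correctly reported letter in the order it was named, and it assumes that a search “started on the left” when the first reported letter lay in the left half of the display. The chapter does not specify how the measures were scored, and the example trials are invented.

```python
from dataclasses import dataclass

@dataclass
class SearchTrial:
    """One hypothetical search trial.

    blobs: "horizontal" or "vertical" (the task-irrelevant background objects).
    reported_x: horizontal positions of correctly reported letters, in the
        order the patient named them (0 = left edge of the display).
    width: display width, in the same units as reported_x.
    """
    blobs: str
    reported_x: list[float]
    width: float

def left_third_count(trial: SearchTrial) -> int:
    """Measure 1: letters correctly reported from the leftmost third."""
    return sum(1 for x in trial.reported_x if x < trial.width / 3)

def started_on_left(trial: SearchTrial) -> bool:
    """Measure 2 (assumed criterion): first reported letter in the left half."""
    return bool(trial.reported_x) and trial.reported_x[0] < trial.width / 2

def summarise(trials: list[SearchTrial], blobs: str) -> tuple[int, float]:
    """Total left-third letters and proportion of left starts for one blob type."""
    subset = [t for t in trials if t.blobs == blobs]
    total_left = sum(left_third_count(t) for t in subset)
    prop_left_start = sum(started_on_left(t) for t in subset) / len(subset)
    return total_left, prop_left_start

# Invented trials on a 12-unit-wide display.
trials = [
    SearchTrial("horizontal", [2.0, 8.5, 5.0, 9.0], 12.0),
    SearchTrial("horizontal", [10.0, 7.0, 3.5], 12.0),
    SearchTrial("vertical", [11.0, 9.5, 7.0], 12.0),
    SearchTrial("vertical", [10.5, 6.5, 3.0], 12.0),
]
print(summarise(trials, "horizontal"), summarise(trials, "vertical"))
```

For these invented trials, the horizontal-blob displays yield two left-third letters and a left start on half of the trials, against one letter and no left starts for the vertical-blob displays. Keeping the two measures as separate functions mirrors the logic of the text: the second measure looks only at where each search began, so it cannot be inflated by a search path that later follows blob boundaries.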

What Does the Attention System Know about “Objects” in Neglect?

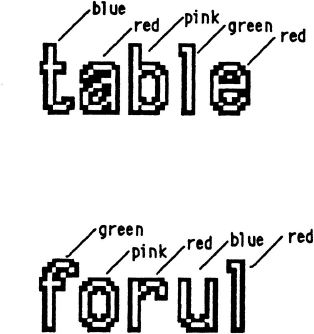

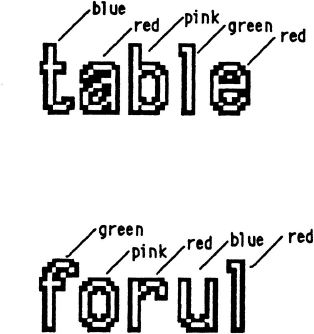

In order to find out whether the damaged attention system in neglect takes knowledge about objects into account when allocating attention, or merely takes objects to be the products of low-level grouping principles, we followed up on a phenomenon first observed by Sieroff, Pollatsek and Posner (1988; see also Brunn & Farah, 1991). They observed that neglect patients are less likely to neglect the left half of a letter string if the string makes a word than if it makes a nonword. For example, patients are less likely to omit or misread the “t” in “table” than in “tifcl”. Sieroff and Posner have assumed that the spatial distribution of attention is the same when reading words and nonwords, and that the difference in reading performance for words and nonwords is attributable to top-down support from word representations “filling in” the missing low-level information. However, there is another possible explanation for the superiority of word over nonword reading in neglect patients, in terms of object-based attentional processes. Just as a blob object straddling the two hemifields causes a reallocation of attention to the leftward extension of the blob, because attention is being allocated to whole blobs, so, perhaps, might a lexical object straddling the two hemifields cause a reallocation of attention to the leftward extension of the word. Of course, this would imply that the “objects” that attention selects can be defined by very abstract properties such as familiarity of pattern, as well as by low-level physical features.

In order to test this interpretation of Sieroff and co-workers’ observation, and thereby to determine whether familiarity can be a determinant of objecthood for the visual attention system, we devised two tasks. In the first, we showed word and nonword letter strings printed in different colours, as shown in Fig. 6.4, and asked patients both to read the letters and to name the colours; half read the letters first and half named the colours first. Colour naming measures how well patients perceive the actual stimulus, independent of any top-down support for the orthographic forms. If attention is reallocated to encompass entire objects, in this case lexical objects, then patients should name the colours on the left sides of words more accurately than those on the left sides of nonwords. In the second task, we used line bisection to assess the distribution of attention during word and nonword reading: patients had to mark the centre of a line presented underneath a letter string. If attention is reallocated to encompass the entire word, then line bisection should be more symmetrical with words than with nonwords.

Fig. 6.4. Examples of stimuli used by Brunn and Farah (1991) to demonstrate that the lexicality of letter strings affects the distribution of visual attention in neglect patients.
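The chapter does not say how line-bisection performance was scored. One common convention, assumed here, is to express the deviation of the patient’s mark from the true centre as a percentage of half the line length, with rightward errors positive. The function `bisection_error` and the example marks below are hypothetical.

```python
from statistics import mean

def bisection_error(mark_x: float, line_left: float, line_right: float) -> float:
    """Signed deviation of a bisection mark from the true centre.

    Returned as a percentage of half the line length; positive values
    are rightward errors, the direction expected with left neglect.
    """
    centre = (line_left + line_right) / 2
    half_length = (line_right - line_left) / 2
    return 100 * (mark_x - centre) / half_length

# Hypothetical marks (mm) on 200 mm lines printed under each string type.
word_marks = [108, 112, 110]
nonword_marks = [125, 130, 120]

word_error = mean(bisection_error(x, 0, 200) for x in word_marks)
nonword_error = mean(bisection_error(x, 0, 200) for x in nonword_marks)
print(word_error, nonword_error)
```

With these invented marks the mean rightward error is 10% for words and 25% for nonwords. Under this convention, the prediction in the text amounts to a smaller mean rightward error for lines under words than for lines under nonwords.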

In both tasks, we replicated the finding of Sieroff et al.: more letters were read from words than from nonwords. Was this despite identical distributions of attention in the two conditions, or was more attention allocated to the left sides of words than of nonwords? The answer comes from performance on the colour-naming and line-bisection measures. In both of these tasks, performance was significantly better with words than with nonwords. This implies that lexical “objects”, like the blob objects of the previous experiment, tend to draw attention to their entirety. As for what determines objecthood for the allocation of attention, these results suggest that knowledge does indeed play a role.

To summarise the conclusions that can be drawn from these studies of patients with neglect, it appears that attention is both location-based and object-based. Furthermore, the object representations to which attention can be allocated include such abstract objects as words.

Attention to Locations and Objects after Bilateral Parietal Damage

Patients with bilateral posterior parietal damage sometimes display a symptom known as “simultanagnosia”, or “dorsal simultanagnosia” (to distinguish this syndrome from a superficially similar but distinct syndrome that follows ventral visual system damage; see Farah, 1990). They may have full visual fields, but are nevertheless able to see only one object at a time. This is manifest in several ways: when shown a complex scene, they will report seeing only one object; when asked to count even a small number of items, they will lose track of each object as soon as they have counted it and therefore tend to count it again; if their attention is focused on one object, they will fail to see even so salient a stimulus as the examiner’s finger being thrust suddenly towards their face. Not surprisingly, given the typical lesions causing this syndrome, these patients have been described as having a kind of bilateral neglect (e.g. Bauer & Rubens, 1985).

Like neglect, dorsal simultanagnosia seems to involve both location- and object-based limitations on attention. Many authors report better performance with small, foveally located stimuli (e.g. Holmes, 1918; Tyler, 1968), demonstrating the role of spatial limitations on the attentional capacities of these patients. In addition, simultanagnosic patients tend to see one object at a time. Luria and colleagues provided some particularly clear demonstrations of the object-limited nature of the impairment. In one experiment (Luria, Pravdina-Vinarskaya, & Yarbuss, 1963), a patient was shown simple shapes and line drawings, such as a circle, cross, carrot or fork, in a tachistoscope. He could name the stimuli when they were presented one at a time, whether they were small (6–8°) or large (15–20°). However, when two were shown simultaneously, even at the smaller size, the patient could not name both. Note that the angle subtended by the pair of smaller stimuli would have been no larger than that subtended by a single large stimulus. This confirms that the limitation on the subject’s ability to attend to visual stimuli concerns the number of objects per se, rather than spatial extent.

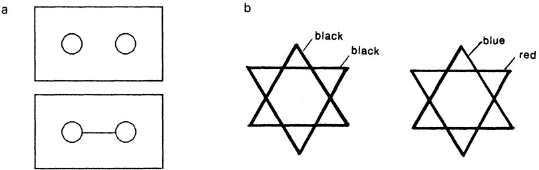

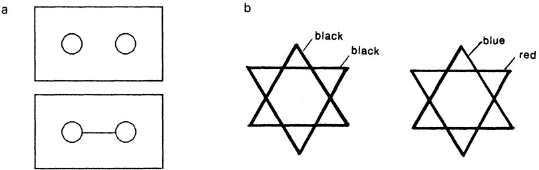

Given that the limitation of attention seems not to depend upon size per se, is it truly a function of the number of objects, or is it merely a limitation on some measure of complexity? The foregoing observations by Luria are consistent with both possibilities, as two shapes taken together are more complex than one. Luria provides some insightful demonstrations that answer this question as well. In a study with a different patient, Luria (1959) presented brief tachistoscopic displays of the kind shown in Fig. 6.5a. He found that both objects were more likely to be seen if he simply connected them with a line! Godwin-Austen (1965) and Humphreys and Riddoch (in press) have replicated this finding with similar patients. The connecting line adds contour and yet improves performance, which suggests that complexity, in the simple sense of amount of information or contour, is not a limiting factor. A connecting line also makes two shapes more likely to be grouped as a single object by Gestalt mechanisms, so we can infer that low-level geometric properties of a stimulus, such as continuity or connectivity, help define what the visual attention system takes to be an “object”. Luria also presented two versions of the Star of David to this patient (Fig. 6.5b). In one version, the two triangles of the star were the same colour and would thus group together as a single object; in the other, the triangles were different colours and were therefore more likely to be seen as two distinct but superimposed objects. Consistent with a limitation in the number of objects, rather than in spatial region or complexity, the patient reported seeing a star in the first kind of display and a single triangle in the second.

Fig. 6.5. Examples of stimuli used by Luria (1959) to demonstrate object-based attentional limitations in a dorsal simultanagnosic patient. The patient was more likely to see multiple shapes if they were connected by a line, as shown in (a). When the star shown in (b) was presented in a single colour, the patient saw a star, but when each component triangle was drawn in a different colour, he saw only a single triangle.
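Luria’s connecting-line result suggests that connectedness is one of the low-level properties that define an “object” for the attention system. As a toy illustration only, not a model proposed in this chapter or by Luria, the sketch below counts objects in a small character grid as groups of 4-adjacent cells that share the same symbol, with the symbol standing in for colour. The function name `count_objects` and the grids are hypothetical.

```python
from collections import deque

def count_objects(grid: list[str]) -> int:
    """Count groups of 4-adjacent cells that carry the same symbol.

    '.' is background; any other character is a filled cell, and the
    character itself stands in for the cell's colour.
    """
    rows, cols = len(grid), len(grid[0])
    seen = set()
    count = 0
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == "." or (r, c) in seen:
                continue
            count += 1  # an unvisited filled cell starts a new object
            symbol = grid[r][c]
            queue = deque([(r, c)])
            seen.add((r, c))
            while queue:  # breadth-first flood fill of this object
                y, x = queue.popleft()
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and grid[ny][nx] == symbol
                            and (ny, nx) not in seen):
                        seen.add((ny, nx))
                        queue.append((ny, nx))
    return count

# Two hypothetical ring shapes, first separate, then joined by a line.
separate = [
    "###.....###",
    "#.#.....#.#",
    "###.....###",
]
connected = [
    "###.....###",
    "#.#######.#",
    "###.....###",
]
print(count_objects(separate), count_objects(connected))
```

Under this toy definition, joining the two rings with a line turns two “objects” into one, so an attention system limited to a single object would then take in both shapes; likewise, adjacent cells drawn in different symbols (colours) are counted as separate objects. The sketch captures grouping by connectedness and shared colour only, and ignores the other Gestalt principles.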

The reading ability of patients with dorsal simultanagnosia is also informative about the nature of their attentional limitation. Recall that in neglect, words act more like objects than nonwords, from the point of view of attentional allocation. This is generally true in simultanagnosia as well. Many cases are reported to see a word at a time, regardless of its length or the size of the print.

Conclusions

Evidence from unilaterally and bilaterally parietal-damaged subjects converges with evidence from normal subjects to suggest that visual attention is allocated to representations of both locations and objects. Let us consider some of the implications of this for our understanding of visual attention and its physiological substrates.

Representation and Visual Attention

The hypotheses of location-based and object-based attention were originally put forth by cognitive psychologists and supported by evidence from normal subjects. However, like any single source of evidence, the research with normal subjects is open to alternative explanations. Neuropsychological evidence is therefore a valuable source of additional constraints on the nature of the representations used by attention. The fundamentally spatial (i.e. left vs right) nature of the attentional limitation of neglect patients provides strong evidence that visual attention acts on representations of spatial location. In addition, in experiments with neglect patients in which location was held constant, we found evidence for a distinct object-based component of attention as well. Perhaps the most dramatic evidence for object-based attention comes from the study of patients with bilateral parietal damage. The most salient limitation on what can and cannot be attended to in dorsal simultanagnosia is the limitation on the number of objects, almost entirely independent of the complexity or size of the objects.

In addition to complementing the evidence from normal subjects for object-based attention, the neuropsychological evidence also tells us something new about object-based attention, namely that knowledge-based factors such as familiarity and meaningfulness help to determine what the attention system takes to be an object. In contrast to objects such as boxes and lines, which can be individuated on the basis of relatively low-level visual properties such as continuity, words can be identified as objects, distinct from nonwords, only with the use of knowledge. The fact that neglect patients allocate attention differently to words and nonwords, and that dorsal simultanagnosics tend to see whole words and whole complex objects, suggests that the attention system has access to considerable object knowledge.

One possible confusion concerning the role of objects in the allocation of attention involves the frames of reference used in allocating attention to locations in space (see Chapter 9, this volume). In a previously reported study, we found that right parietal-damaged patients neglect the left side of space relative to their own bodies and relative to the fixed environment, but not relative to the intrinsic left/right of an object (Farah et al., 1990). That is, when they recline sideways, the allocation of their attention is determined by where their personal left is (up or down with respect to the fixed environment) as well as by where the left side of the environment is. In contrast, when neglect patients view an object with an intrinsic left and right (such as a telephone) turned sideways, the allocation of their attention is not altered. This is not inconsistent with the hypothesis that neglect patients allocate attention to objects. Rather, it implies that, when neglect patients allocate attention to locations, those locations are represented with respect to two different co-ordinate systems, viewer-centred and environment-centred, but not with respect to an object-centred co-ordinate system. In other words, attention is allocated to objects as well as to locations, but objects do not help to determine which locations the attention system takes to be “left” and “right”.

Visual Attention and the Two Cortical Visual Systems

An influential organising framework in visual neurophysiology has come to be known as the “two cortical visual systems” hypothesis (Ungerleider & Mishkin, 1982). According to this hypothesis, higher visual perception is carried out by two relatively distinct neural pathways with distinct functions. The dorsal visual system, going from occipital cortex through posterior parietal cortex, is concerned with representing the spatial characteristics of visual stimuli, primarily their location in space. Animals with lesions of the dorsal visual system are impaired on tasks involving location discrimination, but perform normally on tasks involving pattern discrimination. Single-cell recordings show that neurons in this region of the brain respond to stimuli depending primarily on their location, and not on their shape or colour. The ventral visual system, going from occipital cortex through inferotemporal cortex, is concerned with representing the properties of stimuli normally used for identification, such as shape and colour. Animals with lesions disrupting this pathway are impaired at tasks that involve shape and colour discrimination, but perform normally on most tasks involving spatial discriminations. Consistent with these findings, recordings from single neurons in inferotemporal cortex reveal little spatial selectivity for location or orientation, but high degrees of selectivity for shape and colour. Some neurons in this area respond selectively to shapes as complex as a hand or even a particular individual’s face.

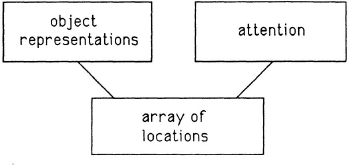

The findings reviewed earlier seem in conflict with the classical view of the two cortical visual systems, in that patients with bilateral damage to the dorsal system suffer a limitation on object-based attention as well as location-based attention. The results from dorsal simultanagnosics and neglect patients seem to imply that the dorsal visual system represents objects, even words. Actually, these results imply only that the dorsal visual system is part of an interactive network, other parts of which have object and word knowledge. An outline of one possible model that would account for these results was proposed by Farah (1990) and is shown in Fig. 6.6.

Fig. 6.6. A sketch of the possible relations between location-based representations of the visual world, visual attention, and object representations based on Farah (1990).

According to the ideas sketched out in Fig. 6.6, the effects of objects on the allocation of attention can be explained as a result of interactions between the object representations needed for object recognition and the array-format representation of the visual field that is operated on by attention. One of the basic problems in object recognition is to parse the array into regions that correspond to objects, which can then be recognised. This is a difficult problem because objects in the real world often overlap and occlude each other. One good source of help in segmenting the visual field into objects is our knowledge of what objects look like. Unfortunately, this puts us in a chicken-and-egg situation: the configuration of features in the array can be used to determine what objects are being seen, but we need to know what objects are being seen to figure out how to parse the features in order to feed them into the object recognition system. A good solution to this kind of problem is what McClelland and Rumelhart (1981) call an interactive activation system, in which the contents of the array put constraints on the set of candidate objects at the same time as the set of candidate objects puts constraints on how the contents of the array should be segmented. In terms of activation flow in Fig. 6.6, this means that the contents of the array activate certain object representations in the ventral visual system, and the activated object representations simultaneously activate the regions of the array that correspond to an object.

The interactive activation relation between the object representations and the array-format representation of the visual field implies that there are two sources of activation in the array, both of which will have the potential to engage the dorsal attention system: bottom-up activation from stimulus input, and top-down activation from object representations. Whereas stimulus input can activate any arbitrary configuration of locations, top-down activation from the object system will activate regions of the array that correspond to objects. Thus, the dorsal attention system will tend to be engaged by regions of the array that are occupied by objects, including objects defined by the kinds of knowledge stored in the ventral visual system. Whether or not this is the correct interpretation of the object-based attention effects in normal and brain-damaged subjects awaits further experimental tests. At present, it is useful merely as a demonstration that one need not abandon the basic assumptions of the two cortical visual systems hypothesis in order to account for object effects in the attentional limitations of parietal-damaged patients.
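The interactive activation idea sketched in Fig. 6.6 can be illustrated with a toy two-level loop in which location units drive object units and object units feed activation back to the locations they occupy. This is a minimal sketch under stated assumptions, not Farah’s (1990) model and not McClelland and Rumelhart’s (1981) equations: it has only excitatory connections and no decay, inhibition or activation bounds, and the function name `settle`, the feedback weight of 0.5 and the left-to-right gradient of bottom-up input (standing in for neglect) are all hypothetical. Locations are numbered from left (0) to right (3).

```python
def settle(inputs, objects, feedback=0.5, steps=50):
    """Iterate bottom-up and top-down activation between an array of
    locations and a set of object units.

    inputs: bottom-up (stimulus) activation per location, 0 = leftmost.
    objects: each object is a tuple of the location indices it occupies.
    Returns the activation of each location after the final step.
    """
    locations = list(inputs)
    for _ in range(steps):
        # Bottom-up: each object unit is driven by the mean activation
        # of the locations it occupies.
        obj_act = [sum(locations[i] for i in obj) / len(obj) for obj in objects]
        # Top-down: each location receives its stimulus input plus
        # feedback from every object unit that occupies it.
        locations = [
            inputs[i] + feedback * sum(a for obj, a in zip(objects, obj_act) if i in obj)
            for i in range(len(inputs))
        ]
    return locations

# Hypothetical rightward gradient of bottom-up input, as in left neglect.
inputs = [0.2, 0.4, 0.8, 1.0]
horizontal = settle(inputs, objects=[(0, 1, 2, 3)])  # one object straddling the midline
vertical = settle(inputs, objects=[(0, 1), (2, 3)])  # one object on each side
print(round(horizontal[0], 3), round(vertical[0], 3))
```

With a single object spanning all four locations, top-down feedback driven largely by the strongly activated right side raises the leftmost location to about 0.8; when the same input is divided between two objects, one per side, the leftmost location settles at about 0.5. This mirrors the horizontal versus vertical blob result in a qualitative way only. The feedback weight must stay below 1 for this unbounded toy loop to settle; with larger weights the activations grow without limit.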

Acknowledgements

Preparation of this chapter was supported by ONR grant NS0014-89-J3016, NIH career development award K04-NS01405 and NIMH training grant 1-T32-MH19102.

References

Bauer, R.M. & Rubens, A.B. (1985). Agnosia. In K.M. Heilman & E. Valenstein (Eds), Clinical neuropsychology. New York: Oxford University Press.

Brunn, J.L. & Farah, M.J. (1991). The relation between spatial attention and reading: Evidence from the neglect syndrome. Cognitive Neuropsychology, 8, 59–75.

Duncan, J. (1984). Selective attention and the organization of visual information. Journal of Experimental Psychology: General, 113, 501–517.

Farah, M.J. (1990). Visual agnosia: Disorders of object recognition and what they tell us about normal vision. Cambridge MA: MIT Press.

Farah, M.J., Brunn, J.L., Wong, A.B., Wallace, M., & Carpenter, P.A. (1990). Frames of reference for allocation of spatial attention: Evidence from the neglect syndrome. Neuropsychologia, 28, 335–347.

Godwin-Austen, R.B. (1965). A case of visual disorientation. Journal of Neurology, Neurosurgery and Psychiatry, 28, 453–458.

Hoffman, J.E. & Nelson, B. (1981). Spatial selectivity in visual search. Perception and Psychophysics, 30, 283–290.

Holmes, G. (1918). Disturbances of visual orientation. British Journal of Ophthalmology, 2, 449–468, 506–518.

Humphreys, G.W. & Riddoch, M.J. (in press). Interactions between object and space systems revealed through neuropsychology. In D. Meyer & S. Kornblum (Eds), Attention and Performance XIV. Cambridge, MA: MIT Press.

Luria, A.R. (1959). Disorders of “simultaneous perception” in a case of bilateral occipitoparietal brain injury. Brain, 83, 437–449.

Luria, A.R., Pravdina-Vinarskaya, E.N., & Yarbuss, A.L. (1963). Disorders of ocular movement in a case of simultanagnosia. Brain, 86, 219–228.

McClelland, J.L. & Rumelhart, D.E. (1981). An interactive activation model of context effects in letter perception: Part 1. An account of basic findings. Psychological Review, 88, 375–407.

Posner, M.I. & Cohen, Y. (1984). Components of visual orienting. In H. Bouma & D.G. Bouwhuis (Eds), Attention and performance X: Control of language processes, pp. 531–556. Hove: Lawrence Erlbaum Associates Ltd.

Posner, M.I., Snyder, C.R.R., & Davidson, B.J. (1980). Attention and the detection of signals. Journal of Experimental Psychology: General, 109, 160–174.

Shulman, G.L., Remington, R.W., & McLean, J.P. (1979). Moving attention through visual space. Journal of Experimental Psychology: Human Perception and Performance, 5, 522–526.

Sieroff, E., Pollatsek, A., & Posner, M.I. (1988). Recognition of visual letter strings following injury to the posterior visual spatial attention system. Cognitive Neuropsychology, 5, 427–449.

Tyler, H.R. (1968). Abnormalities of perception with defective eye movements (Balint’s syndrome). Cortex, 3, 154–171.

Ungerleider, L.G. & Mishkin, M. (1982). Two cortical visual systems. In D.J. Ingle, M.A. Goodale, & R.J.W. Mansfield (Eds), Analysis of visual behavior. Cambridge, MA: MIT Press.