What we are today comes from our thoughts of yesterday and our present thoughts build our life of tomorrow: our life is the creation of our mind.

The Dhammapada

Some years ago my wife was in a coma following an accident. I spent many hours in the hospital wondering whether ‘she’ was still inside, or whether this was just a body with no consciousness. Fortunately she woke up after two weeks, but what about those who cannot respond physically? For some, the body has become a collection of cells with no conscious experience – the vegetative state. But for others there is the terrifying scenario in which their consciousness is locked in a body that won’t respond. So is there any way to ask the brain whether it is conscious in these situations? Amazingly, there is and it involves playing tennis – or at least imagining playing tennis.

Conjure up the image of you playing tennis. Big forehands. Overhead smashes. Asking you to imagine playing tennis involves you making a conscious decision to participate; it isn’t some sort of automatic response to a physical stimulus. But once you are imagining the actions, an fMRI scan can pick up the neural activity that corresponds to the motor activity associated with the shots you picture yourself playing.

The discovery that we could use the fMRI to see patients making conscious decisions was made by British neuroscientist Adrian Owen and his team, when in early 2006 they were investigating a 23-year-old woman who had been diagnosed as being in a vegetative state. When the patient was instructed to imagine playing tennis, Owen saw, to his surprise, the region corresponding to the supplementary motor cortex light up inside the scanner. When she was asked to imagine taking a journey through her house, a different region called the parahippocampal gyrus, which is needed for spatial navigation, was activated. It was an extremely exciting breakthrough, not least because the doctors rediagnosed this 23-year-old as conscious but locked in.

Numerous other patients who had previously been regarded as in a vegetative state have now been rediagnosed as locked in. This makes it possible for doctors and, more importantly, family to talk to and question those who are conscious but locked into their bodies. A patient can indicate the answer ‘yes’ to a question by imagining playing tennis, creating activity that, as we’ve seen, can be picked up by a scanner. It doesn’t perhaps tell us what consciousness is, but the combination of tennis and fMRI has given us an extraordinary consciousometer.

The same tennis-playing protocol is being used to examine another case where our consciousness is removed: anaesthesia. I recently volunteered to be participant number 26 in a research project in Cambridge that would explore the moment when an anaesthetic knocks out consciousness – there have been some terrible examples of patients who can’t move their bodies but are completely conscious during an operation.

The experiment had me lying inside an fMRI scanner while plugged into a supply of propofol, the drug Michael Jackson had been taking when he died. After a few shots of the anaesthetic, I must admit I could understand why Jackson had become addicted to its extremely calming effect. But I was here to work. With each increased dose I was asked to imagine playing tennis. As the dose was increased, the research team could assess the critical moment when I lost consciousness and stopped playing imaginary tennis. The interesting thing for me was to find out afterwards just how much more propofol was needed to go from knocking my body out so that it was no longer moving to the moment my consciousness was turned off.

The ability to question the brain even when the rest of the body is unable to move or communicate has allowed researchers to gauge just how much anaesthetic you need to temporarily switch off someone’s consciousness so that you can operate on them.

This tennis-playing consciousometer leads us also to the close relationship between consciousness and free will: you must choose to imagine playing tennis. And yet recent experiments looking at the brain in action have fundamentally challenged how much free will we truly have.

I’m sure you thought you were asserting your free will when you decided to read this book. That it was a conscious choice to pick up the book today and turn to this page. But free will, it appears, may be just an illusion.

Much of the cutting-edge research into consciousness is going on right now. Unlike the discovery of the quark or the expanding universe, it is possible to witness much of this research as it is conducted, even to be part of the research. An experiment designed by British-born scientist John-Dylan Haynes is probably the most shocking in its implications for how much control I have over my life.

I hope these fMRI scanners are not bad for you because, in my quest to know how the brain creates my conscious experience, here I was: another city (Berlin this time) and another scanner. Lying inside, I was given a little hand-held console on which there were two buttons: one to be activated with my right hand, the other with my left. Haynes asked me to go into a Zen-like relaxed state, and whenever I felt the urge I could press either the right-hand or left-hand button.

During the experiment, I had to wear a pair of goggles containing a tiny screen on which a random stream of letters was projected. I was asked to record which letter was on the screen each time I made the decision to press the right or left button.

The fMRI scanner recorded my brain activity as I made my random conscious decisions. What Haynes discovered is that, by analysing my brain activity, he was able to predict which button I was going to press six seconds before I myself became consciously aware of which one I was going to choose. Six seconds is a very long time. My brain decides which button it is going to instruct my body to press, left or right. Then one elephant, two elephant, three elephant, four elephant, five elephant, six elephant – and only now does the decision get sent to my conscious brain, giving me the feeling that I am acting of my own free will.

Haynes can see which button I will choose because there is a region in the brain that lights up six seconds before I press, preparing the motor activity. A different region of the brain lights up according to whether the brain is readying the left finger to press the button or the right finger. Haynes is not able to predict with 100% certainty yet, but the predictions he is making are clearly more accurate than if you were simply guessing. And Haynes believes that with more refined imaging, it may be possible to get close to 100% accuracy.

It should be stressed that this is a very particular decision-making process. If, say, you are in an accident, your brain makes decisions in a split second, and your body reacts without the need for any conscious brain activity to take place. Many processes in the brain occur automatically and without involving our consciousness, preventing the brain being overloaded with routine tasks. But whether I choose to press the right or left button is not a matter of life or death. I am freely making a decision to press the left button.

In practice it takes the research group in Berlin several weeks to analyse the data from the fMRI, but as computer technology and imaging techniques advance, the potential is there for Haynes’s consciousness to know which button I’m going to press six seconds before my consciousness is aware of a decision I think I am making of my own free will.

Although the brain seems unconsciously to prepare the decision a long time in advance, it is still not clear where the final decision is being made. Maybe I can still override the decision my brain has prepared for me. If I don’t have ‘free will’, some have suggested that perhaps I at least have ‘free won’t’: that I can override the decision to press the left-hand button once it reaches my conscious brain. In this experiment, which button I press is immaterial, so there seems little reason not to go with the decision my unconscious brain has prepared for me.

The experiment seems to suggest that consciousness may be a very secondary function of the brain. We already know that so much of what our body decides to do is totally unconscious, but what we believe distinguishes us as humans is that our consciousness is an agent in the decisions we make. But what if it is only way down the chain of events that our consciousness kicks in and gets us involved? Is our consciousness of a decision just a chemical afterthought with no influence on what we do? What implications would this have legally and morally? ‘I’m sorry m’lud but it wasn’t me who shot the victim. My brain had already decided six seconds before that it was going to pull the trigger.’ Be warned: biological determinism is not a defence in law!

The button-pressing that Haynes got me to perform is, as I’ve said, of no consequence, and this may skew the outcome of the experiment. Perhaps if it was something I cared more about, I wouldn’t feel my subconscious had so much of a say. Haynes has repeated the experiment with a slightly more intellectually engaging activity. Participants are asked to decide whether to add or subtract numbers shown on a screen. Again, the decision to add or subtract is indicated by brain activity recorded four seconds before the conscious decision to act.

But what if we take the example proposed by the French philosopher Jean-Paul Sartre of a young man who has to choose between joining the resistance movement and looking after his mother? Perhaps in this case conscious deliberation will play a much greater role in the outcome and rescue free will from the fMRI scanner. Maybe the actions performed in Haynes’s experiments are too much like subconscious automatic responses. Certainly many of my acts appear in my consciousness only at a later point, because there is no need for my consciousness to know much about them. Free will is replaced by the liberty of indifference.

The preconscious brain activity may well be just a contributing factor to the decision that is made at the moment of conscious deliberation. It does not necessarily represent the sole cause but helps inform the decision: here’s an option you might like to consider.

If we have free will, one of the challenges is to understand where it comes from. I don’t think my smartphone has free will. It is just a set of algorithms that it can follow only in a deterministic manner. Just to check, I asked my chatbot app whether it thought it was acting freely, making choices. It replied rather cryptically:

‘I will choose a path that’s clear, I will choose free will.’

I know that this response is programmed into the app. It may be that the app has a random-number generator that ensures its responses are varied and unpredictable, giving it the appearance of free will: a virtual casino dice picking its response from a database of replies. But randomness is not freedom – those random numbers are being generated by a deterministic algorithm whose outputs are random only in appearance. As I discovered in my Third Edge, perhaps my smartphone will need to tap into ideas of quantum physics if it is to have any chance of asserting its free will.
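The point about algorithmic randomness can be made concrete with a minimal Python sketch. The list of replies and the `pick_replies` helper are hypothetical, invented purely for illustration; nothing here is taken from any real chatbot app. Two generators started from the same seed make exactly the same supposedly unpredictable choices.

```python
import random

# Hypothetical database of canned replies (invented for illustration).
REPLIES = [
    "I will choose free will.",
    "Who can say?",
    "Ask me again tomorrow.",
    "I only follow my program.",
]


def pick_replies(seed, count):
    """Return `count` replies chosen by a pseudo-random generator started from `seed`."""
    rng = random.Random(seed)  # the seed fixes the whole future sequence
    return [rng.choice(REPLIES) for _ in range(count)]


first_run = pick_replies(seed=2016, count=10)
second_run = pick_replies(seed=2016, count=10)

# Same seed, same algorithm: the virtual dice fall identically every time.
print(first_run == second_run)
```

The comparison prints `True`: the variety is real, but it is fixed in advance by the seed and the algorithm.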

A belief in free will is one of the things that I cling to because I believe it marks me out as different from an app on my phone, and I think this is why Haynes’s experiment left me with a deep sense of unease. Perhaps my mind, too, is just the expression of a sophisticated app at the mercy of the brain’s biological algorithm.

The mathematician Alan Turing was one of the first to question whether machines like my smartphone could ever think intelligently. He thought a good test of intelligence was to ask, if you were communicating with a person and a computer, whether you could distinguish which was the computer. It was this test, now known as the Turing test, that I was putting Cleverbot through at the beginning of this Edge. Since I can assess the intelligence of my fellow humans only by my interaction with them, if a computer can pass itself off as human, shouldn’t I call it intelligent?

But isn’t there a difference between a machine following instructions and my brain’s conscious involvement in an activity? If I type a sentence in English into my smartphone, the apps on board are fantastic at translating it into any language I choose. But no one thinks the smartphone understands what it’s doing. The difference perhaps can be illustrated by an interesting thought experiment called the Chinese Room, devised by philosopher John Searle of the University of California. It demonstrates that following instructions doesn’t prove that a machine has a mind of its own.

I don’t speak Mandarin, but imagine I was put in a room with an instruction manual that gave me an appropriate response to any string of Chinese characters posted into the room. I could have a very convincing discussion with a Mandarin speaker without ever understanding a word of my responses. In a similar fashion, my smartphone appears to speak many languages, but we wouldn’t say it understands what it is translating.
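As a rough illustration of the room, here is a minimal Python sketch in which the instruction manual is nothing more than a lookup table. The `RULE_BOOK` entries and the `room` function are hypothetical, and a real manual would need vastly more rules; the sketch only shows that producing a fitting reply requires no access to meaning.

```python
# Hypothetical instruction manual: incoming string -> prescribed reply.
RULE_BOOK = {
    "你好吗？": "我很好，谢谢。",
    "你会说中文吗？": "当然会。",
}

# Reply used when the manual has no matching entry.
FALLBACK = "请再说一遍。"


def room(message):
    """Look the message up and hand back the reply; no meaning is consulted anywhere."""
    return RULE_BOOK.get(message, FALLBACK)


print(room("你好吗？"))
```

The function matches shapes to shapes. Whether a big enough table of this kind would ever amount to understanding is exactly what the thought experiment asks.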

It’s a powerful challenge to anyone who thinks that just because a machine responds like a conscious entity, we should regard that as a sufficient measure of consciousness. Sure, it could be doing everything a conscious person does, but is it really conscious? Then again, what is my mind doing when I’m writing down words now? Aren’t I just following a set of instructions? Could there be a threshold beyond which we would have to accept that the computer understands Mandarin, and hence another at which the algorithm at work should be regarded as having consciousness? But before we can program a computer to be conscious, we need to understand what is so special about the algorithm at work in a human brain.

One of the best ways to tease out the correlation between consciousness and brain activity is to compare conscious brains with unconscious brains. Is there a noticeable difference in the brain’s activity? We don’t have to wait for a patient to be in a coma or under anaesthetic to make this comparison, because there is another situation in which every day, or, rather, every night, we lose our consciousness: sleep. And it is the science of sleep and what happens to the brain in these unconscious periods that perhaps gives the best insight into what it is the brain does to create our experience of consciousness.

So I went to the Centre for Sleep and Consciousness at the University of Wisconsin–Madison, where experiments conducted by neuroscientist Giulio Tononi and his team have revealed strikingly different brain behaviour during waking and dreamless sleeping.

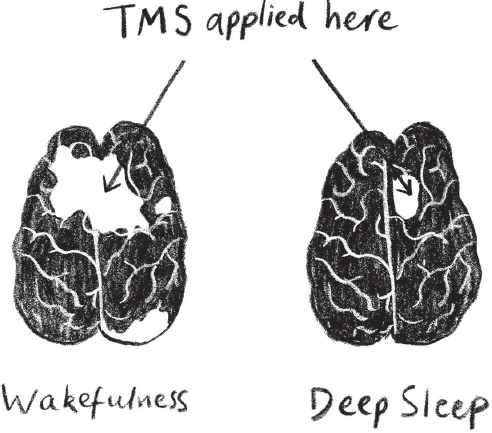

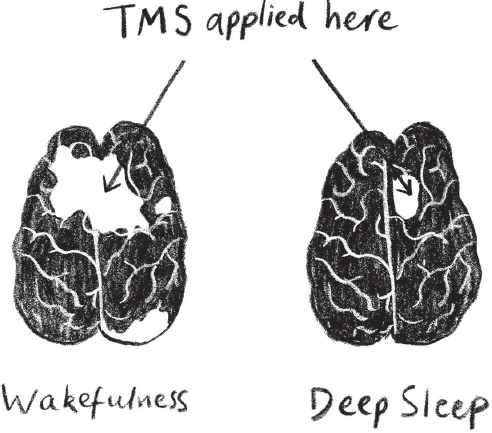

In the past it was impossible to ask the sleeping brain questions. But transcranial magnetic stimulation, or TMS, allows scientists to infiltrate the brain and artificially fire neurons. By applying a rapidly fluctuating magnetic field, the team can activate specific regions of my brain when I am awake and, more excitingly, when I am asleep. The question is whether this artificial stimulation of neurons causes the conscious brain to respond differently from the unconscious sleeping brain.

I was a bit nervous about the idea of someone zapping my brain. After all, it is my principal tool for creating my mathematics. Scramble that and I’m in trouble. But Tononi assured me it was quite safe. Using his own brain, one of his colleagues demonstrated how he could activate a region connected with the motor activity of the hand. It was quite amazing to see that zapping this region caused his finger to move. Each zap of TMS was like throwing a switch in the brain that made the finger move. Given that Tononi’s colleague seemed unaffected by the zapping, I agreed to take part in the experiment.

The first stage involved applying TMS to a small region of my brain when I was awake. Electrodes attached to my head recorded the effect via EEG. The results revealed that different areas far away from the stimulated site respond at different times in a complex pattern that feeds back to the original site of the stimulation. The brain, Tononi explains, is interacting as a complex integrated network. The neurons in the brain act like a series of interrelated logic gates. One neuron may fire if the majority of neurons connected to it are firing. Or it may fire if only one connected neuron is on. What I was seeing on the EEG output was the logical flow of activity caused by the firing of the initial neurons with TMS.
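The logic-gate picture can be sketched in a few lines of Python. The five-neuron wiring, the neuron names and the assignment of rules below are all invented for illustration and are not taken from Tononi's experiment. Each neuron fires either when any one of its inputs fired on the previous step or when a majority of them did.

```python
# Toy network: each neuron lists the neurons that feed into it.
# The wiring and the rules are assumptions made up for this sketch.
INPUTS = {
    "A": ["D"],            # A receives feedback from D
    "B": ["A"],
    "C": ["A"],
    "D": ["B", "C", "E"],
    "E": ["C"],
}
RULE = {"A": "any", "B": "any", "C": "any", "D": "majority", "E": "any"}


def step(state):
    """One synchronous update: every neuron reads the previous state of its inputs."""
    new_state = {}
    for neuron, sources in INPUTS.items():
        firing = sum(state[s] for s in sources)
        if RULE[neuron] == "any":
            new_state[neuron] = firing >= 1
        else:  # "majority": more than half of the inputs must be firing
            new_state[neuron] = firing * 2 > len(sources)
    return new_state


def run(zapped, steps):
    """Fire one neuron artificially and record which neurons are on at each step."""
    state = {n: (n == zapped) for n in INPUTS}
    history = [sorted(n for n, on in state.items() if on)]
    for _ in range(steps):
        state = step(state)
        history.append(sorted(n for n, on in state.items() if on))
    return history


history = run("A", 3)
for t, firing in enumerate(history):
    print(t, firing)
```

In this toy wiring the zap at A spreads to B and C, then to D and E, and returns to A on the third step, a small-scale echo of activity feeding back to the original site of stimulation.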

The next part of the experiment required me to fall asleep. Once I was in deep ‘stage 4’ sleep, they would apply TMS to my brain in exactly the same location, stimulating the same region. Essentially, the TMS fires the same neurons, turning them on like switches, and the question is: how has the structure of the network changed as the brain went from conscious to unconscious? Unfortunately, this part of the experiment proved too difficult for me. I am an incredibly light sleeper. Having a head covered in electrodes and knowing that someone was about to creep up and zap me once I’d dropped off aren’t very conducive to deep sleep. Despite depriving myself of coffee for the whole day, I failed to get past a light ‘stage 1’ fitful sleep.

Instead, I had to content myself with the data from a patient who had been able to fall asleep in such unrelaxing conditions. The results were striking. Electrical activity does not propagate through the brain, as it does when we’re conscious. It’s as if the network is down. The tide has come in, cutting off connections, and any activity is very localized. The exciting implication is that consciousness perhaps has to do with the complex integration in the brain, that it is a result of the interrelated logic gates that control when the firing of one set of neurons causes the firing of other neurons. In particular, the results suggest that consciousness is related to the way the network feeds information back and forth between the original neurons activated by the TMS and the rest of the brain.

Having failed to fall asleep in his lab, I was taken by Tononi to his office, where he promised that he would make up for my caffeine deprivation by crafting the perfect espresso from his prized Italian coffee machine. But he also had something he wanted to show me. As we sat down to the wonderful aroma of grinding coffee beans, he passed me a paper with a mathematical formula penned on it.

My interest was immediately piqued. Mathematical formulas are for me what Pavlov’s bell was for his dogs. Put a mathematical formula in front of me and I’m immediately hooked and want to decode its message. But I didn’t recognize it.

‘It’s my coefficient of consciousness.’

Consciousness in a mathematical formula … how could I resist?

Tononi explained that, as a result of his work on the sort of networked behaviour that corresponds to conscious brains, he has developed a new theory of networks that he believes are conscious. The theory is called integrated information theory, or IIT, and it includes a mathematical formula that measures the amount of integration and irreducibility present in a network, which he believes is key to creating a sense of self. Called Φ (that is, phi, the twenty-first letter of the Greek alphabet) it is a measure that can be applied to machines like my smartphone as much as it can the human brain, and it offers the prospect of a quantitative mathematical approach to what makes me ‘me’. According to Tononi, the larger the value of Φ, the more conscious the network. I guess it’s the mathematician in me that is so excited by the prospect of a mathematical equation explaining why and how ‘I’ am.

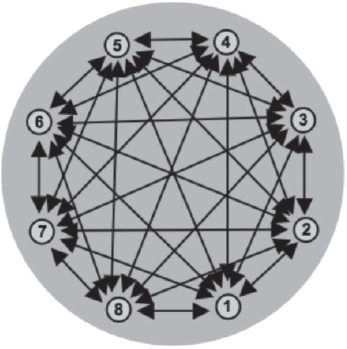

A conscious brain seems similar to a network that has a high degree of connectivity and feedback. If I fire neurons in one part of the network, the result is a cascade of cross-checking and information fed across the network. If the network consists of only isolated islands, then it appears to be unconscious. Tononi’s coefficient of consciousness is therefore a measure of how much the network is more than a sum of its parts.

But Tononi believes that it’s the nature of this connectivity of the whole that is important. It isn’t enough that there should be high connectivity across the network. If the neurons start firing in a synchronous way, this does not seem to yield a conscious experience. This is actually a property of the brain during deep sleep. At the other extreme, seizures that lead to a person losing consciousness are often associated with the highly synchronous firing of neurons across the brain. It therefore seems important that there is a large range of differentiated states. There shouldn’t be too many patterns or symmetry in the wiring, which may lead to an inability to differentiate different experiences. Connect a network up too much and the network will behave in the same way whatever you do to it.

What the coefficient tries to capture is one of the extraordinary traits of our conscious experience: the brain’s ability to integrate the vast range of inputs our body receives and synthesize them into a single experience. Consciousness can’t be broken apart into independent experiences felt separately. When I look at my red casino dice I don’t have separate experiences: one of a colourless cube and another experience which is just a patch of red formless colour. The coefficient also tries to quantify how much more information in a network is generated by the complete system than if it were cut into disconnected pieces, as happens to the brain during deep sleep.

Tononi and colleagues have run interesting computer simulations on ‘brains’ consisting of eight neurons connected in different ways to see which network has the greatest Φ. The results indicate that you want each neuron to have a connection pattern with the rest of the network that is different from that of the other neurons. But at the same time, you want as much information to be able to be shared across the network. So if you consider any division of the network into two separate halves, you want the two halves to be able to communicate with each other. It’s an interesting balancing act between overconnecting and cutting down differentiation across the different neurons versus creating difference at the expense of keeping everyone talking.
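The idea of checking every division of the network into two halves can be sketched as follows. This is emphatically not a computation of Φ: it is a crude stand-in that merely counts connections crossing each split, and the two eight-neuron wirings and the `weakest_split` helper are assumptions made up for the example.

```python
from itertools import combinations


def weakest_split(edges, nodes):
    """Smallest number of connections crossing any split of `nodes` into two equal halves."""
    nodes = list(nodes)
    half = len(nodes) // 2
    best = None
    for group in combinations(nodes, half):
        side = set(group)
        # A connection crosses the split when its two ends lie on different sides.
        crossing = sum(1 for a, b in edges if (a in side) != (b in side))
        if best is None or crossing < best:
            best = crossing
    return best


NODES = range(8)

# Two isolated islands of four neurons, fully wired inside each island.
islands = list(combinations(range(4), 2)) + list(combinations(range(4, 8), 2))

# A ring in which each neuron is connected to its two neighbours.
ring = [(i, (i + 1) % 8) for i in range(8)]

print(weakest_split(islands, NODES))
print(weakest_split(ring, NODES))
```

The islands network can be cut in half without severing a single connection (the result is 0), whereas every equal split of the ring cuts at least two. A genuine Φ calculation compares the information generated by the whole with that generated by the parts, which is a far more demanding task than counting wires.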

But the nature of the connectivity is also important. Tononi has created two networks that are functionally equivalent and have the same outputs, but in one case the Φ count is high, because the network feeds back and forth, while in the other network the Φ count is low because the network is set up so that it only feeds information forwards (the neurons can’t feed back). Tononi believes this is an example of a ‘zombie network’: a network that outputs the same information as the first network but has no sense of self. Just by looking at the output, you can’t differentiate between the two networks. But the way the zombie network generates that output has a completely different quality that is measured by Φ. According to Tononi’s Φ, the zombie network has no internal world.
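The structural difference between the two kinds of network, loops versus no loops, is easy to test for, as this minimal Python sketch suggests. It only inspects the wiring for feedback and does not calculate Φ. The two example networks and the `has_feedback` function are hypothetical, and unlike Tononi's pair they are not claimed to be functionally equivalent.

```python
def has_feedback(wiring):
    """Return True if the directed network contains at least one loop."""
    UNSEEN, ACTIVE, DONE = 0, 1, 2
    status = {node: UNSEEN for node in wiring}

    def visit(node):
        status[node] = ACTIVE  # node is on the path currently being explored
        for target in wiring[node]:
            if status[target] == ACTIVE:
                return True  # we have come back to a node on the current path
            if status[target] == UNSEEN and visit(target):
                return True
        status[node] = DONE
        return False

    return any(status[node] == UNSEEN and visit(node) for node in wiring)


# Information flows strictly from "in" to "out".
feed_forward = {"in": ["mid1", "mid2"], "mid1": ["out"], "mid2": ["out"], "out": []}

# Same wiring plus one connection feeding the output back to the input.
recurrent = {"in": ["mid1", "mid2"], "mid1": ["out"], "mid2": ["out"], "out": ["in"]}

print(has_feedback(feed_forward))
print(has_feedback(recurrent))
```

The first call reports no loop and the second finds one. Looking only at outputs would not reveal this difference; it shows up only when the internal wiring is examined.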

It’s encouraging to know that the brain’s thalamocortical system, which is known to play an important part in generating consciousness, has a structure similar to networks with a high Φ. Contrast this with the network of neurons in the cerebellum, which doesn’t create consciousness. Called the little brain, it is located at the back of the skull and controls things like balance and our fine motor control. It accounts for 80% of the neurons in the brain, and yet, if it is removed, although our movement is severely impaired, we do not have an altered sense of consciousness.

In 2014, a 24-year-old woman in China was admitted to hospital with dizziness and nausea. When her brain was scanned to try to identify the cause, it was found that she had been born without a cerebellum. Although it had taken her some time to learn to walk as a child, and she was never able to run or jump, none of the medical staff who encountered her doubted that she was fully conscious. This was not a zombie, just a physically unstable human being.

If you examine the neural network at the heart of the cerebellum, you find patches that tend to be activated independently of one another with little interaction, just like the brain at sleep. The low Φ of the cerebellum’s network fits in with the idea that it does not create conscious experience.

Figure caption: A network with a high Φ and perhaps a higher level of consciousness.

Figure caption: Although highly connected, the symmetry of this network causes little differentiation to occur across the network, resulting in the whole not contributing to the creation of new information not already inherent in the parts. This leads to a low Φ and hence a lower consciousness.

That the connectivity of the brain could be the key to consciousness has led to the idea that my ‘connectome’ is part of the secret of what makes me ‘me’. The connectome is a comprehensive map of the neural connections in the brain. Just as the human genome project gave us unprecedented information about the workings of the body, mapping the human connectome may provide similar insights into the workings of the brain. Combine the wiring with the rules for how this network operates and we may have the ingredients for creating consciousness in a network.

The connectome of the human brain is a distant goal, but already we have a complete picture of the wiring of the neurons inside C. elegans, a one-millimetre-long brainless worm with a noted fondness for compost heaps. Its nervous system contains exactly 302 neurons, ideally suited for us to map out a complete neuron-to-neuron wiring. Despite this map, we are still a long way from relating these connections to the behaviour of C. elegans.

Given that Tononi’s Φ is a measure of how a network is connected, it could help us understand if my smartphone, the Internet, or even a city can achieve consciousness. Perhaps the Internet or a computer, once it hits a certain threshold at some point in the future, might recognize itself when it looks in the mirror. Consciousness could correspond to a phase change in this coefficient, just as water changes state when its temperature passes the threshold for boiling or freezing.

It is interesting that the introduction of an anaesthetic into the body doesn’t gradually turn down consciousness but at some point seems to suddenly switch it off. If you’ve ever had an operation and the anaesthetist asks you to count up to 20, you’ll know (or perhaps won’t know) that there is a point at which you suddenly drop out. The change seems to be very non-linear, like a phase transition.

If consciousness is about the connectivity of the network, what other networks might already be conscious? The total number of transistors that are connected via the Internet is of the order of 10^18, while the brain has about 10^11 neurons. The difference lies in the way they are connected. A neuron is typically linked to tens of thousands of other neurons, which allows for a high degree of information integration. In contrast, transistors inside a computer are not highly connected. On Tononi’s measure it is unlikely that the Internet is conscious … yet.

If I take a picture with the digital camera in my smartphone, the memory in my phone can store an extraordinary range of different images. A million pixels, for example, will give me 2^1,000,000 different possible black-and-white images. It will record detail that I am completely unaware of when I look through the viewfinder. But that’s the point: my conscious experience could not deal with that huge input, and instead the raw sensory data is integrated to glean the significant information from the scene and limit its content.
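To get a feel for the size of that number, here is a small Python calculation. It assumes one bit per pixel, so that each of the million pixels is simply black or white and the number of possible images is 2 multiplied by itself a million times. Rather than write the number out, the sketch counts how many decimal digits it has.

```python
import math

PIXELS = 1_000_000  # assumption: one bit per pixel, each pixel black or white

# The number of possible images is 2**PIXELS.
# Its number of decimal digits is floor(PIXELS * log10(2)) + 1.
digits = math.floor(PIXELS * math.log10(2)) + 1

print(digits)  # 301030
```

Written out in full, the count of possible images would run to just over three hundred thousand digits.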

At present, computers are very bad at matching the power of human vision. They are unable to tell the story inside a picture. The trouble is that a computer tends to read a picture pixel by pixel and finds it hard to integrate the information. Humans, however, are excellent at taking this huge amount of visual input and creating a story from the data. It is this ability of the human mind to integrate and pick out what is significant that is at the heart of Tononi’s measure of consciousness Φ.

Of course, there is still no explanation of how or why a high Φ should produce the conscious experience I have. We can certainly correlate it with consciousness, which is very suggestive – witness the experiments on sleeping patients whose networks have a decreased Φ. So too are considerations of different bits of the brain which have distinctive wirings. But I still can’t say why this produces consciousness, or whether a computer wired in this way would truly experience consciousness.

There are reasons why having a high Φ might have significant evolutionary advantages. It seems to allow planning for the future. By taking data from different sensors, the highly integrated nature of a network with high Φ can make recommendations for future action. This ability to project oneself into the future, to perform mental time travel, seems to be one of the key evolutionary advantages of consciousness. A network with a high Φ appears to be able to demonstrate such behaviour. But why couldn’t it do all this while unconscious?

Christof Koch, he of the Jennifer Aniston neuron, is a big fan of Φ as a measure of consciousness, so I was keen to push him on whether he thought it was robust enough to provide an answer to the ‘hard problem’, as philosopher David Chalmers dubbed the challenge of getting inside another person’s head.

Since I first met Koch at the top of Mount Baldy outside Caltech, he had taken up the directorship of an extraordinary venture called the Allen Institute for Brain Science, funded by a single individual, Paul G. Allen, co-founder of Microsoft, to the tune of 500 million US dollars. Allen’s motivation? He simply wants to understand how the brain works. It’s all open science, it’s not for profit, and they release all the data free. As Koch says: ‘It’s a pretty cool model.’

Since it was going to be tricky to make another trip to California to quiz Koch on his views on Φ and Tononi’s integrated information theory, I decided the next best thing was to access Koch’s consciousness via a Skype call. Koch was keen, though, to check which side of the philosophical divide I was on:

‘Sure, we can chat about consciousness and the extent to which it is unanswerable. But I would hope that you’re not going to turn away young people who are contemplating a career in neuroscience by buying into the philosopher’s conceit of the “Hard problem” (hard with a capital H). Philosophers such as Dave Chalmers deal in belief systems and personal opinions, not in natural laws and facts. While they ask interesting questions and pose challenging dilemmas, they have an unimpressive historical record of prognostication.’

Koch was keen to remind me of the philosopher Comte and his prediction that we would never know what was at the heart of a star. It is a story that I have kept in mind ever since embarking on this journey into the unknown. Koch added another important voice to the debate, that of his collaborator Francis Crick, who wrote in 1996: ‘It is very rash to say that things are beyond the scope of science.’

Koch was in excited mood when we finally connected our consciousnesses via Skype – actually, I’ve never seen him anything but excited about life at the cutting edge of one of today’s greatest scientific challenges. Two days earlier, the Allen Institute had just released data that tried to classify the different cell types that you find in the brain.

‘One of the unknowns is how many different sorts of brain cells are there. We’ve known for two hundred years that the body is made up of cells, but there are heart cells and skin cells and brain cells. But then we realized that there aren’t just one or two types of brain cell. There may well be a thousand. Or of the order of several thousand. In the cortex proper, we just don’t know.’

Koch’s latest research has produced a database detailing the range of different cortical neurons to be found in a mouse brain. ‘It’s really cool stuff. But this isn’t just a pretty picture in a publication as it usually is in science. It’s all the raw data and all the data is downloadable.’ Ramón y Cajal updated to the twenty-first century.

It’s this kind of detailed scientific analysis of how the brain functions that Koch believes is how we chip away at the challenge of understanding how the brain produces conscious experience.

Given that Koch is keen to get young scientists on board the consciousness bandwagon, I wondered what had inspired him to embark on a research project when there was no wagon. At the time, many regarded setting off in search of consciousness as akin to heading into the Sahara desert in search of water.

Part of the motivation was provided by people who said it was a no-go area. ‘One reason was to show them all they were wrong. I like to provoke.’ Koch is also someone who likes taking risks. It’s what drives him to hang off the side of a mountain, climbing unaided to the top. But there was a strand to his choice of problem that was a little more unexpected. It turned out that Koch’s religious upbringing was partly responsible for his desire to understand consciousness.

‘Ultimately I think I wanted to justify to myself my continued belief in God. I wanted to show to my own satisfaction that to explain consciousness we need something more. We need something like my idea of a God. It turned out to be different. It provoked a great internal storm within me when I realized there wasn’t really any need for a soul. There wasn’t any real need for it to generate consciousness. It could all be done by a mechanism as long as we had something special like Tononi’s integrated information theory, or something like that.’

As a way to reaffirm his belief in something transcendental, Koch admits it failed.

‘Over the last ten years I lost my faith; I always tried to integrate it but couldn’t. I still very much have a sentiment that I do live in a mysterious universe. It’s conducive to life. It’s conducive to consciousness. It all seems very wonderful, and literally every day I wake up with this feeling that life is mysterious and a miracle. I still haven’t lost that feeling.’

But Koch believes we are getting closer to demystifying consciousness, and he believes it is this mathematical coefficient Φ and Tononi’s IIT that hold the key.

‘I’m a big fan of this integrated information theory which states that, in principle, given the wiring diagram of a person or of C. elegans or a computer, I will be able to say whether it feels like something to be that system. Ultimately that is what consciousness is, it’s experience. And I’ll also be able to tell what that system is currently experiencing. I have this mechanism, this brain, this computer currently in this one state. These neurons are off; these neurons are on; these transistors are switching; these are not. The theory says that at least in principle I can predict the experience of that system, not by looking at the input–output but by actually looking inside the system, looking at the transition probability matrix and its states.’
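To make the phrase ‘transition probability matrix’ a little more concrete, here is a minimal sketch in Python. It assumes a toy network of three on/off nodes whose update rules (an OR, an AND and an XOR) are invented purely for illustration; they are not taken from Koch or Tononi, and the code does not attempt to compute Φ. It only tabulates, for each of the eight possible states, which state the mechanism moves to next, which is the kind of internal description Koch says the theory starts from.

```python
from itertools import product


def step(state):
    """Hypothetical update rule for a three-node binary network (A, B, C).

    Illustrative assumption only: A becomes B OR C, B becomes A AND C,
    C becomes A XOR B.
    """
    a, b, c = state
    return (b | c, a & c, a ^ b)


def transition_matrix(update, n_nodes):
    """Build a deterministic state-by-state transition probability matrix.

    Row i, column j holds the probability of moving from state i to state j.
    Because the toy rule is deterministic, each row contains a single 1.0.
    """
    states = list(product((0, 1), repeat=n_nodes))
    index = {s: i for i, s in enumerate(states)}
    tpm = [[0.0] * len(states) for _ in states]
    for s in states:
        tpm[index[s]][index[update(s)]] = 1.0
    return states, tpm


states, tpm = transition_matrix(step, 3)

# Print each current state alongside the state it transitions to.
for s, row in zip(states, tpm):
    print(s, "->", states[row.index(1.0)])
```

The point of the sketch is that the matrix describes what happens inside the system, state by state, rather than only what goes in and what comes out.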

Koch has a tough battle on his hands convincing others. There is a hardcore group of philosophers and thinkers who don’t believe that science can ever answer this problem. They are members of a school of thought that goes by the name of mysterianism, which asserts that there are mysteries that will always be beyond the limits of the human mind. Top of the mysterians’ hit list is the hard problem of consciousness.

The name of the movement – it originated from a rock band called Question Mark and the Mysterians – began as a term of abuse concocted by philosopher Owen Flanagan, who thought the standpoint of these philosophers was extremely defeatist. As Flanagan wrote: ‘The new mysterianism is a postmodern position designed to drive a railroad spike through the heart of scientism.’ Koch has often found himself debating with mysterian philosophers who think him misguided in his pursuit of an answer. So I wondered what he says to those who question whether you can ever know that a system is really experiencing something?

‘If you pursue that to its end you just end up with solipsism.’

You can hear the exasperation in Koch’s voice at this philosophical position, which asserts that knowledge of anything beyond our own mind is never certain. It takes us back to Descartes’ statement that the only thing we can be sure of is our own consciousness.

‘Yes … solipsism is logically consistent, but then it’s extremely improbable that everyone’s brain is like mine, except only I have consciousness and you are all pretending. Yes, you can believe that, but it’s not very likely.’

Did Koch think that those who insist on the unanswerability of the hard problem must therefore question whether anything is knowable? If you are going to be so extreme, are you inevitably drawn to a sceptic’s view of the world?

‘Exactly, and I don’t find that very interesting. You can be an extreme sceptic, but it doesn’t lend itself to anything easy. Any theory like IIT is an identity relationship ultimately. The claim of this particular theory and theories like it is that experience is identical to states in this very high-dimensional qualia space spanned essentially by the number of different states that your system can take on. It amounts to a configuration, to a constellation, to a polytope in this high-dimensional space. That is what experience is. That’s what it feels like to be you and it’s something that I can predict in principle … it’s an empirical project because I can say: “Well, Marcus, now you are seeing red or right now you are having the experience of smelling a rose.” I can do fMRI and I can see that your rose neurons are activated, that your colour area is activated. So in principle this is an entirely empirical project.’

Koch believes that this is as good as it’s going to get if you want to explain consciousness. It fits very well with what it would mean to be engaged in a science of consciousness. Wouldn’t it be good enough to identify a configuration in the brain, for example, that always correlates with your acknowledging an experience of feeling a sensation of pain or red or happiness? This is something that I could test with experiments. And if every time I get a correspondence, can’t I say that I know what the experience of red is, and that I can therefore extend the science to say that a computer will also have experiences of red?

Hardcore mysterians will contend that you haven’t explained how such a network gives rise to this sensation of feeling something. But aren’t we in danger of demanding too much, to the extent that we fall into solipsism and down the slippery sceptic slope to arguing that you can’t really say anything about the universe? We understand that the sensation of heat is just the movement of atoms. No one is saying you haven’t really explained why that gives rise to heat. We have identified what heat is. I think Koch would say the same of consciousness.

Theoretically the idea is very attractive, but I wondered whether Koch thought we could ever get to the point at which we can use an fMRI scanner or something more sophisticated to know what someone is really experiencing. Or is it just too complex?

‘That’s a pragmatic problem. That’s the same problem that thermodynamics faced in the nineteenth century. You couldn’t compute those things. But at least for a simple thing like a critter of ten neurons, the expressions are perfectly well defined and there is a unique answer. The maximum number of neurons one could navigate is a big practical problem. Will we ever overcome it? I don’t know, because the problem scales exponentially with the number of neurons, which is really bad. But that’s a different problem.’
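A rough sense of why exponential scaling is ‘really bad’ can be had from a few lines of Python. This is only a back-of-the-envelope sketch under stated assumptions: each neuron is treated as a simple on/off unit, and the number of ways of cutting the network into two parts is used as a crude stand-in for the work involved in comparing a whole with its parts. The function names are hypothetical, and the figure of 302 neurons for C. elegans is the commonly quoted count, not something Koch gives here.

```python
def state_count(n_neurons):
    """Number of on/off configurations of n binary neurons."""
    return 2 ** n_neurons


def tpm_entries(n_neurons):
    """Entries in a state-by-state transition matrix (states x states)."""
    return state_count(n_neurons) ** 2


def bipartition_count(n_neurons):
    """Ways to split n neurons into two non-empty, unordered groups."""
    return 2 ** (n_neurons - 1) - 1


# 302 is the commonly quoted neuron count for C. elegans (an assumption here).
for n in (3, 10, 20, 302):
    print(
        f"{n:>3} neurons: "
        f"{state_count(n):.3e} states, "
        f"{tpm_entries(n):.3e} matrix entries, "
        f"{bipartition_count(n):.3e} two-way cuts"
    )
```

For Koch’s critter of ten neurons the numbers are entirely manageable: 1,024 states and 511 two-way cuts. Each additional neuron doubles the number of states, which is why the same calculation for even a tiny worm is far beyond a direct enumeration of this kind.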

The ultimate test of our understanding of what makes me me is to see whether I can build an artificial brain that has consciousness. What is the prospect of an app on my phone that would truly have consciousness? Humans are quite good at making conscious things already. My son is an example of how we can combine various cellular structures, an egg and some sperm, and together they have all the kit and instructions for growing a new conscious organism. At least I’m assuming that my son is conscious – on some mornings he appears to be at the zombie end of the spectrum.

There are some amazingly ambitious projects afoot which aim to produce an analysis of the wiring of a human brain such that it can be simulated on a computer. The Human Brain Project, the brainchild of Henry Markram, aims within ten years to produce a working model of the human brain that can be uploaded onto a supercomputer. ‘It’s going to be the Higgs boson of the brain, a Noah’s archive of the mind,’ declared Markram. He was rewarded with a billion-euro grant from the European Commission to realize his vision. But when this is done, would these simulations be conscious? To my surprise, Koch didn’t think so.

‘Let’s suppose that Henry Markram’s brain project pans out and we have this perfect digital simulation of the human brain. It will also speak, of course, because it is a perfect simulation of the human brain, so it includes a simulation of the Broca area responsible for language. The computer will not exist as a conscious entity. There may be a minimal amount of Φ at the level of a couple of individual transistors, but it will never feel like anything to be the brain.’

The point is that Tononi’s Φ gets at the nature of the cause–effect power of the internal mechanism, not at the input–output behaviour. The typical connectivity inside the computer has one transistor on the CPU talking to at most four other transistors. This gives rise to a very low Φ, and in Koch’s belief a low level of consciousness. The interesting thing is that because it is a perfect simulation, it is going to object vociferously that it is conscious, that it has an internal world. But in Koch’s view it would just be all talk.

‘The reason is essentially similar to the fact that if you get a computer to simulate a black hole, the simulated mass will not affect the real mass near the computer. Space will never be distorted around the computer simulation. In the same way, you can simulate consciousness on a computer, but the simulated entity will not experience anything. It’s the difference between simulating and emulating. Consciousness arises from the cause–effect repertoire of the mechanism. It’s something physical.’

Consciousness depends on how the network is put together. A similar issue is at work with the zombie network that Tononi constructed with ten neurons. The amazing thing is that the principle that Tononi used to turn a high-Φ network with ten neurons into a zero-Φ network or zombie with exactly the same input–output repertoire can be applied to the network of the human brain. Both would have the same input–output behaviour, and yet, because of the difference in the internal state transitions, one has a high Φ, the other zero Φ. But how can we ever know whether this would mean that one is conscious and the other a zombie?

What bugs me is that this seems to highlight the near unanswerability of the question of consciousness. Φ is certainly a great measure of the difference between a simulation of the brain and the actual nature of the brain’s architecture. It defines the difference between a zombie network and the human mind. But how can we really know that the simulation or zombie isn’t conscious? It is still there shouting its head off: ‘For crying out loud, how many times do I need to tell you? I am conscious in here.’ But Φ says it’s just pretending. I tell the zombie that Φ says it doesn’t have an internal world. But just as my conscious brain would, the zombie insists: ‘Yes I do.’ And isn’t this the heart of the problem? I have only input–output to give me any idea of the system articulating these feelings. I can certainly define Φ as consciousness, but isn’t this finally the one thing that by its nature is beyond the ability of science to investigate empirically?

One of the other reasons Koch likes Φ as a measure of consciousness is that it plays into his panpsychic belief that we aren’t the only ones with consciousness. If consciousness is about integration of information across a network, then that applies from things as small as an amoeba to the consciousness of the whole universe.

‘Ultimately it says that anything that has cause–effect power on itself will have some level of consciousness. Consciousness is ultimately how much difference you make to yourself, the cause–effect power you have on yourself. So even if you take a minimal system like a cell – a single cell is already incredibly complex – it has some cause–effect power on itself. It will feel like something to be that cell. It will have a small but non-zero Φ. It may be vanishingly small, but it will have some level of consciousness. Of course, that’s a very ancient intuition.’

When I tell people I am investigating what we cannot know, a typical response is: will you be tackling life after death? This is intimately related to the question of consciousness. Does our Φ live on? There is no good evidence that anything of our consciousness survives after death. But is there any way we can know? Since we are finding it difficult to access each other’s consciousnesses while we are alive, to investigate consciousness after someone’s death appears an impossible challenge. It seems extremely unlikely, given how much correlation there is between brain activity and consciousness, that anything can survive beyond death. If there could be some communication, it might give us some hope. That is, after all, how we are able to investigate the consciousnesses we believe exist in the people around us.

Koch agreed with me that it is extremely unlikely that anything of our Φ survives after death. Koch had in fact spent some time debating the issue with an unlikely collaborator.

‘I spent a week a couple of years ago in India talking with the Dalai Lama. It was very intense. Four hours in the morning, four hours in the afternoon, talking about science. Two whole days were dedicated to consciousness. Buddhists have been exploring their own consciousness from the inside using meditative techniques for 2000 years. In contrast, we do it using fMRI and electrodes and psychophysics from the outside, but basically our views tend to agree. He was very sympathetic to many of the scientific ideas and we agreed on many things. For example, he has no trouble with the idea of evolution.’

But there must have been points of difference?

‘The one thing where we fundamentally disagreed was this idea of reincarnation. I don’t see how it would work. You have to have a mechanism that carries my consciousness or my memories over into the next life. Unless we find something in quantum physics, and I don’t know enough about it to know, I don’t see any evidence for it.’

As Koch points out, if you are going to attempt to answer this question as a scientist, you will need some explanation of how consciousness survives the death of your body. There have been some interesting proposals. For example, if consciousness corresponds ultimately to patterns of information in the brain – perhaps something close to what Tononi is advocating – then some argue that information can theoretically always be reconstructed. The black hole information paradox relates to the question of whether information is lost when things disappear into a black hole. But provided you avoid the black hole crematorium, the combination of quantum determinism and reversibility means no information is ever lost.

The religious physicist John Polkinghorne offers this as a story for the possibility of life after death: ‘Though this pattern is dissolved at death, it seems perfectly rational to believe that it will be remembered by God and reconstituted in a divine act of resurrection.’ Of course, you may not have to resort to God to realize this striking idea. Some people are already trying to ensure their brain information is stored somewhere before their hardware packs up. The idea of downloading my consciousness onto my smartphone so that ‘it’ becomes ‘me’ may not be so far from Polkinghorne’s proposal. It’s just Apple playing the role of God. Rapture for nerds, as Koch calls it.

When faced with a question that we cannot answer, we have to make a choice. Perhaps the intellectually correct response is to remain agnostic. After all, that is the meaning of having identified an unanswerable question. On the other hand, a belief in an answer one way or the other will have an impact on how we lead our lives. Imagine if you regarded yourself as unique and everyone else as zombies – it would have a big effect on your interaction with the world. Or, conversely, if there is a moment when you think a machine is conscious, then pressing Shut Down on your computer will become loaded with moral issues.

Some posit that to crack the problem of consciousness we will have to admit to our description of the universe a new basic ingredient that gives rise to consciousness, an ingredient that – like time, space or gravity – can’t be reduced to anything else. All we can do is explore the relationship between this new ingredient and the other ingredients we have to date. To me this seems a cop-out. Consciousness does emerge from a developing brain when some critical threshold is hit – like the moment when water begins to bubble and boil and turn into a gas. There are many examples in nature of these critical tipping points where a phase transition happens. The question is whether the phase transition creates something that can’t be explained other than by creating a new fundamental entity. It’s not without precedent, of course. Electromagnetic waves are created as a consequence of accelerating charged particles. That is not an emergent phenomenon, but a new fundamental ingredient of the natural world.

Or perhaps consciousness is associated with another state of matter, like solid or liquid. This state of matter already has a name proposed for it: perceptronium, which is defined as the most general substance that feels subjectively self-aware. This change of state would be consistent with consciousness as an emergent phenomenon. Or could there be a field of consciousness that gets activated by critical states of matter, in the same way that the Higgs field gives mass to matter? It sounds like a crazy idea, but perhaps, in order to get to grips with such a slippery concept, we need crazy ideas.

Some argue that nothing physical is ever going to answer the problem of what it feels like to be me, that the very existence of consciousness implies something that transcends our physical realm. But if mind can move matter, then surely there must be a physics which connects these two realms and allows science back into the game.

Perhaps the question of consciousness is not a question of science at all, but one of language. This is the stance of a school of philosophy going back to Wittgenstein. In his Philosophical Investigations, Wittgenstein explores the problem of a private language. The process of learning the meaning of the word ‘table’ involves people pointing at a table and saying ‘here is a table’. If I point at something which doesn’t correspond to what society means by ‘table’, then I get corrected. Wittgenstein challenges whether the same principle can apply to naming ‘pain’. Pain does not involve an object that is external to us, or that we can point at and call pain. We may be able to find an output on an fMRI scanner that always correlates with my feeling of pain. But when we point at the screen and say that is pain, is that what we really mean by ‘pain’?

Wittgenstein believed that the problem of exploring the private worlds of our qualia and feelings was one of language. How can we share the meaning of ‘here is a pain’? It is impossible. ‘I have a pain in my tooth’ seems to have the same quality as ‘I have a table’, and it tempts us into assuming both say the same kind of thing. You assume that there is something called ‘my pain’ in the same sense that there is ‘a table’. It seems to be saying something, but Wittgenstein believed that it isn’t really saying anything at all: ‘The confusions which occupy us arise when language is like an engine idling, not when it is doing work.’

For example, if you have a feeling and declare ‘I think this is pain’, I have no way of correcting you. I have no criterion against which to determine whether what you are feeling is what I mean by ‘pain’. Wittgenstein explores whether it is possible to show you pain by pricking your finger and declaring ‘that is pain’. If you now reply, for example, ‘Oh, I know what “pain” means; what I don’t know is whether this, that I have now, is pain’, Wittgenstein replies that ‘we should merely shake our heads and be forced to regard (your) words as a queer reaction which we have no idea what to do with’.

We can get at the problem of identifying what is going on inside our heads by imagining that we each have a box with something inside. We all name the thing inside ‘a beetle’, but we aren’t allowed to look in anyone else’s box, only our own. So it is quite possible that everyone has something different in the box, or that the thing inside is constantly changing, or even that there is nothing in the box. And yet we all call it ‘a beetle’. But for those with nothing in the box, the word ‘beetle’ is a name for nothing. It is not a name at all. Does this word therefore have any meaning? Are our brains like the box and consciousness the beetle? Does an fMRI scanner allow us finally to look inside someone else’s box and see if your beetle is the same as mine? Does the machine rescue consciousness from Wittgenstein’s language games?

Wittgenstein explores how a sentence, or question, fools us into thinking it means something because it takes exactly the form of a real sentence, but when you examine it carefully you find that it doesn’t actually refer to anything. This, many philosophers believe, is at the heart of the problem of consciousness. It is not a question of science, but will vanish as a challenge once we recognize that it is simply a confusion of language.

Daniel Dennett is one of those philosophers who follow in the tradition of Wittgenstein. He believes that in years to come we will recognize that there is little point in having this long debate about the idea of consciousness beyond the physical things that seem necessary for us to have consciousness. So, for example, if we do encounter a machine or organism that functions exactly as we would expect a conscious being to, we should simply define this thing as conscious. The question of whether it is a zombie or not, whether it is actually feeling anything, should just be ignored. If there is no way to distinguish the unconscious zombie from the conscious human, what point is there in having a word to describe this difference? Only when you can know there is a difference is there any point in having a word to describe it.

Dennett cites vitalism to support his stance. Vitalism suffered such a fate: we’ve given up on the idea that there is some extra special ingredient, an élan vital, that breathes life into this collection of cells. If you show me a collection of cells that have the ability to replicate themselves, that miaows and purrs like a cat, and then declare that it isn’t actually alive, it would be very difficult to convince me. Some believe that arguments over consciousness will go the same way as those over vitalism. Vitalism was an attempt to explain how a physical system could do the things associated with a living organism: reproduce, self-organize, adapt. Once those mechanisms were explained, we had solved the problem of life and no élan vital was needed. But in the case of consciousness, some believe that the question of how physical stuff produces a subjective experience cannot by its very subjective nature be answered by mechanisms in the same way.

With the advent of extraordinary new equipment and techniques, our internal world has become less opaque. Mental activities are private, but physical interactions with the world are public. To what extent can I gain access to the private domain by analysing the public? We can probably get to the stage that I can know you are thinking about Jennifer Aniston rather than Pythagoras’ theorem just by looking at the neurons that are firing.

But to get a sense of what it feels like to be you – is that possible just by understanding the firing of neurons? Will I ever be able to distinguish the conscious from the zombie? My gut has as many as 100 million neurons, 0.1% of the neurons in my brain, yet it isn’t conscious. Or is it? How could I know if it actually had a distinct consciousness from mine? This is the heart of the problem with the question of consciousness. My stomach might start communicating with me, but how can I ever know if it experiences red or falls in love like I do? I might scan it, probe it with electrodes, discover that the wiring and firing are a match for a cat brain that has as many neurons, but is that as far as we can go?

The question of distinguishing the zombies from the conscious could remain one of the unanswerable questions of science. The Turing test that I put my smartphone through at the beginning of our journey into the mind hints at the challenge ahead. Was it the zombie chatbot who wanted to become a poet, or did it want to become rich? Is a chatbot clever enough to make a joke about Descartes’ ‘I think, therefore I am’? Will it eventually start dating? Who is the zombie and who is conscious?

As we finished our Skype chat, Koch admitted to me that there was no guarantee we’ll ever know.

‘There is no law in the universe which says we have the cognitive power to explain everything. If we were dogs – I mean, I love my dog – my dog is fully conscious, but my dog doesn’t understand taxes, it doesn’t understand special relativity, or even the simplest differential equation. Even most people don’t understand differential equations. But for the same reasons, I really dislike it when people say you will never know this. You can’t say that. Yes, there’s no guarantee. But it’s a really defeatist attitude, right? I mean, what sort of research programme is it, Marcus, where you throw up your hands and say forget about it, I can’t understand it ever, it’s hopeless? That’s defeatism.’

With that clarion call not to give up trying to answer unanswerable questions ringing in my ears, I quit our Skype call. Koch’s face vanished from my screen, leaving me with a slightly uneasy feeling. Was I sure that it had been Koch at the other end of the line? Or had he devised some algorithmically generated avatar to deal with the onslaught of enquiries he gets about whether we can ever solve the hard problem of consciousness?