A key aspect of any solution that spans the on-premises infrastructure of an organization and the cloud concerns the way in which the elements that comprise the solution connect and communicate. A typical distributed application contains many parts running in a variety of locations, which must be able to interact in a safe and reliable manner. Although the individual components of a distributed solution typically run in a controlled environment, carefully managed and protected by the organizations responsible for hosting them, the network that joins these elements together commonly utilizes infrastructure, such as the Internet, that is outside of these organizations' realms of responsibility.

Consequently the network is the weak link in many hybrid systems; performance is variable, connectivity between components is not guaranteed, and all communications must be carefully protected. Any distributed solution must be able to handle intermittent and unreliable communications while ensuring that all transmissions are subject to an appropriate level of security.

The Windows Azure™ technology platform provides technologies that address these concerns and help you to build reliable and safe solutions. This appendix describes these technologies.

Jana says:

Making the most appropriate choice of how components communicate with each other is crucial, and can have a significant bearing on the entire system's design.

Use Cases and Challenges

In a hybrid cloud-based solution, the various applications and services will be running on-premises or in the cloud and interacting across a network. Communicating across the on-premises/cloud divide typically involves implementing one or more of the following generic use cases. Each of these use cases has its own series of challenges that you need to consider.

Accessing On-Premises Resources From Outside the Organization

Description: Resources located on-premises are required by components running elsewhere, either in the cloud or at partner organizations.

The primary challenge associated with this use case concerns finding and connecting to the resources that the applications and services running outside of the organization utilize. When running on-premises, the code for these items frequently has direct and controlled access to these resources by virtue of running in the same network segment. However, when this same code runs in the cloud it is operating in a different network space, and must be able to connect back to the on-premises servers in a safe and secure manner to read or modify the on-premises resources.

Accessing On-Premises Services From Outside the Organization

Description: Services running on-premises are accessed by applications running elsewhere, either in the cloud or at partner organizations.

In a typical service-based architecture running over the Internet, applications running on-premises within an organization access services through a public-facing network. The environment hosting the service makes access available through one or more well-defined ports and by using common protocols; in the case of most web-based services this will be port 80 over HTTP, or port 443 over HTTPS. If the service is hosted behind a firewall, you must open the appropriate port(s) to allow inbound requests. When your application running on-premises connects to the service it makes an outbound call through your organization's firewall. The local port for the outbound call is assigned by the operating system when the TCP connection is opened (it will typically be a high-numbered ephemeral port not currently in use), and any responses from the service return over the same connection to that port. The important point is that to send requests from your application to the service, you do not have to open any additional inbound ports in your organization's firewall.
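The following Python sketch illustrates the point about outbound calls. It is only an illustration under stated assumptions: a loopback echo service stands in for a remote web service, and the function names (`run_echo_service`, `call_service`, `demo`) are hypothetical. The client never binds or opens a port itself; the operating system picks the local port, and the reply comes back on the same connection.

```python
import socket
import threading


def run_echo_service(server_sock):
    """Accept one inbound connection and send back a reply on the same connection."""
    conn, _client_addr = server_sock.accept()
    with conn:
        data = conn.recv(1024)
        conn.sendall(b"reply:" + data)


def call_service(host, port, payload):
    """Make an outbound call; return the reply and the local port the OS assigned."""
    with socket.create_connection((host, port), timeout=5) as client:
        # The client did not choose this port; the TCP stack assigned it.
        local_port = client.getsockname()[1]
        client.sendall(payload)
        reply = client.recv(1024)
    return reply, local_port


def demo():
    """Run a stand-in service on the loopback interface and call it once."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free service port
    server.listen(1)
    service_port = server.getsockname()[1]
    worker = threading.Thread(target=run_echo_service, args=(server,))
    worker.start()
    try:
        reply, local_port = call_service("127.0.0.1", service_port, b"ping")
    finally:
        worker.join(timeout=5)
        server.close()
    return reply, local_port, service_port
```

Only the service side listens on a known port. This is why a firewall that permits outbound connections needs no extra inbound rule for the response.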

When you run a service on-premises, you are effectively reversing the communication requirements; applications running in the cloud and partner organizations need to make an inbound call through your organization's firewall and, possibly, one or more Network Address Translation (NAT) routers to connect to your services. Remember that the purpose of this firewall is to help guard against unrestrained and potentially damaging access to the assets stored on-premises from an attacker located in the outside world. Therefore, for security reasons, most organizations implement a policy that restricts inbound traffic to their on-premises business servers, blocking access to your services. Even if you are allowed to open up various ports, you are then faced with the task of filtering the traffic to detect and deny access to malicious requests.

The vital question for this use case, therefore, is: how do you enable access to services running on-premises without compromising the security of your organization?

Poe says:

Opening ports in your corporate firewall without due consideration of the implications can render your systems liable to attack. Many hackers run automated port-scanning software to search for opportunities such as this. They then probe any services listening on open ports to determine whether they exhibit any common vulnerabilities that can be exploited to break into your corporate systems.

Implementing a Reliable Communications Channel across Boundaries

Description: Distributed components require a reliable communications mechanism that is resilient to network failure and enables the components to be responsive even if the network is slow.

When you depend on a public network such as the Internet for your communications, you are completely dependent on the various network technologies managed by third party operators to transmit your data. Utilizing reliable messaging to connect the elements of your system in this environment requires that you understand not only the logical messaging semantics of your application, but also how you can meet the physical networking and security challenges that these semantics imply.

A reliable communications channel does not lose messages, although it may choose to discard information in a controlled manner in well-defined circumstances. Addressing this challenge requires you to consider the following issues:

- How is the communications channel established? Which component opens the channel: the sender, the receiver, or some third party?

- How are messages protected? Is any additional security infrastructure required to encrypt messages and secure the communications channel?

- Do the sender and receiver have a functional dependency on each other and the messages that they send?

- Do the sender and receiver need to operate synchronously? If not, then how does the sender know that a message has been received?

- Is the communications channel duplex or simplex? If it is simplex, how can the receiver transmit a reply to the sender?

- Do messages have a lifetime? If a message has not been received within a specific period should it be discarded? In this situation, should the sender be informed that the message has been discarded?

- Does a message have a specific single recipient, or can the same message be broadcast to multiple receivers?

- Is the order of messages important? Should they be received in exactly the same order in which they were sent? Is it possible to prioritize urgent messages within the communications channel?

- Is there a dependency between related messages? If one message is received but a dependent message is not, what happens?
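Two of the questions above, message lifetime and notification of discarded messages, can be made concrete with a small in-memory sketch. It makes no claim about how any real messaging service behaves; the `ExpiringChannel` class, its parameters, and the injectable `clock` are all hypothetical and exist only to show the idea of discarding information "in a controlled manner in well-defined circumstances."

```python
import collections
import time


class ExpiringChannel:
    """In-memory FIFO channel that discards messages older than their lifetime."""

    def __init__(self, clock=time.monotonic, on_discard=None):
        self._clock = clock            # injectable so the behavior can be tested
        self._on_discard = on_discard  # optional callback that informs the sender
        self._messages = collections.deque()

    def send(self, body, time_to_live):
        """Queue a message that is valid for time_to_live seconds."""
        expires_at = self._clock() + time_to_live
        self._messages.append((expires_at, body))

    def receive(self):
        """Return the oldest unexpired message body, or None if none remains."""
        while self._messages:
            expires_at, body = self._messages.popleft()
            if self._clock() < expires_at:
                return body
            # The message outlived its lifetime: discard it and report the fact.
            if self._on_discard is not None:
                self._on_discard(body)
        return None
```

For example, if a message sent with a 5-second lifetime is still queued after 10 seconds, `receive` skips it, passes it to `on_discard`, and returns the next message that is still valid. Messages are otherwise delivered in the order in which they were sent.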

Cross-Cutting Concerns

In conjunction with the functional aspects of connecting components to services and data, you also need to consider the common non-functional challenges that any communications mechanism must address.

Security

The first and most important of these challenges is security. You should treat the network as a hostile environment and be suspicious of all incoming traffic. In particular, you must ensure that the communications channel used for connecting to a service is well protected. Requests may arrive from services and organizations running in a different security domain from your organization. You should be prepared to authenticate all incoming requests, and authorize them according to your organization's data access policy to guard your organization's resources from unauthorized access.

You must also take steps to protect all outgoing traffic, as the data that you are transmitting will be vulnerable as soon as it leaves the environs of your organization.

The questions that you must consider when implementing a safe communications channel include:

- How do you establish a communications channel that traverses the corporate firewall securely?

- How do you authenticate and authorize a sender to enable it to transmit messages over a communications channel? How do you authenticate and authorize a receiver?

- How do you prevent an unauthorized receiver from intercepting a message intended for another recipient?

- How do you protect the contents of a message to prevent an unauthorized receiver from examining or manipulating sensitive data?

- How do you protect the sender or receiver from attack across the network?

Poe says:

Robust security is a vital element of any application that is accessible across a network. If security is compromised, the results can be very costly and users will lose faith in your system.

Responsiveness

A well-designed solution ensures that the system remains responsive, even while messages are flowing across a slow, error-prone network between distant components. Senders and receivers will probably be running on different computers, hosted in different datacenters (either in the cloud, on-premises, or within a third-party partner organization), and located in different parts of the world. You must answer the following questions:

- How do you ensure that a sender and receiver can communicate reliably without blocking each other?

- How does the communications channel manage a sudden influx of messages?

- Is a sender dependent on a specific receiver, and vice versa?

Interoperability

Hybrid applications combine components built using different technologies. Ideally, the communications channel that you implement should be independent of these technologies. Following this strategy not only reduces dependencies on the way in which existing elements of your solution are implemented, but also helps to ensure that your system is more easily extensible in the future.

Maintaining messaging interoperability inevitably involves adopting a standards-based approach, utilizing commonly accepted networking protocols such as TCP and HTTP, and message formats such as XML and SOAP. A common strategy to address this issue is to select a communications mechanism that layers neatly on top of a standard protocol, and then implement the appropriate libraries to format messages in a manner that components built using different technologies can easily parse and process.

Windows Azure Technologies for Implementing Cross-Boundary Communication

If you are building solutions based on direct access to resources located on-premises, you can use Windows Azure Connect to establish a safe, virtual network connection to your on-premises servers. Your code can utilize this connection to read and write the resources to which it has been granted access.

If you are following a service-oriented architecture (SOA) approach, you can build services to implement more functionally focused access to resources; you send messages to these services that access the resources in a controlled manner on your behalf. Communication with services in this architecture frequently falls into one of two distinct styles:

- Remote procedure call (RPC) style communications.

In this style, the message receiver is typically a service that exposes a set of operations a sender can invoke. These operations can take parameters and may return results, and in some simple cases can be thought of as an extension to a local method call except that the method is now running remotely. The underlying communications mechanism is typically hidden by a proxy object in the sender application; this proxy takes care of connecting to the receiver, transmitting the message, waiting for the response, and then returning this response to the sender. Web services typically follow this pattern.

This style of messaging lends itself most naturally to synchronous communications, which may impact responsiveness on the part of the sender; it has to wait while the message is received, processed, and a response sent back. Variations on this style support asynchronous messaging where the sender provides a callback that handles the response from the receiver, and one-way messaging where no response is expected.

You can build components that provide RPC-style messaging by implementing them as Windows Communication Foundation services. If the services must run on-premises, you can provide safe access to them using the Windows Azure Service Bus Relay mechanism described later in this appendix.

If these services must run in the cloud you can host them as Windows Azure roles. This scenario is described in detail in the patterns & practices guide "Developing Applications for the Cloud (2nd Edition)" on MSDN.

Markus says:

Older applications and frameworks also support the notion of remote objects. In this style of distributed communications, a service host application exposes collections of objects rather than operations. A client application can create and use remote objects using the same semantics as local objects. Although this mechanism is very appealing from an object-oriented standpoint, it has the drawback of being potentially very inefficient in terms of network use (client applications send lots of small, chatty network requests), and performance can suffer as a result. This style of communications is not considered any further in this guide.

- Message-oriented communications.

In this style, the message receiver simply expects to receive a packaged message rather than a request to perform an operation. The message provides the context and the data, and the receiver parses the message to determine how to process it and what tasks to perform.

This style of messaging is typically based on queues, where the sender creates a message and posts it to the queue, and the receiver retrieves messages from the queue. It's also a naturally asynchronous method of communications because the queue acts as a repository for the messages, which the receiver can select and process in its own time. If the sender expects a response, it can provide some form of correlation identifier for the original message and then wait for a message with this same identifier to appear on the same or another queue, as agreed with the receiver.

Windows Azure provides an implementation of reliable message queuing through Service Bus queues. These are covered later in this appendix.
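The correlation identifier technique described for message-oriented communications can be sketched with Python's standard `queue` module. This is a minimal in-process illustration, not the Service Bus queues API; the `receiver` function, the `Sender` class, and the message dictionary layout are assumptions made for the example.

```python
import queue
import threading
import uuid


def receiver(request_queue, response_queue):
    """Process packaged messages in its own time until a None sentinel arrives."""
    while True:
        message = request_queue.get()
        if message is None:
            break
        # Copy the correlation identifier so the sender can match the reply.
        response_queue.put({
            "correlation_id": message["correlation_id"],
            "body": message["body"].upper(),
        })


class Sender:
    """Posts messages and later matches responses by correlation identifier."""

    def __init__(self, request_queue, response_queue):
        self._requests = request_queue
        self._responses = response_queue
        self._unclaimed = {}  # responses that arrived before they were asked for

    def post(self, body):
        """Post a message without waiting; return its correlation identifier."""
        correlation_id = str(uuid.uuid4())
        self._requests.put({"correlation_id": correlation_id, "body": body})
        return correlation_id

    def wait_for(self, correlation_id, timeout=5.0):
        """Block until the matching response arrives (raises queue.Empty on timeout)."""
        while correlation_id not in self._unclaimed:
            response = self._responses.get(timeout=timeout)
            self._unclaimed[response["correlation_id"]] = response["body"]
        return self._unclaimed.pop(correlation_id)
```

Because `post` returns immediately, the sender can continue working and collect responses later, in any order. The queues decouple the sender from the receiver in time.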

The following sections provide more details on Windows Azure Connect, Windows Azure Service Bus Relay, and Service Bus queues; and describe when you should consider using each of them.

Accessing On-Premises Resources from Outside the Organization Using Windows Azure Connect

Windows Azure Connect enables you to integrate your Windows Azure roles with your on-premises servers by establishing a virtual network connection between the two environments. It implements a network-level connection based on standard IP protocols between your applications and services running in the cloud and your resources located on-premises, and vice versa.

Guidelines for Using Windows Azure Connect

Using Windows Azure Connect provides many benefits over common alternative approaches:

- Setup is straightforward and does not require any changes to your on-premises network. For example, your IT staff do not have to configure VPN devices or perform any complex network or DNS configuration, nor are they required to modify the firewall configuration of any of your on-premises servers or change any router settings.

Bharath says:

If you are exposing resources from a VM role, you may need to configure the firewall of the virtual machine hosted in the VM role. For example, file sharing may be blocked by Windows Firewall.

- You can selectively relocate applications and services to the cloud while protecting your existing investment in on-premises infrastructure.

- You can retain your key data services and resources on-premises if you do not wish to migrate them to the cloud, or if you are required to retain these items on-premises for legal or compliance reasons.

Windows Azure Connect is suitable for the following scenarios:

- An application or service running in the cloud requires access to network resources located on on-premises servers.

Using Windows Azure Connect, code hosted in the cloud can access on-premises network resources using the same procedures and methods as code executing on-premises. For example, a cloud-based service can use familiar syntax to connect to a file share or a device such as a printer located on-premises. The application can implement impersonation using the credentials of an account with permission to access the on-premises resource. With this technique, the same security semantics available between on-premises applications and resources apply, enabling you to protect resources using access control lists (ACLs).

- An application running in the cloud requires access to data sources managed by on-premises servers.

Windows Azure Connect enables a cloud-based service to establish a network connection to data sources running on-premises that support IP connectivity. Examples include SQL Server, a DCOM component, or a Microsoft Message Queuing (MSMQ) queue. Services in the cloud specify the connection details in exactly the same way as if they were running on-premises. For instance, you can connect to SQL Server by using SQL Server authentication, specifying the name and password of an appropriate SQL Server account (SQL Server must be configured to enable SQL Server or mixed authentication for this technique to work).

This approach is especially suitable for third-party packaged applications that you wish to move to the cloud. The vendors of these applications do not typically supply the source code, so you cannot modify the way in which they work.

- An application running in the cloud needs to execute in the same Windows security domain as code running on-premises.

Windows Azure Connect enables you to join the roles running in the cloud to your organization's Windows Active Directory® domain. In this way you can configure corporate authentication and resource access to and from cloud-based virtual machines. This feature enables you to take advantage of integrated security when connecting to data sources hosted by your on-premises servers. You can either configure the role to run using a domain account, or run the role using a local account and configure the IIS application pool used to run the web or worker role with the credentials of a domain account.

Poe says:

Using Windows Azure Connect to bring cloud services into your corporate Windows domain solves many problems that would otherwise require complex configuration, and that may inadvertently render your system open to attack if you get this configuration wrong.

- An application requires access to resources located on-premises, but the code for the application is running at a different site or within a partner organization.

You can use Windows Azure Connect to implement a simplified VPN between remote sites and partner organizations. Windows Azure Connect is also a compelling technology for solutions that incorporate roaming users who need to connect to on-premises resources such as a remote desktop or file share from their laptop computers.

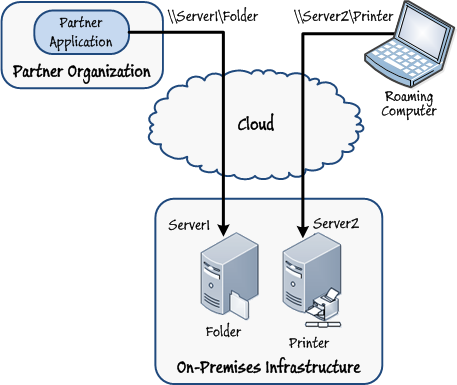

Figure 1


Connecting to on-premises resources from a partner organization and roaming computers

Note: For up-to-date information about best practices for implementing Windows Azure Connect, visit the Windows Azure Connect Team Blog.

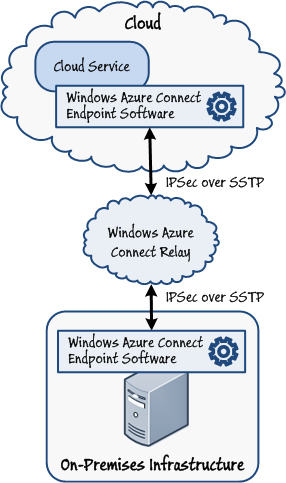

Windows Azure Connect Architecture and Security Model

Windows Azure Connect is implemented as an IPv6 virtual network by Windows Azure Connect endpoint software running on each server and role that participates in the virtual network. The endpoint software transparently handles DNS resolution and manages the IP connections between your servers and roles. It is installed automatically on roles running in the cloud that are configured as connect-enabled. For servers running on-premises, you download and install the Windows Azure Connect endpoint software manually. This software executes in the background as a Windows service. Similarly, if you are using Windows Azure Connect to connect from a VM role, you must install the Windows Azure Connect endpoint software in this role before you deploy it to the cloud.

You use the Windows Azure Management Portal to generate an activation token that you include as part of the configuration for each role and each instance of the Windows Azure Connect endpoint software running on-premises. Windows Azure Connect uses this token to link the connection endpoint to the Windows Azure subscription and ensures that the virtual network is only accessible to authenticated servers and roles. Network traffic traversing the virtual network is protected end-to-end by using certificate-based IPsec over Secure Socket Tunneling Protocol (SSTP). Windows Azure Connect provisions and configures the appropriate certificates automatically, and does not require any manual intervention on the part of the operator.

The Windows Azure Connect endpoint software establishes communications with each node by using the Windows Azure Connect Relay service, hosted and managed by Microsoft in its datacenters. The endpoint software uses only outbound HTTPS connections to communicate with the Windows Azure Connect Relay service. However, when it is installed, the Windows Azure Connect endpoint software creates a firewall rule for Internet Control Message Protocol for IPv6 (ICMPv6) communications that allows Router Solicitation (Type 133) and Router Advertisement (Type 134) messages. These messages are required to establish and maintain an IPv6 link. Do not disable this rule.

Bharath says:

Microsoft implements separate instances of the Windows Azure Connect Relay service in each region. For best performance, choose the relay region closest to your organization when you configure Windows Azure Connect.

You manage the connection security policy that governs which servers and roles can communicate with each other using the Management Portal; you create one or more endpoint groups containing the host servers that comprise your solution (and that have the Windows Azure Connect endpoint software installed), and then specify the Windows Azure roles that they can connect to. This collection of host servers and roles constitutes a single virtual network.
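As a way of reasoning about this policy, the sketch below models endpoint groups as plain data. It is a conceptual model only and does not represent the Management Portal or any Windows Azure Connect API; the `ConnectPolicy` class and the host and role names used with it are hypothetical.

```python
class ConnectPolicy:
    """Conceptual model: endpoint groups pair on-premises hosts with cloud roles."""

    def __init__(self):
        # group name -> {"hosts": set of host names, "roles": set of role names}
        self._groups = {}

    def add_group(self, name, hosts, roles):
        """Define an endpoint group and the roles its hosts may connect to."""
        self._groups[name] = {"hosts": set(hosts), "roles": set(roles)}

    def can_connect(self, host, role):
        """A host and a role can communicate only if some group contains both."""
        return any(
            host in group["hosts"] and role in group["roles"]
            for group in self._groups.values()
        )
```

With a group that contains the host `sqlserver01` and the role `OrdersWebRole`, `can_connect("sqlserver01", "OrdersWebRole")` is true, while any pairing that no group covers is refused. Connectivity is denied by default and granted only by explicit group membership.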

Note: For more information about configuring Windows Azure Connect and creating endpoint groups, see "Windows Azure Connect" on MSDN.

Limitations of Windows Azure Connect

Windows Azure Connect is intended for providing direct access to corporate resources, either located on-premises or in the cloud. It provides a general purpose solution, but is subject to some constraints as summarized by the following list:

- Windows Azure Connect is an end-to-end solution; participating on-premises computers and VM roles in the cloud must be defined as part of the same virtual network. Resources (on-premises and in a VM role) can be protected by using ACLs, although this protection relies on the users accessing those resources being defined in the Windows domain spanning the virtual network or in another domain trusted by this domain. Consequently, Windows Azure Connect is not suitable for sharing resources with public clients, such as customers communicating with your services across the Internet. In this case, Windows Azure Service Bus Relay is a more appropriate technology.

- Windows Azure Connect implements an IPv6 network, although it does not require any IPv6 infrastructure on the underlying platform. However, any applications using Windows Azure Connect to access resources must be IPv6 aware. Older legacy applications may have been constructed based on IPv4, and these applications will need to be updated or replaced to function correctly with Windows Azure Connect.

- If you are using Windows Azure Connect to join roles to your Windows domain, the current version of Windows Azure Connect requires that you install the Windows Azure Connect endpoint software on the domain controller. This same machine must also be a Windows Domain Name System (DNS) server. These requirements may be subject to approval from your IT department, and your organization may implement policies that constrain the configuration of the domain controller. However, these requirements may change in future releases of Windows Azure Connect.

- Windows Azure Connect is specifically intended to provide connectivity between roles running in the cloud and servers located on-premises. It is not suitable for connecting roles together; if you need to share resources between roles, a preferable solution is to use Windows Azure storage, Windows Azure queues, or database tables deployed to the SQL Azure™ technology platform.

- You can only use Windows Azure Connect to establish network connectivity with servers running the Windows® operating system; the Windows Azure Connect endpoint software is not designed to operate with other operating systems. If you require cross-platform connectivity, you should consider using Windows Azure Service Bus.

Accessing On-Premises Services from Outside the Organization Using Windows Azure Service Bus Relay

Windows Azure Service Bus Relay provides the communication infrastructure that enables you to expose a service to the Internet from behind your firewall or NAT router. The Windows Azure Service Bus Relay service provides an easy to use mechanism for connecting applications and services running on either side of the corporate firewall, enabling them to communicate safely without requiring a complex security configuration or custom messaging infrastructure.

Guidelines for Using Windows Azure Service Bus Relay

Windows Azure Service Bus Relay is ideal for enabling secure communications with a service running on-premises, and for establishing peer-to-peer connectivity. Using Windows Azure Service Bus Relay brings a number of benefits:

- It is fully compatible with Windows Communication Foundation (WCF). You can leverage your existing WCF knowledge without needing to learn a new model for implementing services and client applications. Windows Azure Service Bus Relay provides WCF bindings that extend those commonly used by many existing services.

- It is interoperable with platforms and technologies for operating systems other than Windows. Windows Azure Service Bus Relay is based on common standards such as HTTPS and TCP/SSL for sending and receiving messages securely, and it exposes an HTTP REST interface. Any technology that can consume and produce HTTP REST requests can connect to a service that uses Windows Azure Service Bus Relay. You can also build services using the HTTP REST interface.

- It does not require you to open any inbound ports in your organization's firewall. Windows Azure Service Bus Relay uses only outbound connections.

- It supports naming and discovery. Windows Azure Service Bus Relay maintains a registry of active services within your organization's namespace. Client applications that have the appropriate authorization can query this registry to discover these services, and download the metadata necessary to connect to these services and exercise the operations that they expose. The registry is managed by Windows Azure and exploits the scalability and availability that Windows Azure provides.

- It supports federated security to authenticate requests. The identities of users and applications accessing an on-premises service through Windows Azure Service Bus Relay do not have to be members of your organization's security domain.

- It supports many common messaging patterns, including two-way request/response processing, one-way messaging, service remoting, and multicast eventing.

- It supports load balancing. You can open up to 25 listeners on the same endpoint. When the Service Bus receives requests directed towards an endpoint, it load balances the requests across these listeners.
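The load-balancing behavior in the last item can be pictured with a short sketch. The limit of 25 listeners comes from the text above; everything else is an assumption. In particular, the text does not say how requests are distributed, so the round-robin rotation used here is only one plausible strategy, and the `RelayEndpoint` class is hypothetical rather than part of any SDK.

```python
MAX_LISTENERS = 25  # limit stated in the guidelines above


class RelayEndpoint:
    """Toy model of one endpoint that spreads requests across its listeners."""

    def __init__(self):
        self._listeners = []
        self._next = 0

    def open_listener(self, handler):
        """Register another listener on this endpoint, up to the stated limit."""
        if len(self._listeners) >= MAX_LISTENERS:
            raise RuntimeError("endpoint already has the maximum number of listeners")
        self._listeners.append(handler)

    def dispatch(self, request):
        """Hand the request to the next listener in rotation (assumed strategy)."""
        if not self._listeners:
            raise LookupError("no listener is registered on this endpoint")
        handler = self._listeners[self._next % len(self._listeners)]
        self._next += 1
        return handler(request)
```

Adding listeners on the same endpoint increases capacity without any change to client applications, which continue to address the single endpoint.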

You should note that Windows Azure Service Bus Relay is not suitable for implementing all communication solutions. For example, it imposes a temporal dependency between the services running on-premises and the client applications that connect to them; a service must be running before a client application can connect to it, otherwise the client application will receive an EndpointNotFoundException (this limitation applies even with the NetOnewayRelayBinding and NetEventRelayBinding bindings described in the section "Selecting a Binding for a Service" later in this appendix). Furthermore, Windows Azure Service Bus Relay is heavily dependent on the reliability of the network; a service may be running, but if a client application cannot reach it because of a network failure, the client will again receive an EndpointNotFoundException. In these cases, using Windows Azure Service Bus queues may provide a better alternative; see the section "Implementing a Reliable Communications Channel across Boundaries Using Service Bus Queues" later in this appendix for more information.
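One way a client might cope with this temporal dependency is to retry a relayed call a few times and then park the request for later delivery. The sketch below shows that idea only; it is not WCF or Service Bus code. The `EndpointNotFound` class is a stand-in for the EndpointNotFoundException mentioned above, and `call_with_fallback`, its parameters, and the list used as a queue are hypothetical.

```python
import time


class EndpointNotFound(Exception):
    """Stand-in for the EndpointNotFoundException described in the text."""


def call_with_fallback(invoke, request, fallback_queue,
                       attempts=3, delay=0.5, sleep=time.sleep):
    """Try a relayed call; if the service stays unreachable, queue the request.

    Returns the service's result, or None if the request was queued instead.
    """
    for attempt in range(attempts):
        try:
            return invoke(request)
        except EndpointNotFound:
            # Service not running or network failure: back off, then retry.
            if attempt < attempts - 1:
                sleep(delay * (2 ** attempt))
    # Still unreachable: store the request so it can be processed later.
    fallback_queue.append(request)
    return None
```

The retry loop only masks short outages. When the service may be offline for long periods, sending every request through a queue removes the temporal dependency altogether.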

You should consider using Windows Azure Service Bus Relay in the following scenarios:

- An application running in the cloud requires controlled access to your service hosted on-premises. Your service is built using WCF.

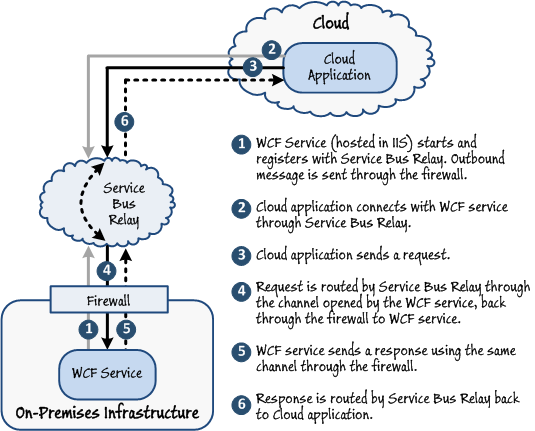

This is the primary scenario for using Windows Azure Service Bus Relay. Your service is built using WCF and the Windows Azure SDK. It uses the WCF bindings provided by the Microsoft.ServiceBus assembly to open an outbound communication channel through the firewall to the Windows Azure Service Bus Relay service and wait for incoming requests. Client applications in the cloud also use the same Windows Azure SDK and WCF bindings to connect to the service and send requests through the Windows Azure Service Bus Relay service. Responses from the on-premises services are routed back through the same communications channel through the Windows Azure Service Bus Relay service to the client application. On-premises services can be hosted in a custom application or by using Internet Information Services (IIS). When using IIS, an administrator can configure the on-premises service to start automatically so that it registers a connection with Windows Azure Service Bus Relay and is available to receive requests from client applications.

Figure 3

Routing requests and responses through Windows Azure Service Bus Relay

This pattern is useful for providing remote access to existing on-premises services that were not originally accessible outside of your organization. You can build a façade around your services that publishes them in Windows Azure Service Bus Relay. Applications that are external to your organization and that have the appropriate security rights can then connect to your services through Windows Azure Service Bus Relay.

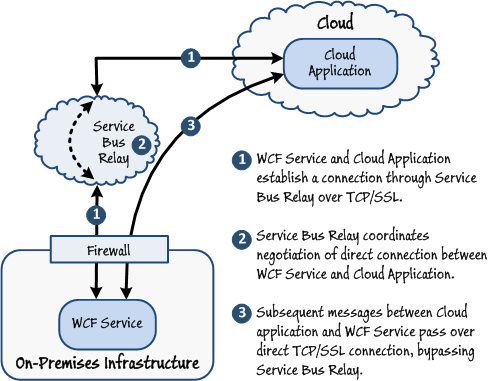

Initially all messages are routed through the Windows Azure Service Bus Relay service, but as an optimization mechanism a service exposing a TCP endpoint can use a direct connection, bypassing the Windows Azure Service Bus Relay service once the service and client have both been authenticated. The coordination of this direct connection is governed by the Windows Azure Service Bus Relay service. The direct socket connection algorithm uses only outbound connections for firewall traversal and relies on a mutual port prediction algorithm for NAT traversal. If a direct connection can be established, the relayed connection is automatically upgraded to the direct connection. If the direct connection cannot be established, the connection will revert back to passing messages through the Windows Azure Service Bus Relay service.

The NAT traversal algorithm is dependent on a very narrowly timed coordination and a best-guess prediction about the expected NAT behavior. Consequently the algorithm tends to have a very high success rate for home and small business scenarios with a small number of clients but degrades in its success rate as the size of the network increases.

Figure 4

Figure 4

Establishing a direct connection over TCP/SSL -

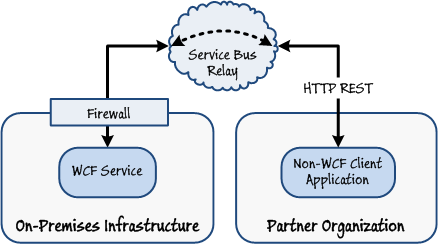

An application running at a partner organization requires controlled access to your service hosted on-premises. The client application is built by using a technology other than WCF.

In this scenario, a client application can use the HTTP REST interface exposed by the Windows Azure Service Bus Relay service to locate an on-premises service and send it requests.

Figure 5

Figure 5

Connecting to an on-premises service by using HTTP REST requests -

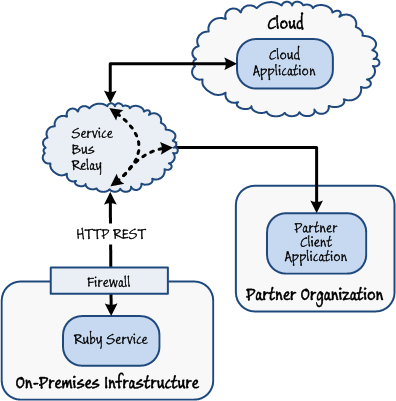

An application running in the cloud or at a partner organization requires controlled access to your service hosted on-premises. Your service is built by using a technology other than WCF.

You may have existing services that you have implemented using a technology such as Perl or Ruby. In this scenario, the service can use the HTTP REST interface to connect to the Windows Azure Service Bus Relay service and await requests.

Figure 6

Figure 6

Connecting to an on-premises service built with Ruby using Windows Azure Service Bus Relay -

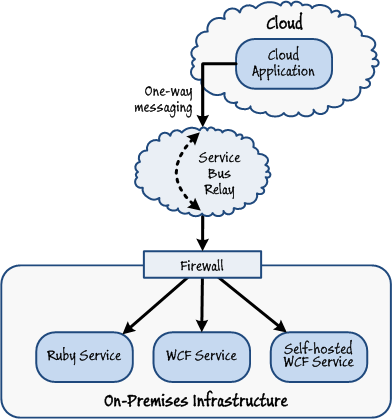

An application running in the cloud or at a partner organization submits requests that can be sent to multiple services hosted on-premises.

In this scenario, a single request from a client application may be sent to and processed by more than one service running on-premises. Effectively, the message from the client application is multicast to all on-premises services registered at the same endpoint with the Windows Azure Service Bus Relay service. All messages sent by the client are one-way; the services do not send a response. This approach is useful for building event-based systems; each message sent by a client application constitutes an event, and services can transparently subscribe to this event by registering with the Windows Azure Service Bus Relay service.
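The multicast behavior described above can be illustrated with a small in-memory sketch. It is not the Service Bus API; the `ToyEventRelay` class, the endpoint name, and the handler lists are hypothetical names chosen for illustration. It only shows the shape of the pattern: several listeners register at the same endpoint, and each one-way message is delivered to all of them without any response being returned to the sender.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[str], None]


class ToyEventRelay:
    """In-memory stand-in for a relay endpoint that multicasts one-way messages."""

    def __init__(self) -> None:
        self._listeners: DefaultDict[str, List[Handler]] = defaultdict(list)

    def register(self, endpoint: str, handler: Handler) -> None:
        # Several services may register at the same endpoint.
        self._listeners[endpoint].append(handler)

    def send(self, endpoint: str, message: str) -> None:
        # One-way delivery: every registered listener gets the message,
        # and nothing is returned to the sender.
        for handler in list(self._listeners.get(endpoint, [])):
            handler(message)


relay = ToyEventRelay()
audit_log: List[str] = []
billing_log: List[str] = []

# Two hypothetical on-premises services subscribe to the same event endpoint.
relay.register("orders/events", audit_log.append)
relay.register("orders/events", billing_log.append)

result = relay.send("orders/events", "OrderPlaced:42")
```

After the call, both `audit_log` and `billing_log` hold the same event, and `result` is `None` because the sender receives no reply.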

Figure 7

Figure 7

Multicasting using Windows Azure Service Bus Relay Bharath says:

Bharath says:

You can also implement an eventing system by using Service Bus topics and subscriptions. Windows Azure Service Bus Relay is very lightweight and efficient, but topics and subscriptions provide more flexibility. Guidelines for routing messages using Service Bus topics and subscriptions are provided in "Appendix D - Implementing Business Logic and Message Routing across Boundaries." -

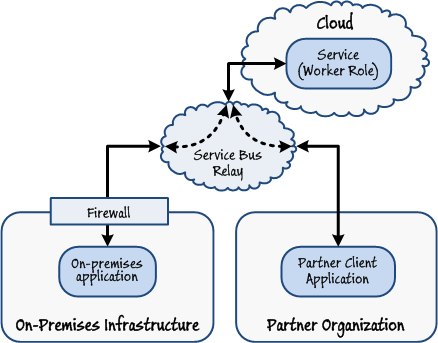

An application running on-premises or at a partner organization requires controlled access to your service hosted in the cloud. Your service is implemented as a Windows Azure worker role and is built using WCF.

This scenario is the opposite of the situation described in the previous cases. In many situations, an application running on-premises or at a partner organization can access a WCF service implemented as a worker role directly, without the intervention of Windows Azure Service Bus Relay. However, this scenario is valuable if the WCF service was previously located on-premises and code running elsewhere connected via Windows Azure Service Bus Relay as described in the preceding examples, but the service has now been migrated to Windows Azure. Refactoring the service, relocating it to the cloud and publishing it through Windows Azure Service Bus Relay enables you to retain the same endpoint details, so client applications do not have to be modified or reconfigured in any way; they just carry on running as before. This architecture also enables you to more easily protect communications with the service by using the appropriate WCF bindings.

Figure 8

Routing requests to a worker role through Windows Azure Service Bus Relay

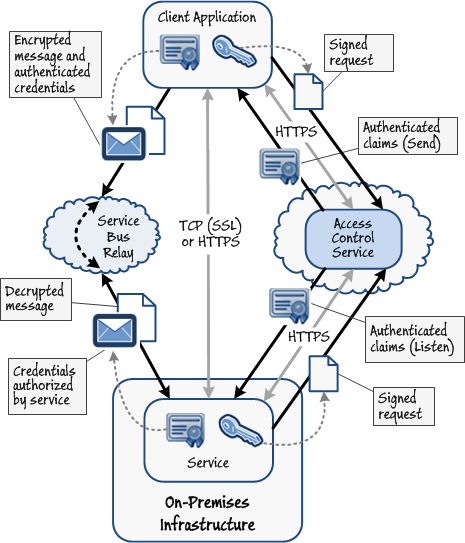

Guidelines for Securing Windows Azure Service Bus Relay

Windows Azure Service Bus Relay endpoints are organized by using Service Bus namespaces. When you create a new service that communicates with client applications by using Windows Azure Service Bus Relay you can use the Management Portal to generate a new service namespace. This namespace must be unique, and it determines the uniform resource identifier (URI) that your service exposes; client applications specify this URI to connect to your service through Windows Azure Service Bus Relay. For example, if you create a namespace with the value TreyResearch and you publish a service named OrdersService in this namespace, the full URI of the service is sb://treyresearch.servicebus.windows.net/OrdersService.

The services that you expose through Windows Azure Service Bus Relay can provide access to sensitive data, and are themselves valuable assets; therefore you should protect these services. There are several facets to this task:

Poe says:

Remember that even though Service Bus is managed and maintained by one or more Microsoft datacenters, applications connect to Windows Azure Service Bus Relay across the Internet. Unauthorized applications that can connect to your Service Bus namespaces can implement common attacks, such as denial of service to disrupt your operations, or Man-in-the-Middle to steal data as it is passed to your services. Therefore, you should protect your Service Bus namespaces and the services that use them as carefully as you would defend your on-premises assets.

- You should restrict access to your Service Bus namespace to authenticated services and client applications only. This requires that each service and client application runs with an associated identity that the Windows Azure Service Bus Relay service can verify. As described in "Appendix B - Authenticating Users and Authorizing Requests," Service Bus includes its own identity provider as part of the Windows Azure Access Control Service (ACS), and you can define identities and keys for each service and user running a client application. You can also implement federated security through ACS to authenticate requests against a security service running on-premises or at a partner organization.

When you configure access to a service exposed through Windows Azure Service Bus Relay, the realm of the relying party application with which you associate authenticated identities is the URL of the service endpoint on which the service accepts requests.

Bharath says:

Service Bus can also use third party identity providers, such as Windows Live ID, Google, and Yahoo!, but the default is to use the built-in identity provider included within ACS.

- You should limit the way in which clients can interact with the endpoints published through your Service Bus namespace. For example, most client applications should only be able to send messages to a service (and obtain a response) while services should be only able to listen for incoming requests. Service Bus defines the claim type net.windows.servicebus.action which has the possible values Send, Listen, and Manage. Using ACS you can implement a rule group for each URI defined in your Service Bus namespace that maps an authenticated identity to one or more of these claim values.

When a service starts running and attempts to advertise an endpoint, it provides its credentials to the Windows Azure Service Bus Relay service. These credentials are validated, and are used to determine whether the service should be allowed to create the endpoint. A common approach used by many services is to define an endpoint behavior that references the transportClientEndpointBehavior element in the configuration file. This element has a clientCredentials element that enables a service to specify the name of an identity and the corresponding symmetric key to verify this identity. A client application can take a similar approach, except that it specifies the name and symmetric key for the identity running the application rather than that of the service.

Note:

For more information about protecting services through Windows Azure Service Bus Relay, see "Securing and Authenticating a Service Bus Connection" on MSDN. Note that using the shared secret token provider is just one way of supplying the credentials for the service and the client application. When you specify this provider, ACS itself authenticates the name and key, and if they are valid it generates a Simple Web Token (SWT) containing the claims for this identity, as determined by the rules configured in ACS. These claims determine whether the service or client application has the appropriate rights to listen for, or send messages. Other authentication provider options are available, including using SAML tokens. You can also specify a different Security Token Service (STS) other than that provided by ACS to authenticate credentials and issue claims.

- When a client application has established a connection to a service through Windows Azure Service Bus Relay, you should carefully control the operations that the client application can invoke. The authorization process is governed by the way in which you implement the service and is outside the scope of ACS, although you can use ACS to generate the claims for an authenticated client, which your service can use for authorization purposes.

Markus says:

If you are using WCF to implement your services, you should consider building a Windows Identity Foundation authorization provider to decouple the authorization rules from the business logic of your service.

- All communications passing between your service and client applications are likely to cross a public network or the Internet. You should protect these communications by using an appropriate level of data transfer security, such as SSL or HTTPS.

Markus says:

If you are using WCF to implement your services, implementing transport security is really just a matter of selecting the most appropriate WCF binding and then setting the relevant properties to specify how to encrypt and protect data.
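The rule-group idea in the guidelines above can be sketched as a lookup from an authenticated identity and endpoint to a set of action claims. This is a simplified illustration only, not how ACS is configured or called. The identity names, the endpoint path, and the `issue_claims` and `is_allowed` helpers are assumptions made for the example; only the claim type net.windows.servicebus.action and its values Send, Listen, and Manage come from the text.

```python
from typing import Dict, Set, Tuple

ACTION_CLAIM_TYPE = "net.windows.servicebus.action"
VALID_ACTIONS = {"Send", "Listen", "Manage"}

# Hypothetical rule group: (identity, endpoint path) -> granted claim values.
RULES: Dict[Tuple[str, str], Set[str]] = {
    ("orders-service", "/OrdersService"): {"Listen"},
    ("partner-client", "/OrdersService"): {"Send"},
    ("admin", "/OrdersService"): {"Send", "Listen", "Manage"},
}


def issue_claims(identity: str, endpoint: str) -> Dict[str, Set[str]]:
    """Return the action claims for an already-authenticated identity."""
    granted = RULES.get((identity, endpoint), set()) & VALID_ACTIONS
    return {ACTION_CLAIM_TYPE: granted}


def is_allowed(claims: Dict[str, Set[str]], action: str) -> bool:
    """Check whether the claims permit the requested action."""
    return action in claims.get(ACTION_CLAIM_TYPE, set())


service_claims = issue_claims("orders-service", "/OrdersService")
client_claims = issue_claims("partner-client", "/OrdersService")
stranger_claims = issue_claims("unknown", "/OrdersService")
```

In this sketch the service identity may listen but not send, the partner client may send but not listen, and an identity with no matching rule receives an empty claim set.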

Figure 9 illustrates the core recommendations for protecting services exposed through Windows Azure Service Bus Relay.

Note:

You can configure message authentication and encryption by configuring the WCF binding used by the service. For more information, see "Securing and Authenticating a Service Bus Connection" on MSDN.

Many organizations implement outbound firewall rules that are based on IP address allow-listing. In this configuration, to provide access to Service Bus or ACS you must add the addresses of the corresponding Windows Azure services to your firewall. These addresses vary according to the region hosting the services, and they may also change over time, but the following list shows the addresses for each region at the time of writing:

- Asia (SouthEast): 207.46.48.0/20, 111.221.16.0/21, 111.221.80.0/20

- Asia (East): 111.221.64.0/22, 65.52.160.0/19

- Europe (West): 94.245.97.0/24, 65.52.128.0/19

- Europe (North): 213.199.128.0/20, 213.199.160.0/20, 213.199.184.0/21, 94.245.112.0/20, 94.245.88.0/21, 94.245.104.0/21, 65.52.64.0/20, 65.52.224.0/19

- US (North/Central): 207.46.192.0/20, 65.52.0.0/19, 65.52.48.0/20, 65.52.192.0/19, 209.240.220.0/23

- US (South/Central): 65.55.80.0/20, 65.54.48.0/21, 65.55.64.0/20, 70.37.48.0/20, 70.37.64.0/18, 65.52.32.0/21, 70.37.160.0/21
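If you do maintain an allow-list, a small script can help you check which listed region (if any) covers a given address. The following sketch uses Python's standard ipaddress module and copies only two of the regions from the list above for brevity; the `region_for` helper and the sample addresses are hypothetical. Because the published ranges may change over time, treat the table in the code as illustrative data rather than a current reference.

```python
import ipaddress
from typing import Dict, List, Optional

# Two regions copied from the list above; extend with the others as needed.
REGION_RANGES: Dict[str, List[str]] = {
    "Asia (East)": ["111.221.64.0/22", "65.52.160.0/19"],
    "Europe (West)": ["94.245.97.0/24", "65.52.128.0/19"],
}


def region_for(address: str) -> Optional[str]:
    """Return the first region whose CIDR ranges contain the address."""
    ip = ipaddress.ip_address(address)
    for region, cidrs in REGION_RANGES.items():
        if any(ip in ipaddress.ip_network(cidr) for cidr in cidrs):
            return region
    return None


west_europe = region_for("65.52.130.10")
east_asia = region_for("65.52.170.1")
not_listed = region_for("10.0.0.1")
```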

Poe says:

IP address allow-listing is not really a suitable security strategy for an organization when the target addresses identify a massively multi-tenant infrastructure such as Windows Azure (or any other public cloud platform, for that matter).

Guidelines for Naming Services in Windows Azure Service Bus Relay

If you have a large number of services, you should adopt a standardized convention for naming the endpoints for these services. This will help you manage, protect, and monitor services and the client applications that connect to them. Many organizations commonly adopt a hierarchical approach. For example, if Trey Research had sites in Chicago, New York, and Washington, each of which provided ordering and shipping services, an administrator might register URIs following the naming convention shown in this list:

- sb://treyresearch.servicebus.windows.net/chicago/ordersservice

- sb://treyresearch.servicebus.windows.net/chicago/shippingservice

- sb://treyresearch.servicebus.windows.net/newyork/ordersservice

- sb://treyresearch.servicebus.windows.net/newyork/shippingservice

- sb://treyresearch.servicebus.windows.net/washington/ordersservice

- sb://treyresearch.servicebus.windows.net/washington/shippingservice

However, when you register the URI for a service with Windows Azure Service Bus Relay, no other service can listen on any URI scoped at a lower level than your service. This means that if, in the future, Trey Research decided to implement an additional orders service for exclusive customers, they could not register it by using a URI such as sb://treyresearch.servicebus.windows.net/chicago/ordersservice/exclusive.

To avoid problems such as this, you should ensure that the initial part of each URI is unique. You can generate a new GUID for each service, and prefix the city and service name elements of the URI with this GUID. In the Trey Research example, the URIs for the Chicago services, including the exclusive orders service, could be:

- sb://treyresearch.servicebus.windows.net/B3B4D086-BEB9-4773-97D3-064B0DD306EA/chicago/ordersservice

- sb://treyresearch.servicebus.windows.net/DD986578-EAB6-FC84-5490-075F34CD8B7A/chicago/ordersservice/exclusive

- sb://treyresearch.servicebus.windows.net/A8B3CC55-1256-5632-8A9F-FF0675438EEC/chicago/shippingservice

For more information about naming guidelines for Windows Azure Service Bus Relay services, see "AppFabric Service Bus – Things You Should Know – Part 1 of 3 (Naming Your Endpoints)."
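The scoping rule and the GUID-prefix workaround described in this section can be sketched as follows. This is a local model of the rule as the text states it, not a call into Service Bus; the `is_blocked` and `guid_scoped_uri` helpers are hypothetical, and treating URIs as case-insensitive is an assumption made for the example.

```python
import uuid
from typing import Iterable

BASE = "sb://treyresearch.servicebus.windows.net"


def is_blocked(new_uri: str, registered: Iterable[str]) -> bool:
    """True if new_uri equals, or is scoped below, an already registered URI."""
    candidate = new_uri.lower().rstrip("/")
    for uri in registered:
        existing = uri.lower().rstrip("/")
        if candidate == existing or candidate.startswith(existing + "/"):
            return True
    return False


def guid_scoped_uri(*segments: str) -> str:
    """Build a URI whose first path segment is a freshly generated GUID."""
    return "/".join([BASE, str(uuid.uuid4()).upper(), *segments])


registered = [f"{BASE}/chicago/ordersservice"]

# The exclusive service cannot be registered below the existing orders service...
blocked = is_blocked(f"{BASE}/chicago/ordersservice/exclusive", registered)

# ...but a GUID-prefixed URI does not fall under any registered scope.
exclusive_uri = guid_scoped_uri("chicago", "ordersservice", "exclusive")
```

The trade-off of this convention is that the URIs are no longer guessable from the site and service name, so clients need some way to look them up.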

Selecting a Binding for a Service

The purpose of Windows Azure Service Bus Relay is to provide a safe and reliable connection to your services running on-premises for client applications executing on the other side of your corporate firewall. Once a service has registered with the Windows Azure Service Bus Relay service, much of the complexity associated with protecting the service and authenticating and authorizing requests can be handled transparently outside the scope of the business logic of the service. If you are using WCF to implement your services, you can use the same types and APIs that you are familiar with in the System.ServiceModel assembly. The Windows Azure SDK includes transport bindings, behaviors, and other extensions in the Microsoft.ServiceBus assembly for integrating a WCF service with Windows Azure Service Bus Relay.

Markus says:

If you are familiar with building services and client applications using WCF, you should find Windows Azure Service Bus Relay quite straightforward.

As with a regular WCF service, selecting an appropriate binding for a service that uses Windows Azure Service Bus Relay has an impact on the connectivity for client applications and the functionality and security that the transport provides. The Microsoft.ServiceBus assembly provides four sets of bindings:

- HTTP bindings; BasicHttpRelayBinding, WSHttpRelayBinding, WS2007HttpRelayBinding and WebHttpRelayBinding.

These bindings are very similar to their standard WCF equivalents (BasicHttpBinding, WSHttpBinding, WS2007HttpBinding, and WebHttpBinding) except that they are designed to extend the underlying WCF channel infrastructure and route messages through the Windows Azure Service Bus Relay service. They offer the same connectivity and feature set as their WCF counterparts, and they can operate over HTTP and HTTPS. For example, the WS2007HttpRelayBinding binding supports SOAP message-level security, reliable sessions, and transaction flow. These bindings open a channel to the Windows Azure Service Bus Relay service by using outbound connections only; you do not need to open any additional inbound ports in your corporate firewall.

- TCP binding; NetTcpRelayBinding.

This binding is functionally equivalent to the NetTcpBinding binding of WCF. It supports duplex callbacks and offers better performance than the HTTP bindings although it is less portable. Client applications connecting to a service using this binding may be required to send requests and receive responses using the TCP binary encoding, depending on how the binding is configured by the service. Although this binding does not require you to open any additional inbound ports in your corporate firewall, it does necessitate that you open outbound TCP port 808, and port 828 if you are using SSL.

This binding also supports the hybrid mode through the ConnectionMode property (the HTTP bindings do not support this type of connection). The default connection mode for this binding is Relayed, but you should consider setting it to Hybrid if you want to take advantage of the performance improvements that bypassing the Windows Azure Service Bus Relay service provides. However, the NAT prediction algorithm that establishes the direct connection between the service and client application requires that you also open outbound TCP ports 818 and 819 in your corporate firewall. Finally, note that the hybrid connection mode requires that the binding is configured to implement message-level security.

Markus says:

The network scheme used for addresses advertised through the NetTcpRelayBinding binding is sb rather than the net.tcp scheme used by the WCF NetTcpBinding binding. For example, the address of the Orders service implemented by Trey Research could be sb://treyresearch.servicebus.windows.net/OrdersService.

- One-way binding; NetOnewayRelayBinding.

This binding implements one-way buffered messaging. A client application sends requests to a buffer managed by the Windows Azure Service Bus Relay service which delivers the message to the service. This binding is suitable for implementing a service that provides asynchronous operations as they can be queued and scheduled by the Windows Azure Service Bus Relay service, ensuring an orderly throughput without swamping the service. However message delivery is not guaranteed; if the service shuts down before the Windows Azure Service Bus Relay service has forwarded messages to it then these messages will be lost. Similarly, the order in which messages submitted by a client application are passed to the service is not guaranteed either.

This binding uses a TCP connection for the service, so it requires outbound ports 808 and 828 (for SSL) to be open in your firewall.

- Multicasting binding; NetEventRelayBinding.

This binding is a specialized version of the NetOnewayRelayBinding binding that enables multiple services to register the same endpoint with the Windows Azure Service Bus Relay service. Client applications can connect using either the NetEventRelayBinding binding or the NetOnewayRelayBinding binding. All communications are one-way, and neither message delivery nor message order is guaranteed.

This binding is ideal for building an eventing system; n client applications can connect to n services, with the Windows Azure Service Bus Relay service effectively acting as the event hub. As with the NetOnewayRelayBinding binding, this binding uses a TCP connection for the service, so it requires outbound ports 808 and 828 (for SSL) to be open.
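The outbound firewall requirements stated for the TCP-based bindings above can be summarized in a small helper. This is only a restatement of the port numbers given in this section as a lookup function; the `outbound_tcp_ports` name and its parameters are hypothetical, and the HTTP bindings are deliberately left out because the text gives no port numbers for them.

```python
from typing import Set

TCP_BASED_BINDINGS = {
    "NetTcpRelayBinding",
    "NetOnewayRelayBinding",
    "NetEventRelayBinding",
}


def outbound_tcp_ports(binding: str, use_ssl: bool = False,
                       hybrid: bool = False) -> Set[int]:
    """Return the outbound TCP ports this section says must be open."""
    if binding not in TCP_BASED_BINDINGS:
        raise ValueError(f"No TCP port guidance recorded for {binding}")
    if hybrid and binding != "NetTcpRelayBinding":
        raise ValueError("Hybrid mode is described only for NetTcpRelayBinding")
    ports = {808}
    if use_ssl:
        ports.add(828)
    if hybrid:
        # Ports used by the NAT prediction algorithm for direct connections.
        ports.update({818, 819})
    return ports


relayed_ports = outbound_tcp_ports("NetTcpRelayBinding")
hybrid_ssl_ports = outbound_tcp_ports("NetTcpRelayBinding", use_ssl=True, hybrid=True)
event_ports = outbound_tcp_ports("NetEventRelayBinding", use_ssl=True)
```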

Windows Azure Service Bus Relay and Windows Azure Connect Compared

There is some overlap in the features provided by Windows Azure Service Bus Relay and Windows Azure Connect. However, when deciding which of these technologies you should use, consider the following points:

- Windows Azure Service Bus Relay can provide access to services that wrap on-premises resources. These services can act as façades that implement highly controlled and selective access to the wrapped resources. Client applications making requests can be authenticated with a service by using ACS and federated security; they do not have to provide an identity that is defined within your organization's corporate Windows domain.

Windows Azure Connect is intended to provide direct access to resources that are not exposed publicly. You protect these resources by defining ACLs, but all client applications using these resources must be provisioned with an identity that is defined within your organization's corporate Windows domain.

- Windows Azure Service Bus Relay maintains a registry of publicly accessible services within a Windows Azure namespace. A client application with the appropriate security rights can query this registry and obtain a list of services and their metadata (if published), and use this information to connect to the service and invoke its operations. This mechanism supports dynamic client applications that discover services at runtime.

Windows Azure Connect does not support enumeration of resources; a client application cannot easily discover resources at runtime.

- Client applications communicating with a service through Windows Azure Service Bus Relay can establish a direct connection, bypassing the Windows Azure Service Bus Relay service once an initial exchange has occurred.

All Windows Azure Connect requests pass through the Windows Azure Service Bus Relay service; you cannot make direct connections to resources (although the way in which Windows Azure Connect uses the Windows Azure Service Bus Relay service is transparent).

Implementing a Reliable Communications Channel across Boundaries Using Service Bus Queues

Service Bus queues enable you to decouple services from the client applications that use them, both in terms of functionality (a client application does not have to implement any specific interface or proxy to send messages to a receiver) and time (a receiver does not have to be running when a client application posts it a message). Service Bus queues implement reliable, transactional messaging with guaranteed delivery, so messages are never inadvertently lost. Moreover, Service Bus queues are resilient to network failure; as long as a client application can post a message to a queue it will be delivered when the service is next able to connect to the queue.

When you are dealing with message queues, keep in mind that client applications and services can both send and receive messages. The descriptions in this section therefore refer to "senders" and "receivers" rather than client applications and services.

Service Bus Messages

A Service Bus message is an instance of the BrokeredMessage class. It consists of two elements; the message body which contains the information being sent, and a collection of message properties which can be used to add metadata to the message.

The message body is opaque to the Service Bus queue infrastructure and it can contain any application-defined information, as long as this data can be serialized. The message body may also be encrypted for additional security. The contents of the message are never visible outside of a sending or receiving application, not even in the Management Portal.

Markus says:

The data in a message must be serializable. By default the BrokeredMessage class uses a DataContractSerializer object with a binary XmlDictionaryWriter to perform this task, although you can override this behavior and provide your own XmlObjectSerializer object if you need to customize the way the data is serialized. The body of a message can also be a stream.

In contrast, the Service Bus queue infrastructure can examine the metadata of a message. Some of the metadata items define standard messaging properties that an application can set, and that the Service Bus queue infrastructure uses for performing tasks such as uniquely identifying a message, specifying the session for a message, indicating the expiration time for a message if it is undelivered, and many other common operations. Messages also expose a number of system-managed read-only properties, such as the size of the message and the number of times a receiver has retrieved the message in PeekLock mode but not completed the operation successfully. Additionally, an application can define custom properties and add them to the metadata. These items are typically used to pass additional information describing the contents of the message, and they can also be used by Service Bus to filter and route messages to message subscribers.

Guidelines for Using Service Bus Queues

Service Bus queues are perfect for implementing a system based on asynchronous messaging. You can build applications and services that utilize Service Bus queues by using the Windows Azure SDK. This SDK includes APIs for interacting directly with the Service Bus queues object model, but it also provides bindings that enable WCF applications and services to connect to queues in a similar way to consuming Microsoft Windows Message Queuing queues in an enterprise environment.

Bharath says:

Prior to the availability of Service Bus queues, Windows Azure provided message buffers. These are still available, but they are only included for backwards compatibility. If you are implementing a new system, you should use Service Bus queues instead. You should also note that Service Bus queues are different from Windows Azure storage queues, which are used primarily as a communication mechanism between web and worker roles running on the same site.

Service Bus queues enable a variety of common patterns and can assist you in building highly elastic solutions as described in the following scenarios:

- A sender needs to post one or more messages to a receiver. Messages should be delivered in order and message delivery must be guaranteed, even if the receiver is not running when the sender posts the message.

This is the most common scenario for using a Service Bus queue and is the typical model for implementing asynchronous processing. A sender posts a message to a queue and at some later point in time a receiver retrieves the message from the same queue. A Service Bus queue is an inherently first-in-first-out (FIFO) structure, and by default messages will be received in the order in which they are sent.

The sender and receiver are independent; they may be executing remotely from each other and, unlike the situation when using Windows Azure Service Bus Relay, they do not have to be running concurrently. For example, the receiver may be temporarily offline for maintenance. The queue effectively acts as a buffer, reliably storing messages for subsequent processing. An important point to note is that although Service Bus queues reside in the cloud, both the sender and the receiver can be located elsewhere. For example, a sender could be an on-premises application and a receiver could be a service running in a partner organization.

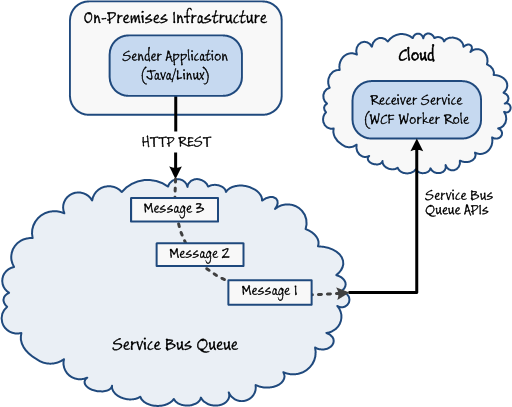

The Service Bus queue APIs in the Windows Azure SDK are actually wrappers around a series of HTTP REST interfaces. Applications and services built by using the Windows Azure SDK, and applications and services built using technologies not supported by the Windows Azure SDK, can all interact with Service Bus queues. Figure 10 shows an example architecture where the sender is an on-premises application and the receiver is a worker role running in the cloud. In this example, the on-premises application is built using the Java programming language and is running on Linux, so it performs HTTP REST requests to post messages. The worker role is a WCF service built using the Windows Azure SDK and the Service Bus queue APIs.

Figure 10Sending and receiving messages, in order, using a Service Bus queue

Figure 10Sending and receiving messages, in order, using a Service Bus queue -

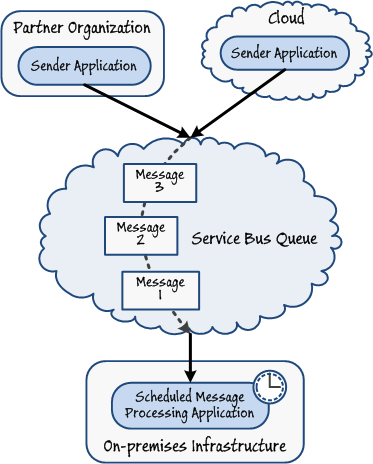

Multiple senders running at partner organizations or in the cloud need to send messages to your system. These messages may require complex processing when they are received. The receiving application runs on-premises, and you need to optimize the time at which it executes so that you do not impact your core business operations.

Service Bus queues are highly suitable for batch-processing scenarios where the message-processing logic runs on-premises and may consume considerable resources. In this case, you may wish to perform message processing at off-peak hours so as to avoid a detrimental effect on the performance of your critical business components. To accomplish this style of processing, senders can post requests to a Service Bus queue while the receiver is offline. At some scheduled time you can start the receiver application running to retrieve and handle each message in turn. When the receiver has drained the queue it can shut down and release any resources it was using.

Figure 11

Implementing batch processing by using a Service Bus queue

You can use a similar solution to address the fan-in problem, where an unexpectedly large number of client applications suddenly post a high volume of requests to a service running on-premises. If the service attempts to process these requests synchronously it could easily be swamped, leading to poor performance and failures caused by client applications being unable to connect. In this case, you could restructure the service to use a Service Bus queue. Client applications post messages to this queue, and the service processes them in its own time. In this scenario, the Service Bus queue acts as a load-leveling technology, smoothing the workload of the receiver while not blocking the senders.
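The batch-processing and load-leveling pattern can be illustrated with Python's standard queue module standing in for a Service Bus queue. This is a minimal in-process sketch, not Service Bus code; the sender names and the `drain` helper are hypothetical, and the upper-casing step is a placeholder for the real message-processing logic.

```python
import queue
from typing import List


def drain(pending: "queue.Queue[str]") -> List[str]:
    """Process every waiting message in turn, then return when the queue is empty."""
    processed: List[str] = []
    while True:
        try:
            message = pending.get_nowait()
        except queue.Empty:
            break  # the queue is drained; the receiver can shut down
        processed.append(message.upper())  # stand-in for the real work
        pending.task_done()
    return processed


pending: "queue.Queue[str]" = queue.Queue()

# Senders post while the receiver is offline; they are never blocked.
for sender in ("partner-a", "partner-b"):
    for n in range(3):
        pending.put(f"{sender}:request-{n}")

# Later, at a scheduled off-peak time, the receiver starts and drains the queue.
handled = drain(pending)
```

The senders and the receiver never interact directly, which is what allows the receiver to run on its own schedule and at its own pace.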

-

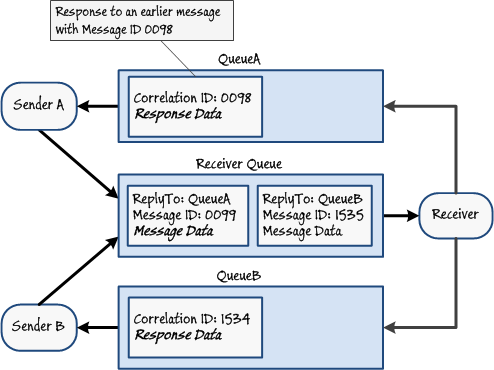

A sender posting request messages to a queue expects a response to these requests.

A message queue is an inherently asynchronous one-way communication mechanism. If a sender posting a request message expects a response, the receiver can post this response to a queue and the sender can receive the response from this queue. Although this is a straightforward mechanism in the simple case with a single sender posting a single request to a single receiver that replies with a single response, in a more realistic scenario there will be multiple senders, receivers, requests, and responses. In implementing a solution, you have to address two problems:

- How can you prevent a response message being received by the wrong sender?

- If a sender can post multiple request messages, how does the sender know which response message corresponds to which request?

The answer to the first question is to create an additional queue that is specific to each sender. All senders post messages to the receiver using the same queue, but listen for the response on their own specific queues. All Service Bus messages have a collection of properties that you use to include metadata. A sender can populate the ReplyTo metadata property of a request message with a value that indicates which queue the receiver should use to post the response.

All messages should have a unique MessageId value, set by the sender. The second problem can be handled by the receiver setting the CorrelationId property of the response message to the value held in the MessageId of the original request. In this way, the sender can determine which response relates to which original request.
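The two techniques just described, a per-sender response queue named in ReplyTo and a CorrelationId that echoes the request's MessageId, can be sketched with in-memory queues. The `Message` dataclass below only mimics the relevant properties and is not the BrokeredMessage class; the queue names and the `post_request` and `serve_one` helpers are hypothetical, and reversing the body stands in for real processing.

```python
import itertools
import queue
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Message:
    message_id: str
    body: str
    reply_to: Optional[str] = None
    correlation_id: Optional[str] = None


queues: Dict[str, "queue.Queue[Message]"] = {
    "requests": queue.Queue(),          # shared by all senders
    "replies-sender-a": queue.Queue(),  # one response queue per sender
    "replies-sender-b": queue.Queue(),
}
_ids = itertools.count(1)


def post_request(sender: str, body: str) -> str:
    """Post a request that names the sender's own response queue in reply_to."""
    message_id = f"{sender}-{next(_ids)}"
    queues["requests"].put(Message(message_id, body, reply_to=f"replies-{sender}"))
    return message_id


def serve_one() -> None:
    """Receiver: handle one request and reply on the queue named by reply_to."""
    request = queues["requests"].get_nowait()
    response = Message(
        message_id=f"resp-{next(_ids)}",
        body=request.body[::-1],             # stand-in for real processing
        correlation_id=request.message_id,   # ties the response to its request
    )
    queues[request.reply_to].put(response)


first = post_request("sender-a", "abc")
second = post_request("sender-b", "xyz")
third = post_request("sender-a", "hello")
for _ in range(3):
    serve_one()

# Sender A matches each response to its request by correlation_id.
replies_a: Dict[str, str] = {}
while not queues["replies-sender-a"].empty():
    reply = queues["replies-sender-a"].get_nowait()
    replies_a[reply.correlation_id] = reply.body
```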

Figure 12

Implementing two-way messaging with response queues and message correlation

- You require a reliable communications channel between a sender and a receiver.

You can extend the message-correlation approach if you need to implement a reliable communications channel based on Service Bus queues. Service Bus queues are themselves inherently reliable, but the connection to them across the network might not be, and neither might the applications sending or receiving messages. It may therefore be necessary not only to implement retry logic to handle the transient network errors that might occur when posting or receiving a message, but also to incorporate a simple end-to-end protocol between the sender and receiver in the form of acknowledgement messages.

As a simple example, when a sender posts a message to a queue, it can wait (using an asynchronous task or background thread) for the receiver to respond with an acknowledgement. The CorrelationId property of the acknowledgement message should match the MessageId property of the original request. If no correlating response appears after a specified time interval, the sender can repost the message and wait again. This process can repeat until either the sender receives an acknowledgement or a specified number of attempts has been made, in which case the sender gives up and handles the situation as a failure to send the message.

However, it is possible that the receiver has retrieved the message posted by the sender and has acknowledged it, but this acknowledgement has failed to reach the sender. In this case, the sender may post a duplicate message that the receiver has already processed. To handle this situation, the receiver should maintain a list of message IDs for messages that it has handled. If it receives another message with the same ID, it should simply reply with an acknowledgement message but not attempt to repeat the processing associated with the message.
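A minimal Python sketch of this acknowledgement protocol follows. It simulates the exchange in memory rather than using Service Bus; the `Receiver` class, the `send_reliably` function, and the `ack_lost` parameter (which stands in for a timeout while waiting for an acknowledgement) are assumptions made for illustration.

```python
class Receiver:
    """Processes each message ID once but acknowledges every delivery."""

    def __init__(self):
        self.handled_ids = set()  # IDs of messages already processed
        self.processed = []       # bodies that were actually processed

    def on_message(self, message_id, body):
        if message_id not in self.handled_ids:
            self.handled_ids.add(message_id)
            self.processed.append(body)
        # Always acknowledge; correlation_id echoes the request's message ID.
        return {"correlation_id": message_id}


def send_reliably(message_id, body, receiver, ack_lost, max_attempts=3):
    """Repost until a matching acknowledgement arrives or attempts run out.

    ack_lost is a hypothetical sequence of booleans that simulates whether
    the acknowledgement for each attempt is lost in transit.
    Returns the attempt number that succeeded, or None on failure.
    """
    lost = iter(ack_lost)
    for attempt in range(1, max_attempts + 1):
        ack = receiver.on_message(message_id, body)
        if next(lost, False):
            continue  # acknowledgement lost; a real sender would time out here
        if ack["correlation_id"] == message_id:
            return attempt
    return None  # give up and treat this as a failure to send
```

If the first acknowledgement is lost, the second attempt delivers a duplicate; the receiver acknowledges it again but `processed` still contains the body only once.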

Note: Do not use the duplicate detection feature of Service Bus queues to eliminate duplicate messages in this scenario. If you enable duplicate detection, repeated request or acknowledgement messages may be silently removed, causing the end-to-end protocol to fail. For example, if a receiver reposts an acknowledgement message, duplicate detection may cause this reposted message to be removed and the sender will eventually abort, possibly causing the system to enter an inconsistent state: the sender assumes that the receiver has not received or processed the message, while the receiver is not aware that the sender has aborted.

- Your system experiences spikes in the number of messages posted by senders and needs to handle a highly variable volume of messages in a timely manner.

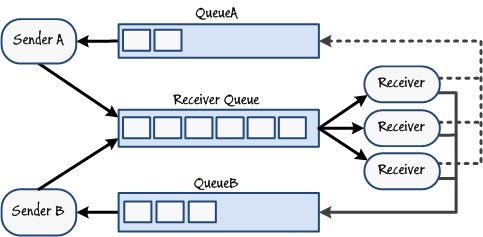

Service Bus queues are a good solution for implementing load-leveling, preventing a receiver from being overwhelmed by a sudden influx of messages. However, this approach is only useful if the messages being sent are not time sensitive. In some situations, it may be important for a message to be processed within a short period of time. In this case, the solution is to add further receivers listening for messages on the same queue. This fan-out architecture naturally balances the load amongst the receivers as they compete for messages from the queue; the semantics of message queuing prevents the same message from being dequeued by two concurrent receivers. A monitor process can query the length of the queue, and dynamically start and stop receiver instances as the queue grows or drains.
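The competing-receivers idea can be sketched in Python with the standard library's thread-safe queue standing in for a Service Bus queue. The `run_receivers` and `desired_receivers` functions, and the scaling thresholds, are hypothetical and only illustrate the pattern.

```python
import queue
import threading


def run_receivers(messages, receiver_count):
    """Drain a shared queue with several competing receivers.

    Returns a dict mapping receiver index -> list of messages it handled.
    """
    shared = queue.Queue()
    for message in messages:
        shared.put(message)
    handled = {i: [] for i in range(receiver_count)}

    def receive(index):
        while True:
            try:
                message = shared.get_nowait()  # each message is dequeued only once
            except queue.Empty:
                return  # queue drained; this receiver stops
            handled[index].append(message)

    threads = [threading.Thread(target=receive, args=(i,))
               for i in range(receiver_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return handled


def desired_receivers(queue_length, per_receiver=100, maximum=10):
    """Hypothetical scaling rule: one receiver per `per_receiver` waiting messages."""
    needed = -(-queue_length // per_receiver)  # ceiling division
    return max(1, min(maximum, needed))
```

How the messages are divided among the receivers varies from run to run, but every message is handled by exactly one of them. A monitor could call something like `desired_receivers` periodically to decide how many receiver instances to keep running.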

Bharath says:

You can use the Enterprise Library Autoscaling Application Block to monitor the length of a Service Bus queue and start or stop worker roles acting as receivers, as necessary. Senders do not have to be modified in any way as they continue to post messages to the queue in the same manner as before. This solution even works for implementing two-way messaging, as shown in Figure 13.

Figure 13

Implementing load-balancing with multiple receivers

Poe says:

You should bear in mind that a sudden influx of a large number of requests might be the result of a denial of service attack. To help reduce the threat of such an attack, it is important to protect the Service Bus queues that your application uses to prevent unauthorized senders from posting messages. For more information, see the section "Guidelines for Securing Service Bus Queues" later in this appendix. -

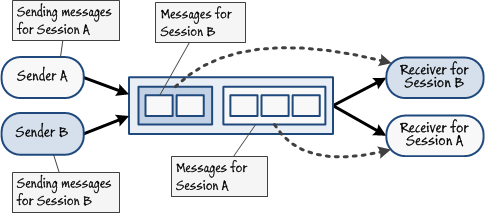

A sender posts a series of messages to a queue. The messages have dependencies on each other and must be processed by the same receiver. If multiple receivers are listening to the queue, the same receiver must handle all the messages in the series.

In this scenario, a message might convey state information that sets the context for a set of following messages. The same message receiver that handled the first message may be required to process the subsequent messages in the series.

In a related case, a sender might need to send a message that is bigger than the maximum message size (currently 256 KB). To address this problem, the sender can divide the data into multiple smaller messages. These messages will need to be retrieved by the same receiver and then reassembled into the original large message for processing.

Service Bus queues can be configured to support sessions. A session consists of a set of messages that comprise a single conversation. All messages in a session must be handled by the same receiver. You indicate that a Service Bus queue supports sessions by setting the RequiresSession property of the queue to true when it is created. All messages posted to this queue must have their SessionId property set to a string value. The value stored in this string identifies the session, and all messages with the same SessionId value are considered part of the same session. Notice that it is possible for multiple senders to post messages with the same session ID, in which case all of these messages are treated as belonging to the same session.

A receiver willing to handle the messages in the session calls the AcceptMessageSession method of a QueueClient object. This method establishes a session object that the receiver can use to retrieve the messages for the session, in much the same way as retrieving the messages from an ordinary queue. However, the AcceptMessageSession method effectively pins the messages in the queue that comprise the session to the receiver and hides them from all other receivers. Another receiver calling AcceptMessageSession will receive messages from the next available session. Figure 14 shows two senders posting messages using distinct session IDs; each sender generates its own session. The receivers for each session only handle the messages posted to that session.
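The pinning behavior of sessions can be illustrated with a small in-memory model in Python. The `SessionQueue` class and its method names are hypothetical and only mimic the semantics described above; they are not the Service Bus API.

```python
from collections import OrderedDict, deque


class SessionQueue:
    """In-memory stand-in for a queue that requires sessions."""

    def __init__(self):
        self._sessions = OrderedDict()  # session ID -> deque of message bodies
        self._locked = set()            # sessions currently pinned to a receiver

    def send(self, session_id, body):
        if not session_id:
            raise ValueError("every message needs a session ID")
        self._sessions.setdefault(session_id, deque()).append(body)

    def accept_session(self):
        """Pin the next session that has messages and no current owner."""
        for session_id, messages in self._sessions.items():
            if session_id not in self._locked and messages:
                self._locked.add(session_id)
                return session_id
        return None

    def receive(self, session_id):
        """Return the next message of an accepted session, or None if it is empty."""
        if session_id not in self._locked:
            raise RuntimeError("session has not been accepted")
        messages = self._sessions[session_id]
        return messages.popleft() if messages else None
```

For example, if two senders interleave the chunks of two large messages using session IDs such as `"upload-1"` and `"upload-2"`, the first receiver to call `accept_session` sees only the chunks of `"upload-1"`, in order, and can reassemble them; a second receiver is given `"upload-2"`.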

Figure 14

Using sessions to group messages

You can also establish duplex sessions if the receiver needs to send a set of messages as a reply. You achieve this by setting the ReplyToSessionId property of a response message to the value in the SessionId property of the received messages before replying to the sender. The sender can then establish its own session and use the session ID to correlate the messages in the response session with the original requests.

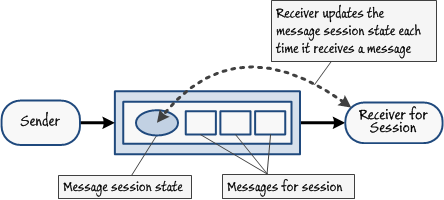

A message session can include session state information, stored with the messages in the queue. You can use this information to track the work performed during the message session and implement a simple finite state machine in the message receiver. When a receiver retrieves a message from a session, it can store information about the work performed while processing the message in the session state and write this state information back to the session in the queue. If the receiver should fail or terminate unexpectedly, another instance can connect to the session, retrieve the session state information, and continue the necessary processing where the previous failed receiver left off. Figure 15 illustrates this scenario.
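The following Python sketch shows how session state lets a second receiver resume where a failed one stopped. The `Session` class and the `process` function are hypothetical, and the sketch simplifies matters by updating the state immediately after removing each message rather than doing both atomically.

```python
from collections import deque


class Session:
    """Hypothetical session: pending messages plus a state value kept with them."""

    def __init__(self, messages):
        self.messages = deque(messages)
        self._state = None

    def get_state(self):
        return self._state

    def set_state(self, state):
        self._state = state


def process(session, fail_after=None):
    """Sum the numbers in a session, checkpointing the running total.

    fail_after simulates a crash once that many messages have been handled.
    """
    state = session.get_state() or {"total": 0, "count": 0}
    handled = 0
    while session.messages:
        if fail_after is not None and handled == fail_after:
            raise RuntimeError("receiver crashed")
        value = session.messages.popleft()
        state = {"total": state["total"] + value, "count": state["count"] + 1}
        session.set_state(state)  # write progress back to the session
        handled += 1
    return state
```

If the first call to `process` fails partway through, a later call (representing a new receiver instance) reads the saved state and continues with the remaining messages instead of starting again.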

Markus says:

Use the GetState and SetState methods of a MessageSession object to retrieve and update the state information for a message session.

Figure 15

Retrieving and storing message session state information

It is possible for a session to be unbounded; there might be a continuous stream of messages posted to a session at varying and sometimes lengthy intervals. In this case, the message receiver should be prepared to hibernate itself if it is inactive for a predefined duration. When a new message appears for the session, another process monitoring the system can reawaken the hibernated receiver, which can then resume processing.

- A sender needs to post one or more messages to a queue as a singleton operation. If some part of the operation fails, then none of the messages should be sent and they must all be removed from the queue.

The simplest way to implement a singleton operation that posts multiple messages is by using a local transaction. You initiate a local transaction by creating a TransactionScope object. This is a programmatic construct that defines the scope for a set of tasks that comprise a single transaction.

To post a batch of messages as part of the same transaction, invoke the send operation for each message within the context of the same TransactionScope object. In effect, the messages are simply buffered and are not actually sent until the transaction completes. To ensure that all the messages are actually sent, you must complete the transaction successfully. If the transaction fails, none of the messages are sent; they are discarded rather than being left on the queue. For more information about the TransactionScope class, see the topic "TransactionScope Class" on MSDN.
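The buffering behavior can be pictured with a short Python analogy. This is not the TransactionScope API; `send_transaction` is a hypothetical context manager, and a plain list stands in for the queue.

```python
from contextlib import contextmanager


@contextmanager
def send_transaction(queue):
    """Buffer sends and append them to `queue` only if the block succeeds."""
    pending = []
    yield pending.append   # the block calls send(message) to buffer a message
    queue.extend(pending)  # reached only when the block raised no exception
```

Inside a `with send_transaction(q) as send:` block, each call to `send` only buffers the message. If the block finishes normally, all buffered messages are added to `q` together; if it raises an exception, none of them are.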

If you are sending messages asynchronously (the recommended approach), it may not be feasible to send messages within the context of a TransactionScope object. Note that if you are incorporating operations from other transactional sources, such as a SQL Server database, then these operations cannot be performed within the context of the same TransactionScope object; the resource manager that wraps Service Bus queues cannot share transactions with other resource managers.

Markus says:

If you attempt to use a TransactionScope object to perform local transactions that enlist a Service Bus queue and other resource managers, your code will throw an exception. In these scenarios, you can implement a custom pseudo-transactional mechanism based on manual failure detection, retry logic, and the duplicate message elimination (dedupe) feature of Service Bus queues.

To use dedupe, each message that a sender posts to a queue should have a unique message ID. If two messages are posted to the same queue with the same message ID, both messages are assumed to be identical and duplicate detection will remove the second message. Using this feature, in the event of failure in its business logic, a sender application can simply attempt to resend a message as part of its failure/retry processing. If the message had been successfully posted previously the duplicate will be eliminated; the receiver will only see the first message. This approach guarantees that the message will always be sent at least once (assuming that the sender has a working connection to the queue) but it is not possible to easily withdraw the message if the failure processing in the business logic determines that the message should not be sent at all.
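A minimal Python model of duplicate elimination follows. The `DedupQueue` class is hypothetical, and it assumes that duplicates are only detected within a configurable history window; the current time is passed in explicitly so that the behavior is deterministic.

```python
class DedupQueue:
    """In-memory model of a queue that drops recently seen message IDs."""

    def __init__(self, history_window_seconds):
        self.window = history_window_seconds
        self.messages = []  # bodies accepted for delivery
        self._seen = {}     # message ID -> time at which it was last accepted

    def send(self, message_id, body, now):
        """Enqueue the message unless the same ID was accepted within the window.

        Returns True if the message was queued, False if it was dropped.
        """
        last_accepted = self._seen.get(message_id)
        if last_accepted is not None and now - last_accepted < self.window:
            return False  # duplicate silently dropped
        self._seen[message_id] = now
        self.messages.append(body)
        return True
```

A sender that is unsure whether an earlier attempt succeeded can call `send` again with the same message ID; within the window the retry is dropped, so the receiver sees the message only once.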

Markus says:

You enable duplicate detection by setting the RequiresDuplicateDetection property of the queue to true when you create it. It is not possible to change the value of this property on a queue that already exists. Additionally, you should set the DuplicateDetectionHistoryTimeWindow property to a TimeSpan value that indicates the period during which duplicate messages for a given message ID are discarded; if a new message with an identical message ID appears after this period has expired, it will be queued for delivery.

- A receiver retrieves one or more messages from a queue, again as part of a transactional operation. If the transaction fails, then all messages must be replaced into the queue to enable them to be read again.

A message receiver can retrieve messages from a Service Bus queue by using one of two receive modes: ReceiveAndDelete and PeekLock. In ReceiveAndDelete mode, messages are removed from the queue as soon as they are read. In PeekLock mode, messages are not removed from the queue as they are read; instead, they are locked to prevent them from being retrieved by another concurrent receiver, which will retrieve the next available unlocked message. If the receiver successfully completes any processing associated with the message, it can call the Complete method of the message, which removes the message from the queue. If the receiver is unable to handle the message successfully, it can call the Abandon method, which releases the lock but leaves the message on the queue. This approach is suitable for performing asynchronous receive operations.
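The difference between the two receive modes can be sketched with an in-memory queue in Python. The `PeekLockQueue` class, its method names, and its integer lock tokens are hypothetical; the sketch also omits lock expiry.

```python
from collections import deque


class PeekLockQueue:
    """In-memory model of the two receive modes."""

    def __init__(self, messages):
        self._available = deque(messages)
        self._locked = {}      # lock token -> message held by a receiver
        self._next_token = 0

    def receive_and_delete(self):
        """Remove and return the next message; it is gone even if processing fails."""
        return self._available.popleft() if self._available else None

    def peek_lock(self):
        """Lock the next unlocked message and return (token, message)."""
        if not self._available:
            return None
        message = self._available.popleft()
        self._next_token += 1
        self._locked[self._next_token] = message
        return self._next_token, message

    def complete(self, token):
        """Processing succeeded: delete the message for good."""
        del self._locked[token]

    def abandon(self, token):
        """Processing failed: release the lock so the message can be read again."""
        self._available.appendleft(self._locked.pop(token))

    def __len__(self):
        return len(self._available) + len(self._locked)
```

Two concurrent callers of `peek_lock` receive different messages. A message that is abandoned becomes available again, whereas a message taken with `receive_and_delete` cannot be recovered if the receiver fails.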

Markus says:

The ReceiveAndDelete receive mode provides better performance than the PeekLock receive mode, but PeekLock provides a greater degree of safety. In ReceiveAndDelete mode, if the receiver fails after reading the message then the message will be lost. In PeekLock mode, if the receive operation or message processing is not successfully completed, the message can be abandoned and returned to the queue, from where it can be read again.

The default receive mode for a Service Bus queue is PeekLock.

As with message send operations, a message receive operation performed using PeekLock mode can also be performed synchronously as part of a local transaction by defining a TransactionScope object, as long as the transaction does not attempt to enlist any additional resource managers. If the transaction does not complete successfully, all messages received and processed within the context of the TransactionScope object will be returned to the queue.

Markus says:

Markus says:

Only PeekLock mode respects local transactions; ReceiveAndDelete mode does not. -

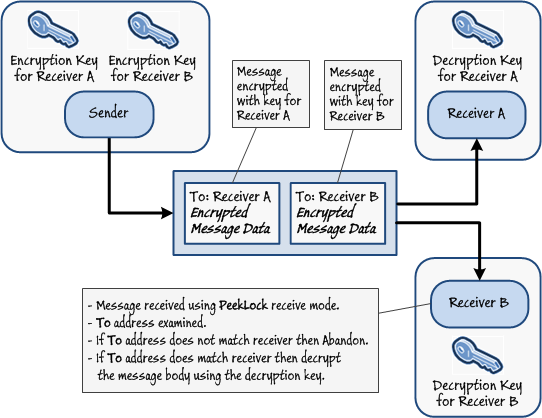

A receiver needs to examine the next message on the queue but should only dequeue the message if it is intended for the receiver.