A key feature of the Windows Azure™ technology platform is the robustness that the platform provides. A typical Windows Azure solution is implemented as a collection of one or more roles, where each role is optimized for performing a specific category of tasks. For example, a web role is primarily useful for implementing the web front-end that provides the user interface of an application, while a worker role typically executes the underlying business logic such as performing any data processing required, interacting with a database, orchestrating requests to and from other services, and so on. If a role fails, Windows Azure can transparently start a new instance and the application can resume.

However, no matter how robust an application is, it must also perform and respond quickly. Windows Azure supports highly scalable services through the ability to dynamically start and stop instances of an application, enabling a Windows Azure solution to handle an influx of requests at peak times, while scaling back as the demand lowers, reducing the resources consumed and the associated costs.

Poe says: If you are building a commercial system, you may have a contractual obligation to provide a certain level of performance to your customers. This obligation might be specified in a service level agreement (SLA) that guarantees the response time or throughput. In this environment, it is critical that you understand the architecture of your application, the resources that it utilizes, and the tools that Windows Azure provides for building and maintaining an efficient system.

However, scalability is not the only issue that affects performance and response times. If an application running in the cloud accesses resources and databases held in your on-premises servers, bear in mind that these items are no longer directly available over your local high-speed network. Instead the application must retrieve this data across the Internet with its lower bandwidth, higher latency, and inherent unpredictability concerning reliability and throughput. This can result in increased response times for users running your applications or reduced throughput for your services.

Of course, if your application or service is now running remotely from your organization, it will also be running remotely from your users. This might not seem like much of an issue if you are building a public-facing website or service because the users would have been remote prior to you moving functionality to the cloud, but this change may impact the performance for users inside your organization who were previously accessing your solution over a local area network. Additionally, the location of an application or service can affect its perceived availability if the path from the user traverses network elements that are heavily congested, and network connectivity times out as a result. Finally, in the event of a catastrophic regional outage of the Internet or a failure at the datacenter hosting your applications and services, your users will be unable to connect.

This appendix considers issues associated with maintaining performance, reducing application response times, and ensuring that users can always access your application when you relocate functionality to the cloud. It describes solutions and good practice for addressing these concerns by using Windows Azure technologies.

Requirements and Challenges

The primary causes of extended response times and poor availability in a distributed environment are lack of resources for running applications, and network latency. Scaling can help to ensure that sufficient resources are available, but no matter how much effort you put into tuning and refining your applications, users will perceive that your system has poor performance if these applications cannot receive requests or send responses in a timely manner because the network is slow. A crucial task, therefore, is to organize your solution to minimize this network latency by making optimal use of the available bandwidth and utilizing resources as close as possible to the code and users that need them.

The following sections identify some common requirements concerning scalability, availability, and performance, summarizing many of the challenges you will face when you implement solutions to meet these requirements.

Managing Elasticity in the Cloud

Description: Your system must support a varying workload in a cost-effective manner.

Many commercial systems must support a workload that can vary considerably over time. For much of the time the load may be steady, with a regular volume of requests of a predictable nature. However, there may be occasions when the load dramatically and quickly increases. These peaks may arise at expected times; for example, an accounting system may receive a large number of requests as the end of each month approaches when users generate their month-end reports, and it may experience periods of increased usage towards the end of the financial year. In other types of application the load may surge unexpectedly; for example, requests to a news service may flood in if some dramatic event occurs.

The cloud is a highly scalable environment, and you can start new instances of a service to meet demand as the volume of requests increases. However, the more instances of a service you run, the more resources they occupy; and the costs associated with running your system rise accordingly. Therefore it makes economic sense to scale back the number of service instances and resources as demand for your system decreases.

How can you achieve this? One approach is to monitor the system and start more service instances when the number of requests arriving in a given period of time exceeds a specified threshold value. If the load increases further, you can define additional thresholds and start yet more instances. If the volume of requests later falls below these threshold values, you can terminate the extra instances. In inactive periods, it might only be necessary to run a minimal number of service instances. However, there are a couple of challenges with this approach:

- You must automate the process that starts and stops service instances in response to changes in system load and the number of requests. It is unlikely to be possible to perform these tasks manually as peaks and troughs in the workload may occur at any time.

- The number of requests that occur in a given interval might not be the only measure of the workload; for example, a small number of requests that each incur intensive processing might also impact performance. Consequently the process that predicts performance and determines the necessary thresholds may need to perform calculations that measure the use of a complex mix of resources.
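The threshold approach described above can be sketched as follows. This is a minimal illustration in Python rather than part of any Windows Azure API; the threshold values, the baseline instance count, and the function name are all assumptions chosen for the example.

```python
import bisect

# Hypothetical thresholds (requests per minute). Each threshold that the
# observed rate exceeds adds one instance to the baseline.
THRESHOLDS = [1000, 2500, 5000]
MIN_INSTANCES = 2  # assumed baseline for inactive periods


def desired_instances(requests_per_minute: int) -> int:
    """Return the baseline plus one instance per threshold exceeded."""
    # bisect_left counts the thresholds strictly below the observed rate.
    exceeded = bisect.bisect_left(THRESHOLDS, requests_per_minute)
    return MIN_INSTANCES + exceeded
```

A request count is used here only because it is the simplest measure; as the second point above notes, a realistic calculation may need to combine several resource measurements.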

Bharath says: Remember that starting and stopping service instances is not an instantaneous operation. It may take 10-15 minutes for Windows Azure to perform these tasks, so any performance measurements should include a predictive element based on trends over time, and initiate new service instances so that they are ready when required.
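One simple way to add a predictive element is to fit a straight line to recent load samples and project it forward by the expected startup time. The sketch below assumes a least-squares linear trend, which is only one of many possible forecasting choices; the function name and the sample format are hypothetical.

```python
def projected_load(samples, lead_minutes):
    """Extrapolate load linearly from (minute, load) samples.

    Returns the load predicted lead_minutes after the latest sample.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("at least two samples are needed to fit a trend")
    mean_t = sum(t for t, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in samples)
    if var_t == 0:
        raise ValueError("samples must span more than one point in time")
    # Least-squares slope: load change per minute.
    slope = sum((t - mean_t) * (y - mean_y) for t, y in samples) / var_t
    latest_t = max(t for t, _ in samples)
    return mean_y + slope * (latest_t + lead_minutes - mean_t)
```

For example, with samples of 100, 150, and 200 requests at minutes 0, 5, and 10, projecting 15 minutes ahead gives the load to provision for now so that new instances are ready when it arrives.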

Reducing Network Latency for Accessing Cloud Applications

Description: Users should be connected to the closest available instance of your application running in the cloud to minimize network latency and reduce response times.

A cloud application may be hosted in a datacenter in one part of the world, while a user connecting to the application may be located in another, perhaps on a different continent. The distance between users and the applications and services they access can have a significant bearing on the response time of the system. You should adopt a strategy that minimizes this distance and reduces the associated network latency for users accessing your system.

If your users are geographically dispersed, you could consider replicating your cloud applications and hosting them in datacenters that are similarly dispersed. Users could then connect to the closest available instance of the application. The question that you need to address in this scenario is how do you direct a user to the most local instance of an application?

Maximizing Availability for Cloud Applications

Description: Users should always be able to connect to the application running in the cloud.

How do you ensure that your application is always running in the cloud and that users can connect to it? Replicating the application across datacenters may be part of the solution, but consider the following issues:

- What happens if the instance of an application closest to a user fails, or no network connection can be established?

- The instance of an application closest to a user may be heavily loaded compared to a more distant instance. For example, in the afternoon in Europe, traffic to datacenters in European locations may be a lot heavier than traffic to datacenters in the Far East or on the West Coast of America. How can you balance the cost of connecting to an instance of an application running on a heavily loaded server against that of connecting to an instance running more remotely but on a lightly loaded server?

Optimizing the Response Time and Throughput for Cloud Applications

Description: The response time for services running in the cloud should be as low as possible, and the throughput should be maximized.

Windows Azure is a highly scalable platform that offers high performance for applications. However, available computing power alone does not guarantee that an application will be responsive. An application that is designed to function in a serial manner will not make best use of this platform and may spend a significant period blocked waiting for slower, dependent operations to complete. The solution is to perform these operations asynchronously, and this approach has been described throughout this guide.

Aside from the design and implementation of the application logic, the key factor that governs the response time and throughput of a service is the speed with which it can access the resources it needs. Some or all of these resources might be located remotely in other datacenters or on-premises servers. Operations that access remote resources may require a connection across the Internet. To mitigate the effects of network latency and unpredictability, you can cache these resources locally to the service, but this approach leads to two obvious questions:

- What happens if a resource is updated remotely? The cached copy used by the service will be out of date, so how should the service detect and handle this situation?

- What happens if the service itself needs to update a resource? In this case, the cached copy used by other instances of this or other services may now be out of date.

Caching is also a useful strategy for reducing contention to shared resources and can improve the response time for an application even if the resources that it utilizes are local. However, the issues associated with caching remain the same; specifically, if a local resource is modified the cached data is now out of date.
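The two questions above correspond to two common mitigations: expiring cached entries after a fixed lifetime so that remote updates are eventually seen, and explicitly invalidating an entry when the service itself writes to the resource. The following sketch illustrates both under stated assumptions; it is a generic in-process cache, not the Windows Azure Caching API, and the class and method names are hypothetical.

```python
import time


class TtlCache:
    """Cache values from a slow loader, refreshing them after ttl_seconds."""

    def __init__(self, loader, ttl_seconds, clock=time.monotonic):
        self._loader = loader      # function that fetches the remote resource
        self._ttl = ttl_seconds
        self._clock = clock        # injectable so that tests can control time
        self._entries = {}         # key -> (value, time stored)

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[1] < self._ttl:
            return entry[0]        # still fresh: avoid the remote call
        value = self._loader(key)  # missing or expired: reload
        self._entries[key] = (value, now)
        return value

    def invalidate(self, key):
        """Discard an entry, for example after this service updates it."""
        self._entries.pop(key, None)
```

Note that invalidating the local copy does nothing for copies held by other instances or services; that is the problem a shared cache is intended to address.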

Bharath says: The cloud is not a magic remedy for speeding up applications that are not designed with performance and scalability in mind.

Windows Azure and Related Technologies

Windows Azure provides a number of technologies that can help you to address the challenges presented by each of the requirements in this appendix:

- Enterprise Library Autoscaling Application Block. You can use this application block to define performance indicators, measure performance against these indicators, and start and stop instances of services to maintain performance within acceptable parameters.

- Windows Azure Traffic Manager. You can use this service to reduce network latency by directing users to the nearest instance of an application running in the cloud. Windows Azure Traffic Manager can also detect whether an instance of a service has failed or is unreachable, automatically directing user requests to the next available service instance.

- Windows Azure Caching. You can use this service to cache data in the cloud and provide scalable, reliable, and shared access for multiple applications.

- Content Delivery Network (CDN). You can use this service to improve the response time of web applications by caching frequently accessed data closer to the users that request it.

Note: Windows Azure Caching is primarily useful for improving the performance of web applications and services running in the cloud. However, users will frequently be invoking these web applications and services from their desktop, either by using a custom application that connects to them or by using a web browser. The data returned from a web application or service may be of a considerable size, and if the user is very distant it may take a significant time for this data to arrive at the user's desktop. CDN enables you to cache frequently queried data at a variety of locations around the world. When a user makes a request, the data can be served from the most optimal location based on the current volume of traffic at the various Internet nodes through which the requests are routed. Detailed information, samples, and exercises showing how to configure CDN are available on MSDN; see the topic "Windows Azure CDN." Additionally Chapter 3, "Accessing the Surveys Application" in the guide "Developing Applications for the Cloud, 2nd Edition" provides further implementation details.

The following sections describe the Enterprise Library Autoscaling Application Block, Windows Azure Traffic Manager, and Windows Azure Caching, and provide guidance on how to use them in a number of scenarios.

Managing Elasticity in the Cloud by Using the Microsoft Enterprise Library Autoscaling Application Block

It is possible to implement a custom solution that manages the number of deployed instances of the web and worker roles your application uses. However, this is far from a simple task and so it makes sense to consider using a prebuilt library that is sufficiently flexible and configurable to meet your requirements.

Poe says: External services that can manage autoscaling do exist but you must provide these services with your management certificate so that they can access the role instances, which may not be an acceptable approach for your organization.

The Enterprise Library Autoscaling Application Block (also known as "Wasabi") provides such a solution. It is part of the Microsoft Enterprise Library 5.0 Integration Pack for Windows Azure, and can automatically scale your Windows Azure application or service based on rules that you define specifically for that application or service. You can use these rules to help your application or service maintain its throughput in response to changes in its workload, while at the same time minimize and control hosting costs.

Scaling operations typically alter the number of role instances in your application, but the block also enables you to use other scaling actions such as throttling certain functionality within your application. This means that there are opportunities to achieve very subtle control of behavior based on a range of predefined and dynamically discovered conditions. The Autoscaling Application Block enables you to specify the following types of rules:

- Constraint rules, which enable you to set minimum and maximum values for the number of instances of a role or set of roles based on a timetable.

- Reactive rules, which allow you to adjust the number of instances of a role or set of roles based on aggregate values derived from data points collected from your Windows Azure environment or application. You can also use reactive rules to change configuration settings so that an application can modify its behavior and change its resource utilization by, for example, switching off nonessential features or gracefully degrading its UI as load and demand increases.

Rules are defined in XML format and can be stored in Windows Azure blob storage, in a file, or in a custom store that you create.

By applying a combination of these rules you can ensure that your application or service will meet demand and load requirements, even during the busiest periods, to conform to SLAs, minimize response times, and ensure availability while still minimizing operating costs.

How the Autoscaling Application Block Manages Role Instances

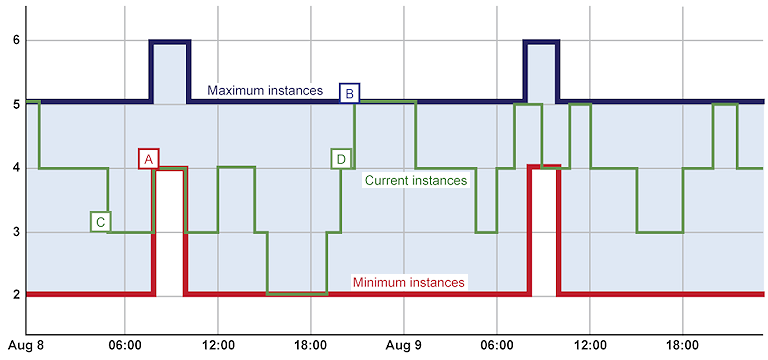

The Autoscaling Application Block can monitor key performance indicators in your application roles and automatically deploy or remove instances. For example, Figure 1 shows how the number of instances of a role may change over time within the boundaries defined for the minimum and maximum number of instances.

The behavior shown in Figure 1 was the result of the following configuration of the Autoscaling Application Block:

- A default Constraint rule that is always active, with the range set to a minimum of two and a maximum of five instances. At point B in the chart, this rule prevents the block from deploying any additional instances, even if the load on the application justifies it.

- A Constraint rule that is active every day from 08:00 for two hours, with the range set to a minimum of four and a maximum of six instances. The chart shows how, at point A, the block deploys a new instance of the role at 08:00.

- An Operand named Avg_CPU_RoleA bound to the average value over the previous 10 minutes of the Windows performance counter \Processor(_Total)\% Processor Time.

- A Reactive rule that increases the number of deployed role instances by one when the value of the Avg_CPU_RoleA operand is greater than 80. For example, at point D in the chart the block increases the number of role instances to four and then to five as processor load increases.

- A Reactive rule that decreases the number of deployed role instances by one when the value of the Avg_CPU_RoleA operand falls below 20. For example, at point C in the chart the block has reduced the number of role instances to three as processor load has decreased.
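The interaction between these rules can be approximated with a short simulation. The sketch below is not the Autoscaling Application Block itself; it assumes that the timed constraint rule takes precedence over the default rule while it is active, and that the result of a reactive rule is clamped to the active range. The function names are hypothetical.

```python
from datetime import time


def active_range(now):
    """Return (minimum, maximum) instances allowed at the given time of day."""
    # Assumption: the 08:00-10:00 rule outranks the always-active default.
    if time(8, 0) <= now < time(10, 0):
        return (4, 6)
    return (2, 5)


def next_instance_count(current, avg_cpu, now):
    """Apply the reactive rules, then clamp to the active constraint range."""
    low, high = active_range(now)
    target = current
    if avg_cpu > 80:
        target += 1   # scale out by one instance
    elif avg_cpu < 20:
        target -= 1   # scale in by one instance
    return max(low, min(high, target))
```

With these assumptions, three instances at 08:00 become four even under moderate load (point A), and five instances outside the morning window stay at five however high the processor load climbs (point B).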

Poe says: By specifying the appropriate set of rules for the Autoscaling Application Block you can configure automatic scaling of the number of instances of the roles in your application to meet known demand peaks and to respond automatically to dynamic changes in load and demand.

Constraint Rules

Constraint rules are used to proactively scale your application for the expected demand, and at the same time constrain the possible instance count, so that reactive rules do not change the instance count outside of that boundary. There is a comprehensive set of options for specifying the range of times for a constraint rule, including fixed periods and fixed durations, daily, weekly, monthly, and yearly recurrence, and relative recurring events such as the last Friday of each month.

Reactive Rules

Reactive rules specify the conditions and actions that change the number of deployed role instances or the behavior of the application. Each rule consists of one or more operands that define how the block matches the data from monitoring points with values you specify, and one or more actions that the block will execute when the operands match the monitored values.

Operands that define the data points for monitoring activity of a role can use any of the Windows® operating system performance counters, the length of a Windows Azure storage queue, and other built-in metrics. Alternatively you can create a custom operand that is specific to your own requirements, such as the number of unprocessed orders in your application.

The Autoscaling Application Block reads performance information collected by the Windows Azure diagnostics mechanism from Windows Azure storage. Windows Azure does not populate this storage with data from the Windows Azure diagnostics monitor by default; you must run code in your role when it starts, or execute scripts while the application is running, to configure Windows Azure diagnostics to collect the required information and then start the diagnostics monitor.

Reactive rule conditions can use a wide range of comparison functions between operands to define the trigger for the related actions to occur. These functions include the typical greater than, greater than or equal, less than, less than or equal, and equal tests. You can also negate the tests using the not function, and build complex conditional expressions using AND and OR logical combinations.
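To show how such conditions compose, the following sketch evaluates a nested condition against a set of operand values. The tuple representation and the comparison names are assumptions made for this illustration; the block itself expresses conditions in its XML rules format.

```python
import operator

# Illustrative names for the comparison functions described above.
COMPARISONS = {
    "greater": operator.gt,
    "greaterOrEqual": operator.ge,
    "less": operator.lt,
    "lessOrEqual": operator.le,
    "equals": operator.eq,
}


def evaluate(condition, operands):
    """Evaluate a nested condition tuple against current operand values."""
    kind = condition[0]
    if kind == "all":   # logical AND over the child conditions
        return all(evaluate(child, operands) for child in condition[1:])
    if kind == "any":   # logical OR over the child conditions
        return any(evaluate(child, operands) for child in condition[1:])
    if kind == "not":   # negation of a single child condition
        return not evaluate(condition[1], operands)
    _, operand_name, threshold = condition
    return COMPARISONS[kind](operands[operand_name], threshold)
```

For example, the condition `("all", ("greater", "Avg_CPU_RoleA", 80), ("not", ("less", "Queue_Length", 5)))` is true only when the processor average exceeds 80 and the hypothetical Queue_Length operand is at least 5.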

Actions

The Autoscaling Application Block provides the following types of actions:

- The setRange action specifies the maximum and minimum number of role instances that should be available over a specified time period. This action is only applicable to Constraint rules.

- The scale action specifies that the block should increase or decrease the number of deployed role instances by an absolute or relative number. You specify the target role using the name, or you can define a scale group in the configuration of the block that includes the names of more than one role and then target the group so that the block scales all of the roles defined in the group.

- The changeSetting action is used for application throttling. It allows you to specify a new value for a setting in the application's service configuration file. The block changes this setting, and code in the application can read the new value and change its behavior accordingly. For example, it may switch off nonessential features or gracefully degrade its UI to better meet increased demand and load.

- The capability to execute a custom action that you create and deploy as an assembly. The code in the assembly can perform any appropriate action, such as sending an email notification or running a script to modify a database deployed to the SQL Azure™ technology platform.
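On the application side, responding to a changeSetting action amounts to reading a configuration value and choosing behavior from it. The sketch below assumes a hypothetical setting named ThrottlingMode and hypothetical feature names; how the setting reaches the application (for example, through the role's configuration API) is outside the scope of the example.

```python
# Hypothetical modes and features; the real names are whatever your service
# configuration file and your changeSetting rules agree on.
FEATURES_BY_MODE = {
    "Normal": {"search", "recommendations", "live_stock_levels"},
    "ReducedFeatures": {"search"},
}


def enabled_features(settings):
    """Return the features to offer for the current throttling mode."""
    mode = settings.get("ThrottlingMode", "Normal")
    # Unknown modes fall back to full functionality rather than failing.
    return FEATURES_BY_MODE.get(mode, FEATURES_BY_MODE["Normal"])
```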

Poe says: You can use the Autoscaling Application Block to force your application to change its behavior automatically to meet changes in load and demand. The block can change the settings in the service configuration file, and the application can react to this to reduce its demand on the underlying infrastructure.

The Autoscaling Application Block logs events that relate to scaling actions and can send notification emails in response to the scaling of a role, or instead of scaling the role, if required. You can also configure several aspects of the way that the block works, such as the scheduler that controls the monitoring and scaling activities, and the stabilizer that enforces "cool down" delays between actions to prevent repeated oscillation and optimize instance counts around the hourly boundary.
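The "cool down" idea can be illustrated with a small sketch that rejects a scaling action for a role if the previous action on that role was too recent. This is an assumption-laden simplification, not the block's stabilizer: it uses a single delay for both directions and does not model the optimization around the hourly boundary. The class and method names are hypothetical.

```python
class CooldownStabilizer:
    """Allow at most one scaling action per role within each cool-down period."""

    def __init__(self, cooldown_seconds):
        self._cooldown = cooldown_seconds
        self._last_action = {}   # role name -> time of last permitted action

    def try_scale(self, role, now):
        """Return True and record the action if the role is out of cool-down."""
        last = self._last_action.get(role)
        if last is not None and now - last < self._cooldown:
            return False         # too soon: suppress to avoid oscillation
        self._last_action[role] = now
        return True
```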

Note: For more information, see "Microsoft Enterprise Library 5.0 Integration Pack for Windows Azure" on MSDN.

Guidelines for Using the Autoscaling Application Block

The following guidelines will help you understand how you can obtain the most benefit from using the Autoscaling Application Block:

- The Autoscaling Application Block can specify actions for multiple targets across multiple Windows Azure subscriptions. The service that hosts the target roles and the service that hosts the Autoscaling Application Block do not have to be in the same subscription. To allow the block to access applications, you must specify the ID of the Windows Azure subscription that hosts the target applications, and a management certificate that it uses to connect to the subscription.

- Consider using Windows Azure blob storage to hold your rules and service information. This makes it easy to update the rules and data when managing the application. Alternatively, if you want to implement special functionality for loading and updating rules, consider creating a custom rule store.

- You must define a constraint rule for each monitored role instance. Use the ranking for each constraint or reactive rule you define to control the priority where conditions overlap.

- Constraint rules do not take into account daylight saving times. They simply use the UTC offset that you specify at all times.

- Use scaling groups to define a set of roles that you target as one action to simplify the rules. This also makes it easy to add and remove roles from an action without needing to edit every rule.

- Consider using averaging periods of 30 minutes or one hour to even out the values returned by performance counters or other metrics and provide more consistent and reliable results. You can read the performance data for any hosted application or service; it does not have to be the one to which the rule action applies.

- Consider enabling and disabling rules instead of deleting them from the configuration when setting up the block and when temporary changes are made to the application.

- Remember that you must write code that initializes the Windows Azure Diagnostics mechanism when your role starts and copies the data to Windows Azure storage.

- Consider using the throttling behavior mechanism as well as scaling the number of roles. This can provide more fine-grained control of the way that the application responds to changes in load and demand. Remember that it can take 10-15 minutes for newly deployed role instances to start handling requests, whereas changes to throttling behavior occur much more quickly.

- Regularly analyze the information that the block logs about its activities to evaluate how well the rules are meeting your initial requirements, keeping the application running within the required constraints, and meeting any SLA commitments on availability and response times. Refine the rules based on this analysis.
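The guideline about daylight saving time is easy to overlook, so a short worked example may help. The sketch below assumes a constraint rule configured to start at 08:00 with a fixed UTC offset of -08:00, and a local clock that moves to -07:00 in summer; the date and both offsets are illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone

RULE_OFFSET = timezone(timedelta(hours=-8))    # offset assumed in the rule
SUMMER_LOCAL = timezone(timedelta(hours=-7))   # assumed local offset during DST

# The rule fires at 08:00 in its fixed offset, whatever the season.
rule_start = datetime(2012, 7, 2, 8, 0, tzinfo=RULE_OFFSET)

start_utc = rule_start.astimezone(timezone.utc)    # the same instant in UTC
start_wall = rule_start.astimezone(SUMMER_LOCAL)   # what a local clock shows

print(start_utc.strftime("%H:%M"), start_wall.strftime("%H:%M"))
```

Under these assumptions the rule starts at 16:00 UTC all year, which a local clock shows as 09:00 during the summer, an hour later than the 08:00 that users might expect.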

Reducing Network Latency for Accessing Cloud Applications with Windows Azure Traffic Manager

Windows Azure Traffic Manager is a Windows Azure service that enables you to set up request routing and load balancing based on predefined policies and configurable rules. It provides a mechanism for routing requests to multiple deployments of your Windows Azure-hosted applications and services, irrespective of the datacenter location. The applications or services could be deployed in one or more datacenters.

Windows Azure Traffic Manager monitors the availability and network latency of each application you configure in a policy, on any HTTP or HTTPS port. If it detects that an application is offline it will not route any requests to it. However, it continues to monitor the application at 30 second intervals and will start to route requests to it, based on the configured load balancing policy, if it again becomes available.

Windows Azure Traffic Manager does not mark an application as offline until it has failed to respond three times in succession. This means that the total time between a failure and that application being marked as offline is three times the monitoring interval you specify.
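The monitoring behavior described above can be modeled as a counter of consecutive failed probes. The sketch below is an illustration of that logic only, not Traffic Manager's implementation; it assumes that a single successful probe is enough to bring an endpoint back online, and the class name is hypothetical.

```python
PROBE_INTERVAL_SECONDS = 30    # monitoring interval from the text
FAILURES_BEFORE_OFFLINE = 3    # consecutive failures required

# Time from the first failed probe to being marked offline, per the text.
DETECTION_SECONDS = PROBE_INTERVAL_SECONDS * FAILURES_BEFORE_OFFLINE


class EndpointMonitor:
    """Track whether an endpoint should receive traffic."""

    def __init__(self):
        self.consecutive_failures = 0
        self.online = True

    def record_probe(self, succeeded):
        """Record one probe result and return the resulting online state."""
        if succeeded:
            self.consecutive_failures = 0
            self.online = True   # assumption: one success restores routing
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= FAILURES_BEFORE_OFFLINE:
                self.online = False
        return self.online
```

With a 30 second interval, this gives a detection time of 90 seconds, during which clients may still be directed to the failed application.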

How Windows Azure Traffic Manager Routes Requests

Windows Azure Traffic Manager is effectively a DNS resolver. When you use Windows Azure Traffic Manager, web browsers and services accessing your application will perform a DNS query to Windows Azure Traffic Manager to resolve the IP address of the endpoint to which they will connect, just as they would when connecting to any other website or resource.

Bharath says: Windows Azure Traffic Manager does not perform HTTP redirection or use any other browser-based redirection technique because this would not work with other types of requests, such as from smart clients accessing web services exposed by your application. Instead, it acts as a DNS resolver that the client queries to obtain the IP address of the appropriate application endpoint. Windows Azure Traffic Manager returns the IP address of the deployed application that best satisfies the configured policy and rules.

Windows Azure Traffic Manager uses the requested URL to identify the policy to apply, and returns an IP address resulting from evaluating the rules and configuration settings for that policy. The user's web browser or the requesting service then connects to that IP address, effectively routing them based on the policy you select and the rules you define.

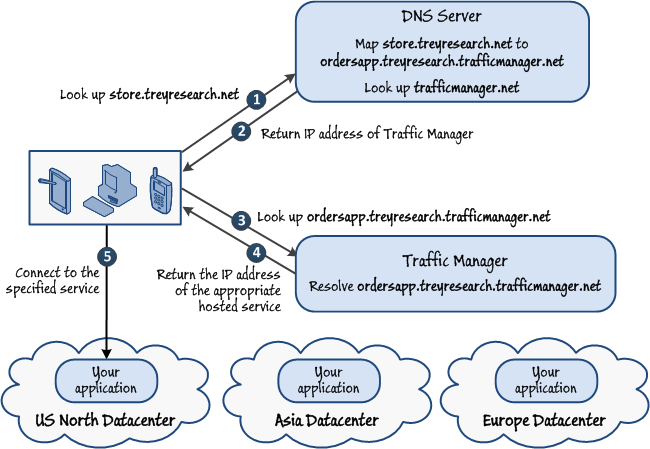

This means that you can offer users a single URL that is aliased to the address of your Windows Azure Traffic Manager policy. For example, you could use a CNAME record in your own or your ISP's DNS to map the URL you want to expose to users of your application, such as http://store.treyresearch.net, to the entry point of your Windows Azure Traffic Manager policy. If you have named your Windows Azure Traffic Manager namespace treyresearch and have a policy for the Orders application named ordersapp, you would map the URL in your DNS to http://ordersapp.treyresearch.trafficmanager.net. All DNS queries for store.treyresearch.net will be passed to Windows Azure Traffic Manager, which will perform the required routing by returning the IP address of the appropriate deployed application. Figure 2 illustrates this scenario.

The default time-to-live (TTL) value for the DNS responses that Windows Azure Traffic Manager will return to clients is 300 seconds (five minutes). When this interval expires, any requests made by a client application may need to be resolved again, and the new address that results can be used to connect to the service. For testing purposes you may want to reduce this value, but you should use the default or longer in a production scenario.

Remember that there may be intermediate DNS servers between clients and Windows Azure Traffic Manager that are likely to cache the DNS record for the time you specify. In addition, client applications and web browsers often cache the DNS entries they obtain, and so will not be redirected to a different application deployment until their cached entries expire.

Using Monitoring Endpoints

When you configure a policy in Windows Azure Traffic Manager you specify the port and relative path and name for the endpoint that Windows Azure Traffic Manager will access to test if the application is responding. By default this is port 80 and "/" so that Windows Azure Traffic Manager tests the root path of the application. As long as it receives an HTTP "200 OK" response within ten seconds, Windows Azure Traffic Manager will assume that the hosted service is online.

You can specify a different value for the relative path and name of the monitoring endpoint if required. For example, if you have a page that performs a test of all functions in the application you can specify this as the monitoring endpoint. Hosted applications and services can be included in more than one policy in Windows Azure Traffic Manager, so it is a good idea to have a consistent name and location for the monitoring endpoints in all your applications and services so that the relative path and name is the same and can be used in any policy.

Markus says: If you implement special monitoring pages in your applications, ensure that they can always respond within ten seconds so that Windows Azure Traffic Manager does not mark them as being offline. Also consider the impact on the overall operation of the application of the processes you execute in the monitoring page.
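One way to keep a monitoring page inside the ten second limit is to give its checks an explicit time budget that is comfortably shorter. The sketch below returns the HTTP status code that such a page might send; the five second budget, the function name, and the use of 503 for failure are assumptions. It also only tests the budget between checks, so an individual check must enforce its own timeout.

```python
import time


def health_status(checks, budget_seconds=5.0, clock=time.monotonic):
    """Run checks in order and return 200 only if all pass within budget."""
    deadline = clock() + budget_seconds
    for check in checks:
        # Stop early if time has run out or a dependency reports a failure.
        if clock() >= deadline or not check():
            return 503
    return 200
```

Each entry in `checks` is a function that returns True when a dependency, such as a database or queue, is reachable. Keep these checks lightweight, because the page is requested at every monitoring interval.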

If Windows Azure Traffic Manager detects that every service defined for a policy is offline, it will act as though they were all online, and continue to hand out IP addresses based on the type of policy you specify. This ensures that clients will still receive an IP address in response to a DNS query, even if the service is unreachable.

Windows Azure Traffic Manager Policies

At the time of writing Windows Azure Traffic Manager offers the following three routing and load balancing policies, though more may be added in the future:

- The Performance policy redirects requests from users to the application in the closest data center. This may not be the application in the data center that is closest in purely geographical terms, but instead the one that provides the lowest network latency. This means that it takes into account the performance of the network routes between the customer and the data center. Windows Azure Traffic Manager also detects failed applications and does not route to these, instead choosing the next closest working application deployment.

- The Failover policy allows you to configure a prioritized list of applications, and Windows Azure Traffic Manager will route requests to the first one in the list that it detects is responding to requests. If that application fails, Windows Azure Traffic Manager will route requests to the next applications in the list, and so on. The Failover policy is useful if you want to provide backup for an application, but the backup application(s) are not designed or configured to be in use all of the time. You can deploy different versions of the application, such as restricted or alternative capability versions, for backup or failover use only when the main application(s) are unavailable. The Failover policy also provides an opportunity for staging and testing applications before release, during maintenance cycles, or when upgrading to a new version.

- The Round Robin policy routes requests to each application in turn, though it detects failed applications and does not route to these. This policy evens out the loading on each application, but may not provide users with the best possible response times as it ignores the relative locations of the user and data center.

To minimize network latency and maximize performance you will typically use the Performance policy to redirect all requests from all users to the application in the closest data center. The following sections describe the Performance policy. The other policies are described in the section "Maximizing Availability for Cloud Applications with Windows Azure Traffic Manager" later in this appendix.
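As a rough illustration of how a Performance-style choice works, the following sketch picks the online deployment with the lowest estimated latency for a client, and treats every deployment as online if none responds. The function name, the deployment names, and the latency figures are all invented for the example; Windows Azure Traffic Manager uses its own internal measurements.

```python
def choose_performance(online, latency_ms):
    """Return the deployment a Performance-style policy would select.

    online:     dict mapping deployment name -> True if its last probe succeeded
    latency_ms: dict mapping deployment name -> estimated latency (ms) from the
                client's location (hypothetical numbers in this sketch)
    """
    candidates = [name for name, ok in online.items() if ok]
    if not candidates:
        # Every deployment looks offline: behave as though all were online.
        candidates = list(online)
    return min(candidates, key=lambda name: latency_ms[name])


# Hypothetical deployments and latencies for a client located in Europe.
latency = {"us-north": 120, "europe-west": 35, "asia-east": 210}
status = {"us-north": True, "europe-west": True, "asia-east": True}
print(choose_performance(status, latency))   # europe-west

status["europe-west"] = False                # the closest deployment fails
print(choose_performance(status, latency))   # us-north
```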

Guidelines for Using Windows Azure Traffic Manager

The following list contains general guidelines for using Windows Azure Traffic Manager:

- When you name your hosted applications and services, consider using a naming pattern that makes them easy to find and identify in the Windows Azure Traffic Manager list of services. Using a naming pattern makes it easier to search for related services using part of the name. Include the datacenter name in the service name so that it is easy to identify the datacenter in which the service is hosted.

- Ensure that Windows Azure Traffic Manager can correctly monitor your hosted applications or services. If you specify a monitoring page instead of the default "/" root path, ensure that the page always responds with an HTTP "200 OK" status, accurately detects the state of the application, and responds well within the ten-second limit.

- To simplify management and administration, use the facility to enable and disable policies instead of adding and removing policies. Create as many policies as you need and enable only those that are currently applicable. Disable and enable individual services within a policy instead of adding and removing services.

- Consider using Windows Azure Traffic Manager as a rudimentary monitoring solution, even if you do not deploy your application in multiple datacenters or require routing to different deployments. You can set up a policy that includes all of your application deployments (including different applications) by using "/" as the monitoring endpoint. However, you do not direct client requests to Windows Azure Traffic Manager for DNS resolution. Instead, clients connect to the individual applications using the specific URLs you map for each one in your DNS. You can then use the Windows Azure Traffic Manager Web portal to see which deployments of all of the applications are online and offline.

Guidelines for Using Windows Azure Traffic Manager to Reduce Network Latency

The following list contains guidelines for using Windows Azure Traffic Manager to reduce network latency:

- Choose the Performance policy so that users are automatically redirected to the datacenter and application deployment that should provide best response times.

- Ensure that sufficient role instances are deployed in each application to ensure adequate performance, and consider using a mechanism such as that implemented by the Autoscaling Application Block (described earlier in this appendix) to automatically deploy additional instances when demand increases.

- Consider if the patterns of demand in each datacenter are cyclical or time dependent. You may be able to deploy fewer role instances at some times to minimize runtime cost (or even remove all instances so that users are redirected to another datacenter). Again, consider using a mechanism such as that described earlier in this appendix to automatically deploy and remove instances when demand changes.

If all of the hosted applications or services in a Performance policy are offline or unavailable (or availability cannot be tested due to a network or other failure), Windows Azure Traffic Manager will act as though all were online and route each request according to its internal measurements of global network latency for the location of the client making the request. This means that clients will be able to access the application if it actually is online, or as soon as it comes back online, without waiting for Windows Azure Traffic Manager to detect this and start redirecting users based on measured latency.

Limitations of Using Windows Azure Traffic Manager

The following list identifies some of the limitations you should be aware of when using Windows Azure Traffic Manager:

- All of the hosted applications or services you add to a Windows Azure Traffic Manager policy must exist within the same Windows Azure subscription, although they can be in different namespaces.

- You cannot add hosted applications or services that are staged; they must be running in the production environment. However, you can perform a virtual IP address (VIP) swap to move hosted applications or services into production without affecting an existing Windows Azure Traffic Manager policy.

- All of the hosted applications or services must expose the same operations and use HTTP or HTTPS through the same ports so that Windows Azure Traffic Manager can route requests to any of them. If you expose a specific page as a monitoring endpoint, it must exist at the same location in every deployed application defined in the policy.

- Windows Azure Traffic Manager does not test the application for correct operation; it only tests for an HTTP "200 OK" response from the monitoring endpoint within ten seconds. If you want to perform more thorough tests to confirm correct operation, you should expose a specific monitoring endpoint and specify this in the Windows Azure Traffic Manager policy. However, ensure that the monitoring request (which occurs by default every 30 seconds) does not unduly affect the operation of your application or service.

- Take into account the effects of routing to different deployments of your application on data synchronization and caching. Users may be routed to a datacenter where the data the application uses may not be fully consistent with that in another datacenter.

- Take into account the effects of routing to different deployments of your application on the authentication approach you use. For example, if each deployment uses a separate instance of Windows Azure Access Control Service (ACS), users will need to sign in when rerouted to a different datacenter.

Maximizing Availability for Cloud Applications with Windows Azure Traffic Manager

Windows Azure Traffic Manager provides two policies that you can use to maximize availability of your applications. You can use the Round Robin policy to distribute requests to all application deployments that are currently responding to requests (applications that have not failed). Alternatively, you can use the Failover policy to ensure that a backup deployment of the application will receive requests should the primary one fail. These two policies provide opportunities for two very different approaches to maximizing availability:

- The Round Robin policy enables you to scale out your application across datacenters to achieve maximum availability. Requests will go to a deployment in a datacenter that is online, and the more role instances you configure the lower the average load on each one will be. However, you are charged for each role and application deployment in every datacenter, and you should consider carefully how many role instances to deploy in each application and datacenter.

- The Failover policy enables you to deploy reserve or backup versions of your application that only receive client requests when all of the higher-priority deployments in the list are offline. Unlike the Performance and Round Robin policies, this policy is suitable for use when you deploy to only one datacenter as well as when deploying the application to multiple datacenters. However, you are charged for each application deployment in every datacenter, and you should consider carefully how many role instances to deploy in each datacenter.

Bharath says:

There is little reason to use the Round Robin policy if you only deploy your application to one datacenter. You can maximize availability and scale it out simply by adding more role instances. However, the Failover policy is useful if you only deploy to one datacenter because it allows you to define reserve or backup deployments of your application, which may be different from the main highest priority deployment.

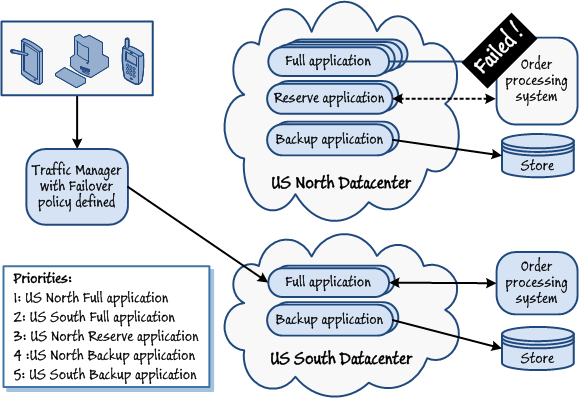

A typical scenario for using the Failover policy is to configure an appropriate priority order for one or more deployments of the same or different versions of the application so that the maximum number of features and the widest set of capabilities are always available, even if services and systems that the application depends on should fail. For example, you may deploy a backup version that can still accept customer orders when the order processing system is unavailable, but stores them securely and informs the customer of a delay.

By arranging the priority order to use the appropriate reserve version in a different datacenter, or a reduced functionality backup version in the same or a different datacenter, you can offer the maximum availability and functionality at all times. Figure 3 shows an example of this approach.

Figure 3. Using the Failover policy to achieve maximum availability and functionality

Guidelines for Using Windows Azure Traffic Manager to Maximize Availability

The following list contains guidelines for using Windows Azure Traffic Manager to maximize availability. Also see the sections "Guidelines for Using Windows Azure Traffic Manager" and "Limitations of Using Windows Azure Traffic Manager" earlier in this appendix.

- Choose the Round Robin policy if you want to distribute requests evenly between all deployments of the application. This policy is typically not suitable when you deploy the application in datacenters that are geographically widely separated as it will cause undue traffic across longer distances. It may also cause problems if you are synchronizing data between datacenters because the data in every datacenter may not be consistent between requests from the same client. However, it is useful for taking services offline during maintenance, testing, and upgrade periods.

- Choose the Failover policy if you want requests to go to one deployment of your application, and only change to another if the first one fails. Windows Azure Traffic Manager chooses the application nearest the top of the list you configured that is online. This policy is typically suited to scenarios where you want to provide backup applications or services.

- If you use the Round Robin policy, ensure that all of the deployed applications are identical so that users have the same experience regardless of the one to which they are routed.

- If you use the Failover policy, consider including application deployments that provide limited or different functionality, and will work when services or systems the application depends on are unavailable, in order to maximize the users' experience as far as possible.

- Consider using the Failover or Round Robin policy when you want to perform maintenance tasks, update applications, and perform testing of deployed applications. You can enable and disable individual applications within the policy as required so that requests are directed only to those that are enabled.

- Because a number of the application deployments will be lightly loaded or not servicing client requests (depending on the policy you choose), consider using a mechanism such as that provided by the Autoscaling Application Block, described earlier in this appendix, to manage the number of role instances for each application deployed in each datacenter to minimize runtime cost.

If all of the hosted applications or services in a Round Robin policy are offline or unavailable (or availability cannot be tested due to a network or other failure), Windows Azure Traffic Manager will act as though all were online and will continue to route requests to each configured application in turn. If all of the applications in a Failover policy are offline or unavailable, Windows Azure Traffic Manager will act as though the first one in the configured list is online and will route all requests to this one.
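The Failover and Round Robin behavior, including the fallback when every deployment appears to be offline, can be sketched as follows. This is only a model of the rules described in this section, not the service's implementation; `choose_failover`, `RoundRobin`, and the deployment names are hypothetical.

```python
def choose_failover(priority_list, online):
    """Return the first online deployment in priority order.

    If nothing is online, act as though the first entry were online.
    """
    for name in priority_list:
        if name in online:
            return name
    return priority_list[0]


class RoundRobin:
    """Hand out deployments in turn, skipping any that are offline."""

    def __init__(self, deployments):
        self._deployments = list(deployments)
        self._next = 0

    def choose(self, online):
        # If nothing is online, treat every deployment as online.
        eligible = set(online) & set(self._deployments) or set(self._deployments)
        while True:
            name = self._deployments[self._next]
            self._next = (self._next + 1) % len(self._deployments)
            if name in eligible:
                return name


print(choose_failover(["full", "reduced"], online={"reduced"}))  # reduced
print(choose_failover(["full", "reduced"], online=set()))        # full

rr = RoundRobin(["dc-a", "dc-b", "dc-c"])
print([rr.choose({"dc-a", "dc-c"}) for _ in range(4)])
# ['dc-a', 'dc-c', 'dc-a', 'dc-c']
```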

Note:

For more information about Windows Azure Traffic Manager, see "Windows Azure Traffic Manager."

Optimizing the Response Time and Throughput for Cloud Applications by Using Windows Azure Caching

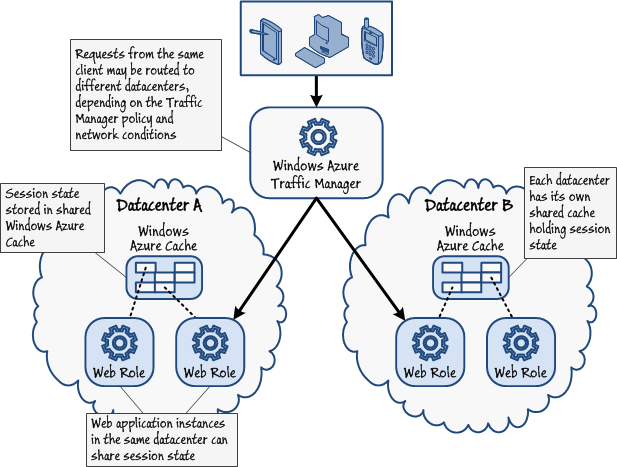

Windows Azure Caching service provides a scalable, reliable mechanism that enables you to retain frequently used data physically close to your applications and services. Windows Azure Caching runs in the cloud, and you can cache data in the same datacenter that hosts your code. If you deploy services to more than one datacenter, you should create a separate cache in each datacenter, and each service should access only the co-located cache. In this way, you can reduce the overhead associated with repeatedly accessing remote data, eliminate the network latency associated with remote data access, and improve the response times for applications referencing this data.

However, caching does not come without cost. Caching data means creating one or more copies of that data, and as soon as you make these copies you have concerns about what happens if you modify this data. Any updates have to be replicated across all copies, but it can take time for these updates to ripple through the system. This is especially true on the Internet where you also have to consider the possibility of network errors causing updates to fail to propagate quickly. So, although caching can improve the response time for many operations, it can also lead to issues of consistency if two instances of an item of data are not identical. Consequently, applications that use caching effectively should be designed to cope with data that may be stale but that eventually becomes consistent.

Do not use Windows Azure Caching for code that executes on-premises as it will not improve the performance of your applications in this environment. In fact, it will likely slow your system down due to the network latency involved in connecting to the cache in the cloud. If you need to implement caching for on-premises applications, you should consider using Windows Server AppFabric Caching instead. For more information, see "Windows Server AppFabric Caching Features."

Bharath says:

Windows Azure Caching is primarily intended for code running in the cloud, such as web and worker roles, and to gain the maximum benefit you implement Windows Azure Caching in the same datacenter that hosts your code.

Provisioning and Sizing a Windows Azure Cache

Windows Azure Caching is a service that is maintained and managed by Microsoft; you do not have to install any additional software or implement any infrastructure within your organization to use it. An administrator can easily provision an instance of the Caching service by using the Windows Azure Management Portal. The portal enables an administrator to select the location of the Caching service and specify the resources available to the cache. You indicate the resources to provision by selecting the size of the cache. Windows Azure Caching supports a number of predefined cache sizes, ranging from 128MB up to 4GB. Note that the bigger the cache size the higher the monthly charge.

The size of the cache also determines a number of other quotas. These quotas are intended to ensure fair usage of resources, and they impose limits on the number of cache reads and writes per hour, the available bandwidth per hour, and the number of concurrent connections; the bigger the cache, the more of these resources are available. For example, if you select a 128MB cache, you can currently perform up to 40,000 cache reads and writes per hour, using up to 1,400MB of bandwidth per hour, over up to 10 concurrent connections. If you select a 4GB cache you can perform up to 12,800,000 reads and writes per hour, using up to 44,800MB of bandwidth per hour, over up to 160 concurrent connections.

Note:

The values specified here are correct at the time of writing, but these quotas are constantly under review and may be revised in the future. You can find information about the current production quota limits and prices at "Windows Azure Shared Caching FAQ."

You can create as many caches as your applications require, and they can be of different sizes. However, for maximum cost effectiveness you should carefully estimate the amount of cache memory your applications will require and the volume of activity that they will generate. You should also consider the lifetime of objects in the cache. By default, objects expire after 48 hours and will then be removed. You cannot change this expiration period for the cache as a whole, although you can override it on an object-by-object basis when you store each object in the cache. However, be aware that the longer an object resides in cache the more likely it is to become inconsistent with the original data source (referred to as the "authoritative" source) from which it was populated.

To assess the amount of memory needed, for each type of object that you will be storing:

- Measure the size in bytes of a typical instance of the object (serialize objects by using the NetDataContractSerializer class and write them to a file),

- Add a small overhead (approximately 1%) to allow for the metadata that the Caching service associates with each object,

- Round this value up to the next multiple of 1024 bytes (the cache is allocated to objects in 1KB chunks),

- Multiply this value by the maximum number of instances that you anticipate caching.

Sum the results for each type of object to obtain the required cache size. Note that the Management Portal enables you to monitor the current and peak sizes of the cache, and you can change the size of a cache after you have created it without stopping and restarting any of your services. However, the change is not immediate and you can only request to resize the cache once a day. Also, you can increase the size of a cache without losing objects from the cache, but if you reduce the cache size some objects may be evicted.
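The sizing steps above amount to a short calculation. The following sketch applies them to two invented object types; the object sizes and instance counts are assumptions chosen only to make the arithmetic concrete, and the function names are hypothetical.

```python
import math

METADATA_OVERHEAD = 0.01   # approximately 1%, as stated in the text
CHUNK_BYTES = 1024         # cache memory is allocated in 1KB chunks


def bytes_per_object(serialized_size):
    """Add the metadata overhead, then round up to a whole number of chunks."""
    with_overhead = serialized_size * (1 + METADATA_OVERHEAD)
    return math.ceil(with_overhead / CHUNK_BYTES) * CHUNK_BYTES


def required_cache_bytes(object_types):
    """object_types: iterable of (serialized_size_bytes, max_instances) pairs."""
    return sum(bytes_per_object(size) * count for size, count in object_types)


# Hypothetical workload: 10,000 products of about 1,000 bytes each and
# 50,000 customers of about 2,500 bytes each.
total = required_cache_bytes([(1000, 10_000), (2500, 50_000)])
print(total)                      # 163840000
print(total / (1024 * 1024))      # 156.25 (MB)
```

In this invented example the estimate already exceeds the smallest cache size, which shows why it is worth measuring typical objects before you provision a cache.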

You should also carefully consider the other elements of the cache quota, and if necessary select a bigger cache size even if you do not require the volume of memory indicated. For example, if you exceed the number of cache reads and writes permitted in an hour, any subsequent read and write operations will fail with an exception. Similarly, if you exceed the bandwidth quota, applications will receive an exception the next time they attempt to access the cache. If you reach the connection limit, your applications will not be able to establish any new connections until one or more existing connections are closed.

Markus says:

Windows Azure Caching enables an application to pool connections. When connection pooling is configured, the same pool of connections is shared for a single application instance. Using connection pooling can improve the performance of applications that use the Caching service, but you should consider how this affects your total connection requirements based on the number of instances of your application that may be running concurrently. For more information, see "Understanding and Managing Connections in Windows Azure".

You are not restricted to using a single cache in an application. Each instance of the Windows Azure Caching service belongs to a service namespace, and you can create multiple service namespaces each with its own cache in the same datacenter. Each cache can have a different size, so you can partition your data according to a cache profile; small objects that are accessed infrequently can be held in a 128MB cache, while larger objects that are accessed constantly by a large number of concurrent instances of your applications can be held in a 2GB or 4GB cache.

Implementing Services that Share Data by Using Windows Azure Caching

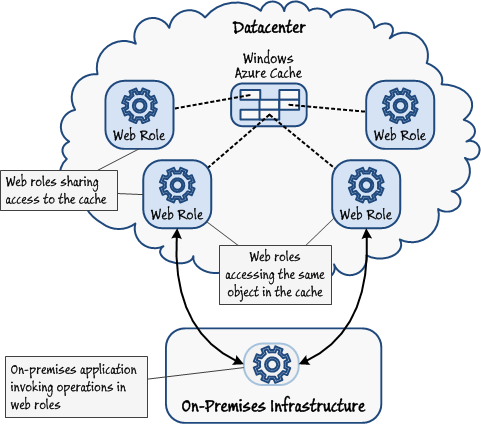

The Windows Azure Caching service implements an in-memory cache, located on a cache server in a Windows Azure datacenter, which can be shared by multiple concurrent services. It is ideal for holding immutable or slowly changing data, such as a product catalog or a list of customer addresses. Copying this data from a database into a shared cache can help to reduce the load on the database as well as improving the response time of the applications that use this data. It also assists you in building highly scalable and resilient services that exhibit reduced affinity with the applications that invoke them. For example, an application may call an operation in a service implemented as a Windows Azure web role to retrieve information about a specific customer. If this information is copied to a shared cache, the same application can make subsequent requests to query and maintain this customer information without depending on these requests being directed to the same instance of the Windows Azure web role. If the number of client requests increases over time, new instances of the web role can be started up to handle them, and the system scales easily. Figure 4 illustrates this architecture, where an on-premises application employs the services exposed by instances of a web role. The on-premises application can be directed to any instance of the web role, and the same cached data is still available.

Web applications access a shared cache by using the Windows Azure Caching APIs. These APIs are optimized to support the cache-aside programming pattern; a web application can query the cache to find an object, and if the object is present it can be retrieved. If the object is not currently stored in the cache, the web application can retrieve the data for the object from the authoritative store (such as a SQL Azure database), construct the object using this data, and then store it in the cache.
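The cache-aside pattern described above can be sketched in a few lines. The `InMemoryCache` class here is a stand-in that exists only so the example runs; it is not the Windows Azure Caching API, and `get_customer` and `load_from_store` are hypothetical names.

```python
class InMemoryCache:
    """Stand-in for a shared cache client (not the Windows Azure Caching API)."""

    def __init__(self):
        self._items = {}

    def get(self, key):
        return self._items.get(key)   # None signals a cache miss

    def put(self, key, value):
        self._items[key] = value


def get_customer(cache, customer_id, load_from_store):
    """Cache-aside: try the cache first, fall back to the authoritative store."""
    key = f"customer:{customer_id}"
    customer = cache.get(key)
    if customer is None:
        # Cache miss: read from the authoritative store, then populate the cache.
        customer = load_from_store(customer_id)
        if customer is not None:
            cache.put(key, customer)
    return customer
```

The first call for a given customer reads from the authoritative store; later calls are served from the cache until the item expires or is evicted.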

Markus says:

Objects you store in the cache must be serializable.

You can specify which cache to connect to either programmatically or by providing the connection information in a dataCacheClient section in the web application configuration file. You can generate the necessary client configuration information from the Management Portal, and then copy this information directly into the configuration file. For more information about configuring web applications to use Windows Azure Caching, see "How to: Configure a Cache Client using the Application Configuration File (Windows Azure Shared Caching)."

As described in the section "Provisioning and Sizing a Windows Azure Cache," an administrator specifies the resources available for caching data when the cache is created. If memory starts to run short, the Windows Azure Caching service will evict data on a least recently used basis. However, cached objects can also have their own independent lifetimes, and a developer can specify a period for caching an object when it is stored; when this time expires, the object is removed and its resources reclaimed.

Markus says:

With the Windows Azure Caching service, your applications are not notified when an object is evicted from the cache or expires, so be warned.

For detailed information on using Windows Azure Caching APIs see "Developing for Windows Azure Shared Caching."

Updating Cached Data

Web applications can modify the objects held in cache, but be aware that if the cache is being shared, more than one instance of an application might attempt to update the same information; this is identical to the update problem that you meet in any shared data scenario. To assist with this situation, the Windows Azure Caching APIs support two modes for updating cached data:

- Optimistic, with versioning. All cached objects can have an associated version number. When a web application updates the data for an object it has retrieved from the cache, it can check the version number of the object in the cache prior to storing the changes. If the version number is the same, it can store the data. Otherwise the web application should assume that another instance has already modified this object, fetch the new data, and resolve the conflict using whatever logic is appropriate to the business processing (maybe present the user with both versions of the data and ask which one to save). When an object is updated, it should be assigned a new unique version number when it is returned to the cache.

- Pessimistic, with locking. The optimistic approach is primarily useful if the chances of a collision are small, and although simple in theory the implementation inevitably involves a degree of complexity to handle the possible race conditions that can occur. The pessimistic approach takes the opposite view; it assumes that more than one instance of a web application is highly likely to try and simultaneously modify the same data, so it locks the data when it is retrieved from the cache to prevent this situation from occurring. When the object is updated and returned to the cache, the lock is released. If a web application attempts to retrieve and lock an object that is already locked by another instance, it will fail (it will not be blocked). The web application can then back off for a short period and try again. Although this approach guarantees the consistency of the cached data, ideally, any update operations should be very quick and the corresponding locks of a very short duration to minimize the possibility of collisions and to avoid web applications having to wait for extended periods as this can impact the response time and throughput of the application.
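The optimistic mode can be illustrated with a small single-process model. `VersionedCache` is a hypothetical stand-in, not the Windows Azure Caching API, and a real shared cache must perform the version check and the store as one atomic operation. The retry in `update_with_retry` is only one possible way to resolve a conflict; it assumes the key already exists and that reapplying the change to the latest data is acceptable for the business logic.

```python
import itertools


class VersionedCache:
    """In-memory stand-in in which every stored value carries a version number."""

    def __init__(self):
        self._items = {}
        self._versions = itertools.count(1)

    def get(self, key):
        """Return (value, version), or (None, None) if the key is absent."""
        return self._items.get(key, (None, None))

    def put(self, key, value, expected_version=None):
        """Store value and return its new version.

        If expected_version is given and the item has changed since it was
        read, nothing is stored and None is returned to signal a conflict.
        """
        _, current = self._items.get(key, (None, None))
        if expected_version is not None and current != expected_version:
            return None
        version = next(self._versions)
        self._items[key] = (value, version)
        return version


def update_with_retry(cache, key, change, attempts=3):
    """Reread and reapply the change whenever another writer got there first."""
    for _ in range(attempts):
        value, version = cache.get(key)
        if cache.put(key, change(value), expected_version=version) is not None:
            return True
    return False
```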

Markus says:

An application specifies a duration for the lock when it retrieves data. If the application does not release the lock within this period, the lock is released by the Windows Azure Caching service. This feature is intended to prevent an application that has failed from locking data indefinitely. You should stipulate a period that will give your application sufficient time to perform the update operation, but not so long as to cause other instances to wait for access to this data for an excessive time.
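The pessimistic mode, including the lock duration and the back-off-and-retry behavior, can be modeled in the same way. `LockingCache`, `CacheLockError`, and `update_with_lock` are hypothetical names for this sketch and do not represent the Windows Azure Caching API; in particular, treating an expired lock as an error when the holder tries to store its update is an assumption of the model.

```python
import time
import uuid


class CacheLockError(Exception):
    """Raised when a lock cannot be obtained or is no longer held."""


class LockingCache:
    """In-memory stand-in that supports read-and-lock with a lock duration."""

    def __init__(self, clock=time.monotonic):
        self._items = {}
        self._locks = {}       # key -> (handle, expiry time)
        self._clock = clock

    def put(self, key, value):
        self._items[key] = value

    def get_and_lock(self, key, lock_seconds):
        held = self._locks.get(key)
        if held is not None and held[1] > self._clock():
            raise CacheLockError(f"{key!r} is locked")   # fail, do not block
        handle = uuid.uuid4().hex
        self._locks[key] = (handle, self._clock() + lock_seconds)
        return self._items[key], handle

    def put_and_unlock(self, key, value, handle):
        held = self._locks.get(key)
        if held is None or held[0] != handle or held[1] <= self._clock():
            raise CacheLockError(f"lock on {key!r} is no longer held")
        self._items[key] = value
        del self._locks[key]


def update_with_lock(cache, key, change, lock_seconds=5,
                     attempts=3, backoff_seconds=0.1):
    """Lock, update, and unlock; back off and retry if the item is locked."""
    for attempt in range(attempts):
        try:
            value, handle = cache.get_and_lock(key, lock_seconds)
        except CacheLockError:
            time.sleep(backoff_seconds * (attempt + 1))
            continue
        cache.put_and_unlock(key, change(value), handle)
        return True
    return False
```

Keeping `lock_seconds` small and the `change` function quick reflects the advice above: short locks reduce the time other instances spend backing off.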

If you are hosting multiple instances of the Windows Azure Caching service across different datacenters, the update problem becomes even more acute as you may need to synchronize a cache not only with the authoritative data source but also other caches located at different sites. Synchronization necessarily generates network traffic, which in turn is subject to the latency and occasionally unreliable nature of the Internet. In many cases, it may be preferable to update the authoritative data source directly, remove the data from the cache in the same datacenter as the web application, and let the cached data at each remaining site expire naturally, when it can be repopulated from the authoritative data source.

The logic that updates the authoritative data source should be composed in such a way as to minimize the chances of overwriting a modification made by another instance of the application, perhaps by including version information in the data and verifying that this version number has not changed when the update is performed.

The purpose of removing the data from the cache rather than simply updating it is to reduce the chance of losing changes made by other instances of the web application at other sites and to minimize the chances of introducing inconsistencies if the update to the authoritative data store is unsuccessful. The next time this data is required, a consistent version of the data will be read from the authoritative data store and copied to the cache.
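The update-then-remove approach can be sketched as follows. Plain dictionaries stand in for the authoritative store and the local datacenter's cache, and the names `update_record`, `read_record`, and `StaleDataError` are hypothetical. In a real database the version check and the write would need to be a single atomic operation, such as a conditional update.

```python
class StaleDataError(Exception):
    """Raised when the record changed after the caller read it."""


def update_record(store, cache, key, new_value, expected_version):
    """Update the authoritative store, then remove the cached copy.

    store: dict mapping key -> (value, version)   (stand-in for a database)
    cache: dict mapping key -> (value, version)   (stand-in for the local cache)
    """
    _, current_version = store[key]
    if current_version != expected_version:
        # Another instance modified the record first; do not overwrite it.
        raise StaleDataError(key)
    store[key] = (new_value, current_version + 1)
    cache.pop(key, None)   # remove rather than update the cached copy


def read_record(store, cache, key):
    """Cache-aside read that repopulates the cache after a removal."""
    if key not in cache:
        cache[key] = store[key]
    return cache[key]
```

Because the cached copy is removed only after the store has been updated successfully, a failed update leaves the cache unchanged, and the next read repopulates it with a consistent version.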

If you require a more immediate update across sites, you can implement a custom solution by using Service Bus topics implementing a variation on the patterns described in the section "Replicating and Synchronizing Data Using Service Bus Topics and Subscriptions" in "Appendix A - Replicating, Distributing, and Synchronizing Data."

Both approaches are illustrated later in this appendix, in the section "Guidelines for Using Azure Caching."

Jana says:

Incorporating Windows Azure Caching into a web application must be a conscious design decision as it directly affects the update logic of the application. To some extent you can hide this complexity and aid reusability by building the caching layer as a library and abstracting the code that retrieves and updates cached data, but you must still implement this logic somewhere.

The nature of the Windows Azure Caching service means that it is essential you incorporate comprehensive exception-handling and recovery logic into your web applications. For example:

- A race condition exists in the simple implementation of the cache-aside pattern, which can cause two instances of a web application to attempt to add the same data to the cache. Depending on how you implement the logic that stores data in the cache, this can cause one instance to overwrite the data previously added by another (if you use the Put method of the cache), or it can cause the instance to fail with a DataCacheException exception (if you use the Add method of the cache). For more information, see the topic "Add an Object to a Cache."

- Be prepared to catch exceptions when attempting to retrieve locked data and implement an appropriate mechanism to retry the read operation after an appropriate interval, perhaps by using the Transient Fault Handling Application Block.

- You should treat a failure to retrieve data from the Windows Azure Caching service as a cache miss and allow the web application to retrieve the item from the authoritative data source instead.

- If your application exceeds the quotas associated with the cache size, your application may no longer be able to connect to the cache. You should log these exceptions, and if they occur frequently an administrator should consider increasing the size of the cache.
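Several of these points can be combined in one defensive read routine. In this sketch, `CacheError` is a hypothetical stand-in for whatever exception your cache client raises, and `DictCache` merely imitates the difference between a store that overwrites (`put`) and one that fails if the key exists (`add`); neither is the Windows Azure Caching API.

```python
import logging

log = logging.getLogger("caching")


class CacheError(Exception):
    """Hypothetical stand-in for an exception raised by a cache client."""


class DictCache:
    """In-memory stand-in showing overwrite (put) versus fail-if-present (add)."""

    def __init__(self):
        self._items = {}

    def get(self, key):
        return self._items.get(key)

    def put(self, key, value):
        self._items[key] = value            # silently overwrites

    def add(self, key, value):
        if key in self._items:
            raise CacheError(f"{key!r} already exists")
        self._items[key] = value


def get_or_load(cache, key, load_from_store):
    """Treat any cache failure as a miss and fall back to the store."""
    try:
        value = cache.get(key)
    except CacheError as error:
        log.warning("cache read failed for %r: %s", key, error)
        value = None
    if value is not None:
        return value
    value = load_from_store(key)
    try:
        cache.add(key, value)
    except CacheError as error:
        # Another instance added the item first, or the cache is unavailable.
        # Either way the caller still gets data from the authoritative store.
        log.warning("cache add skipped for %r: %s", key, error)
    return value
```

Logging each failure, as the routine does here, gives an administrator the evidence needed to decide whether the cache size should be increased.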

Implementing a Local Cache

As well as the shared cache, you can configure a web application to create its own local cache. The purpose of a local cache is to optimize repeated read requests to cached data. A local cache resides in the memory of the application, and as such it is faster to access. It operates in tandem with the shared cache. If a local cache is enabled, when an application requests an object, the caching client library first checks to see whether this object is available locally. If it is, a reference to this object is returned immediately without contacting the shared cache. If the object is not found in the local cache, the caching client library fetches the data from the shared cache and then stores a copy of this object in the local cache. The application then references the object from the local cache. Of course, if the object is not found in the shared cache, then the application must retrieve the object from the authoritative data source instead.

Once an item has been cached locally, the local version of this item will continue to be used until it expires or is evicted from the cache. However, it is possible that another application may modify the data in the shared cache. In this case the application using the local cache will not see these changes until the local version of the item is removed from the local cache. Therefore, although using a local cache can dramatically improve the response time for an application, the local cache can very quickly become inconsistent if the information in the shared cache changes. For this reason you should configure the local cache to only store objects for a short time before refreshing them. If the data held in a shared cache is highly dynamic and consistency is important, you may find it preferable to use the shared cache rather than a local cache.

After an item has been copied to the local cache, the application can then access it by using the same Windows Azure Caching APIs and programming model that operate on a shared cache; the interactions with the local cache are completely transparent. For example, if the application modifies an item and puts the updated item back into the cache, the Windows Azure Caching APIs update the local cache and also the copy in the shared cache.

A local cache is not subject to the same resource quotas as the shared cache managed by the Windows Azure Caching service. You specify the maximum number of objects that the cache can hold when it is created, and the storage for the cache is allocated directly from the memory available to the application.
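The behavior described in this section can be modeled with a small sketch. The code below is not the caching client library; it is an illustrative Python model, and `SharedCache`, `LocalCache`, `max_objects`, and `ttl_seconds` are assumed names. The eviction policy (oldest entry first) is also an assumption made to keep the example short.

```python
import time


class SharedCache:
    """In-memory stand-in for the shared cache."""

    def __init__(self):
        self.items = {}

    def get(self, key):
        return self.items.get(key)

    def put(self, key, value):
        self.items[key] = value


class LocalCache:
    """In-process cache with a maximum object count and a short time-to-live."""

    def __init__(self, shared, max_objects=1000, ttl_seconds=30.0,
                 clock=time.monotonic):
        self.shared = shared
        self.max_objects = max_objects
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._items = {}  # key -> (value, expiry time)

    def _store_locally(self, key, value):
        self._items.pop(key, None)
        if len(self._items) >= self.max_objects:
            # Evict the oldest entry; a real client may use another policy.
            self._items.pop(next(iter(self._items)))
        self._items[key] = (value, self.clock() + self.ttl_seconds)

    def get(self, key):
        entry = self._items.get(key)
        if entry is not None:
            value, expires_at = entry
            if self.clock() < expires_at:
                return value      # served locally; the shared cache is not contacted
            del self._items[key]  # the local copy has expired
        value = self.shared.get(key)
        if value is not None:
            self._store_locally(key, value)
        return value              # None: the caller goes to the data store

    def put(self, key, value):
        # Update the shared cache and the local copy together.
        self.shared.put(key, value)
        self._store_locally(key, value)
```

The sketch makes the consistency trade-off visible: until `ttl_seconds` elapses, `get` keeps returning the local copy even if another application has changed the item in the shared cache.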

Markus says:

You enable local caching by populating the LocalCacheProperties member of the DataCacheFactoryConfiguration object that you use to manage your cache client configuration. You can perform this task programmatically or declaratively in the application configuration file. You can specify the size of the cache and the default expiration period for cached items. For more information, see the topic "Enable Windows Server AppFabric Local Cache (XML)."

Caching Web Application Session State

The Windows Azure Caching service enables you to use the DistributedCacheSessionStateStoreProvider session state provider for ASP.NET web applications and services. With this provider, you can store session state in a Windows Azure cache. Using a Windows Azure cache to hold session state gives you several advantages:

- It can share session state among different instances of ASP.NET web applications providing improved scalability,

- It supports concurrent access to the same session state data for multiple readers and a single writer, and

- It can use compression to save memory and bandwidth.

You can configure this provider either through code or by using the application configuration file; you can generate the configuration information by using the Management Portal and copy this information directly into the configuration file. For more information, see "How to: Configure the ASP.NET Session State Provider for Windows Azure Caching."

Once the provider is configured, you access it programmatically through the Session object, employing the same code as an ordinary ASP.NET web application; you do not need to invoke the Windows Azure Caching APIs.

Caching HTML Output

The DistributedCacheOutputCacheProvider class available for the Windows Azure Caching service implements output caching for web applications. Using this provider, you can build scalable web applications that use the Windows Azure Caching service to cache the HTTP responses that they generate for web pages returned to client applications, and this cache can be shared by multiple instances of an application. This provider has several advantages over the regular per-process output cache, including:

- You can cache larger amounts of output data.

- The output cache is stored externally from the worker process running the web application and it is not lost if the web application is restarted.

- It can use compression to save memory and bandwidth.

Again, you can generate the information for configuring this provider by using the Management Portal and copy this information directly into the application configuration file. For more information, see "How to: Configure the ASP.NET Output Cache Provider for Windows Azure Caching."

Like the DistributedCacheSessionStateStoreProvider class, the DistributedCacheOutputCacheProvider class is completely transparent; if your application previously employed output caching, you do not have to make any changes to your code.

Guidelines for Using Windows Azure Caching

The following list describes some common scenarios for using Windows Azure Caching:

- Web applications and services running in the cloud require fast access to data. This data is queried frequently, but rarely modified. The same data may be required by all instances of the web applications and services.

This is the ideal case for using Windows Azure Caching. In this simple scenario, you can configure the Windows Azure Caching service running in the same datacenter that hosts the web applications and services (implemented as web or worker roles). Each web application or service can implement the cache-aside pattern when it needs a data item; it can attempt to retrieve the item from cache, and if it is not found then it can be retrieved from the authoritative data store and copied to cache. If the data is static, and the cache is configured with sufficient memory, you can specify a long expiration period for each item as it is cached. Objects representing data that might change in the authoritative data store should be cached with a shorter expiration time; the period should reflect the frequency with which the data may be modified and the urgency of the application to access the most recently updated information.

Markus says:

Markus says:

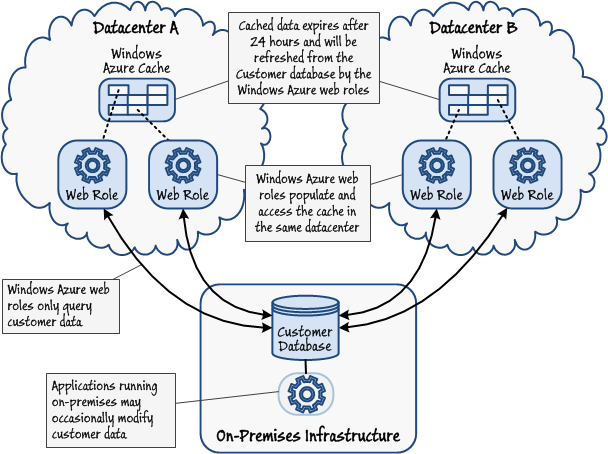

To take best advantage of Windows Azure Caching, only cache data that is unlikely to change frequently. Figure 5 shows a possible structure for this solution. In this example, a series of web applications implemented as web roles, hosted in different datacenters, require access to customer addresses held in a SQL Server database located on-premises within an organization. To reduce the network latency associated with making repeated requests for the same data across the Internet, the information used by the web applications is cached by using the Windows Azure Caching service. Each datacenter contains a separate instance of the Caching service, and web applications only access the cache located in the same datacenter. The web applications only query customer addresses, although other applications running on-premises may make the occasional modification. The expiration period for each item in the cache is set to 24 hours, so any changes made to this data will eventually be visible to the web applications.

Figure 5

Caching static data to reduce network latency in web applications

- Web applications and services running in the cloud require fast access to shared data, and they may frequently modify this data.

This scenario is a potentially complex extension of the previous case, depending on the location of the data, the frequency of the updates, the distribution of the web applications and services, and the urgency with which the updates must be visible to these web applications and services.

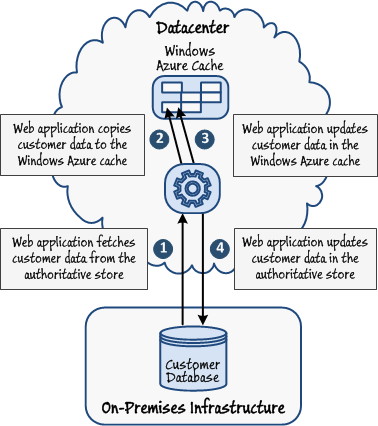

In the most straightforward case, when a web application needs to update an object, it retrieves the item from cache (first fetching it from the authoritative data store if necessary), modifies this item in cache, and makes the corresponding change to the authoritative data store. However, this is a two-step process, and to minimize the chances of a race condition occurring, all updates must perform these steps in the same order. Depending on the likelihood of a conflicting update being made by a concurrent instance of the application, you can implement either the optimistic or pessimistic strategy for updating the cache as described in the earlier section "Updating Cached Data." Figure 6 depicts this process. In this example, the on-premises Customer database is the authoritative data store.
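As a rough illustration of the optimistic strategy applied to this two-step update, the following Python sketch uses a per-item version number to detect a conflicting change. It is a single-process model, not the Windows Azure Caching API: `VersionedCache`, `put_if_unchanged`, `VersionConflict`, and `update_item` are hypothetical names, and a plain dictionary stands in for the authoritative data store.

```python
class VersionConflict(Exception):
    """Raised when the cached item changed after it was read."""


class VersionedCache:
    """In-memory stand-in for a cache that tracks a version per item."""

    def __init__(self):
        self._items = {}  # key -> (value, version)

    def get(self, key):
        # Items that are not cached are reported as (None, 0).
        return self._items.get(key, (None, 0))

    def put_if_unchanged(self, key, value, expected_version):
        _, current = self._items.get(key, (None, 0))
        if current != expected_version:
            raise VersionConflict(key)
        self._items[key] = (value, current + 1)


def update_item(key, change, cache, store, max_attempts=3):
    """Apply change(value) to the cache first and then to the store."""
    for _ in range(max_attempts):
        value, version = cache.get(key)
        if value is None:
            value = store[key]  # cache miss: read the authoritative store
        new_value = change(value)
        try:
            # Step 1: update the cache, but only if nobody else changed it.
            cache.put_if_unchanged(key, new_value, version)
        except VersionConflict:
            continue  # another instance won; re-read and try again
        # Step 2: make the corresponding change to the data store.
        store[key] = new_value
        return new_value
    raise VersionConflict(key)
```

Every caller goes through `update_item`, so the two steps always run in the same order. A pessimistic variant would lock the cached item before reading it instead of checking the version afterwards.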

Figure 6

Updating data in the cache and the authoritative data store

The approach just described is suitable for a solution contained within a single datacenter. However, if your web applications and services span multiple sites, you should implement a cache at each datacenter. Now updates have to be carefully synchronized and coordinated across datacenters and all copies of the cached data modified. As described in the section "Updating Cached Data," you have at least two options available for tackling this problem:

- Only update the authoritative data store and remove the item from the cache in the datacenter hosting the web application. The data cached at each other datacenter will eventually expire and be removed from cache. The next time this data is required, it will be retrieved from the authoritative store and used to repopulate the cache.

- Implement a custom solution by using Service Bus topics similar to that described in the section "Replicating and Synchronizing Data Using Service Bus Topics and Subscriptions" in "Appendix A - Replicating, Distributing, and Synchronizing Data."

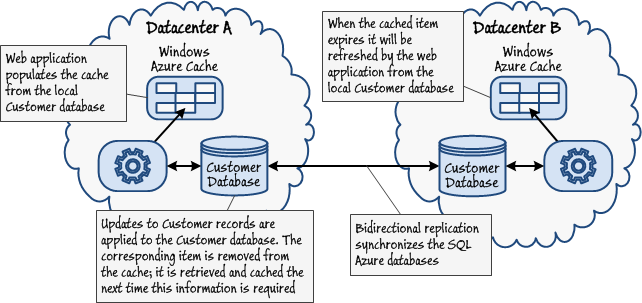

The first option is clearly the simpler of the two, but the various caches may be inconsistent with each other and the authoritative data source for some time, depending on the expiration period applied to the cached data. Additionally, the web applications and services may employ a local SQL Azure database rather than accessing an on-premises installation of SQL Server. These SQL Azure databases can be replicated and synchronized in each datacenter as described in "Appendix A - Replicating, Distributing, and Synchronizing Data." This strategy reduces the network latency associated with retrieving the data when populating the cache at the cost of yet more complexity if web applications modify this data; they update the local SQL Azure database, and these updates must be synchronized with the SQL Azure databases at the other datacenters.

Depending on how frequently this synchronization occurs, cached data at the other datacenters could be out of date for some considerable time; not only does the data have to expire in the cache, it also has to wait for the database synchronization to occur. In this scenario, tuning the interval between database synchronization events as well as setting the expiration period of cached data is crucial if a web application must minimize the length of time for which it may be working with stale information. Figure 7 shows an example of this solution with replicated instances of SQL Azure acting as the authoritative data store.
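A back-of-the-envelope calculation can help when tuning these two settings. The sketch below assumes a simplified model in which an update waits up to one full synchronization interval before it reaches a remote database, and a remote cache may have loaded the old value immediately before that point. The function name and the sample durations are illustrative only, and the time taken by the synchronization itself is ignored.

```python
from datetime import timedelta


def worst_case_staleness(sync_interval, cache_expiration):
    """Rough upper bound on how long a remote datacenter can serve an old value.

    The update can wait up to sync_interval to reach the remote database,
    and an item cached just before it arrives lives for cache_expiration.
    """
    return sync_interval + cache_expiration


# Example: databases synchronized every 15 minutes, items cached for 5 minutes.
bound = worst_case_staleness(timedelta(minutes=15), timedelta(minutes=5))
print(bound)  # 0:20:00
```

Under these assumptions, shortening either setting reduces the bound, but only by the amount of that setting; if the synchronization interval dominates, reducing the cache expiration period alone has little effect.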

Figure 7

Figure 7

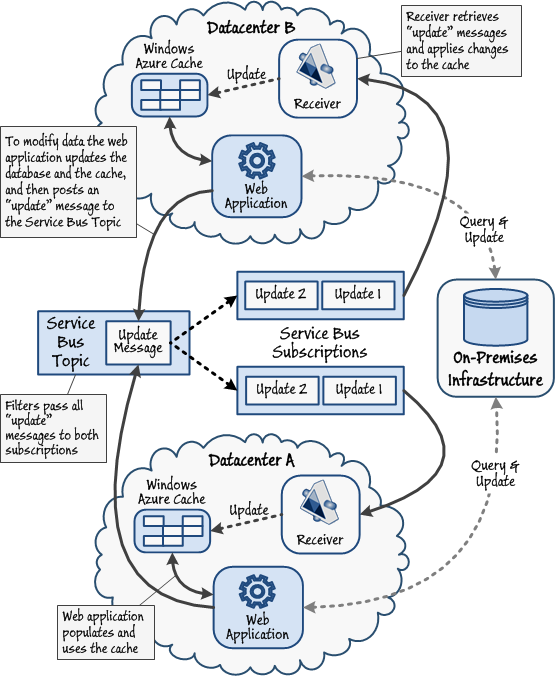

Propagating updates between Windows Azure caches and replicated data storesImplementing a custom solution based on Service Bus topics and subscriptions is more complex, but results in the updates being synchronized more quickly across datacenters. Figure 8 illustrates one possible implementation of this approach. In this example, a web application retrieves and caches data in the Windows Azure cache hosted in the same datacenter. Performing a data update involves the following sequence of tasks:

- The web application updates the authoritative data store (the on-premises database).

- If the database update was successful, the web application duplicates this modification to the data held in the cache in the same datacenter.

- The web application posts an update message to a Service Bus topic.

- Receiver applications running at each datacenter subscribe to this topic and retrieve the update messages.

- The receiver application applies the update to the cache at this datacenter if the data is currently cached locally.

Note: If the data is not currently cached at this datacenter the update message can simply be discarded. The receiver at the datacenter hosting the web application that initiated the update will also receive the update message. You might include additional metadata in the update message with the details of the instance of the web application that posted the message; the receiver can then include logic to prevent it updating the cache unnecessarily (when the web application instance that posted the message is the same as the current instance).

Note that, in this example, the authoritative data source is located on-premises, but this model can be extended to use replicated instances of SQL Azure at each datacenter. In this case, each receiver application could update the local instance of SQL Azure as well as modifying the data in-cache.
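The sequence of tasks listed above can be modeled with a short sketch. This Python code does not use the Service Bus APIs: `Topic` is an in-memory stand-in for a topic with one subscription per datacenter, `Datacenter` combines the web application and the receiver application for brevity, and the `sender` field is an assumed piece of message metadata that identifies the originator by datacenter name rather than by web application instance.

```python
class Topic:
    """In-memory stand-in for a topic; every subscriber gets each message."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, handler):
        self._subscribers.append(handler)

    def publish(self, message):
        for handler in self._subscribers:
            handler(message)


class Datacenter:
    """Models one datacenter: a local cache plus a receiver application."""

    def __init__(self, name, topic):
        self.name = name
        self.cache = {}
        self.topic = topic
        topic.subscribe(self.on_update)

    def update(self, key, value, database):
        database[key] = value    # 1. update the authoritative data store
        self.cache[key] = value  # 2. update the cache in this datacenter
        # 3. post an update message to the topic
        self.topic.publish({"key": key, "value": value, "sender": self.name})

    def on_update(self, message):
        # 4. the receiver retrieves the update message
        if message["sender"] == self.name:
            return               # the sender has already updated its own cache
        if message["key"] in self.cache:
            # 5. apply the update only if the item is cached here
            self.cache[message["key"]] = message["value"]
```

In this model a datacenter that has not cached the item simply discards the message, so the item is fetched from the authoritative data store the next time it is needed there.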

Figure 8

Propagating updates between Windows Azure caches and an authoritative data store

Note: It is also possible that there is no permanent data store and the caches themselves act as the authoritative store. Examples of this scenario include online gaming, where the current game score is constantly updated but needs to be available to all instances of the game application. In this case, the cache at each datacenter holds a copy of all of the data, but the same general solution depicted by Figure 8, without the on-premises database, can still be applied.

- A web application requires fast access to the data that it uses. This data is not referenced by other instances of the web application.