There is perhaps no topic that has inspired science fiction quite as much as space exploration. As the television series Star Trek (all versions) famously stated during its opening credits, space is indeed the final frontier. It is not only a vast physical frontier, but also an expansive frontier of the imagination. Many of the pioneers of space travel were themselves inspired by science fiction. The masters of science fiction have often dreamed up methods of travel that were deemed impossible at the time, but were later shown to be full of possibilities. Here we start with a brief history of space travel up to the present day, followed by a summary of the basic background physics involved in rocketry, and then we review some possible methods for future space travel and how they have been handled in science fiction films, television and print.

To better understand space travel in fiction, we need to first understand the history of space travel, which starts with the development of rockets over many centuries. Though rockets in rudimentary form may be over 1,000 years old, written records of their use go back to the Chinese of the 13th century, who used small rockets in warfare as fire bombs. Some of these records suggest that these crude rockets could have been used as early as the 12th century (Van Riper 2004). Though there is some further mention of rockets over the ages (particularly, the spread of these same sorts of rockets through to Poland and Spain by the 13th century), the next major development did not occur until the Napoleonic Wars of the early 19th century (a period that also included the War of 1812, fought against the new United States), during which British inventor William Congreve developed small rockets that consisted of iron-cased gunpowder (Rogers 2008). This was a significant improvement over centuries of previous rockets that were made out of tubes of paper pasted together. In addition, the powder for these rockets was prepared and packed consistently for each rocket (Van Riper 2004). These rockets had a range of a bit over one mile (about 1.6 km) but very limited accuracy. They caused some significant damage and fear, but had mixed results in the course of these wars. In fact, perhaps the most famously recorded use of these rockets was at Fort McHenry near Baltimore, Maryland, since this rocket attack was immortalized in the U.S. national anthem (“And the rockets’ red glare / The bombs bursting in air”). However, as it turned out, the rockets were actually fairly ineffective in that particular attack. Though there were some further successes in the ensuing decades in stabilizing rockets during flight (an important step in achieving accuracy), the next major developments came some decades later, in the late 19th century, and were primarily theoretical.
Due to the increased capabilities of artillery, rockets were not seen as being as effective in the warfare of the late 19th century, and so there were few practical developments during this period (Van Riper 2004). In fact, it was at this time that Jules Verne’s novel From the Earth to the Moon suggested that the first flight to the Moon would use a giant artillery gun to send the astronauts there (it was Tsiolkovsky, whom we introduce below, who showed that this would be impractical, since the gun would need too long a muzzle in order to be sure that accelerations wouldn’t be so high that they’d kill the astronauts). The Russian physicist Konstantin Tsiolkovsky wrote extensively on the design of rockets, and was the first to write about the use of liquid fuels to create hot gases for exhaust, as well as step rockets (staged rockets), which would be critical to the advancement of the American space program in the 1960s. Unfortunately, his work had only been published in relatively obscure Russian journals that were not translated until well after his death and thus had little impact on the developing field. The next major advancements were more practical. In 1926, Robert Goddard launched the first liquid propellant rocket. The general idea was to mix a liquid fuel such as gasoline from one tank with an oxidizer from another, so that the burning of the fuel could be controlled and would result in a high-velocity exhaust gas. If the fuel is allowed to burn uncontrollably, then the spacecraft could explode, as was the case with the space shuttle Challenger disaster in 1986. Goddard knew that solid fuels would not be as useful for his experiments since he would not be able to control their burning very well.
The first flight was very brief (2.5 seconds) and did not go very high (41 feet), but the proof of concept was important in the serious development of rocketry, and Goddard himself was able to improve on his designs and flights over the next few years prior to the outbreak of World War II (by 1935 his rockets reached 7,500 feet and were the first to include gyroscopes for stabilization). In parallel to these developments, Hermann Oberth of Germany published the first treatise on rocketry that was to be taken seriously, since Tsiolkovsky’s publications were largely ignored on an international scale during his time. One of the most important developments as a result of Oberth’s work was the establishment of independent rocket research in Germany (Moore 1969). Independent rocket research (which was regarded as military research) was not well tolerated by Hitler’s new government, so these independent research organizations (and their personnel) were effectively absorbed into formal military programs, where they could be monitored more closely. Not knowing of Goddard’s earlier work, rocket developer Wernher von Braun and his colleagues in Germany then perfected liquid rockets while building rocket weapons for Germany during World War II. The V-2 rocket they built was impressive for the time, since it was able to reach just under 100 km altitude, the boundary of what we now think of as “space.” Though its accuracy as a weapon was relatively crude, and it did not change the outcome of the war as Hitler had wanted (thankfully!), it still killed thousands of Allied troops and civilians, and indirectly led to the deaths of forced laborers who worked on the construction of the V-2. As the war was ending, and it was becoming clear that Germany would be defeated, von Braun gave himself over to the United States, where the Americans were quite happy to use his knowledge in the establishment of their own rocket program in competition with the Soviet Union (Moore 1969).
Thus, the American space program, especially the push toward the Moon, was largely dependent on von Braun and the German war machine that had supported him. Moon voyages were a particularly popular subject of early science fiction (though that term didn’t exist yet) in the 19th century and early 20th century, with Jules Verne’s 1865 From the Earth to the Moon and H. G. Wells’ 1901 novel The First Men in the Moon being prime examples. Interestingly, the latter novel brings up the concept of a propulsion system that can counteract gravity, using some of the same principles used in blocking out radiation. Though this form of “anti-gravity” is not possible, it does presage some notions about gravity (and its similarity to other field theories) that we wouldn’t see until Einstein came up with his theory of relativity a few years later.

Von Braun’s contributions remain controversial. He admittedly had been a member of the Nazi party (and, in particular, was an S.S. captain), and was later accused of personally abusing prisoners in the camp (Mittelbau-Dora) at which prison labor was used for the construction of the V-2 rockets. As stated before, the rockets killed thousands of civilians in London, and many Allied troops (Neufeld 2007). We can ask ourselves whether it is ever ethical to use technology that was developed in this manner, and turn it around for good use. It is interesting to note that such ethical dilemmas often play out in science fiction as well. The most directly parallel situation comes about in the Space: 1999 episode “Voyager’s Return,” in which the denizens of Moonbase Alpha come across a probe that had been launched by Earth many years prior. Obtaining much-needed information from the probe requires interfering with its computer and drive systems. It is revealed that this particular spacecraft had been created by scientist Ernst Queller, who created a similar probe that was responsible for killing hundreds as a result of an accident with the experimental drive system during testing. In addition, it is later revealed that the probe they are trying to recover, Voyager One, was responsible for killing many of the Sidons, an alien race that lived in its path. The dilemma becomes two-fold: Is it worth putting Moonbase Alpha at risk by fiddling with the drive system of the recovered probe, in the hopes that they will better be able to retrieve its data? Also, can Ernst Queller (who had been secretly present on the base under an assumed name, Ernst Linden) be trusted to help with the probe? In the end, Queller redeems himself by using the probe’s deadly drive system to fend off an attack by avenging Sidons. Queller is killed in the process (Muir, Exploring Space: 1999 1997).

Up to the present day, and likely well into the future, the physics of space travel really just depends on one fundamental concept, and that is Newton’s Third Law, the principle of action and reaction as discussed in Chapter 1. From the point of view of conceptual physics, it doesn’t matter whether you are throwing hot gas, baseballs or rocks out the bottom of your spacecraft to get it going upward. But, as a practical concern, the only things we can throw back at high enough velocity are hot gases, so that’s what we use for now. Keep in mind that not all of the gas is exhausted at once. There is a continuous release of the gas as it is converted from fuel. Thus, it is actually more analogous to slowly pouring sandbags off the back of a boat to get it to move forward than throwing one big heavy item overboard (Moore 1969). An equivalent method for thinking about rockets is in terms of conservation of momentum, which we discussed in detail in Chapter 1. That is, for every step along the way, as the rocket is losing mass in terms of fuel converted to exhaust gas thrown out the back of the rocket, the rest of the mass lurches forward with a new velocity that is related to the velocity of the exhaust gas. However, it’s cumbersome to solve for the velocity at every step along the way. In this case, it’s actually easier to use calculus to determine the final velocity. This has been the method used in modern rocketry since Robert Goddard had his first successful flight in 1926. However, there is a limit to how fast these gases can move, and the maximum velocity is about 6,600 miles/hr (about 3 km/sec). We refer the interested reader to calculations in the endnotes, but the final speed of the spacecraft depends on how much fuel is being expelled out the back as hot gases, and how much mass is left over (as rocket, passengers and payload) to be sent through space.
Practically speaking, we can reach rocket velocities about two times greater than the gas exhaust velocity. We have probably reached the limit of how fast we can expel gases, so if we ever want to go significantly faster through space, we will have to think of what we can expel at higher velocity with significant mass. We can gain a little bit of extra final velocity for the amount of fuel we put in by ejecting some of the rocket’s own mass as the rocket becomes emptied of fuel. This process, known as staged rocketry, has been used in the world’s rocket programs for decades. Much of science fiction, especially that which deals with the near future, depends on this same concept for chemical rockets.
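
As a rough illustration of how the final speed depends on exhaust velocity and on the ratio of full to empty mass, here is a minimal sketch of the standard ideal rocket equation (usually credited to Tsiolkovsky), which ignores gravity and air resistance. The function names and the tonnage figures for the single-stage and two-stage rockets are made up for illustration and are not taken from any real vehicle; only the 3 km/sec exhaust velocity comes from the text above.

```python
import math


def delta_v(exhaust_velocity, mass_ratio):
    """Ideal speed gain: v = v_e * ln(m_full / m_empty). No gravity, no drag."""
    return exhaust_velocity * math.log(mass_ratio)


def staged_delta_v(stages):
    """Add up the ideal speed gain of each stage.

    Each stage is (exhaust_velocity, mass_before_burn, mass_after_burn),
    where mass_before_burn already excludes any structure dropped earlier.
    """
    return sum(delta_v(ve, m0 / m1) for ve, m0, m1 in stages)


V_E = 3.0  # km/sec, the chemical exhaust velocity quoted in the text

# A mass ratio of e^2 (about 7.4) gives a final speed of twice the exhaust velocity.
twice_exhaust = delta_v(V_E, math.e ** 2)

# Hypothetical 100-tonne rocket carrying a 2-tonne payload (illustrative numbers).
# Single stage: 88 t propellant, 10 t structure, so 12 t remains at burnout.
one_stage = delta_v(V_E, 100 / 12)

# Two stages with the same 88 t of propellant and 10 t of structure in total:
# stage 1 burns 70 t (100 t -> 30 t), then its 8 t of empty tank is dropped;
# stage 2 burns 18 t (22 t -> 4 t).
two_stage = staged_delta_v([(V_E, 100, 30), (V_E, 22, 4)])

print(f"mass ratio e^2: {twice_exhaust:.2f} km/sec")
print(f"single stage:   {one_stage:.2f} km/sec")
print(f"two stages:     {two_stage:.2f} km/sec")
```

With these assumed numbers the two-stage rocket ends up noticeably faster than the single-stage one, even though both carry the same propellant, because the second stage no longer has to push the empty first-stage tank.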

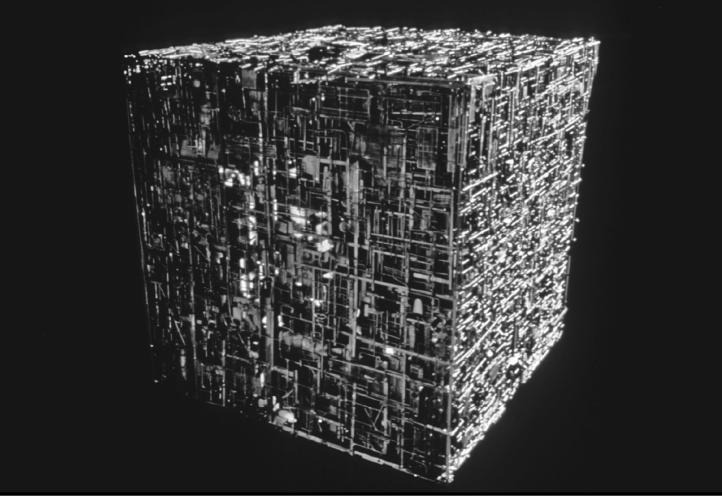

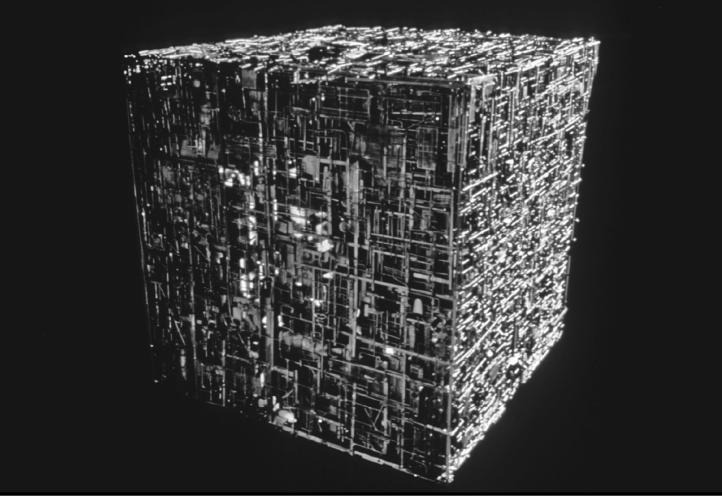

The Borg spacecraft, here as depicted in Star Trek: Voyager. Photofest.

Also, since, for now, we are sending rockets from the Earth’s surface, both gravity and air resistance act against the upward motion of the rocket. The second effect is very important for designing rockets. Air resistance is stronger if the object has more contact with the air (bigger surface area) and if the object is moving fast, since air resistance is proportional to the velocity squared for large fast-moving objects. The first of these factors is the essential reason for giving rockets a tapered look and pointed “top” so that there is reduced contact surface of the rocket with air, especially in the direction of motion. Conversely, a rocket solely traveling through the vacuum of space need not have this sort of look at all. Thus, oddball designs, such as the starships and the cubical Borg craft from ST:TNG, are perfectly reasonable, although they would make it more difficult to take off or land on a planet with an atmosphere if that is ever required. In fact, the creators of Space: 1999 purposely used “boxy” looking Eagle spacecraft, knowing that the intention would be to only use these spacecraft on the Moon (though during the series they often have to land on planets with atmospheres, which can be very inefficient for this sort of spacecraft).
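
The dependence on surface area and on velocity squared described above is usually written as the quadratic drag law, F = ½ ρ v² C_d A. The following is a minimal sketch of that formula. The drag coefficients for a “tapered” and a “boxy” craft, the frontal area, and the speed are rough illustrative assumptions, not measured values for any real or fictional spacecraft.

```python
def drag_force(air_density, speed, drag_coefficient, frontal_area):
    """Quadratic drag in newtons: F = 1/2 * rho * v^2 * C_d * A (SI units)."""
    return 0.5 * air_density * speed ** 2 * drag_coefficient * frontal_area


RHO_SEA_LEVEL = 1.225  # kg/m^3, standard air density at sea level
AREA = 10.0            # m^2, assumed frontal area (same for both shapes)
SPEED = 300.0          # m/sec, assumed speed low in the atmosphere

# Assumed drag coefficients: a streamlined nose versus a flat-faced box.
tapered = drag_force(RHO_SEA_LEVEL, SPEED, 0.3, AREA)
boxy = drag_force(RHO_SEA_LEVEL, SPEED, 1.0, AREA)

# Doubling the speed quadruples the drag, since the force goes as v squared.
tapered_double_speed = drag_force(RHO_SEA_LEVEL, 2 * SPEED, 0.3, AREA)

print(f"tapered craft: {tapered:,.0f} N")
print(f"boxy craft:    {boxy:,.0f} N")
print(f"tapered craft at twice the speed: {tapered_double_speed:,.0f} N")
```

In a vacuum the air density is effectively zero, so the drag vanishes whatever the shape, which is why a cube is as good as a cone once a ship never has to enter an atmosphere.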

The Jupiter 2 spacecraft from Lost in Space. Photofest.

We will soon discuss the technologies that could get us to other star systems, but a considerable amount of science fiction has been dedicated to travel within our Solar System so we will start closer to home. For decades, the focus was on Mars and Martians, in such classics as H.G. Wells’ (1898!) War of the Worlds, Arthur C. Clarke’s The Sands of Mars, Ray Bradbury’s Martian Chronicles, Philip K. Dick’s Martian Time-Slip and Robert Heinlein’s Stranger in a Strange Land (just to name a few). Even some more recent works focus on Mars, such as Kim Stanley Robinson’s Mars Trilogy concerning terra-forming Mars, and the 2000 film Mission to Mars. An early film, Rocketship X-M (1950), shows the difficulty of space travel and speculates about what we might encounter on a first accidental mission to Mars (incidentally, this was the first film to show a female astronaut, and it also showed a red-tinted Martian surface, close to the real thing, in an otherwise black and white film). In addition, 1960’s Angry Red Planet features another ill-fated adventure on a (less realistic) orange-tinted Mars with tentacled plants and “rat bat spider crabs.” The film Robinson Crusoe on Mars shows how a lone astronaut must survive on the inhospitable surface of the planet after his escape capsule crashes. Sending humans to Mars need not be confined to fiction. As Robert Zubrin and others have noted, we could potentially get to Mars with current technology; however, it requires commitment to certain types of flight paths (Zubrin 1996). The primary reason why only certain specific paths can be taken is that fuel usage will be considerably higher in certain cases. As Earth and Mars travel in their orbits around the Sun, we might think it would be best to travel between the two planets when they are closest together (opposition). However, this would have serious negative consequences. The problem is that the Earth would be traveling quickly in its own orbit around the Sun.
To overcome this velocity and “jump” toward Mars would take a considerable amount of fuel. Since most fuel is used at launch and in immediate post-launch maneuvers, this sort of very expensive maneuver would be prohibitive, and is rarely ever seriously considered. The most economical choice is actually the opposite case, when each planet is in a position directly on the opposite side of the Sun from the other. In this case, a rocket can easily get into an orbit that is in the same direction as the Earth’s orbit around the Sun. With small corrections, the rocket can then curve in a more highly elliptical orbit that meets up with Mars. The recent television series Defying Gravity keeps to Mars and Venus. Before astronomers realized that Venus would be far too hot to support life, Venus was also a fixation of science fiction. Venus was the focus of such B movies as The Doomsday Machine, in which a team of male and female astronauts is sent to Venus in order to restart the human race, which is about to be killed off by a “doomsday” weapon set off by the Chinese. There was also the adaptation of the Russian film Planeta Bur (released in the U.S. as Voyage to the Prehistoric Planet) in which a team of astronauts and their robot runs into difficulties with dinosaur-like creatures on the Venusian surface and eventually discovers the remnants of an ancient Venusian civilization. The possibility of life on Venus stuck around long enough to make it into the Outer Limits episode “Cold Hands, Warm Heart.” Here Colonel Jeff Barton (William Shatner) returns from an exploratory mission to Venus. He almost immediately exhibits the symptom of perpetually feeling extremely cold. Eventually he shows more obvious signs of genetic mutation, such as hands that now look to be those of some unidentifiable creature, and there are also changes to his blood.
In the end it’s realized that he started mutating after an encounter with a Venusian that he had since forgotten (presumably any Venusian would find our temperatures to be unbearably cold, so the mutations also explain the earlier symptoms). An episode of the British action/mystery/science fiction television series The Avengers, “From Venus with Love,” starts with amateur astronomers mysteriously dying off. All had been interested in observing Venus, and look to have been murdered. At first some investigators conjecture that there is something, maybe even intelligent life, on Venus that is somehow causing the deaths. To the credit of the writers, the characters do mention that life as we know it would be impossible on Venus because it is so hot. This theory is eventually abandoned when a more conventional murder plot is revealed. In the “Journey with Fear” episode of Voyage to the Bottom of the Sea, the executive officer of the submarine Seaview, Chip Morton, is teleported to Venus by aliens who call themselves Centaurs and who, by their own admission, are not originally from Venus, but from another star system. Unfortunately, the common factor in most of these treatments is inaccuracy (often severe).

The starship Enterprise from Star Trek: The Original Series. Various incarnations made it into various films and television shows of the Star Trek franchise. NBC/Photofest.

However, film and television haven’t just confined themselves to the inner Solar System. Journey to the 7th Planet, though interesting in places as a film, unrealistically depicts encounters with a powerful alien on Uranus (although one thing they get right is that Uranus should be extremely cold. When they start encountering much warmer areas with breathable air, they suspect that some entity is projecting illusions, which turns out to be so). Improbably, in the Doctor Who episode “The Sunmakers,” the TARDIS lands on Pluto, finding a highly technological society there. As the Doctor learns, Pluto is made habitable by artificial suns created by an alien society, which supplies these suns at great cost to the humans eking out an existence there.

Trips to Mars and the rest of the outer Solar System may no longer seem much like science fiction (our interplanetary probes have landed on a moon of Saturn, and the most distant ones are now almost 10 billion miles from Earth). However, we have not yet achieved human spaceflight past the Moon. Accomplishing this now seems decades off even if there were to be a present-day commitment to using current technology, so it is definitely not a certainty. It’s certainly a matter of debate whether we should spend the effort and dollars on human spaceflight. Robotic probes tend to be many times cheaper than similarly destined ships with personnel. Robotic probes would also be safer, since they don’t require anyone to go into space. In the ST:TOS episode “The Ultimate Computer,” the inventor of the M5 computer (Dr. Richard Daystrom), the greatest computer of his generation (in the 23rd century), states that he created this computer that will be able to eventually fully automate starships in order to keep people from needing to meet harm in exploring the Galaxy. However, we must at least consider the option of manned space flight for understanding science fiction that will devote itself to travel to the outer Solar System and beyond. In addition, we may also have to consider whether we are missing our only opportunity to build up space travel while we can. Ultimately, living separately from Earth may be the only way that the human race can survive. For instance, we may fall victim to global climate change or nuclear conflict. Even if we avoid all possible calamities, we must eventually face the fact that the Sun will become a red giant that will engulf the Earth within five billion years. J. Richard Gott makes a strong case for there being likely only one “space age,” and that we are in it now, and that if we don’t make the most of it now, we will have to remain on Earth and be forced to accept our fate (Gott 2002). 
Once the Sun becomes a red giant, living on Mars would also be seriously compromised, but perhaps by that time we would have been able to move out of the Solar System completely. One way of potentially overcoming these dangers is to build colonies in space or to colonize a reasonably suitable planet, such as Mars. Most official long-term plans for going to Mars over the years have shared the same problem, in that they depend on extremely large infrastructures that take decades to create. At the time of this writing there is some uncertainty over both the immediate and long-term goals of the human spaceflight program of NASA. The U.S. Space Shuttle program that started in 1981 has now been ended, with incomplete plans for follow-on vehicles and potential follow-on programs. The future may lie with a number of other nations, especially Russia and China, who have potential for going back to the Moon and then perhaps on to Mars. There are also a number of other countries with unmanned space programs (India, Japan, Israel, Iran) as well as private U.S. companies that have invested in rocketry (such as Elon Musk’s Space Exploration Technologies Corporation, better known as SpaceX, and Orbital Sciences). Another effort is being led by Dutch entrepreneur Bas Lansdorp. His intention is to raise funds for a one-way mission to Mars (dubbed Mars One) with volunteers who will build a colony and stay there. Though some have dismissed this effort as underfunded and foolhardy (or even suicidal), strategies involving non-traditional funding sources and approaches may indeed end up being the best way to get to Mars (or anywhere else) without constantly kicking the can down the road until two decades in the future (Engber 2014).

The crew from the 2000 film Mission to Mars (Touchstone Pictures), a film about a modern journey to the red planet: Phil Ohlmeyer (Jerry O’Connell), Luke Graham (Don Cheadle), Jim McConnell (Gary Sinise), Woody Blake (Tim Robbins), and Maggie McConnell (Kim Delaney). Buena Vista/Photofest.

Some other destinations have also been emphasized, such as the enigmatic moon of Jupiter, Europa, long thought to have conditions suitable for life underneath a thick surface layer of ice. An ill-fated mission to this moon is the subject of a recent film, Europa Report (2013). The realistic, yet fictional, footage of Europa gives some credibility to the film, as does the fact that the crew never really understand exactly what has confronted them. A similar mission with a similar fate was the subject of the recent film Astronaut: The Last Push.

So, what would prevent us from using today’s rockets to go to the stars? We might expect that one solution would be to use brute force. That is, we can supply a rocket with a large enough amount of fuel to accelerate it to near light speeds, regardless of how low the exhaust velocity is. The rocket equation, at least at first glance, would seem to allow this. However, using the rocket equation and the typical exhaust velocities for rockets today, we see that this mass ratio (the mass of the full rocket divided by the mass of the empty rocket) would be approximately 10 raised to the 40,000th power! Aside from being an untenable number on most calculators and computers, we know that in the lifetime of the Universe we would not be able to come up with this amount of fuel, let alone actually carry it with us in an interstellar rocket. As we will see later, the problem is even worse as we get to near light speeds. Clearly, this would be an unacceptable solution. In principle, we could go a long way toward making this possible if we were able to send material out the back of the rocket at much higher velocities than we can with hot gases. Today’s rocket exhaust velocities are only two thousandths of one percent of the speed of light, and thus it would take over 200,000 years to get to the nearest star system. Alpha Centauri is the closest star (actually, a double star system) with planets but Proxima Centauri is about one trillion miles closer. Proxima may actually be a third star in the Alpha Centauri system, widely separated from the other two stars, but we have not yet found conclusive evidence to support this hypothesis (Beech 2015). Each of these stars would leave us with similar approximate numbers, assuming relatively low (and probably realistic) mass ratios (Goswami 1983). We could get there faster if the spacecraft could get some gravity assists from passing by massive planets in our own Solar System, such as Jupiter. 
During such a pass, momentum is transferred between the planet and the spacecraft. The spacecraft gains momentum by speeding up, and the planet must then lose momentum, though the amount of momentum loss by the planet is insignificant and will not change the path of the planet in a measurable way. Alpha Centauri (for now, including Proxima) is not only the nearest star system besides the Sun but also the nearest with a planetary discovery and is a bit more than four light years (24 trillion miles) from Earth, and the next closest, Epsilon Eridani, is more than two times farther than that. Lawrence Crowell discusses other stars within about 20 light years of the Sun. Most such stars either do not have any detected planets (and thus would make less interesting targets) or are potentially unlikely to have habitable planets due to being M dwarfs, though as we see in Chapter 9, life in such star systems may be possible after all, perhaps even abundant (Crowell 2007). For more possibilities, we could always go further into the Galaxy and within 20–30 light years there are several more Sun-like stars with planets, but these aren’t very likely to be the first targets for space travel unless further observations show that they are the only stars to have Earth-like or otherwise habitable planets. Clearly, for practical interstellar travel, we’ll need much higher exhaust velocities. In 2013, Kite and Howard called for more research into the technologies (mostly funded through the Department of Energy) that would allow for a robotic probe to the Alpha Centauri planetary system (Kite and Howard 2013). They argue, for instance, that funding this probe could lead to a new, and presumably successful, focus on fusion research. 
Ben Zuckerman of UCLA replied to this article with his own letter to Physics Today, suggesting that there would likely be very limited public buy-in to interstellar travel of any kind unless the star system in question was known to be habitable, if not actually inhabited (B. Zuckerman 2014). A similar point was made a few years earlier by Lawrence Crowell in Can Star Systems Be Explored? (Crowell 2007). As Zuckerman points out, this would likely require a next generation telescope dedicated to planet finding, such as TPF discussed in Chapter 2 (which could be more than a decade off). In any case, we discuss progress on various technologies that can be used for interstellar travel below.
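
To see where the brute-force numbers quoted earlier in this section come from, here is a minimal sketch that uses the simple, non-relativistic rocket equation, in which the mass ratio is e raised to the power (final speed / exhaust velocity). Treating light speed non-relativistically is itself only a rough approximation; as noted above, the true requirement near light speed is even worse. The constants and function names are our own choices for illustration, and the 4.37 light year distance to Alpha Centauri is a rounded value.

```python
import math

C_KM_S = 299_792.458      # speed of light in km/sec
LIGHT_YEAR_KM = 9.4607e12 # kilometers in one light year
SECONDS_PER_YEAR = 3.156e7


def log10_mass_ratio(final_speed, exhaust_velocity):
    """Power of ten of m_full/m_empty, from mass ratio = exp(v / v_e).

    Working with the logarithm avoids overflowing floating point numbers.
    """
    return (final_speed / exhaust_velocity) / math.log(10)


def travel_time_years(distance_ly, speed_km_s):
    """Coasting time in years at constant speed (no acceleration phase)."""
    return distance_ly * LIGHT_YEAR_KM / speed_km_s / SECONDS_PER_YEAR


# Mass ratio needed to approach light speed with a 3 km/sec chemical exhaust.
exponent = log10_mass_ratio(C_KM_S, 3.0)

# Coasting to Alpha Centauri at two thousandths of one percent of light speed.
years = travel_time_years(4.37, 2e-5 * C_KM_S)

print(f"mass ratio is roughly 10 to the power {exponent:,.0f}")
print(f"travel time is roughly {years:,.0f} years")
```

Both results land in the same range as the figures in the text: an exponent in the tens of thousands, and a trip of a bit over 200,000 years.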

Rocketship X-M, the first Hollywood film about a trip to Mars. Depicted here are Dr. Lisa Van Horn (Osa Massen), Col. Floyd Graham (Lloyd Bridges), Harry Chamberlain (Hugh O’Brian), Dr. Karl Eckstrom (John Emery), and Maj. William Corrigan (Noah Beery, Jr.). Lippert Pictures Inc./Photofest.

The Angry Red Planet (American International Pictures, 1959), another early film attempt at depicting Mars, which in this version is full of carnivorous plants and rat-bat-spider-crab monsters. Depicted are the crew: Colonel Tom O’Bannion (Gerald Mohr), Dr. Iris Ryan (Naura Hayden), Professor Theodore Gettell (Les Tremayne), and Sam Jacobs (Jack Kruschen). American International Pictures/Photofest.

We should note, though, that even if we can achieve high exhaust velocities, we want to be careful not to accelerate too fast, especially if we are interested in human space travel. Typically, we will want to keep acceleration to about the same as Earth’s gravity so that the humans aboard won’t feel any extra strain on their bodies. This acceleration will then put an additional limitation on how quickly we could get to the nearest stars. For instance, even if a rocket had an exhaust velocity close to the speed of light, and taking into account a typical mass in fuel, it could take about two years to accelerate to greater than 99 percent of the speed of light (Crowell 2007). Adjusting the mass in fuel we wish to use, and allowing for either slightly lower or higher final speeds, can allow us to accelerate toward the speed of light a bit faster or slower. Practically speaking, though, we can’t do this in less than a year because we wish to avoid harmful accelerations, and we would not want to extend it past tens of years, both to avoid taking up too much of one astronaut’s life and to avoid the necessity of a generational ship. Considering the time dilation effects that we discussed in Chapter 1, it will probably be impractical to go much faster than about 85 percent of the speed of light. Once we get to higher speeds, the passage of time for someone on board the fast spacecraft will be dramatically different from that on Earth. Even for an unmanned probe, it seems unlikely that the public will get behind funding a probe that may not return any results for 100 years, even if from the perspective of the probe, it reaches the target star in just a few years. This problem is exacerbated with people. It seems unlikely that most human astronauts will be able to tolerate returning to an Earth on which almost everyone they knew, including their youngest relatives, would have likely died long before their return.
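
The acceleration limit and the time dilation effect described above can be estimated with the standard special-relativity formulas for a ship under constant acceleration as felt on board. The sketch below is idealized: it ignores fuel mass entirely, so it is only comparable in order of magnitude to the two-year figure cited from Crowell, and the function names are our own.

```python
import math

C = 299_792_458.0         # speed of light, m/sec
G = 9.80665               # one Earth gravity, m/sec^2
SECONDS_PER_YEAR = 3.156e7


def lorentz_factor(beta):
    """Time dilation factor gamma for a speed of beta times light speed."""
    return 1.0 / math.sqrt(1.0 - beta ** 2)


def ship_years_to_reach(beta, accel=G):
    """Years elapsed on board while accelerating from rest to beta * c."""
    return (C / accel) * math.atanh(beta) / SECONDS_PER_YEAR


def earth_years_to_reach(beta, accel=G):
    """Years elapsed on Earth during the same acceleration phase."""
    return (C / accel) * beta * lorentz_factor(beta) / SECONDS_PER_YEAR


for beta in (0.85, 0.99):
    print(
        f"{beta:.0%} of light speed: gamma = {lorentz_factor(beta):.2f}, "
        f"ship time = {ship_years_to_reach(beta):.1f} yr, "
        f"Earth time = {earth_years_to_reach(beta):.1f} yr"
    )
```

At one Earth gravity the natural time scale, c divided by the acceleration, is just under one year, which is why the acceleration phase cannot be shortened much below a year without subjecting the crew to higher accelerations. At 85 percent of light speed the clocks on board run slow by a factor of a little under two, and the gap widens quickly beyond that.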

One option that is already in development for small spacecraft is the ion drive, for which a beam of ionized atoms is created by a hot filament to be used as exhaust (Kaku, The Physics of the Impossible 2008). Though at present the thrust of ion drives is very low, the ion beam can operate for years (as opposed to the seconds or minutes of a chemical rocket), leading to a large change in momentum over the duration of the flight. Thus, though the push at any given moment is small, these drives are much more efficient at achieving a desired final velocity. This makes such drives poor for initial launch from a planet’s surface, but ideal for continuing on in space. We can then imagine a probe intended for the outer Solar System that is initially lifted into space by a chemical rocket, but then continues on with the ion drive (Crowell 2007). An alternate method for this type of drive is to ionize hydrogen and have the plasma guided out of the rocket as thrust using magnetic fields (Crowell 2007). Though ST:TOS (e.g., “Spock’s Brain”) occasionally discussed various spacecraft that ran on ion power, it seems unlikely that they were thinking of this type of ion power, since it can’t push a very high mass object very far. However, it is possible that they were thinking of a considerably more scaled up version that could have been used for certain maneuvers. Another possibility for fuel is the use of either fission or fusion reactions (as we discussed in Chapter 1, and which also applies to the Sun, as discussed in Chapter 2, and to weapons, as discussed in Chapter 8). As we saw in Chapter 1, controlled nuclear fusion is very difficult to achieve, so at first much consideration was given to nuclear fission rockets, since fission reactors had already been developed. The idea was to use fission reactions to heat up hydrogen gas that could then be used as an exhaust gas to propel the spacecraft.
As we have seen, in nuclear fission, an atomic nucleus is split to result in a new atom and a tremendous amount of energy as well as other resultant particles such as neutrons. However, many safety issues plagued these early projects. Chief among them was the possibility of the reactants going into an uncontrolled chain reaction (a bomb, essentially). Nuclear fission propulsion was revived during early discussions of a manned mission to Mars, but it was eventually cut from the plans. During the late 1950s, serious consideration was given to another type of nuclear rocket, and one such project was Project Orion (G. Dyson 2002). Though the original scope of the project was to stick to interplanetary travel, one of the top scientists working on the endeavor, Freeman Dyson, now of the Institute for Advanced Study, has published work on a potential scaled-up version that could go to the nearest stars (F. Dyson 1968). In principle, it is possible to accelerate a rocket to about one-tenth of the speed of light using nuclear fission and fusion explosions (from bombs already in stock, or similar ones produced for this purpose). In a fusion reaction, two atomic nuclei combine to create a new heavier element and energy. Some reactions are much more efficient at producing energy than others, and furthermore, some only occur under very extreme conditions (ultra-high temperatures and densities). Dyson’s idea was to use the shock waves produced by nuclear bombs to drive the starship. A great advantage of this design is that it makes use of an energy source that we already possess. However, as Dyson explains, nuclear bombs are great at releasing fantastic amounts of energy, but not comparably fantastic amounts of momentum. In the end, a “pusher plate” was designed that could absorb much of the momentum from the explosion by purposely directing a jet of gas from the explosion toward the pusher plate.

However, a major concern in planning such a spacecraft was figuring out how it could survive numerous nuclear explosions. Certainly, if two cities (Hiroshima and Nagasaki) had been devastated by 15-kiloton nuclear weapons, how could a spaceship survive a one-megaton explosion, let alone a series of them? The answer is in the design of the outer hull of the ship. One idea would be to cover the hull of this ship with a material that can absorb the energy well. If the outer hull can absorb heat well (without melting) it can survive such explosions. However, it would probably take billions of kilograms (millions of tons) of copper to do so (and that is just the outer part of the ship). For comparison, a full Space Shuttle had a mass of about one million kilograms. Perhaps a better solution would be to cover the hull with a thinner substance that can slowly ablate after each explosion. This would protect the ship without adding so much mass. In fact, this was the basic idea behind heat shields in spacecraft such as the ones used in the Apollo program. Still, this spacecraft is ultimately limited by the fact that it is a traditional reaction rocket that must carry along the fuel, and would become far too unwieldy for going to any but the nearest stars. However, the greatest disadvantage of this design is that the Nuclear Test Ban Treaty would not allow us to test these weapons for use in a spacecraft, and thus the idea has long been abandoned. Additionally, this program and other alternative ideas for space travel became victims of the success of the chemical rocket program that was already making significant progress in getting us toward the Moon. Even the effort towards the Moon was known to be complex and expensive, and not much credence was given to alternative ideas that would add their own complexity, and of course, expense. 
Nonetheless, in the 1970s the core idea behind the Orion Project was revived by the British Interplanetary Society in the form of the Daedalus Project. Though only ever studied conceptually, the Daedalus concept replaced bombs with fusion pellets. Unfortunately, the type of fusion reaction proposed, that between deuterium and Helium-3, though possible theoretically, has never been achieved. Furthermore, the sheer amount of Helium-3 needed could only be found by “mining” the atmosphere of Jupiter first (Matloff 2012). These difficulties prevented the project from really getting off the ground. However, this study did show how much more efficient a spacecraft such as Orion could be if it were to be updated with cutting edge (or beyond cutting edge) energy sources (Kondo 2003). In 2009 the BIS started an updated version of Daedalus called Icarus (Icarus being the mythological son of Daedalus) that would include, among other things, updated fusion technology (though no one process has been selected, it is unlikely to involve Helium-3, since the collection of rare Helium-3 from the gas giants was one of the main aspects that led to the Daedalus project’s demise) and better scientific return for the proposed mission (Obousy 2012). The 2014 Syfy mini-series, Ascension, starts with the supposition that an atomic rocket of this variety (named Ascension) actually secretly was sent out to the nearby star, Proxima Centauri, in the 1960s on a 100-year mission as a generational spaceship (the concept of which is discussed further below). A twist is eventually introduced that reveals that Ascension never left Earth, and the pretense of a generational spaceship was being kept up (at least to the inhabitants) as a social experiment. 
This sort of plot twist (of “the space flight was all really just an experiment” variety) has a long history in science fiction, such as in The Outer Limits episode “Nightmare” (one of the few episodes that were redone as part of the 90s reboot of this series).

Another related idea is to create a nuclear fusion reactor that scoops up hydrogen from space as its fuel, thus obviating the need to bring fuel along for the ride. This concept was first proposed by Robert Bussard in 1960. This drive system is featured in Poul Anderson’s 1970 novel, Tau Zero, in which a ship of this type loses its ability to decelerate on its way to the relatively nearby Beta Virginis star system. A similar idea is alluded to in several Star Trek episodes, for instance the ST:TNG episode “Night Terrors.” At the end of this episode, the crew must release hydrogen into space to start a reaction that will free an alien species. Initiating this release, Data states “activating Bussard collectors.” At first glance, the implication is that the Enterprise would use normal hydrogen collected from space in its fusion reactors. However, we know from the Star Trek: Voyager (ST:V) episode “Unforgettable” that the collectors are also used for deuterium (though we’ll soon see that in reality, this would be far-fetched). Though the Bussard “scoop” solves one problem, it creates others. Firstly, an extremely large scoop would be needed. Using his original assumptions about the density of hydrogen in interstellar space and the speed of the spacecraft, Bussard determined that a scoop 100,000 km² in area would be needed. However, since that time, it’s been discovered that the density of hydrogen within a few light years of the Sun is much lower (perhaps by more than a factor of 100) than the average for the Galaxy (Cox and Reynolds 1987), which is the number that Bussard used and is widely quoted in texts (Spitzer 1978). It’s likely that this local cavity was created by a supernova explosion in the vicinity of the Sun about one to ten million years ago. The lower local density would clearly require an even more immense scoop. An even larger scoop would be needed for the rarer isotopes of hydrogen, such as deuterium, or for other gases, such as helium.
Perhaps one way around this is to purposely ionize the hydrogen atoms in the path of the ship, and then use a magnetic field as a scoop. However, this process may end up taking in more energy than it delivers. In addition, the direct fusion reaction of a proton with another proton is not very efficient, so we would have to make up for this deficiency by being able to collect more and more hydrogen from space. Furthermore, though fusion research has continued for decades (primarily through research in thermonuclear tokamak reactors, inertial confinement, and beam confinement), we have not yet achieved any kind of controlled fusion reaction on Earth for more than a fraction of a second, and none yet can produce more energy than it takes in, so it will be some time before we make use of controlled fusion reactions in space. Despite this fact, several science fiction television series have relied on nuclear fusion reactions to power starships. In Lost in Space, the interstellar spacecraft Jupiter 2 is described as running on “atomic motors” and possessing “reactor chambers,” and perhaps a fusion concept is what the writers were embracing, though few coherent details are given throughout the series, and conflicting details have been presented in fan publications in more recent years (though some do indeed at least mention “fusion reactors”). Similar concepts are introduced for the “impulse” engines of Star Trek (the engines used on maneuvers that don’t require exceeding the speed of light). The Ark of The Starlost was supposed to have been driven by a controlled fusion reactor as well.

Naively applying the rocket equation, if we wanted to get to a final velocity that is 99% of the speed of light, and we could attain an exhaust velocity of about one tenth of the speed of light using a nuclear pulsed rocket like that discussed above, we would need a fuel supply that is about 20,000 times the mass of the rocket and payload. This would be prohibitive, especially if we wanted a massive payload, such as a band of intrepid space explorers and all of their equipment. Even so, it’s only about 20 times the mass ratios used for the Moon landings (though these started with huge rockets full of fuel, eventually shed in stages, and then ended with the comparatively tiny capsule of the Apollo spacecraft landing in the Pacific Ocean). In reality, though, the mass ratio problem is even worse than that stated above. Including the effects of special relativity, which we must do once something moves at speeds within a few percent of the speed of light, the amount of fuel needed would really be about 320 billion times the mass of the rocket and payload.¹ As a rocket (or anything else) accelerates closer and closer to the speed of light, the amount of energy needed for even small increases in speed becomes exorbitant. The problem is exacerbated even more for interstellar flight, since we would likely need to decelerate the rocket at its destination, then speed back up (and once again slow down) to get back home. Without showing further calculations here, it is important to note that even modest increases of the exhaust velocity will lead to huge savings in fuel, as would lowering the final velocity a bit. The largest sacrifice in lowering the final velocity would be in adding total time to the voyage.
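The two mass ratios above can be checked with a short Python sketch. It assumes the textbook forms of the rocket equation: the classical Tsiolkovsky relation, in which the final speed equals the exhaust speed times the natural logarithm of the initial-to-final mass ratio, and the standard relativistic version, in which the mass ratio is ((1 + β)/(1 − β)) raised to the power c/(2vₑ), where β is the final speed as a fraction of the speed of light. The function names are ours, and the calculation in the source's own footnote may be set up differently.

```python
import math

def classical_mass_ratio(beta_final: float, beta_exhaust: float) -> float:
    """Initial/final mass from the Tsiolkovsky equation (speeds in units of c)."""
    return math.exp(beta_final / beta_exhaust)

def relativistic_mass_ratio(beta_final: float, beta_exhaust: float) -> float:
    """Initial/final mass from the relativistic rocket equation (speeds in units of c)."""
    return ((1.0 + beta_final) / (1.0 - beta_final)) ** (1.0 / (2.0 * beta_exhaust))

beta_final = 0.99    # target speed: 99% of the speed of light
beta_exhaust = 0.10  # exhaust speed: one tenth of the speed of light

print(f"classical:    {classical_mass_ratio(beta_final, beta_exhaust):.3g}")
print(f"relativistic: {relativistic_mass_ratio(beta_final, beta_exhaust):.3g}")

# Lowering the target speed a bit or raising the exhaust speed cuts the ratio sharply.
print(f"relativistic, 0.90 c target:  {relativistic_mass_ratio(0.90, beta_exhaust):.3g}")
print(f"relativistic, 0.20 c exhaust: {relativistic_mass_ratio(beta_final, 0.20):.3g}")
```

The classical result comes out near 20,000 and the relativistic one near 3 × 10¹¹, the same order as the figures quoted above. The last two lines illustrate how sensitive the ratio is to modest changes in either the final velocity or the exhaust velocity.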

The ultimate solution may be to use anti-matter, since the reaction of matter with anti-matter is the most efficient known reaction, and is thus able to produce generous amounts of energy from small amounts of fuel. Thus, for similar mass ratios as with chemical rockets, we would be able to start with much higher exhaust velocities and end with velocities approaching the speed of light. The reaction between protons and anti-protons is known to create energetic particles called pions, and these could potentially be used as the exhaust for a matter/anti-matter rocket (Forward 1986). Alternatively, the high energy light itself created in such reactions can be used as exhaust, or perhaps used to heat a gas that can be used as exhaust. A major disadvantage of this method, even though it is extremely efficient, is that anti-matter is extremely rare and is currently created only as a by-product of high energy particle experiments. Furthermore, anti-matter is generally very rare in the Universe. Though there are some locations in our Galaxy where anti-matter might be more abundant, none are close to the Earth, so they would not be feasible sources of anti-matter. Another disadvantage of this method is similar to the problem we would have had in using nuclear weapons to fuel the ship. That is, if we use this drive when on or close to a planet, we could do tremendous damage to life there (or even to the crew, if we aren’t careful). It has been suggested by Charles Adler, among others, that this could be turned into an advantage, if the drive of the ship were used as a weapon against threatening foes (Adler 2014). We discuss similar subjects further in Chapter 8. Michael O’Brien’s recent novel Voyage to Alpha Centauri focuses on the first manned mission to Alpha Centauri, made possible by the discoveries of (fictional) Nobel prize-winner Neil de Hoyos, which make the use of anti-matter in the spacecraft engines more practical. Anti-matter has made a journey to Alpha Centauri of nine years possible.
As pictured here, the spacecraft is immense, with nearly 1,000 crew/passengers, including de Hoyos.

Instead of using a reaction rocket, we could think of other means of propulsion. One possibility is the use of a solar sail made of a very thin aluminum sheet (Rogers 2008). That is, pressure from light of the Sun can act against a large deployed sail to push the craft into deep space. In some sense, this is still dependent on the conservation of momentum, since the momentum of the light is being transferred to the sail. However, it is fundamentally different from a traditional reaction rocket because fuel is not being carried along for the ride. This is the same process that leads to dust tails of comets being pushed away from the direction of the Sun as the comet orbits. In addition, there are several possible sail shapes that would have advantages, such as being more easily deployable. Since sunlight would greatly weaken as the spacecraft goes to the outer Solar System and beyond, solar light would not be a reliable means of propelling the spacecraft to another star system. However, in the future, such technology could be extended to the point at which powerful lasers could be set up on the Moon to help accelerate the sail to a large fraction of the speed of light. Such a sail is featured in Stephen Baxter’s novel Proxima. However, the sail itself needs to be extremely large (hundreds of miles across), the lasers need to be thousands of times more powerful than the current power usage of the entire Earth, and with no current means of getting to the Moon (though we had the capability 45 years ago), this project will certainly have to be put off for decades. An additional problem is that the spacecraft would need to decelerate and then re-accelerate as it approaches another star system. This would require having a similar sort of laser set up in the other star system, which of course is not possible if no other craft have been there before! One possible solution is to focus light from a laser on our Moon onto the spacecraft even up to a few light years away. 
This is considerably easier said than done, since this would require building a lens larger in diameter than the Sun, with the mass of a large asteroid. On the bright side, efforts such as this can be scaled down if we make the spacecraft as small as possible (such as for a stellar probe, instead of a manned spacecraft). Also, in more modest form, the craft could be a method for reaching trans-Neptunian objects (TNOs) at hundreds of astronomical units from the Sun without taking decades (the New Horizons probe got to Pluto, itself classified as a TNO, in nine years). Though there have been some successful tests of deployment of solar sails, none have resulted in a craft that was actually propelled by a sail. Yet, because development of the craft itself is a matter of improving upon current technology, and because the energy requirements, though extremely large, are not as large as those required by other means of interstellar travel, this might be one of the more hopeful ideas for getting to another star system. Another possibility for “rocketless rocketry” (a term coined by Robert Forward) would be a magnetic rail gun. A rail gun can be created by sending a large current through conducting rails. The rails then set up a very strong magnetic field that can then apply a force to a metallic object, acting like a gun. Velocities with known rail guns are great enough to potentially send an object into orbit around the Earth. Though the idea is very enticing since the object of interest can be accelerated at Earth, this leads to some difficulties as well. The biggest problem is that a rail gun does most of its accelerating over a relatively short distance (and short period of time), and thus the experienced “g” forces will be too great for a human to withstand. However, a completely inanimate probe might be able to survive this more readily. A related problem is that when objects coming out of a rail gun hit the air, they become deformed. 
If a rail gun were used as a means for accelerating a spacecraft, we could perhaps place it in space or on the Moon to avoid this problem. To go to another star system, however, would take an enormous space-based rail gun that would accelerate its payload at about 5,000 g. Since no human could survive this sort of acceleration, this mode of transportation, at least for now, would be reserved for the hardiest of probes (Kaku, The Physics of the Impossible 2008).
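To get a feel for what an acceleration of 5,000 g implies, the following Python sketch applies simple constant-acceleration kinematics: the launch time is the final speed divided by the acceleration, and the track length is the final speed squared divided by twice the acceleration. The two target speeds are illustrative assumptions of ours, not figures from the sources cited above, and the sketch ignores relativistic corrections, which are small at one tenth of the speed of light.

```python
G_EARTH = 9.80665          # standard gravity, m/s^2
C_LIGHT = 299_792_458.0    # speed of light, m/s

def launch_time_s(final_speed_m_s: float, accel_in_g: float) -> float:
    """Time needed to reach final_speed at a constant acceleration given in g."""
    return final_speed_m_s / (accel_in_g * G_EARTH)

def track_length_m(final_speed_m_s: float, accel_in_g: float) -> float:
    """Distance covered while reaching final_speed at constant acceleration."""
    return final_speed_m_s ** 2 / (2.0 * accel_in_g * G_EARTH)

# Hypothetical target speeds chosen for illustration only.
targets = {
    "low Earth orbit speed (about 7.8 km/s)": 7.8e3,
    "one tenth of the speed of light": 0.1 * C_LIGHT,
}

for label, speed in targets.items():
    t = launch_time_s(speed, 5000.0)
    d = track_length_m(speed, 5000.0)
    print(f"{label}: {t:.3g} s of acceleration over {d:.3g} m")
```

Under these assumptions, orbital speed is reached in a fraction of a second on a track several hundred meters long, while one tenth of the speed of light requires roughly ten minutes of acceleration along a track millions of kilometers long, which is why such a device would have to be built in space.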

We might be able to approach this problem from another direction. That is, instead of being concerned completely with the nature of the fuel and the exhaust speed or even using a sail as described above, we can focus on making the vehicle and payload very small. Frank Tipler and Michio Kaku separately discuss the possibility of these nano-ships. Tipler shows that an anti-matter powered nano-ship could accelerate to one tenth the speed of light and coast to a nearby star with less than 2 kg of exhaust gas and less than 4 milligrams of anti-matter (Tipler 1994). The focus here has to be on making a very small device very useful, because if it cannot relay information back to us, then it will be useless. We have to keep in mind here that even just to send signals back to Earth (or to receive such signals) would require a large radio dish, so communications would not be possible with a very small probe (at least not an independent one). To mitigate such problems, as many molecules as possible have to be directly related to the functioning of the probe. Another focus of these nano-ships is to get them to be self-replicating. In this way, we could potentially indirectly create a much more effective probe, or set of probes, by making a small number of self-replicating nano-ships. The new nano-ships can then take on either very similar functions, or be programmed to take on new functions. However, there are additional problems with nano-ships. The primary problem is that they can be easily deflected off course.

Broadly stated, Mach’s Principle essentially states that the mass distributed through the Universe influences the inertia of any other individual mass (say, for instance, a rocket launching here on Earth). In this sense, the principle has been absorbed into general relativity, and as stated in Chapter 1, we know that the geometry of space is dependent upon the distribution of mass in the Universe, and, this in turn will affect any other individual mass in the Universe. However, the details of Mach’s Principle, as calculated in general relativity say a bit more than this general statement. Essentially they would lead us to conclude that the mass of the rest of the Universe would lead to any other individual mass feeling just a little bit of resistance to any other force applied to it. In engineering terms, the rest of the Universe out there can lead to extra thrust on an object here. But is this a “real world” effect? Calculating the precise effect on an individual extended object (that is, a real life object and not just a “point mass”) is complicated. However, James Woodward has determined that there can be small, but measurable Mach effects on objects the size of typical desktop lab equipment. Confirming this effect, and then scaling up by factors of up to one million for practical use as a rocket thruster may take decades or more, but it’s encouraging to know that reconsidering “old physics” for practical engineering usage in rockets could lead to alternatives to either chemical rockets or some of the other long considered alternatives mentioned above that have not come to fruition (Woodward 2013).

Recently there have been tests at NASA’s Johnson Space Center of a resonant cavity thruster. The device works by bouncing microwaves around in a cavity. In tests it has shown a very small thrust of about 40 micronewtons. As with the thruster described in the previous section, these forces are about one millionth of that experienced by a book feeling the Earth’s gravity. The investigators admit to not having an understanding of the physical effects that might be causing the thrust, though they do appeal to the possibility that it is related to a “quantum vacuum virtual plasma.”

Just as traveling near to the speed of light can change how we measure both time and spatial dimensions, there are other effects that will have direct consequences on how we perceive light coming from stars. Both the color of light and the direction from where the light is originating will be highly distorted if the observer (an astronaut aboard a spacecraft) is approaching the speed of light. For stars in front of the ship, light will be blue-shifted, meaning that the stars will appear to be bluer than they actually are. For the stars in back of the ship, light will be red-shifted, thus these stars will appear to be redder than they actually are. The effect on measured direction of the light is called aberration, and leads to the angle between the ship’s direction and the direction of the starlight being compacted toward the direction of motion of the ship. This effect is described in detail in Poul Anderson’s 1970 novel, Tau Zero, and more recently in Peter Cawdron’s Galactic Exploration in which several ships traveling near to the speed of light explore separate parts of the Galaxy. An opposite effect will occur in the direction of the rear of the ship: stars’ light will appear to be coming from well away from the rear direction of the ship. Though these effects may seem extreme and hard to overcome, any navigation system controlled by computer can carefully take these effects into account and properly navigate the ship. However, as the spacecraft accelerates, it would be extremely difficult for an astronaut to make any attempt to recognize individual stars or constellations with the unaided eye (Goswami 1983).
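Both effects can be put into numbers with the standard special-relativity formulas. In the minimal Python sketch below, theta is the angle between the ship’s direction of travel and the direction to a star as measured in the rest frame of the stars (0° is dead ahead, 180° is directly behind). The apparent angle seen from the ship satisfies cos θ′ = (cos θ + β)/(1 + β cos θ), and the observed frequency of the light is multiplied by γ(1 + β cos θ). The function names and the sample speed of 99 percent of the speed of light are our own choices for illustration.

```python
import math

def apparent_angle_deg(theta_deg: float, beta: float) -> float:
    """Angle from the direction of travel at which the star appears on the ship."""
    cos_t = math.cos(math.radians(theta_deg))
    cos_app = (cos_t + beta) / (1.0 + beta * cos_t)
    cos_app = max(-1.0, min(1.0, cos_app))  # guard against rounding error
    return math.degrees(math.acos(cos_app))

def doppler_factor(theta_deg: float, beta: float) -> float:
    """Observed/emitted frequency: above 1 is a blue shift, below 1 a red shift."""
    gamma = 1.0 / math.sqrt(1.0 - beta * beta)
    return gamma * (1.0 + beta * math.cos(math.radians(theta_deg)))

beta = 0.99  # illustrative ship speed as a fraction of the speed of light
for theta in (0, 45, 90, 135, 180):
    print(f"star at {theta:3d} deg: appears at "
          f"{apparent_angle_deg(theta, beta):6.2f} deg, "
          f"frequency x {doppler_factor(theta, beta):.3f}")
```

At this speed, a star that lies at 90° to the ship’s path in the rest frame of the stars appears only about 8° from the direction of travel, and light from dead ahead is raised in frequency by a factor of about 14, while light from directly behind is lowered by the same factor.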

Perhaps the most dramatic solution of all is that of faster-than-light travel. This sort of method is casually mentioned in such classics as Star Wars and Battlestar Galactica and, in much more detail, in the various incarnations of Star Trek. Though often posited as impossible by physicists, this is only strictly true if an object itself is moving faster than light within local space. However, faster-than-light travel with respect to the global surroundings (the very distant Galaxy) might be possible if it is local space itself that is changing in order to get a starship from point A to point B. One benefit of this kind of faster-than-light travel is that it avoids time dilation. That is, time won’t seem to be slowed down for the crew, as compared to the outside world, which is quite important for a show such as Star Trek, as the crew does not want to receive an emergency order to go to a very distant planet, potentially to save lives, only to find out that everyone involved in the crisis had died decades or even centuries ago. A potential real life version of the warp-drive of Star Trek was first theorized by Miguel Alcubierre in 1994. Before we can understand the details of Alcubierre’s theory, we must first understand some concepts behind Einstein’s General Theory of Relativity. One primary idea is that a particular distribution of mass and energy will dictate the geometry of space around it. Perhaps the most famous case of this is a black hole, which will create a deep “well” of space around a very dense concentration of matter. However, any mass at all will distort space and time to some degree. In the Alcubierre theory, space is warped in a fashion that allows travelers to move faster than light if they remain in a “warp bubble.” Space contracts ahead of the ship in this bubble, and expands behind it. However, there are a number of important drawbacks. Firstly, the warp bubble can only be created by negative matter or energy.
Negative matter would be unusual indeed, and it is not known if any exists anywhere in the Universe. Negative energy only exists in very small quantities as a result of the Casimir Effect. The quantity of energy needed to create these bubbles is enormous and you have to have these bubbles created in advance of traveling. Therefore, it seems that “on demand” warp drive would be difficult to manage (Krauss 1995). However, this remains a very interesting case of how science fiction helped to inspire a theoretical physicist to come up with valid equations that could explain Warp Drive and faster-than-light travel, even if it is not practical due to vast energy requirements.

Another related concept that could also help with space travel in the far future is that of wormholes. The original theorizing of wormholes goes back to Einstein himself, and his student Nathan Rosen. In studying the general relativity of black holes, they realized that a natural result would be a “bridge” to another part of the Universe (or possibly another Universe entirely) where a “white hole” could open up. It was soon realized that nothing manmade could make this journey, since the tidal forces at the black hole would become infinite and rip apart any person or spacecraft as the black hole is approached. Over time, however, interest in wormholes increased as gravity theorists wondered whether it was possible to create a wormhole that would not necessarily destroy a space traveler. As the story goes, Carl Sagan was looking for a way to get a character in a novel he was writing (Contact) to take shortcuts through space. His original idea was to somehow make use of black holes and Einstein-Rosen type wormholes. However, when he talked to a theoretical physicist friend, Kip Thorne of Caltech, it became clear that tidal forces near the black hole would probably kill Sagan’s protagonist before she got anywhere else, and a more hopeful avenue would be a different type of wormhole (Thorne 1994). Wormholes are essentially shortcuts through space that could take matter from one point in the Universe to some other point, possibly many light years away from the first wormhole mouth. Like the warp bubbles discussed earlier, stable wormholes can only exist as a result of configurations of negative matter or energy. Therefore, they will meet with the same difficulties: finding negative energy or matter in the first place, and finding vast amounts of it. We will revisit wormholes in more detail when we discuss time travel in a future chapter. However, this is yet another interesting case of how research in theoretical physics was directly inspired by science fiction.

The world of science fiction has offered some potential solutions to work around these problems of space travel in the future, and some seem promising, in the sense that they are allowed by physical laws, but are likely still far too costly in terms of energy. A workaround that was introduced early in science fiction is that of cryogenic suspended animation (sometimes also called cryonics), that is, the slowing down of metabolism by dramatically cooling the human body. Probably the earliest example in film is from the 1954 film Gog, which has numerous items we’ll be discussing in future chapters. The film is set in a testing facility for a future space station. Among the experiments is one testing the “freezing” of astronauts, to be used in space. In fact, one of the first unexplained deaths that occur in this film is one of the scientists running this experiment. Mark Glassy discusses two early films that dealt solely with the topic of cryogenic suspended animation, The Man with Nine Lives and Frozen Alive, both addressing the topic of re-animating people after they have been frozen (Glassy 2001). Another early example from television is Science Fiction Theater’s “The Long Sleep” episode (Ivan Tors was the producer of both Gog and Science Fiction Theater), in which a doctor experiments on inducing hibernation in non-hibernating animals. This doctor is forced to apply his technique to a dying child in order to save him. One early example is in the British sci-fi marionette series from 1963, Space Patrol (this was called Planet Patrol in the U.S. to avoid confusion with a series that had the name Space Patrol already). They used what they called “freezing” (suspended animation) as a way to avoid excess accelerations during interplanetary travel (this has some similarities to the laser stasis field used for similar purposes in the 1956 film, Forbidden Planet). 
In the 1964 Twilight Zone episode “The Long Morrow,” an astronaut is sent on a 40-year mission to another star system (and back). He is placed in suspended animation to help prevent aging during the trip. In the end, he purposely comes out of suspended animation early so that he will be the same age as the woman he fell in love with just before the mission. However she, also thinking of the age difference upon his return, purposely went into hibernation on Earth to reduce her aging (in this sense, it’s a science fiction re-working of the “Gift of the Magi”). “Freezing” is also a key element in the 1965 pilot episode of Lost in Space and was returned to from time to time during the series. The intention was to put the entire family in suspended animation during what was to be a five-year voyage to a planet orbiting Alpha Centauri, one of the closest stars to Earth. The Robinson family in Lost in Space only stayed in suspended animation for a short time before their mission was derailed by a saboteur, Dr. Zachary Smith, but the “freezing tubes” were used briefly in other instances, such as to reduce the effects on the human body during hard landings. A similar “freezing” device was used by the astronauts in the original Planet of the Apes (and led to one crew member dying when her freezing compartment was breached). Another type of suspended animation using drugs during a six-week space mission was implemented in the film Journey to the Far Side of the Sun. Similarly, an “eternity drug” was used for this purpose in the aforementioned short story, “Far Centaurus.” In one way or another, this made it into many different science fiction series, including ST:TOS in the episode “Space Seed” that introduces Khan, Kirk’s nemesis who reappears in the second Star Trek film as well. In The Starlost episode “Lazarus from the Mist,” an engineer is revived from suspended animation to potentially help in getting the Ark back on course. 
However, it turns out that this engineer had a fatal disease, and would be terminal upon revival. In addition, he has no knowledge of nuclear reactors that would be needed to fix the Ark. In another space-ark themed episode, the Doctor Who episode “The Ark in Space,” a space station orbiting the Earth has kept a large number of personnel frozen over time. In the Space: 1999 episode “Earthbound,” we see that an advanced species, the Kaldorians, have developed a very advanced form of suspended animation that can preserve the full essence of a person’s soul and body. In a later episode of this series, “The Exiles,” one of many capsules suddenly orbiting the Moon is taken in for inspection. Upon opening it, they realize that the capsule contains a humanoid (named Cantar), apparently frozen. They are successful in reviving him and find out he is from the nearby planet Golos, having been exiled for political reasons 300 years prior. They then go out to retrieve his wife, Zova. It is soon realized that they were actually exiled violent criminals from Golos. In Space: 1999 there were also similar plots involving reviving aliens who were long ago placed in a form of suspended animation (always turning out to be more than they bargained for), such as in “The Mark of Archanon” and “The End of Eternity.” As part of the premise of Buck Rogers in the 25th Century, the titular character becomes accidentally thrown into suspended animation when his spacecraft gets thrown into a distant orbit of the Sun and he becomes frozen, only to be thawed out hundreds of years later.

For decades, glycerol (glycerin) has been used in biological and medical research to protect tissue during cryogenic preservation. Ethylene glycol, commonly used as an automotive antifreeze, is a very similar compound. Both act to inhibit the growth of ice crystals as water cools. Some research with mammals has been conducted, and there is some evidence that people may be able to survive if their body temperature is brought below freezing. Such research has called for dramatic procedures, such as replacing blood with a special fluid that acts like a natural antifreeze. Some of these animals were revived successfully (Kaku, The Physics of the Impossible 2008). A major problem is in seeing that everything from internal organs to skin is kept from forming ice crystals, as this will destroy the tissue (Glassy 2001). In the future, this may be a way to prolong the life of those who have inoperable or otherwise incurable conditions (though probably not for years, as portrayed in fiction). The 1973 comedy Sleeper featured an ordinary man, Miles Monroe (Woody Allen), who is frozen for 200 years after an unsuccessful operation and then revived. Though treated in comic fashion, the film does show the difficulty such a person would have in adapting to society upon coming out of this kind of hibernation. Not surprisingly, such techniques could also be abused. For instance, in the Twilight Zone episode “The Rip Van Winkle Caper,” three criminals steal gold and then wait out 100 years in suspended animation, hoping that there will be no one left interested in capturing them once they awake (they forget to take into account the possibility that gold will completely devalue in that amount of time, which is precisely what happens).

A second popular workaround, discussed at length by Simon Caroti, is the intergenerational starship or “space ark” (Caroti 2011). On television, this concept was most famously presented in The Starlost. With Ben Bova as science adviser, there were some initial attempts to bring serious science into the story. For instance, it was understood that a nuclear fusion reactor would be necessary to power the ship and that the reactor would get its nuclear fuel from hydrogen in space. Unfortunately, extreme budget cuts led to a much more stripped-down version of what was originally intended, and attention to these sorts of scientific details was severely compromised. A considerably more effective presentation of a similar story was given in Space: 1999’s episode “Mission of the Darians.” In this episode the crew of the ship had suffered a nuclear accident and was faced with the dilemma of how to survive without a sufficient food supply. The survivors resorted to a form of high-tech cannibalism, converting their mutant compatriots to energy and basic proteins (Muir, Exploring Space: 1999 1997). A more updated version is presented in the web-based series, “The Ark.” Though the space ark is perhaps the easiest way to circumvent low ship speeds, it introduces a host of other problems. The primary concern, as with most other forms of interstellar travel, is that this would require large amounts of energy. In fact, Simon Caroti, quoting the result of Frank Drake’s calculations, shows that a 100-year journey to a star within about 10 light years, and with 100 crewmen, would use as much energy as a major country would in 100 years (Caroti 2011). This is thousands of times more energy than a typical nuclear power plant could possibly create, so clearly we would need a very efficient energy source. Another issue is that it may not be worth it to send out such a slow ship when, over such a long period, human progress is likely to lead to a much more efficient technique. This exact problem is the premise of the classic 1944 A. E. van Vogt short story “Far Centaurus,” in which the crew of a 500-year mission gets to Alpha Centauri, only to find out that many generations of people from Earth have set up colonies on all the planets of the Centaurus system of stars (there is a similar turn of events in the Twilight Zone episode “The Long Morrow”). Though the crewmen in some sense are treated like mythological figures, they are not able to function in the much more advanced society. Some of these themes and plot lines are further explored in van Vogt’s novel, Rogue Ship. A concern that is often introduced as a plot device in science fiction is that people living over many generations in such an intergenerational spacecraft may lose knowledge of how to navigate and repair the ship. In fact, in many plot lines, such as in Heinlein’s Orphans in the Sky, Brian Aldiss’ Non-Stop, Ellison’s Phoenix without Ashes (the story behind television’s The Starlost), and the ST:TOS episode “For the World Is Hollow and I Have Touched the Sky,” inhabitants forget they are on a ship at all! In the latter case, the ship was disguised as a habitable asteroid. In the aforementioned mini-series, Ascension, the twist is nearly the opposite of this: the crew thinks they are on an intergenerational space ark, but are actually all participants in an Earth-based experiment, and the spaceship is a fiction that has been perpetrated for decades.

Peter Cawdron had a different purpose for generational ships in his Galactic Exploration novellas. Here, because extraterrestrial civilizations are discovered to be quite rare, one must go on extragalactic voyages to find intelligent life. Even when traveling near the speed of light, a voyage to the Andromeda Galaxy is so long that it requires a number of generations to act as crew (there was a similar concept in the ST:TOS episode “By Any Other Name,” in which the Kelvans, from Andromeda, appropriate the Enterprise to take it back there to help in their colonization efforts).

Since humans have to breathe oxygen, and that oxygen has to be at an acceptable pressure, temperature and density, we have to create those conditions artificially wherever they do not already exist. In early space travel, as is true today, the main concerns were with the compartments of small spacecraft, as well as with space suits. The primary concept of a space suit is to inflate an airtight suit to atmospheric pressure (or some reasonable fraction at which humans can survive) so that the astronaut won’t be exposed to the vacuum. In addition, the suit must protect from extremely high and low temperatures, micrometeorites, and blinding light. The space suit matured from earlier developments in pressure suits for high-altitude airplane travel, with the first inventions coming out of necessity between World War I and World War II as planes needed to go to increasingly higher altitudes (Thomas and McMann 2006).

The space suit as depicted in science fiction has been discussed at length by others, including Westfahl (2012) and Gooden (2012). An early Ray Bradbury short story, “Kaleidoscope,” depicts astronauts thrown into space with only their space suits after their ship is torn open in an accident. The astronauts slowly die off as they run out of oxygen and then burn up in the atmosphere. This story seems to have influenced a scene from the Space: 1999 episode “War Games,” in which Commander John Koenig is ejected from his Eagle spacecraft, alone and dying in just his space suit, left to think for an hour and a half about his existence. However, most depictions of space suits were fairly crude for several decades of film. Even the television era only saw minor improvements in realistic depictions. For instance, the many space walks of Lost in Space never saw the crew wearing a true pressure suit. As Westfahl in particular points out, the turning point in the realistic depiction of space suits came soon after television coverage of the U.S. space shots. For instance, the space suits of 2001: A Space Odyssey (released in April 1968) were more realistic than those that came before.

In early space missions, for use within the capsules, oxygen under pressure was brought up in canisters. For later missions, such as the ISS, oxygen is created via electrolysis of water, which is made of both hydrogen and oxygen. However, these methods both depend on supplies brought from Earth (Rogers 2008). Clearly, this could be a problem for future space travel that may extend into the farther reaches of the Solar System, or even deep space. The early science fiction television series, Fireball XL5, though intended mainly for children, tried to solve this problem by having the astronauts take “oxygen pills” with no attention to how the astronauts would deal with pressure and temperature differences in space without pressure suits (though the thought of oxygenating astronauts’ blood has been given serious study).
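To give a rough sense of why electrolysis still ties a crew to supplies from Earth, the chemistry can be sketched in a few lines. Splitting water follows 2 H2O → 2 H2 + O2, so the mass of water needed per kilogram of oxygen follows directly from the molar masses. In the sketch below, the daily oxygen requirement per person (about 0.84 kg, a commonly quoted planning figure), the crew size, and the mission length are all assumptions made for illustration and do not come from the sources cited above.

```python
# Rough sketch: how much water must be electrolyzed to supply breathing oxygen.
# Reaction: 2 H2O -> 2 H2 + O2

M_H2O = 18.015  # molar mass of water, g/mol
M_O2 = 31.998   # molar mass of molecular oxygen, g/mol

# ASSUMPTION: approximate oxygen consumed per person per day, in kg.
O2_PER_PERSON_PER_DAY_KG = 0.84


def water_needed_kg(oxygen_kg):
    """Mass of water (kg) that yields the given mass of O2 (kg) by electrolysis."""
    if oxygen_kg < 0:
        raise ValueError("oxygen mass cannot be negative")
    # Two moles of water are consumed for every mole of O2 produced.
    return oxygen_kg * (2 * M_H2O) / M_O2


def mission_water_kg(crew, days):
    """Water (kg) needed for oxygen alone, with no recycling (an assumption)."""
    oxygen_kg = crew * days * O2_PER_PERSON_PER_DAY_KG
    return water_needed_kg(oxygen_kg)


if __name__ == "__main__":
    # Hypothetical mission: 6 crew members for 365 days.
    print(f"water per kg of O2: {water_needed_kg(1.0):.3f} kg")
    print(f"water for mission:  {mission_water_kg(6, 365):.0f} kg")
```

Each kilogram of oxygen costs a little over a kilogram of water, so without recycling the water supply grows in direct proportion to crew size and mission length.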

Other than the means for traveling in space, in science fiction (and also, to an extent, in reality) we encounter various other issues. For instance, for any voyage of substantial length, simulated gravity would be highly preferred to living in a low gravity environment. We know that long periods of time living in microgravity can lead to severe muscle atrophy and bone loss in astronauts, as well as many other issues related to health and comfort, such as sleeping, eating, and using the toilet (Rogers 2008). Though “simulated gravity” is often mentioned in science fiction, it is less often understood how it is accomplished. Simulated gravity can be accomplished by spinning the spacecraft (or part of the spacecraft). A person within the rotating environment will feel a centrifugal force that acts to push the astronaut toward the wall (if the surfaces of the walls are parallel to the spin axis). If we set up the craft so that the astronaut can begin to walk on what might originally be perceived to be a wall, he can walk all around the spacecraft on this outside wall, so long as there is sufficient spinning. On the spin axis itself, the force felt would be zero, and the astronauts would still be weightless, but the weight felt would increase as the astronauts approach the outermost walls of the craft, where it would reach its maximum. This centrifugal force felt by the astronaut is often referred to as a “fictitious” force, since it does not originate in an interaction between objects. However, this term is somewhat deceiving, as it implies that what is felt is somehow unreal (Goswami 1983). We see a repeated example of this in the British series Space Patrol. Their regularly used spacecraft is a rotating torus, and the astronauts are definitely shown moving around in the ship under Earth-like gravity. However, it would have been more realistic had they actually shown crew members walking on the curved inner surface of the spacecraft.
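The dependence of simulated gravity on distance from the spin axis can be illustrated with a short sketch. For a craft spinning at angular speed ω, a crew member standing a distance r from the axis feels an apparent acceleration of ω²r. The function names and the 100 m hull radius below are hypothetical choices made for illustration; they are not taken from any of the series discussed.

```python
import math

G_EARTH = 9.80665  # standard surface gravity on Earth, m/s^2


def spin_rate_for_gravity(radius_m, target_g=1.0):
    """Angular speed (rad/s) at which omega^2 * r equals target_g Earth gravities."""
    if radius_m <= 0:
        raise ValueError("radius must be positive")
    return math.sqrt(target_g * G_EARTH / radius_m)


def apparent_gravity(radius_m, omega):
    """Apparent gravity, in multiples of g, at a distance radius_m from the spin axis."""
    return omega ** 2 * radius_m / G_EARTH


def to_rpm(omega):
    """Convert an angular speed in rad/s to revolutions per minute."""
    return omega * 60.0 / (2.0 * math.pi)


if __name__ == "__main__":
    hull_radius = 100.0  # ASSUMPTION: hypothetical outer radius in meters
    omega = spin_rate_for_gravity(hull_radius)
    print(f"spin rate for 1 g at the hull: {to_rpm(omega):.2f} rpm")
    # Apparent weight grows linearly from zero on the axis to 1 g at the hull.
    for r in (0.0, 25.0, 50.0, 100.0):
        print(f"r = {r:5.1f} m -> {apparent_gravity(r, omega):.2f} g")
```

With these assumed numbers, the craft would need to turn about three times per minute, and a crew member halfway between the axis and the hull would feel half of normal weight.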

In addition to being concerned about the effects of low gravity, we also have to be concerned with accelerations that are much stronger than normal surface gravity on Earth. This will particularly be a concern at launch, when accelerations can be at their greatest. But for any of the methods of travel discussed, a major concern will be that you must not accelerate the spacecraft so greatly that you will kill the occupants! As an example, we might be tempted to “put our foot on the gas” to get to the nearest star with known planets. However, if the ship is manned, it is very important not to accelerate too much at any point in the voyage. Usually, the forces felt are quantified in terms of multiples of the acceleration due to gravity here on Earth (“g”). We know that at several g, people will start to feel significantly heavier and begin to have difficulty moving around. Finally, at about 25 g, no movement even at the extremities will be possible. No human has ever been tested under conditions greater than 45 g, but it is suspected that a human would not be able to survive much beyond that, and certainly not at 100 g (Rogers 2008). In fact, by the time ST:TNG was on the air, the creators realized that quick acceleration as pictured in ST:TOS would lead to g-forces that would certainly kill our favorite characters (as well as the usual group of red-shirts). Therefore, writers cleverly invented “inertial dampers” that would somehow cancel out the g-forces. Though this is really just an ad hoc solution with no satisfactory explanation, at least they were aware that they had to address a serious issue of physics that came to the attention of many fans (Krauss, The Physics of Star Trek 1995).
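The trade-off between acceleration and travel time can be sketched with simple arithmetic. The example below uses the Newtonian relation t = v/a to estimate how long a ship must accelerate to reach a given cruise speed. The cruise speed of one tenth the speed of light is a hypothetical target chosen for illustration, and relativistic effects are ignored, which is a reasonable approximation only at speeds well below that of light.

```python
C = 299_792_458.0         # speed of light, m/s
G_EARTH = 9.80665         # standard surface gravity on Earth, m/s^2
SECONDS_PER_DAY = 86_400.0


def days_to_reach(fraction_of_c, accel_g):
    """Days of constant acceleration needed to reach a speed (Newtonian t = v / a)."""
    if accel_g <= 0:
        raise ValueError("acceleration must be positive")
    target_speed = fraction_of_c * C            # m/s
    seconds = target_speed / (accel_g * G_EARTH)
    return seconds / SECONDS_PER_DAY


if __name__ == "__main__":
    cruise = 0.1  # ASSUMPTION: hypothetical cruise speed, as a fraction of c
    # 25 g and 100 g are the thresholds quoted in the text above.
    for accel_g in (1, 3, 25, 100):
        days = days_to_reach(cruise, accel_g)
        print(f"{accel_g:>3} g -> {days:6.2f} days of acceleration")
```

Under these assumptions, a comfortable 1 g takes roughly five weeks to reach the target speed. Shortening that to hours would require accelerations in the range the text describes as immobilizing or fatal, which is the problem the “inertial dampers” were invented to wave away.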