Somebody should be thinking about what the regulation of AI should look like. But I think the regulation shouldn’t start with the view that its goal is to stop AI and put back the lid on a Pandora’s box, or hold back the deployment of these technologies and try and turn the clock back.

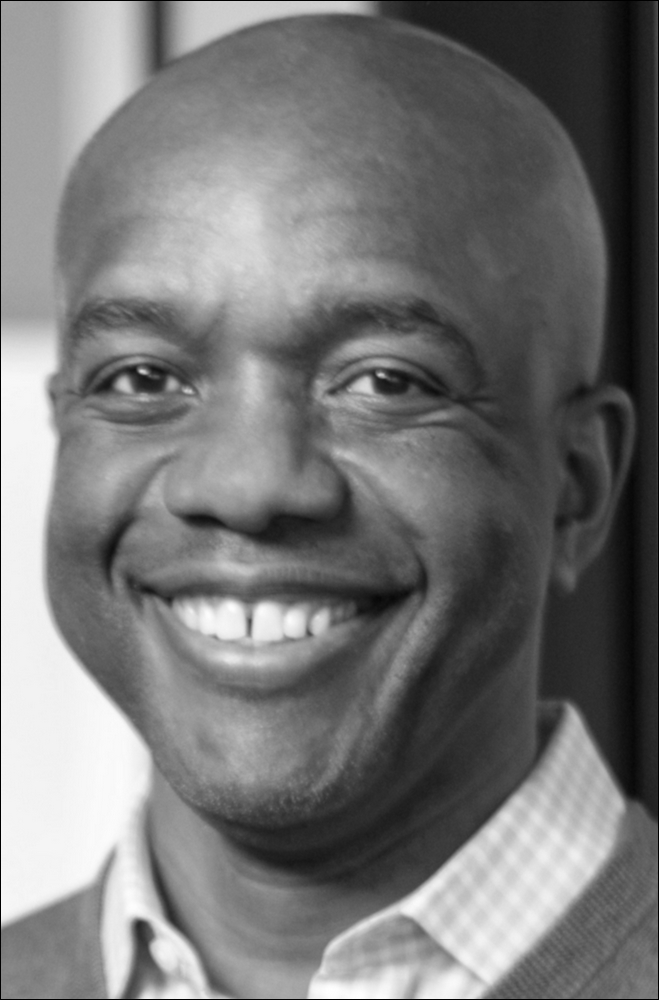

CHAIRMAN AND DIRECTOR OF MCKINSEY GLOBAL INSTITUTE

James is a senior partner at McKinsey and Chairman of the McKinsey Global Institute, researching global economic and technology trends. James consults with the chief executives and founders of many of the world’s leading technology companies. He leads research on AI and digital technologies and their impact on organizations, work, and the global economy. James was appointed by President Obama as vice chair of the Global Development Council at the White House and by US Commerce Secretaries to the Digital Economy Board and National Innovation Board. He is on the boards of the Oxford Internet Institute, MIT’s Initiative on the Digital Economy, the Stanford-based 100-Year Study on AI, and he is a fellow at DeepMind.

MARTIN FORD: I thought we could start by having you trace your academic and career trajectory. I know you came from Zimbabwe. How did you get interested in robotics and artificial intelligence and then end up in your current role at McKinsey?

JAMES MANYIKA: I grew up in a segregated black township in what was then Rhodesia, before it became Zimbabwe. I was always inspired by the idea of science, partly because my father had been the first black Fulbright scholar from Zimbabwe to come to the United States of America in the early 1960s. While there, my father visited NASA at Cape Canaveral, where he watched rockets soar up into the sky. And in my early childhood after he came back from America, my father filled my head with the idea of science, space, and technology. So, I grew up in this segregated township, thinking about science and space, building model planes and machines out of whatever I could find.

When I got to university, after the country had become Zimbabwe, I studied electrical engineering with heavy doses of mathematics and computer science. While I was there, a visiting researcher from the University of Toronto got me involved in a project on neural networks. That’s when I learned about Rumelhart’s backpropagation and the use of logistic sigmoid functions in neural network algorithms.

Fast forward, I did well enough to get a Rhodes scholarship to go to Oxford University, where I was in the Programming Research Group, working under Tony Hoare, who is best known for inventing Quicksort and for his obsession with formal methods and axiomatic specifications of programming languages. I studied for a master’s degree in mathematics and computer science and worked a lot on mathematical proofs and the development and verification of algorithms. By this time, I’d given up on the idea that I would be an astronaut, but I thought that at least if I worked on robotics and AI, I might get close to science related to space exploration.

I wound up in the Robotics Research Group at Oxford, where they were actually working on AI, but not many people called it that in those days because AI had a negative connotation at the time, after what had recently been a kind of “AI Winter” or a series of winters, where AI had underdelivered on its hype and expectations. So, they called their work everything but AI—it was machine perception, machine learning, it was robotics or just plain neural networks; but no-one in those days was comfortable calling their work AI. Now we have the opposite problem, everyone wants to call everything AI.

MARTIN FORD: When was this?

JAMES MANYIKA: This was in 1991, when I started my PhD at the Robotics Research Group at Oxford. This part of my career really opened me up to working with a number of different people in the robotics and AI fields. So, I met people like Andrew Blake and Lionel Tarassenko, who were working on neural networks; Michael Brady, now Sir Michael, who was working on machine vision; and Hugh Durrant-Whyte, who was working on distributed intelligence and robotic systems. He became my PhD advisor. We built a few autonomous vehicles together, and we also wrote a book together drawing on the research and intelligent systems we were developing.

Through the research I was doing, I wound up collaborating with a team at the NASA Jet Propulsion Laboratory that was working on the Mars rover vehicle. NASA was interested in applying the machine perception systems and algorithms that they were developing to the Mars rover vehicle project. I figured that this was as close as I was ever going to get to going into space!

MARTIN FORD: So, there was actually some code that you wrote running on the rover, on Mars?

JAMES MANYIKA: Yes, I was working with the Man Machine Systems group at JPL in Pasadena, California. I was one of several visiting scientists there working on these machine perception and navigation algorithms, and some of them found their way onto the modular and autonomous vehicle systems and other places.

That period at Oxford in the Robotics Research Group is what really sparked my interest in AI. I found machine perception particularly fascinating: the challenges of how to build learning algorithms for distributed and multi-agent systems, how to use machine learning algorithms to make sense of environments, and how to develop algorithms that could autonomously build models of those environments, in particular environments of which you had no prior knowledge and in which you had to learn as you went, like the surface of Mars.

A lot of what I was working on had applications not just in machine vision, but in distributed networks and sensing and sensor fusion. We were building these neural network-based algorithms that were using a combination of Bayesian networks of the kind Judea Pearl had pioneered, Kalman filters and other estimation and prediction algorithms to essentially build machine learning systems. The idea was that these systems could learn from the environment, learn from input data from a wide range of sources of varying quality, and make predictions. They could build maps and gather knowledge of the environments that they were in; and then they might be able to make predictions and decisions, much like intelligent systems would.
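The estimation idea in the paragraph above, combining readings from sources of varying quality into one belief and refining it as new data arrives, can be sketched with a one-dimensional Kalman filter. This is a minimal illustration under stated assumptions, not the systems described in the interview: the scenario (a robot estimating its distance to a wall from a noisy sonar and a more precise laser rangefinder), the `Estimate` type, and every number are hypothetical, and real robots of that era used multivariate filters over full state vectors.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    mean: float      # current best guess of the quantity being tracked
    variance: float  # uncertainty of that guess (larger means less confident)


def predict(est: Estimate, motion: float, motion_var: float) -> Estimate:
    """Prediction step: shift the estimate by a known motion and add process noise."""
    return Estimate(est.mean + motion, est.variance + motion_var)


def update(est: Estimate, measurement: float, meas_var: float) -> Estimate:
    """Update step: blend in one sensor reading, weighted by the Kalman gain.

    A precise sensor (small meas_var) gets a gain near 1 and dominates;
    a noisy sensor (large meas_var) only nudges the estimate.
    """
    gain = est.variance / (est.variance + meas_var)
    mean = est.mean + gain * (measurement - est.mean)
    variance = (1.0 - gain) * est.variance
    return Estimate(mean, variance)


# Hypothetical example: start almost ignorant of the distance (huge variance),
# then fuse a noisy sonar reading and a precise laser reading, in metres.
belief = Estimate(mean=0.0, variance=1000.0)
belief = update(belief, measurement=10.4, meas_var=4.0)   # sonar: noisy
belief = update(belief, measurement=10.0, meas_var=0.25)  # laser: precise
print(f"fused distance {belief.mean:.2f} m, variance {belief.variance:.3f}")

# The robot then moves 1 m toward the wall, which adds uncertainty.
belief = predict(belief, motion=-1.0, motion_var=0.1)
```

The fused variance ends up smaller than either sensor’s variance on its own, and the fused mean lands much closer to the precise laser reading than to the noisy sonar reading.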

So, eventually I met Rodney Brooks, who I’m still friends with today, during my visiting faculty fellowship at MIT, where I was working with the Robotics Group at MIT and the Sea Grant project that was building underwater robots. During this time, I also got to know people like Stuart Russell, who’s a professor in robotics and AI at Berkeley, because he had spent time at Oxford in my research group. In fact, many of my colleagues from those days have continued to do pioneering work, people like John Leonard, now a Robotics Professor at MIT and Andrew Zisserman, at DeepMind. Despite the fact that I’ve wandered off into other areas in business and economics, I’ve stayed close to the work going on in AI and machine learning and try to keep up as best as I can.

MARTIN FORD: So, you started out with a very technical orientation, given that you were teaching at Oxford?

JAMES MANYIKA: Yes, I was on the faculty and a fellow at Balliol College, Oxford, and I taught courses in mathematics and computer science, as well as on some of the research we were doing in robotics.

MARTIN FORD: It sounds like a pretty unusual jump from there to business and management consulting at McKinsey.

JAMES MANYIKA: That was actually as much by accident as anything else. I’d recently become engaged, and I had also received an offer from McKinsey to join them in Silicon Valley, and I thought going to McKinsey would be a brief, interesting detour.

At the time, like many of my friends and colleagues, such as Bobby Rao, who had also been in the Robotics Research Lab with me, I was interested in building systems that could compete in the DARPA driverless car challenge. This was because a lot of our algorithms were applicable to autonomous vehicles and driverless cars and back then, the DARPA challenge was one of the places where you could apply those algorithms. All of my friends were moving to Silicon Valley then. Bobby was at that time a post-doc at Berkeley working with Stuart Russell and others, and so I thought I should take this McKinsey offer in San Francisco. It was a way to be close to Silicon Valley and to be close to where some of the action, including the DARPA challenge, was taking place.

MARTIN FORD: What is your role now at McKinsey?

JAMES MANYIKA: I’ve ended up doing two kinds of things. One is working with many of the pioneering technology companies in Silicon Valley, where I have been fortunate to work with and advise many founders and CEOs. The other part, which has grown over time, is leading research at the intersection of technology and its impact on business and the economy. I’m the chairman of the McKinsey Global Institute, where we research not just technology but also macroeconomic and global trends to understand their impact on business and the economy. We are privileged to have amazing academic advisors who include economists who also think a lot about technology’s impacts, people like Erik Brynjolfsson, Hal Varian, and Mike Spence, the Nobel laureate, and even Bob Solow in the past.

To link this back to AI, we’ve been looking a lot at disruptive technologies, and tracking the progress of AI, and I’ve stayed in constant dialogues as well as collaborated with AI friends like Eric Horvitz, Jeff Dean, Demis Hassabis, and Fei-Fei Li, and also learning from legends like Barbara Grosz. While I’ve tried to stay close to the technology and the science, my MGI colleagues and I have spent more time thinking about and researching the economic and business impacts of these technologies.

MARTIN FORD: I definitely want to delve into the economic and job market impact, but let’s begin by talking about AI technology.

You mentioned that you were working on neural networks way back in the 1990s. Over the past few years, there’s been this explosion in deep learning. How do you feel about that? Do you see deep learning as the holy grail going forward, or has it been overhyped?

JAMES MANYIKA: We’re only just discovering the power of techniques such as deep learning and neural networks in their many forms, as well as other techniques like reinforcement learning and transfer learning. These techniques all still have enormous headroom; we’re only just scratching the surface of where they can take us.

Deep learning techniques are helping us solve a huge number of particular problems, whether it’s in image and object classification, natural language processing, or generative AI, where we predict and create sequences and outputs, whether it’s speech, images, and so forth. We’re going to make a lot of progress in what is sometimes called “narrow AI,” that is, solving particular areas and problems using these deep learning techniques.

In comparison, we’re making slower progress on what is sometimes called “artificial general intelligence” or AGI. While we’ve made more progress recently than we’ve done in a long time, I still think progress is going to be much, much slower towards AGI, just because it involves a much more complex and difficult set of questions to answer and will require many more breakthroughs.

We need to figure out how to think about problems like transfer learning, because one of the things that humans do extraordinarily well is learning something over here and then applying that learning in totally new environments, or on a previously unencountered problem, over there. There are definitely some exciting new techniques coming up, whether in reinforcement learning or even simulated learning (the kinds of things that AlphaZero has begun to do), where you self-learn and self-create structures, as well as start to solve wider and different categories of challenges, in the case of AlphaZero, different kinds of games. In another direction, the work that Jeff Dean and others at Google Brain are doing using AutoML is really exciting. It’s very interesting from the point of view of helping us start to make progress on machines and neural networks that design themselves. These are just a few examples. One could say all of this progress is nudging us towards AGI. But these are really just small steps; much, much more is needed. There are whole areas, such as high-level reasoning, that we barely know how to tackle. This is why I think AGI is still quite a long way away.

Deep learning is certainly going to help us with narrow AI applications. We’re going to see lots and lots and lots and lots of applications that are already being turned into new products and new companies. At the same time, it’s worth pointing out that there are still some practical limitations to the use and application of machine learning, and we have pointed this out in some of our MGI work.

MARTIN FORD: Do you have any examples?

JAMES MANYIKA: For example, we know that many of these techniques still largely rely on labeled data, and there are still lots of limitations in terms of the availability of labeled data. Often this means that humans must label the underlying data, which can be a sizable and error-prone chore. In fact, some autonomous vehicle companies are hiring hundreds of people to manually annotate hours of video from prototype vehicles to help train the algorithms. Some new techniques are emerging to get around the need for labeled data: for example, in-stream supervision, pioneered by Eric Horvitz and others, and techniques like Generative Adversarial Networks, or GANs, a semi-supervised approach through which usable data can be generated in a way that reduces the need for datasets labeled by humans.

But then we still have a second challenge of needing such large and rich data sets. It is quite interesting that you can more or less identify those areas that are making spectacular progress simply by observing which areas have access to a huge amount of available data. So, it is no surprise that we have made more progress in machine vision than in other applications, because of the huge volume of images and now video being put on the internet every day. Now, there are some good reasons—regulatory, privacy, security, and otherwise—that may limit data availability to some extent. And this can also, in part, explain why different societies are going to experience differential rates of progress on making data available. Countries with large populations, naturally, generate larger volumes of data, and different data use standards may make it easier to access large health data sets, for example, and use that to train algorithms. So, in China you might see more progress in using AI in genomics and “omics” given larger available data sets.

So, data availability is a big deal and may explain why some areas of AI applications take off much faster in some places than others. But we’ve also got other limitations to deal with, like we still don’t have generalized tools in AI and we still don’t know how to solve general problems in AI. In fact, one of the fun things, and you may have seen this, is that people are now starting to define new forms of what used to be the Turing test.

MARTIN FORD: A new Turing Test? How would that work?

JAMES MANYIKA: Steve Wozniak, the co-founder of Apple, has actually proposed what he calls the “coffee test” as an alternative to Turing tests, which are very narrow in many respects. The coffee test is kind of fun: until you get a system that can enter an average and previously unknown American home and somehow figure out how to make a cup of coffee, we’ve not solved AGI. The reason it sounds trivial but is at the same time quite profound is that you’re solving a large number of unknowable and general problems in order to make that cup of coffee in an unknown home, where you don’t know where things are going to be, what type of coffee maker it is, or what other tools they have. That’s the kind of complex, generalized problem-solving across numerous categories of problems that the system would have to do. Therefore, if we want to test for AGI, we may need Turing tests of that form, and maybe that’s where we need to go.

I should point out the other limitation, which is the question of potential bias, not so much in the algorithm but in the data. This is a big question that tends to divide the AI community. One view is that these machines are probably going to be less biased than humans. You can look at multiple examples, such as human judges and bail decisions, where using an algorithm could take out many of the inherent human biases, including human fallibility and even time-of-day biases. Hiring and advancement decisions could be another similar area, if you think about Marianne Bertrand and Sendhil Mullainathan’s work looking at the difference in callbacks received by different racial groups who submitted identical resumes for jobs.

MARTIN FORD: That’s something that has come up in a number of the conversations I’ve had for this book. The hope should be that AI can rise above human biases, but the catch always seems to be that the data you’re using to train the AI system encapsulates human bias, so the algorithm picks it up.

JAMES MANYIKA: Exactly, that’s the other view of the bias question that recognizes that the data itself could actually be quite biased, both in its collection, the sampling rates—either through oversampling or undersampling—and what that means systematically, either to different groups of people or different kinds of profiles.

The general bias problem has been shown in quite spectacular fashion in lending, in policing, and in criminal justice cases, and so any dataset that we want to use could have large-scale biases already built in, many likely unintended. Julia Angwin and her colleagues at ProPublica have highlighted such biases in their work, as has MacArthur Fellow Sendhil Mullainathan with his colleagues. One of the most interesting findings to come out of that work, by the way, is that algorithms may be mathematically unable to satisfy different definitions of fairness at the same time, so deciding how we will define fairness is becoming a very important issue.
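The incompatibility between fairness definitions mentioned above can be made concrete with a little arithmetic. From the confusion-matrix definitions alone, a group’s false positive rate is fixed once its base rate p, its precision (PPV), and its true positive rate (TPR) are fixed: FPR = p / (1 - p) × (1 - PPV) / PPV × TPR. So if two groups have different base rates, a classifier that is equally precise and equally sensitive for both must have unequal false positive rates. The sketch below evaluates this for two hypothetical groups; the function name, base rates, and PPV/TPR values are invented for illustration.

```python
def implied_false_positive_rate(base_rate: float, ppv: float, tpr: float) -> float:
    """False positive rate forced by a group's base rate, precision (PPV) and recall (TPR).

    Derivation from confusion-matrix counts, with P positives and N negatives:
      TP = TPR * P,  FP = TP * (1 - PPV) / PPV,  FPR = FP / N,  and P / N = p / (1 - p).
    """
    return (base_rate / (1.0 - base_rate)) * ((1.0 - ppv) / ppv) * tpr


# Two hypothetical groups that differ only in base rate. The classifier is held to
# the same precision and the same true positive rate in both groups.
ppv, tpr = 0.8, 0.7
for group, base_rate in [("group A", 0.3), ("group B", 0.5)]:
    fpr = implied_false_positive_rate(base_rate, ppv, tpr)
    print(f"{group}: base rate {base_rate:.0%}, implied FPR {fpr:.3f}")
```

With these numbers the implied false positive rate works out to 0.075 for group A and 0.175 for group B: equalizing precision and sensitivity across the groups forces their false positive rates apart, so at least one definition of fairness has to give.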

I think both views are valid. On the one hand, machine systems can help us overcome human bias and fallibility, and yet on the other hand, they could also introduce potentially larger issues of their own. This is another important limitation we’re going to need to work our way through. But here again we are starting to make progress. I am particularly excited about the pioneering work that Silvia Chiappa at DeepMind is doing using counterfactual fairness and causal model approaches to tackle fairness and bias.

MARTIN FORD: That’s because the data directly reflects the biases of people, right? If it’s collected from people as they’re behaving normally, using an online service or something, then the data is going to end up reflecting whatever biases they have.

JAMES MANYIKA: Right, but it can actually be a problem even if individuals aren’t necessarily biased. I’ll give you an example where you can’t actually fault the humans per se, or their own biases, but that instead shows us how our society works in ways that create these challenges. Take the case of policing. We know that, for example, some neighborhoods are more policed than others and by definition, whenever neighborhoods are more policed, there’s a lot more data collected about those neighborhoods for algorithms to use.

So, if we take two neighborhoods, one that is highly policed and one that is not—whether deliberately or not—the fact is that the data sampling differences across those two communities will have an impact on the predictions about crime. The actual collection itself may not have shown any bias, but because of oversampling in one neighborhood and undersampling in another, the use of that data could lead to biased predictions.
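The sampling mechanism described here can be shown with a deliberately simple, hypothetical model: two neighborhoods with identical true offense rates, patrolled at different intensities, where the chance that an offense is recorded scales with patrol presence. The function names, the linear detection assumption, and all numbers are illustrative only. If the recorded data are then used to decide where to patrol next, the model keeps reproducing the original patrol split, so the “prediction” reflects where police looked rather than where crime occurred.

```python
from typing import Dict


def recorded_rate(true_rate: float, patrol_share: float, detection: float = 0.8) -> float:
    """Share of residents with a *recorded* offense in one period.

    Hypothetical assumption: the chance an offense is observed and recorded grows
    linearly with the neighborhood's share of the patrol budget.
    """
    return true_rate * patrol_share * detection


def reallocate(shares: Dict[str, float], true_rates: Dict[str, float]) -> Dict[str, float]:
    """Naive feedback loop: assign next period's patrols in proportion to recorded rates."""
    recorded = {n: recorded_rate(true_rates[n], shares[n]) for n in shares}
    total = sum(recorded.values())
    return {n: r / total for n, r in recorded.items()}


true_rates = {"A": 0.05, "B": 0.05}  # identical underlying behavior
shares = {"A": 0.75, "B": 0.25}      # A starts out three times as heavily patrolled

for period in range(3):
    a = recorded_rate(true_rates["A"], shares["A"])
    b = recorded_rate(true_rates["B"], shares["B"])
    print(f"period {period}: recorded A/B ratio {a / b:.1f}, A's patrol share {shares['A']:.2f}")
    shares = reallocate(shares, true_rates)
```

Even though the true rates are equal, the recorded data make neighborhood A look three times as risky in every period, and the feedback loop never corrects it.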

Another example of undersampling and oversampling can be seen in lending. In this example, it works the other way, where if you have a population that has more available transactions because they’re using credit cards and making electronic payments, we have more data about that population. The oversampling there actually helps those populations, because we can make better predictions about them, whereas if you then have an undersampled population, because they’re paying in cash and there is little available data, the algorithm could be less accurate for those populations, and as a result, more conservative in choosing to lend, which essentially biases the ultimate decisions. We have this issue too in facial recognition systems, as has been demonstrated in the work of Timnit Gebru, Joy Buolamwini, and others.
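One way to see how thin data can translate into more conservative lending is a lender that, hypothetically, approves a group only when the upper end of a confidence interval on its estimated default rate sits below a risk limit. In the sketch below both groups show the same observed default rate of 10 percent; only the number of past loans differs. The decision rule, the 15 percent limit, the function names, and the counts are assumptions for illustration, and the simple normal-approximation (Wald) interval used here is known to be rough for small samples.

```python
import math


def default_rate_upper_bound(defaults: int, loans: int, z: float = 1.96) -> float:
    """Upper end of an approximate 95% (Wald) confidence interval for the default rate."""
    p = defaults / loans
    return p + z * math.sqrt(p * (1.0 - p) / loans)


def approve(defaults: int, loans: int, max_risk: float = 0.15) -> bool:
    """Hypothetical conservative rule: lend only if even the pessimistic estimate is acceptable."""
    return default_rate_upper_bound(defaults, loans) <= max_risk


# Same observed default rate (10%), very different amounts of history.
well_documented = (100, 1000)  # many recorded card and electronic transactions
thin_file = (2, 20)            # mostly cash, little recorded history

for name, (defaults, loans) in [("well documented", well_documented), ("thin file", thin_file)]:
    bound = default_rate_upper_bound(defaults, loans)
    print(f"{name}: upper bound {bound:.3f}, approved={approve(defaults, loans)}")
```

With 1,000 past loans the pessimistic estimate is about 0.119 and the group is approved; with 20 loans it is about 0.231 and the group is refused, even though the observed default rate is identical. The gap comes entirely from how much data was collected.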

It may not be the biases that any human being has in developing the algorithms, but the way in which we’ve collected the data that the algorithms are trained on that introduces bias.

MARTIN FORD: What about other kinds of risks associated with AI? One issue that’s gotten a lot of attention lately is the possibility of existential risk from superintelligence. What do you think are the things we should legitimately worry about?

JAMES MANYIKA: Well, there are lots of things to worry about. I remember a couple of years ago, a group of us, including many of the AI pioneers and other luminaries such as Elon Musk and Stuart Russell, met in Puerto Rico to discuss progress in AI as well as concerns and areas that needed more attention. The group ended up writing, in a paper published by Stuart Russell, about what some of the issues were and what we should worry about, pointing out where there was not enough attention and research going into analyzing these areas. The areas to worry about have changed a little since that meeting, but they covered everything, including things like safety questions.

Here is one example. How do you stop a runaway algorithm? How do you stop a runaway machine that gets out of control? I don’t mean in a Terminator sense, but even just in the narrow sense of an algorithm that is making wrong interpretations, leading to safety questions, or even simply upsetting people. For this we may need what has been referred to as the Big Red Button, something several research teams are working on. DeepMind’s work with gridworlds, for example, has demonstrated that many algorithms could theoretically learn how to turn off their own “off-switches.”

Another issue is explainability. Here, explainability refers to the problem that, with neural networks, we don’t always know which feature or which dataset influenced the AI’s decision or prediction, one way or the other. This can make it very hard to explain an AI’s decision, or to understand why it might be reaching a wrong decision. This can matter a great deal when predictions and decisions have consequential implications that may affect lives, for example when AI is used in criminal justice situations or lending applications, as we’ve discussed. Recently, we’ve seen new techniques emerge to get at the explainability challenge. One promising technique is the use of Local Interpretable Model-Agnostic Explanations, or LIME. LIME tries to identify which parts of the input data a trained model relies on most to make a particular prediction. Another promising technique is the use of Generalized Additive Models, or GAMs. These combine single-feature models additively and therefore limit interactions between features, so changes in predictions can be determined as features are added.
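The core idea behind LIME, explaining one prediction by fitting a simple, weighted linear model to the black box’s behavior in a small neighborhood of that input, can be sketched in plain Python. This is a simplified, hypothetical illustration rather than the `lime` library’s API: it perturbs numeric features with Gaussian noise, weights samples with an exponential kernel, and solves weighted least squares directly, whereas the published method also works on interpretable representations (such as superpixels or words) and limits the number of features in an explanation. `black_box`, `local_surrogate`, `solve`, and all parameter values are invented for this example.

```python
import math
import random
from typing import Callable, List, Sequence


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def local_surrogate(model: Callable[[Sequence[float]], float], x0: Sequence[float],
                    n_samples: int = 500, scale: float = 0.1,
                    kernel_width: float = 0.25, seed: int = 0) -> List[float]:
    """Return per-feature weights of a weighted linear fit to `model` around `x0`."""
    rng = random.Random(seed)
    d = len(x0)
    # Accumulate the weighted normal equations (X^T W X) beta = X^T W y.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x0]  # perturbed copy of the input
        offsets = [zi - xi for zi, xi in zip(z, x0)]
        # Exponential kernel: samples closer to x0 count more in the fit.
        weight = math.exp(-sum(o * o for o in offsets) / kernel_width ** 2)
        row = [1.0] + offsets  # intercept plus features centered on x0
        y = model(z)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    beta = solve(xtwx, xtwy)
    return beta[1:]  # drop the intercept; keep the local feature weights


def black_box(x: Sequence[float]) -> float:
    """Stand-in for an opaque model: nonlinear in feature 0, weakly linear in feature 1."""
    return 3.0 * x[0] ** 2 + 0.5 * x[1]


weights = local_surrogate(black_box, [1.0, 2.0])
print([round(w, 2) for w in weights])
```

Near the input (1.0, 2.0) the black box’s local slopes are 6 for the first feature and 0.5 for the second, so the surrogate’s weights should come out close to those values. That is the sense in which the first feature “matters most” for this particular prediction, even though the model itself is never opened up.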

Yet another area we should think about more is the “detection problem,” which is where we might find it very hard to even detect when there’s malicious use of an AI system—which could be anything from a terrorist to a criminal situation. With other weapons systems, like nuclear weapons, we have fairly robust detection systems. It’s hard to set off a nuclear explosion in the world without anybody knowing because you have seismic tests, radioactivity monitoring, and other things. With AI systems, not so much, which leads to an important question: How do we even know when an AI system is being deployed?

There are several critical questions like this that still need a fair amount of technical work, where we must make progress, instead of everybody just running away and focusing on the upsides of applications for business and economic benefits.

The silver lining of all this is that groups and entities are emerging and starting to work on many of these challenges. A great example is the Partnership on AI. If you look at the agenda for the Partnership, you’ll see a lot of these questions are being examined, about bias, about safety, and about these kinds of existential threat questions. Another great example is the work that Sam Altman, Jack Clark, and others at OpenAI are doing, which aims to make sure all of society benefits from AI.

Right now, the entities and groups that are making the most progress on these questions have tended to be places that have been able to attract the AI superstars, who, even in 2018, are a relatively small group. That will hopefully diffuse over time. We’ve also seen some relative concentrations of talent go to places that have massive computing power and capacity, as well as places that have unique access to lots of data, because we know these techniques benefit from those resources. The question is, in a world in which there’s a tendency for more progress to go to where the superstars are, where the data is available, and where the computing capacity is available, how do you make sure this continues to be widely available to everybody?

MARTIN FORD: What do you think about the existential concerns? Elon Musk and Nick Bostrom talk about the control problem or the alignment problem. One scenario is where we could have a fast takeoff with recursive improvement, and then we’ve got a superintelligent machine that gets away from us. Is that something we should be worried about at this point?

JAMES MANYIKA: Yes, somebody should be worrying about those questions, but not everybody, partly because I think the time frame for a superintelligent machine is so far away, and because the probability of that is fairly low. But again, in a sense like Pascal’s wager, somebody should be thinking about those questions, but I wouldn’t get society all whipped up about the existential questions, at least not yet.

I like the fact that a smart philosopher like Nick Bostrom is thinking about it, I just don’t think that it should be a huge concern for society as a whole just yet.

MARTIN FORD: That’s also my thinking. If a few think tanks want to focus on these concerns, that seems like a great idea. But it would be hard to justify investing massive governmental resources at this point. And we probably wouldn’t want politicians delving into this stuff in any case.

JAMES MANYIKA: No, it shouldn’t be a political issue, but I also disagree with people who say that there is zero probability that this could happen and say that no-one should worry about it.

The vast majority of us shouldn’t be worried about it. I think that we should be more worried about these more specific questions that are here now, such as safety, use and misuse, explainability, bias, and the economic and workforce effects questions and related transitions. Those are the bigger, more real questions that are going to impact society beginning now and running over the next few decades.

MARTIN FORD: In terms of those concerns, do you think there’s a place for regulation? Should governments step in and regulate certain aspects of AI, or should we rely on industry to figure it out for themselves?

JAMES MANYIKA: I don’t know what form regulation should take, but somebody should be thinking about regulation in this new environment. I don’t think that we’ve got any of the tools in place, any of the right regulatory frameworks in place at all right now.

So, my simple answer would be yes, somebody should be thinking about what the regulation of AI should look like. But I think the regulation shouldn’t start with the view that its goal is to stop AI and put back the lid on a Pandora’s box, or hold back the deployment of these technologies and try and turn the clock back.

I think that would be misguided because first of all, the genie is out of the bottle; but also, more importantly, there’s enormous societal and economic benefit from these technologies. We can talk more about our overall productivity challenge, which is something these AI systems can help with. We also have societal “moonshot” challenges that AI systems can help with.

So, if regulation is intended to slow things down or stop the development of AI then I think that’s wrong, but if regulation is intended to think about questions of safety, questions of privacy, questions of transparency, questions around the wide availability of these techniques so that everybody can benefit from them—then I think those are the right things that AI regulation should be thinking about.

MARTIN FORD: Let’s move on to the economic and business aspects of this. I know the McKinsey Global Institute has put out several important reports on the impact of AI on work and labor.

I’ve written quite a lot on this, and my last book makes the argument that we’re really on the leading edge of a major disruption that could have a huge impact on labor markets. What’s your view? I know there are quite a few economists who feel this issue is being overhyped.

JAMES MANYIKA: No, it is not overhyped. I think we’re on the cusp and we’re about to enter a new industrial revolution. I think these technologies are going to have an enormous, transformative, and positive impact on businesses, because of their efficiency, their impact on innovation, their impact on being able to make predictions and to find new solutions to problems, and in some cases to go beyond human cognitive capabilities. Based on our research at MGI, the impact of AI on businesses is, to me, undoubtedly positive.

The impact on the economy is also going to be quite transformational too, mostly because this is going to lead to productivity gains, and productivity is the engine of economic growth. This will all take place at a time when we’re going to have aging and other effects that will create headwinds for economic growth. AI and automation systems, along with other technologies, are going to have this transformational and much-needed effect on productivity, which in the long term leads to economic growth. These systems can also significantly accelerate innovation and R&D, which leads to new products and services and even business models that will transform the economy.

I’m also quite positive about the impact on society in the sense of being able to solve the societal “moonshot” challenges I hinted at before. This could be a new project or application that yields new insights into a societal challenge, proposes a radical solution, or leads to the development of a breakthrough technology. This could be in healthcare, climate science, humanitarian crises, or discovering new materials. This is another area that my colleagues and I are researching, where it’s clear that AI techniques from image classification to natural language processing and object identification can make a big contribution in many of these domains.

Having said all of that, if you say AI is good for business, good for economic growth, and helps tackle societal moonshots, then the big question is—what about work? I think this is a much more mixed and complicated story. But I think if I were to summarize my thoughts about jobs, I would say there will be jobs lost, but also jobs gained.

MARTIN FORD: So, you believe the net impact will be positive, even though a lot of jobs will be lost?

JAMES MANYIKA: While there will be jobs lost, there’ll also be jobs gained. On the “jobs gained” side of the story, jobs will come from economic growth itself and from the resulting dynamism. There’s always going to be demand for work, and there are mechanisms, through productivity and economic growth, that lead to the growth of existing jobs and the creation of new ones. In addition, there are multiple drivers of demand for work that are relatively assured in the near to mid term; these include, again, rising prosperity around the world as more people enter the consuming class, and so on. Another thing that will occur is something called “jobs changed,” because these technologies are going to complement work in lots of interesting ways, even when they don’t fully replace the people doing that work.

We’ve seen versions of these three ideas of jobs lost, jobs gained, and jobs changed before with previous eras of automation. The real debate is, what are the relative magnitudes of all those things, and where do we end up? Are we going to have more jobs lost than jobs gained? That’s an interesting debate.

Our research at MGI suggests that we will come out ahead, with more jobs gained than jobs lost; this, of course, is based on a set of assumptions about a few key factors. Because it’s impossible to make precise predictions, we have developed scenarios around the multiple factors involved, and in our midpoint scenarios we come out ahead. The interesting question is, even in a world with enough jobs, what will be the key workforce issues to grapple with, including the effects on wages and the workforce transitions involved? The jobs and wages picture is more complicated than the effect on business and on economic growth, which, as I said, is clearly positive.

MARTIN FORD: Before we talk about jobs and wages, let me focus on your first point: the positive impact on business. If I were an economist, I would immediately point out that the recent productivity figures are really not that great; we are not yet seeing any increase in productivity in the macroeconomic data. In fact, productivity has been pretty underwhelming relative to other periods. Are you arguing that there’s just a lag before things take off?

JAMES MANYIKA: We at MGI recently put out a report on this. There are a lot of reasons why productivity growth is sluggish, one being that the last 10 years have been the period of lowest capital intensity in about 70 years.

We know that capital investment and capital intensity are among the things you need to drive productivity growth. We also know the critical role of demand: most economists, including here at MGI, have tended to look at the supply-side effects on productivity, and not as much at the demand side. We know that when you’ve got a huge slowdown in demand, you can be as efficient as you want in production, and measured productivity still won’t be great. That’s because productivity measurement has a numerator and a denominator: the numerator involves growth in value-added output, which requires that output to be soaked up by demand. So, if demand is lagging for whatever reason, that hurts growth in output, which brings down productivity growth, regardless of what technological advances there may have been.
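As a rough illustration of the numerator-and-denominator point, here is the standard definition of labor productivity, where Y is real value-added output and H is hours worked. Taking hours worked as the denominator is our assumption; the passage names only the numerator.

```latex
\begin{aligned}
P &= \frac{Y}{H} && \text{(labor productivity)} \\
g_P &\approx g_Y - g_H && \text{(first-order approximation in growth rates)}
\end{aligned}
```

If weak demand holds down output growth g_Y, measured productivity growth g_P falls with it for any given change in hours, however efficient production has become.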

MARTIN FORD: That’s an important point. If advancing technology increases inequality and holds down wages, effectively taking money out of the pockets of average consumers, then that could dampen demand further.

JAMES MANYIKA: Oh, absolutely. The demand point is critical, especially in advanced economies, where anywhere between 55% and 70% of demand is driven by consumer and household spending. You need people earning enough to consume everything being produced. Demand is a big part of the story, but I think there is also the technology lag story that you mentioned.

To your original question: between 1999 and 2003, I had the pleasure of working with one of the academic advisors of the McKinsey Global Institute, Bob Solow, the Nobel laureate. We were looking at the last productivity paradox, back in the late 1990s. In the late ’80s, Bob had made the observation that became known as the Solow Paradox: that you could see computers everywhere except in the productivity numbers. That paradox was finally resolved in the late ’90s, when we had enough demand to drive productivity growth, but more importantly, when very large sectors of the economy, such as retail and wholesale, finally adopted the technologies of the day: client-server architectures and ERP systems. This transformed their business processes and drove productivity growth in very large sectors of the economy, which finally had a big enough effect to move the national productivity needle.

Now, if you fast-forward to where we are today, we may be seeing something similar. Look at the current wave of digital technologies, whether cloud computing, e-commerce, or electronic payments: we see them everywhere, we all carry them in our pockets, and yet productivity growth has been very sluggish for several years now. But if you systematically measure how digitized the economy actually is today, in terms of assets, processes, and how people work with technology, the surprising answer is: not very. And these assessments of digitization don’t even include AI or the next wave of technologies yet.

What you find is that the most digitized sectors, on a relative basis, are sectors like the tech sector itself, media, and maybe financial services. And those sectors are actually relatively small in the grand scheme of things, measured as a share of GDP or as a share of employment, whereas the very large sectors are, relatively speaking, not that digitized.

Take a sector like retail, and keep in mind that retail is one of the largest sectors. We all get excited by the prospect of e-commerce and what Amazon is doing. But only about 10% of retail is now done through e-commerce, and Amazon accounts for a large portion of that 10%. Retail is a very large sector with many, many small and medium-sized businesses. That already tells you that even in retail, one of the large sectors we’d think of as highly digitized, we really haven’t made much widespread progress yet.

So, we may be going through another round of the Solow paradox. Until we get these very large sectors highly digitized and using these technologies across business processes, we won’t see enough to move the national needle on productivity.

MARTIN FORD: So, you’re saying that globally we haven’t even started to see the impact of AI and advanced forms of automation yet?

JAMES MANYIKA: Not yet. And that gets to another point worth making: we’re actually going to need productivity growth even more than we can imagine, and AI, automation and all these digital technologies are going to be critical to driving productivity growth and economic growth.

To explain why, let’s look at the last 50 years of economic growth. If you look at the G20 countries (which make up a little more than 90% of global GDP), average GDP growth over the last 50 years for which we have data, from 1964 to 2014, was 3.5%. If you do classic growth decomposition and growth accounting work, it shows that GDP growth comes from two things: one is productivity growth, and the other is expansion in the labor supply.

Of the 3.5% of average GDP growth we’ve had in the last 50 years, 1.7% has come from expansions in the labor supply, and the other 1.8% has come from productivity growth over those 50 years. If you look to the next 50 years, the growth from expansions in the labor supply is going to come crashing down from the 1.7% that it’s been the last 50 years to about 0.3%, because of aging and other demographic effects.
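The growth accounting above can be written out as a simple identity. This sketch assumes the decomposition splits GDP growth into labor-supply growth plus productivity growth, as described; the 3.2% figure in the last line is our own back-of-the-envelope arithmetic, not an MGI projection.

```latex
\begin{aligned}
g_{\mathrm{GDP}} &\approx g_{\mathrm{labor}} + g_{\mathrm{productivity}} \\
3.5\% &\approx 1.7\% + 1.8\% && \text{(G20, 1964--2014)} \\
g_{\mathrm{productivity}} &\approx 3.5\% - 0.3\% = 3.2\% && \text{(next 50 years, to hold GDP growth at 3.5\%)}
\end{aligned}
```

In other words, productivity growth would have to nearly double from its historical 1.8% just to keep GDP growth where it has been.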

So that means that in the next 50 years we’re going to rely even more than we have in the past 50 years on productivity growth. And unless we get big gains in productivity, we’re going to have a downdraft in economic growth. If we think productivity growth matters right now for our current growth, which it does, it’s going to matter even more for the next 50 years if we still want economic growth and prosperity.

MARTIN FORD: This touches on the economist Robert Gordon’s argument that maybe there’s not going to be much economic growth in the future. (Robert Gordon’s 2017 book, The Rise and Fall of American Growth, offers a very pessimistic view of future economic growth in the United States.)

JAMES MANYIKA: Bob Gordon is saying there may not be much economic growth, but he’s also questioning whether we’re going to have innovations big enough, comparable to electrification, to really drive economic growth. He’s skeptical that there’s going to be anything as big as electricity and some of the other technologies of the past.

MARTIN FORD: But hopefully AI is going to be that next thing?

JAMES MANYIKA: We hope it will be! It is certainly a general-purpose technology like electricity, and in that sense should benefit multiple activities and sectors of the economy.

MARTIN FORD: I want to talk more about the McKinsey Global Institute’s reports on what’s happening to work and wages. Could you go into a bit more detail about the various reports you’ve generated and your overall findings? What methodology do you use to figure out whether a particular job is likely to be automated and what percentage of jobs are at risk?

JAMES MANYIKA: Let’s take this in three parts: “jobs lost,” “jobs changed,” and then “jobs gained,” because there’s something to be said about each of these pathways.

In terms of “jobs lost,” there’s been lots of research and reports; speculating on the jobs question has become a cottage industry. At MGI, we think the approach we’ve taken is a little different in two ways. One is that we’ve conducted a task-based decomposition: we’ve started with tasks, as opposed to whole occupations. We’ve looked at more than 2,000 tasks and activities using a variety of sources, including the O*NET dataset and other task-level datasets. The Bureau of Labor Statistics in the US tracks about 800 occupations, so we then mapped those tasks onto those occupations.

We’ve also looked at 18 different kinds of capabilities required to perform these tasks, and by capabilities I mean everything from cognitive capabilities to sensory capabilities to the physical motor skills required to fulfill these tasks. We’ve then tried to understand to what extent technologies are now available to perform those same capabilities, which we can then map back to our tasks to show what tasks machines can perform. We’ve looked at what we call “currently demonstrated technology,” by which we mean technology that has actually been demonstrated, either in a lab or in an actual product, not just something hypothetical. By looking at these currently demonstrated technologies, we can provide a view into the next decade and a half or so, given typical adoption and diffusion rates.

By looking at all this, we have concluded that in the US economy, roughly 50% of the activities people do now are, in principle, automatable. That’s activities, not jobs, and it’s important to emphasize this.

MARTIN FORD: You’re saying that half of what workers do could conceivably be automated right now, based on technology we already have?

JAMES MANYIKA: Right now, it is technically feasible to automate 50% of activities based on currently demonstrated technologies. But there are also separate questions, like how do those automatable activities then map into whole occupations?

So, when we map back into occupations, we find that only about 10% of occupations have more than 90% of their constituent tasks automatable. Remember, this is a task number, not a jobs number. We also find that something like 60% of occupations have about a third of their constituent activities automatable; this mix, of course, varies by occupation. This 60/30 finding already tells you that many more occupations will be complemented or augmented by technologies than will be replaced. This leads to the “jobs changed” phenomenon I mentioned earlier.
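The task-to-occupation mapping can be sketched in a few lines of Python. Everything here is hypothetical: the occupations, their task lists, the time shares, and the automatable flags are invented for illustration, and weighting tasks by share of working time is our assumption rather than a description of MGI’s actual model. The two cut-offs mirror the figures mentioned above (more than 90% automatable, and roughly a third).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Occupation:
    """Hypothetical occupation: task name -> (share of working time, automatable?)."""
    name: str
    tasks: dict[str, tuple[float, bool]]


def automatable_share(occ: Occupation) -> float:
    """Share of working time spent on tasks that demonstrated technology could perform."""
    total = sum(share for share, _ in occ.tasks.values())
    automatable = sum(share for share, ok in occ.tasks.values() if ok)
    return automatable / total


def summarize(occupations: list[Occupation],
              high: float = 0.9, partial: float = 0.3) -> tuple[float, float]:
    """Fraction of occupations above the `high` cut-off, and at or above `partial`."""
    shares = [automatable_share(o) for o in occupations]
    n = len(shares)
    above_high = sum(s > high for s in shares) / n
    at_least_partial = sum(s >= partial for s in shares) / n
    return above_high, at_least_partial


# Invented example data, for illustration only.
occupations = [
    Occupation("data entry clerk", {"enter records": (0.70, True),
                                    "check records": (0.25, True),
                                    "resolve exceptions": (0.05, False)}),
    Occupation("accountant", {"gather data": (0.30, True),
                              "analyze data": (0.20, True),
                              "advise clients": (0.50, False)}),
    Occupation("gardener", {"plant": (0.50, False),
                            "prune": (0.40, False),
                            "log hours": (0.10, True)}),
]

for occ in occupations:
    print(f"{occ.name}: {automatable_share(occ):.0%} of time automatable")
print(summarize(occupations))
```

With these made-up inputs, one occupation in three clears the 90% bar while two in three have at least 30% of their time automatable; the point is only that a task-level share and an occupation-level count are different numbers.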

MARTIN FORD: I recall that when your report was published, the press put a very positive spin on it—suggesting that since only a portion of most jobs will be impacted, we don’t need to worry about job losses. But if you had three workers, and a third of each of their work was automated, couldn’t that lead to consolidation, where those three workers become two workers?

JAMES MANYIKA: Absolutely, that’s where I was going to go next. This is a task composition argument. It might give you modest numbers initially, but then you start to realize that work could be reconfigured in lots of interesting ways.

For instance, you can combine and consolidate. Maybe the tipping point is not that all of the tasks in an occupation need to be automatable; rather, when you get close to, say, 70% of the tasks being automatable, you may say, “Let’s just consolidate and reorganize the work and workflow altogether.” So, the initial math may begin with modest numbers, but when you reorganize and consolidate the work, the number of impacted jobs starts to get bigger.
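Martin’s three-workers example can be made concrete with a small calculation. It assumes, hypothetically, that the automated portion is the same for every worker, that the remaining work can be freely pooled across people, and that headcount rounds up to whole workers; real reorganizations are rarely this tidy. `Fraction` is used to avoid floating-point rounding at exact boundaries.

```python
import math
from fractions import Fraction


def workers_needed(workers: int, automated_share: Fraction) -> int:
    """Hypothetical headcount after pooling the non-automated work of `workers` people.

    Assumes each worker loses the same `automated_share` of their tasks and that
    the remaining tasks can be reassigned freely between workers.
    """
    remaining_work = workers * (1 - automated_share)  # in full-time equivalents
    return math.ceil(remaining_work)


print(workers_needed(3, Fraction(1, 3)))    # a third of each job automated -> 2
print(workers_needed(3, Fraction(1, 4)))    # a quarter automated, no full worker freed -> 3
print(workers_needed(10, Fraction(7, 10)))  # near the ~70% tipping point -> 3
```

The last case shows why consolidation, not the task share alone, drives the jobs number: under these assumptions, automating 70% of ten similar jobs leaves work for three people.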

However, there is yet another set of considerations we’ve looked at in our research at MGI that we think has been missing from some of the other assessments of the automation question. Everything we have described so far simply asks the technical feasibility question, which gives you those 50% numbers, but that is really only the first of about five questions you need to ask.

The second question is around the cost of developing and deploying those technologies. Obviously, just because something’s technically feasible, doesn’t mean it will happen.

Look at electric cars. It’s been demonstrated that we could build electric cars, and in fact that was feasible more than 50 years ago, but when did they actually show up? When the costs of buying, maintaining, and charging them became reasonable enough that consumers wanted to buy them and companies wanted to deploy them. That’s only happened very recently.

So, the cost of deployment is clearly an important consideration, and it will vary a lot depending on whether you’re talking about systems that replace physical work or systems that replace cognitive work. Typically, when you’re replacing cognitive work, it’s mostly software on a standard computing platform, so the marginal cost comes down pretty fast and deployment doesn’t cost very much.

If you’re replacing physical work, on the other hand, you need to build a physical machine with moving parts, and while the economics of those machines will improve, costs won’t come down as fast as they do for pure software. So, the cost of deployment is the second important consideration, and it starts to slow down the deployment rates that technical feasibility alone might suggest.

The third consideration is labor-market demand dynamics, taking into account labor quality and quantity, as well as the associated wages. Let me illustrate this with two different kinds of jobs: an accountant and a gardener. Let’s see how these considerations could play out in each occupation.

First, it is technically easier to automate large portions of what the accountant does, which is mostly data analysis, data gathering, and so forth, whereas it’s still technically harder to automate what a gardener does, which is mostly physical work in a highly unstructured environment. Things in these kinds of environments aren’t lined up exactly where you want them, as they would be in a factory, for example, and there are unforeseen obstacles that can get in the way. So, on our first question, the technical difficulty of automating the gardener’s tasks is already far higher than for the accountant’s.

Then we get to the second consideration: the cost of deploying the system, which goes back to the argument I just made. For the accountant, this requires software with near-zero marginal cost running on a standard computing platform. For the gardener, it’s a physical machine with many moving parts. Even as costs come down, and they are coming down for robotic machines, deploying a physical machine is always going to be more expensive than deploying the software to automate an accountant.

Now to our third key consideration: the quantity and quality of labor, and the wage dynamics. Here again, it favors automating the accountant rather than the gardener. Why? Because we pay a gardener, on average in the United States, something like $8 an hour, whereas we pay an accountant something like $30 an hour. The incentive to automate the accountant is already far higher than the incentive to automate the gardener. As we work our way through this, we start to realize that some of these low-wage jobs may actually be harder to automate, from both a technical and an economic perspective.
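The wage point is easy to quantify. This sketch uses the two average hourly wages quoted above and assumes a 2,000-hour working year (our assumption, not a figure from the interview); it shows only the yearly labor cost per worker that automation could at most displace, not whether automation would actually pay off.

```python
HOURS_PER_YEAR = 2_000  # assumption: a full-time year, not from the interview


def annual_labor_cost(hourly_wage: float, hours: int = HOURS_PER_YEAR) -> float:
    """Yearly wage bill for one full-time worker at the given hourly wage."""
    return hourly_wage * hours


gardener = annual_labor_cost(8)     # ~$8/hour, as quoted
accountant = annual_labor_cost(30)  # ~$30/hour, as quoted
print(f"gardener:   ${gardener:,.0f} per year")
print(f"accountant: ${accountant:,.0f} per year")
print(f"incentive ratio: {accountant / gardener:.2f}x")
```

At these wages, each accountant represents about 3.75 times as much yearly labor cost as each gardener, before even counting the fact that the accountant’s work is also cheaper and technically easier to automate.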

MARTIN FORD: This sounds like really bad news for university graduates.

JAMES MANYIKA: Not so fast. Often the distinction that’s made is high wage versus low wage; or high skill versus low skill. But I really don’t know if that’s a useful distinction.

The point I want to make is that the activities likely to be automated don’t line up neatly with traditional conceptions of wage structures or skill requirements. If the work being done looks mostly like data collection, data analysis, or physical work in a highly structured environment, then much of that work is likely to be automated, whether it’s traditionally been high wage or low wage, high skill or low skill. On the other hand, activities that are very difficult to automate also cut across wage structures and skill requirements, including tasks that require judgment or managing people, or physical work in highly unstructured and unpredictable environments. So, many traditionally low-wage and high-wage jobs are exposed to automation, depending on their activities, but many other traditionally low-wage and high-wage jobs may be protected from it.

I want to make sure we cover all the different factors at play here as well. The fourth key consideration has to do with benefits beyond labor substitution. There are going to be some areas where you’re automating not to save money on labor, but because you’re actually getting a better result, or even a superhuman outcome. Those are places where you’re getting better perception or predictions than you could get with human capabilities. Autonomous vehicles will likely eventually be an example of this, once they reach the point where they are safer and commit fewer errors than human drivers. When you start to go beyond human capabilities and see performance improvements, that can really speed up the business case for deployment and adoption.

The fifth consideration could be called societal norms, a broad term for the potential regulatory and social-acceptance factors we may encounter. A great example of this can be seen in driverless vehicles. Today, we already fully accept that on most commercial flights, a pilot is actually flying the plane less than 7% of the time; the rest of the time, the plane is flying itself. The reason no one really cares about that, even if it goes down to 1%, is that no one can see inside the cockpit. The door is closed, and we’re sitting in the cabin. We know there’s a pilot in there, but whether they’re flying or not doesn’t matter to us because we can’t see. Whereas with a driverless car, what often freaks people out is that you can actually look at the driver’s seat and see no one there; the car is moving on its own.

There’s a lot of research going on now into people’s social acceptance of, or comfort with, interacting with machines. Places like MIT are looking at social acceptance across different age groups, social settings, and countries. For example, in places like Japan, having a physical machine in a social environment is a bit more acceptable than in some other countries. We also know that different age groups are more or less accepting of machines, and that acceptance can vary across environments and settings. If we move to a medical setting, with a doctor who goes into the back room to use a machine, out of view, and then comes back with your diagnosis, is that okay? Most of us would accept that situation, because we don’t actually know what happened in the back room. But if a screen wheels into your room and a diagnosis just pops up, without a human there to talk you through it, would we be comfortable with that? Most of us probably wouldn’t be. So, we know that social settings affect social acceptance, and this is also going to affect where we see these technologies adopted and applied in the future.

MARTIN FORD: But at the end of the day, what does this mean for jobs across the board?

JAMES MANYIKA: Well, the point is that as you work your way through these five key considerations, you start to realize that the pace and extent of automation, and indeed the scope of the jobs that are going to decline, is actually a more deeply nuanced picture that’s likely to vary from occupation to occupation and place to place.

In our latest report at MGI, which considered the factors I just described, and in particular wages, costs, and feasibility, we developed a number of scenarios. Our midpoint scenario suggests that as many as 400 million jobs could be lost globally by 2030. This is an alarmingly large number, but as a share of the global labor force it is about 15%. It will be higher, though, in advanced countries than in developing countries, given the labor-market dynamics, especially wages, that we’ve been discussing.

However, all these scenarios are obviously contingent on whether the technology accelerates even faster, which it could. If it did, our assumption about “currently demonstrated technology” would go out the window. Further, if the costs of deployment come down even faster than we anticipate, that would also change things. That’s why we’ve got such wide ranges in the scenarios we’ve built for how many jobs would be lost.

MARTIN FORD: What about the “jobs gained” aspect?

JAMES MANYIKA: The “jobs gained” side of things is interesting because we know that whenever there’s a growing and dynamic economy, there will be growth in jobs and demand for work. This has been the history of economic growth for the last 200 years, where you’ve got vibrant, growing economies with a dynamic private sector.

If we look ahead to the next 20 years or so, there are some relatively assured drivers of demand for work. One of them is rising global prosperity, as more people around the world enter the consuming class and demand products and services. Another is aging; we know that aging is going to create a lot of demand for certain kinds of work, leading to growth in a whole host of jobs and occupations. There’s a separate question as to whether those will turn into well-paying jobs or not, but we know that the demand for care work and other things is going to go up.

At MGI we’ve also looked at other catalysts, like whether we’re going to ramp up adaptation for climate change, retrofitting our systems and our infrastructure—which could drive demand for work above and beyond current course and speed. We also know that if societies like the United States and others finally get their act together to look at infrastructure growth, and make investments in infrastructure, then that’s also going to drive demand for work. So, one place where work’s going to come from is a growing economy and these specific drivers of demand for work.

Another whole set of jobs is going to come from the fact that we’re actually going to invent new occupations that didn’t previously exist. One of the fun analyses we did at MGI, prompted by one of our academic advisors, Dick Cooper at Harvard, was to look at the Bureau of Labor Statistics occupational data. The BLS typically tracks about 800 occupations, and there’s always a line at the bottom called “Other.” This “Other” bucket typically reflects occupations that have not yet been defined in the current measurement period, often because they didn’t exist before, so the Bureau doesn’t have a category for them. If you had looked at the BLS list in 1995, for example, a web designer would have been in the “Other” category, because the occupation hadn’t been imagined yet and so hadn’t been classified. What’s interesting is that “Other” is the fastest-growing occupational category, because we’re constantly inventing occupations that didn’t exist before.

MARTIN FORD: This is an argument that I hear pretty often. For example, just 10 years ago, jobs involving social media did not exist.

JAMES MANYIKA: Exactly! If you look at 10-year periods in the United States, at least 8% to 9% of jobs are jobs that didn’t exist in the prior period, because we’ve created and invented them. That’s going to be another source of jobs; we can’t even imagine what those jobs will be, but we know they’ll be there. Some people have speculated that this category will include new types of designers, and people who troubleshoot and manage machines and robots. This undefined new set of jobs will be another driver of work.

When we’ve looked at the jobs gained and considered these different dynamics, then unless the economy tanks and there’s massive stagnation, the number of jobs gained is large enough to more than make up for the jobs lost. That holds unless, of course, some of the variables change catastrophically underneath us, such as a significant acceleration in the development and adoption of these technologies, or massive economic stagnation. With any combination of those things, yes, we’ll end up with more jobs lost than jobs gained.

MARTIN FORD: OK, but if you look at the employment statistics, aren’t most workers employed in pretty traditional jobs, such as cashiers, truck drivers, nurses, teachers, doctors, or office workers? These are all job categories that existed 100 years ago, and that’s still where the vast majority of the workforce is employed.

JAMES MANYIKA: Yes, a large chunk of the economy is still made up of those occupations. While some of these will decline, few will disappear entirely, and certainly not as quickly as some are predicting. In fact, one of the things we’ve looked at is where, over the last 200 years, we’ve seen the most massive job declines. For example, we studied what happened to manufacturing in the United States, and the shift from agriculture to industrialization. We looked at 20 different massive job declines in different countries and what happened in each, and compared them to the range of scenarios for job declines due to automation and AI. It turned out that the ranges we anticipate now are not out of the norm, at least over the next 20 years. Beyond that, who knows? But even with some very extreme assumptions, we’re still well within the range of shifts we have seen historically.

The big question, at least over the next 20 or so years, is whether there will be enough work for everybody. As we discussed, at MGI we conclude there will be, unless we get to those very extreme assumptions. The other important question we must ask ourselves is how big the transitions will be between the occupations that are declining and those that are growing. What level of movement will we see from one occupation to another, and how much will the workplace need to adjust and adapt to machines complementing people, as opposed to people losing their jobs?

Based on our research, we’re not convinced that on our current course and speed we’re well set up to manage those transitions in terms of skilling, educating, and on-the-job training. We actually worry more about that question of transition than about the “Will there be enough work?” question.

MARTIN FORD: So, there really is the potential for a severe skill mismatch scenario going forward?

JAMES MANYIKA: Yes, skill mismatch is a big one. Another is sectoral and occupational change, where people have to move from one occupation to another and adapt to higher, lower, or just different skills.

When you look at the transition in terms of sectors and geographic location, say in the United States, there will be enough work overall, but when you go down to the next level and look at the likely locations of that work, you see the potential for geographic mismatches, where some places look like they’ll be in more of a hole than others. These kinds of transitions are quite substantial, and it’s not clear that we’re ready for them.

The impact on wages is another important question. If you look at the likely occupational shifts, many of the occupations likely to decline have tended to be middle-wage occupations, like accountants. Many well-paying occupations have involved data analysis in one form or another, or physical work in highly structured environments, like manufacturing, and that’s where many of the declining occupations sit on the wage spectrum. Whereas many of the occupations that are going to grow, like the care work we just talked about, are occupations that, under today’s wage structures, don’t pay as well. These occupational mix shifts will likely cause a serious wage issue. We will need to either change the market mechanisms for how these wage dynamics work or develop some other mechanisms that shape the way these wages are structured.

The other reason to worry about the wage question comes from a deeper examination of the narrative many of us as technologists have been telling so far. When we say, “No, don’t worry about it. We’re not going to replace jobs; machines are going to complement what people do,” I think that is true. Our own MGI analysis suggests that 60% of occupations will have only about a third of their activities automated by machines, which means people will be working alongside machines.

But if we examine this phenomenon with wages in mind, it’s not so clear-cut because we know that when people are complemented by machines, you can have a range of outcomes. We know that, for example, if a highly skilled worker is complemented by a machine, and the machine does what it does best, and the human is still doing highly value-added work to complement the machine, that’s great. The wages for that work are probably going to go up, productivity will go up and it’ll all work out wonderfully well all round, which is a great outcome.

However, we could also have the other end of the spectrum, where a person is complemented by a machine that does only 30% of the work, but that 30% is all of the value-added portion. What’s left over for the human being is then deskilled or less complex. That can lead to lower wages, because now many more people can do tasks that previously required specialized skills or a certification. So, introducing machines into that occupation could potentially put pressure on its wages.

This idea of complementing work has a wide range of potential outcomes, and we tend to celebrate only one end of that spectrum and not talk as much about the other, deskilled, end. This, by the way, also increases the challenge of reskilling on an ongoing basis, as people work alongside ever-evolving and increasingly capable machines.

MARTIN FORD: A good example of that is the impact of GPS on London taxi drivers.

JAMES MANYIKA: Yes, that’s a great example, where the labor-supply-limiting portion was really “the Knowledge” of all the streets and shortcuts in the minds of London taxi drivers. When GPS systems devalue that skill, what’s left over is just the driving, and many more people can drive and get you from A to B.

Another example, of an older form of deskilling, is call center operators. It used to be that your call center person actually had to know what they were talking about, often at a technical level, in order to be helpful to you. Today, however, organizations have embedded that knowledge into the script that operators read. What’s left over, for the most part, is just someone who can read a script. They don’t really need to know the technical details, at least not as much as before; they just need to be able to follow the script, unless they hit a real corner case, which they can escalate to a deep expert.

There are many examples in service work and service technician work, whether through the call center or even people physically showing up to do on-site repairs, where some portions of that work are going through massive deskilling, because the knowledge is embedded in technology, in scripts, or in some other way of encapsulating the knowledge required to solve the problem. In the end, what’s left over is something much more deskilled.

MARTIN FORD: So, it sounds like overall, you’re more concerned about the impact on wages than outright unemployment?

JAMES MANYIKA: Of course you always worry about unemployment, because there is always a corner-case scenario that could play out and result in game over for us as far as employment is concerned. But I worry more about workforce transition issues, such as skill shifts, occupational shifts, and how we will support people through these transitions.

I also worry about the wage effects, unless we evolve how we value work in our labor markets. In a sense this problem has been around for a while. We all say that we value people who look after our children, and we value teachers; but we’ve never quite reflected that in the wage structure for those occupations, and this discrepancy could soon get much bigger, because many of the occupations that are likely to grow are going to look like that.

MARTIN FORD: As you noted earlier, that can feed back into the consumer-demand problem, which in itself dampens down productivity and growth.

JAMES MANYIKA: Absolutely. That would create a vicious cycle that further hurts demand for work. And we need to move quickly. Reskilling and on-the-job training are really important, first of all, because skills are changing pretty rapidly, and people are going to need to adapt just as rapidly.

We already have a problem. As we have pointed out in our research, if you look across most advanced economies at how much they spend on on-the-job training, that spending has been declining over the last 20 to 30 years. Given that on-the-job training is going to be a big deal in the near future, this is a real issue.

The other measure you can also look at is what is typically called “active labor-market supports.” These are things that are separate from on-the-job training and are instead the kind of support you provide workers when they’re being displaced, as they transition from one occupation to another. This is one of the things I think we screwed up in the last round of globalization.

With globalization, one can argue all day long about how it was great for productivity, economic growth, consumer choice, and products. All true, except when you look at globalization through the worker lens; then it’s problematic. What didn’t happen effectively was support for the workers who were displaced. Even though we know the pain of globalization was highly localized in specific places and sectors, it was still significant and really affected many real people and communities. If you and your 9 friends worked in apparel manufacturing in the US in 2000, a decade later only 3 of those 10 jobs still existed, and the same is true if you and your 9 friends worked in a textile mill. Take Webster County, Mississippi, where one third of jobs were lost due to what happened to apparel manufacturing, which was a major part of that community. We can say this will probably work out at an overall level, but that isn’t very comforting if you’re one of the workers in these particularly hard-hit communities.

If we say that we’re going to need to support both workers who have been, and those who are going to be, dislocated through these work transitions and will need to go from one job to another, or one occupation to another, or one skill-set to another, then we’re starting from behind. So, the worker transition challenges are a really big deal.

MARTIN FORD: You’re making the point that we’re going to need to support workers, whether they’re unemployed or they’re transitioning. Do you think a universal basic income is potentially a good idea for doing that?

JAMES MANYIKA: I’m conflicted about the idea of universal basic income in the following sense. I like the fact that we’re discussing it, because it’s an acknowledgment that we may have a wage and income issue, and it’s provoking a debate in the world.

My issue with it is that I think it misses the wider role that work plays. Work is a complicated thing because, while work provides income, it also does a whole bunch of other stuff. It provides meaning, dignity, self-respect, purpose, community and social effects, and more. Moving to a UBI-based society may solve the wage question, but it won’t necessarily solve these other aspects of what work brings. And I think we should remember that there will still be lots of work to be done.

One of the quotes that really sticks with me and I find quite fascinating is from President Lyndon B. Johnson’s Blue-Ribbon Commission on “Technology, Automation, and Economic Progress,” which incidentally included Bob Solow. One of the report’s conclusions is that “The basic fact is that technology eliminates jobs, not work.”

MARTIN FORD: There’s always work to be done, but it might not be valued by the labor market.

JAMES MANYIKA: It doesn’t always show up in our labor markets. Just think about care work, which in most societies tends to be done by women and is often unpaid. How do we reflect the value of that care work in our labor markets and discussions on wages and incomes? The work will be there. It’s just whether it’s paid work, or recognized as work, and compensated in that way.

I like the fact that UBI is provoking the conversation about wages and income, but I’m not sure it solves the work question as effectively as other things might do. I prefer to consider concepts like conditional transfers, or some other way to make sure that we are linking wages to some kind of activity that reflects initiative, purpose, dignity, and other important factors. These questions of purpose, meaning and dignity may in the end be what defines us.

JAMES MANYIKA is a senior partner at McKinsey & Company and chairman of the McKinsey Global Institute (MGI). James also serves on McKinsey’s Board of Directors. Based in Silicon Valley for over 20 years, James has worked with the chief executives and founders of many of the world’s leading technology companies on a variety of issues. At MGI, James has led research on technology and the digital economy, as well as growth, productivity, and globalization. He has published a book on AI and robotics and another on global economic trends, as well as numerous articles and reports that have appeared in business media and academic journals.

James was appointed by President Obama as vice chair of the Global Development Council at the White House (2012–16) and by Commerce Secretaries to the US Commerce Department’s Digital Economy Board of Advisors and the National Innovation Advisory Board. He serves on the boards of the Council on Foreign Relations, John D. and Catherine T. MacArthur Foundation, Hewlett Foundation, and Markle Foundation.

He also serves on academic advisory boards, including those of the Oxford Internet Institute and MIT’s Initiative on the Digital Economy. He is on the standing committee for the Stanford-based 100-Year Study on Artificial Intelligence, a member of the AIIndex.org team, and a fellow at DeepMind.

James was on the engineering faculty at Oxford University and a member of the Programming Research Group and the Robotics Research Lab, a fellow of Balliol College, Oxford, a visiting scientist at NASA’s Jet Propulsion Laboratory, and a faculty exchange fellow at MIT. A Rhodes Scholar, James received his DPhil, MSc, and MA from Oxford in Robotics, Mathematics, and Computer Science, and a BSc in electrical engineering from the University of Zimbabwe as an Anglo-American scholar.