CHAPTER FIVE

The Brain is Constantly Transforming

What makes our brain more or less predisposed to change?

Is it true that it’s much harder to learn things–like a new language or to play an instrument–when we are older? Why is it easy for some of us to learn music and so difficult for others? Why do we all learn to speak naturally, and yet many of us struggle with maths? Why is learning some things so arduous and others so simple?

In this chapter we will explore the history of learning, effort and virtue, mnemonic techniques, the drastic cerebral transformation that takes place when we learn to read, and our brain’s capacity for change.

Virtue, oblivion, learning, and memory

Plato tells of a stroll in fifth-century BC Athens during which Socrates and Menon heatedly discussed virtue. Is it possible to learn it? If so, how? In the midst of the debate, Socrates presents a phenomenal argument: virtue cannot be learned. What’s more, nothing can be learned. Each of us already possesses all knowledge. So learning actually means remembering.* This conjecture, so beautiful and bold, was implanted in different versions of Socratic teaching in thousands and thousands of classrooms across the world.

It’s strange. The great master of antiquity was questioning the more intuitive version of education. On his view, teaching does not transmit knowledge; rather, teachers help their students to express and evoke knowledge they already have. This argument is central to Socratic thought. According to Socrates, at each birth, one of the many souls wandering about in the land of the gods descends to confine itself within the newborn body. Along the way it crosses the River Lethe, where it forgets everything it knew. It all begins with oblivion. The path of life, and of pedagogy, is a constant remembering of that which we forgot when crossing the Lethe.

Socrates proposed to Menon that even the most ignorant of slaves already knows the mysteries of virtue and the most sophisticated elements of maths and geometry. When Menon showed his incredulity, Socrates did something extraordinary: he suggested resolving the discussion in the realm of experiments.

The universals of human thought

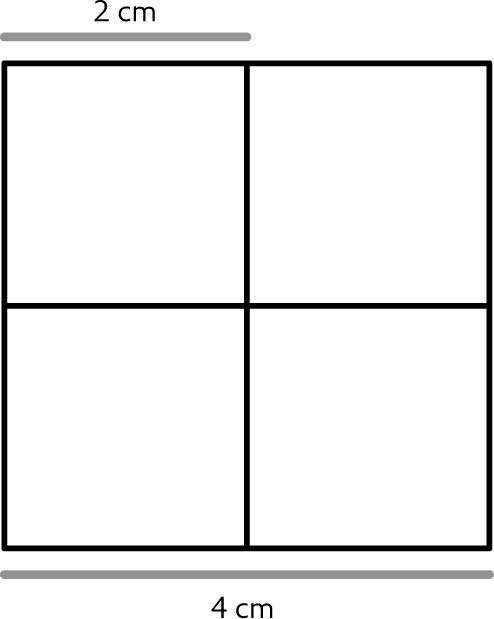

Menon then called over one of his slaves, who became the unexpected protagonist of a formidable landmark in the history of education. Socrates drew a square in the sand and fired off a volley of questions. Just as mathematical works are a record of the most refined and elaborate Greek thought, Menon’s slave’s answers revealed the popular intuitions, the common sense, of the period.

In the first key passage of the dialogue, Socrates asks: ‘How must I change the length of the sides so a square’s area doubles?’ Think of an answer quickly, make a hunch without getting into elaborate reflection. That is probably what the slave did when he responded: ‘I simply double the length of the sides.’ Then Socrates proceeded to draw the new square in the sand and the slave discovered that it was made up of four squares identical to the original one.

The slave then discovered that doubling the side of the square quadrupled its area. And Socrates continued his question-and-answer game. Along the way, by responding on the basis of what he already knew, the slave expressed the geometrical principles that he intuited. And he was able to learn from his own errors and correct them.

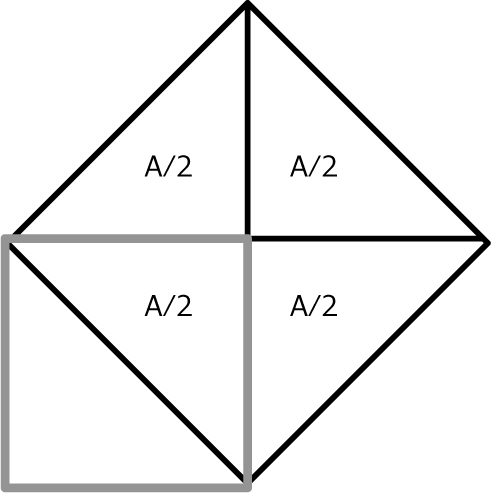

Towards the end of the dialogue, Socrates drew a new square in the sand, whose side was the diagonal of the original square.

And then the slave could clearly see that it was made up of four triangles, while the original was made up of only two.

‘Do you agree that this is the side of a square whose area is twice the original’s?’ asked Socrates.

To which the slave answered yes, thus sketching out the basis of the Pythagorean theorem, the quadratic relationship between the sides and the diagonal.

The dialogue concludes with the slave having grasped, just by answering questions, the basis of one of the most highly valued theorems in Western culture.

‘What do you think, Menon? Did the slave express any opinion that was not his own?’ asked Socrates.

‘No,’ replied Menon.
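For readers who like to see the algebra, the slave’s two answers can be written out in modern notation. This is only a sketch: the symbols s (the side of the original square) and d (its diagonal) are labels introduced here, not anything that appears in Plato’s text.

```latex
\begin{align*}
A_{\text{original}} &= s^2 \\
A_{\text{doubled side}} &= (2s)^2 = 4s^2 \\
d^2 &= s^2 + s^2 = 2s^2 \quad\Rightarrow\quad d = \sqrt{2}\,s \\
A_{\text{on diagonal}} &= d^2 = 2s^2 = 2\,A_{\text{original}}
\end{align*}
```

The first hunch overshoots by a factor of two, while the square built on the diagonal gives exactly the doubling that Socrates asked for.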

The psychologist and educator Antonio Battro understood that this dialogue was the seed of an unparalleled experiment into whether there are intuitions that persist over centuries and millennia. I undertook this task with my graduate student Andrea Goldin, a biologist. We posed Socrates’ questions to children, teenagers and adults and found that their responses, 2,500 years after the original dialogue, were almost identical. We are very similar to the Ancient Greeks:* we get the same things right and we make the same mistakes. This shows that there are ways of reasoning that are so deeply entrenched that they travel in time through cultures, changing little.

It doesn’t matter–here–whether the Socratic dialogue actually took place or not. Perhaps it was merely a mental simulation by Socrates, or by Plato. However, we did show that it is plausible for the dialogue to have happened just as it was written. When faced with the same questions, people respond–millennia later–just as the slave did.

My motivation for doing this experiment was to investigate the history of human thought and examine the hypothesis that simple mathematical intuitions expressed in fifth-century Athens could be identical to those expressed by twenty-first-century students in South America or elsewhere in the world.

Andrea’s motivation was different. Her drive was to understand how science can improve education–an ambition I learned by her side–and this led her to ask very different questions in the same experiment: was the dialogue really as effective as presumed? Is answering questions a good way to learn?

The illusion of discovery

In our experiment, Andrea proposed, once the dialogue was finished, to show each student a new square of a different colour and different size and ask them to use it to generate a new one with twice the area. It seemed to me that the task was too easy; it couldn’t be exactly the same as what had been taught. So I suggested we test what they’d learned in a more demanding way. Could they extend the rule to new shapes; for example, a triangle? Could they generate a square whose area was half–instead of double–the original square?

Luckily Andrea stood her ground. As she had supposed, a large number of the participants–almost half, in fact–failed the simpler test. They couldn’t replicate what they believed they had learned. What happened?

The first key to this mystery has already appeared in this book; the brain, in many cases, has information that it cannot express or evoke explicitly. It is like having a word on the tip of your tongue. So the first possibility is that this information was effectively acquired through the dialogue but not in a way that can be used and expressed.

An example in daily life can help us to understand the mechanisms in play. Someone is given a lift time after time to the same destination. One day they have to take the wheel to follow the route they have been driven along thousands of times and find out they don’t know where they’re going. This doesn’t mean that they didn’t look at the route, or weren’t paying attention. There is a process of consolidating knowledge that needs practice. This argument is central throughout the problem of learning; it is one thing to assimilate knowledge per se and another to assimilate it in order to be able to express it. A second example is the learning of technical skills, like playing the guitar. We watch the teacher, we see clearly how he or she articulates their fingers in order to make a chord, but, when it is our turn, we are unable to do the same thing.

The analysis of the Socratic dialogue shows that just as extensive practice is needed to learn procedures (playing an instrument, reading or riding a bike), it is also necessary for conceptual learning. But there is a crucial difference. In learning an instrument we recognize immediately that seeing is not enough to learn. Yet with conceptual learning both the teacher and the student feel that a well-sketched-out argument can be taken in without difficulty. That is an illusion. In order to learn concepts, meticulous practice is required, just as when learning to type.

Our further exploration of Menon’s dialogue revealed a pedagogical disaster. The Socratic process turns out to be very gratifying for the teacher. The students’ response seems highly successful. But when the class is put to the test, the result is not always so promising. My hypothesis is that this educational process sometimes fails for two reasons: the lack of practice in using the acquired knowledge and the focus of attention, which should not be placed on small fragments of facts that are already known but rather on how to combine them to produce new knowledge. We have already sketched out the first argument and we will explore it in further depth over the next few pages. A concise example of the second can be found in educational practice.

Beyond demographic, economic and social factors–which are, of course, decisive–there are countries in which mathematical teaching works better than in others. For example, in China students learn more than is expected–based on the GDP and other socioeconomic variables–and in the United States less. What explains this difference?

In the United States, teachers solving a complex multiplication like 173 × 75 on the blackboard usually ask the children things they already know: ‘How much is 5 × 3?’ And they all, in unison, answer: ‘Fifteen.’ It is gratifying because the entire class gets the right answer. But the trap lies in the fact that the children were not taught the only thing they didn’t know, the path. Why start with 5 × 3 and then do 5 × 7, and not the other way around? How should they combine this information and how do they establish a plan for being able to resolve the other steps in the problem 173 × 75? This is the same error we found in the Socratic dialogue. Menon’s slave would never have drawn the diagonal on his own. The big secret to solving this problem is not in realizing, once the diagonal has been drawn, how to count the four triangles. The key is in how to get the student to come up with the idea that the solution requires thinking of the diagonal. The pedagogical error lies in bringing the student’s attention to fragments of the problem that had already been solved.

In China, on the other hand, in order to learn to multiply 173 × 75, the teacher asks: ‘How do you think this is solved? Where do we start?’ This, first of all, takes the students out of their comfort zone, inquiring about something they do not know. They have to establish how to break down this complex calculation into a series of steps: first multiply 5 × 3 and write down the result, then multiply 5 × 70, and so on. Secondly, it leads them to make an effort and, eventually, to make mistakes. The two teaching methods coincide in that they are based on questions. But one asks about already known fragments and the other about the path that unites the fragments.
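To make ‘the path’ concrete, here is a minimal sketch of how 173 × 75 breaks down into known fragments and how those fragments are then combined. The function names `place_values` and `multiplication_path` are hypothetical labels chosen for this illustration, and the order of the steps (units first) is simply the one described in the text, not the only possible one.

```python
def place_values(n: int) -> list[int]:
    """Split a non-negative integer into place values, lowest first.

    For example, 173 becomes [3, 70, 100].
    """
    return [int(ch) * 10**i for i, ch in enumerate(reversed(str(n)))]


def multiplication_path(a: int, b: int) -> list[tuple[int, int, int]]:
    """List every small multiplication needed for a x b, in order.

    Each step is (part of b, part of a, their product).
    """
    steps = []
    for y in place_values(b):        # 5, then 70
        for x in place_values(a):    # 3, then 70, then 100
            steps.append((y, x, y * x))
    return steps


path = multiplication_path(173, 75)
for y, x, product in path:
    print(f"{y} x {x} = {product}")

# Combining the fragments is the part the children were never shown.
total = sum(product for _, _, product in path)
print(f"173 x 75 = {total}")  # prints "173 x 75 = 12975"
```

Each line printed by the loop is a fragment the class already knows how to answer; the nested loops and the final sum are the plan that connects them.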

Learning through scaffolding

In our investigation into the contemporary responses to Menon’s dialogue we found something odd. Those who followed the dialogue to the letter learned less. On the other hand, those who skipped over some questions learned more. The odd thing is that more teaching–more of the dialogue–favoured less learning. How can we resolve this enigma?

We found the answer in a research programme carried out by the psychologist and educator Danielle McNamara in order to decipher a text’s legibility. Her project, vastly influential in the worlds of academia and educational practice, shows that the most pertinent variables are not the ones you would expect, such as attention, intelligence and effort. The most decisive, in fact, was what the reader already knew about the subject before starting.

This led us to a very different reasoning from the one any of us would have naturally sketched out in the classroom; learning doesn’t fail because of distraction or lack of attention. In fact, students with almost no prior knowledge can follow the dialogue with great concentration, but their attention is focused on each step, on the trees and not the forest; while those students whose knowledge already brings them close to the solution will not need so much concentration to follow the dialogue.

So Andrea and I sketched out a seemingly paradoxical hypothesis: those who pay more attention learn less. In order to test it out we created a pioneering experiment, the first simultaneous recording of cerebral activity while one person was teaching and another was learning.

The results were conclusive. Those who learned less activated their prefrontal cortex more, which is to say, they made more effort. To such an extent that, by measuring cerebral activity during the dialogue, we could predict whether a student would later pass the exam.

Of course, it is not always true that paying more attention means learning less. With equal prior knowledge, those who pay more attention achieve greater results. But in this dialogue–and so many others in school–it turns out that effort is inversely related to prior knowledge. Those with less knowledge follow the dialogue step by step, in detail. Yet those who are able to skip over whole parts can do so because they already know many of the fragments. The path is well learned only when one can follow it, without needing to stop at every step.

This idea is closely linked to the zone of proximal development, a concept introduced in the 1920s by the great Russian psychologist Lev Vygotsky, which made such an impact on pedagogy. Vygotsky argued that there must be a reasonable distance between what students can do for themselves and what a mentor demands of them. Later in the book we will revisit this idea when looking at how to lessen the gap between teachers and students by having the children themselves act as mentors. But at this point I want to leap through another window that was opened in the minute analysis of the Socratic dialogue: learning, effort and leaving one’s comfort zone.

Effort and talent

We intuit that the few people who learn to play the guitar like a rock star such as Prince* do so based on a certain mix of biological and social factors. But to understand how these elements interact with each other and, above all, how to use that knowledge better to learn and teach, we need to divide this general concept into smaller sections.

The idea that genetic factors determine the maximum skill that each of us can achieve is very deep-seated. In other words, anyone can learn music or football to a certain level, but only a few virtuosos can reach the level of João Gilberto** or Lionel Messi. Great talents are born, not made. They were touched with a magic wand, they have a gift.

This idea that we all go through a similar educational trajectory, but the ceiling depends on a biological predisposition, was coined and sketched in 1869 by Francis Galton, one of the most versatile and prolific British scientists. The clearest example appears when the predisposition is a body trait. For example, becoming a professional basketball player is much more likely if you are tall. It is hard to become a great tenor without having been born with the proper vocal apparatus.

Galton’s idea is simple and intuitive but doesn’t coincide with reality. When we investigate in detail how great experts learned what they know, and avoid the temptation to draw general conclusions based more on myth than on evidence, it turns out that both premises of Galton’s argument are wrong. The upper limit of learning is not so genetically based, nor is the path towards that upper limit so independent of genetics. Genetics are involved in both parts, but not decisively in either.

Ways of learning

The great neurologist Larry Squire sketched a taxonomy that divides learning into two large categories. Declarative learning is conscious and can be explained in words. A good example of this is learning the rules of a game; once the instructions are learned, they can be taught (declared) to a new player. Nondeclarative learning includes skills and habits that are usually achieved without the learner being aware of the process. These are types of knowledge that would be difficult to make explicit in the form of language, such as by explaining them to someone else.

The more implicit ways of learning are, in fact, so unconscious that we don’t even recognize that there was something to learn: for example, learning to see. We can easily identify that a face expresses an emotion but we are unable to declare this knowledge in order to make machines that can emulate this process. Our ability to see is innate in most people. So much so that the inverse of this naturalness of the gaze has poetic strength. The Uruguayan author Eduardo Galeano wrote: ‘And the sea was so immense, so brilliant, that the boy was struck dumb by its beauty. And when he finally managed to speak, trembling, stuttering, he asked his father: “Help me see!”’ A similar thing happens when learning to walk or keep our balance. These faculties are so well-incorporated that it seems they’ve always been there, that we never had to learn them.

These two categories are useful when exploring the vast space of learning. However, it is equally important to understand that they are inevitably abstractions and exaggerations; almost all learning in real life is part declarative and part implicit.

For example, learning to walk is an implicit and procedural form of learning: it doesn’t require instructions or explanations, and it’s learned slowly and after a lot of practice. Yet there are many aspects which can be consciously controlled. The same thing happens with breathing, which is fundamentally an unconscious process. It would not be sensible to delegate to each of our distracted free wills something that would be fatal if forgotten. But, to a certain point, we can control our breathing consciously: its rhythm, volume and flow. And it is breathing, the bodily function that spans the conscious and the unconscious, that is used as a universal bridge in meditative practices and other exercises to learn to direct one’s consciousness to new places.

Establishing this bridge between the implicit and the declarative turns out to be, as we will see, a key variable in every form of learning.

The OK threshold

A fundamental concept for understanding how much we can improve is called the OK threshold, the level at which everything feels fine. People engaged in learning to type, for example, begin by searching out each letter with their eyes, exerting great effort and concentration. Like Menon’s slave, they pay attention to each step. But later it seems as if their fingers have a life of their own. When we touch-type, our brains are somewhere else, reflecting on the text, talking to someone else or daydreaming. What’s curious is that once we have reached this level of ability, despite typing for hours and hours, we no longer improve. In other words, the learning curve grows until it reaches a value where it stabilizes. Most people reach speeds close to sixty words per minute. But, of course, this value is not the same for everyone; the world record is held by Stella Pajunas, who managed to type at the extraordinary pace of 216 words per minute.

This example seems to confirm Galton’s argument; he maintained that each of us reaches our own inherent ceiling. Yet by doing methodical, sustained exercises to increase our speed, all of us can improve substantially. What happens is that we stagnate very far from our maximum performance, at a point at which we benefit from what we’ve learned but we do not generate further learning, a comfort zone in which we find a tacit balance between the desire to improve and the effort that would require. This point is the OK threshold.

The history of human virtue

What happens in the example of typing speed occurs with almost everything we learn in life. One example that most of us experience is reading. After years of intense effort at school, many of us achieve reading quickly and with little effort. We read more and more books, without increasing our reading speed. Yet if any of us revisited a methodical, sustained process, and devoted time and effort, we could significantly increase our speed without losing comprehension along the way.

The narrative of learning in each of our life cycles is replicated in the history of culture and sport. In the early twentieth century, the fastest runners of the times achieved the extraordinary feat of running a marathon in two and a half hours. In the early twenty-first century, this time isn’t enough to qualify for the Olympics. This is not limited to sports, of course. Some compositions by Tchaikovsky were technically so difficult that in his day they were never played. The violinists of the period thought that they were impossible. Today they are still considered challenging but there are many violinists who can play them.

Why is it that we can now achieve feats that years earlier were impossible? Is it that, as Galton’s hypothesis suggests, our constitution–our genes–changes? Of course not. Human genetics, over those hundred years or so, has remained essentially the same. Is it because technology has radically changed? Again, the answer is no. Perhaps that would be a valid argument for some disciplines, but a marathon runner with trainers from a hundred years ago–and even barefoot–could today achieve times that were once impossible. Likewise, a contemporary violinist could now play Tchaikovsky’s works with period instruments.

This deals a fatal blow to Galton’s argument. The limits of human performance are not genetic. Violinists today manage to play those pieces because they can devote more hours to their practice, because the point at which they feel the goal is accomplished has changed, and because they have better training procedures. This is good news; it means that we can build on these examples to attain goals that today are inconceivable.

Fighting spirit and talent: Galton’s two errors

When we judge athletes we usually separate their competitive spirit from their talent as if they were two different substrata. There are the Roger Federers of the world–who have talent–and the Rafael Nadals–who are mainly driven by intense competitive spirit to the extent that they leave their bodies and souls on the court. A typical observer views those with innate talent with a distant respect that denotes admiration for a gift, a competitor’s divine privilege. Fighting spirit, on the other hand, feels more human because it is associated with will and the feeling that we could all achieve it. This is Galton’s hunch: the gift, the ceiling of talent, is innate, and fighting spirit, the path to advancement through learning, is available to all of us. Both of these conjectures, however, are wrong.

In fact, the ability to give one’s all out on the pitch is perhaps one of the elements most determined by genetic makeup. It is an element of temperament, a broad term covering personality traits including emotiveness and sensitivity, sociability, persistence and focus. In the mid-twentieth century, an American child psychiatrist, Stella Chess, and her husband, Alexander Thomas, began a tour de force study that would be a landmark in the science of personality. As in a film by Richard Linklater, they meticulously followed the development of hundreds of children from different families, from the day they were born through adulthood. They measured nine traits of temperament:

(1) Their activity levels and types.

(2) The degree of regularity in their diet, and sleeping and waking habits.

(3) Their willingness to try something new.

(4) Their adaptability to changes in the environment.

(5) Their sensitivity.

(6) The intensity and energy level of their responses.

(7) Their general mood–happy, weepy, pleasant, nasty or friendly.

(8) Their degree of distraction.

(9) Their persistence.

They found that while these traits were not immutable, they at least persisted to a striking extent throughout their development. And, what’s more, they were expressed clearly and precisely in the first days of life. Over the last fifty years, this foundational study by Chess and Thomas has been continued with a multitude of variations. The conclusion is always the same: a significant portion of the variance–between 20 and 60 per cent–in temperament is explained by the genetic package we are born with.

If genes explain more or less half of our temperament, the other half is explained by the environment and social petri dish in which we develop. But which specific elements of our environment? For almost all cognitive variables, the most decisive factor is the home a child grows up in. Siblings are similar not only because they carry similar genes but also because they develop on the same playing field. But there are exceptions. Different studies on adoptions and twins show that the home contributes very little to the development of some aspects of temperament.

Searching for the nature of human altruism, an Austrian behavioural economist, Ernst Fehr, has shown this quite conclusively for one of the foundational traits of temperament: the predisposition to sharing. When children choose between keeping two toys or sharing them equally with a friend–throughout diverse cultures, different continents and different socioeconomic strata–the younger sibling is usually less predisposed to share. In retrospect, this seems natural; the younger one was raised according to ‘if you don’t ask you don’t get’. When the younger siblings get something, they keep it for themselves in their jungle filled with older predators. All parents with more than one child recognize that the anxiety, fragility and above all ignorance in which a first child is raised are not repeated. As a result of this, some social aspects of young children, such as their disposition to share, do not depend so much on the home experience but are rather learned on other playing fields of life.

Our excursion to the science of temperament sheds light on why Galton’s intuition–which persists today as a very popular myth–is wrong. It certainly feels as if we could all potentially attain the ability to give one’s all–as opposed to talent, which feels like a natural gift that only very few have. But in the list of temperament traits that vary little through our lives, we find the main ingredients for giving one’s all: the intensity and the energy of the responses, the general mood, the degree of distraction, the capacity to persist and the intensity of basal activity. And with this we can understand why the capacity of giving one’s all is an ability that varies widely across individuals and is quite difficult to change.

This explanation is mostly based on the work of Stella Chess and Alexander Thomas, who have meticulously observed the persistence and malleability of different personality traits that make us what we are. We still need to understand what specific aspects of our biological constitution, of our genes and of our brains, regulate the ability to give one’s all. The answer to this question is, in my opinion, far from being complete. But we will see later in this chapter that it is closely related to aspects of the motivation and reward system that define temperament and are a gateway to learning.

Now we must topple the opposite myth. What we perceive as talent is not an innate gift but rather, almost always, the fruit born of hard work. Let’s use an emblematic case to defend this argument: perfect pitch, the ability to recognize or produce a musical note without any point of reference. Perfect pitch is one of the most widely recognized cases of a gift of talent. Somebody with this aptitude is usually considered a musical genius, so out of the ordinary as to be viewed as some sort of mutant, like an X-Man of music, gifted with a genetic package that gives them this unusual virtue. Once again, a lovely idea … but erroneous, a myth.

Perfect pitch can be trained, and almost everyone can achieve it. In fact, most children have almost perfect pitch, but without practice it atrophies. And children who begin training at a conservatory at a young age have a very high incidence of perfect pitch. Once again, this is not due to genius but to hard work. Diana Deutsch, one of the finest researchers on music and the brain, made an extraordinary discovery: people who live in China and Vietnam have a much higher predisposition to perfect pitch. What is the origin of this peculiarity? It turns out that in Mandarin and Cantonese, as well as in Vietnamese, words change their meaning based on tone. So, for example, in Mandarin the sound ‘ma’ pronounced in different tones can mean mother or horse and, if that weren’t confusing enough, it also means marijuana. So tone has an absolute value–as much as the musical note F is different from D or G–and there is a higher motivation for learning this relationship between a particular tone and the meaning it represents in China–to distinguish mother from horse, for example–but not as much in other parts of the world. Therefore, the motivation and pressure exerted by the language extend to music in something that ends up being much less sophisticated and less revealing of genetics and geniuses than it seems.

The fluorescent carrot

While I was completing my doctorate in New York, a group of friends and I played an absurd game. We tried to control the temperature of our fingertips, which isn’t the most exciting achievement in the world, but does demonstrate an important principle, namely that we can voluntarily regulate certain aspects of our physiology that are seemingly inaccessible. We were, in the fantasy of those moments, students of Charles Xavier at the school of young mutants.

With a thermometer on my fingertip, I observed that the temperature fluctuated between 31 and 36 degrees Celsius. Then I tried to raise that temperature. Sometimes it rose and sometimes it didn’t. These variations were spontaneous and random, and showed that, in spite of my wish to do so, I couldn’t control them. However, after two or three days of practice something astonishing happened. I managed to manipulate the thermometer at will, although imprecisely. Two days later, I had perfect control. I could control the temperature of my fingertip just by using my thoughts. Anyone can do it. This learning process is mysterious because it is not declarative. It is likely that I learned to relax my hand, thus changing the blood flow and controlling the temperature. But I couldn’t–and still can’t–precisely explain in words what exactly it was that I learned.

This innocent game reveals a fundamental concept for many of the brain’s learning mechanisms. For example, when trying to move the arm for the first time to reach something, an infant explores a large repertoire of neuronal commands. Some, coincidentally, turn out to be effective. And here is the first key point: in order to select efficient commands one must visualize their consequences. Later this mechanism becomes more refined and the baby has no need to rehearse all of the neuronal commands. For the ones that have been selected, the brain generates an expectation of success, which allows learners to simulate the consequences of their actions without having to carry them out, like football players who don’t run after the ball because they know they can’t reach it.

And therein lies the second key point in learning, known as prediction error, which we have already touched on in Chapter 2. The brain calculates the difference between what is expected and what is in fact achieved. This algorithm allows us to refine the motor programme and, with that, achieve a much more precise control over our actions. That is how we learn to play tennis or an instrument. This learning mechanism is so efficient that it became common currency in the world of automatons and artificial intelligence. A drone literally learns to fly, and a robot to play ping-pong, using this procedure, which is as simple as it is effective.
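The idea of learning from prediction error can be illustrated with a toy example. What follows is a minimal sketch using a simple delta rule, which is a common textbook formalization and not necessarily what the brain, a drone or a ping-pong robot actually implements. The function name `update_expectation`, the learning rate of 0.3 and the outcome value of 1.0 are all assumptions made for the illustration.

```python
def update_expectation(expected: float, achieved: float,
                       learning_rate: float = 0.3) -> float:
    """Move the expectation a fraction of the way towards what was achieved."""
    prediction_error = achieved - expected   # the gap between outcome and expectation
    return expected + learning_rate * prediction_error


expected = 0.0   # hypothetical starting expectation
achieved = 1.0   # hypothetical outcome that is actually observed on each trial
history = []     # size of the prediction error before each update

for trial in range(10):
    history.append(abs(achieved - expected))
    expected = update_expectation(expected, achieved)

for trial, error in enumerate(history, start=1):
    print(f"trial {trial}: prediction error = {error:.3f}")
```

With these settings the error shrinks on every trial, because each update removes 30 per cent of the remaining gap. A larger learning rate closes the gap faster but, in noisier settings than this one, would also make the expectation jump around more.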

In the same way, we can learn to control all sorts of devices with our thoughts. In a not-so-distant future, the projection of this principle will generate a landmark in the history of humanity. The body might no longer be necessary as an intermediary. It would be enough to want to call someone for a device to decode the gesture and carry it out without hands or voices, without a mediating body. In the same way, we can extend the sensory landscape. The human eye is not sensitive to colours beyond violet, but there is no essential limit to this. Bees, for example, get to see in the ultraviolet world. We can use photographic techniques to mimic that world, but the resulting colours are only approximations of what a bee might see. Bats and dolphins also hear sounds that are inaudible to our ears. Nothing stands in the way of us someday connecting electronic sensors capable of detecting this vast portion of the universe that today is opaque to our senses. We can also impregnate ourselves with new senses. For example, having a compass directly connected to the brain in order to allow us to feel the north in the same way we feel the cold. The mechanism for achieving it is essentially the same as the one I described in the innocuous game of the fingertip temperature. The only difference is the technology.

This learning procedure requires being able to visualize the consequences of each neuronal instruction. So by increasing the range of things we visualize, we also widen the number we learn to control. Not only in terms of external devices but also in our inner world, in our own body.

Controlling the temperature of your fingertip with your will is undoubtedly a trivial example of this principle, but it sets an extraordinary precedent. Is it possible for us to train the brain to control aspects of our bodies that seem completely detached from our consciousness and from the realm of things we can exert our will on? What if we could visualize the state of our immune system? What if we could visualize states of euphoria, happiness or love?

I would venture to say that we will be able to improve our health when we manage to visualize aspects of our physiology that are currently invisible to us. This already is the case in a few specific areas. For example, it is now possible to visualize the pattern of cerebral activity that corresponds to a state of chronic pain and, based on this visualization, control and lessen it. This could be taken much further, and we would be able to regulate our defence system in order to overcome diseases that now seem insurmountable. Research could be focused on this fertile territory for what today seems like miraculous healing but in the future could be visualized and made standard.

The geniuses of the future

The myth of genetic talent is based on rare cases and exceptions, on stories and photos that show precocious geniuses with their innocent youthful faces rubbing elbows with the big names of the world’s elite. The psychologists William Chase and Herbert Simon toppled this myth by closely investigating the progression of the great chess geniuses. None of them achieved an exceptionally high level of skill before having completed 10,000 hours of training. What was perceived as precocious genius was shown to be based, in fact, upon intensive and specialized training from a very young age.

The vicious circle functions more or less like this: the parents of little X convince themselves that their offspring is a violin virtuoso and they give the child the confidence and motivation to practise, and, therefore, X improves greatly, to the point of seeming talented. Acting as if someone has talent is an effective way to get them actually to have it. It seems to be a self-fulfilling prophecy. But it is much more subtle than the mere psychological configuration of ‘I think, therefore I am.’ The prophecy produces a series of processes that catalyse the more difficult aspect of learning: tolerating the tedium of the effort involved in deliberate practice.

All this comes up hard against the more extreme exceptions. What do we do with what seems obvious? For example, that Messi was already an indisputable football genius from a very young age. How do we match up the detailed analysis of development by experts with what our intuition tells us?

Firstly, the argument of effort does not rule out the existence of a certain innate condition.* But, in addition, believing that he wasn’t an expert at eight years old is the beginning of the wrongheaded thinking. At that age, Messi already had more football experience than most people on the planet. The second consideration is that there are hundreds–thousands–of children who do extraordinary things with a ball. But only one of them grew up to be Leo Messi. The error is in supposing that we can predict which children will be the geniuses of the future. The psychologist Anders Ericsson, with careful monitoring of the education of virtuosos in various disciplines, proved that it is almost impossible to predict how much an individual might achieve based on performance in the early stages. This final blow to what we think we know about nurturing talent and effort is very revealing.

The expert and the novice use completely different systems of resolution and cerebral circuits, as we will see. Learning how to do something skilfully is not about improving the cerebral machinery with which we would originally resolve it. The solution is much more radical: replacing it completely for one with different mechanisms and idiosyncrasies. This idea was first hinted at by the celebrated study that Chase and Simon made of expert chess players.

A circus trick that some great chess players practise from time to time is playing games with their eyes closed. Some are capable of extraordinary feats. Miguel Najdorf played on forty-five different boards simultaneously while blindfolded. He won thirty-nine games, drew four and lost two, breaking the world record for simultaneous games.

In 1939 Najdorf had travelled to Argentina to participate in a Chess Olympiad, representing Poland. Najdorf was Jewish, and as the Second World War had broken out while he was away, he decided not to come back to Europe. His wife, son, parents and four brothers died in a concentration camp. In 1972, Najdorf explained the personal reasons behind his remarkable deed of playing forty-five boards: ‘I didn’t do it for the fun of pulling it off. I had the hope that this news would reach Germany, Poland, and Russia, and that one of my family members would read it and get in touch with me.’ But no one did. The greatest human feats are, in the end, a struggle against loneliness.*

There were 1,440 pieces in play on 45 boards; 90 kings, 720 pawns. Najdorf followed them all in unison to lead his 45 armies, split between black and white, with his eyes covered. Of course, he must have had an extraordinary memory, and have been a very special, unique person with a true gift to be able to do this. Or was he?

A grandmaster, just by looking at a diagram of a chess match for a few seconds, can reproduce it perfectly. Without any effort, as if their hands were working on their own steam, the chess master can place the pieces exactly where the diagram indicates they should be. Yet a person who doesn’t know chess, when faced with this same exercise, would barely remember the position of four or five pieces. It would indeed seem that chess players have much better memories. But that is not the case.

Chase and Simon proved this by using diagrams with pieces spread out randomly on the table. Under those conditions, the masters only remembered, like everyone else, a few pieces. Chess players do not have extraordinary memories but rather the ability developed through practice to create a narrative–visual or spoken–for an abstract problem. This discovery does not only hold true for chess, but for any other form of human knowledge. For example, anyone can remember a Beatles song but will have difficulty recalling a sequence made up of the same words but presented randomly. Now try to remember this same sentence that is long but not complex. And this one: sentence but same long complex try now not remember this that. The song is easy to remember because the text and the music have a narrative. We do not remember it word by word but rather we remember the path the words comprise.

Heirs to Socrates and Menon, Chase and Simon hit upon the key to establishing the path to virtue and knowledge. And the secret, as we will see, consists in recycling old brain circuits to adapt themselves to new functions.

Memory palace

Mnemonic skill is often confused with genius. Someone who juggles with their hands is skilful, but someone who does so with their memory seems to be a genius. And yet, they are not really all that different. We learn to develop a prodigious memory the same way we learn to play tennis, with the recipe we’ve already discussed: practice, effort, motivation and visualization.

When books were rare objects, all stories were disseminated orally. To keep a story from vanishing, people had to use their brains as memory repositories. So, out of necessity, many were seasoned at learning by rote. The most popular mnemonic technique, called ‘the memory palace’, was created in that period. It is attributed to Simonides, the Greek lyric poet from the island of Ceos. The story goes that Simonides had the luck to be the only survivor of the collapse of a palace in Thessaly. The bodies were all mutilated, so it was almost impossible to recognize them and bury them properly. All they had was Simonides’ memory. And he realized, to his surprise, that he could vividly recall the exact place where each of the guests had been sitting when the building collapsed. As a result of this tragedy he had discovered a fantastic technique, the memory palace. He understood that he could remember any arbitrary list of objects if he visualized them in his palace. This was, in fact, the beginning of modern mnemotechnics.

With his palace, Simonides identified an idiosyncratic trait of human memory. The technique works because we all have a fabulous spatial memory. To prove this, you only need to think of how many maps and routes (through towns or people’s homes, of buses, through cities and buildings) you can recall with no effort whatsoever. This original seed of a discovery came to fruition in 2014, when John O’Keefe and the Norwegian couple May-Britt and Edvard Moser won the Nobel Prize in Physiology or Medicine for finding a system of coordinates in the hippocampus that articulates this formidable spatial memory. It is an age-old system that is even more refined in small rodents–who are extraordinary navigators–than in our mastodon minds. Situating ourselves in space has always been necessary, unlike remembering countries’ capitals, numbers and other things, which our brains never evolved to do.

Here we see an important idea. An ideal way for us to adapt to new cultural needs is by recycling brain structures that evolved in other contexts to fulfil other functions. The memory palace is a very paradigmatic example. We all struggle to remember numbers, names or shopping lists. But we can easily recall hundreds of streets, the nooks and crannies of our childhood homes, or the houses of our friends when we were growing up. The secret of the memory palace is establishing a bridge between those two worlds, what we want to remember, but find it difficult to, and space, where our memory is right at home.

Read this list and take thirty seconds to try to remember it: napkin, telephone, horseshoe, cheese, tie, rain, canoe, anthill, ruler, tea, pumpkin, thumb, elephant, barbecue, accordion.

Now close your eyes and try to repeat it in the same order. It seems difficult, almost impossible. Yet someone who has built their palace–which requires a few hours of work–can easily remember a list like this. The palace can be open or closed, an apartment building or a house; then what you have to do is go through each room and place, one by one, each of the objects on the list. You have to do more than just name them. In each room you have to create a vivid image of the object in that place. The image must be emotionally powerful, perhaps sexual, violent or scatological. The unusual mental stroll, in which we peek into each room and see the most bizarre images featuring those objects in our own palace, will persist in our memories much more than the words.

So a prodigious memory is based on finding good images for those objects we wish to remember. The task of memorizing is somewhere between architecture, design and photography, all creative tasks. Memory, which we tend to perceive as a rigid and passive aspect of our thought, is actually a creative exercise.

In short, improving our memories doesn’t mean increasing the space in the drawer where the memories are kept. The substratum of memory is not a muscle that grows with exercise and is strengthened. When technology made it possible, Eleanor Maguire confirmed this premise by investigating the very factory of memories. She discovered that the brains of the great champions of memory are anatomically indistinguishable from anyone else’s. Nor were those champions more intelligent or better at remembering things outside of the realm they had studied, just like virtuoso chess players. The only difference was that the prodigious memorizers use the spatial structures of their memories. They have managed to recycle their spatial maps in order to remember arbitrary objects.

The morphology of form

One of the most spectacular cerebral transformations occurs when we learn to see. This happens so early in our lives that we do not have any memory of how we perceived the world before seeing. From a stream of light, our visual system manages to identify shapes and emotions in a tiny fraction of a second, and what is even more extraordinary is that it happens without any sort of effort or conscious realization that something must be done. But converting light into shapes is so difficult that we have yet to create machines that can do it. Robots go into outer space, play chess better than the greatest masters, and fly aeroplanes, but they cannot see.

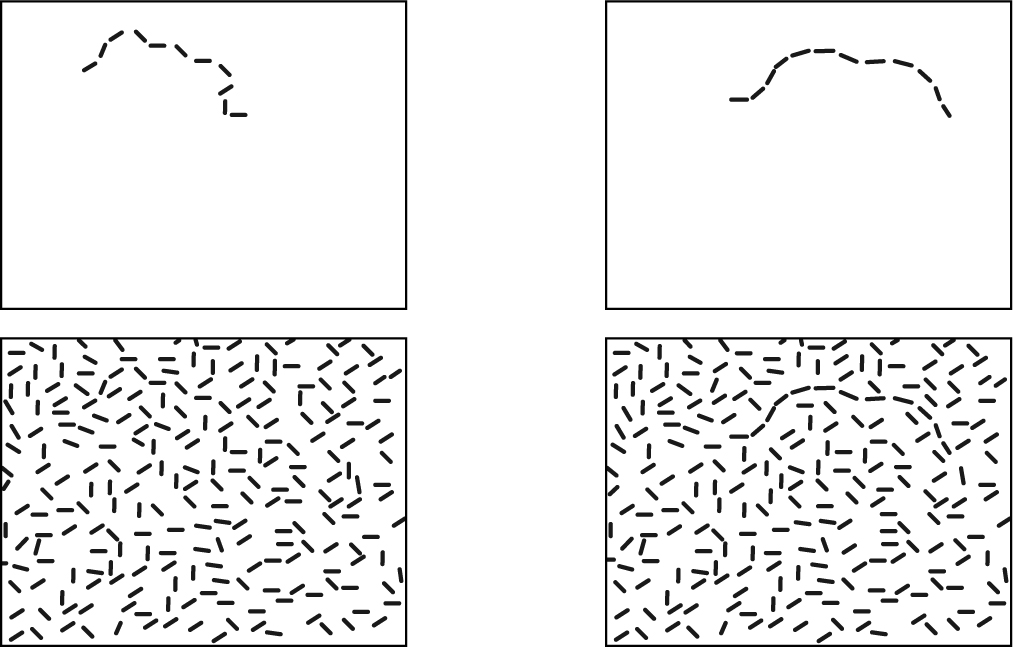

To understand how the brain pulls off such a feat, we must find its limits, see exactly where it fails. To do that, we will look at a simple yet eloquent example. When we are trying to think about how we see, an image is definitely worth more than a thousand words.

The two objects in the following figure are very similar. And both, of course, are very easy to recognize. But when they are submerged in a sea of dotted lines something quite extraordinary happens. The visual brain works in two completely different ways. It is impossible not to see the object on the right; it’s as if it were another colour and was literally popping out. Something different happens with the object on the left. We see the dotted lines that make up the snake only with much effort, and our perception is unstable; when we are focused on one part, the rest vanishes and blends into the texture.

We can think of the object we see easily as a melody in which the notes follow each other harmonically and are naturally perceived as a whole, while the other object is more like random notes. Just as with music, the visual system has rules that define how we organize an image and that dictate how we perceive and what we remember. When an object is grouped in a natural, integrated way that does not require much effort, it is said to be gestaltian, named for the group of psychologists who, in the early twentieth century, discovered the rules by which the visual system constructs shapes. These rules, like those of language, are learned.

Let’s look at how this works in the brain. Can we train and modify the brain to detect any object almost instantaneously and automatically? In the process of answering this question, we will sketch a theory of human learning.

A monster with slow processors

Most silicon computers today work with just a few processors. These computers calculate very quickly, but can only respond to one thing at a time. Our brains, on the other hand, are parallel machines–to a massive degree; which is to say, they are simultaneously making millions and millions of calculations. Perhaps this is one of the most distinctive aspects of the human brain and, to a large extent, it allows us quickly and effectively to solve problems that we are still unable to delegate to even the most powerful computers. This is one of the areas of most intense effort in computer science and yet the attempt to develop massively parallel computers has produced only elusive results. These researchers are presented with two fundamental difficulties: the first is simply to find a way to produce that number of processors economically; and the second is to get them all to share information.

In a parallel computer, each processor does its job. But the result of all that collective work has to be coordinated. One of the most mysterious aspects of the brain is how it manages to unite all the information processed in parallel. This is profoundly linked to consciousness. Which is why, if we understand how the brain brings together the information it calculates on a massive scale, we will be much closer to revealing the mechanism of consciousness. And we will have discovered the processes of how to learn.

The secret of virtuosity is in being able to recycle this parallel machinery and adapt it to new functions. The great mathematician sees mathematics. The chess master sees chess. And this happens because the visual cortex is the most extraordinary parallel machine known to humankind.

The visual system is composed of superimposed maps. For example, the brain has a map that is devoted to codifying colour. In a region called V4,* modules of approximately a millimetre in size, called globs, are formed, and each one identifies different subtleties of colour in a very precise region of the image.

The big advantage to this system is that recognizing something does not require sequentially sweeping over it point by point. This turns out to be particularly important in the brain. It takes a long time for a neuron to load and route information from one to the next, which means that the brain can process between three and fifteen computing cycles in a second. That is nothing compared to the billions of cycles per second of the tiny processor in a mobile phone.

The brain solves the intrinsic slowness of its biological fabric with an almost infinite army of neural circuits.* So the conclusion is simple, and as I will argue in the next few pages will be the key to the enigma of learning: any function that can be resolved in the parallel structures (maps) of the brain will be done effectively and efficiently. It will also be perceived as automatic. On the other hand, functions that use the brain’s sequential cycle are carried out slowly and are perceived with great effort and fully consciously. Learning in the brain is, to a great extent, parallelizing.
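The contrast between the two modes can be made concrete by counting cycles in a toy search. The sketch below is an illustration under stated assumptions, not a description of how neurons are wired: the names `serial_search` and `parallel_search` are hypothetical, the 'image' is just a list of colours, and the parallel version merely pretends that every location has its own detector (ordinary Python still evaluates them one after another).

```python
def serial_search(image, target):
    """Inspect one location per cycle until the target is found."""
    cycles = 0
    for position, item in enumerate(image):
        cycles += 1
        if item == target:
            return position, cycles
    return None, cycles


def parallel_search(image, target):
    """Pretend each location has its own detector, all reporting in one cycle."""
    # Conceptually simultaneous: one detector per location.
    detections = [item == target for item in image]
    cycles = 1
    position = detections.index(True) if True in detections else None
    return position, cycles


image = ["blue"] * 999 + ["red"]       # one red item hidden among blue ones
print(serial_search(image, "red"))     # position and cycles used, one item at a time
print(parallel_search(image, "red"))   # same position, a single cycle
```

With a thousand locations and the target in the last one, the serial route needs a thousand cycles and the parallel route needs one. At an assumed ten cycles per second, which lies inside the range of three to fifteen given above, that is the difference between a hundred seconds and a tenth of a second.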

The repertoire of visual maps includes movement, colour, contrast and direction. Some maps identify more sophisticated objects, such as two contiguous circles. In other words, eyes that watch us. That is what produces the strange sensation of turning your head quickly towards someone watching you. How did you know they were watching you before turning your gaze? The reason is that the brain is exploring, throughout the entire space and in parallel, the possibility that someone is watching you, often without any conscious register of it. The brain detects a different attribute in one of its maps, and generates a signal that communicates with the attention and motor control system of the parietal cortex as if** saying: ‘Turn your eyes over there because something important is happening.’ These maps are like factory settings of a range of innate skills. They are efficient and at the same time fulfil a very specific task. But they can be modified, combined and rewritten. And in this lies the key to learning.

Our inner cartographers

The cerebral cortex is organized into columns of neurons, and each one carries out a specific function. That was discovered by David Hubel and Torsten Wiesel, earning them the Nobel Prize in Physiology or Medicine. When studying how those maps developed, they found that there were critical periods. The visual maps have a natural programme of genetic development but they need visual experience to consolidate. Like a river that needs water running through it in order to maintain its shape.

The retina, especially in the first phases of development, generates spontaneous activity, stimulating itself in complete darkness. The brain recognizes this activity as light, without differentiating whether it comes from outside the body or not. Therefore the development based on activity begins before we open our eyes. Cats, for example, are born with their eyes closed. They are actually training their visual system with inner light. In cats, humans and other mammals, these maps develop in early infancy and are already consolidated after a few months. Hubel and Wiesel’s discovery converges with another myth: that learning certain things as an adult is an impossible mission. We are going to revisit this idea and offer some moderate optimism: learning later in life is much more plausible than we imagine but requires much time and effort–the same amount we devote to such tasks in our early infancy, although we have now forgotten that. After all, babies and kids spend hours, days, months and years of their lives learning to speak, walk and read. What adult puts everything else to one side to devote all their time and effort to learning something new?

In retrospect, some of this is obvious. Radiologists, as adults, learn to see x-rays. After much work they can easily identify oddities that no one else can see. It is the clear result of a transformation in their adult visual cortex. In fact, for radiologists, such detection is quick, automatic and almost emotional, as when we have a visceral response of annoyance when we see ‘grammatical’ errors. What happens in the brain that can so radically transform our way of perceiving and thinking?

Fluorescent triangles

Science has some curious repetitions. Those who come up with extraordinary, paradigmatic ideas are often the very same ones who later topple them. Torsten Wiesel, after establishing the dogma of the critical periods, got together with Charles Gilbert, one of his students at Harvard, to prove just the opposite, that the visual cortex continues to reorganize itself even in full adulthood.

When I arrived in Gilbert and Wiesel’s laboratory–by that point it had moved to New York–to begin my doctorate, the myth had already been turned on its head. The question was no longer whether the adult brain could learn, but how exactly it did. What happens in the brain when we become experts in something?

We devised an experiment to be able to carefully investigate this question in the laboratory. This required making certain concessions in a process of simplification. So instead of using expert radiologists, we created experts in triangles. Something with very little merit as a skill or profession but which has the laboratory advantage of being a simple way to simulate a learning process.

We showed a group of people an image filled with shapes that after 200 milliseconds disappeared in a flash. They had to find a triangle in that mess. They looked at us like we were crazy. It was impossible. They simply hadn’t had enough time to see it.

We knew that if the test had been finding a red triangle among many blue ones, everyone would have solved it easily. And we know why. We have a parallel system that, in eighty milliseconds, can sweep the space in unison in order to solve a difference of colour, but our visual cortices do not have a similar map devoted to identifying triangles. Can we develop that ability? If so, we would be opening a window on to how we learn.

Over hundreds of attempts, many of the participants were frustrated to find they saw nothing. But after hours and hours of repeating this boring task, something magical happened: the triangle began to glow, as if it were a different colour, as if it were impossible not to see it. So we know that with much effort we can see something that previously seemed impossible. And that it can be done as an adult. The big advantage to this experiment is that it allowed us to study what happens in the brain as we learn.

The parallel brain and the serial brain

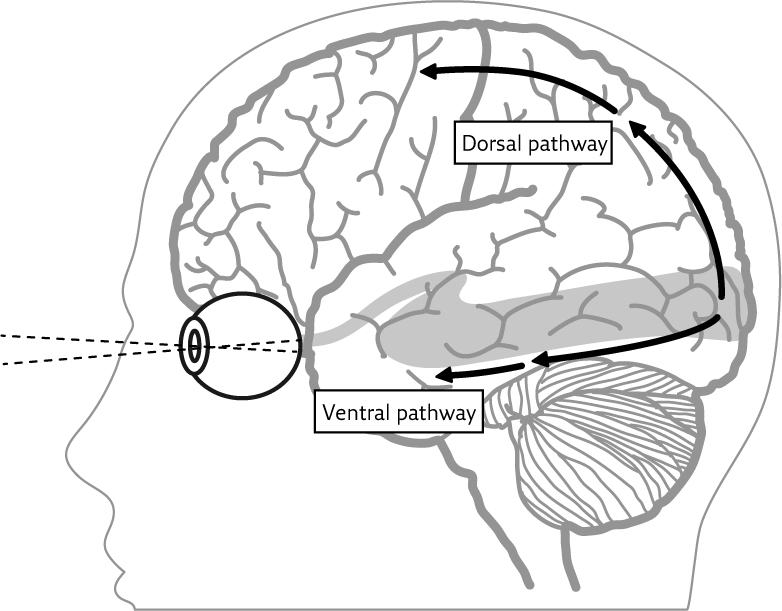

The cerebral cortex is organized into two large systems. One is the dorsal, which–if your head is looking upwards–continues along the back of the body; the other is the ventral, which corresponds to the belly. In functional terms, this division is much more pertinent than the more popular division of the hemispheres. The dorsal part includes the parietal and frontal cortex, which have to do with consciousness, with cerebral activity that deals with action, and with a slow, sequential working of the brain. The ventral part of the cerebral cortex is associated with automatic and generally unconscious functions, and corresponds to a rapid, parallel way of working.

We found two fundamental differences in the cerebral activity of the triangle experts. Their primary visual cortex–in the ventral system–activated much more when they saw triangles than when they saw other shapes they hadn’t been trained to identify. And at the same time their frontal and parietal cortices deactivated. This explains why for them seeing triangles no longer entailed effort. This is not specific to triangles. A similar transformation is observed when a person trains to recognize something (for example, musicians learning to read scores, a gardener learning to recognize a parasite on a plant, or coaches who realize in a matter of seconds that their team is flailing out on the field).

| Dorsal pathway | Ventral pathway |
| --- | --- |
| Produces learning | OK threshold |
| Slow | Fast |
| Mental effort | Automatic |
| Sequential | Parallel |
| Flexible and versatile | Rigid and stereotypical |
| Reads letter by letter | Automatic reading |

Learning: a bridge between two pathways in the brain

The cortex is organized into the dorsal system and the ventral system. Learning consists of a process of transferring from one system to the other. When we learn to read, the slow, effortful system that works ‘letter by letter’ (dorsal system) is replaced by the other, which is capable of detecting entire words without effort and much faster (ventral system). But when the conditions are not favourable for the ventral system (for example, if the letters are written vertically) we go back to using the dorsal, which is slow and serial but has the flexibility to adapt to different circumstances. In many cases, learning means automatizing a process and thereby freeing the dorsal system, so we can devote our attention and mental effort to other matters.

The repertoire of functions: learning is compiling

The brain has a series of maps in the ventral cortex that allow it to carry out some functions rapidly and efficiently. The parietal cortex allows for the combination of each of those maps, but through a slow process that requires effort.

However, the human brain has the ability to change its repertoire of automatic operations. After thousands and thousands of rehearsals, a new function can be added to the ventral cortex. We can think of this as a process of outsourcing, as if the conscious brain were delegating this function to the ventral cortex. Conscious resources, requiring mental effort and limited to the capacities of the frontal and parietal cortices, could be devoted to other tasks. This is a key to learning how to read that is enormously relevant to educational practice. Expert readers, who read cover to cover with no effort, delegate the reading; readers who are learning don’t, and their conscious mind is fully occupied by the task.

The process of automatization is tangible in the example of arithmetic. When children first learn to add 3 + 4, they count on their fingers, making the parietal cortex work hard. But at some point in the learning ‘three plus four is seven’ almost becomes a poem. Their brains are no longer moving imaginary objects or real fingers one by one, but going to a memorized chart. The addition has been outsourced. Then a new phase starts. Those same children begin to solve 4 × 3 in the same slow, laboured way–using the parietal and frontal cortex–‘4 + 4 is 8. And 8 + 4 is 12.’ Then they develop another way to outsource, automatizing the multiplication in a memory table in order to move on to more complex calculations.
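The passage from counting to recalling resembles what programmers call a lookup table. The following Python sketch is a loose analogy under stated assumptions, not a claim about how the cortex stores sums: `add_by_counting`, the `Learner` class and its `sums` and `products` tables are hypothetical names, and the `steps` counter is simply a stand-in for mental effort.

```python
def add_by_counting(a, b):
    """Slow route: start at a and count up one 'finger' at a time."""
    total, steps = a, 0
    for _ in range(b):
        total += 1
        steps += 1
    return total, steps


class Learner:
    """Toy learner that memorizes each result after working it out once."""

    def __init__(self):
        self.sums = {}      # memorized additions
        self.products = {}  # memorized multiplications

    def add(self, a, b):
        if (a, b) in self.sums:
            return self.sums[(a, b)], 0        # recalled: no counting needed
        total, steps = add_by_counting(a, b)
        self.sums[(a, b)] = total              # outsource it for next time
        return total, steps

    def multiply(self, a, times):
        """Repeated addition at first (assumes times >= 1), recall afterwards."""
        if (a, times) in self.products:
            return self.products[(a, times)], 0
        total, steps = a, 0
        for _ in range(times - 1):             # '4 + 4 is 8. And 8 + 4 is 12.'
            total, used = self.add(total, a)
            steps += used
        self.products[(a, times)] = total
        return total, steps


learner = Learner()
print(learner.add(3, 4), learner.add(3, 4))            # worked out, then recalled
print(learner.multiply(4, 3), learner.multiply(4, 3))  # worked out, then recalled
```

The first time each problem is posed, the learner pays for it in counted steps. The second time the answer comes back with no steps at all, which leaves the effortful machinery free for the next, harder problem.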

An almost analogous process explains the virtuosos we looked at earlier. When chess masters solve complicated chess problems, what activates most differently is their visual cortex. We can summarize by saying that they don’t think more, but rather they see better.* The same thing happens with great mathematicians who, when solving complicated theorems, activate their visual cortex. In other words, the virtuoso managed to recycle a cortex ancestrally devoted to identifying faces, eyes, movement, dots and colours in order to utilize it in a much more abstract realm.

Automatizing reading

The principle we can infer from the triangle experts explains what is perhaps the most decisive transformation in education: turning visual scribbles (letters) into the voices of words. Since reading is the universal window on to knowledge and culture, this gives it a special pertinence over the rest of the human skills.

Why do we begin to read at five years old and not at four or six? Is that better? Is it best to learn to read by breaking down each word into the letters that make it up or the other way around, by reading the whole word and associating it with a meaning? Given reading’s pertinence, these aren’t decisions that should be made from a stance of ‘it looks to me like’, but rather they should be built on a body of evidence that brings together the experience of years and years of practice and a knowledge of the cerebral mechanisms that support the development of reading.

As in other realms of learning, the expert reader also outsources. Those who read poorly not only read more slowly; what most holds them back is that their system of effort and concentration is focused on the reading and not on thinking about what the words mean. That is why dyslexia is often diagnosed by a deficit in reading comprehension. But it doesn’t have anything to do with intelligence, rather simply with the fact that the effort is being put somewhere else. In order to be able to empathize with this, try to remember these words while you read the next paragraph: tree, bicycle, mug, fan, peach, hat.

Sometimes, when reading in a foreign language we’ve just started to learn, we come to realize that we’ve understood very little, because all of our attention was focused on translating. The same idea applies to all learning processes. When someone starts to study percussion, their focus is on the new rhythm they are learning. At some point that rhythm becomes internalized and automatic, and only then can they concentrate on the melody that floats above it, on the harmony that accompanies it or on other rhythms that are in a dialogue with that one simultaneously.

Do you remember the words now? And if you do, what was the last paragraph about? Successfully completing both tasks is difficult because each one occupies a limited system in the frontal and parietal cortex. Your attention chooses between juggling those six words so they don’t vanish in your memory or following a text. Rarely can it focus on both.

The ecology of alphabets

Almost all children learn language very well. As a mature adult, I arrived in France without being able to speak more than the simplest words of French and it seemed strange to me that a small child I met who knew nothing of Kant’s philosophy or calculus or the Beatles could speak French perfectly. It must surely have seemed strange to the same kid that a grown-up was incapable of something so simple as correctly pronouncing a word. This everyday example demonstrates how the human brain can display a mental virtuosity that has very little to do with other aspects we associate with culture and intelligence.

One of Chomsky’s ideas is that we learn spoken language so effectively because it is built on a faculty for which the brain is prepared. As we have already seen, the brain is not a tabula rasa. It already has some built-in functions, and the problems that depend on them are more easily resolved.

In the same way that Chomsky argued that there are elements common to all spoken languages, there is also a common thread to all alphabets. Of course, the thousands of alphabets that exist, many already in disuse, are very different. But if we look at them all together, we immediately notice some regularities. The most striking is that their construction is based on just a few hand strokes. Hubel and Wiesel won their Nobel Prize precisely for discovering that each neuron of the primary visual cortex detects strokes in the small window it is sensitive to. The strokes are the basis of the entire visual system, the bricks of its form. And all alphabets are built with these bricks.

There are horizontal and vertical lines, angles, arches, slashes. And when you count the most frequent strokes in all alphabets there is extraordinary regularity: those strokes that are most common in nature are also the most common in alphabets. This isn’t the product of a deliberate, rational design; alphabets just evolved to use a material that is quite similar to the visual material we are used to dealing with. Alphabets usurp elements for which our visual system is already fine-tuned. It’s like starting with an advantage, since reading is close enough to what the visual system has already learned. If we tried to teach with alphabets that have no relationship to what our visual system naturally recognizes, the experience of reading would be much, much more tedious. And, the other way around: when we see cases of reading difficulties, we can ease that process by making the material to be learned into something more digestible, more natural, more easily consumed–something for which the brain is prepared.

The morphology of the word

New readers pronounce a letter as if in slow motion. After many repetitions, this process becomes automatic; the ventral part of the visual system creates a new circuit able to recognize letters. This detector is built by recombining previously existing circuits that identify strokes. And in turn these become new bricks in the visual system that, like Lego pieces, are recombined to recognize syllables (of two or three successive letters). The cycle continues, with the syllables like new atoms of reading. At this point, a child reads the word ‘father’ in two cycles, one for each syllable. Later, when reading is firmly established, the word is read in just one sweep, whole, as if it were a single object. In other words, reading goes from being a serial process to a parallel one. At the end of the process of learning to read, readers form a brain function capable of extracting most words–except for extremely long and compound words–as a whole.

How do we know that adults read word by word? The first proof is in a reader’s eyes, which move and stop on each word. Each one of these stops lasts about 300 milliseconds, and then the eyes jump abruptly and rapidly to the next word. In writing systems that move from left to right, like English, we focus very close to the first third of the word, since we mentally sweep from there to the right, towards the future of reading. This very precise process is, of course, implicit, automatic and unconscious.

The second proof is in measuring the time it takes to read a word. If we read letter by letter, the time would be proportional to the length of the word. However, the time a reader takes to read a word made up of two, four or five letters is practically the same. This is the great virtue of parallelization; it doesn’t matter if there are one, ten, a hundred or a thousand nodes to which we must apply the operation. In reading, this parallelization has a limit in very long and compound words, such as sternocleidomastoid.* But within a range of two to seven letters reading time is almost identical. By contrast, for someone who is just learning to read or for dyslexics, the reading time increases proportionally to the number of letters in a word.
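The contrast between the two ways of reading can be put into a toy model. What follows is only a sketch: the function names are invented for illustration, and the numbers are assumptions (a cost of 100 milliseconds per letter, a whole-word cost of 300 milliseconds borrowed from the duration of a fixation, and a limit of seven letters taken from the range mentioned above). It reproduces the shape of the result, not any measured data.

```python
# Toy model of word-reading time. Every number here is an illustrative
# assumption, not a measurement.

PER_LETTER_MS = 100   # assumed cost of decoding one letter serially
WHOLE_WORD_MS = 300   # assumed cost of taking in a short word in one sweep
PARALLEL_LIMIT = 7    # assumed: the flat range ends at seven letters


def serial_time_ms(word: str) -> int:
    """Novice reader: time grows in proportion to the number of letters."""
    return PER_LETTER_MS * len(word)


def parallel_time_ms(word: str) -> int:
    """Expert reader: constant time up to the limit, extra cost beyond it."""
    extra_letters = max(0, len(word) - PARALLEL_LIMIT)
    return WHOLE_WORD_MS + PER_LETTER_MS * extra_letters


if __name__ == "__main__":
    for word in ["to", "tree", "father", "sternocleidomastoid"]:
        print(word, serial_time_ms(word), parallel_time_ms(word))
```

In this sketch the expert’s time stays flat for ‘to’, ‘tree’ and ‘father’ and only grows for the very long word, while the novice’s time climbs with every added letter.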

We saw that the talent students have when starting their studies is not a good predictor of how good they will be after many years of learning. We now understand why.

In France, based on the finding that expert readers read word by word, one group concluded–erroneously–that the best way to teach children how to read was through holistic reading which, instead of starting by identifying the sounds of each letter, starts by reading entire words as a whole. This method quickly gained popularity, probably because it has a good name. Who doesn’t want their child to learn with the holistic method? But it was an unprecedented pedagogical disaster that led to many children having reading difficulties. And, with the argument sketched here, you can understand why the holistic method didn’t work. Reading in a parallelized way is the final phase that can only be reached by first constructing the intermediate functions.

The two brains of reading

Throughout this book we have focused on two different brain systems: the frontoparietal, which is versatile but slow and demands effort; and the ventral system, which is devoted to some specific functions that are carried out automatically and very quickly.

These two systems coexist, and their relevance varies over the course of the learning process. As seasoned readers, we primarily utilize the ventral system, although the parietal system is working residually, as becomes evident when we read complex handwriting or when the letters are not configured in their natural form, for instance when they are arranged vertically, run from right to left, or are separated by big spaces. In these cases, the circuits of the ventral cortex–which are not very flexible–cease to function. And then we read similarly to how a dyslexic person does.* In fact, it is harder to read a CAPTCHA** because it has irregularities that make the ventral system unable to recognize it. That is a way to dial back into the latent serial system of reading and find ourselves in the same situation we were long ago when we learned to read.

The temperature of the brain

When we learn, the brain changes. For example, the synapses–from the Greek for ‘join together’–that connect different neurons can multiply their connections or vary the efficiency of an already established connection. All of this changes the neuronal networks. But the brain has other sources of plasticity; for example, the morphological properties or genetic expression of its neurons can change. And in some very specific cases, the number of brain cells can increase, although this is very rare. In general the adult brain learns without augmenting its neuronal mass.

Today the term ‘plasticity’ is used to refer to the brain’s ability to transform. It is a popular metaphor but the term leads to an incorrect assumption: that the brain is moulded and stretched, gets crumpled up and smoothed out, like a muscle, although none of that actually happens.

What makes the brain more or less predisposed to change? With materials, the critical parameter that dictates their predisposition to change is temperature. Iron is rigid and not malleable, but when heated it can change shape and later be reconfigured into another form that it retains when cooled. What is the equivalent in the brain to temperature? First of all, as Hubel and Wiesel proved, there is the stage of development. A baby’s brain does not have the same degree of malleability as an adult’s. Yet, as we have seen, this is not an immutable variable. Is motivation the fundamental difference between a child and an adult?

Motivation promotes change for a simple reason that we have already discussed: a motivated person works harder. Marble is not exactly plastic but, if we go at it for hours with a chisel, it will eventually change shape. The notion of plasticity is relative to the effort we are willing to put in to make a change. But this doesn’t yet bring us to the notion of temperature, of predisposition to change. What happens in the brain when we are motivated that predisposes it to change? Can we emulate this cerebral state in order to promote learning? The answer is in understanding which chemical soup of neurotransmitters promotes synaptic transformation and, therefore, cerebral change.

Before getting into the microscopic detail of brain chemistry, we should have a look at a more canonical way of learning: memory. Almost all of us remember the events of 9/11, the planes crashing into the North and South Towers of the World Trade Center. What’s most surprising is that, fifteen years later, we not only remember those images of the burning towers but we can also recall with striking clarity where we were and who we were with when it happened. That deeply emotional moment makes everything around it, both the relevant–the attack–and the irrelevant, stick in the memory. This is why those who have been through a traumatic experience often have a very difficult time erasing that memory, which can be activated by fragments of the episode, the place where it happened, a similar smell, a person who was there, or any other detail. Memories are formed as episodes; in the moments our neuronal register is most sensitive, we vividly remember not only what activated that sensitivity, but also everything that formed around that episode.

This is an example of a more general principle. When we are emotionally aroused or when we receive a reward (monetary, sexual, emotional, chocolate) the brain is more predisposed to change. To understand how this happens, we have to switch tools and enter into the microscopic world. And that voyage will take us to California, to the laboratory of a neurobiologist, Michael Merzenich.

In his experiment, monkeys had to identify the higher pitched of two tones, as when we tune an instrument. As the two tones became more similar, they began to perceive them as identical even though they weren’t. This allowed him to investigate the limits of the resolution of the auditory system. Like any other virtue, this can also be trained.

The auditory cortex, just like the visual one, is organized into a pattern, a cluster of neurons grouped into columns. Each column is specialized to detect a particular frequency. So, in parallel, the auditory cortex analyses the frequency structure (the notes) of a sound.

In the map of the auditory cortex, each frequency has a dedicated region. Merzenich already knew that if a monkey is actively trained to recognize tones of a particular frequency, something quite extraordinary happens: the column representing that frequency expands, like a country that grows by invading its neighbouring territories. The question that concerns us here is the following: what allows that change to happen? Merzenich observed that the mere repetition of a tone wasn’t enough to transform the cortex. Yet if that tone occurs at the same time as a pulse of activity in the ventral tegmental area, a region deep in the brain that produces dopamine, then the cortex reorganizes itself. It all comes together. In order for a cortical circuit to reorganize, the stimulus needs to occur within the window of time in which dopamine (or other similar neurotransmitters) is released. In order for us to learn, we need motivation and effort. It isn’t magic or dogma. We now know that motivation and effort produce dopamine, which lessens the brain’s resistance to change.

We can think of dopamine as the water that makes clay more pliable, and the sensory stimulus as the tool that marks a groove in that damp clay. Neither can transform the material on its own. Working dry clay is a waste of time. Wetting it if you aren’t going to sculpt it is as well. This is the basis of the learning programme that we began discussing with Galton’s idea: the brain learns when it is exposed to stimuli that transform it. It is a slow and repetitive task to establish those grooves of new circuits that automatize a process. And the transformation requires, in addition to effort and training, a cerebral cortex that is in a state sensitive to change.
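The clay metaphor amounts to a simple rule: the map changes only when the stimulus and the dopamine coincide. Here is a minimal sketch of that rule. The names, the learning rate and the update formula are all assumptions made for illustration; this is not the model used in Merzenich’s experiments.

```python
# Toy "wet clay" rule: the cortical map changes only when a tone and a
# dopamine pulse coincide. The rate and the update formula are assumptions.

LEARNING_RATE = 0.1  # assumed size of one step of change


def update_territory(territory: float, tone_present: bool, dopamine: bool) -> float:
    """Return the share of cortex (0 to 1) devoted to the trained frequency."""
    if tone_present and dopamine:
        # Expand by a fixed fraction of the territory not yet occupied.
        return territory + LEARNING_RATE * (1.0 - territory)
    # Tone alone (dry clay) or dopamine alone (wet clay, no tool): no change.
    return territory


def train(trials: int, tone_present: bool, dopamine: bool, start: float = 0.1) -> float:
    """Apply the rule for a number of trials and return the final share."""
    territory = start
    for _ in range(trials):
        territory = update_territory(territory, tone_present, dopamine)
    return territory


if __name__ == "__main__":
    print("tone only:    ", train(50, tone_present=True, dopamine=False))
    print("dopamine only:", train(50, tone_present=False, dopamine=True))
    print("both together:", train(50, tone_present=True, dopamine=True))
```

In this sketch, fifty trials of tone alone or dopamine alone leave the map exactly where it started; only the two together make the territory grow, and even then it grows slowly, one repetition at a time.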

To sum up, we looked at Galton’s error in order to understand how learning is forged: the ceiling is not as genetically established as is commonly assumed and the path is also social and cultural. We also saw that virtuosos carry out their expert tasks in a qualitatively different way, not only by improving the original procedure. And that to persevere in learning we have to work with motivation and effort, outside our comfort zone and the OK threshold. What we recognize as a performance ceiling is usually not. It is an equilibrium point.

In short, it is never too late to learn. If something changes in adulthood it is that our motivation gets stuck in what we’ve already learned and not swept up in the whirlwind of discovering and learning. Recovering that enthusiasm, that patience, that motivation and that conviction seems to be the natural starting point for those who truly want to learn.