Without a profound simplification the world around us would be an infinite, undefined tangle that would defy our ability to orient ourselves and decide upon our actions … We are compelled to reduce the knowable to a schema.

—Primo Levi, The Drowned and the Saved

First baseball umpire: “I call ’em as I see ’em.”

Second umpire: “I call ’em as they are.”

Third umpire: “They ain’t nothin’ till I call ’em.”

When we look at a bird or a chair or a sunset, it feels as if we’re simply registering what is in the world. But in fact our perceptions of the physical world rely heavily on tacit knowledge, and mental processes we’re unaware of, that help us perceive something or accurately categorize it. We know that perception depends on mental doctoring of the evidence because it’s possible to create situations in which the inference processes we apply automatically lead us astray.

Have a look at the two tables below. It’s pretty obvious that one of the tables is longer and thinner than the other.

Figure 1. Illusion created by the psychologist Roger Shepard.1

Obvious, but wrong. The two tables are of equal length and width.

The illusion is based on the fact that our perceptual machinery decides for us that we’re looking at the end of the table on the left and the side of the table on the right. Our brains are wired so that they “lengthen” lines that appear to be pointing away from us. And a good thing, too. We evolved in a three-dimensional world, and if we didn’t tamper with the sense impression—what falls on the eye’s retina—we would perceive objects that are far away as being smaller than they are. But what the unconscious mind brings to perception misleads us in the two-dimensional world of pictures. As a result of the brain’s automatically increasing the size of things that are far away, the table on the left appears longer than it is and the table on the right appears wider than it is. When the objects aren’t really receding into the distance, the correction produces an incorrect perception.

Schemas

We aren’t too distressed when we discover that lots of unconscious processes allow us to correctly interpret the physical world. We live in a three-dimensional world, and we don’t have to worry about the fact that the mind makes mistakes when it’s forced to deal with an unnatural, two-dimensional world. It’s more unsettling to learn that our understanding of the nonmaterial world, including our beliefs about the characteristics of other people, is also utterly dependent on stored knowledge and hidden reasoning processes.

Meet “Donald,” a fictitious person experimenters have presented to participants in many different studies.

Donald spent a great amount of his time in search of what he liked to call excitement. He had already climbed Mt. McKinley, shot the Colorado rapids in a kayak, driven in a demolition derby, and piloted a jet-powered boat—without knowing very much about boats. He had risked injury, and even death, a number of times. Now he was in search of new excitement. He was thinking, perhaps, he would do some skydiving or maybe cross the Atlantic in a sailboat. By the way he acted one could readily guess that Donald was well aware of his ability to do many things well. Other than business engagements, Donald’s contacts with people were rather limited. He felt he didn’t really need to rely on anyone. Once Donald made up his mind to do something it was as good as done no matter how long it might take or how difficult the going might be. Only rarely did he change his mind even when it might well have been better if he had.2

Before reading the paragraph about Donald, participants first took part in a bogus “perception experiment” in which they were shown a number of trait words. Half the participants saw the words “self-confident,” “independent,” “adventurous,” and “persistent” embedded among ten trait words. The other half saw the words “reckless,” “conceited,” “aloof,” and “stubborn.” Then the participants moved on to the “next study,” in which they read the paragraph about Donald and rated him on a number of traits. The Donald paragraph was intentionally written to be ambiguous as to whether Donald is an attractive, adventurous sort of person or an unappealing, reckless person. The perception experiment reduced the ambiguity and shaped readers’ judgments of Donald. Seeing the words “self-confident,” “persistent,” and so on resulted in a generally favorable opinion of Donald. Those words conjure up a schema of an active, exciting, interesting person. Seeing the words “reckless,” “stubborn,” and so on triggers a schema of an unpleasant person concerned only with his own pleasures and stimulation.

Since the 1920s, psychologists have made much use of the schema concept. The term refers to cognitive frameworks, templates, or rule systems that we apply to the world to make sense of it. The progenitor of the modern concept of schema is the Swiss developmental psychologist Jean Piaget. For example, Piaget described the child’s schema for the “conservation of matter”—the rule that the amount of matter is the same regardless of the size and shape of the container that holds it. If you pour water from a tall, narrow container into a short, wide one and ask a young child whether the amount of water is more, less, or the same, the child is likely to say either “more” or “less.” An older child will recognize that the amount of water is the same. Piaget also identified more abstract rule systems such as the child’s schema for probability.

We have schemas for virtually every kind of thing we encounter. There are schemas for “house,” “family,” “civil war,” “insect,” “fast food restaurant” (lots of plastic, bright primary colors, many children, so-so food), and “fancy restaurant” (quiet, elegant decor, expensive, high likelihood the food will be quite good). We depend on schemas for construal of the objects we encounter and the nature of the situation we’re in.

Schemas affect our behavior as well as our judgments. The social psychologist John Bargh and his coworkers had college students make grammatical sentences out of a scramble of words, for example, “Red Fred light a ran.”3 For some participants, a number of the words (“Florida,” “old,” “gray,” “wise”) were intended to call up the stereotype of an elderly person. Other participants made sentences from words that didn’t play into the stereotype of the elderly. After the participants completed the unscrambling task, the experimenters dismissed them and measured how rapidly they walked away from the lab. Participants who had been exposed to the words suggestive of elderly people walked more slowly toward the elevator than unprimed participants.

If you’re going to interact with an old person—the schema for which one version of the sentence-unscrambling task calls up—it’s best not to run around and act too animated. (That is, if you have positive attitudes toward the elderly. Students who are not favorably disposed toward the elderly actually walk faster after the elderly prime!)4

Without our schemas, life would be, in William James’s famous words, “a blooming, buzzing confusion.” If we lacked schemas for weddings, funerals, or visits to the doctor—with their tacit rules for how to behave in each of these situations—we would constantly be making a mess of things.

This generalization also applies to our stereotypes, or schemas about particular types of people. Stereotypes include “introvert,” “party animal,” “police officer,” “Ivy Leaguer,” “physician,” “cowboy,” “priest.” Such stereotypes come with rules about the customary way that we behave, or should behave, toward people who are characterized by the stereotypes.

In common parlance, the word “stereotype” is a derogatory term, but we would get into trouble if we treated physicians the same as police officers, or introverts the same as good-time Charlies. There are, however, two problems with stereotypes: they can be mistaken in some or all respects, and they can exert undue influence on our judgments about people.

Psychologists at Princeton had students watch a videotape of a fourth grader they called “Hannah.”5 One version of the video reported that Hannah’s parents were professional people. It showed her playing in an obviously upper-middle-class environment. Another version reported that Hannah’s parents were working class and showed her playing in a run-down environment.

The next part of the video showed Hannah answering twenty-five academic achievement questions dealing with math, science, and reading. Hannah’s performance was ambiguous: she answered some difficult questions well but sometimes seemed distracted and flubbed easy questions. The researchers asked the students how well they thought Hannah would perform in relation to her classmates. The students who saw an upper-middle-class Hannah estimated that she would perform better than average, while those who saw the working-class Hannah assumed she would perform worse than average.

It’s sad but true that you’re actually more likely to make a correct prediction about Hannah if you know her social class than if you don’t. In general, it’s the case that upper-middle-class children perform better in school than working-class children. Whenever the direct evidence about a person or object is ambiguous, background knowledge in the form of a schema or stereotype can increase accuracy of judgments to the extent that the stereotype has some genuine basis in reality.

The much sadder fact is that working-class Hannah starts life with two strikes against her. People will expect and demand less of her, and they will perceive her performance as being worse than if she were upper middle class.

A serious problem with our reliance on schemas and stereotypes is that they can get triggered by incidental facts that are irrelevant or misleading. Any stimulus we encounter will trigger spreading activation to related mental concepts: activation radiates from the initially activated concept to the concepts that are linked to it in memory. If you hear the word “dog,” the concept of “bark,” the schema for “collie,” and an image of your neighbor’s dog “Rex” are simultaneously activated.
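A toy model can make the idea of spreading activation concrete. The sketch below is not a model proposed in this chapter: the concept links, the decay factor of 0.5, the cutoff threshold, and the function name spread_activation are all hypothetical choices made for illustration. Activation starts at 1.0 on the word that is heard and weakens with each link it crosses.

```python
from collections import deque

# Hypothetical concept network, invented for illustration; links go both ways.
LINKS = {
    "dog": ["bark", "collie", "Rex"],
    "bark": ["dog", "tree"],
    "collie": ["dog", "Lassie"],
    "Rex": ["dog"],
    "tree": ["bark", "leaf"],
    "Lassie": ["collie"],
    "leaf": ["tree"],
}


def spread_activation(source, links, decay=0.5, threshold=0.1):
    """Spread activation outward from `source` through `links`.

    Each hop multiplies activation by `decay` (an assumed value); a concept
    keeps the strongest activation that reaches it, and spreading stops once
    the amount that would be passed on falls below `threshold`.
    """
    activation = {source: 1.0}
    queue = deque([source])
    while queue:
        concept = queue.popleft()
        passed_on = activation[concept] * decay
        if passed_on < threshold:
            continue
        for neighbor in links.get(concept, []):
            if passed_on > activation.get(neighbor, 0.0):
                activation[neighbor] = passed_on
                queue.append(neighbor)
    return activation


for concept, level in sorted(spread_activation("dog", LINKS).items(), key=lambda kv: -kv[1]):
    print(f"{concept:>7}: {level:.3f}")
```

In this toy network, hearing “dog” leaves “bark,” “collie,” and “Rex” strongly active and more distant concepts such as “leaf” only faintly so. On this toy account, that residual activation is what would make related words quicker to recognize.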

We know about spreading activation effects because cognitive psychologists find that encountering a given word or concept makes us quicker to recognize related words and concepts. For example, if you say the word “nurse” to people a minute or so before you ask them to say “true” or “false” to statements such as “hospitals are for sick people,” they will say “true” more rapidly than if they hadn’t just heard the word “nurse.”6 As we’ll see, incidental stimuli influence not only the speed with which we recognize the truth of an assertion but also our actual beliefs and behavior.

But first—about those umpires who started off this chapter. Most of the time we’re like the second umpire, thinking that we’re seeing the world the way it really is and “calling ’em as they are.” That umpire is what philosophers and social psychologists call a “naive realist.”7 He believes that the senses provide us with a direct, unmediated understanding of the world. But in fact, our construal of the nature and meaning of events is massively dependent on stored schemas and the inferential processes they initiate and guide.

We do partially recognize this fact in everyday life and realize that, like the first umpire, we really just “call ’em as we see ’em.” At least we see that’s true for other people. We tend to think, “I’m seeing the world as it is, and your different view is due to poor eyesight, muddled thinking, or self-interested motives!”

The third umpire thinks, “They ain’t nothin’ till I call ’em.” All “reality” is merely an arbitrary construal of the world. This view has a long history. Right now its advocates tend to call themselves “postmodernists” or “deconstructionists.” Many people answering to these labels endorse the idea that the world is a “text” and no reading of it can be held to be any more accurate than any other. This view will be discussed in Chapter 16.

The Way to a Judge’s Heart Is Through His Stomach

Spreading activation makes us susceptible to all kinds of unwanted influences on our judgments and behavior. Incidental stimuli that drift into the cognitive stream can affect what we think and what we do, including even stimuli that are completely unrelated to the cognitive task at hand. Words, sights, sounds, feelings, and even smells can influence our understanding of objects and direct our behavior toward them. That can be a good thing or a bad thing, depending.

Which hurricane is likely to kill more people—one named Hazel or one named Horace? Certainly seems it could make no difference. What’s in a name, especially one selected at random by a computer? In fact, however, Hazel is likely to kill lots more people.8 Female-named hurricanes don’t seem as dangerous as male-named ones, so people take fewer precautions.

Want to make your employees more creative? Expose them to the Apple logo.9 And avoid exposing them to the IBM logo.

It’s also helpful for creativity to put your employees in a green or blue environment (and avoid red at all costs).10 Want to get lots of hits on a dating website? In your profile photo, wear a red shirt, or at least put a red border around the picture.11 Want to get taxpayers to support education bond issues? Lobby to make schools the primary voting location.12 Want to get the voters to outlaw late-term abortion? Try to make churches the main voting venue.

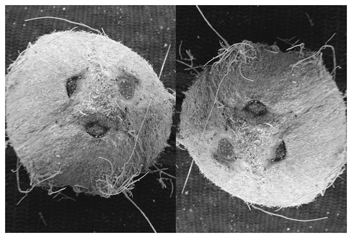

Want to get people to put a donation for coffee in the honest box? On a shelf above the coffee urn, place a coconut like the one on the left in the picture. That would be likely to cause people to behave more honestly. An inverted coconut like the one on the right would likely net you nothing. The coconut on the left is reminiscent of a human face (coco is Spanish for head), and people subconsciously sense that their behavior is being monitored. (Tacitly, of course: people who literally think they’re looking at a human face would be in dire need of an optometrist or a psychiatrist, possibly both.)

Actually, it’s sufficient to just have a picture of three dots in the orientation of the coconut on the left to get more contributions.13

Want to persuade someone to believe something by giving them an editorial to read? Make sure the font type is clear and attractive. Messy-looking messages are much less persuasive.14 But if the person reads the editorial in a seafood store or on a wharf, its argument may be rejected15—if the person is from a culture that uses the expression “fishy” to mean “dubious,” that is. If not, the fishy smell won’t sway the person one way or the other.

Starting up a company to increase IQ in kids? Don’t call it something boring like Minnesota Learning Corporation. Try something like FatBrain.com instead. Companies with sexy, interesting names are more attractive to consumers and investors.16 (But don’t actually use FatBrain.com. That’s the name of a company that really took off after it changed its drab name to that one.)

Bodily states also find their way into the cognitive stream. Want to be paroled from prison? Try to get a hearing right after lunch. Investigators found that if Israeli judges had just finished a meal, there was a 66 percent chance they would vote for parole.17 A case that came up just before lunch had precisely zero chance for parole.

Want someone you’re just about to meet to find you to be warm and cuddly? Hand them a cup of coffee to hold. And don’t by any means make that an iced coffee.18

You may recall the scene in the movie Speed where, immediately after a harrowing escape from death on a careening bus, two previously unacquainted people (played by Keanu Reeves and Sandra Bullock) kiss each other passionately. It could happen. A man who answers a questionnaire administered by a woman while the two are standing on a swaying suspension bridge high above a river is much more eager to date her than if the interview takes place on terra firma.19 The study that found this effect is one of literally dozens that show that people can misattribute physiological arousal produced by one event to another, altogether different one.

If you’re beginning to suspect that psychologists have a million of these, you aren’t far wrong. The most obvious implication of all the evidence about the importance of incidental stimuli is that you want to rig environments so that they include stimuli that will make you or your product or your policy goals attractive. It’s obvious when stated that way. Less obvious are two facts: (1) The effect of incidental stimuli can be huge, and (2) you want to know as much as you possibly can about what kinds of stimuli produce what kinds of effects. A book by Adam Alter called Drunk Tank Pink is a good compendium of many of the effects we know about to date. (Alter chose the title because of the belief of many prison officials and some researchers that pink walls make inebriated men tossed into a crowded holding cell less prone to violence.)

A less obvious implication of our susceptibility to “incidental” stimuli is the importance of encountering objects—and especially people—in a number of different settings if a judgment about them is to be of any consequence. That way, incidental stimuli associated with given encounters will tend to cancel one another out, resulting in a more accurate impression. Abraham Lincoln once said, “I don’t like that man. I must get to know him better.” To Lincoln’s adage, I’d add: Vary the circumstances of the encounters as much as possible.
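Why should varying the circumstances help? A small simulation shows the logic under stated assumptions. Everything here is hypothetical: each impression is modeled as the person’s true trait level plus a bias contributed by the setting plus a little other noise, with made-up sizes (a setting bias with standard deviation 1, other noise with standard deviation 0.3). Repeated encounters in one setting share the same bias, so averaging them cannot remove it; encounters in different settings carry independent biases that tend to cancel.

```python
import random
import statistics


def impression_error(n_encounters, vary_settings, trials=2000, seed=0):
    """Average distance between an observer's overall impression and a person's
    true trait level (set to 0), over many simulated observers.

    Each encounter's impression = true level + setting bias + small noise.
    With vary_settings=False every encounter shares one setting, hence one bias;
    with vary_settings=True each encounter gets a fresh, independent bias.
    """
    rng = random.Random(seed)
    true_level = 0.0
    errors = []
    for _ in range(trials):
        shared_bias = rng.gauss(0, 1)
        impressions = []
        for _ in range(n_encounters):
            bias = rng.gauss(0, 1) if vary_settings else shared_bias
            impressions.append(true_level + bias + rng.gauss(0, 0.3))
        errors.append(abs(statistics.fmean(impressions) - true_level))
    return statistics.fmean(errors)


for n in (1, 5, 20):
    same = impression_error(n, vary_settings=False)
    varied = impression_error(n, vary_settings=True)
    print(f"{n:>2} encounters: same setting {same:.2f}, varied settings {varied:.2f}")
```

In this model, more meetings in the same setting barely reduce the error, while the same number of meetings spread across settings shrinks it steadily.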

Framing

Consider the Trappist monks in two (apocryphal) stories. Monk 1 asked his abbot whether it would be all right to smoke while he prayed. Scandalized, the abbot said, “Of course not; that borders on sacrilege.” Monk 2 asked his abbot whether it would be all right to pray while he smoked. “Of course,” said the abbot, “God wants to hear from us at any time.”

Our construal of objects and events is influenced not just by the schemas that are activated in particular contexts, but by the framing of judgments we have to make. The order in which we encounter information of various kinds is one kind of framing. Monk 2 was well aware of the importance of order of input for framing his request.

Framing can also be a matter of choosing between warring labels. And those labels matter not just for how we think about things and how we behave toward them, but also for the performance of products in the marketplace and the outcome of public policy debates.

Your “undocumented worker” is my “illegal alien.” Your “freedom fighter” is my “terrorist.” Your “inheritance tax” is my “death tax.” You are in favor of abortion because you regard it as a matter of exercising “choice.” I am opposed because I am “pro-life.”

My processed meat, which is 75 percent lean, is more attractive than your product, which has 25 percent fat content.20 And would you prefer a condom with a 90 percent success rate or one with a 10 percent failure rate? Makes no difference if I pit them against each other as I just did. But students told about the usually successful condom think it’s better than do other students told about the sometimes unsuccessful condom.

Framing can affect decisions that are literally a matter of life or death. The psychologist Amos Tversky and his colleagues told physicians about the effects of surgery versus radiation for a particular type of cancer.21 They told some physicians that, of 100 patients who had the surgery, 90 lived through the immediate postoperative period, 68 were still alive at the end of a year, and 34 were still alive after five years. Eighty-two percent of physicians given this information recommended surgery. Another group of physicians were given the “same” information but in a different form. The investigators told them that 10 of 100 patients died during surgery or immediately after, 32 had died by the end of the year, and 66 had died by the end of five years. Only 56 percent of physicians given this version of the survival information recommended surgery. Framing can matter. A lot.
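The two versions given to the physicians are the same numbers seen from opposite sides: each mortality figure is just 100 minus the corresponding survival figure. The short check below uses only the figures reported above; the variable names are our own.

```python
# Survival frame, as reported in the text: survivors out of 100 surgery patients.
COHORT = 100
survivors = {
    "immediately after surgery": 90,
    "at one year": 68,
    "at five years": 34,
}

# Mortality frame: cumulative deaths at the same checkpoints.
deaths = {when: COHORT - alive for when, alive in survivors.items()}

for when, alive in survivors.items():
    print(f"{when}: {alive} alive, i.e. {deaths[when]} dead")

# The same complement underlies the meat and condom examples.
print("75% lean ->", 100 - 75, "% fat;", "90% success ->", 100 - 90, "% failure")
```

Nothing about the treatment changes between the two frames; only the side of the ledger that is put in front of the reader does.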

A Cure for Jaundice

We often arrive at judgments or solve problems by use of heuristics—rules of thumb that suggest a solution to a problem. Dozens of heuristics have been identified by psychologists. The effort heuristic encourages us to assume that projects that took a long time or cost a lot of money are more valuable than projects that didn’t require so much effort or time. And in fact that heuristic is going to be helpful more often than not. A price heuristic encourages us—mostly correctly—to assume that more expensive things are superior to things of the same general kind that are less expensive. A scarcity heuristic prompts us to assume that rarer things are more expensive than less rare things of the same kind. A familiarity heuristic causes Americans to estimate that Marseille has a bigger population than Nice and Nice has a bigger population than Toulouse. Such heuristics are helpful guides for judgment—they’ll often give us the right answer and normally beat a stab in the dark, often by a long shot. Marseille does indeed have a bigger population than Nice. But Toulouse has a bigger population than Nice.

Several important heuristics were identified by the Israeli cognitive psychologists Amos Tversky and Daniel Kahneman.

The most important of their heuristics is the representativeness heuristic.22 This rule of thumb leans heavily on judgments of similarity. Events are judged as more likely if they’re similar to the prototype of the event than if they’re less similar. The heuristic is undoubtedly helpful more often than not. Homicide is a more representative cause of death than is asthma or suicide, so homicide seems a more likely cause of death than either. Homicide is indeed a more likely cause of death than asthma, but there are twice as many suicide deaths in the United States in a given year as homicide deaths.

Is she a Republican? In the absence of other knowledge, using the representativeness heuristic is about the best we can do. She is more similar to—representative of—my stereotype of Republicans than my stereotype of Democrats.

A problem with that kind of use of the representativeness heuristic is that we often have information that should cause us to assign less weight to the similarity judgment. If we meet the woman at a chamber of commerce lunch, we should take that into account and shift our guess in the Republican direction. If we meet her at a breakfast organized by Unitarians, we should shift our guess in the Democratic direction.

A particularly unnerving example of how the representativeness heuristic can produce errors concerns one “Linda.” “Linda is thirty-one years old, single, outspoken, and very bright. She majored in philosophy. As a student, she was deeply concerned with issues of discrimination and social justice and also participated in antinuclear demonstrations.” After reading this little description, people were asked to rank eight possible futures for Linda.23 Two of these were “bank teller” and “bank teller and active in the feminist movement.” Most people said that Linda was more likely to be a bank teller active in the feminist movement than just a bank teller. “Feminist bank teller” is more similar to the description of Linda than “bank teller” is. But of course this is a logical error. The conjunction of two events can’t be more likely than either event by itself. Bank tellers include feminists, Republicans, and vegetarians. But the description of Linda is more nearly representative of a feminist bank teller than of a bank teller, so the conjunction error gets made.
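In probability terms, P(teller and feminist) = P(teller) × P(feminist, given teller), and the second factor can never exceed 1, so the conjunction can never be more probable than “teller” alone. The simulation below illustrates this with a hypothetical population; the rates and the function name teller_shares are made up for illustration and are not data from the study.

```python
import random


def teller_shares(n_people, p_teller, p_feminist_if_teller, seed=0):
    """Simulate a hypothetical population; return (share who are bank tellers,
    share who are bank tellers AND active feminists)."""
    rng = random.Random(seed)
    tellers = feminist_tellers = 0
    for _ in range(n_people):
        if rng.random() < p_teller:
            tellers += 1
            # Only tellers can be feminist tellers: the conjunction is a subset.
            if rng.random() < p_feminist_if_teller:
                feminist_tellers += 1
    return tellers / n_people, feminist_tellers / n_people


# Made-up rates: even if 99 percent of tellers were feminists,
# "feminist bank teller" cannot come out more common than "bank teller."
print(teller_shares(100_000, p_teller=0.02, p_feminist_if_teller=0.99))
```

However representative “feminist” feels for Linda, raising that rate only brings the two shares closer together; it never reverses their order.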

Examine the four rows of numbers below. Two were produced by a random number generator and two were generated by me. Pick out the two rows that seem to you to be most likely to have been produced by a random number generator. I’ll tell you in just a bit which two they are.

1 1 0 0 0 1 1 1 1 1 1 1 0 0 1 0 0 1 0 0 1

1 1 0 0 0 0 0 1 0 1 0 1 0 1 0 1 0 0 0 0 0

1 0 1 0 1 1 1 1 0 1 0 1 0 0 0 1 1 1 0 1 0

0 0 1 1 0 0 0 1 1 0 1 0 0 0 0 1 1 1 0 1 1

Representativeness judgments can influence all kinds of estimates about probability. Kahneman and Tversky gave the following problem to undergraduates who hadn’t taken any statistics courses.24

A certain town is served by two hospitals. In the larger hospital about forty-five babies are born each day, and in the smaller hospital about fifteen babies are born each day. As you know, about 50 percent of all babies are boys. The exact percentage of baby boys, however, varies from day to day. Sometimes it may be higher than 50 percent, sometimes lower.

For a period of one year, each hospital recorded the days on which more than 60 percent of the babies born were boys. Which hospital do you think recorded more such days?

Most of the students thought that the two hospitals would record about the same number of such days. Of those who expected a difference, as many picked the larger hospital as picked the smaller one.

In fact, days with more than 60 percent boys are far more likely to occur in the small hospital. Sixty percent is equally representative (or, rather, nonrepresentative) of the population value whether the hospital is small or large. But deviant values are far more likely when there are few cases than when there are many.

If you doubt this conclusion, try this. There are two hospitals, one with five births per day and one with fifty. Which hospital do you think would be expected to have 60 percent or more boy babies on a given day? Still recalcitrant? How about five babies versus five thousand?
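The hospital argument can be checked with a little arithmetic. The sketch below assumes, as the problem does, that each birth is independently a boy with probability exactly one-half (a simple binomial model); the function name prob_boy_share is our own. It counts how many of the equally likely boy/girl sequences meet the threshold.

```python
from fractions import Fraction
from math import comb


def prob_boy_share(n_births: int, pct: int, inclusive: bool = False) -> float:
    """Probability that boys make up more than `pct` percent of `n_births`
    (or at least `pct` percent when inclusive=True).

    Assumes every birth is independently a boy with probability exactly 1/2,
    so all 2**n_births boy/girl sequences are equally likely.
    """
    favorable = 0
    for boys in range(n_births + 1):
        # Compare with integers so the 60-percent boundary is handled exactly.
        above = boys * 100 > pct * n_births
        at = boys * 100 == pct * n_births
        if above or (inclusive and at):
            favorable += comb(n_births, boys)
    return float(Fraction(favorable, 2 ** n_births))


# The hospital problem: more than 60 percent boys, 15 vs. 45 births a day.
for n in (15, 45):
    p = prob_boy_share(n, 60)
    print(f"{n:>4} births/day: P(>60% boys) = {p:.3f}, roughly {365 * p:.0f} days a year")

# The follow-up: 60 percent or more boys, with 5, 50, or 5,000 births a day.
for n in (5, 50, 5000):
    print(f"{n:>4} births/day: P(>=60% boys) = {prob_boy_share(n, 60, inclusive=True):.3g}")
```

With 15 births a day, more than 60 percent boys turns up on about 15 percent of days (4,944 of the 32,768 equally likely sequences), or roughly 55 days a year, about twice as often as with 45 births. With 5 births a day, 60 percent or more boys happens on exactly half of all days; with 5,000 it would essentially never be seen.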

The representativeness heuristic can affect judgments of the probability of a limitless number of events. My grandfather was once a well-to-do farmer in Oklahoma. One year his crops were ruined by hail. He had no insurance, but he didn’t bother to get any for the coming year because it was so unlikely the same thing would happen two years in a row. That’s an unrepresentative pattern for hail. Hail is a rare event and so any particular sequence of hail is unlikely. Unfortunately, hail doesn’t remember whether it happened last year in northwest Tulsa or in southeast Norman. My grandfather did get hailed out the next year. He didn’t bother to get insurance for the next year because it was really inconceivable that hail would strike the same place three years in a row. But that in fact did happen. My grandfather was bankrupted by his reliance on the representativeness heuristic to judge probabilities. As a consequence, I’m a psychologist rather than a wheat baron.

Back to those rows of numbers I asked you about earlier. It’s the top two rows that are genuinely random. They were two of the first three sequences I pulled from a random number generator. Honest. I did not cherry-pick beyond throwing out the one sequence. The last two rows I made up because they’re more representative of a random sequence than random sequences are. The problem is that our conception of the randomness prototype is off kilter. Random sequences contain far more long runs (00000) and far more regularities (01010101) than we think they “should.” Bear this in mind when you see a basketball player score points five times in a row. There’s no reason to keep passing the ball to him any more than to some other player. The player with the “hot hand” is no more likely to make the shot than another player with a comparable record for the season.25 (The more familiar you are with basketball, the less likely you are to believe this. The more familiar you are with statistics and probability theory, the more likely you are to believe it.)
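One way to see what gives the made-up rows away is to measure their longest runs. The sketch below does two things: it finds the longest run in each of the four rows, and it computes exactly how likely a run of a given length is in fair coin flips, by counting the sequences that avoid such a run. The function names are our own, and treating each digit as an independent fair coin flip is the assumption behind the probability.

```python
def longest_run(bits):
    """Length of the longest stretch of identical consecutive symbols."""
    best = current = 0
    previous = None
    for b in bits:
        current = current + 1 if b == previous else 1
        previous = b
        best = max(best, current)
    return best


def prob_run_at_least(n: int, r: int) -> float:
    """Probability that n fair coin flips contain a run of at least r identical outcomes."""
    if n < 1:
        return 0.0
    if r <= 1:
        return 1.0
    # counts[j] = number of length-i sequences with no run of length r
    # whose final run has length j + 1 (j = 0 .. r - 2).
    counts = [2] + [0] * (r - 2)  # length 1: "0" or "1"
    for _ in range(n - 1):
        # Switching symbol starts a new run of length 1 (one way from any state);
        # repeating the symbol lengthens the final run, unless that would reach r.
        counts = [sum(counts)] + counts[:-1]
    return 1 - sum(counts) / 2 ** n


ROWS = [
    "1 1 0 0 0 1 1 1 1 1 1 1 0 0 1 0 0 1 0 0 1",
    "1 1 0 0 0 0 0 1 0 1 0 1 0 1 0 1 0 0 0 0 0",
    "1 0 1 0 1 1 1 1 0 1 0 1 0 0 0 1 1 1 0 1 0",
    "0 0 1 1 0 0 0 1 1 0 1 0 0 0 0 1 1 1 0 1 1",
]
for row in ROWS:
    print(row, "-> longest run:", longest_run(row.split()))
print("P(run of 5+ in 21 fair flips):", round(prob_run_at_least(21, 5), 3))
```

The two genuinely random rows contain runs of seven and five; the two invented rows never go beyond four. Comparing those lengths with prob_run_at_least(21, r) shows how ordinary such runs are in truly random strings of this length.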

The basketball error is characteristic of a huge range of mistaken inferences. Simply put, we see patterns in the world where there are none because we don’t understand just how un-random-looking random sequences can be. We suspect the dice roller of cheating because he gets three 7s in a row. In fact, three 7s are much more likely than 3, 7, 4 or 2, 8, 6. We hail a friend as a stock guru because all four of the stocks he bought last year did better than the market as a whole. But four hits is no less likely to happen by chance than any particular sequence of two hits and two misses or of three hits and one miss. So it’s premature to hand over your portfolio to your friend.

The representativeness heuristic sometimes influences judgments about causality. I don’t know whether Lee Harvey Oswald acted alone in the assassination of John F. Kennedy or whether there was a conspiracy involving other people. I have no doubt, though, that part of the reason so many people have been convinced that there was a conspiracy is that they find it implausible that an event of such magnitude could have been effected by a single, quite unprepossessing individual acting alone.
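The dice and stock-picking examples above rest on simple exact probabilities. The sketch assumes that the “7s” are totals of two fair six-sided dice (as in craps) and that each stock independently has an even chance of beating the market; both are our assumptions, as are the function names.

```python
from fractions import Fraction
from itertools import product
from math import comb


def two_dice_totals():
    """Exact probability of each total (2-12) for two fair six-sided dice."""
    probs = {}
    for a, b in product(range(1, 7), repeat=2):
        probs[a + b] = probs.get(a + b, Fraction(0)) + Fraction(1, 36)
    return probs


def sequence_prob(totals, probs):
    """Probability of one specific sequence of independent rolls."""
    result = Fraction(1)
    for t in totals:
        result *= probs[t]
    return result


def pick_sequence_prob(outcomes, p_hit=Fraction(1, 2)):
    """Probability of a specific sequence of stock picks, "H" = beat the market,
    "M" = missed, assuming each pick independently beats it with chance p_hit."""
    result = Fraction(1)
    for outcome in outcomes:
        result *= p_hit if outcome == "H" else 1 - p_hit
    return result


DICE = two_dice_totals()
for seq in ([7, 7, 7], [3, 7, 4], [2, 8, 6]):
    print(seq, sequence_prob(seq, DICE))

print("HHHH:", pick_sequence_prob("HHHH"), " HMHM:", pick_sequence_prob("HMHM"))
# Counting all orders: how likely is exactly k hits out of four picks?
print({k: comb(4, k) * Fraction(1, 2) ** 4 for k in range(5)})
```

Three 7s come out at 1 in 216, six times as likely as 3, 7, 4 (1 in 1,296) and more than eight times as likely as 2, 8, 6 (25 in 46,656). Four winning picks has probability 1/16, exactly the same as a particular mixed sequence such as hit, miss, hit, miss; it only looks special.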

Some of the most important judgments about causality that we make concern the similarity of a disease and treatment for the disease. The Azande people of Central Africa formerly believed that burnt skull of the red bush monkey was an effective treatment for epilepsy. The jerky, frenetic movements of the bush monkey resemble the convulsive movements of epileptics.

The Azande belief about proper treatment for epilepsy would have seemed sensible to Western physicians until rather recently. Eighteenth-century doctors believed in a concept called the “doctrine of signatures.” This was the belief that diseases could be cured by finding a natural substance that resembles the disease in some respect. Turmeric, which is yellow, would be effective in treating jaundice, in which the skin turns yellow. The lungs of the fox, which is known for strong powers of respiration, were considered a remedy for asthma.

The belief in the doctrine of signatures was derived from a theological principle: God wishes to help us find the cures for diseases and gives us helpful hints in the form of color, shape, and movement. He knows we expect the treatment to be representative of the illness. This now sounds dubious to most of us, but in fact the representativeness heuristic continues to underlie alternative medicine practices such as homeopathy and Chinese traditional medicine—both of which are increasing in popularity in the West.

Representativeness is often the basis for predictions when other information would actually be more helpful. About twenty years out from graduate school a friend and I were talking about how successful our peers had been as scientists. We were surprised to find how wrong we were about many of them. Students we thought were sure to do great things often turned out to have done little in the way of good science; students we thought were no great shakes turned out to have done lots of excellent work. In trying to figure out why we could have been so wrong, we began to realize that we had relied on the representativeness heuristic. Our predictions were based in good part on how closely our classmates matched our stereotype of an excellent psychologist: brilliant, well read, insightful about people, fluent. Next we tried to see whether there was any way we could have made better predictions. It quickly became obvious: the students who had done good work in graduate school did good work in their later careers; those who hadn’t, fizzled.

The lesson here is one of the most powerful in all psychology. The best predictor of future behavior is past behavior. You’re rarely going to do better than that. Honesty in the future is best predicted by honesty in the past, not by whether a person looks you steadily in the eye or claims a recent religious conversion. Competence as an editor is best predicted by prior performance as an editor, or at least by competence as a writer, and not by how verbally clever a person seems or how large the person’s vocabulary is.

Another important heuristic Tversky and Kahneman identified is the availability heuristic. This is a rule of thumb we use to judge the frequency or plausibility of a given type of event. The more easily examples of the event come to mind, the more frequent or plausible they seem. It’s a perfectly helpful rule most of the time. It’s easier to come up with the names of great Russian novelists than great Swedish novelists, and there are indeed more of the former than the latter. But are there more tornadoes in Kansas or in Nebraska? Pretty tempting to say Kansas, isn’t it? Never mind that the Kansas tornado you’re thinking about never happened.

Are there more words with the letter r in the first position or the third position? Most people say it’s the first position. It’s easier to come up with words beginning with r than words having an r in the third position—because we “file” words in our minds by their initial letters and so they’re more available as we rummage through memory. But in fact there are more words with r in the third position.
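Availability is a feeling of ease, but a frequency question is settled by counting. The sketch below shows one way to do that counting for any list of words you supply; the function name and the sample words are illustrative assumptions, and the sample is far too small to answer the question in the text. Settling it properly would mean running the same count over a large dictionary or body of text.

```python
def count_r_positions(words, letter="r"):
    """Return (words with `letter` first, words with `letter` third), case-insensitively."""
    letter = letter.lower()
    first = sum(1 for w in words if len(w) >= 1 and w[0].lower() == letter)
    third = sum(1 for w in words if len(w) >= 3 and w[2].lower() == letter)
    return first, third


# A made-up handful of words; it says nothing about English as a whole.
sample = ["road", "car", "bird", "park", "rain", "word", "fire", "red"]
print(count_r_positions(sample))
```

The point of the exercise is the contrast in method: memory retrieves words by their first letter, so r-initial words feel more numerous, whereas a count treats every position the same.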

One problem with using the availability heuristic for judgments of frequency or plausibility is that availability is tangled up with salience. Deaths by earthquake are easier to recall than deaths by asthma, so people overestimate the frequency of earthquake deaths in their country (by a lot) and underestimate the frequency of asthma deaths (hugely).

Heuristics, including the representativeness heuristic and the availability heuristic, operate quite automatically and often unconsciously. This means it’s going to be hard to know just how influential they can be. But knowing about them allows us to reflect on the possibility that we’ve been led astray by them in a particular instance.

Summing Up

It’s possible to make fewer errors in judgment by following a few simple suggestions implicit in this chapter.

Remember that all perceptions, judgments, and beliefs are inferences and not direct readouts of reality. This recognition should prompt an appropriate humility about just how certain we should be about our judgments, as well as a recognition that the views of other people that differ from our own may have more validity than our intuitions tell us they do.

Be aware that our schemas affect our construals. Schemas and stereotypes guide our understanding of the world, but they can lead to pitfalls that can be avoided by recognizing the possibility that we may be relying too heavily on them. We can try to recognize our own stereotype-driven judgments as well as recognize those of others.

Remember that incidental, irrelevant perceptions and cognitions can affect our judgment and behavior. Even when we don’t know what those factors might be, we need to recognize that much more is influencing our thinking and behavior than we can be aware of. An important implication is that, when a judgment about objects or people is important, we can make it more accurate by trying to encounter them in as many different circumstances as possible.

Be alert to the possible role of heuristics in producing judgments. Remember that the similarity of objects and events to one another can be a misleading basis for judgments. Remember that causes need not resemble effects in any way. And remember that assessments of the likelihood or frequency of events can be influenced simply by the readiness with which they come to mind.

Many of the concepts and principles you’re going to read about in this book are helpful in avoiding the kinds of inferential errors discussed in this chapter. These new concepts and principles will supplement, and sometimes actually replace, those you normally use.